Extracting Regular Building Footprints Using Projection Histogram Method from UAV-Based 3D Models

Abstract

1. Introduction

2. Related Works

2.1. Methods Based on Remote Sensing Images

2.2. Methods Based on Point Cloud Data

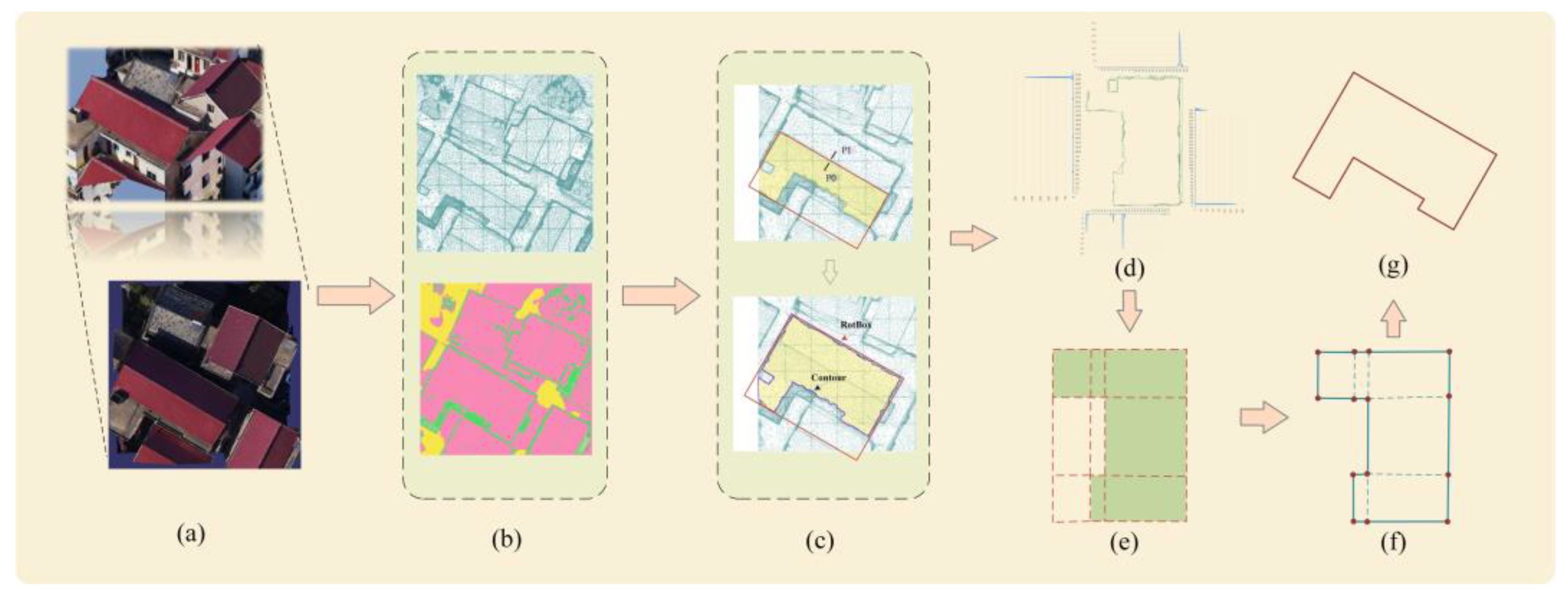

3. Methods

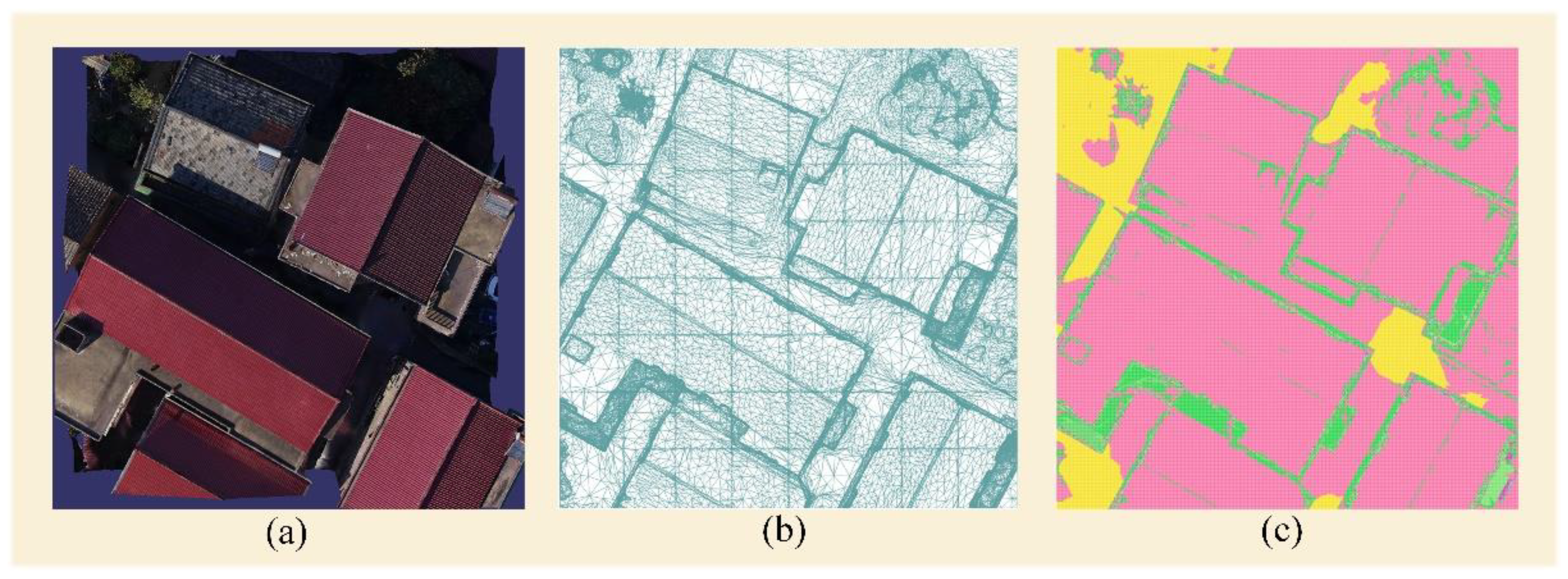

3.1. Extraction of Triangular Facets Data

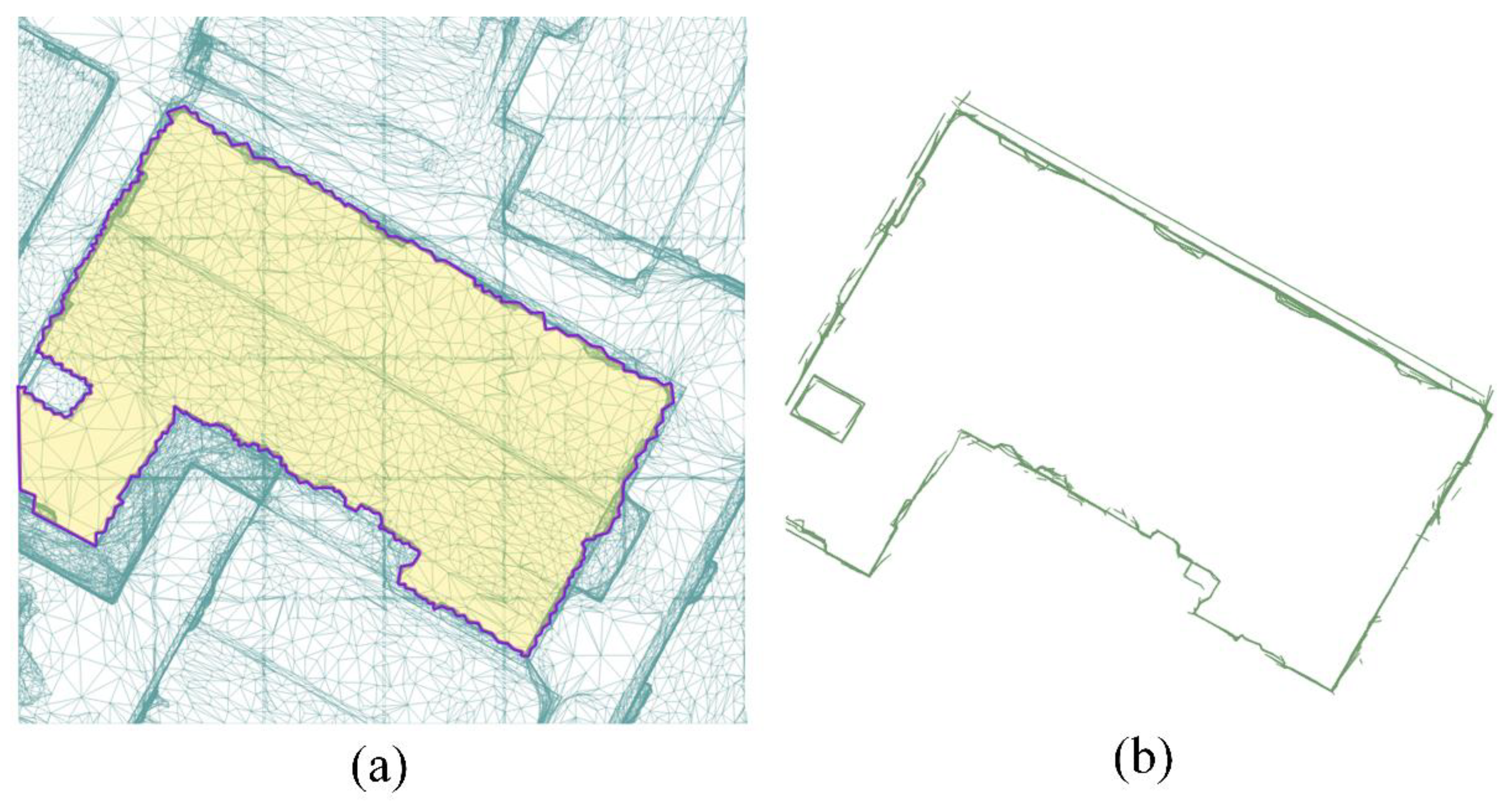

3.2. Selection of Roof Grids and Outer Walls’ Triangular Facets

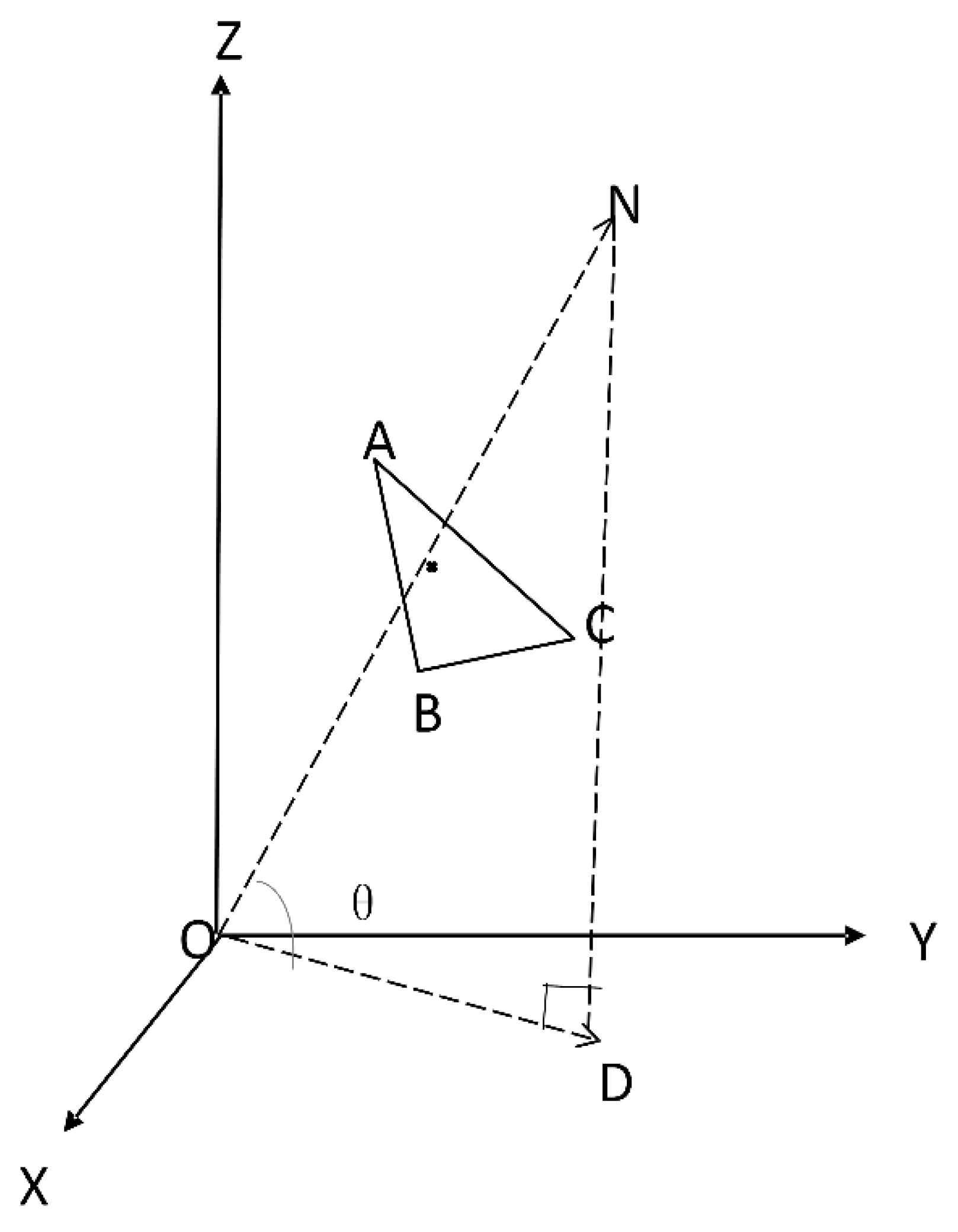

3.3. Generation of Regular Building Footprint Using Projection Histogram

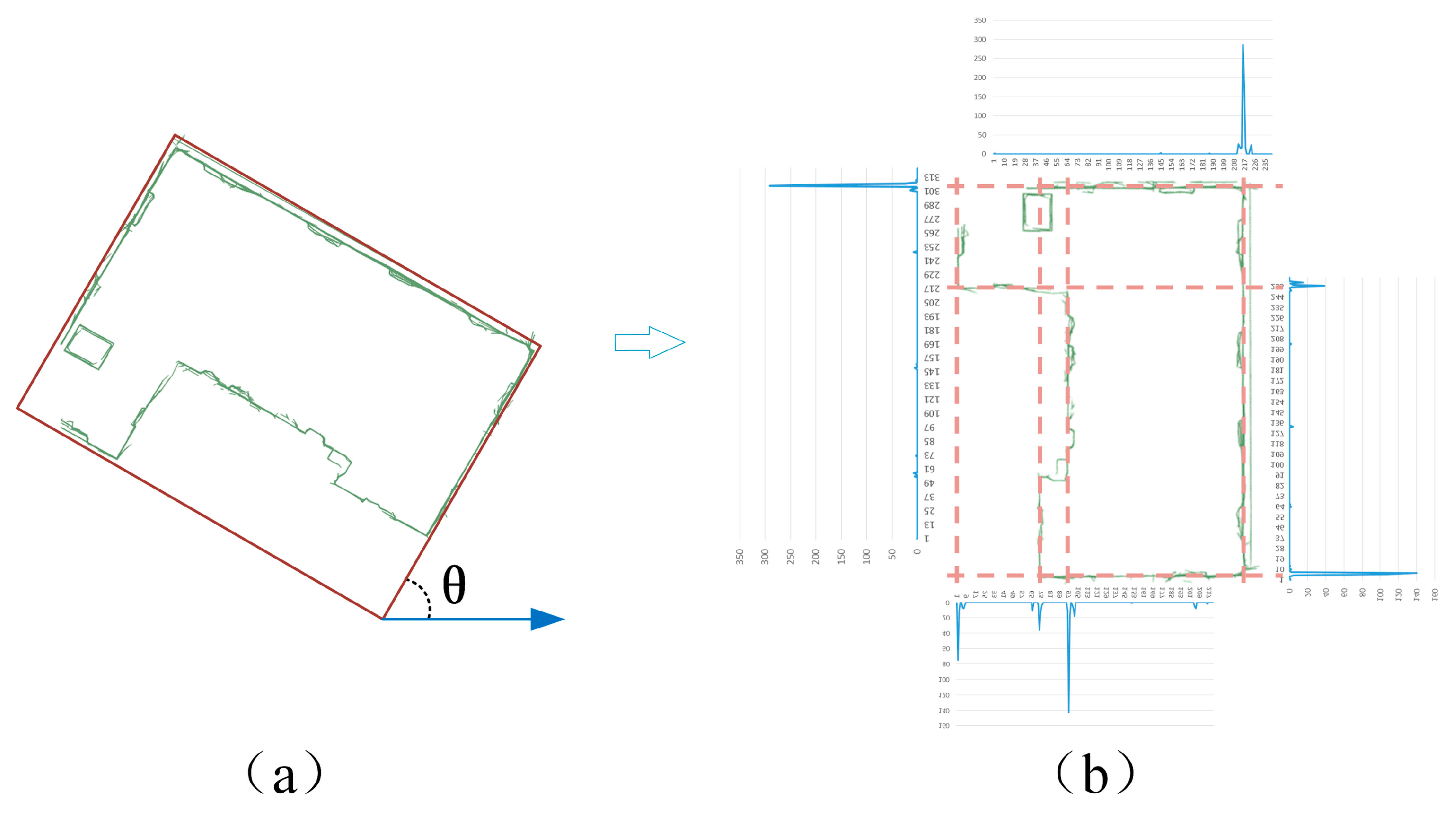

- (1) A coordinate transformation is performed based on the building’s principal direction, rotating the selected triangular facets so that the principal direction is aligned with the horizontal or vertical axis.

- (2) Building outlines are typically composed predominantly of right-angled polygons, so the outer walls face four primary orientations. Consequently, the normal vectors of the wall triangular facets are partitioned into four groups, each representing the walls facing one of these directions.

- (3) Using the projection histogram method with an appropriate bin width, the wall triangular facets in each group are projected onto the axis perpendicular to that group’s walls (i.e., along the wall normal), and the number of triangular facets falling into each bin is counted to form a histogram. Because wall locations contain more triangular facets, they produce pronounced peaks in the histogram (see Figure 5). Features such as doors, windows, and noise introduce smaller peaks, while bins in non-building areas have a count of 0.

- (4) The peaks in the histogram correspond to the edges of the building’s outer walls, so identifying them yields the straight line representing each outer wall.
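Steps (1) to (4) can be sketched in Python as follows. This is an illustrative sketch, not the authors’ implementation: the facet format `(cx, cy, nx, ny)` (facet centroid plus the horizontal components of its normal), the assumption that the principal-direction angle `theta` is already known, the default bin width of 0.5, and the rule that a peak is a local maximum holding at least `min_count` facets are all assumptions made here for illustration. The `min_count` threshold stands in for separating wall peaks from the smaller peaks caused by doors, windows, and noise.

```python
import math
from collections import defaultdict

# Hypothetical facet format: (cx, cy, nx, ny) = facet centroid and the horizontal
# components of its normal, for wall facets already selected in Section 3.2.

def rotate(x, y, theta):
    """Rotate the point (x, y) counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return c * x - s * y, s * x + c * y

def align_to_principal(facets, theta):
    """Step (1): rotate centroids and normals by -theta so the principal direction lies on the x axis."""
    aligned = []
    for cx, cy, nx, ny in facets:
        rx, ry = rotate(cx, cy, -theta)
        rnx, rny = rotate(nx, ny, -theta)
        aligned.append((rx, ry, rnx, rny))
    return aligned

def orientation_group(nx, ny):
    """Step (2): assign a facet to +x, -x, +y or -y by its dominant normal component."""
    if abs(nx) >= abs(ny):
        return "+x" if nx >= 0 else "-x"
    return "+y" if ny >= 0 else "-y"

def projection_histogram(coords, bin_width):
    """Step (3): count facets per bin along one axis, returned as {bin_index: count}."""
    hist = defaultdict(int)
    for c in coords:
        hist[math.floor(c / bin_width)] += 1
    return dict(hist)

def find_peaks(hist, min_count):
    """Step (4): bins that are local maxima and hold at least min_count facets."""
    return sorted(
        b for b, n in hist.items()
        if n >= min_count and n >= hist.get(b - 1, 0) and n >= hist.get(b + 1, 0)
    )

def wall_lines(facets, bin_width=0.5, min_count=5):
    """Return {group: [line coordinates]} for facets already aligned to the axes."""
    groups = defaultdict(list)
    for cx, cy, nx, ny in facets:
        g = orientation_group(nx, ny)
        # Walls facing +/-x are lines x = const, so project onto x; likewise for y.
        groups[g].append(cx if g in ("+x", "-x") else cy)
    return {
        # Each peak bin is reported by its centre coordinate.
        g: [(b + 0.5) * bin_width for b in find_peaks(projection_histogram(v, bin_width), min_count)]
        for g, v in groups.items()
    }
```

In this sketch each orientation group is histogrammed separately, so two parallel walls facing opposite directions stay in different histograms even when they are close together.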

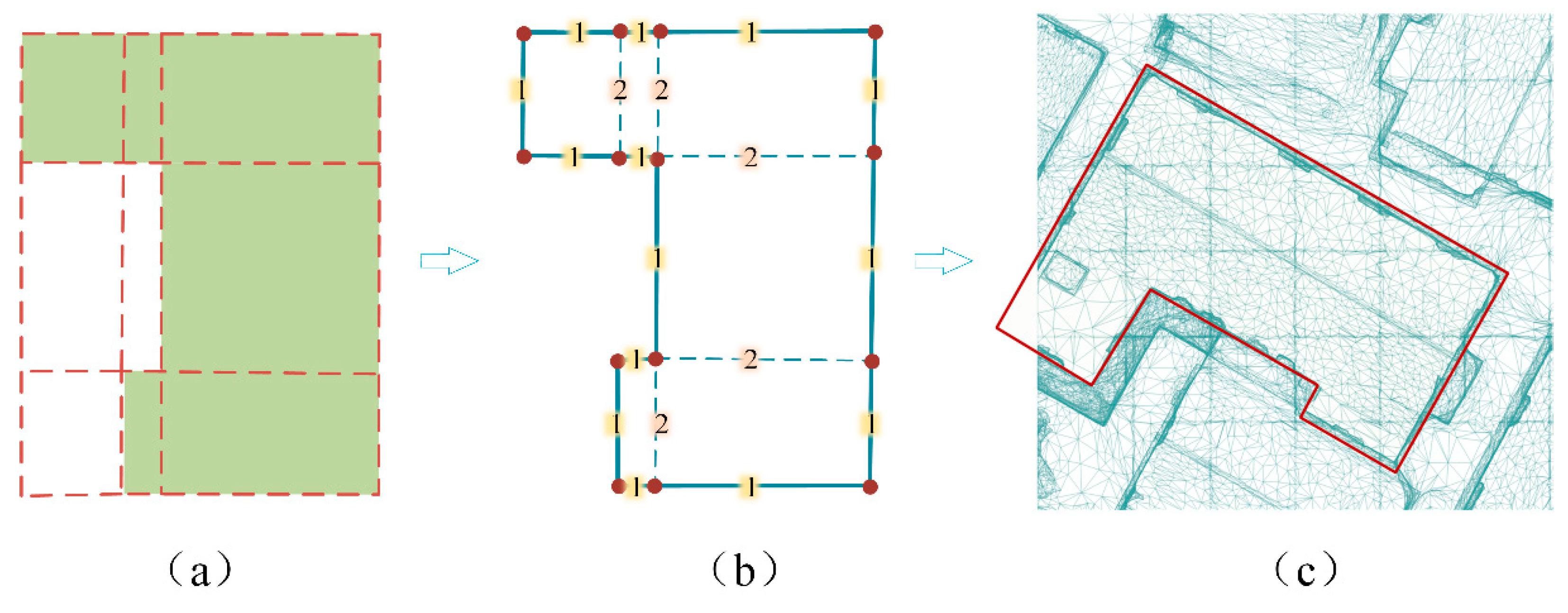

- (5) Together, the detected straight lines divide the area into numerous rectangles of varying sizes. Each rectangle is checked for intersection with the roof counting grid; rectangles that do not intersect it lie outside the building area and are excluded.

- (6) Among the remaining rectangles, an internal line segment is shared by two rectangles, whereas an outer-wall segment belongs to only one. This distinction is used to remove the internal lines. Finally, redundant points are eliminated, and the results are rotated back to the original orientation to obtain the regular outline of the building’s outer walls, referred to as the building footprint (see Figure 6).
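Steps (5) and (6) can likewise be sketched in Python, again under assumptions not stated in the text: the detected lines are given as plain coordinate lists `xs` and `ys` in the rotated frame, the roof counting grid is represented by the centres of its occupied cells (`roof_points`), a rectangle is taken to "intersect" the grid when it contains at least one such centre, and the footprint is a single simple polygon without holes. The function names are hypothetical.

```python
import math
from collections import Counter, defaultdict

def rotate(x, y, theta):
    """Rotate the point (x, y) counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return c * x - s * y, s * x + c * y

def footprint_from_lines(xs, ys, roof_points, theta=0.0):
    """Return footprint corners rotated back by theta to the original frame.

    xs, ys: coordinates of the detected x = const and y = const wall lines in the
    rotated frame; roof_points: occupied roof-grid cell centres (hypothetical form).
    """
    xs, ys = sorted(xs), sorted(ys)
    # Step (5): adjacent line pairs bound candidate rectangles (i, j); keep only
    # those that contain at least one roof-grid point.
    kept = [
        (i, j)
        for i in range(len(xs) - 1)
        for j in range(len(ys) - 1)
        if any(xs[i] < px < xs[i + 1] and ys[j] < py < ys[j + 1] for px, py in roof_points)
    ]
    # Step (6): count how many kept rectangles use each edge (vertices are grid
    # indices). Internal edges are used twice, outer-wall edges exactly once.
    uses = Counter()
    for i, j in kept:
        uses[((i, j), (i + 1, j))] += 1          # bottom
        uses[((i, j + 1), (i + 1, j + 1))] += 1  # top
        uses[((i, j), (i, j + 1))] += 1          # left
        uses[((i + 1, j), (i + 1, j + 1))] += 1  # right
    adj = defaultdict(list)
    for (a, b), n in uses.items():
        if n == 1:
            adj[a].append(b)
            adj[b].append(a)
    # Chain the outer edges into one closed loop; assumes every boundary
    # vertex has exactly two outer-edge neighbours.
    start = min(adj)
    loop, prev, cur = [start], None, start
    while True:
        a, b = adj[cur]
        nxt = b if a == prev else a
        if nxt == start:
            break
        loop.append(nxt)
        prev, cur = cur, nxt
    # Drop redundant points: keep a vertex only where the boundary turns.
    corners = []
    for k, (bx, by) in enumerate(loop):
        (ax, ay), (cx, cy) = loop[k - 1], loop[(k + 1) % len(loop)]
        if not (ax == bx == cx or ay == by == cy):
            corners.append(rotate(xs[bx], ys[by], theta))
    return corners
```

For an L-shaped roof covering three of the four cells formed by the lines x = 0, 10, 20 and y = 0, 5, 10, this sketch keeps three rectangles, discards the two shared internal edges, and returns six corners.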

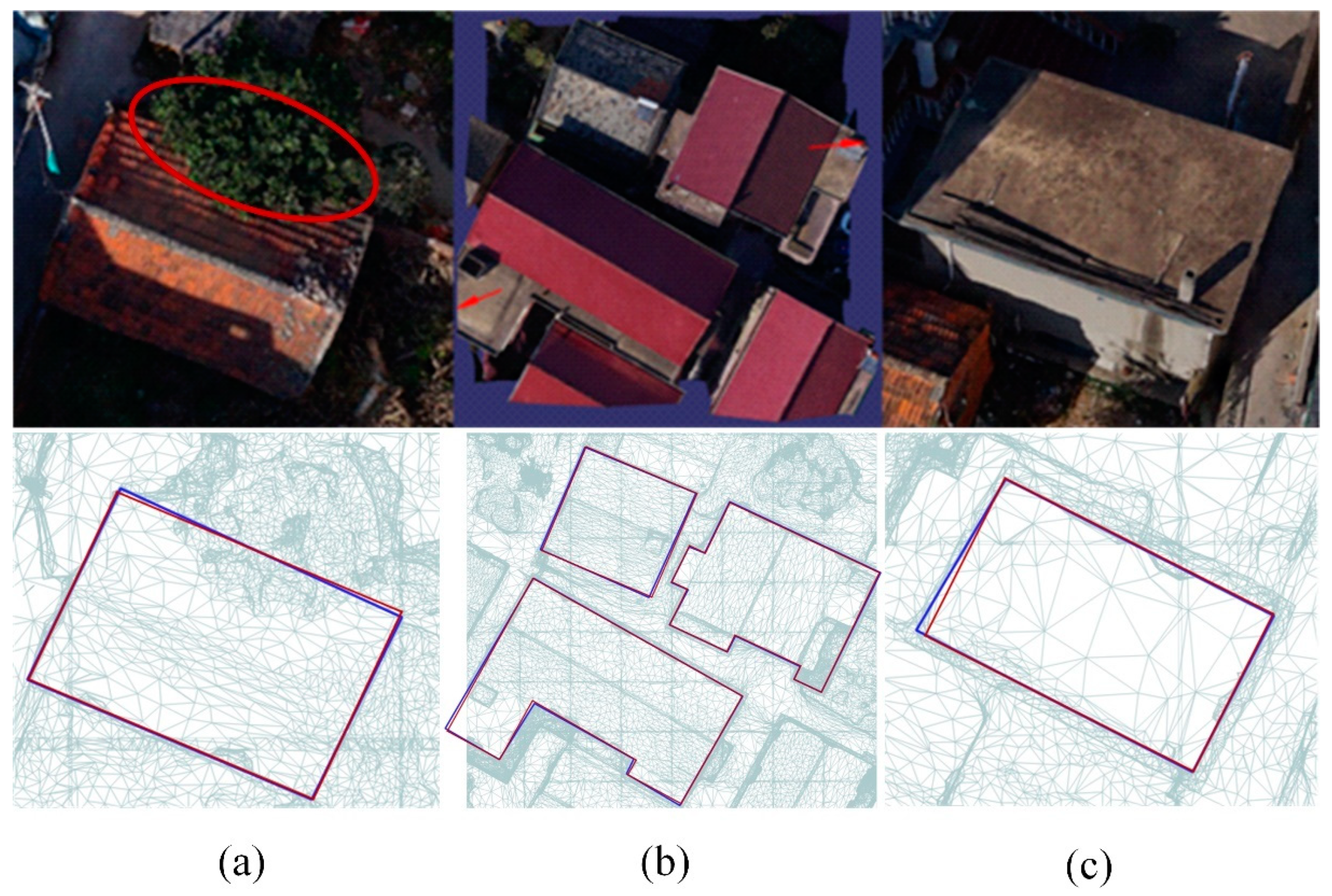

4. Results and Discussion

4.1. Experimental Data

4.2. Accuracy Evaluation

4.3. Extraction and Evaluation

5. Conclusions

- (1) The proposed method requires only a single seed point on the roof, which reduces the time and effort needed for building footprint extraction and significantly improves the efficiency of 3D mapping workflows compared with traditional techniques.

- (2) The method is robust under challenging conditions: it effectively handles partial occlusions and missing wall data, which supports reliable performance in real-world applications.

- (3) By generating precise building footprints, the approach supports downstream applications such as 3D reconstruction and individual building modeling, with potential benefits for urban planning and cadastral management.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | mIoU | RMSE | Cm | Cr | F1 Score |
|---|---|---|---|---|---|
| Dataset-1 | 99.2% | 0.07 | 98.8% | 90.2% | 94.3% |
| Dataset-2 | 99.4% | 0.05 | 94.7% | 98.8% | 96.7% |
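The F1 scores in the table are consistent with F1 being the harmonic mean of Cm and Cr (in building extraction evaluation these usually denote completeness and correctness). That definition is inferred from the reported numbers rather than stated in this section; the short check below assumes it and reproduces both F1 values.

```python
def f1_score(cm, cr):
    """Harmonic mean of Cm and Cr, both given as fractions (assumed definition)."""
    return 2 * cm * cr / (cm + cr)

for name, cm, cr in [("Dataset-1", 0.988, 0.902), ("Dataset-2", 0.947, 0.988)]:
    print(f"{name}: F1 = {f1_score(cm, cr):.1%}")
# Dataset-1: F1 = 94.3%
# Dataset-2: F1 = 96.7%
```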

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, Y.; Li, X.; Jin, F.; Li, C.; Liu, W.; Li, E.; Zhang, L. Extracting Regular Building Footprints Using Projection Histogram Method from UAV-Based 3D Models. ISPRS Int. J. Geo-Inf. 2025, 14, 6. https://doi.org/10.3390/ijgi14010006
