Use and Effectiveness of Chatbots as Support Tools in GIS Programming Course Assignments

Abstract

1. Introduction

1.1. Research Motivation and Contributions

- RQ1: How is prior student programming experience related to perceived improvements in programming skills?

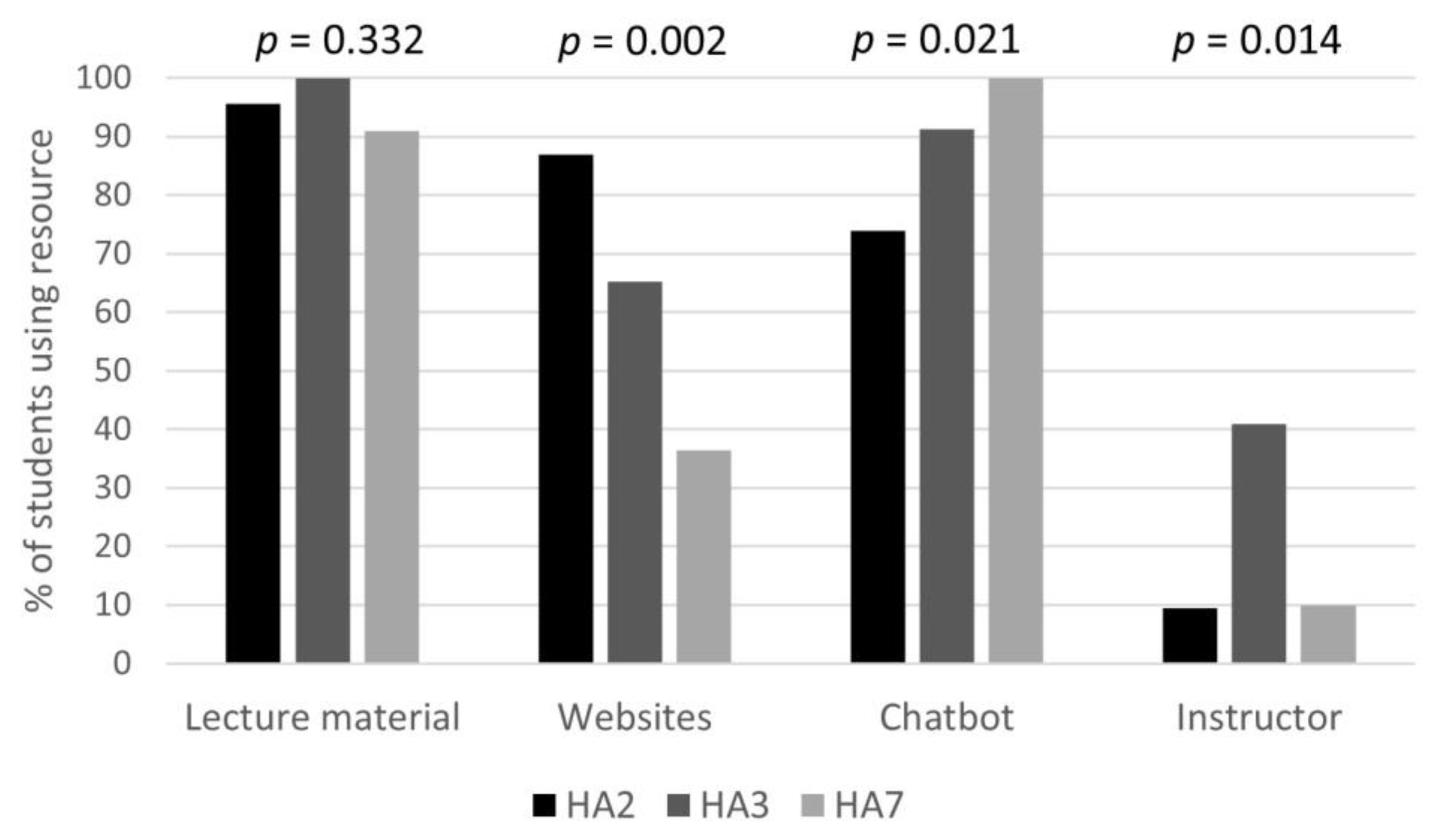

- RQ2: How does the use of programming resources evolve throughout the course?

- RQ3: Does the introduction of chatbots prior to programming tasks negatively affect students’ code comprehension abilities?

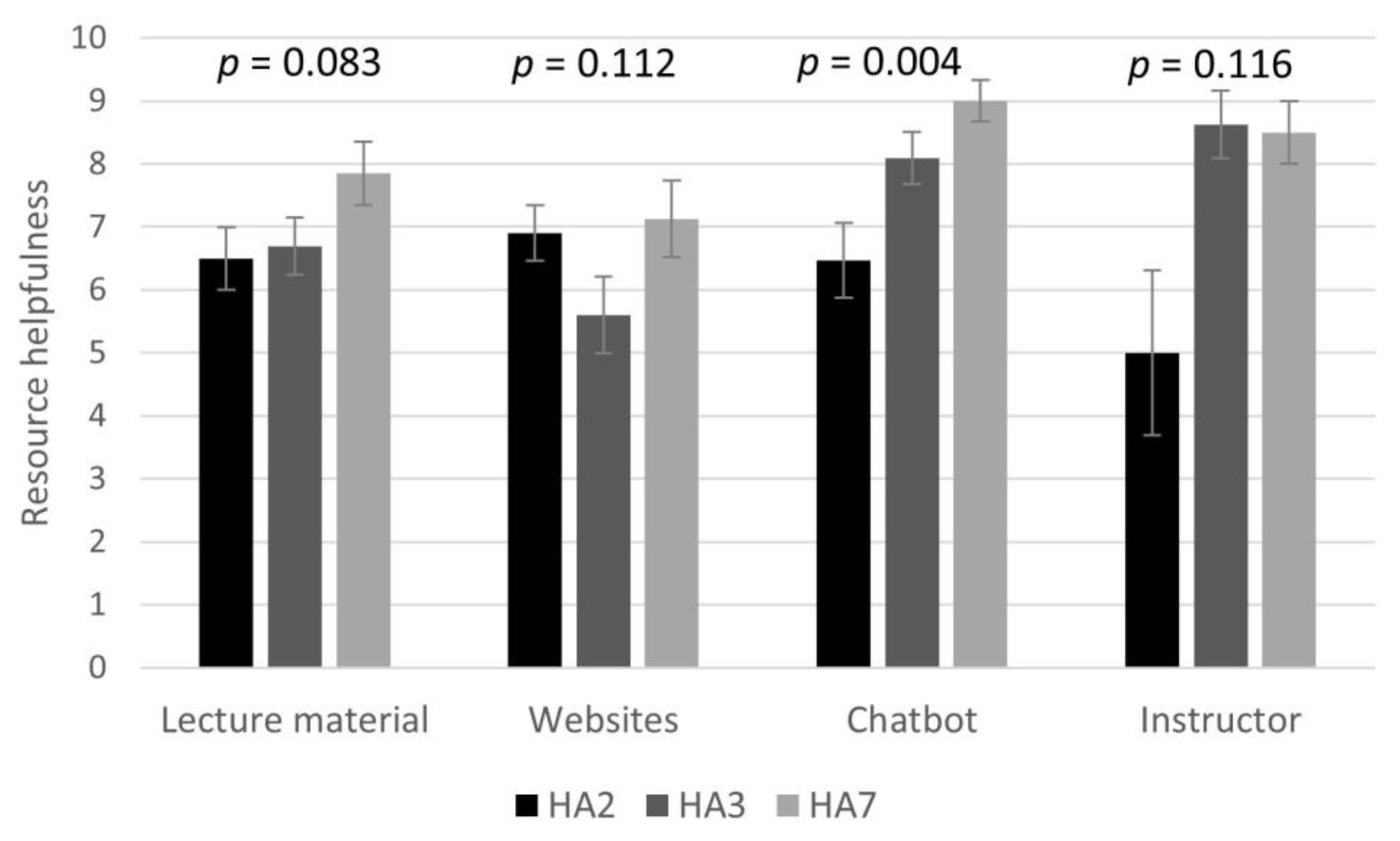

- RQ4: How is chatbot helpfulness related to the complexity of assignments and their spatial focus?

- RQ5: Is the quality of chatbot responses linked to the extent of code modifications needed to achieve functional code?

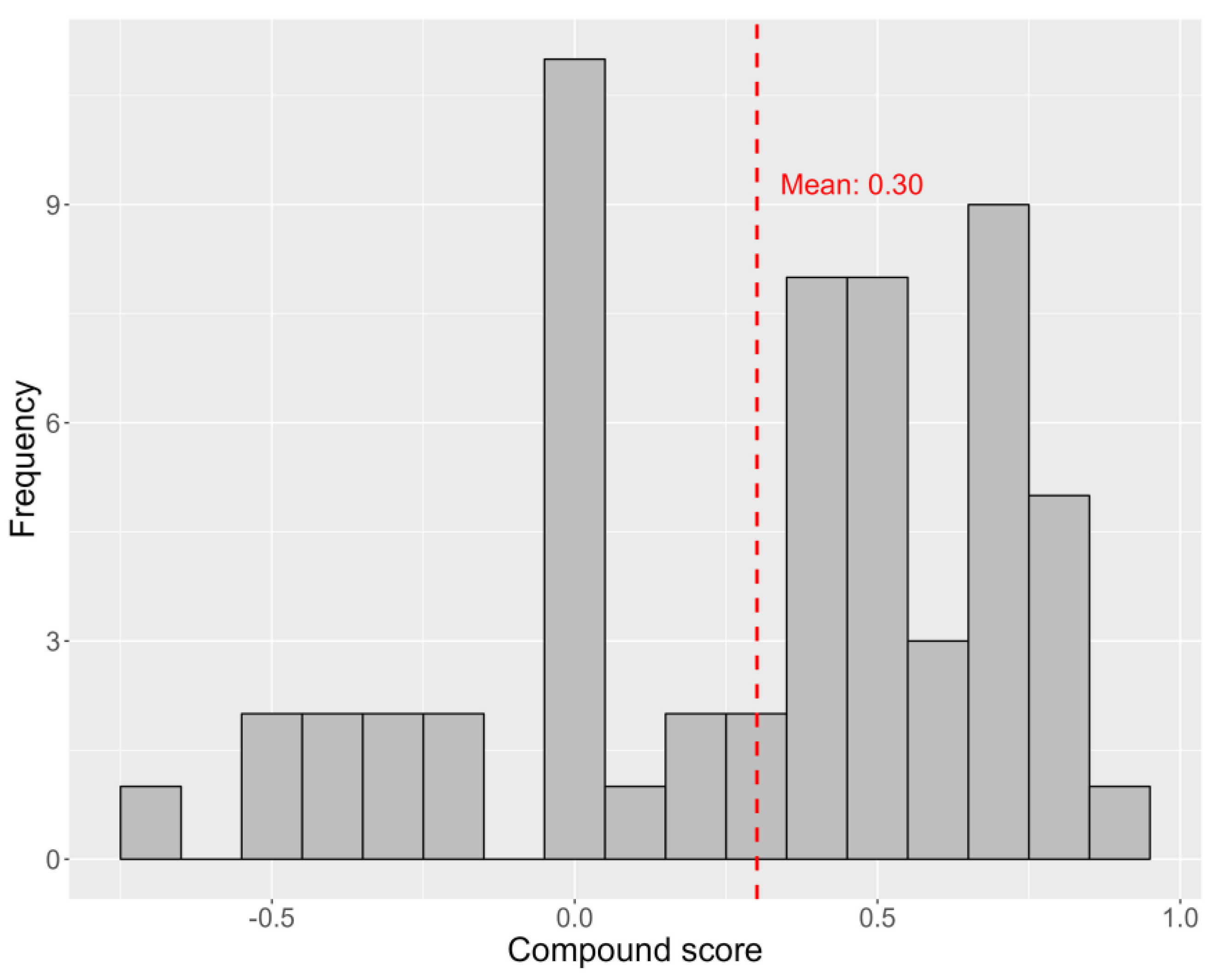

- RQ6: What sentiment scores emerge from student discussions regarding the usefulness of chatbot responses?

1.2. Paper Organization

2. Materials and Methods

2.1. Course Content and Enrollment

- Week/Assignment 1–3: Basics of Python programming and editing, including Python editors, syntax, variables, control structures (loops, conditional statements), data types, object oriented programming, and reading/writing from/to text files.

- Week/Assignment 4–8: ArcPy functionality, including spatial data management, table and feature class manipulation through cursors, manipulation of feature geometries, raster processing, and Python access to built-in geoprocessing tools.

- Week/Assignment 9: Customized ArcGIS script tools.

- Week/Assignment 10: ArcGIS and Jupyter notebooks.

- Week/Assignment 11: Open libraries for spatial analysis, including Shapely and GeoPandas.

- Week/Assignment 12: Open-source geospatial scripting in QGIS.

2.2. Student Tasks

2.2.1. Assignments

- Prior programming experience (0…10)—Assignment 2 only.

- Prior Python experience (0…10)—Assignment 2 only.

- Assignment completion time—Assignments 2 and 3 only.

- Perceived enhancement in programming skills (0…10)—Assignments 2 and 3 only.

- Use of help resources: Lecture material (Yes/No), other websites (Yes/No), chatbot (Yes/No), instructor/TA (Yes/No).

- Helpfulness of resources: Lecture materials (0…10), other websites (0…10), chatbot (0…10), instructor/TA (0…10).

- Quality of chatbot generated code (0…10)—Assignment 7 only.

- Handling of problems with chatbot generated code (Text)—Assignment 7 only.
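The survey items above amount to a small record schema with rating ranges and assignment-specific fields. The following is a minimal sketch of how one response could be represented and validated. The class name `AssignmentSurvey`, all field names, and the validation rules are hypothetical illustrations derived from the list; they are not the instrument actually used in the course, and the unit of completion time is not stated here.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Assignments in which each optional item is collected, per the list above.
ALLOWED_ASSIGNMENTS = {
    "prior_programming_experience": {2},
    "prior_python_experience": {2},
    "completion_time": {2, 3},
    "perceived_skill_gain": {2, 3},
    "chatbot_code_quality": {7},
    "chatbot_code_problems": {7},
}

# Items rated on the 0..10 scale (resource helpfulness is checked separately).
RATING_FIELDS = (
    "prior_programming_experience",
    "prior_python_experience",
    "perceived_skill_gain",
    "chatbot_code_quality",
)


@dataclass
class AssignmentSurvey:
    """One student's survey response for one assignment (hypothetical layout)."""

    assignment: int
    resources_used: Dict[str, bool] = field(default_factory=dict)
    resource_helpfulness: Dict[str, int] = field(default_factory=dict)
    prior_programming_experience: Optional[int] = None
    prior_python_experience: Optional[int] = None
    completion_time: Optional[float] = None  # unit is an open assumption
    perceived_skill_gain: Optional[int] = None
    chatbot_code_quality: Optional[int] = None
    chatbot_code_problems: Optional[str] = None

    def __post_init__(self) -> None:
        # Reject items that are filled in for an assignment that does not ask for them.
        for name, allowed in ALLOWED_ASSIGNMENTS.items():
            if getattr(self, name) is not None and self.assignment not in allowed:
                raise ValueError(
                    f"{name} is not collected for assignment {self.assignment}"
                )
        # Every rating, including per-resource helpfulness, must lie in 0..10.
        ratings = {name: getattr(self, name) for name in RATING_FIELDS}
        ratings.update(self.resource_helpfulness)
        for name, value in ratings.items():
            if value is not None and not 0 <= value <= 10:
                raise ValueError(f"{name} must be between 0 and 10, got {value}")


# Example: an Assignment 2 response from a student who used the chatbot.
response = AssignmentSurvey(
    assignment=2,
    resources_used={"lecture_material": True, "chatbot": True},
    resource_helpfulness={"lecture_material": 8, "chatbot": 6},
    prior_programming_experience=4,
    prior_python_experience=2,
    perceived_skill_gain=7,
)
```

Keeping the assignment-specific rules in a single lookup table makes it easy to adjust them if the actual survey differs from this reading of the list.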

2.2.2. Group Discussion

- Usability of chatbots for course work (e.g., relevance, accuracy, completeness of responses).

- Strategies or techniques for improving the usability of chatbot responses.

- The potential use of chatbots for educational purposes in the future.

2.3. Analysis Methods

2.3.1. Assignment Surveys

2.3.2. Group Discussions and Sentiment Analysis

3. Results

3.1. Programming Tasks

3.1.1. RQ1: Skill Improvement

3.1.2. RQ2: Programming Resources

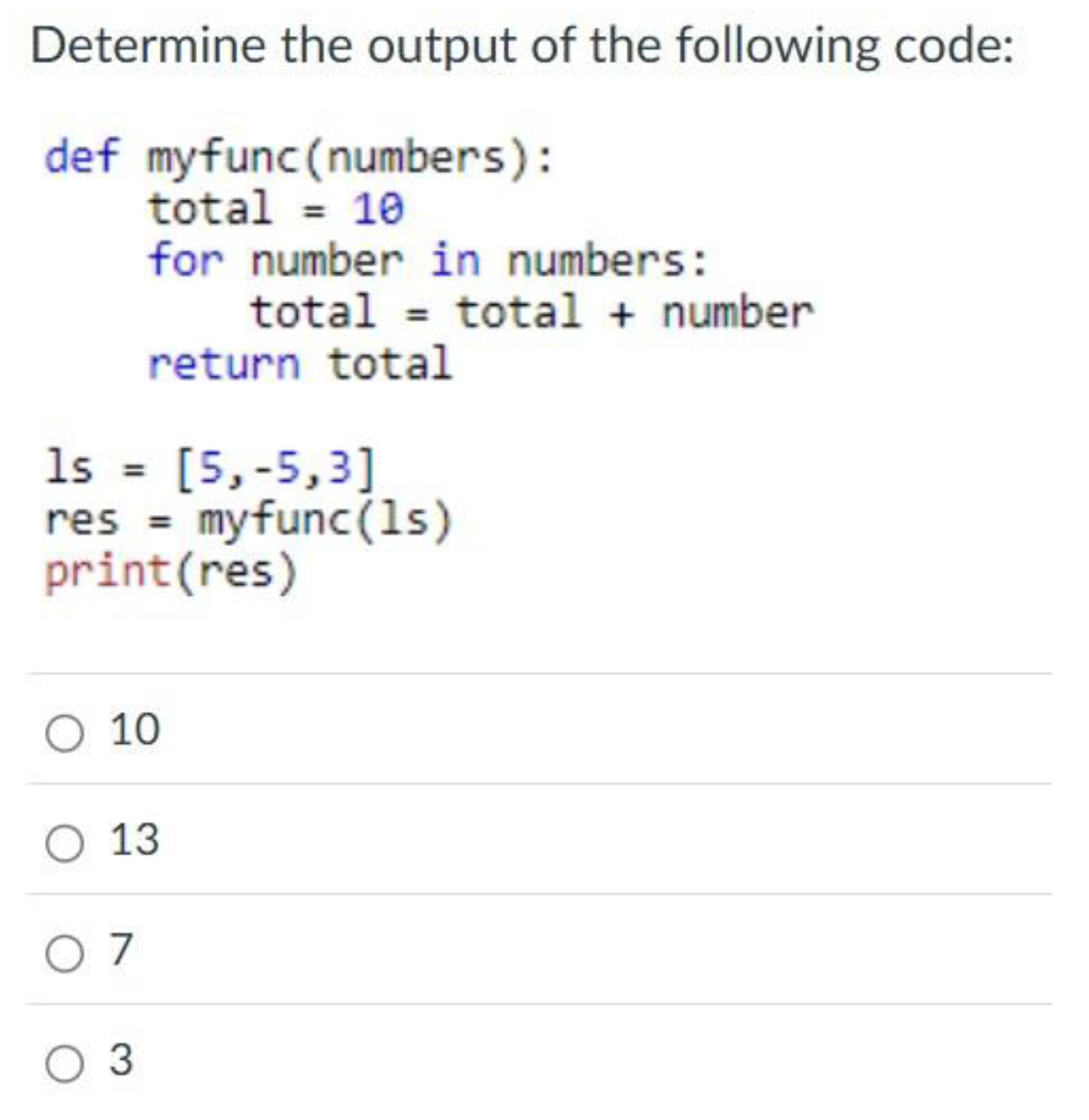

3.1.3. RQ3: Code Comprehension

3.1.4. RQ4: Chatbot Helpfulness

3.1.5. RQ5: Code Readiness

3.2. Discussion Results

3.2.1. Assessment of Response Usefulness (Topic 1, RQ6)

3.2.2. Strategies for Response Improvement (Topic 2)

3.2.3. Use of Chatbots for Future Education (Topic 3)

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Griol, D.; Callejas, Z. A Neural Network Approach to Intention Modeling for User-Adapted Conversational Agents. Comput. Intell. Neurosci. 2016, 2016, 8402127.

- Nirala, K.K.; Singh, N.K.; Purani, V.S. A survey on providing customer and public administration based services using AI: Chatbot. Multimed. Tools Appl. 2022, 81, 22215–22246.

- Labadze, L.; Grigolia, M.; Machaidze, L. Role of AI chatbots in education: Systematic literature review. Int. J. Educ. Technol. High. Educ. 2023, 20, 56.

- Pham, X.L.; Pham, T.; Nguyen, Q.M.; Nguyen, T.H.; Cao, T.T.H. Chatbot as an intelligent personal assistant for mobile language learning. In Proceedings of the 2nd International Conference on Education and E-Learning (ICEEL 2018), Bali, Indonesia, 5–7 November 2018; pp. 16–21.

- Rahman, A.; Alqahtani, L.; Albooq, A.; Ainousah, A. A Survey on Security and Privacy of Large Multimodal Deep Learning Models: Teaching and Learning Perspective. In Proceedings of the 21st Learning and Technology Conference, Jeddah, Saudi Arabia, 15 January 2024.

- Ilieva, G.; Yankova, T.; Klisarova-Belcheva, S.; Dimitrov, A.; Bratkov, M.; Angelov, D. Effects of Generative Chatbots in Higher Education. Information 2023, 14, 492.

- Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with educational chatbots: A systematic review. Educ. Inf. Technol. 2023, 28, 973–1018.

- Deng, X.; Yu, Z. A Meta-Analysis and Systematic Review of the Effect of Chatbot Technology Use in Sustainable Education. Sustainability 2023, 15, 2940.

- Tegos, S.; Demetriadis, S.; Karakostas, A. Promoting academically productive talk with conversational agent interventions in collaborative learning settings. Comput. Educ. 2015, 87, 309–325.

- Yilmaz, R.; Yilmaz, F.G.K. The effect of generative artificial intelligence (AI) based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 2023, 4, 100147.

- Retscher, G. Exploring the intersection of artificial intelligence and higher education: Opportunities and challenges in the context of geomatics education. Appl. Geomat. 2025, 17, 49–61.

- Groothuijsen, S.; Beemt, A.v.d.; Remmers, J.C.; Meeuwen, L.W.v. AI chatbots in programming education: Students’ use in a scientific computing course and consequences for learning. Comput. Educ. Artif. Intell. 2024, 7, 100290.

- Winkler, R.; Hobert, S.; Salovaara, A.; Söllner, M.; Leimeister, J.M. Sara, the Lecturer: Improving Learning in Online Education with a Scaffolding-Based Conversational Agent. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- Essel, H.B.; Vlachopoulos, D.; Tachie-Menson, A.; Johnson, E.E.; Baah, P.K. The impact of a virtual teaching assistant (chatbot) on students’ learning in Ghanaian higher education. Int. J. Educ. Technol. High. Educ. 2022, 19, 57.

- Kosar, T.; Ostojić, D.; Liu, Y.D.; Mernik, M. Computer Science Education in ChatGPT Era: Experiences from an Experiment in a Programming Course for Novice Programmers. Mathematics 2024, 12, 629.

- Sun, D.; Boudouaia, A.; Zhu, C.; Li, Y. Would ChatGPT-facilitated programming mode impact college students’ programming behaviors, performances, and perceptions? An empirical study. Int. J. Educ. Technol. High. Educ. 2024, 21, 14.

- Haindl, P.; Weinberger, G. Students’ Experiences of Using ChatGPT in an Undergraduate Programming Course. IEEE Access 2024, 12, 43519–43529.

- Ouh, E.L.; Gan, B.K.S.; Shim, K.J.; Wlodkowski, S. ChatGPT, Can You Generate Solutions for my Coding Exercises? An Evaluation on its Effectiveness in an undergraduate Java Programming Course. In Proceedings of the ITiCSE: Innovation and Technology in Computer Science Education, Turku, Finland, 7–12 July 2023.

- Popovici, M.-D. ChatGPT in the Classroom. Exploring Its Potential and Limitations in a Functional Programming Course. Int. J. Hum.–Comput. Interact. 2024, 40, 7743–7754.

- Tao, R.; Xu, J. Mapping with ChatGPT. ISPRS Int. J. Geo-Inf. 2023, 12, 284.

- Hu, X.; Kersten, J.; Klan, F.; Farzana, S.M. Toponym resolution leveraging lightweight and open-source large language models and geo-knowledge. Int. J. Geogr. Inf. Sci. 2024, 1–28.

- Mooney, P.; Cui, W.; Guan, B.; Juhász, L. Towards Understanding the Spatial Literacy of ChatGPT. In Proceedings of the ACM SIGSPATIAL International Conference, Hamburg, Germany, 13–16 November 2023.

- Hochmair, H.H.; Juhász, L.; Kemp, T. Correctness Comparison of ChatGPT-4, Gemini, Claude-3, and Copilot for Spatial Tasks. Trans. GIS 2024, 28, 2219–2231.

- Li, F.; Hogg, D.C.; Cohn, A.G. Advancing Spatial Reasoning in Large Language Models: An In-Depth Evaluation and Enhancement Using the StepGame Benchmark. In Proceedings of the Thirty-Eighth AAAI Conference on Artificial Intelligence (AAAI-24), 20–27 February 2024; pp. 18500–18507.

- Redican, K.; Gonzalez, M.; Zizzamia, B. Assessing ChatGPT for GIS education and assignment creation. J. Geogr. High. Educ. 2025, 49, 113–129.

- Wilby, R.L.; Esson, J. AI literacy in geographic education and research: Capabilities, caveats, and criticality. Geogr. J. 2024, 190, e12548.

- van Staden, C.J. Geography’s ability to interpret topographic maps and orthophotographs, adoption prediction, and learning activities to promote responsible usage in classrooms. J. Geogr. Educ. Afr. 2025, 8, 16–32.

- Xue, Y.; Chen, H.; Bai, G.R.; Tairas, R.; Huang, Y. Does ChatGPT Help With Introductory Programming? An Experiment of Students Using ChatGPT in CS1. In Proceedings of the 46th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’24), Lisbon, Portugal, 14–20 April 2024; pp. 331–341.

- Enzmann, D. Notes on Effect Size Measures for the Difference of Means from Two Independent Groups: The Case of Cohen’s d and Hedges’ g. Available online: https://www.researchgate.net/publication/270703432_Notes_on_Effect_Size_Measures_for_the_Difference_of_Means_From_Two_Independent_Groups_The_Case_of_Cohen’s_d_and_Hedges’_g (accessed on 14 March 2025).

- Tomczak, M.; Tomczak, E. The need to report effect size estimates revisited. An overview of some recommended measures of effect size. Trends Sport Sci. 2014, 1, 19–25.

- Bobbitt, Z. How to Interpret Cramer’s V (With Examples). Available online: https://www.statology.org/interpret-cramers-v/ (accessed on 14 March 2025).

- Shuwandy, M.L.; Zaidan, B.B.; Zaidan, A.A.; Albahri, A.S.; Alamoodi, A.H.; Alazab, O.S.A.M. mHealth Authentication Approach Based 3D Touchscreen and Microphone Sensors for Real-Time Remote Healthcare Monitoring System: Comprehensive Review, Open Issues and Methodological Aspects. Comput. Sci. Rev. 2020, 38, 100300.

- Hutto, C.J.; Gilbert, E. VADER: A Parsimonious Rule-Based Model for Sentiment Analysis of Social Media Text. Proc. Int. AAAI Conf. Web Soc. Media 2014, 8, 216–225.

- Pano, T.; Kashef, R. A Complete VADER-Based Sentiment Analysis of Bitcoin (BTC) Tweets during the Era of COVID-19. Big Data Cogn. Comput. 2020, 4, 33.

- Uddin, S.M.J.; Albert, A.; Tamanna, M.; Ovid, A.; Alsharef, A. ChatGPT as an educational resource for civil engineering students. Comput. Appl. Eng. Educ. 2024, 32, e22747.

- Zamfirescu-Pereira, J.D.; Wong, R.; Hartmann, B.; Yang, Q. Why Johnny Can’t Prompt: How Non-AI Experts Try (and Fail) to Design LLM Prompts. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’23), Hamburg, Germany, 23–28 April 2023.

- Xia, Q.; Chiu, T.K.F.; Chai, C.S.; Xie, K. The mediating effects of needs satisfaction on the relationships between prior knowledge and self-regulated learning through artificial intelligence chatbot. Br. J. Educ. Technol. 2023, 54, 967–986.

- Alam, A. Should Robots Replace Teachers? Mobilisation of AI and Learning Analytics in Education. In Proceedings of the International Conference on Advances in Computing, Communication, and Control (ICAC3), Mumbai, India, 3–4 December 2021.

- Parsakia, K. The Effect of Chatbots and AI on The Self-Efficacy, Self-Esteem, Problem-Solving and Critical Thinking of Students. Health Nexus 2023, 1, 71–76.

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Mosleh, S.M.; Alsaadi, F.A.; Alnaqbi, F.K.; Alkhzaimi, M.A.; Alnaqbi, S.W.; Alsereidi, W.M. Examining the association between emotional intelligence and chatbot utilization in education: A cross-sectional examination of undergraduate students in the UAE. Heliyon 2024, 10, e31952.

- Manvi, R.; Khanna, S.; Mai, G.; Burke, M.; Lobell, D.; Ermon, S. GeoLLM: Extracting Geospatial Knowledge from Large Language Models. In Proceedings of the International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

- Sternberg, R.J. Critical Thinking in STEM Disciplines. In Critical Thinking in Psychology, 2nd ed.; Sternberg, R.J., Halpern, D.F., Eds.; Cambridge University Press: Cambridge, UK, 2020; pp. 309–327.

- Kooli, C. Chatbots in Education and Research: A Critical Examination of Ethical Implications and Solutions. Sustainability 2023, 15, 5614.

| Subject Area | Study Design | Findings | Reference |
|---|---|---|---|
| Programming | | | |
| Skill retention in Python programming course | 2 × 2 (scaffold/no scaffold, text/voice) | Conversational, scaffolding agent in lecture recordings facilitated retention of Python knowledge | [13] |
| Student performance in multimedia programming course (HTML, CSS) | Experimental vs. control student cohort | Better course performance with chatbot assistant than with instructor | [14] |
| Student performance in C++ programming course | Experimental vs. control student cohort | Student performance not influenced by ChatGPT usage | [15] |
| Student performance in Java programming course | Experimental vs. control student cohort | Student performance not influenced by ChatGPT usage | [28] |
| Student performance in Python programming course | Experimental vs. control student cohort | Student performance not influenced by ChatGPT usage | [16] |
| Student attitudes in Java programming course | Experimental vs. control student cohort | Using ChatGPT in weekly programming practice enhanced student morale, programming confidence, and computational reasoning competence | [10] |
| Performance and chatbot use in the course scientific computing for mechanical engineering (C and Python) | Student questionnaires, semi-structured interviews with students and teacher | Students with prior experience used ChatGPT for more varied tasks; decline in code quality and student learning with increased ChatGPT usage | [12] |
| Quality of ChatGPT-generated Java code | Student questionnaires | ChatGPT-generated code required little modification | [17] |
| Readability of ChatGPT-generated Java code | Analysis of ChatGPT code solutions for course programming tasks | ChatGPT-generated code was readable and well structured | [18] |
| Code correctness of ChatGPT-generated Scala functional programming code | Analysis of ChatGPT code solutions for course programming tasks | Initial ChatGPT solutions were correct in 68% of cases; only 57% of correct solutions were legible | [19] |
| GIS/Geography | | | |
| Quality of ChatGPT-generated GIS lab assignment | Analysis of generated lab instructions | Prompt refining led to more useful GIS assignment instructions | [25] |
| Completeness of a ChatGPT-generated 2 h lecture program on climate and society | Comparison of generated topics with existing curriculum | ChatGPT offered some useful suggestions to supplement existing lecture topics | [26] |

| Sentence | Negative | Neutral | Positive | Compound | Label |
|---|---|---|---|---|---|
| The code resulting from a chatbot can be easily improved according to the developer’s needs. | 0 | 0.703 | 0.297 | 0.6705 | Positive |
| Despite these concerns, using chatbots helped [to] develop technical skills, particularly in automating tasks in GIS with ArcPy. | 0 | 1 | 0 | 0 | Neutral |
| Ultimately, students agreed that chatbots are not a perfect solution | 0.353 | 0.498 | 0.149 | −0.4329 | Negative |
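As a reading aid for the table above, the sketch below shows how a compound score can be mapped to a label. It assumes the cut-offs of ±0.05 that are conventionally suggested in the VADER documentation; this section does not state which thresholds the study applied, so `POS_THRESHOLD`, `NEG_THRESHOLD`, and the helper `label_from_compound` are illustrative assumptions. The numeric rows are copied from the table.

```python
# Conventional VADER cut-offs; an assumption here, not confirmed by this section.
POS_THRESHOLD = 0.05
NEG_THRESHOLD = -0.05


def label_from_compound(compound: float) -> str:
    """Map a compound score in [-1, 1] to a three-class sentiment label."""
    if compound >= POS_THRESHOLD:
        return "Positive"
    if compound <= NEG_THRESHOLD:
        return "Negative"
    return "Neutral"


# (negative, neutral, positive, compound) for the three example sentences.
rows = [
    (0.0, 0.703, 0.297, 0.6705),
    (0.0, 1.0, 0.0, 0.0),
    (0.353, 0.498, 0.149, -0.4329),
]

# Derive a label for each row from its compound score only.
labels = [label_from_compound(compound) for *_, compound in rows]

# The negative, neutral, and positive columns are proportions of the sentence
# and should therefore sum to 1 for every row.
proportions_ok = all(abs(neg + neu + pos - 1.0) < 1e-6 for neg, neu, pos, _ in rows)

print(labels, proportions_ok)
```

Under these assumed thresholds the derived labels agree with the Label column. The second sentence also illustrates a limitation of a lexicon-based score: a statement that reads as favorable can still receive a compound score of 0 when none of its words carry lexicon weight.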

| Coefficients | Estimate | Std. Error | t | p |
|---|---|---|---|---|
| Intercept | 4.52 | 0.61 | 7.33 | <0.001 ** |
| Python experience | 0.17 | 0.19 | 0.89 | 0.387 |
| Chatbot introduction | −2.22 | 0.95 | −2.33 | 0.033 * |
| Python × chatbot | 0.60 | 0.41 | 1.48 | 0.158 |
| N | 20 | | | |
| R² | 0.405 | | | |
| Adj. R² | 0.293 | | | |
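The regression table can be read as a fitted linear model with an interaction term. The sketch below evaluates that model and recomputes the adjusted R² from the reported R², N, and number of predictors. It rests on assumptions that this section does not confirm: that chatbot introduction is coded as a 0/1 indicator, that Python experience is on the 0 to 10 survey scale, and that the response variable (not named in the table) is a score on its original scale. The function names are hypothetical.

```python
# Coefficient estimates copied from the table above.
INTERCEPT = 4.52
B_PYTHON = 0.17
B_CHATBOT = -2.22
B_INTERACTION = 0.60


def predict(python_experience: float, chatbot: int) -> float:
    """Fitted value; `chatbot` is assumed to be a 0/1 indicator."""
    return (
        INTERCEPT
        + B_PYTHON * python_experience
        + B_CHATBOT * chatbot
        + B_INTERACTION * python_experience * chatbot
    )


def chatbot_effect(python_experience: float) -> float:
    """Estimated difference between chatbot = 1 and chatbot = 0."""
    return B_CHATBOT + B_INTERACTION * python_experience


def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R^2 for n observations and k predictors (intercept excluded)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Three predictors: Python experience, chatbot introduction, their interaction.
adj = adjusted_r2(0.405, n=20, k=3)
print(round(adj, 3))

# Point estimate of the chatbot effect for a student with no Python experience.
print(round(chatbot_effect(0), 2))
```

The recomputed adjusted R² rounds to the reported 0.293. Because the interaction term is not statistically significant (p = 0.158), the point estimates from `chatbot_effect` at higher experience levels should be treated as descriptive only.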


© 2025 by the author. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hochmair, H.H. Use and Effectiveness of Chatbots as Support Tools in GIS Programming Course Assignments. ISPRS Int. J. Geo-Inf. 2025, 14, 156. https://doi.org/10.3390/ijgi14040156
