Abstract

The objective of this research was to investigate the impact of seasonality on urban land-cover mapping and to improve classification accuracy by using multi-season Sentinel-1A and GF-1 wide field view (WFV) images, and combinations of both types of images, in a subtropical monsoon-climate region in Southeast China. We classified multi-season Sentinel-1A and GF-1 WFV images, as well as combinations of both datasets, using a support vector machine (SVM) and a random forest (RF) classifier. Backscatter-intensity, texture, and interferometric-coherence images were extracted from the Sentinel-1A images, and different combinations of these Sentinel-1A-derived images were used to evaluate their ability to map urban land cover. The results showed that winter images performed better than those of any other season, while summer images performed the worst. Higher classification accuracy was achieved by using multi-season images, and satisfactory classification results were obtained when using Sentinel-1A images from only three seasons. The best classification result was achieved using a combination of Sentinel-1A data from all four seasons and GF-1 WFV data from winter, with an overall accuracy of 96.02% and a kappa coefficient of 0.9502. Texture images performed slightly better than backscatter-intensity images. Although the coherence data performed the worst, they were still able to distinguish urban impervious surfaces well. In addition, the overall classification accuracy of RF was better than that of SVM.

1. Introduction

Urbanization is one of the most dynamic processes in global land-use change [1,2] and a major force in determining land-use and land-cover change [3]. The level of urbanization in China is expected to reach about 50% in 2020. The accelerated urbanization process has negative impacts on the current economy and environment, such as global warming, traffic congestion, the deterioration of urban ecological environments, and so on. In order to avoid these negative consequences, sustainable urban development is necessary. The key is how to get up-to-date and reliable information about the current state of urban areas, such as urban land use and land cover (LULC) [2], which is important for urban planners and decision-makers. Remote sensing technology has become the main means of obtaining information on LULC because of its frequent and large area detection and rich spatial information. Optical remote sensing imagery is one of the most commonly used data sources for LULC change, and is widely used in urban mapping [4,5,6,7]. However, because optical remote sensing is susceptible to the effects of cloudy and rainy weather, accurate mapping using optical images is limited. It has been demonstrated that by using all-weather, day-and-night imaging, as well as canopy penetration and high-resolution capabilities [8,9,10], Synthetic Aperture Radar (SAR) images effectively overcome these limitations in land-cover classification.

Earlier studies that investigated LULC information via SAR data mostly used single-frequency and single-polarization images as data sources. However, the limited information derived from single-frequency and single-polarization SAR data leads to limited classification accuracy [11]. As a result of the continuous development of radar technology, ALOS-PALSAR, Terra-SAR, and RADARSAT-2 satellites were launched; some researchers used multi-frequency and multi-polarization SAR data for urban mapping [12,13]. Tan et al. [12] reported that multi-polarization achieved better classification results than single-polarization, and that HV contributed more than the other three polarizations. Pellizzeri et al. [13] used multi-temporal/multi-band SAR data for urban mapping and obtained satisfactory classification results. There are also some studies that show how the fusion of optical and SAR images improves the classification accuracy of urban LULC [14,15,16].

In addition to backscatter-intensity information, some other information can be extracted and applied to the classification of SAR data. This mainly includes texture and coherence information. Roychowdhury et al. [17] used a gray-level co-occurrence matrix (GLCM) algorithm to calculate texture elements for urban-area classification and found that the use of texture information effectively improved classification accuracy. Similar results were reported by Fard et al. [18], who used SAR and optical images for urban mapping and found that texture information was useful. Some researchers, in using the SAR-data coherence information to classify LULC, found that it is important for SAR-data classification [19,20]. Optical data can reflect the spectral-feature information of ground objects better than SAR data. Because of the different characteristics that SAR and optical remote sensing bring to the classification of LULC, some studies have used the combination of SAR and optical images to improve classification accuracy. Zhang et al. [21] combined SPOT-5 and ENVISAT data for urban LULC classification and found that the classification accuracy of combined SAR and optical data was higher than that of optical data alone. Similar results were reported by Aimaiti et al. [22], who used the combination of SAR and optical data to extract urban land-cover information and conduct urban-landscape analyses. The selection of a classifier is also an important factor. Random forests (RF) [23] and support vector machines (SVM) [24] have been used in many SAR-data classification studies [12,25,26], and both have been proven to perform well.

Although good progress has been made in the LULC classification of SAR data, some challenges remain. For example, although the effect of seasonality on mapping urban areas has been examined in previous studies, most used only optical data, and the main climate types studied were the tropical monsoon climate and the humid continental or oceanic climate. Zhu et al. [26] compared single-season and multi-season classification accuracy using Landsat Enhanced Thematic Mapper Plus (ETM+) images. Deng et al. [27] used Landsat-8 OLI data from different seasons to map urban impervious areas in different climate types and found that different climatic conditions favored different seasonal images. Scott et al. [28] used multi-temporal Landsat TM/ETM+ images to extract impervious surface areas in Wales, UK (oceanic climate). Tsutsumida et al. [29] studied the impervious surface-area classification of optical data in a tropical rainforest-climate region and found that sub-pixel classification was a better method than per-pixel classification. Myint et al. [30] used Landsat ETM+ data to study the classification of urban land cover and found that multiple endmember spectral mixture analysis (MESMA) was effective for desert cities. However, few studies have used SAR data from different seasons to examine the impact of seasonality on urban LULC classification.

The overall objective of this study was therefore to examine the impact of seasonality on the mapping of urban LULC and to improve classification by using Sentinel-1A images, GF-1 data, and the combination of both types of images in a subtropical monsoon-climate region in Southeast China. For this purpose, we obtained backscatter intensity, coherence, and texture images from Sentinel-1A imagery. First, we explored the impact of seasonality on urban LULC classification by using single-season Sentinel-1A and GF-1 data respectively, via the RF and SVM classifiers. Then, we investigated the performance of multi-season images (Sentinel-1A and GF-1 images) and a SAR-optical dataset (four-season Sentinel-1A images and winter GF-1 data) on the classification results separately. Finally, we assessed the contributions of the backscatter intensity, coherence, texture images, and their different combinations.

2. Study Area and Datasets

2.1. Study Area

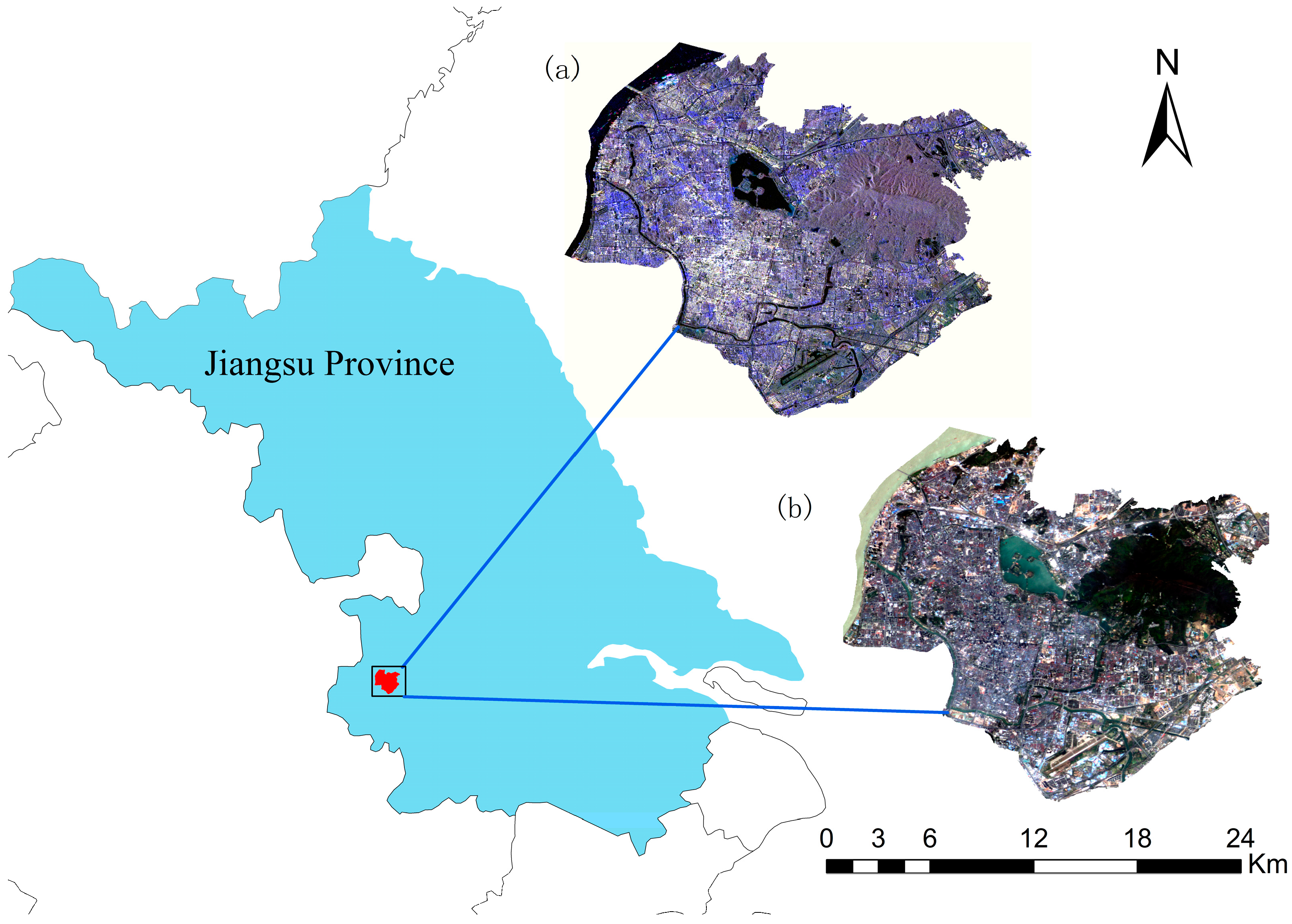

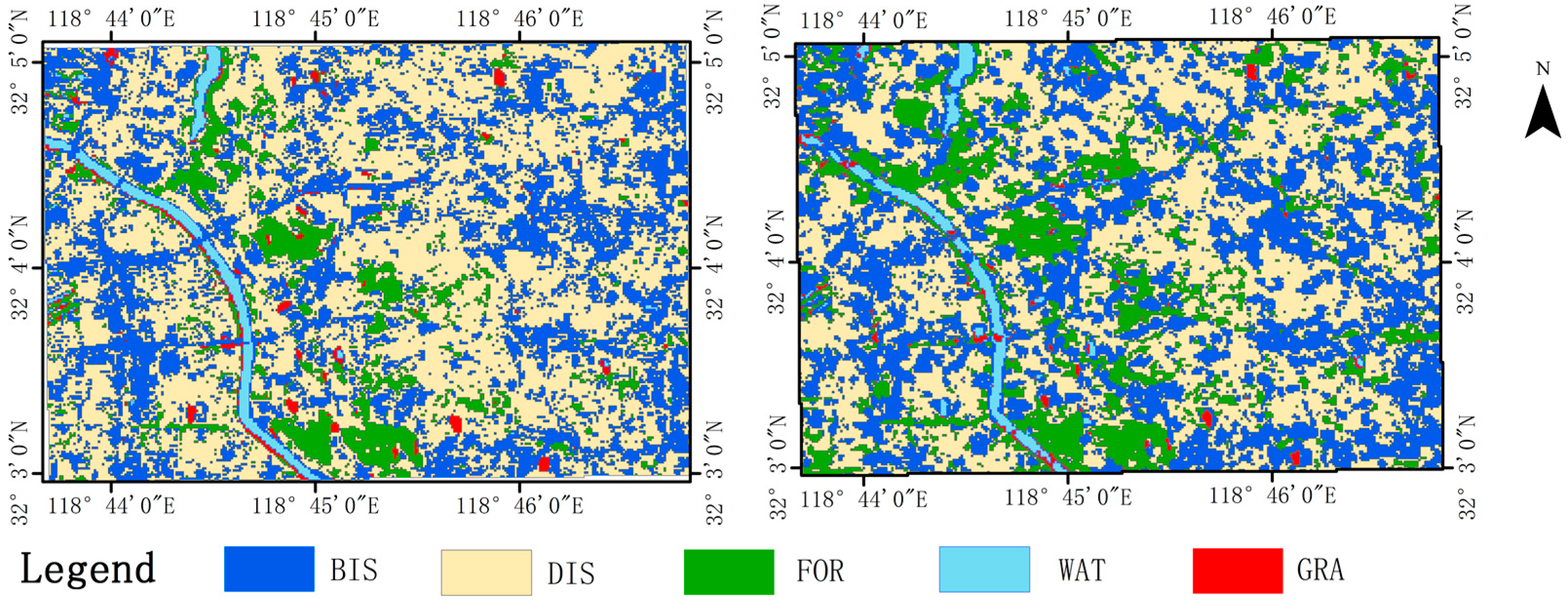

The study area was the central urban area of Nanjing (including Gulou District, Xuanwu District, Qinhuai District, and Jianye District), the capital of Jiangsu Province (see Figure 1). The city is located in the Yangtze River Delta, which is the fastest-expanding metropolitan area in eastern China due to large-scale migration from neighboring rural areas. The city has a total area of 6587 km² and a resident population of 8.27 million; it lies between latitudes 31°14′ N and 32°37′ N and longitudes 118°22′ E and 119°14′ E. It has a subtropical monsoon climate, with an average rainfall of 1090.4 mm and an annual average temperature of 15.4 °C. Its topography is complex, with low mountains and hills accounting for about 60.8% of the total city area (plains, rivers, and lakes account for about 39.2%).

Figure 1.

The research area, located in the Yangtze River Delta in eastern China; and an overview of the Sentinel-1A and GF-1 data: (a) The Sentinel-1A composite image (R: 22 December 2016 VH polarization, G: 11 October 2016 VH polarization, B: 12 August 2016 VV polarization); (b) The GF-1 WFV composite image (28 March 2016, RGB = 321).

Rapid urbanization has brought great pressure to the ecological environment, resulting in a series of problems, such as water shortage, air pollution, and desertification. In order to address the various ensuing socio-economic and ecological problems, it is important to monitor Nanjing’s dynamic urbanization process.

2.2. Sentinel-1A Data

Sentinel-1 is an important part of the Global Monitoring for Environment and Security (GMES) [31], co-sponsored by the European Commission and the European Space Agency. The Sentinel 1 mission includes Sentinel-1A and Sentinel-1B satellites. The first satellite (Sentinel-1A) was launched on 3 April 2014, while the second satellite (Sentinel-1B) was launched on 25 April 2016. As part of the European Union’s Copernicus Programme, each satellite carries an imaging C-band SAR instrument (5.405 GHz), conducts a 12-day repeat orbit cycle, and has a total of four operational modes: Stripmap mode (SM), Extra Wide Swath mode (EWS), Interferometric Wide Swath mode (IWS), and Wave mode (WM). In this study, we obtained four Sentinel-1A images, corresponding to the four seasons. Table 1 shows specific information about these images, such as the acquisition dates and the operational modes.

Table 1.

Detailed information on the Sentinel-1A images acquired in this study.

2.3. GF-1 Data

The GaoFen-1 (GF-1) satellite was launched in April 2013 and carries two panchromatic/multi-spectral (P/MS) and four wide field view (WFV) cameras [32]. GF-1 WFV data have a large swath (800 km) and a spatial resolution of 16 m, with four spectral channels in the visible and near-infrared (NIR) spectral domains, ranging from 450 to 890 nm [33]. GF-1 P/MS data have one panchromatic band and four multispectral bands with spatial resolutions of 2 m and 8 m, respectively [34]. We obtained four GF-1 WFV images corresponding to the four seasons (a spring image from 28 March 2016, a summer image from 12 September 2016, an autumn image from 11 November 2016, and a winter image from 13 January 2017). In addition, a GF-1 P/MS image with a spatial resolution of 2 m, acquired on 17 June 2016, was used as reference data. All of these images were cloud-free (0% cloud coverage).

2.4. Ground Reference Data

We identified five land-cover types in our study: dark impervious surface (DIS), bright impervious surface (BIS), forest (FOR), water (WAT), and grass (GRA). We randomly extracted sample data for the five classes in the study area by visually interpreting the GF-1 P/MS image and referring to Google Earth images. Fifty percent of these samples were selected as training samples by stratified random sampling, and the remaining 50% were used to validate the results and calculate their accuracy (Table 2).
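The 50/50 stratified split described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function name `stratified_split`, the fixed random seed, and the per-class sample counts are assumptions made for the example and do not reproduce the counts in Table 2.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.5, seed=0):
    """Split sample indices into training and validation sets, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, validation = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)                      # random order within the class
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])               # first part -> training
        validation.extend(indices[cut:])          # remainder -> validation
    return sorted(train), sorted(validation)


# Hypothetical labels: 40 samples per class (illustrative only).
classes = ["DIS", "BIS", "FOR", "WAT", "GRA"]
labels = [c for c in classes for _ in range(40)]
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))
```

Splitting within each class keeps the class proportions of the training and validation sets equal to those of the full sample set.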

Table 2.

Numbers of training and validation data used in urban land cover classification.

Both the overall accuracy and the kappa coefficient were calculated from the confusion matrix, and the F1 measure (Equation (1)) was computed to evaluate the classification accuracy of each class. Overall accuracy is computed by dividing the total number of correctly classified pixels by the total number of pixels in the validation dataset [35,36]. As the harmonic mean of the producer’s and user’s accuracy [37], the F1 measure is considered to be more meaningful than the kappa coefficient and the overall accuracy [38]:

F1 = 2 × PA × UA / (PA + UA), (1)

where PA and UA are the producer’s accuracy and the user’s accuracy of a class, respectively.
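As a worked illustration of these three measures, the sketch below derives the overall accuracy, the kappa coefficient, and the per-class F1 measure from a confusion matrix. The function name `accuracy_metrics` and the two-class matrix are hypothetical, and the code assumes that every class has at least one reference pixel and one mapped pixel.

```python
def accuracy_metrics(cm):
    """cm[i][j] = validation pixels of reference class i assigned to class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(n)]
    row_sums = [sum(cm[i]) for i in range(n)]                       # reference totals
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # mapped totals

    overall = sum(diag) / total
    # Chance agreement expected from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (overall - expected) / (1 - expected)

    f1 = []
    for i in range(n):
        pa = diag[i] / row_sums[i]   # producer's accuracy
        ua = diag[i] / col_sums[i]   # user's accuracy
        f1.append(2 * pa * ua / (pa + ua))
    return overall, kappa, f1


# Hypothetical two-class confusion matrix (not data from this study).
cm = [[45, 5], [10, 40]]
overall, kappa, f1 = accuracy_metrics(cm)
print(overall, kappa, f1)
```

For this toy matrix the overall accuracy is 0.85 and the kappa coefficient is 0.70, which shows how kappa discounts the agreement expected by chance.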

3. Methods

3.1. Satellite Data Pre-Processing

The Sentinel-1A images were preprocessed using SARscape 5.2 software; preprocessing included multi-looking, registration, speckle filtering, geocoding, and radiometric calibration. We used a Lee filter with a 3 × 3 window [39] to reduce the speckle noise in the Sentinel-1A data. The SAR images were geocoded using the 3-arcsecond SRTM DEM, and the digital numbers (DN) were converted to backscatter coefficients (σ°) on a decibel (dB) scale, with a spatial resolution of 20 m.
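To make the speckle-filtering and dB-conversion steps concrete, the following sketch applies a basic Lee filter with a 3 × 3 window to a small intensity image and converts a linear value to decibels. It is a textbook formulation under stated assumptions, not the SARscape implementation: the equivalent number of looks (`enl`) is a hypothetical parameter that the text does not report, border pixels use a truncated window, and the input is assumed to be already calibrated to linear backscatter.

```python
import math


def lee_filter(image, enl=1.0, size=3):
    """Basic Lee filter on a 2-D list of linear-scale intensities.

    The squared speckle variation coefficient is assumed to be 1 / enl.
    """
    rows, cols = len(image), len(image[0])
    half = size // 2
    sigma_v2 = 1.0 / enl
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [image[i][j]
                      for i in range(max(0, r - half), min(rows, r + half + 1))
                      for j in range(max(0, c - half), min(cols, c + half + 1))]
            mean = sum(window) / len(window)
            var = sum((v - mean) ** 2 for v in window) / len(window)
            if var > 0:
                # Weight near 0 in homogeneous areas, near 1 at strong edges.
                weight = max(0.0, 1.0 - (mean ** 2 * sigma_v2) / var)
            else:
                weight = 0.0
            out[r][c] = mean + weight * (image[r][c] - mean)
    return out


def to_db(linear_value):
    """Convert a linear backscatter coefficient to the decibel scale."""
    return 10.0 * math.log10(linear_value)


# Hypothetical 3 x 3 patch with one bright scatterer in the centre.
patch = [[1.0, 1.0, 1.0], [1.0, 10.0, 1.0], [1.0, 1.0, 1.0]]
filtered = lee_filter(patch)
print(filtered[1][1], to_db(0.1))
```

The filter replaces each pixel with a blend of the local mean and the observed value, so homogeneous areas are smoothed while strong point targets are partly preserved.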

The GF-1 images were preprocessed using ENVI 5.3 software; preprocessing included radiometric calibration, atmospheric correction, and geometric correction. For radiometric calibration, the DN values of the original image were converted to the surface spectral reflectance [32]. The atmospheric correction of the GF-1 data was performed using the ENVI FLAASH model. Finally, both the Sentinel-1A and GF-1 images were geometrically rectified at a spatial resolution of 20 m, using 25 ground control points, with a root-mean-square error (RMSE) below 0.5 pixels. We obtained the ground coordinates for these points from the 1:10,000 LULC map developed by the Nanjing Institute of Surveying and Geotechnical Investigation.

3.2. Feature Sets

3.2.1. Texture Features

Texture is an effective representation of spatial relationships [40] and is widely used in the interpretation of remote-sensing images. It has been widely considered to be important to the improvement of SAR image based urban-classification accuracy [41,42,43,44]. The gray-level co-occurrence matrix (GLCM) is both an effective and commonly used texture measure. In this study, we used GLCM in order to obtain SAR’s texture features. These texture features included mean, variance, entropy, angular second moment, contrast, correlation, dissimilarity, and homogeneity.
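The GLCM texture measures listed above can be illustrated on a small quantized patch. The sketch below is a minimal example under assumptions that the text does not specify: a single horizontal offset of one pixel, a symmetric and normalized matrix, four gray levels, and the helper names `glcm` and `texture_features`. The window size, offsets, and quantization used in the study are not reported here and may differ.

```python
import math


def glcm(patch, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(patch), len(patch[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = patch[r][c], patch[r2][c2]
                counts[a][b] += 1
                counts[b][a] += 1      # count both directions -> symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Eight texture measures computed from a normalized, symmetric GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    variance = sum((i - mean) ** 2 * v for i, _, v in cells)
    covariance = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "mean": mean,
        "variance": variance,
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "angular_second_moment": sum(v * v for _, _, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "correlation": covariance / variance if variance > 0 else 0.0,
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }


# Hypothetical 4 x 4 patch already quantized to gray levels 0..3.
patch = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
features = texture_features(glcm(patch, levels=4))
print(features["contrast"], features["angular_second_moment"])
```

In practice these measures are computed in a moving window over each backscatter band, producing one texture image per measure.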

3.2.2. Coherence Features

SAR interferometry, which exploits the phase differences between SAR images acquired at different times, is a powerful quantitative tool for land-cover analysis. As a correlation coefficient, coherence can detect small surface changes over a period of time [20]. Coherence values range between 0 and 1; a high coherence indicates that the change is small or non-existent, whereas a low coherence signifies a substantial change. In this study, we derived six Sentinel-1A coherence images, computed using SARscape 5.2 software. The images were obtained using the following InSAR data pairs: (1) 14 April 2016 and 12 August 2016; (2) 12 August 2016 and 11 October 2016; and (3) 11 October 2016 and 22 December 2016.
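The sketch below shows the standard sample estimator of coherence magnitude for one estimation window, which makes the 0-to-1 range described above explicit. It is a generic textbook estimator and not necessarily what SARscape computes; the function name `coherence`, the window contents, and the assumption that the two single-look complex images are already co-registered (with any topographic phase removed) are illustrative.

```python
import math


def coherence(window_1, window_2):
    """Sample coherence magnitude of two co-registered complex windows."""
    cross = sum(a * b.conjugate() for a, b in zip(window_1, window_2))
    power_1 = sum(abs(a) ** 2 for a in window_1)
    power_2 = sum(abs(b) ** 2 for b in window_2)
    return abs(cross) / math.sqrt(power_1 * power_2)


# Hypothetical complex pixel values from two acquisition dates.
stable = [1 + 1j, 2 - 1j, 0.5 + 2j, -1 + 0.5j]
# Same scene with a constant phase shift: the scatterers are unchanged.
shifted = [value * 1j for value in stable]
# Unrelated signal: the scatterers changed between the acquisitions.
changed = [1 + 0j, -1 + 0j, 1 + 0j, -1 + 0j]

print(coherence(stable, shifted))                          # close to 1
print(coherence([1 + 0j, 1 + 0j], [1 + 0j, -1 + 0j]))      # 0
print(coherence(stable, changed))                          # between 0 and 1
```

A stable target such as a building keeps its phase relationship between acquisitions and yields values near 1, while water or vegetation decorrelates and yields values near 0.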

3.2.3. Feature Combination

In order to both evaluate the impact of seasonality on urban land-cover classification using Sentinel-1A and GF-1 imagery and explore how different Sentinel-1A-derived information identifies urban land-cover types, the following Sentinel-1A and GF-1 data feature combinations were considered (Table 3).

Table 3.

Different combinations of Sentinel-1A-derived information and GF-1 images.

3.3. Classifiers

We selected two classifiers, Support Vector Machine (SVM) and Random Forest (RF), for the urban land-cover classification.

The SVM classifier, proposed by Vapnik in 1995, is a machine-learning method based on structural risk minimization. It has been widely used in urban land-cover classification and has achieved high classification accuracy [45,46]. The SVM algorithm separates two classes by fitting an optimal linear separating hyperplane (OSH) to their training data in a multidimensional feature space [46,47]. This study employed the radial basis function (RBF) kernel; the kernel parameter gamma (G) and the penalty parameter (C) were set to 0.125 and 100, respectively.
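To show what the gamma parameter controls, the sketch below evaluates the RBF kernel, k(x, y) = exp(−gamma · ‖x − y‖²), with the reported value of 0.125. The function name `rbf_kernel` and the feature vectors are hypothetical. The penalty parameter C acts in the SVM optimization rather than in the kernel, so it appears only as a constant here. If a library such as scikit-learn were used, these settings would correspond to `SVC(kernel="rbf", gamma=0.125, C=100)`, although the software actually used for classification is not stated in the text.

```python
import math

GAMMA = 0.125   # RBF kernel parameter reported in the text
C = 100         # penalty parameter reported in the text (not used by the kernel)


def rbf_kernel(x, y, gamma=GAMMA):
    """Radial basis function kernel between two feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


# Hypothetical two-band feature vectors (for example, VV and VH backscatter in dB).
pixel_a = [-5.0, -11.0]
pixel_b = [-7.0, -13.0]
print(rbf_kernel(pixel_a, pixel_a))   # identical pixels -> 1.0
print(rbf_kernel(pixel_a, pixel_b))   # exp(-0.125 * 8) = exp(-1)
```

A larger gamma makes the similarity fall off faster with distance in feature space, which yields a more flexible but more easily overfitted decision boundary.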

The RF classifier is an ensemble learning technique based on multiple decision trees. A large number of decision trees are generated using resampling with replacement, with each tree being fitted to a different bootstrapped training sample and a randomly selected set of predictive variables [26,40,48]. The final classification is determined by a majority vote of the trees. Many studies have shown that the use of an RF classifier for urban land-cover classification can result in high accuracy [12,21]. For each RF classifier, the default number of 500 trees was grown, with the square root of the total number of features considered at each node.
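The three ingredients of the RF procedure described above (bootstrap resampling, a random feature subset of size equal to the square root of the number of features, and majority voting) can be sketched as follows. This is not a full random forest: the decision trees themselves are omitted, the helper names are hypothetical, and rounding the square root down is an assumption.

```python
import math
import random
from collections import Counter

N_TREES = 500   # number of trees reported in the text


def bootstrap_indices(n_samples, rng):
    """Draw n_samples training indices with replacement for one tree."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def features_per_split(n_features):
    """Number of candidate features per node: square root, rounded down."""
    return max(1, int(math.sqrt(n_features)))


def random_feature_subset(n_features, rng):
    """Randomly choose the candidate features examined at one node."""
    return rng.sample(range(n_features), features_per_split(n_features))


def majority_vote(tree_predictions):
    """Final class label: the label predicted by the most trees."""
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(0)
sample = bootstrap_indices(10, rng)        # one bootstrapped training sample
subset = random_feature_subset(16, rng)    # 4 of 16 hypothetical features
label = majority_vote(["BIS", "DIS", "BIS", "FOR", "BIS"])
print(len(sample), len(subset), label)
```

Because each tree sees a different bootstrap sample and different candidate features, the trees are decorrelated and their vote is more stable than any single tree.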

4. Results and Discussion

4.1. Analysis of Temporal Variables Used for Classification

In order to explore the feasibility of urban land-cover mapping using Sentinel-1A-derived information, the average coherence and backscatter values of the five urban land-cover classes were analyzed. The corresponding results are shown in Table 4 and Table 5, respectively.

Table 4.

Comparison of the average coherence values for each urban land cover class.

Table 5.

Comparison of the average backscatter values for each urban land cover class.

In Table 4, the coherence values of bright impervious surfaces were the highest (in the range of 0.529–0.625), while those of dark impervious surfaces were slightly lower. In contrast, the coherence values of water bodies were the lowest (in the range of 0.163–0.187). This is because water bodies consist of changing scattering centers as a result of waves; they are therefore very unstable [49]. Conversely, the backscatter property of urban buildings is more stable than that of non-manmade objects. Built-up structures had very high phase-stability properties and were still able to maintain a high coherence over long periods of time [50]. On the contrary, water, forest, and grass could become completely incoherent within a few days.

Compared with urban areas, the coherence values for forest and grass areas were lower: 0.188–0.209 and 0.178–0.339, respectively. The main causes of low coherence in vegetation were changes in both the vegetation canopy and the moisture content between the two SAR acquisitions [20].

In Table 5, the average backscatter values of dark impervious surfaces were the highest for VV polarization (in the range of −4.99 to −4.49), whereas for VH polarization they were lower (in the range of −11.26 to −10.86). The average backscatter values of bright impervious surfaces were slightly lower than those of dark impervious surfaces for both VV and VH polarizations, with average values of −6.65 to −4.50 and −11.98 to −11.46, respectively. In contrast, the average backscatter values of water bodies for both VV and VH polarizations were the lowest, with average values of −18.40 to −16.50 and −21.39 to −20.91, respectively. The main reason for the high-average backscatter values in urban areas was the predominance of single or double bounce from roof- or wall-ground structures and other metallic structures [51]. We can attribute very low backscatter values associated with water bodies to the specular reflection of water, which causes less backscattering towards the radar antenna [20,52]. Moreover, for both VH and VV polarizations, the backscatter values of forest areas were higher than those of grass areas.

The five urban land-cover classes therefore have different backscatter-intensity and interferometric-coherence characteristics, which can be very useful for the classification of urban land cover.

4.2. Urban Land-Cover Mapping

4.2.1. Classification Results Using a Single-Season Image

Table 6 shows the classification accuracy of the two classifiers for each land-cover class using single-season Sentinel-1A images. The classification results of RF were always better than those of SVM in terms of F1 measure, overall accuracy, and kappa coefficient. This indicates that RF is more suitable for urban land-cover classification when using a single-season SAR image. Classifications using the winter Sentinel-1A image produced the highest classification accuracy, with an overall accuracy of 87.82% for RF (kappa coefficient = 0.8470) and 81.30% for SVM (kappa coefficient = 0.7650), followed by autumn and spring. The summer Sentinel-1A image performed the worst, with an overall accuracy of 84.70% for RF and 75.07% for SVM. This shows that the performance of the winter SAR image was better than that of any other season in this subtropical monsoon-climate area. For BIS and GRA, the best performance came from the winter Sentinel-1A image, with an F1 measure of 88.57% and 87.18%, respectively; the poorest result was found in the spring Sentinel-1A image. For DIS and FOR, the spring Sentinel-1A image produced the best accuracy, with an F1 measure of 85.48% and 93.42%, respectively; the poorest accuracy came from the summer Sentinel-1A image. For WAT, the best performance for RF was found in the winter Sentinel-1A image (F1 measure = 86.08%), whereas for SVM it was found in the autumn Sentinel-1A image (F1 measure = 82.84%); the poorest performance came from the spring Sentinel-1A image.

Table 6.

Classification results using single-season Sentinel-1A images.

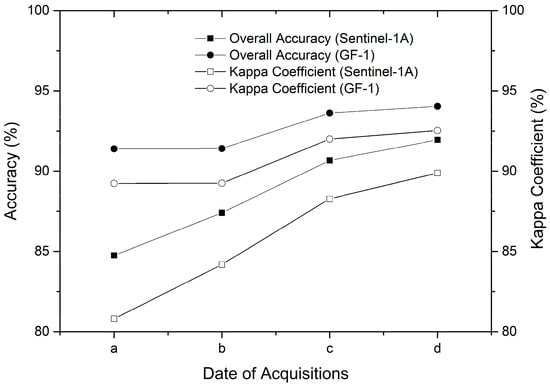

Table 7 presents the urban land-cover classification results of the two classifiers using single-season GF-1 images. Similarly, the performance of single-season GF-1 data using RF was better than that using SVM in terms of overall accuracy and kappa coefficient. However, in terms of F1 measure, SVM obtained better WAT and FOR classification results than RF. The overall classification results for the GF-1 data were similar to those for the Sentinel-1A data. The winter GF-1 image obtained the best accuracy among the four seasons, with an overall accuracy of 93.22% for RF (kappa coefficient = 0.9151) and 90.96% for SVM (kappa coefficient = 0.8868), followed by autumn and spring. The poorest result was found in the summer GF-1 image, which exhibited an overall accuracy of 90.46% for RF (kappa coefficient = 0.8807) and 86.71% for SVM (kappa coefficient = 0.8338). The classification results that used the single-season GF-1 data were better than those that used the corresponding single-season Sentinel-1A data. Consistent with previous studies, the classification accuracy of single-date optical images was higher than that of single-date SAR images [53,54]. For WAT and FOR, the best performance was found in the winter and autumn GF-1 data, with an F1 measure of 93.83% and 96.85%, respectively; the summer GF-1 image provided the poorest results. For BIS and DIS, the best accuracy was found in the winter GF-1 image, with an F1 measure of 90.37% and 89.43%, respectively; the poorest accuracy came from the summer GF-1 image. However, the latter also yielded the best classification result for GRA, with an F1 measure of 99.17%. This shows that images from different seasons are suitable for the classification of different types of urban land cover.

Table 7.

Classification results using a single-season GF-1 image.

In this subtropical monsoon-climate area, when using single-season SAR and optical data for urban land-cover classification, winter images performed better than autumn and spring images, which in turn performed better than summer images. In Nanjing, the rainy season mainly occurs between March and August; from late June to early July, the city enters the plum-rain season, with abundant rainfall and heat. When using summer images for urban land-cover classification, water can be mistaken for urban impervious surfaces due to the spectral similarity between water and dark impervious surfaces [27]. By contrast, Nanjing’s climate in winter is relatively dry, with less rain. Consequently, there is less spectral confusion during that season.

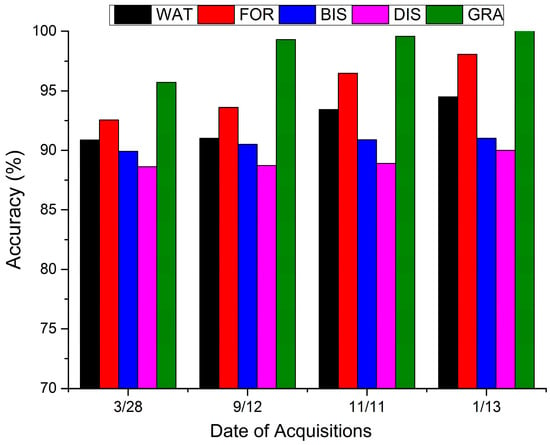

4.2.2. Incremental Classification Results Using Multi-Season Images

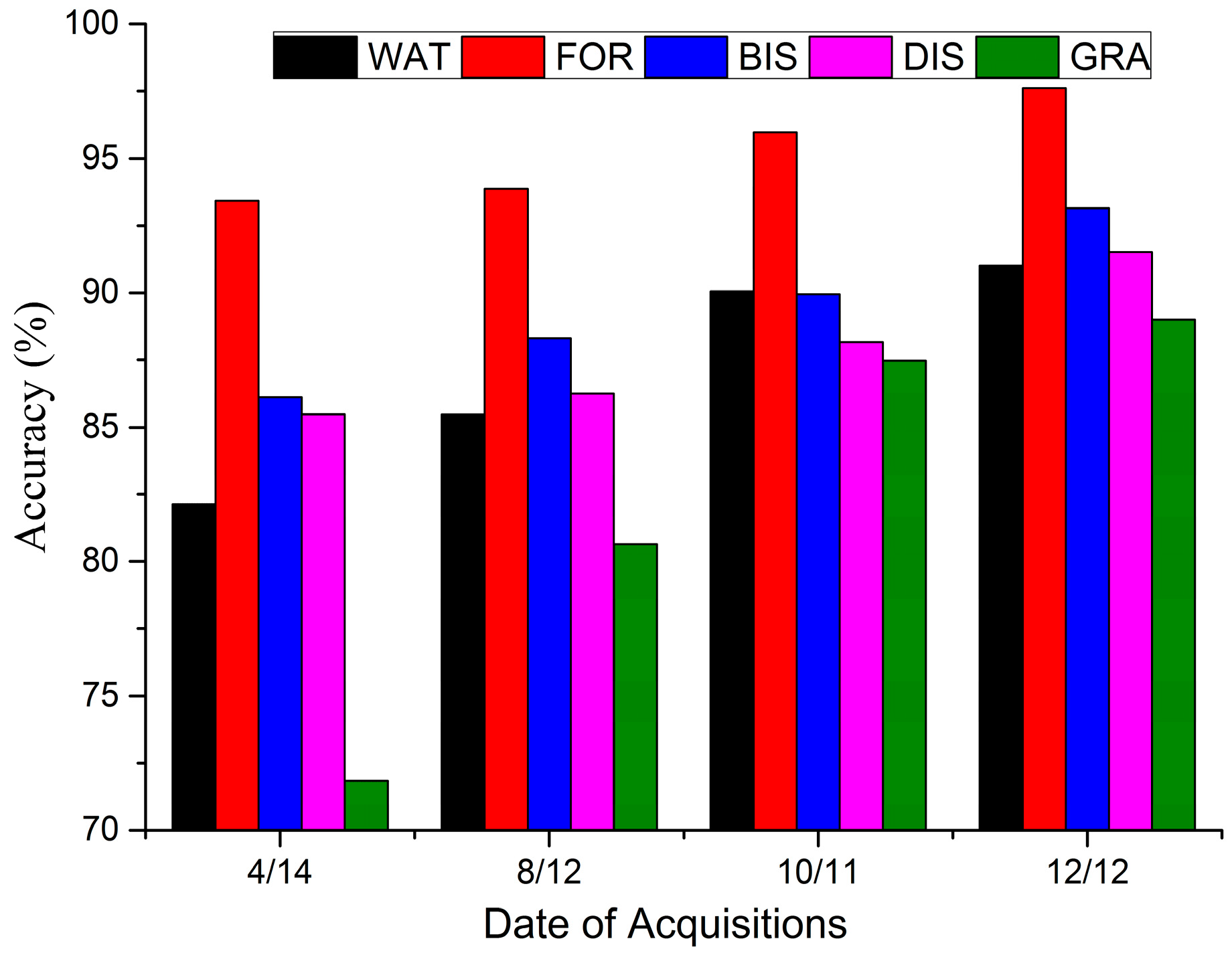

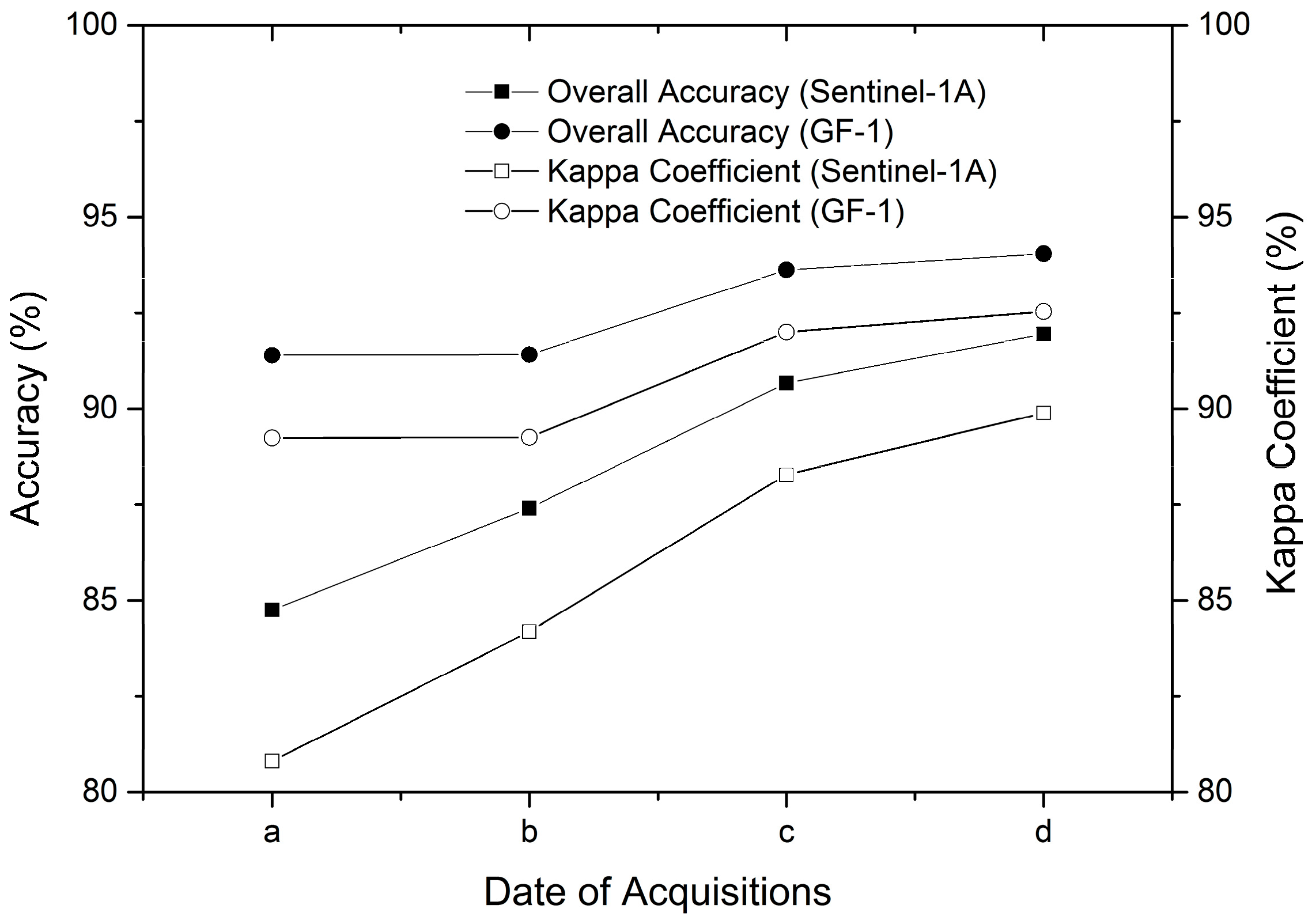

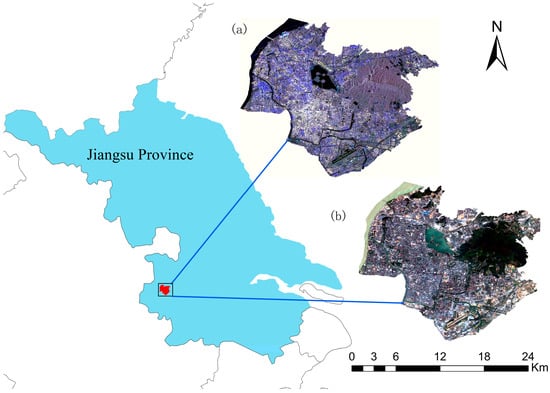

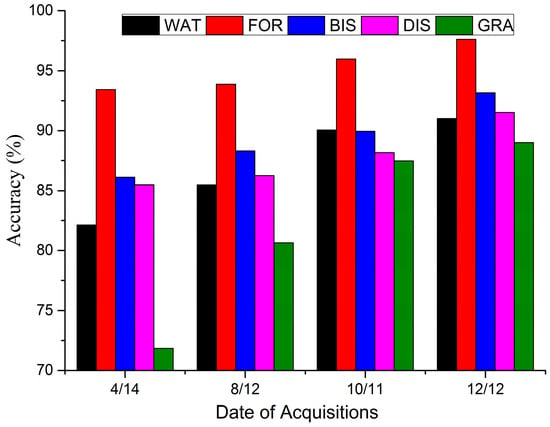

We conducted incremental classifications with seasonal combinations using Sentinel-1A and GF-1 data. The corresponding results are shown in Figure 2, Figure 3, and Figure 4. Incremental classification means that each new seasonal image was added to all of the previously available images; it was conducted using the RF classifier. Figure 3 shows that the classification accuracy with seasonal combinations using Sentinel-1A improved as more seasons were added. The best performance resulted from the combination of all four seasons, with an overall accuracy of 91.95% and a kappa coefficient of 0.8989. As shown in Figure 2, the F1 measure of each urban land-cover class also improved as seasonal images were added. This indicates that the use of multi-season SAR images can improve the classification accuracy of urban land cover, because multi-season SAR images provide more abundant radar-scattering information on ground objects. Deng et al. also showed that urban land-cover classification can be improved by using multi-season imagery [27]. For all seasonal combinations, the F1 measure of FOR was the highest, followed by BIS and WAT, while the classification result of GRA was the poorest. In addition, when using SAR images from only three combined seasons, the F1 measure of each urban land-cover class was higher than 85%, with an overall accuracy of 90.67% and a kappa coefficient of 0.8828. Some studies have shown that classification results are satisfactory when the classification accuracy is higher than 85% [55,56,57]. Therefore, optical images can be replaced by SAR images for urban land-cover classification, and SAR images that combine only three seasons can meet the classification accuracy requirements.

Figure 2.

Classification accuracy using different seasonal combinations of Sentinel-1A.

Figure 3.

Comparison of overall accuracy and kappa coefficient using different seasonal combinations. a: spring image; b: the combination of spring and summer images; c: the combination of spring, summer, and autumn images; d: the combination of the four season images.

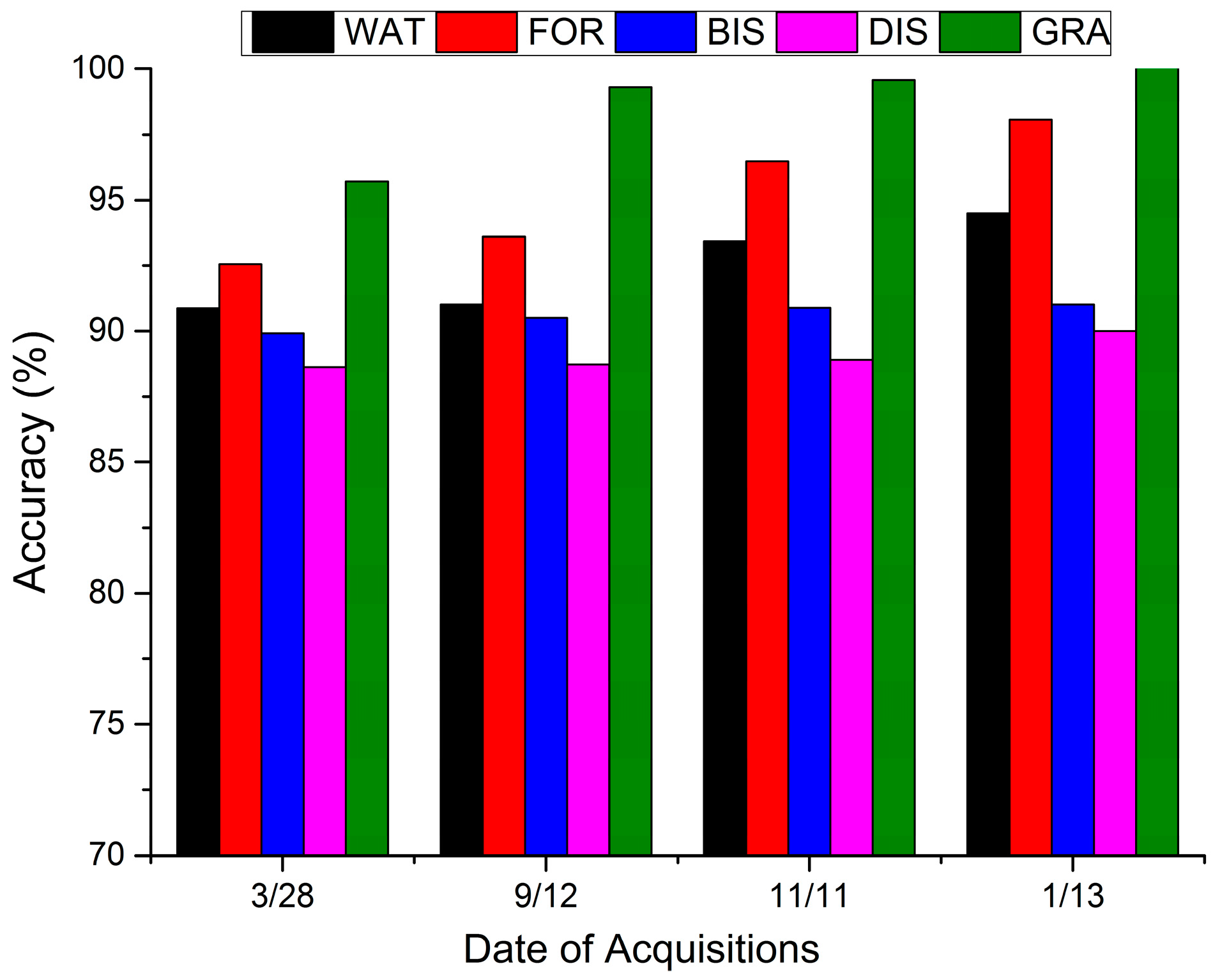

Figure 4.

Classification accuracy using different seasonal combinations of GF-1.

Figure 3 and Figure 4 show that the overall incremental classification results using GF-1 were similar to the Sentinel-1A classification results with different seasonal combinations. The incremental classification accuracy using GF-1 improved as more seasons were added and was higher than that of Sentinel-1A. The highest classification accuracy was achieved when using the four-season GF-1 images, with an overall accuracy of 94.04% and a kappa coefficient of 0.9253. Conversely, the lowest classification accuracy was obtained using a GF-1 image from only one season. The use of multi-season GF-1 images can therefore significantly improve the classification accuracy when compared to the use of a GF-1 image from only one season. Consistent with previous observations, the classification accuracy can be improved when using multi-temporal SAR and optical images [26,34,56,58]. Figure 4 shows that when more seasonal GF-1 images were used, the F1 measure of each urban land-cover type improved. However, contrary to the incremental classification results of Sentinel-1A, the F1 measure of GRA was the highest, followed by FOR and WAT. The classification accuracy for urban impervious surfaces (BIS and DIS) was markedly lower than that of other land-cover types. This shows that optical data are more suitable for vegetation classification, because they contain abundant vegetation spectral information.

4.2.3. Classification Results Using Sentinel-1A and GF-1 WFV Images

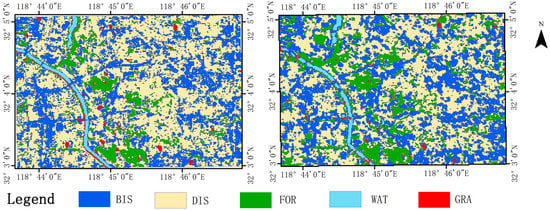

Because the winter GF-1 image was the most accurate of the four seasons, it was selected for combination with the four-season Sentinel-1A images. Table 8 shows the corresponding results using RF. The best classification results were obtained when using the combined GF-1 and Sentinel-1A images, with an overall accuracy of 96.02% and a kappa coefficient of 0.9502. Adding the winter GF-1 image increased the overall accuracy and kappa coefficient by 4.07% and 0.0513, respectively. This is because microwaves are more sensitive to canopy-structure features and SAR data contain more spatial-texture information, whereas optical data contain more spectral information. The SAR and optical images thus provided complementary information on the different types of urban land cover, thereby improving the classification accuracy. The importance of using both SAR and optical images has also been reported in several previous studies [15,59,60,61]. The highest F1 measure belonged to GRA (F1 measure = 99.94%), followed by FOR, WAT, and BIS. However, at 90.37%, the F1 measure of DIS was the lowest, similar to the results reported above. This shows that DIS is confused with other classes more often than the other types of urban land cover are. Figure 5 presents samples of the final classification maps.

Table 8.

Classification results using the combination of the four-season Sentinel-1A and winter GF-1 data.

Figure 5.

Classification maps using the RF classifier. (Left) Using the combination of the four-season Sentinel-1A and winter GF-1 data. (Right) Using the four-season Sentinel-1A data.

4.2.4. Classification Results Using Different Sentinel-1A-Derived Information

In order to fully explore how Sentinel-1A-derived information can be used for urban land-cover classification, different combinations of Sentinel-1A-derived information were tested using the RF classifier (Table 9). As shown in Table 9, all of the overall accuracy results obtained using a single type of Sentinel-1A-derived information were lower than 85%, which indicates that none of the three single types of Sentinel-1A-derived information could meet the urban land-cover classification accuracy requirements. Among them, the performance of T was better than that of VV + VH and C. C’s overall classification accuracy was the lowest, although its F1 measures for urban impervious surfaces (BIS and DIS) were markedly higher than those for other land-cover types. This is because the coherence values of BIS and DIS were significantly higher than those of other types, and BIS and DIS are highly separable. This shows that the texture and intensity information of Sentinel-1A data is very important for urban land-cover classification, and that the coherence information is more suitable for the classification of urban impervious surfaces.

Table 9.

Classification results using different combinations of Sentinel-1A-derived information (using the RF classifier).

C+T achieved the highest classification accuracy (with an overall accuracy of 89.24% and a kappa coefficient of 0.8650), and all its F1 measures were above 85%. Consequently, satisfactory classification results can be obtained by using combined texture and coherence information from Sentinel-1A data. When adding coherence images to the backscatter-intensity information, the overall accuracy and kappa coefficient improved by 8.22% and 0.1029, respectively. Improvements were also observed when the texture information was added to the backscatter-intensity images, with the overall accuracy increasing from 79.32% to 84.42% and the kappa coefficient from 0.7408 to 0.8046. This indicates that these Sentinel-1A-derived images, when used in a complementary way, can effectively improve classification accuracy. Some previous studies have also reported on the importance of using the coherence and texture information of SAR data [17,19,40].

5. Conclusions

In this study, we classified Sentinel-1A data and GF-1 images acquired during all four seasons in a subtropical monsoon-climate region of Southeast China using both SVM and RF classifiers. The following conclusions can be drawn from this study: (1) In this subtropical monsoon-climate area, for both Sentinel-1A and GF-1 data, the winter images performed better than those of any other season, while the summer images performed the worst. Although, in terms of the F1 measure, SVM obtained better WAT and FOR classification results than RF did using single-season GF-1 data, the overall classification accuracy of RF was still better than that of SVM; (2) Classification accuracy was improved by using multi-season images, and the best performance resulted from combining all four seasons. When using Sentinel-1A images from only three seasons, the F1 measure of each urban land-cover type was higher than 85%, with an overall accuracy of up to 90.67% and a kappa coefficient of up to 0.8828. This shows that Sentinel-1A images can replace optical images for urban mapping; (3) The best result was obtained by combining GF-1 and Sentinel-1A images, with an overall accuracy of up to 96.02% and a kappa coefficient of up to 0.9502. When the winter GF-1 image was added to the four-season Sentinel-1A images, the overall accuracy and kappa coefficient improved by 4.07% and 0.0513, respectively. This indicates that SAR data and optical images provide complementary information that improves classification accuracy; and (4) The classification results of the textures were slightly better than those of the backscatter-intensity images. Although the coherence data performed the worst, it was still able to distinguish urban impervious surfaces (BIS and DIS) well. When using different combinations of Sentinel-1A-derived information, the highest classification accuracy was obtained with the combination of coherence and texture images, with all of the F1 measures above 85% (an overall accuracy of 89.24% and a kappa coefficient of 0.8650).

Because optical images are susceptible to cloudy and rainy weather, our results apply to more regions than those of previous studies that used optical images to investigate the impact of seasonality on urban land-cover mapping. The method used in this study can also be applied to other types of land cover. In future work, we plan to use SAR data to investigate the impact of seasonality on urban land-cover mapping under further climate types and to compare the results between them.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (No. 41771247) and the Priority Academic Program Development of the Jiangsu Higher Education Institutions.

Author Contributions

Tao Zhou conceived and designed this study and wrote the paper. Jianjun Pan provided comments and suggestions. Tao Zhou performed the experiments and conducted the analyses. Tao Zhou, Meifang Zhao and Chuanliang Sun revised the manuscript draft.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Esch, T.; Thiel, M.; Schenk, A.; Roth, A.; Muller, A.; Dech, S. Delineation of urban footprints from TerraSAR-X data by analyzing speckle characteristics and intensity information. IEEE Trans. Geosci. Remote Sens. 2010, 48, 905–916.

- Niu, X.; Ban, Y. Multi-temporal RADARSAT-2 polarimetric SAR data for urban land-cover classification using an object-based support vector machine and a rule-based approach. Int. J. Remote Sens. 2013, 34, 1–26.

- Sun, Z.C.; Li, X.W.; Fu, W.X.; Li, Y.K.; Tang, D.S. Long-term effects of land use/land cover change on surface runoff in urban areas of Beijing, China. J. Appl. Remote Sens. 2013, 8, 084596.

- Deng, C.; Wu, C. Examining the impacts of urban biophysical compositions on surface urban heat island: A spectral unmixing and thermal mixing approach. Remote Sens. Environ. 2013, 131, 262–274.

- Weng, Q.H. Remote sensing of impervious surfaces in the urban areas: Requirements, methods, and trends. Remote Sens. Environ. 2012, 117, 34–49.

- Van de Voorde, T.; Jacquet, W.; Canters, F. Mapping form and function in urban areas: An approach based on urban metrics and continuous impervious surface data. Landsc. Urban Plan. 2011, 102, 143–155.

- Corbane, C.; Faure, J.-F.; Baghdadi, N.; Villeneuve, N.; Petit, M. Rapid urban mapping using SAR/optical imagery synergy. Sensors 2008, 8, 7125–7143.

- Forkuor, G.; Conrad, C.; Thiel, M.; Ullmann, T.; Zoungrana, E. Integration of optical and synthetic aperture radar imagery for improving crop mapping in Northwestern Benin, West Africa. Remote Sens. 2014, 6, 6472–6499.

- Oyoshi, K.; Tomiyama, N.; Okumura, T.; Sobue, S.; Sato, J. Mapping rice-planted areas using time-series synthetic aperture radar data for the Asia-RiCE activity. Paddy Water Environ. 2016, 14, 463–472.

- Hong, S.; Jang, H.; Kim, N.; Sohn, H.-G. Water area extraction using RADARSAT SAR imagery combined with Landsat imagery and terrain information. Sensors 2015, 15, 6652–6667.

- Li, X.; Yeh, A.G. Multitemporal SAR images for monitoring cultivation systems using case-based reasoning. Remote Sens. Environ. 2004, 90, 524–534.

- Tan, W.; Liao, R.; Du, Y.; Lu, J.; Li, J. Improving urban impervious surface classification by combining Landsat and PolSAR images: A case study in Kitchener-Waterloo, Ontario, Canada. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1917–1920.

- Pellizzeri, T.M.; Gamba, P.; Lombardo, P.; Dell’Acqua, F. Multitemporal/multiband SAR classification of urban areas using spatial analysis: Statistical versus neural kernel-based approach. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2338–2353.

- Zhang, H.; Lin, H.; Li, Y. Impacts of feature normalization on optical and SAR data fusion for land use/land cover classification. IEEE Geosci. Remote Sens. Lett. 2017, 12, 1061–1065.

- Lopez, C.V.; Anglberger, H.; Stilla, U. Fusion of very high resolution SAR and optical images for the monitoring of urban areas. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, UAE, 6–8 March 2017.

- Teimouri, M.; Mokhtarzade, M.; Zoej, M.J.V. Optimal fusion of optical and SAR high-resolution images for semiautomatic building detection. Gisci. Remote Sens. 2016, 53, 45–62.

- Roychowdhury, K. Comparison between Spectral, Spatial and Polarimetric Classification of Urban and Periurban Landcover Using Temporal Sentinel-1 Images. In Proceedings of the XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016; pp. 789–796.

- Fard, T.A.; Hasanlou, M.; Arefi, H. Classifier fusion of high-resolution optical and synthetic aperture radar (SAR) satellite imagery for classification in urban area. In Proceedings of the ISPRS—International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Tehran, Iran, 21 October 2014; pp. 25–29.

- Sonobe, R.; Tani, H.; Wang, X.; Kobayashi, N.; Shimamura, H. Discrimination of crop types with TerraSAR-X-derived information. Phys. Chem. Earth Parts A/B/C 2015, 83–84, 2–13.

- Parihar, N.; Das, A.; Rathore, V.S.; Nathawat, M.S.; Mohan, S. Analysis of L-band SAR backscatter and coherence for delineation of land-use/land-cover. Int. J. Remote Sens. 2014, 35, 6781–6798.

- Zhang, H.; Zhang, Y.; Lin, H. Urban land cover mapping using random forest combined with optical and SAR data. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Munich, Germany, 22–27 July 2012; pp. 6809–6812.

- Aimaiti, Y.; Kasimu, A.; Jing, G. Urban landscape extraction and analysis based on optical and microwave ALOS satellite data. Earth Sci. Inf. 2016, 9, 1–11.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Vapnik, V.N.; Vapnik, V. Statistical Learning Theory; Wiley: Hoboken, NJ, USA, 1998.

- Ban, Y.; Jacob, A. Object-based fusion of multitemporal multiangle ENVISAT ASAR and HJ-1b multispectral data for urban land-cover mapping. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1998–2006.

- Zhu, Z.; Woodcock, C.E.; Rogan, J.; Kellndorfer, J. Assessment of spectral, polarimetric, temporal, and spatial dimensions for urban and peri-urban land cover classification using Landsat and SAR data. Remote Sens. Environ. 2012, 117, 72–82.

- Deng, C.; Li, C.; Zhu, Z.; Lin, W.; Xi, L. Evaluating the impacts of atmospheric correction, seasonality, environmental settings, and multi-temporal images on subpixel urban impervious surface area mapping with Landsat data. ISPRS J. Photogramm. Remote Sens. 2017, 133, 89–103.

- Scott, D.; Petropoulos, G.P.; Moxley, J.; Malcolm, H. Quantifying the physical composition of urban morphology throughout Wales based on the time series (1989–2011) analysis of Landsat TM/ETM plus images and supporting GIS data. Remote Sens. 2014, 6, 11731–11752.

- Tsutsumida, N.; Comber, A.; Barrett, K.; Saizen, I.; Rustiadi, E. Sub-pixel classification of MODIS EVI for annual mappings of impervious surface areas. Remote Sens. 2016, 8, 143.

- Myint, S.W.; Okin, G.S. Modelling land-cover types using multiple endmember spectral mixture analysis in a desert city. Int. J. Remote Sens. 2009, 30, 2237–2257.

- Schönfeldt, U.; Braubach, H. Electrical architecture of the SENTINEL-1 SAR antenna subsystem. In Proceedings of the 2008 7th European Conference on Synthetic Aperture Radar (EUSAR), Friedrichshafen, Germany, 2–5 June 2008.

- Jia, K.; Liang, S.; Gu, X.; Baret, F.; Wei, X.; Wang, X.; Yao, Y.; Yang, L.; Li, Y. Fractional vegetation cover estimation algorithm for Chinese GF-1 wide field view data. Remote Sens. Environ. 2016, 177, 184–191.

- Chen, X.; Xing, J.; Liu, L.; Li, Z.; Mei, X.; Fu, Q.; Xie, Y.; Ge, B.; Li, K.; Xu, H. In-flight calibration of GF-1/WFV visible channels using Rayleigh scattering. Remote Sens. 2017, 9, 513.

- Zhou, T.; Pan, J.; Zhang, P.; Wei, S.; Han, T. Mapping winter wheat with multi-temporal SAR and optical images in an urban agricultural region. Sensors 2017, 17, 1210.

- Clevers, J. Assessing the Accuracy of Remotely Sensed Data—Principles and Practices, Russell G. Congalton, Kass Green, Second edition, CRC Press, Taylor & Francis Group, Boca Raton, FL (2009), 183 pp., Price: $99.95, ISBN: 978-1-4200-5512-2. Int. J. Appl. Earth Obs. Geoinform. 2009, 11, 448–449.

- Lillesand, T.M.; Kiefer, R.W.; Chipman, J.W. Remote Sensing and Image Interpretation, 5th ed.; Wiley: Hoboken, NJ, USA, 2015.

- Schuster, C.; Förster, M.; Kleinschmit, B. Testing the red edge channel for improving land-use classifications based on high-resolution multi-spectral satellite data. Int. J. Remote Sens. 2012, 33, 5583–5599.

- Schuster, C.; Schmidt, T.; Conrad, C.; Kleinschmit, B.; Förster, M. Grassland habitat mapping by intra-annual time series analysis—Comparison of RapidEye and TerraSAR-X satellite data. Int. J. Appl. Earth Obs. Geoinform. 2015, 34, 25–34.

- Arsenault, H.H. Speckle suppression and analysis for synthetic aperture radar images. Opt. Eng. 1985, 25, 636–643.

- Du, P.J.; Samat, A.; Waske, B.; Liu, S.C.; Li, Z.H. Random forest and rotation forest for fully polarized SAR image classification using polarimetric and spatial features. ISPRS J. Photogramm. Remote Sens. 2015, 105, 38–53.

- Voisin, A.; Krylov, V.A.; Moser, G.; Serpico, S.B.; Zerubia, J. Classification of very high resolution SAR images of urban areas using copulas and texture in a hierarchical Markov random field model. IEEE Geosci. Remote Sens. 2013, 10, 96–100.

- Gamba, P.; Aldrighi, M. SAR data classification of urban areas by means of segmentation techniques and ancillary optical data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1140–1148.

- Zhang, H.; Lin, H.; Li, Y.; Zhang, Y.; Fang, C. Mapping urban impervious surface with dual-polarimetric SAR data: An improved method. Landsc. Urban Plan. 2016, 151, 55–63.

- Zhang, H.; Xu, R. Exploring the optimal integration levels between SAR and optical data for better urban land cover mapping in the Pearl River Delta. Int. J. Appl. Earth Obs. Geoinform. 2018, 64, 87–95.

- Jia, K.; Wei, X.; Gu, X.; Yao, Y.; Xie, X.; Li, B. Land cover classification using Landsat 8 operational land imager data in Beijing, China. Geocarto Int. 2014, 29, 941–951.

- Waske, B.; Benediktsson, J.A. Fusion of support vector machines for classification of multisensor data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3858–3866.

- Navarro, A.; Rolim, J.; Miguel, I.; Catalao, J.; Silva, J.; Painho, M.; Vekerdy, Z. Crop monitoring based on SPOT-5 take-5 and Sentinel-1A data for the estimation of crop water requirements. Remote Sens. 2016, 8, 525.

- Hutt, C.; Koppe, W.; Miao, Y.X.; Bareth, G. Best accuracy land use/land cover (LULC) classification to derive crop types using multitemporal, multisensor, and multi-polarization SAR satellite images. Remote Sens. 2016, 8, 15.

- Jung, H.C.; Alsdorf, D. Repeat-Pass Multi-Temporal Interferometric SAR Coherence Variations with Amazon Floodplain and Lake Habitats; Taylor & Francis, Inc.: Melbourne, Australia, 2010; pp. 881–901.

- Liao, M.; Jiang, L.; Lin, H.; Li, D. Urban change detection using coherence and intensity characteristics of multi-temporal ERS-1/2 imagery. In Proceedings of the Fringe 2005 Workshop, Frascati, Italy, 28 November–2 December 2005.

- Weydahl, D.J. Analysis of ERS SAR coherence images acquired over vegetated areas and urban features. Int. J. Remote Sens. 2001, 22, 2811–2830.

- O’Grady, D.; Leblanc, M. Radar mapping of broad-scale inundation: Challenges and opportunities in Australia. Stoch. Environ. Res. Risk Assess. 2014, 28, 29–38.

- McNairn, H.; Champagne, C.; Shang, J.; Holmstrom, D.; Reichert, G. Integration of optical and synthetic aperture radar (SAR) imagery for delivering operational annual crop inventories. ISPRS J. Photogramm. Remote Sens. 2009, 64, 434–449.

- Zhang, H.; Zhang, Y.; Lin, H. A comparison study of impervious surfaces estimation using optical and SAR remote sensing images. Int. J. Appl. Earth Obs. Geoinform. 2012, 18, 148–156.

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Skakun, S.; Kussul, N.; Shelestov, A.Y.; Lavreniuk, M.; Kussul, O. Efficiency assessment of multitemporal C-Band Radarsat-2 intensity and Landsat-8 surface reflectance satellite imagery for crop classification in Ukraine. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3712–3719.

- Wit, A.J.W.D.; Clevers, J.G.P.W. Efficiency and accuracy of per-field classification for operational crop mapping. Int. J. Remote Sens. 2004, 25, 4091–4112.

- Inglada, J.; Vincent, A.; Arias, M.; Marais-Sicre, C. Improved early crop type identification by joint use of high temporal resolution SAR and optical image time series. Remote Sens. 2016, 8, 362.

- Li, S.; Wang, Y.; Chen, P.; Xu, X.; Cheng, C.; Chen, B. Spatiotemporal fuzzy clustering strategy for urban expansion monitoring based on time series of pixel-level optical and SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1769–1779.

- Zhang, Y.; Zhang, H.; Lin, H. Improving the impervious surface estimation with combined use of optical and SAR remote sensing images. Remote Sens. Environ. 2014, 141, 155–167.

- Sprohnle, K.; Fuchs, E.M.; Pelizari, P.A. Object-based analysis and fusion of optical and SAR satellite data for dwelling detection in refugee camps. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1780–1791.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).