Abstract

Urban Air Mobility (UAM) emerges as a transformative approach to address urban congestion and pollution, offering efficient and sustainable transportation for people and goods. Central to UAM is the Operational Digital Twin (ODT), which plays a crucial role in real-time management of air traffic, enhancing safety and efficiency. This study introduces a YOLOTransfer-DT framework specifically designed for Artificial Intelligence (AI) training in simulated environments, focusing on its utility for experiential learning in realistic scenarios. The framework’s objective is to augment AI training, particularly in developing an object detection system that employs visual tasks for proactive conflict identification and mission support, leveraging deep and transfer learning techniques. The proposed methodology combines real-time detection, transfer learning, and a novel mix-up process for environmental data extraction, tested rigorously in realistic simulations. Findings validate the use of existing deep learning models for real-time object recognition in similar conditions. This research underscores the value of the ODT framework in bridging the gap between virtual and actual environments, highlighting the safety and cost-effectiveness of virtual testing. This adaptable framework facilitates extensive experimentation and training, demonstrating its potential as a foundation for advanced detection techniques in UAM.

1. Introduction

Urban Air Mobility (UAM) emerges as a transformative approach to urban transportation, integrating various aircraft into city transit networks to offer swift and efficient travel alternatives. This innovation aims to mitigate road congestion and improve accessibility to remote locations through the utilization of manned and unmanned aerial vehicles [1,2]. The UAM ecosystem features a range of aircraft, including helicopters, compact fixed-wing planes, and electric vertical take-off and landing (eVTOL) vehicles, all designed for urban use with minimal noise and vertical landing capabilities. A key application of UAM is the use of eVTOL aircraft for on-demand transport services, akin to current ride-sharing platforms, enabling users to book flights through mobile apps for quick and direct city travel. Nonetheless, establishing a reliable UAM system necessitates comprehensive research and detailed planning to ensure operational security and efficiency [3,4,5]. Ensuring flight safety within UAM involves addressing regulatory, infrastructural, and cost issues, while its introduction as a new airspace user presents substantial challenges to existing air traffic management, requiring innovative solutions for integration and to leverage its potential for urban transit improvement [6,7,8].

In UAM, Operational Digital Twins (ODTs) are digital counterparts of real-world elements that improve the efficiency and effectiveness of UAM operations. ODTs cover vehicles such as drones and flying cars, as well as the supporting infrastructure and other key parts of the UAM system. They use data from sensors and other sources to keep virtual models up to date, enabling detailed simulation and analysis of how the system behaves in different situations. This is crucial for evaluating system performance, identifying possible issues, and supporting strategic maintenance and operational planning, thus increasing the safety, effectiveness, and dependability of UAM systems. ODTs serve four important areas in urban air mobility. (i) Performance Modeling and Simulation: ODTs model and simulate UAM vehicle performance, including flight dynamics and propulsion, to help optimize vehicle design and identify risks. (ii) Fleet Management and Maintenance: ODTs monitor vehicle conditions in real time, aiding fleet management and maintenance based on accurate vehicle health data. (iii) Traffic Management and Control: ODTs improve traffic management in UAM by providing detailed data on vehicle paths, which helps coordinate movements and prevent collisions. (iv) Emergency Response and Rescue: ODTs enhance emergency responses by quickly providing information on vehicle locations and conditions, which is crucial for managing emergencies efficiently. ODTs therefore play a key role in simulating, optimizing, and managing aerial vehicles and traffic within urban airspaces. They reproduce and analyze the physical and algorithmic aspects of the system, allowing for system design testing, immediate operational adjustments, and continuous improvement in performance, technology, and coordination.

Unmanned vehicles use object detection to recognize items around them, employing various methods for spotting obstacles in aerial vehicles like UAVs and UAMs. Techniques such as ultrasonic, infrared, and lidar are common, where UAVs emit sound, infrared waves, or laser pulses and measure the reflection time to detect obstacles [9]. Camera-based systems, using either single (monocular) or multiple (stereo-vision) cameras, capture images to identify obstacles [10]. Moreover, obstacle detection utilizes image-based, sensor-based, and hybrid methods. Image-based approaches include analyzing appearance, motion, depth, and size changes, while sensor-based techniques rely on devices like LiDAR and ultrasonic sensors [11]. Recently, deep learning and edge computing have become popular for real-time obstacle detection and tracking, offering improved accuracy. Deep learning models such as YOLO, CNNs, and DSODs are especially noted for their efficacy in aerial vehicle obstacle detection [12,13]. However, the success of these models largely depends on the quality and variety of training data. Challenges in gathering effective training data include identifying small, fast-moving drones, avoiding false positives with other flying objects, and distinguishing drones from similar items in the sky. These issues underline the need for diverse and comprehensive training datasets to enhance the accuracy and reliability of deep learning-based drone detection systems. Addressing these data collection challenges and managing costs are essential for improving the performance of deep learning models like YOLO in drone detection [14,15,16,17].

In the existing literature, discussions on operational digital twin frameworks employing Artificial Intelligence (AI) for UAM are sparse. The majority of current research focuses on optimizing UAM operations, infrastructure development, and integration with established urban transport networks, aiming to deliver efficient, secure, and eco-friendly air mobility solutions. However, these studies seldom address AI-enhanced collision detection and situational awareness within the digital twin architecture comprehensively. Among the studies most pertinent to our research, Yeon (2023) [18] and Tuchen (2022) [19] explore UAM integration within Boston’s transportation system through digital twin and economic analyses, assessing UAM’s urban impact and its potential to support employment, environmental sustainability, and urban improvement initiatives. Bachechi (2022) [20] examines digital modeling for urban traffic and air quality, leveraging data and simulations to enhance urban planning, with a focus on ground mobility digital twins. Kapteyn (2021) [21] proposes a novel approach for constructing scalable digital twins capable of real-time monitoring and decision-making based on advanced mathematical modeling. This study is closely related to ours in that it provides an analytical framework for vehicle digital twin development, whereas our methodology is data-driven and utilizes computational fluid analysis. Souanef (2023) [22] details the creation of a digital twin for drone management, aiming to enhance the safety and efficiency of drone operations through a blend of real and simulated drones, focusing on small-scale drones or quadrotors within a realistic simulation framework. In contrast, our work targets air mobility within urban contexts, employing models of passenger transport aircraft. 
Our prior study, Costa (2023) [23], investigates the impact of software aging on UAM’s digital infrastructure, proposing innovative strategies for maintaining operational integrity and safety, including the application of deep learning for improved drone safety.

In this study, we introduce a novel framework named YOLOTransfer-DT, which combines deep learning and transfer learning within a digital twin setup to enhance situation awareness and obstacle detection capabilities for UAM vehicles. This approach seeks to overcome the difficulties of detecting obstacles in real time and the expensive process of gathering varied, high-quality data for drone detection and evasion. By utilizing a digital twin, YOLOTransfer-DT creates a simulated urban environment where YOLO-based models can be trained and evaluated, leading to better detection system performance and dependability. The digital twin framework emerges as a promising alternative to traditional methods of data collection and real-time obstacle detection for drones. This research makes significant contributions to the advancement of related fields in several key aspects:

- Operational Digital Twin Framework for Urban Air Mobility: This study introduces the YOLOTransfer-DT framework, a novel approach for the digitization of UAM environments. The framework adopts an ODT model to enhance the safety of UAM operations. It facilitates the modeling and simulation of both existing and prospective scenarios within UAM, employing continuously updated data streams. This enables the identification and implementation of safety measures essential for the efficient management of UAM operational systems.

- Aerial Detection and Situation Awareness Framework Utilizing Deep and Transfer Learning: Addressing the critical need for enhanced detection and situation awareness in UAM within urban settings, the research proposes the YOLOTransfer framework. This framework integrates deep and transfer learning methodologies to effectively address safety concerns. Given the data-intensive nature of deep learning, the YOLOTransfer framework employs extensive datasets to achieve high precision in situation identification. The YOLOTransfer-DT framework, in particular, leverages data derived from a digital twin to train systems in detection and situation awareness, with its performance validated through tests in both virtual and physical UAM operation scenarios.

- Comprehensive Digital-to-Physical Experiments and Validation: The research encompasses three distinct experimental scenarios to validate the system’s efficacy in physical environments: scenarios involving solely ground-level obstacles, aerial obstacles, and a combination of both. These experiments are crucial for assessing the framework’s applicability in real-world settings.

The findings of this study are outlined as follows:

- Objects in physical environments can be validated effectively using a bespoke dataset derived from digital simulations. In this study, 20% of the data, taken from the physical dataset, was allocated for validation, while the remaining 80%, taken from the virtual dataset, was used for training.

- The framework demonstrated its capability to employ simulated data for real-time object detection within specified scenarios, effectively reducing computational demands while maintaining a satisfactory level of detection accuracy.

- The concept of “digitalization of physical assets” is introduced, delineating a strategy where digital representations act as idealized proxies for physical realities. This approach is posited as a solution to mitigate the risks and costs associated with data collection in physical settings, particularly when employing autonomous vehicles, a process often characterized by high expense and inherent risks.

The scientific soundness of the paper is robustly justified through its innovative integration of Operational Digital Twins (ODTs) with advanced deep and transfer learning techniques tailored for UAM challenges. The methodology rigorously combines YOLO for precise object detection with transfer learning to adapt models for specific UAM scenarios, validated through extensive experiments in both virtual and physical settings. This novel approach not only pioneers the application of ODTs in UAM for enhanced safety and efficiency but also mitigates the risks and costs associated with data collection in physical environments by effectively leveraging simulated data. The paper’s contributions towards developing safe, efficient, and scalable UAM operations mark a significant advancement in the field, addressing critical needs as Advanced Air Mobility (AAM) initiatives continue to expand globally.

The structure of the paper is as follows: Section 2 reviews previous research, providing a background for our study. Section 3 explains the infrastructure and safety needs for UAM and introduces our digital twin framework for object detection and awareness in UAM. Section 4 shares the outcomes of testing our framework in both simulated and real-world settings. This section also explores the framework’s limitations and significant findings. The paper concludes with a summary and final thoughts in Section 5.

2. Related Works

Operational Digital Twins (ODTs) are advanced virtual models that replicate physical systems, processes, or products. They play a crucial role in real-time analysis, optimization, and prediction of system behaviors, especially in UAM. In UAM, ODTs are vital for monitoring and improving the operations of Electric Vertical Takeoff and Landing (eVTOL) aircraft and vertiports. Digital twins have significantly enhanced performance in various sectors by facilitating simulation, prediction, and informed decision-making [24,25]. A digital twin consists of the physical item, its virtual counterpart, and the connecting data [24]. NASA describes a digital twin as a comprehensive simulation model that mirrors the life of its physical twin [26]. Current research in UAM highlights ODTs’ potential in optimizing eVTOL routes, maintenance forecasting, and vertiport management, promising improved efficiency, safety, and reduced costs despite challenges like data accuracy and privacy concerns [27,28]. ODTs also offer insights into environmental impacts, energy use, and demand forecasting for UAM services, supporting AI model development with realistic sensor data simulation [29]. The aerospace industry’s growing interest in UAM for urban point-to-point transportation underscores the need for advanced technologies like digital twins to ensure safety and efficiency [19]. For example, studies in the Boston area have used digital twins to assess UAM in populated settings [19], while NASA advocates for their use in vehicle certification and safety [26]. Beyond UAM, digital twins have been applied in various innovative ways, such as creating 3D digital models of urban parks, comprehensive modeling approaches integrating physical and virtual elements, and smart city applications for energy analysis in buildings [30,31,32,33,34]. Major companies like Airbus and Boeing are advancing digital twin technology [35]. 
However, research on integrating detection and avoidance systems within digital twin frameworks for UAM, particularly those involving LiDAR and UAV communication security, remains limited and presents an area ripe for exploration [36,37,38,39,40].

The most closely related works to date are as follows. The papers [18,19] present a comprehensive case study on the integration of UAM into the Greater Boston transportation network, focusing on a digital twin for Boston and economic analysis. They explore the potential impact of UAM on cities, addressing the need for multimodal transportation planning, economic analysis, and community integration of new air vehicles. The novelty lies in offering a detailed government-focused perspective on UAM integration, providing a framework and tools for cities to plan and evaluate UAM’s economic and social impacts, including job creation, environmental considerations, and urban planning enhancements. The paper [20] discusses the development of Digital Twins (DTs) for urban mobility, focusing on the integration of Traffic and Air Quality DTs and a Graph-Based Multi-Modal Mobility DT. These DTs utilize real-time data, simulation models, and graph databases to analyze and improve urban traffic flows, air quality, and multi-modal transport interactions, demonstrating potential benefits for urban planning and management. The novelty lies in the comprehensive approach to modeling urban mobility through simulation and graph-based representations, enabling detailed analysis and scenario testing for informed decision-making in urban environments. 
The paper [21] proposes a probabilistic graphical model as a foundational mathematical framework for developing predictive digital twins at scale, offering a systematic approach to integrate dynamic data-driven applications with computational models for real-time monitoring and decision-making. The novelty lies in its formal mathematical representation of digital twins as sets of coupled dynamical systems, leveraging Bayesian statistics, control theory, and dynamical systems theory to enable scalable, application-agnostic digital twin implementations across various engineering and scientific domains. The paper [22] outlines the creation of a digital twin for the National Beyond Visual Line of Sight Experimentation Corridor to enable safe and efficient drone operations through synthetic and hybrid flight testing of Unmanned Traffic Management (UTM) concepts. The main novelty lies in its detailed design and implementation of a digital twin that integrates both simulated and real drones within a realistic airspace environment, offering a platform for comprehensive testing and validation of UTM services, thereby advancing the development of safer and more efficient drone operations in future airspace systems. Our study presents a novel approach to enhancing UAM safety and efficiency through the development of an ODT framework. This framework leverages deep learning and transfer learning for real-time object detection and situation awareness. Table 1 provides a more detailed comparison of the most recent works, covering their different perspectives on UAM and digital twins.

Table 1.

Comparison with related papers.

Overall, this research introduces a digital twin framework designed for UAM simulations, which utilizes actual data from flight controllers and sensors. It merges AirSim, which provides flight dynamics and AI functionalities, with Unity for creating static environments. We incorporate a new Detection and Avoidance (DAA) system within the digital twin for UAM, using the YOLOv3 and Deep SORT algorithms for spotting obstacles. This system is trained using digital assist data, offering a solution to the expensive and logistically complex process of gathering real-world assist data through numerous flight trials. Our validation, using real assist data from drone cameras, shows that our digital twin DAA system achieves high accuracy and efficiency, reducing the necessity for extensive physical flight tests.

3. YOLOTransfer-DT Framework

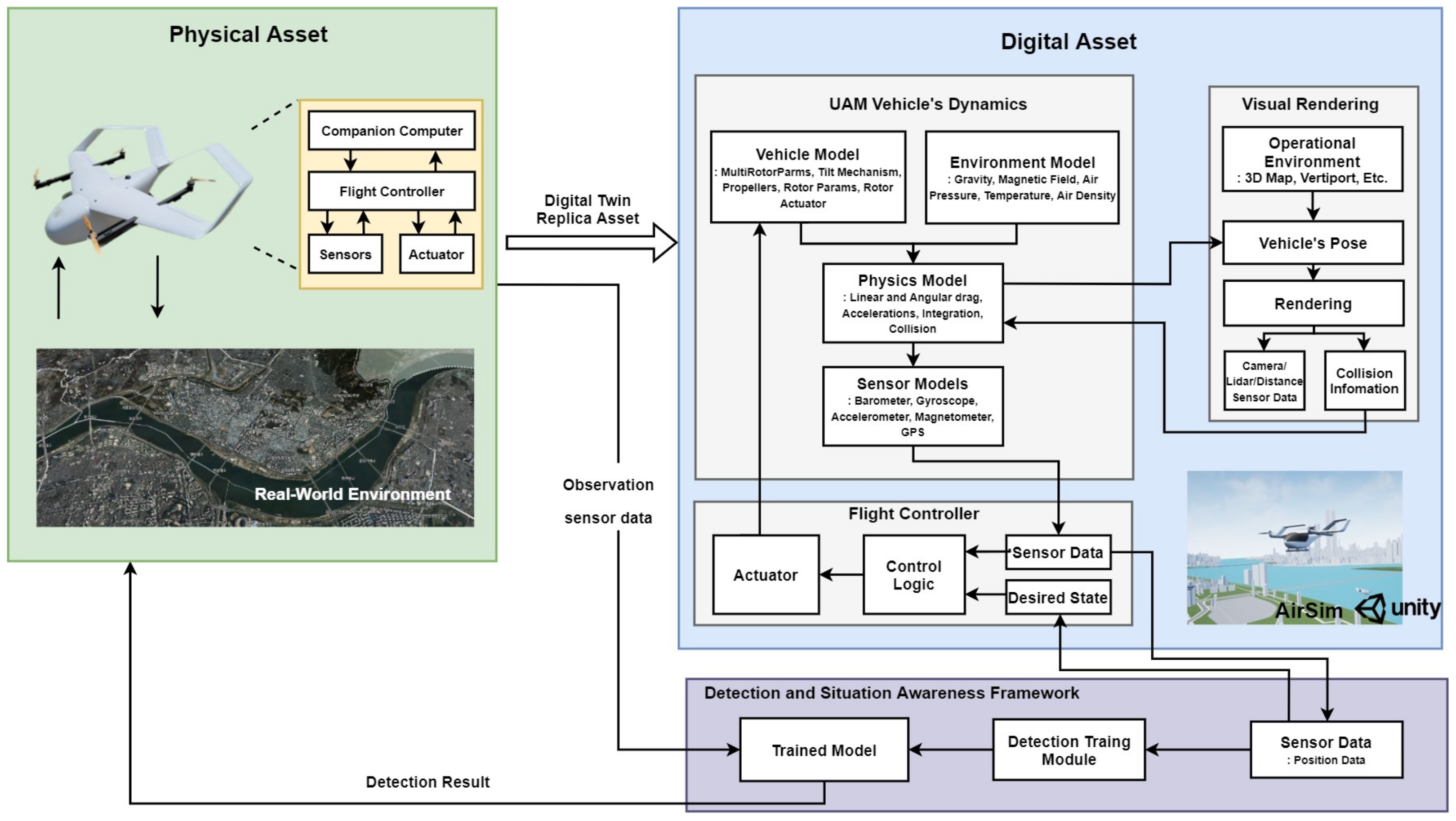

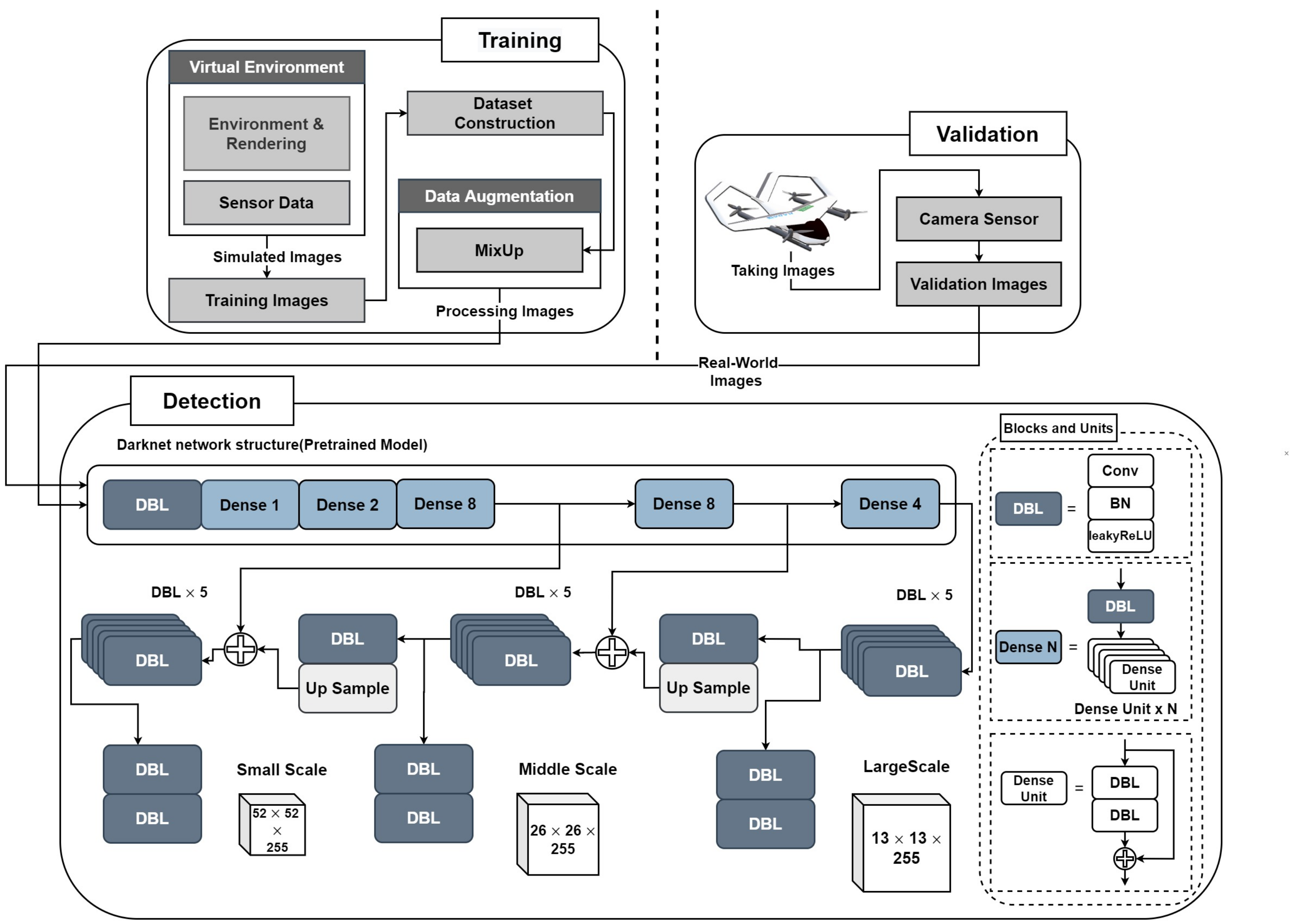

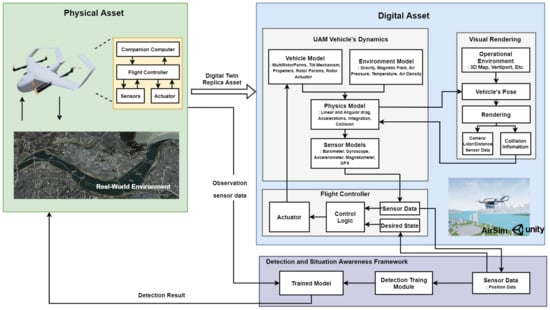

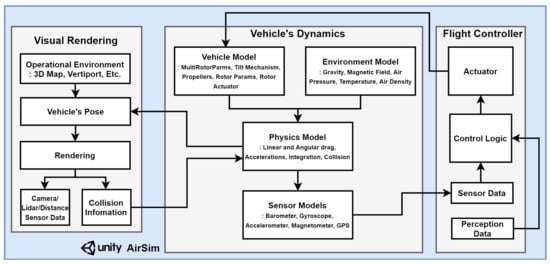

The proposed framework for detection and situation awareness in UAM operations, which uses digital twin technology, is shown in Figure 1. The figure illustrates how the physical and digital worlds interact within our proposed system. Our approach comprises four main steps: converting the physical environment into a digital form, creating a digital twin for experiments, training artificial intelligence models, and evaluating the results rigorously.

Figure 1.

Overall architecture of proposed detection and situation awareness digital twin framework.

For this study, we used the Unity game engine and the AirSim simulation platform together to train AI agents on how to interact in a digital setting. This combination is key for creating 3D models that look like real-world objects, such as people, roads, bodies of water, and vehicles, making a detailed digital world that reflects our real one. AirSim is notable for its realistic simulation of vehicle movements, flight controls, and sensor data, helping to produce true-to-life sensor information in a digital space. This makes it possible to develop advanced AI models by using simulated data, avoiding the high costs usually linked with learning from real-life experiments. An important feature of our framework is its ability to mimic and record how it understands situations within the digital environment. This capability is crucial for checking the precision of sensor and camera data and for testing whether the AI can correctly identify and react to dangers based on this data alone. By comparing the digital situation awareness with real-world sensor data, we can confirm the accuracy and reliability of the AI models and systems we are developing for actual UAM operations.

Utilizing the proposed framework facilitates the integration of new features and functionalities, thereby enhancing scalability in response to evolving requirements and enabling customization to address distinct needs. This framework offers versatile modeling alternatives across various domains. Moreover, the employment of simulation proves to be efficacious within urban settings and engineering contexts, ensuring system reliability and optimizing their placement for practical utility.

3.1. Digitalization Framework for Urban Air Mobility

3.1.1. Overall Process

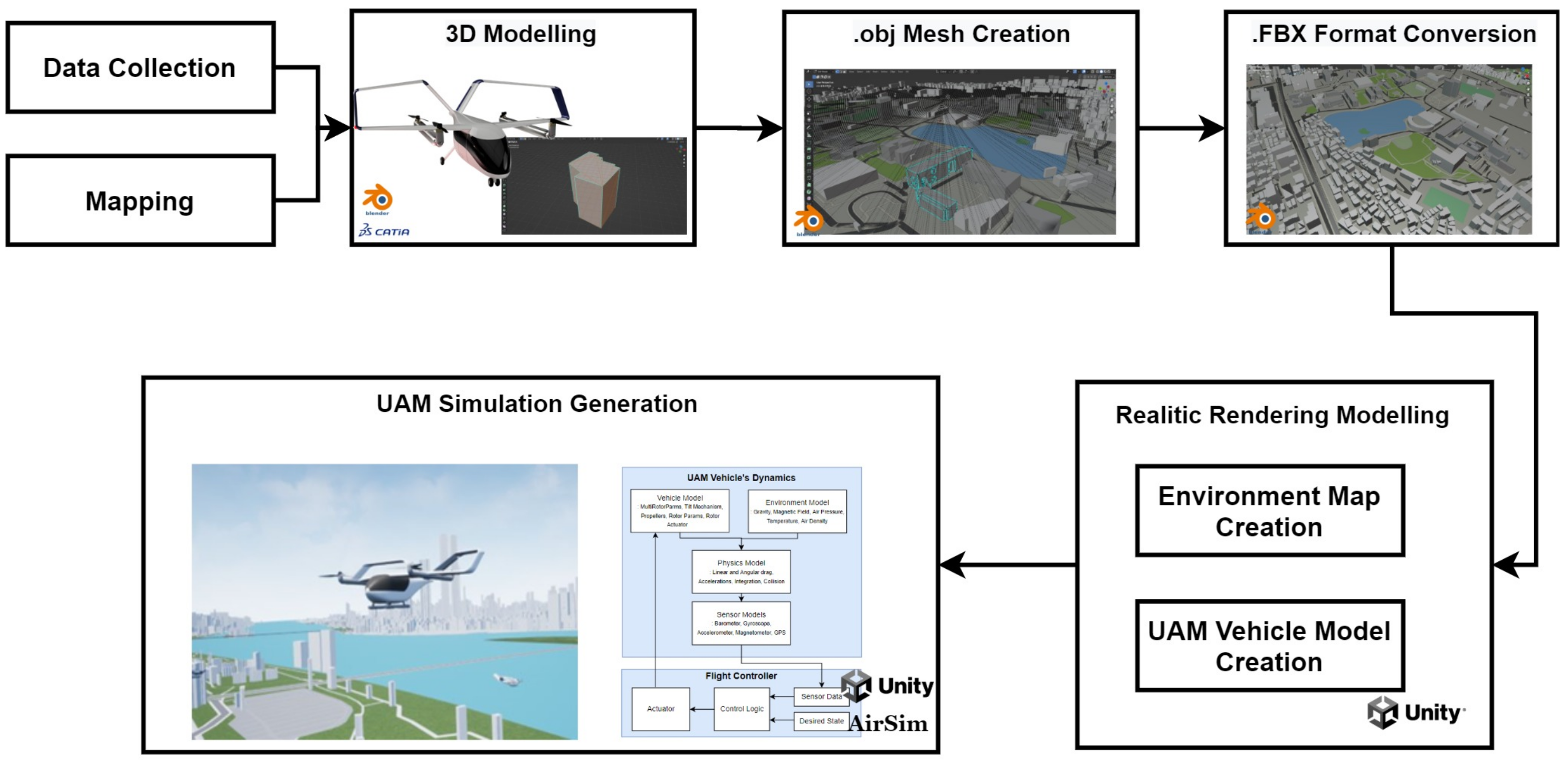

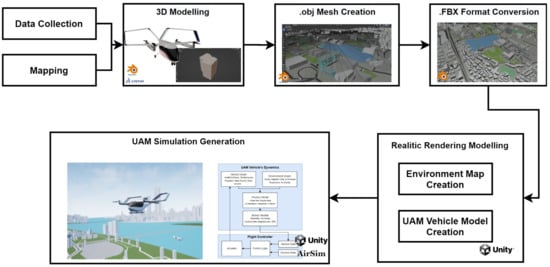

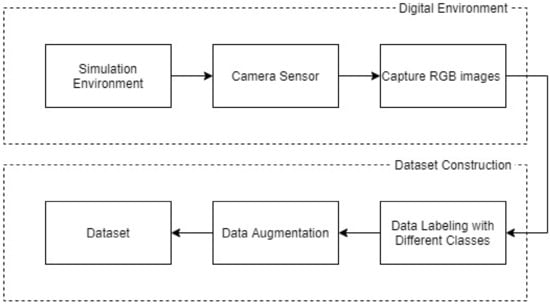

In this paper, we discuss the “digitalization of physical assets”, highlighting how digital versions serve as refined representations of the real world. In the UAM sector, this involves creating a digital system that contains detailed data and models of relevant physical aspects and their environment at a chosen level of detail. This digital model is crucial to our framework. Creating a digital version for UAM vehicles and their operating environments involves two main steps: modeling the vehicle and its surroundings, and building a simulation platform. We start by collecting real-world data to create a digital model using technologies like S-Map [51] and drone mapping. Then, we develop the UAM simulation with tools like the Unity Engine and AirSim simulator [52,53]. This allows us to simulate sensor data and create interfaces for controlling the UAM in a virtual environment that mimics reality. Figure 2 shows the detailed process of making this digital model.

Figure 2.

Digitalization model of the UAM and operating environment: overall process.

3.1.2. Simulation Development

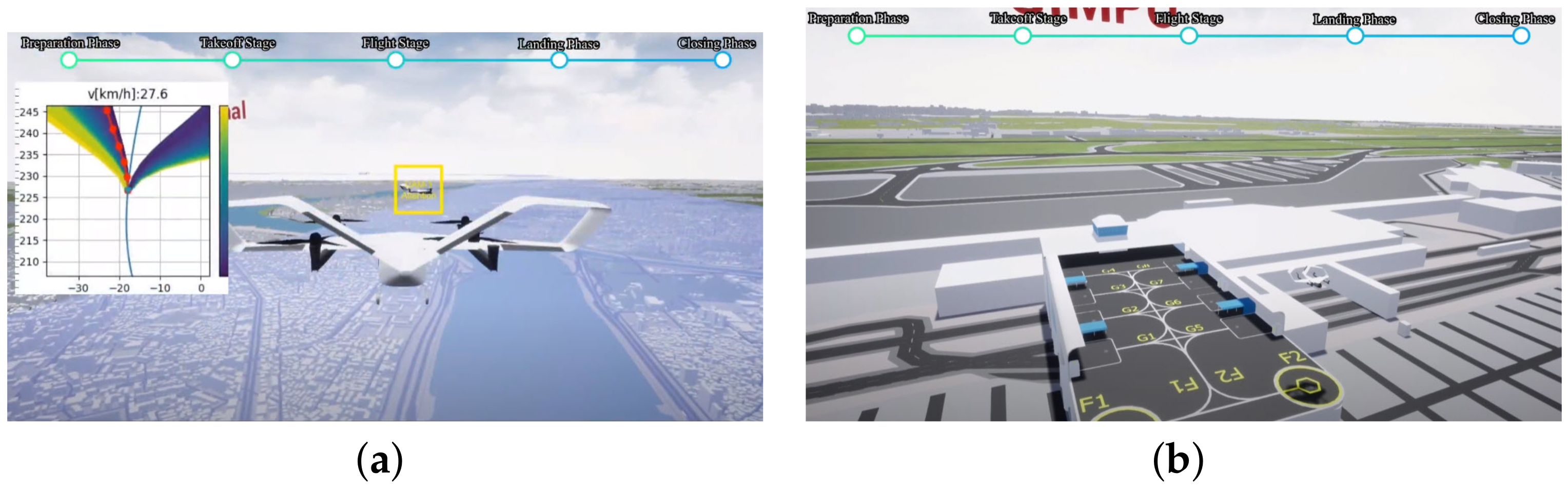

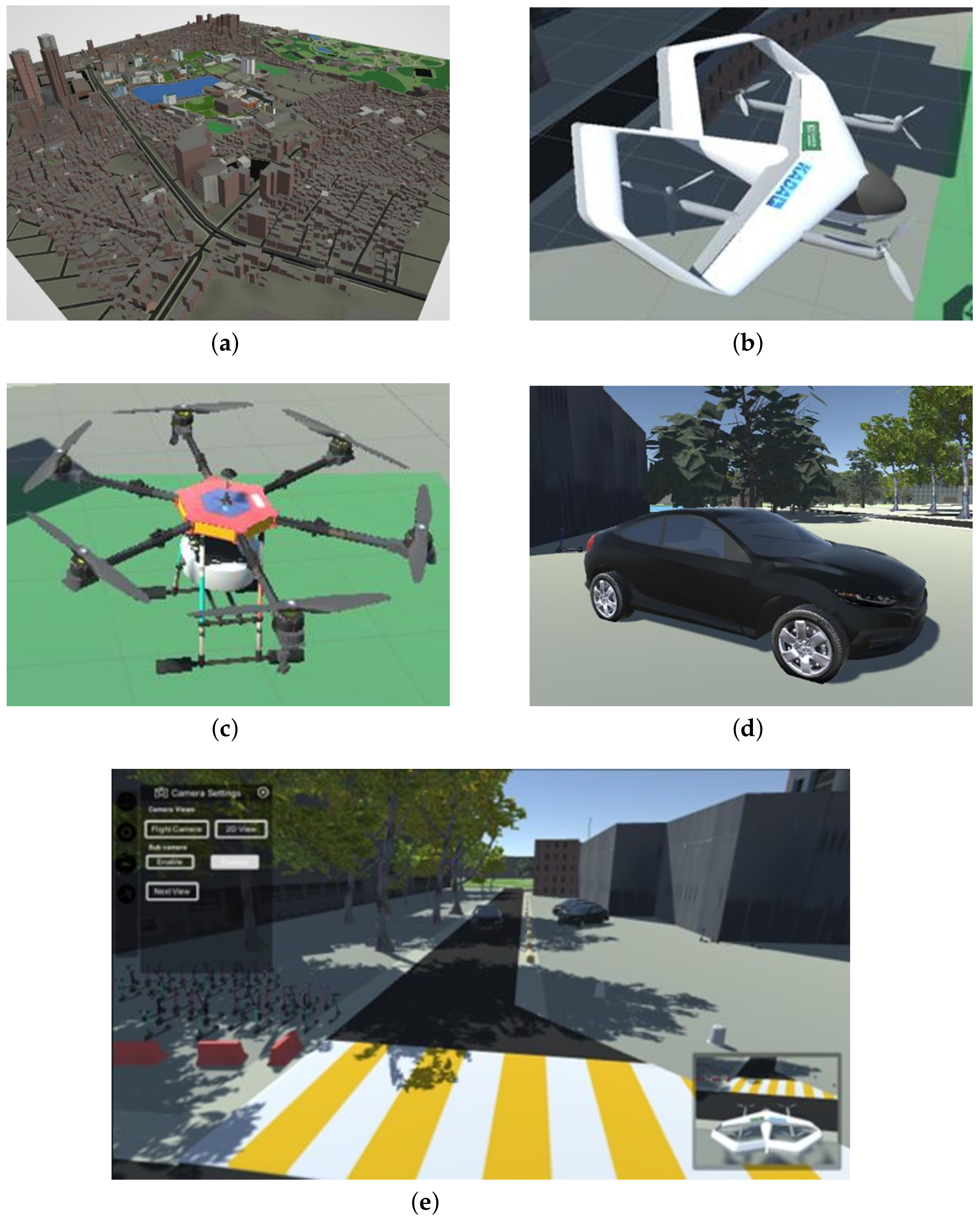

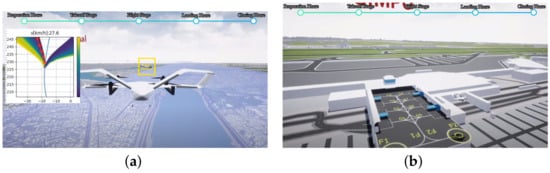

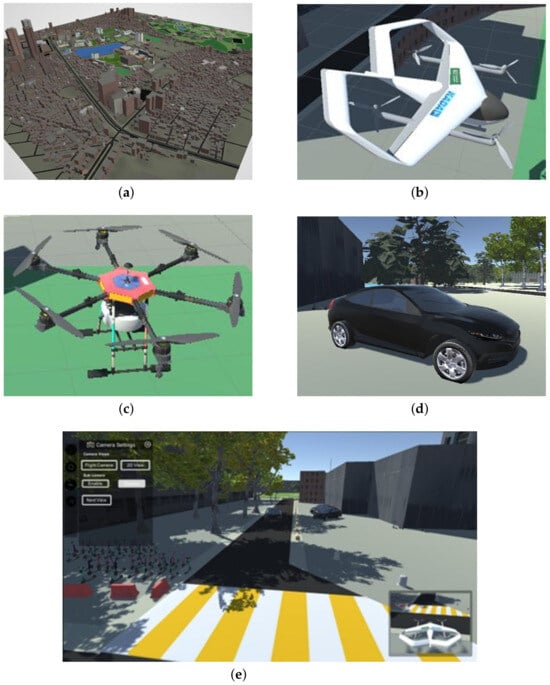

The UAM operation shown in Figure 3 uses technologies such as AI for system operation, high-reliability simulation, and specific UAM operation technology to ensure the safety of UAM. These developments support effective and safe aerial transportation by establishing a safe foundation for the integration of UAM into our transportation environment. In our experimental configuration, the Unity Engine [53] was used in combination with the AirSim plugin to establish a simulated environment containing a variety of static and dynamic obstructions. AirSim [52], a cross-platform, open-source simulator used here through its Unity integration, enables the development of highly realistic three-dimensional environments. The resulting environment consists of a three-dimensional map populated with an assortment of objects. Unity Technologies offers an array of pre-constructed scenes that depict a wide range of environments. In addition, AirSim supports software-in-the-loop (SITL) simulations, which are compatible with flight controllers commonly employed in autonomous aerial vehicles.

Figure 3.

Visualization of UAM operation. (a) UAM attempting to follow a predefined trajectory while verifying obstacle detection, (b) UAM operation for the development of automatic takeoff and landing at vertiport.

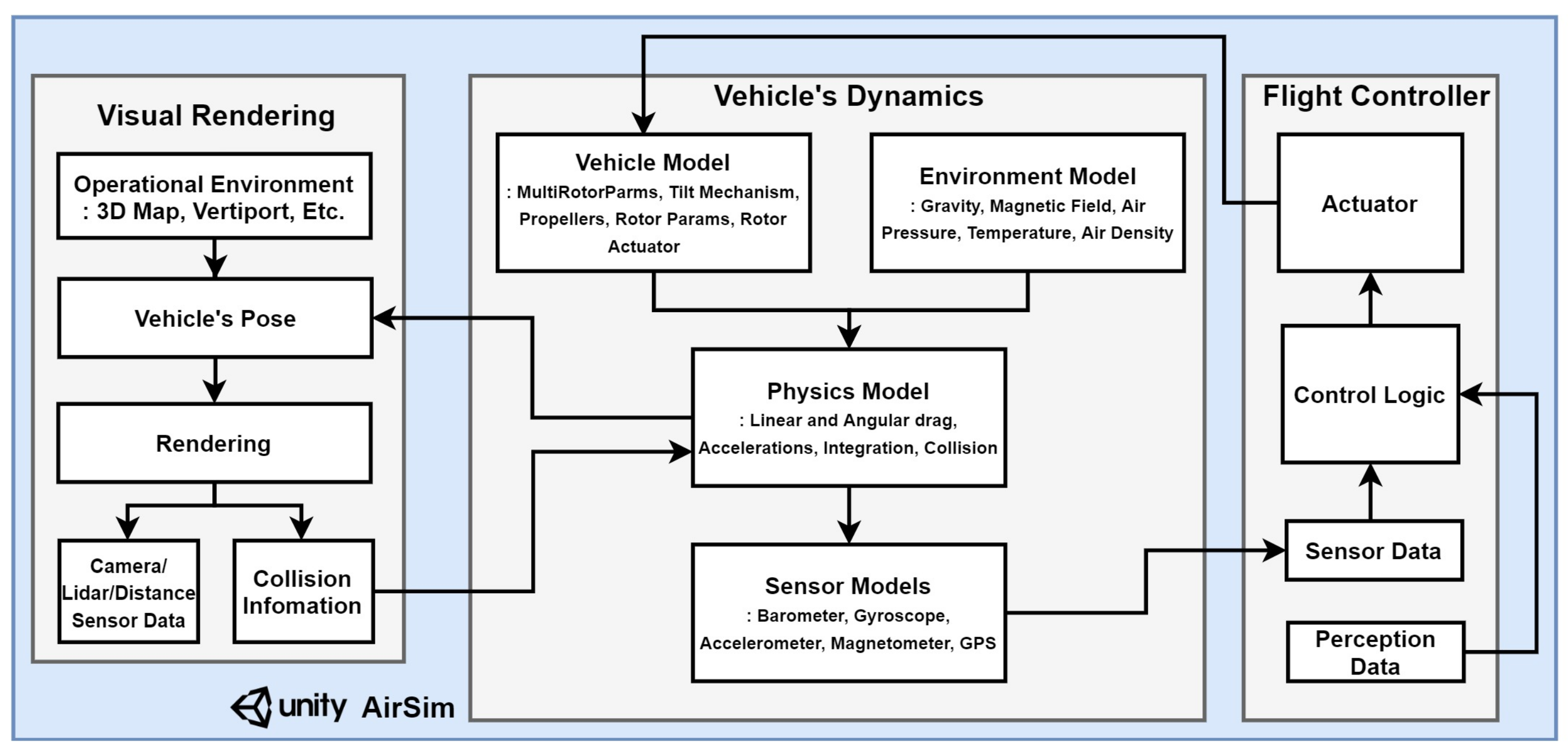

In this phase, we present a virtual simulation of Konkuk University based on the AirSim-Unity simulator. The simulator offers a configurable environment equipped with static and dynamic obstacles, which can be customized in terms of texture, color, and size, thereby ensuring a realistic testbed for the detection algorithm. AirSim is a plugin for the Unity Engine, providing high-fidelity simulation dynamics for UAVs, along with a suite of sensors such as radar and cameras for the simulated environment. Figure 4 displays the designed virtual environment using the Unity Engine and AirSim plugin. AirSim delivers a physics engine, vehicle model, environmental model, and sensor model, all crucial for testing AI models. It enables real-time operation of multiple vehicles in a rich simulated environment, as depicted in Figure 5.

Figure 4.

Overall system of interaction between environment and AirSim simulator.

Figure 5.

Custom environment generation in AirSim-Unity for UAM simulator: (a) map, (b) aircraft, (c) aerial obstacle, (d) ground obstacles, (e) overall environment.

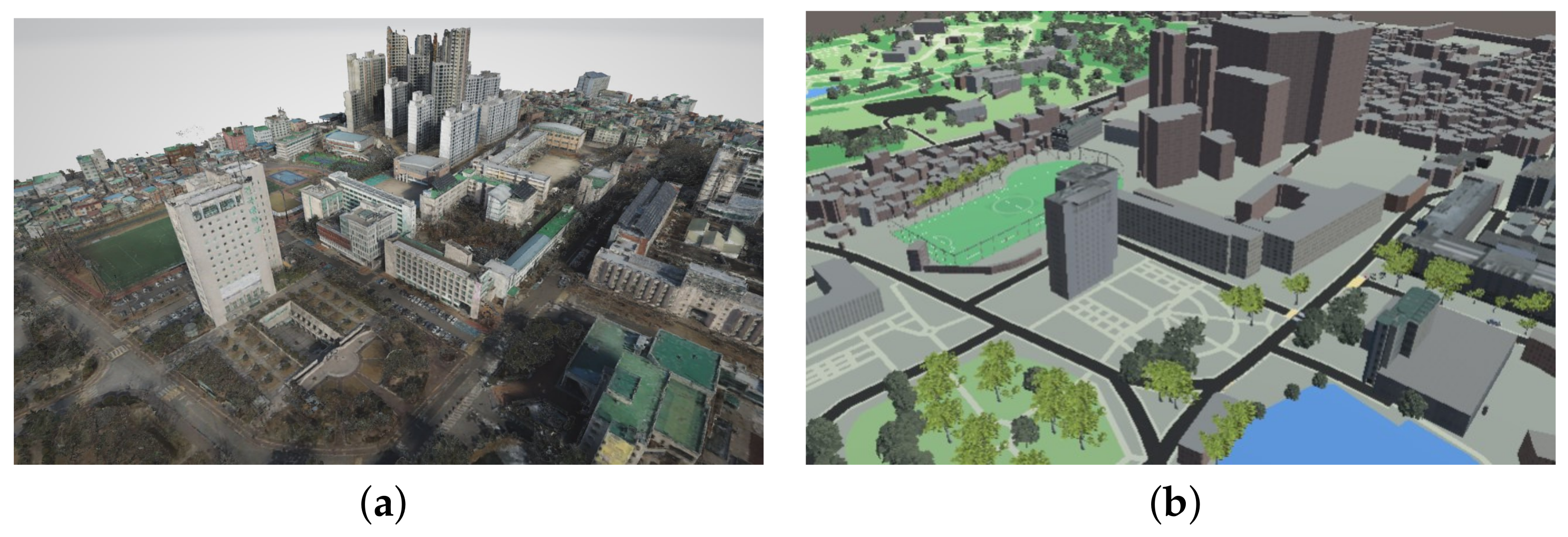

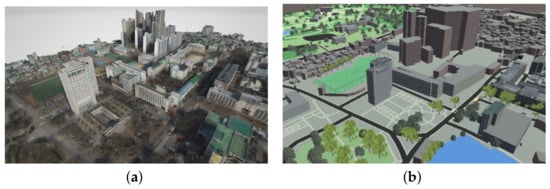

In the environment modeling process, the AirSim environment model was combined with a customized adaptation of the Konkuk University map. The Konkuk University map, obtained through drone mapping techniques for enhanced fidelity, was incorporated into the dataset. However, for the specific research goals, integration with the AirSim environment model, based on Google Maps data, was chosen to construct a custom environment meeting fidelity requirements, as in Figure 6. This approach facilitated the creation of realistic graphics and the inclusion of static and dynamic obstacles, closely resembling real-world scenarios. This careful approach makes our experiments more realistic, because it ensures that the simulation accurately mimics the real-world situations relevant to UAM operations.

The simulation environment, integrating AirSim with Unity, emphasizes the incorporation of diverse sensors, especially cameras, to collect RGB imagery for object recognition. These images are then used as inputs for deep learning algorithms, which are trained and tested on this image-based data. The inclusion of these sensors and the application of deep learning techniques are intended to increase the realism and applicability of the simulation environment for UAM operations. Additionally, we intend to use AirSim’s built-in weather system and to integrate external weather simulation in order to incorporate weather effects into the simulation environment. The goal is to replicate realistic weather conditions and test detection capabilities by dynamically adjusting the weather based on real-world data or predefined scenarios. We are also aware of the need to replicate traffic dynamics in an urban setting, including vehicle movement and other activities. While the focus so far has been on static and dynamic obstacles, accurately simulating urban environments requires incorporating realistic traffic patterns. 
Occlusion effects have also been created to simulate scenarios where objects may be partially obscured from view, allowing for testing of the framework’s object identification capabilities. Careful consideration has been given to addressing variability across various dimensions in the real world within the simulation.
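As an illustration of how RGB frames might be pulled from the simulated camera for training, the following is a minimal sketch using the AirSim Python client. The helper names `decode_scene_response` and `capture_rgb_frame` are hypothetical, and the sketch assumes the simulator returns uncompressed scene images with three channels in BGR order; the channel layout should be verified against the AirSim build in use.

```python
import numpy as np


def decode_scene_response(image_bytes, height, width):
    """Turn a flat uncompressed uint8 buffer into an (H, W, 3) RGB array.

    Assumption: 3 channels per pixel in BGR order (check your AirSim build).
    """
    flat = np.frombuffer(image_bytes, dtype=np.uint8)
    if flat.size != height * width * 3:
        raise ValueError("buffer size does not match height * width * 3")
    bgr = flat.reshape(height, width, 3)
    # Reverse the channel axis to convert BGR to RGB.
    return bgr[:, :, ::-1].copy()


def capture_rgb_frame(client, camera_name="0"):
    """Request one uncompressed scene image from a connected AirSim client."""
    # Imported lazily so the decoder can be used without the simulator installed.
    import airsim

    request = airsim.ImageRequest(
        camera_name, airsim.ImageType.Scene, False, False  # not float, not compressed
    )
    response = client.simGetImages([request])[0]
    return decode_scene_response(
        response.image_data_uint8, response.height, response.width
    )
```

With a running simulator, a frame could be obtained with `client = airsim.MultirotorClient()`, `client.confirmConnection()`, and `frame = capture_rgb_frame(client)`; the camera name `"0"` is an assumption that depends on the vehicle settings.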

Figure 6.

Konkuk University environment: (a) Konkuk University mapping, (b) Konkuk University.

In the 3D modeling simulation, digital replicas of real-world objects, including the UAM vehicle, are created and added to a data structure that replicates the real-world environment. The dynamics module serves as a physics engine that reproduces the motion state of the digital model, and a MAVLink router acts as the bridge between the simulation and the physical model. To construct a system with the same mechanism as the real vehicle, one that estimates its state from sensor values and generates actuator signals through the flight control process, we built a digital system that operates as a single aircraft system by integrating the flight dynamics simulation with the flight control firmware through the SITL interface.

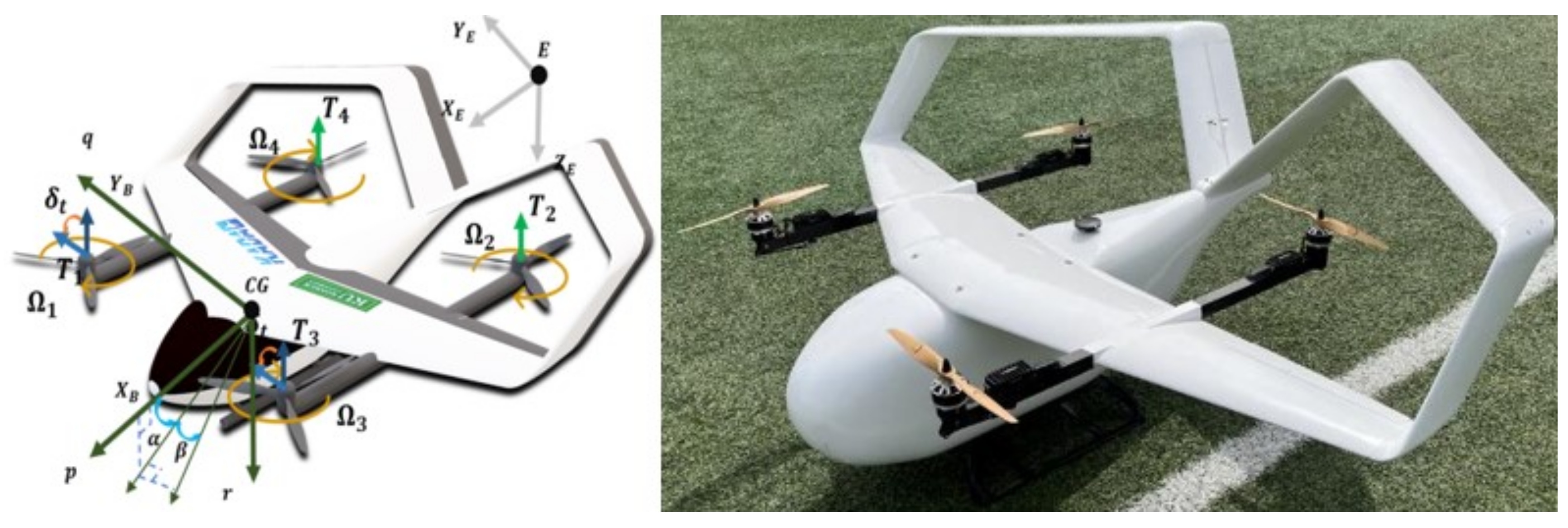

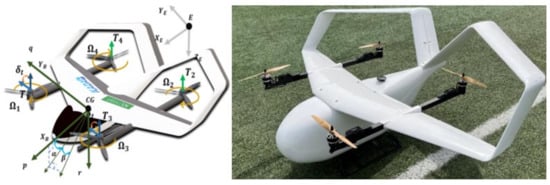

The aircraft is the KP-2C UAM scale model (Figure 7), which was designed and manufactured in collaboration with the carbon technology at the Konkuk University Aerospace Design Airworthiness Institute (KADA). The KP-2C has a box-wing configuration and is equipped with four propellers, two ailerons, and two ruddervators. For vertical takeoff and landing, it generates vertical thrust with all four propellers and flies like a rotary-wing aircraft. In cruise flight, it tilts the front two propellers to horizontal and flies like a fixed-wing aircraft using only the front propellers, without the rear two. During transition flight, it uses all four propellers and the control surfaces to adjust attitude and speed while the front propellers change their tilt.

Figure 7.

KP-2C.

The physical forces acting on an aircraft include aerodynamic forces, thrust, and gravity. Aerodynamic forces, generated by the wings, act on the center of pressure, which is the origin of the wind coordinate system. Thrust acts at the center of each propeller, while gravity acts on the center of mass. In the case of transition flight, the tilt angle of the propeller changes, significantly affecting the longitudinal dynamics.
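To make the effect of the tilt angle on the longitudinal force balance concrete, the following is a minimal sketch, not the paper's dynamics model. It assumes a level attitude and a horizontal flight path (so lift acts upward and drag acts backward), two tilting front propellers and two fixed vertical rear propellers, and a tilt convention in which 0 degrees is hover and 90 degrees is cruise. All function and parameter names are hypothetical.

```python
import math


def tilted_thrust_components(thrust_n, tilt_deg):
    """Resolve one propeller's thrust into (forward, upward) components.

    Assumed convention: tilt_deg = 0 points thrust straight up (hover),
    tilt_deg = 90 points thrust straight forward (cruise).
    """
    tilt = math.radians(tilt_deg)
    return thrust_n * math.sin(tilt), thrust_n * math.cos(tilt)


def net_longitudinal_force(front_thrust_n, rear_thrust_n, tilt_deg,
                           lift_n, drag_n, mass_kg, g=9.81):
    """Sum forward (fx) and upward (fz) forces for level-attitude flight.

    front_thrust_n and rear_thrust_n are per-propeller thrusts in newtons;
    two front (tilting) and two rear (vertical) propellers are assumed.
    """
    forward, upward = tilted_thrust_components(front_thrust_n, tilt_deg)
    fx = 2.0 * forward - drag_n
    fz = 2.0 * upward + 2.0 * rear_thrust_n + lift_n - mass_kg * g
    return fx, fz
```

In this simplified picture, sweeping `tilt_deg` from 0 to 90 moves the front-propeller thrust from the vertical balance into the forward balance, which is why the wings must take over the weight during transition.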

For aerodynamic modeling, an element-based approach was taken to model the wings and tail control surfaces, which influence the aerodynamic coefficients. Taking the condition where the tail control surface angle is 0 degrees as a reference, the change in aerodynamic coefficients with varying angles of attack on the wings was modeled as static stability derivatives. The change in these derivatives with varying tail control surface angles at those angles of attack was then modeled as control stability derivatives. Changes in derivatives due to the rate of change of angular velocity were ignored because of their minimal impact on aircraft attitude changes. The modeled equations for lift, drag, and pitching moment are given below, alongside Table 2.

Table 2.

KP-2C configuration.

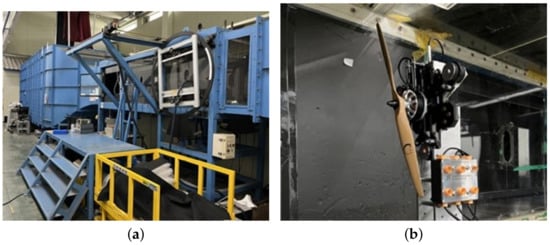

For thrust, wind tunnel tests on the propellers of the target aircraft were conducted to model its maximum performance, taking into account performance limits. During the trim analysis process, thrust is derived as the required thrust, necessitating only the information on maximum performance to consider physical limits. The wind tunnel tests were carried out in Konkuk University’s subsonic wind tunnel, using the same electric propulsion system as the actual unmanned aerial vehicle manufactured. The tests varied the propeller inflow velocity to account for performance changes with different speeds of incoming air to the propellers vertically. Below are the photographs (Figure 8) of the wind tunnel test equipment and the results.

Figure 8.

Experimental wind tunnel test: (a) Konkuk subsonic wind tunnel test facilities, (b) KP-2C’s motor thrust test.

The 6-degree-of-freedom (6-DOF) flight model was developed in Konkuk Flight Dynamics Simulation (KFDS), which employs a Look-Up-Table (LUT) method and integrates an enhancement analysis module for JSBSim, an open-source flight dynamics simulation software. Using the aircraft database, the flight state along each axis is determined by integrating the equations of motion. This methodology extends to modeling the electric propulsion system of eVTOLs (batteries, ESCs, motors, and propellers), so that the simulated actuator model can reflect that of the real vehicle. Vertical takeoff and landing are made possible by the thrust of the four vertically oriented propellers. The aircraft functions like a rotorcraft during takeoff and landing, and switches to fixed-wing flight during cruise by using only the front two propellers for forward motion. With both the front and rear propellers contributing to control throughout this transition, the tilt of the front propellers can be adjusted for the best possible flight performance.
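As an illustration of the look-up-table idea, the following minimal sketch interpolates a lift coefficient from a static table over angle of attack (control surface at 0 degrees) and adds a control-derivative increment for a deflected tail surface. The function names and all table values are hypothetical placeholders, not KP-2C data, and the actual KFDS/JSBSim implementation may be organized differently.

```python
from bisect import bisect_right


def interp1(xs, ys, x):
    """Piecewise-linear table look-up, clamped to the ends of the table."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


# Hypothetical tables for illustration only (NOT measured KP-2C values).
ALPHA_DEG = [-4.0, 0.0, 4.0, 8.0, 12.0]          # angle of attack breakpoints
CL_STATIC = [-0.10, 0.30, 0.70, 1.05, 1.25]      # C_L with control surface at 0 deg
CL_DELTA = [0.010, 0.012, 0.012, 0.011, 0.009]   # dC_L/d(delta) per degree


def lift_coefficient(alpha_deg, delta_deg):
    """Static term at alpha plus the control increment at the same alpha."""
    static = interp1(ALPHA_DEG, CL_STATIC, alpha_deg)
    control = interp1(ALPHA_DEG, CL_DELTA, alpha_deg) * delta_deg
    return static + control


if __name__ == "__main__":
    print(lift_coefficient(2.0, 5.0))
```

The same pattern would apply to drag and pitching moment, each with its own static and control tables.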

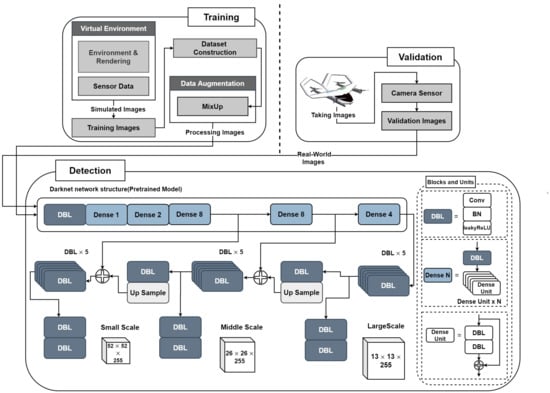

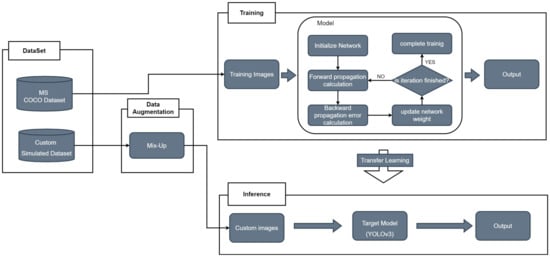

3.2. Aerial Detection and Situation Awareness Framework

In our framework for autonomous vehicle operation, we integrate the YOLOv3 algorithm with transfer learning to enhance its performance. The crucial part of this framework is the perception phase, where the vehicle uses visual sensors, such as cameras, to collect data about its surroundings. This phase allows the vehicle to analyze and interpret its environment and thus recognize potential obstacles or dangers. Deep learning models, which are highly effective for computer vision tasks such as object detection and tracking, are key at this stage. With these models, the vehicle can detect and track objects, enabling it to make decisions and avoid hazards, which is crucial for safe and efficient operation. The concept of situational awareness in this framework is illustrated in Figure 9. Our method creates training images in the AirSim-Unity simulation environment, a virtual replica of the real world, and then gathers validation images using the vehicle’s onboard camera in the real world. Digital twin methods are applied to map object detection results from the virtual environment to the real-world scenario. Using a simulator to generate training images with a camera sensor facilitates the creation of custom datasets for training YOLOv3. This shows how deep learning can be applied to obstacle detection by learning real-world tasks within a virtual environment.

Figure 9.

Proposed framework for situation awareness.

Our framework uses the YOLOv3 algorithm [39], a deep learning model for quick object detection. YOLOv3 excels at finding objects and drawing boxes around them, then figuring out what they are. It achieves this using one neural network, making it fast and efficient for spotting many items at once. Including YOLOv3 improves our system’s ability to see and identify objects quickly, which is essential for autonomous vehicles to operate safely.

The operational tasks of the YOLOv3 algorithm are outlined as follows:

- The algorithm processes image pixels as input, employing RGB color space to derive bounding boxes that are compatible with real-time detection.

- YOLOv3 applies logistic regression to calculate the ‘objectness’ score for each bounding box; the target score is 1 if the box overlaps a ground-truth object more than any other candidate box does.

- Predictions are disregarded if a bounding box, despite not being the best fit, overlaps with a ground-truth object beyond a predetermined threshold.

- The final output bounding boxes are determined through the application of a Non-Maximum Suppression (NMS) method.

- The output of the YOLOv3 algorithm comprises these bounding boxes, along with the class probabilities associated with them.
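The Non-Maximum Suppression step in the list above can be sketched as follows. This is a minimal, class-agnostic greedy version under our own assumptions: boxes are given as corner coordinates `(x1, y1, x2, y2)`, and the 0.5 IoU threshold is a placeholder rather than the value used in this study. Practical detectors usually apply the same procedure per class.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # indices of the boxes that survive
```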

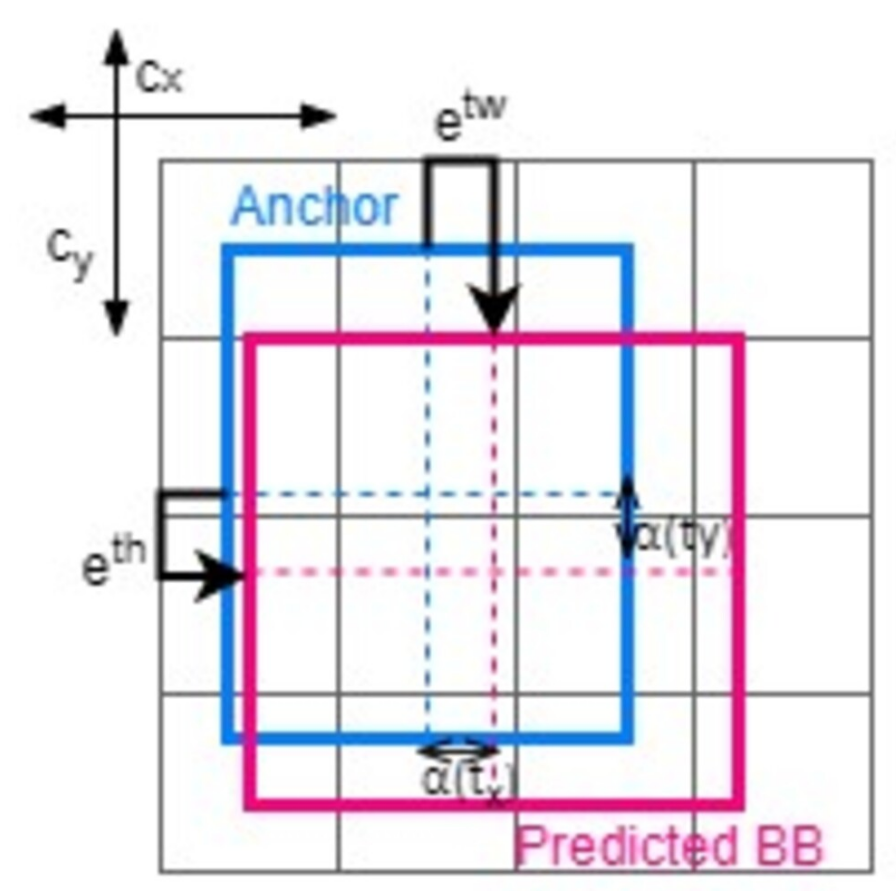

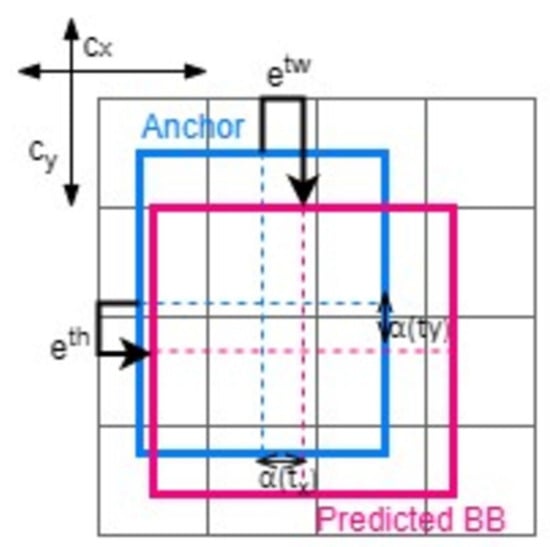

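YOLOv3 predicts offsets rather than absolute coordinates. The box center is obtained through a sigmoid function, and a log-space transform is used to recover the real width and height of the bounding box from the anchor dimensions:

$$b_x = \sigma(t_x) + c_x, \qquad b_y = \sigma(t_y) + c_y, \qquad b_w = p_w e^{t_w}, \qquad b_h = p_h e^{t_h}$$

where

- $b_x$, $b_y$, $b_w$, and $b_h$ are the center coordinates, width, and height of the predicted BB (bounding box).

- $t_x$, $t_y$, $t_w$, and $t_h$ are the predicted outputs of the neural network.

- $c_x$ and $c_y$ are the coordinates of the top-left corner of the grid cell containing the anchor box.

- $p_w$ and $p_h$ are the anchor’s width and height.

So, to predict the center coordinates of the bounding box ($b_x$, $b_y$), the YOLO model passes the outputs ($t_x$, $t_y$) through the sigmoid function $\sigma$. The center coordinates, as well as the width and height of the bounding boxes (Figure 10), are obtained using the preceding formulae. Then, all the redundant bounding boxes are suppressed using NMS.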

Figure 10.

Anchor boxes.

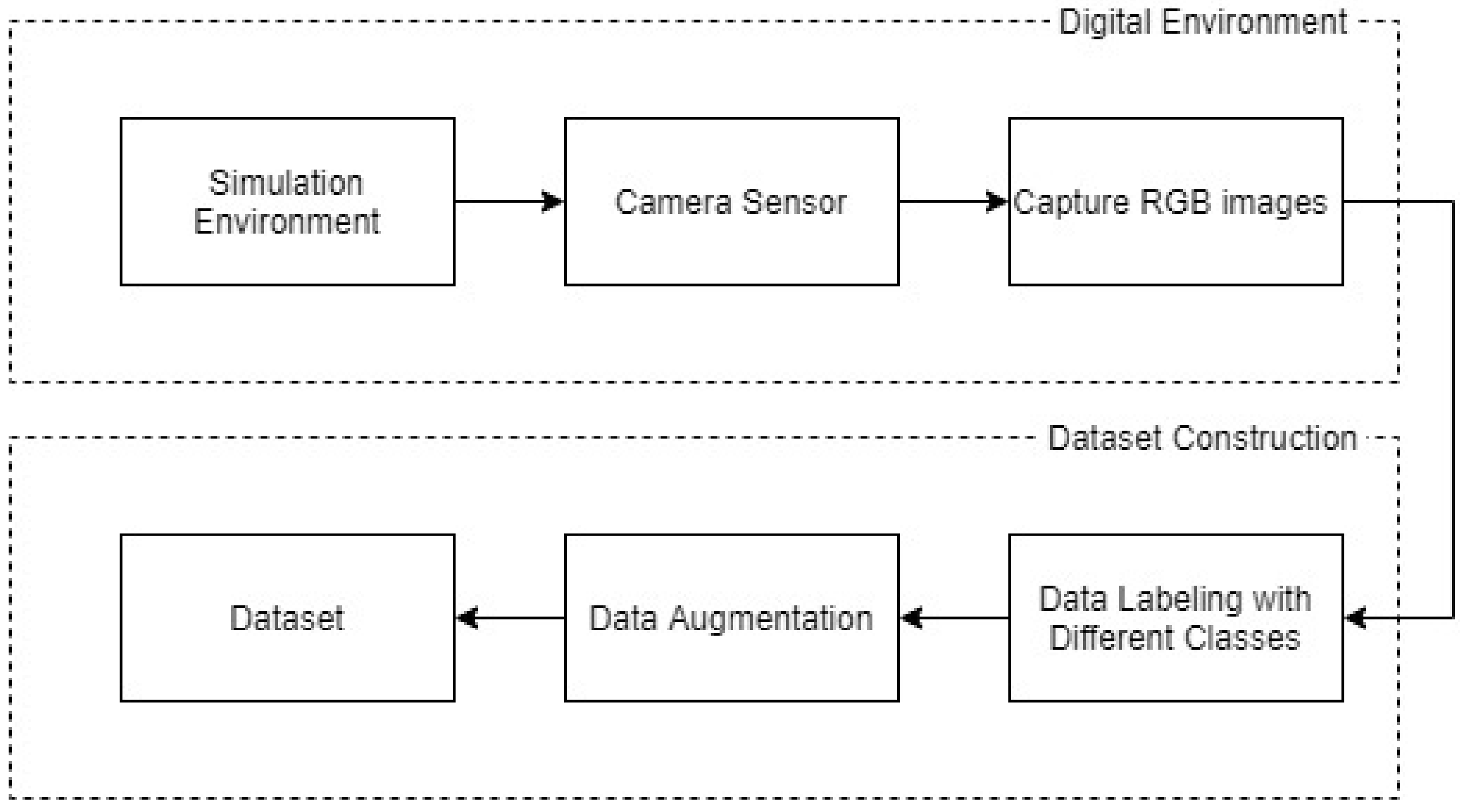
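The bounding-box decoding described above can be written out as a short sketch. It assumes the standard YOLOv3 parameterization: raw network outputs `t_x, t_y, t_w, t_h`, a grid-cell offset `c_x, c_y`, and anchor dimensions `p_w, p_h`, all expressed in grid-cell units. The function name is ours and not part of any library.

```python
import math


def sigmoid(x):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t_x, t_y, t_w, t_h, c_x, c_y, p_w, p_h):
    """Turn raw network outputs into a box center, width, and height."""
    b_x = sigmoid(t_x) + c_x      # center stays inside the responsible cell
    b_y = sigmoid(t_y) + c_y
    b_w = p_w * math.exp(t_w)     # log-space scaling of the anchor width
    b_h = p_h * math.exp(t_h)     # log-space scaling of the anchor height
    return b_x, b_y, b_w, b_h


if __name__ == "__main__":
    # Zero outputs place the center mid-cell and reproduce the anchor size.
    print(decode_box(0.0, 0.0, 0.0, 0.0, c_x=3, c_y=4, p_w=2.0, p_h=1.5))
```

Because the sigmoid bounds the center offset to the interval (0, 1), each prediction remains attached to the grid cell that produced it.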

3.2.1. Dataset Construction

In the field of computer vision, datasets constitute an indispensable resource for the operational efficacy of algorithms. These datasets, comprising a collection of data, are bifurcated into two subsets: training data and test data. The datasets for object classification and detection employed in our proposed framework are instrumental in facilitating the algorithm’s comprehension of the scene. Predominantly, the training data, which typically comprise approximately 80% of the total dataset, are utilized by the algorithm for learning and understanding scene dynamics. The remaining 20%, designated as the test data, serve a critical role in evaluating the algorithm’s performance.
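A reproducible 80/20 split of this kind can be sketched in a few lines. The helper name, the fixed seed, and the file names are illustrative assumptions, not the tooling used in this study.

```python
import random


def train_test_split(items, train_fraction=0.8, seed=0):
    """Shuffle a copy of the items and cut it into training and test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    images = [f"img_{i:04d}.png" for i in range(100)]  # hypothetical file names
    train, test = train_test_split(images)
    print(len(train), len(test))
```

Shuffling before the cut avoids placing consecutive simulation frames, which tend to look alike, entirely in one subset.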

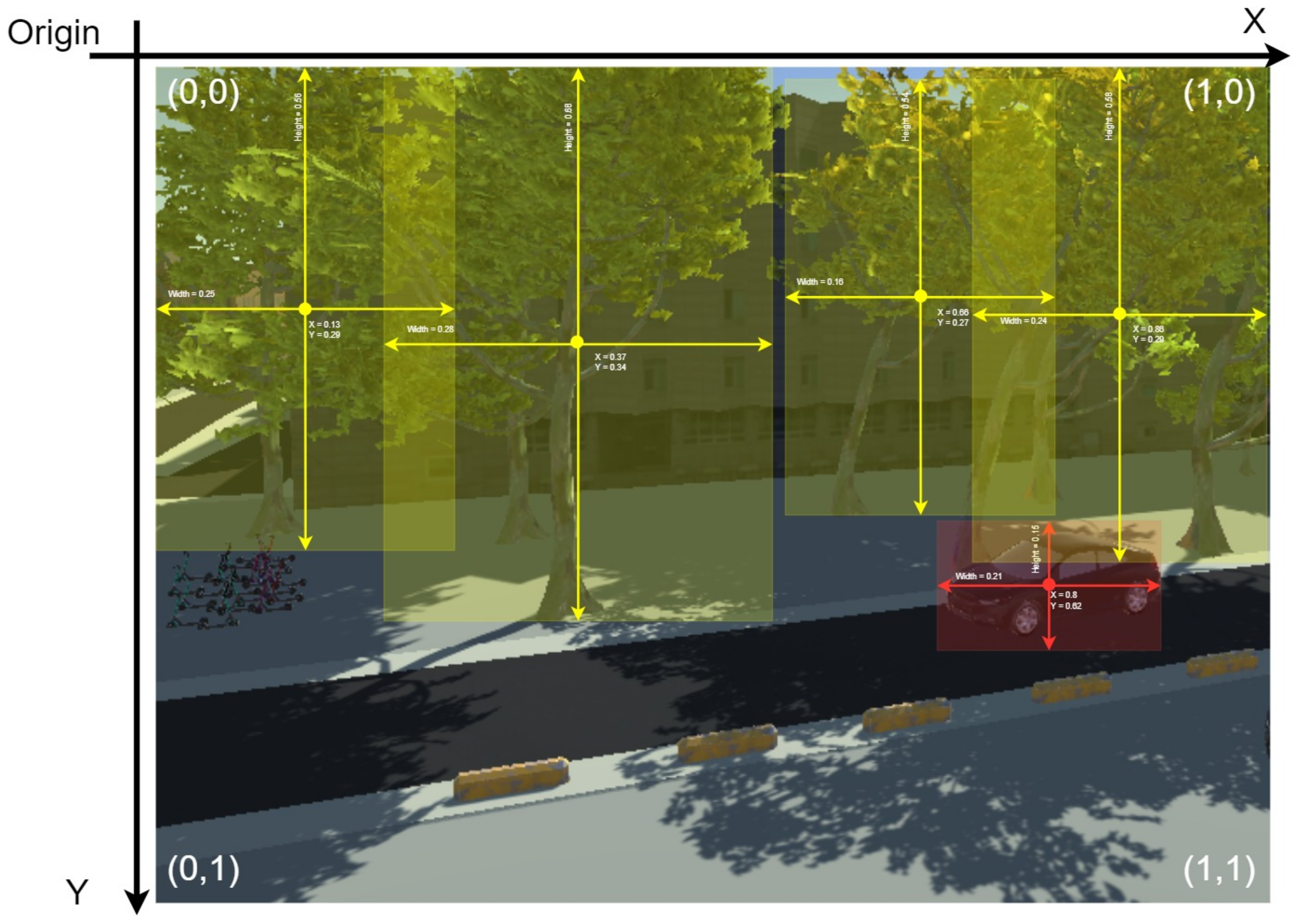

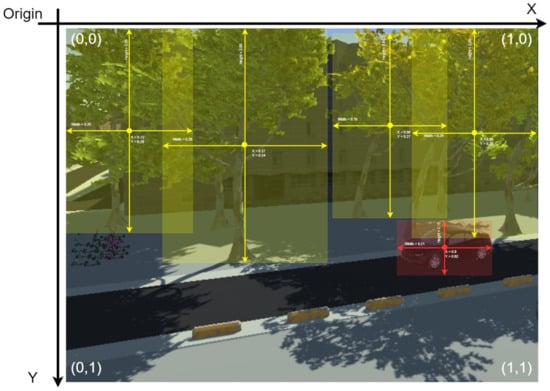

Our focus during this phase of research is on the use of datasets within object detection algorithms. We employ the YOLOv3 algorithm with pre-trained weights; YOLOv3 is conventionally trained and evaluated using the COCO (Common Objects in Context) [54] and ImageNet [55] datasets. Additionally, we developed a custom dataset derived from a simulated environment. Several choices were made to ensure the accuracy and suitability of the simulation-based method for UAM operations. First, we replicated environmental features, including buildings, roads, and terrain, in a simulation environment modeled on a real-world location (Konkuk University), so that the simulation mimics the real-world situations relevant to UAM operations. To create the training dataset, we used virtual sensor cameras in the virtualized environment to record a wide variety of situations and difficulties that arise in actual UAM operations. These cameras were placed to mimic the viewpoint of sensors installed on the UAM and captured RGB imagery of the surroundings (Figure 11). The resulting dataset captures the complexity of the dynamic environment, including changing lighting conditions and weather effects. To ensure diversity and quality, dataset generation involved careful data labeling and augmentation. We added variations in lighting, weather effects, and object orientations using data augmentation techniques such as the mix-up method, and we annotated the dataset with ground-truth labels for the object detection task.

This dataset comprises images, label files, and object class files, each annotated to support the supervised learning of the YOLO model, as illustrated in Figure 9. Each image is accompanied by a corresponding label file with one row per object. Each row contains five elements: a numerical identifier for the object’s class, the x and y coordinates of the bounding box’s center, and the width and height of the bounding box.

Figure 11.

Dataset construction from simulation environment.
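To make the five-element label row concrete, the sketch below parses one row and converts it to pixel corner coordinates. It assumes the common YOLO convention in which the center and size are normalized to the range [0, 1] by the image width and height; the text does not state the units, so this is an assumption. The class mapping follows the three classes used in this study (Car, Tree, Aerial).

```python
CLASS_NAMES = {0: "Car", 1: "Tree", 2: "Aerial"}


def parse_label_line(line, img_w, img_h):
    """Parse 'class x_center y_center width height' into (name, pixel box)."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:])
    # Assumed normalized coordinates: scale by image size to get pixels.
    x1 = (xc - w / 2) * img_w
    y1 = (yc - h / 2) * img_h
    x2 = (xc + w / 2) * img_w
    y2 = (yc + h / 2) * img_h
    return CLASS_NAMES[class_id], (x1, y1, x2, y2)


if __name__ == "__main__":
    print(parse_label_line("2 0.5 0.5 0.25 0.5", img_w=640, img_h=480))
```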

The efficacy of datasets in training and evaluating the performance of object detection algorithms is indisputable. As delineated in Figure 12, our research identifies three distinct classes of objects: Car (Class ID: 0), Tree (Class ID: 1), and Aerial (Class ID: 2). These classifications are further elaborated in Table 3, providing a detailed taxonomy of the object types identified in our study. This structured approach to dataset creation and annotation is essential for advancing the field of computer vision, particularly in the context of object detection algorithms, by providing a comprehensive and accurate training and testing framework.

Figure 12.

Create labels with different classes.

Table 3.

Classification.

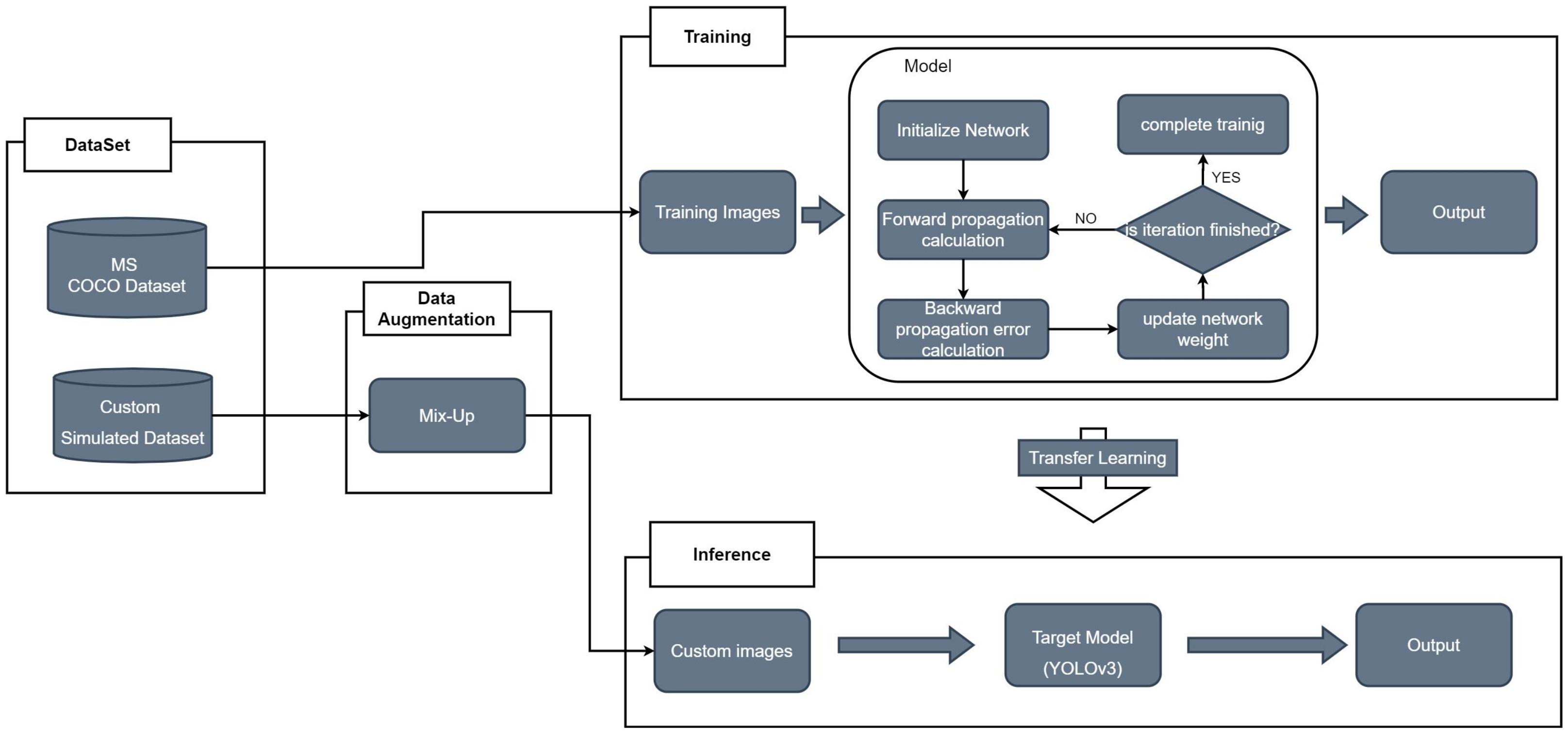

To avoid the need for large datasets and the high computational power required for training a model from scratch, this study uses transfer learning with YOLO technology. Transfer learning, as described by Athanasiadis et al. [56], involves using a pre-trained model’s weights as a starting point and retraining only the final layers to recognize a new set of classes. This method allows for model training with smaller datasets and less computational effort while achieving good accuracy. It combines deep learning and transfer learning to offer a quick and resource-efficient method for computer vision tasks, especially useful when large, labeled datasets are scarce. Transfer learning shifts knowledge from one task to another, making it crucial for efficiently training models for object detection and classification, as shown in Figure 13.

Figure 13.

YOLOv3 with transfer learning.

For object recognition, especially in complex environments like vehicle navigation, having a lot of data is important. However, the variety of data for training can be limited, affecting object detection performance. Data augmentation is a strategy to overcome this by increasing data diversity without collecting more data. It modifies existing data, such as images, to create more variations, helping the model learn from a wider array of examples. Data augmentation addresses the challenges of limited dataset variability, significantly boosting object detection model performance in various conditions.

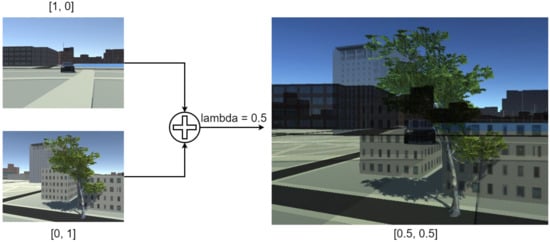

3.2.2. Data Augmentation Using Mix-Up Method

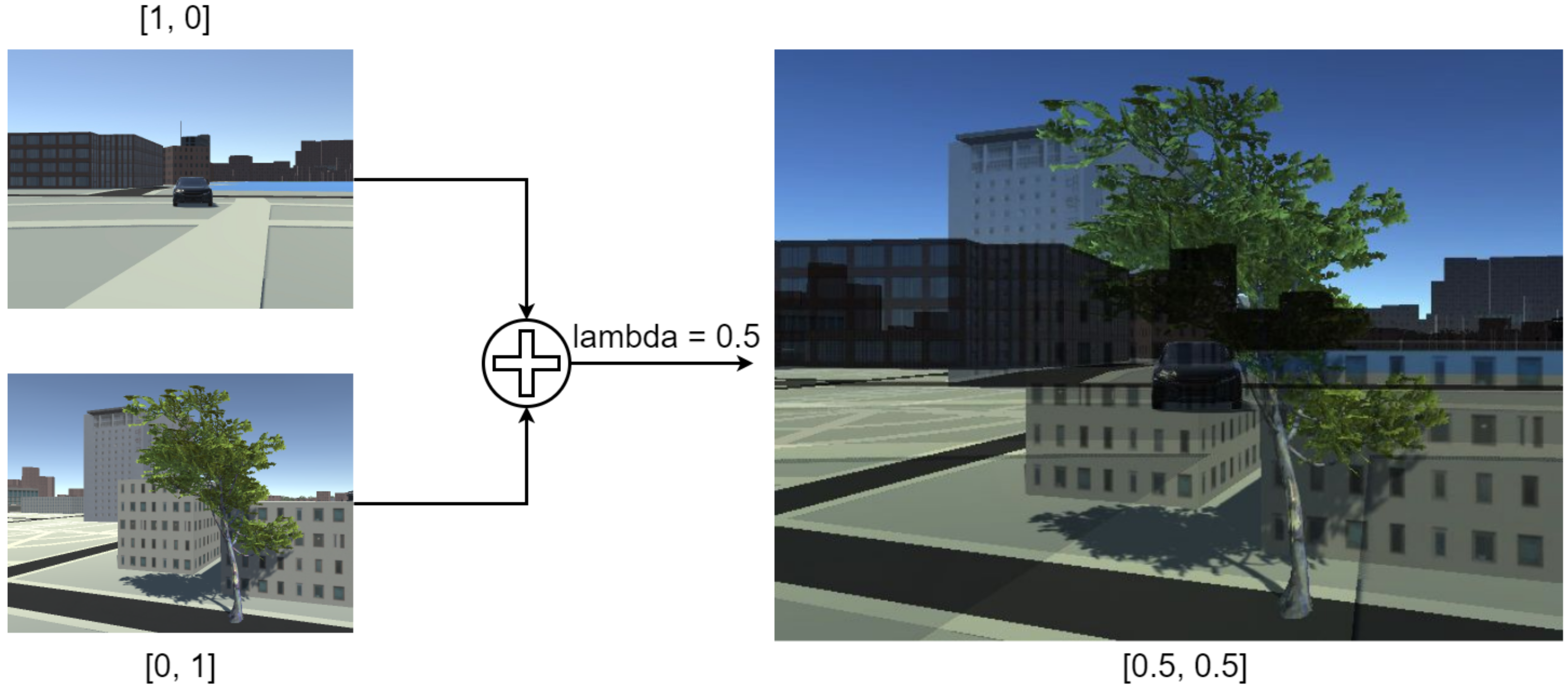

The adoption of the mix-up method in data augmentation presents an innovative strategy to enhance the diversity of training data for model development, without necessitating the acquisition of new data. This technique is particularly efficacious in augmenting the generalization capabilities of models. In the realm of image classification tasks, as delineated by Zhang et al. [57], the mix-up approach regularizes neural networks to promote simplified linear behaviors. This method employs pixel blending to interpolate between pairs of training images, effectively creating new, composite images.

In the implementation of the mix-up technique, the process begins by selecting the maximum width and height from a pair of images. A linear interpolation of these images is then conducted, resulting in a composite image as illustrated in Figure 14. The blending ratios for this interpolation are calculated using the beta distribution. Following this, annotations from both original images are merged into a unified list, signifying that bounding boxes from each image contribute to the final, blended image.

Figure 14.

Data augmentation with mix-up process.

This approach, when applied within the context of object detection, yields visually coherent images that are a blend of the original pairs. Furthermore, the mix-up method has demonstrated its efficacy in enhancing the mean average precision (mAP) scores of models. These scores are a crucial metric in object detection, indicating the accuracy of the model in identifying and classifying objects within images. The mix-up method, therefore, stands as a valuable tool in the data augmentation process, particularly in scenarios where the diversification of training data is essential yet constrained by the availability of original images.
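The mix-up procedure described above (take the maximum width and height, blend the two images with a ratio drawn from a beta distribution, and merge the annotations) can be sketched as follows. For brevity, images are single-channel nested lists and are padded at the bottom and right, so pixel-coordinate boxes need no adjustment. The beta parameter `alpha=1.5` and the function names are our assumptions, not values reported in this study.

```python
import random


def pad_to(img, height, width, fill=0.0):
    """Pad a 2-D image (list of rows) at the bottom/right to the given size."""
    padded = [list(row) + [fill] * (width - len(row)) for row in img]
    padded += [[fill] * width for _ in range(height - len(img))]
    return padded


def mixup(img_a, boxes_a, img_b, boxes_b, alpha=1.5, rng=random):
    """Blend two images pixel-wise and concatenate their box annotations."""
    height = max(len(img_a), len(img_b))
    width = max(len(img_a[0]), len(img_b[0]))
    lam = rng.betavariate(alpha, alpha)      # blending ratio in (0, 1)
    a = pad_to(img_a, height, width)
    b = pad_to(img_b, height, width)
    mixed = [
        [lam * pa + (1.0 - lam) * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]
    # Boxes from both source images stay valid in the blended image.
    return mixed, list(boxes_a) + list(boxes_b), lam


if __name__ == "__main__":
    img_a = [[1.0, 1.0]]                     # 1 x 2 image
    img_b = [[0.0], [0.0], [0.0]]            # 3 x 1 image
    mixed, boxes, lam = mixup(img_a, [(0, 0, 0, 2, 1)], img_b, [(1, 0, 0, 1, 3)])
    print(len(mixed), len(mixed[0]), len(boxes), round(lam, 3))
```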

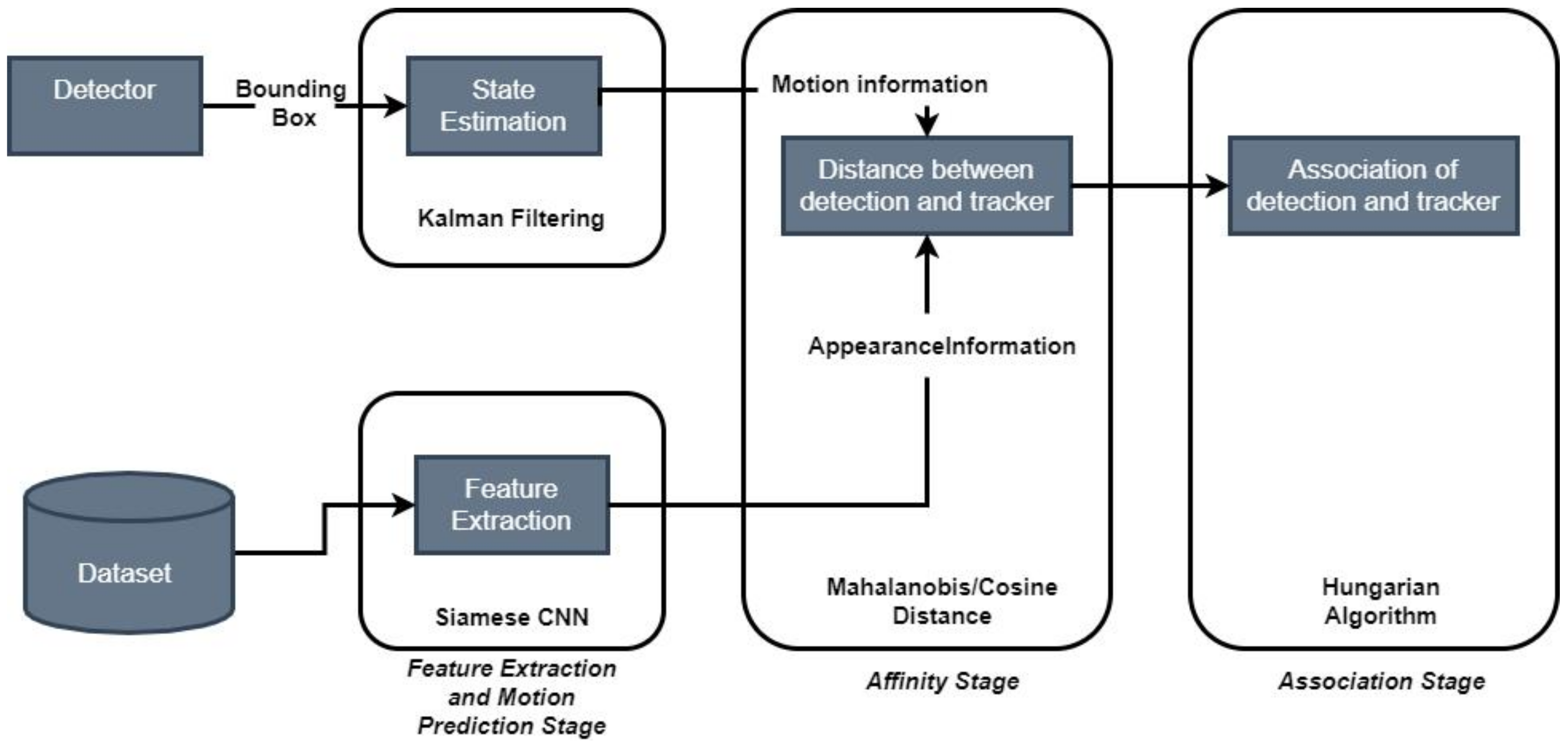

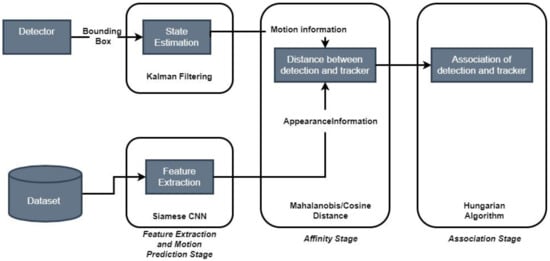

The process of tracking in object detection is a critical component, involving the identification and localization of objects across a sequence of frames. This process comprises two primary stages:

- Defining Objects for Tracking: Initially, objects of interest are defined within the initial frame by delineating bounding boxes around them. This stage sets the parameters for the objects to be tracked throughout the sequence.

- Appearance Modeling: The second stage focuses on the visual characteristics of the objects. As objects traverse through the frames, their appearances can alter due to varying lighting conditions, angles, and other environmental factors. Such changes pose challenges in consistent tracking.

To address these challenges, motion estimation techniques are employed to predict the future positions of the objects. This predictive information, when combined with the appearance model, facilitates precise localization of the objects in subsequent frames.

A notable advancement in this area is the DeepSort algorithm [58], illustrated in Figure 15. DeepSort extends the Simple Online and Real-time Tracking (SORT) algorithm for tracking multiple objects across frames. Its principal advantage lies in its re-identification model equipped with a deep appearance descriptor, which reduces the ID switches commonly encountered in multi-target tracking. Once the tracker is initialized with the selected tracker model, detected objects are fed into it for continuous monitoring.

Figure 15.

Overview dataflow of obstacle tracking using DeepSort method.

Key aspects of the DeepSort algorithm include:

- Kalman Filter: This filter predicts the positions of object bounding boxes based on their previous velocities. It operates on an eight-dimensional state space $(u, v, a, h, \dot{u}, \dot{v}, \dot{a}, \dot{h})$, which contains the bounding box center position $(u, v)$, the aspect ratio $a$, and the height $h$, while $\dot{u}$, $\dot{v}$, $\dot{a}$, and $\dot{h}$ represent the velocity of each element. The Kalman filter helps to handle the noise in the detections and uses prior states to predict a good fit for the bounding boxes. Predictions are then cross-referenced with detected bounding boxes using the Intersection over Union (IoU) metric, enhancing the accuracy of object tracking throughout the frames.

- Feature Vectors and Motion Predictions: Utilizing feature vectors and motion predictions derived from the Kalman filter, the algorithm computes a similarity or distance score between pairs of detections. This score helps in determining whether an object detected in one frame corresponds to the same object in subsequent frames, thus enabling continuous tracking.

- Tentative Tracks: If a new bounding box is detected and does not align with existing tracks, it is marked as a tentative track. The algorithm then attempts to associate this tentative track with other tracks in subsequent frames. Successful associations update the tracker, while unsuccessful ones lead to the removal of the tentative track. This process ensures effective tracking of objects as they move and change appearances over time.

Overall, the implementation of the DeepSort algorithm within object detection systems provides an advanced framework for the accurate and continuous tracking of objects, accounting for their dynamic nature in various environments.
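Two of the mechanisms above can be sketched in a simplified form: the constant-velocity prediction of the eight-dimensional state (center, aspect ratio, height, and their velocities) and the lifecycle of a tentative track. This sketch propagates only the state mean and omits the covariance update of a full Kalman filter. The class name and the confirmation count `n_init=3` are illustrative assumptions rather than settings reported here.

```python
def predict_state(state, dt=1.0):
    """Constant-velocity prediction: each element advances by velocity * dt."""
    u, v, a, h, du, dv, da, dh = state
    return (u + du * dt, v + dv * dt, a + da * dt, h + dh * dt, du, dv, da, dh)


def state_to_box(state):
    """Convert (center, aspect ratio, height) to corners (x1, y1, x2, y2)."""
    u, v, a, h = state[:4]
    w = a * h                      # aspect ratio is width divided by height
    return (u - w / 2, v - h / 2, u + w / 2, v + h / 2)


class Track:
    """Minimal track record with a tentative/confirmed/deleted status."""

    def __init__(self, state):
        self.state = state
        self.hits = 1              # the detection that created the track
        self.status = "tentative"

    def mark_matched(self, n_init=3):
        """A detection was associated with this track in the current frame."""
        self.hits += 1
        if self.status == "tentative" and self.hits >= n_init:
            self.status = "confirmed"

    def mark_missed(self):
        """No detection matched; an unconfirmed track is dropped."""
        if self.status == "tentative":
            self.status = "deleted"


if __name__ == "__main__":
    track = Track((10.0, 20.0, 0.5, 40.0, 1.0, -2.0, 0.0, 0.5))
    track.state = predict_state(track.state)
    print(state_to_box(track.state), track.status)
```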

4. Experiments and Results

4.1. Digitalized UAM

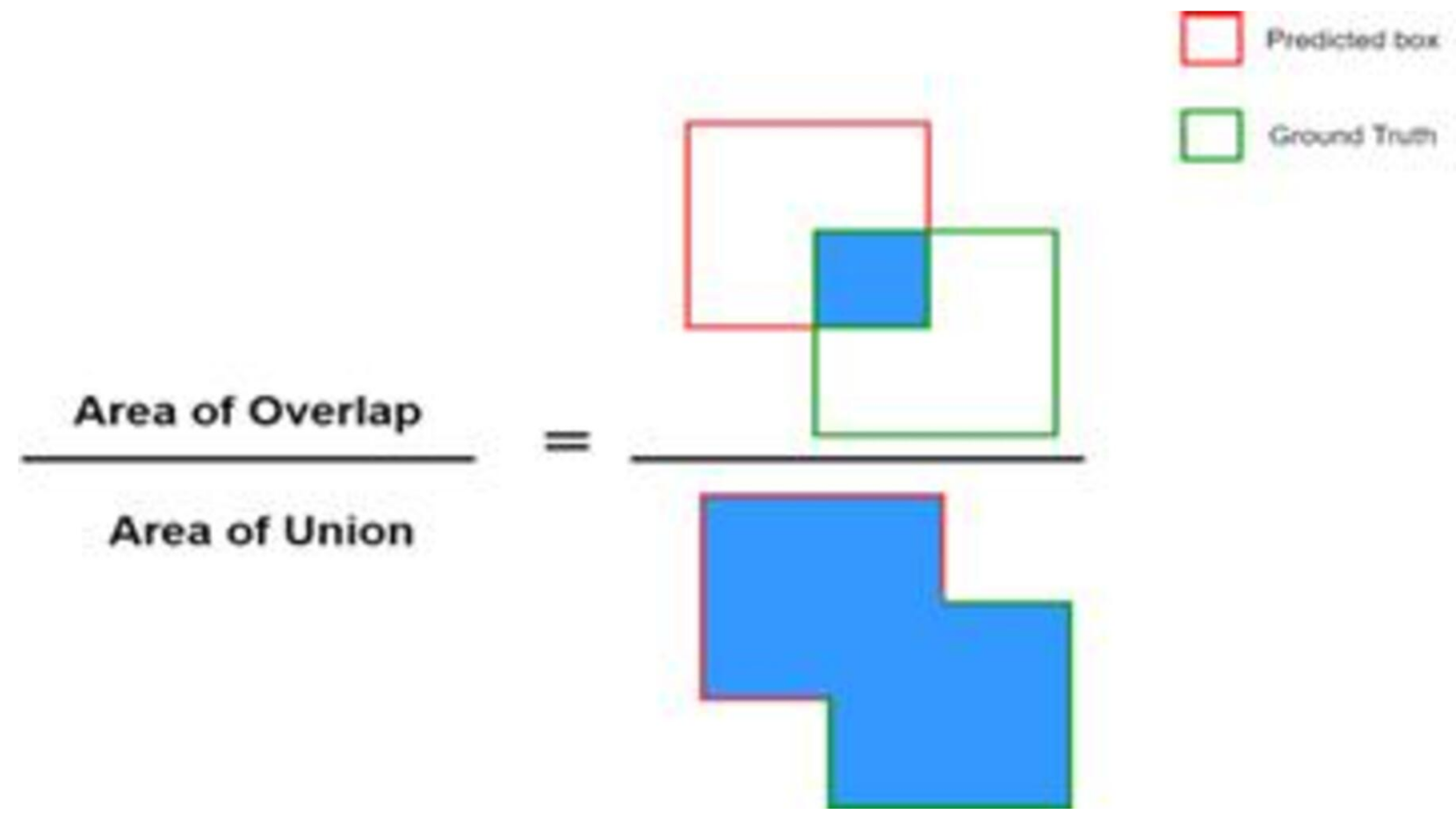

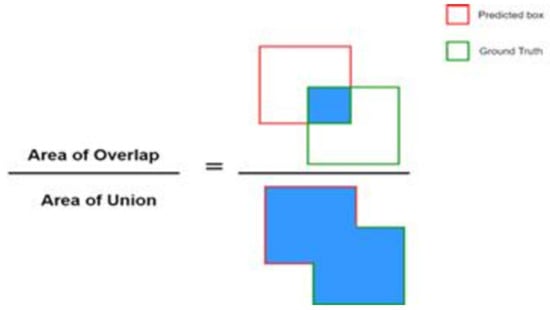

In this segment, we conduct an analytical evaluation of our object detection model and the overarching architectural framework. The output from the object detection process manifests in the form of bounding boxes and associated object classifications. To quantitatively assess the performance of our machine learning and deep learning models, we employ various metrics, among which mean average precision (mAP) is a key indicator. In our research, mAP serves as the primary metric to evaluate the accuracy of the object detector. This metric involves a comparative analysis between the ground-truth bounding boxes and those detected by the model. A higher mAP score is indicative of superior accuracy in object detection. The computation of the mAP score entails averaging the average precision (AP) across different levels of the Intersection over Union (IoU) threshold, as depicted in Figure 16.

Figure 16.

Intersection over union.

In object detection, models use bounding boxes and labels to make predictions. The Intersection over Union (IoU) metric checks the accuracy of these predictions by comparing them to the actual (ground-truth) boxes. IoU is key for assessing precision and recall, which together measure the model’s accuracy and ability to detect all relevant objects. Precision is the fraction of correct positive predictions out of all positive predictions made, showing how precise the model’s predictions are. Recall, on the other hand, shows how good the model is at finding all positive cases, calculated as the fraction of correct positive predictions out of all actual positives. Having high precision or recall alone does not mean the model is accurate; it is the balance between them that counts. The F1 score, which is the harmonic mean of precision and recall, is often used to evaluate a model’s overall accuracy. This balanced measure provides a more detailed and accurate evaluation of how well a model performs in object detection tasks.
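As a small worked example of these definitions, the sketch below computes precision, recall, and the F1 score from counts of true positives, false positives, and false negatives. The counts in the usage example are made up for illustration and are not results from this study.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: 6 correct detections, 2 spurious, 4 missed objects.
    p, r, f1 = precision_recall_f1(tp=6, fp=2, fn=4)
    print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

With these counts, precision (0.75) exceeds recall (0.60), and the F1 score of about 0.67 lies between them, closer to the lower value, which is the behavior expected of a harmonic mean.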

4.2. YOLOTransfer Model Performance Analysis

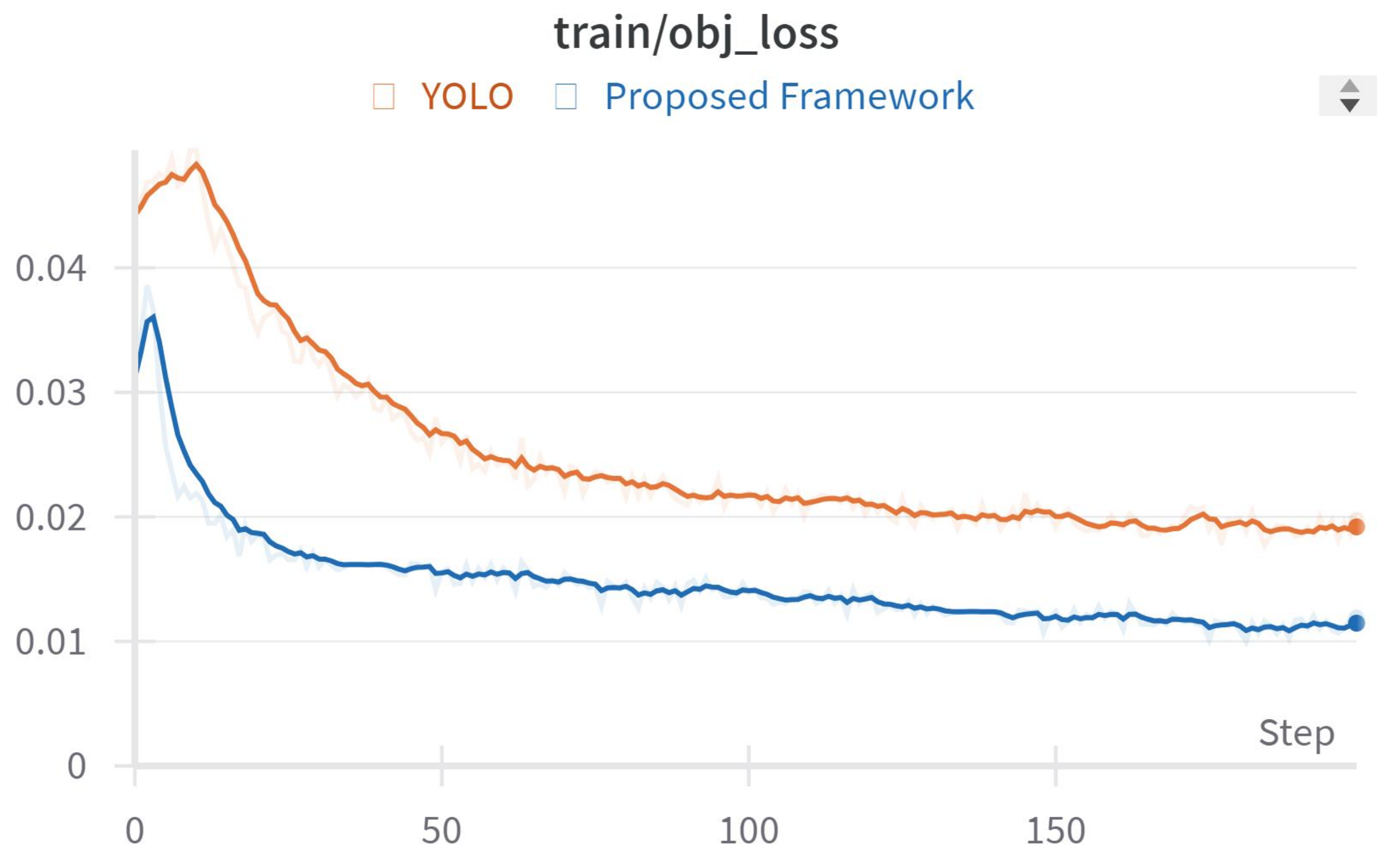

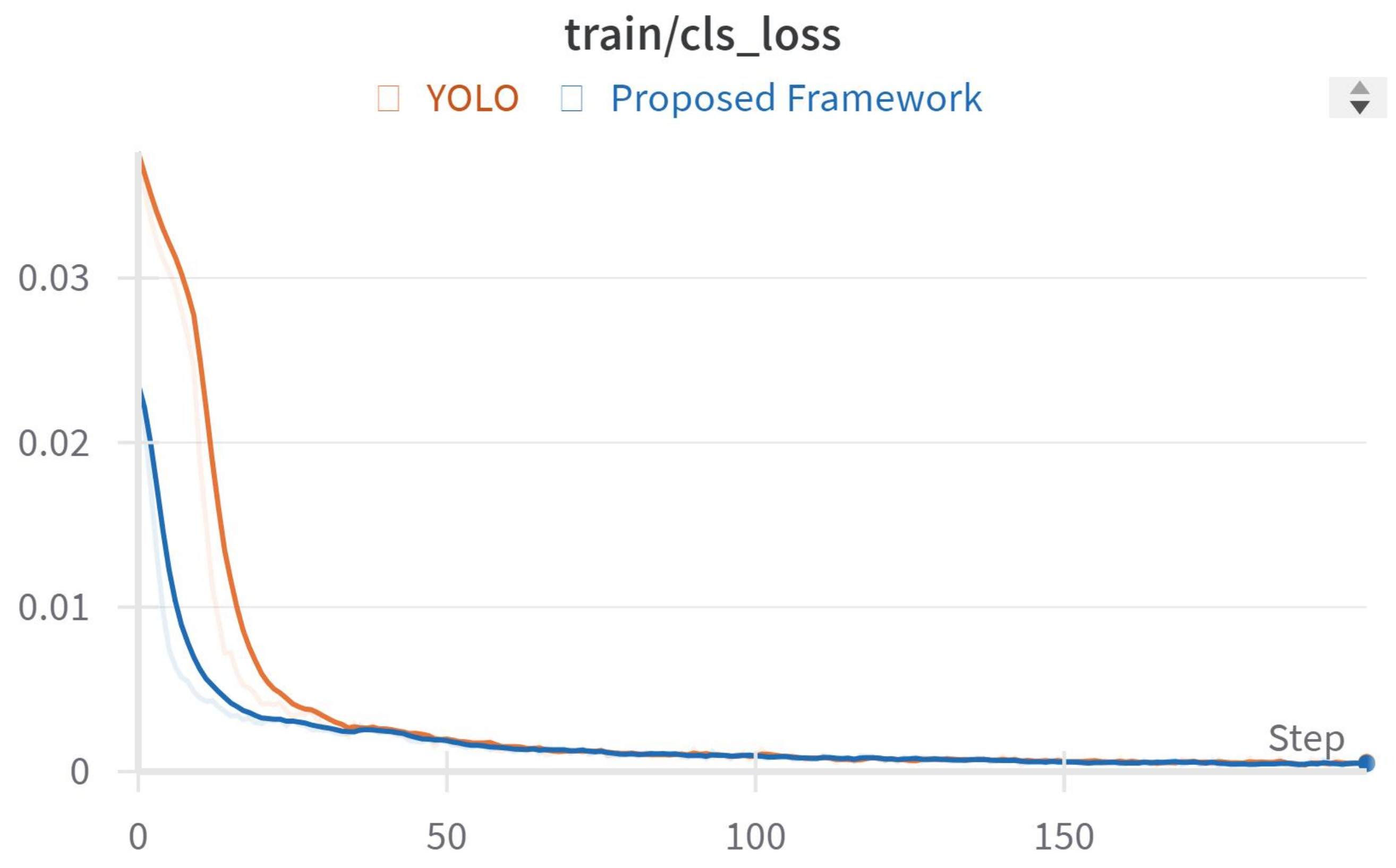

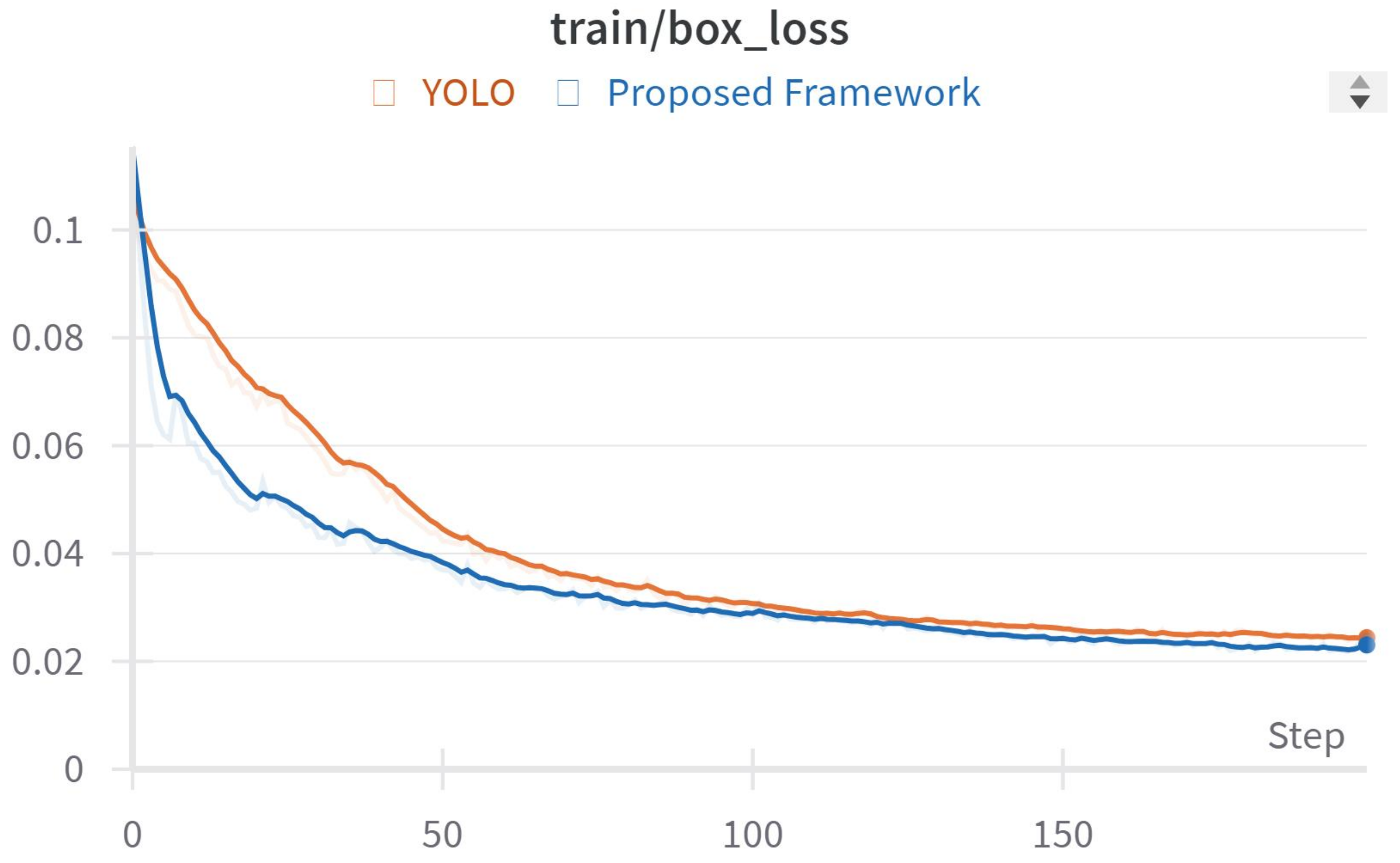

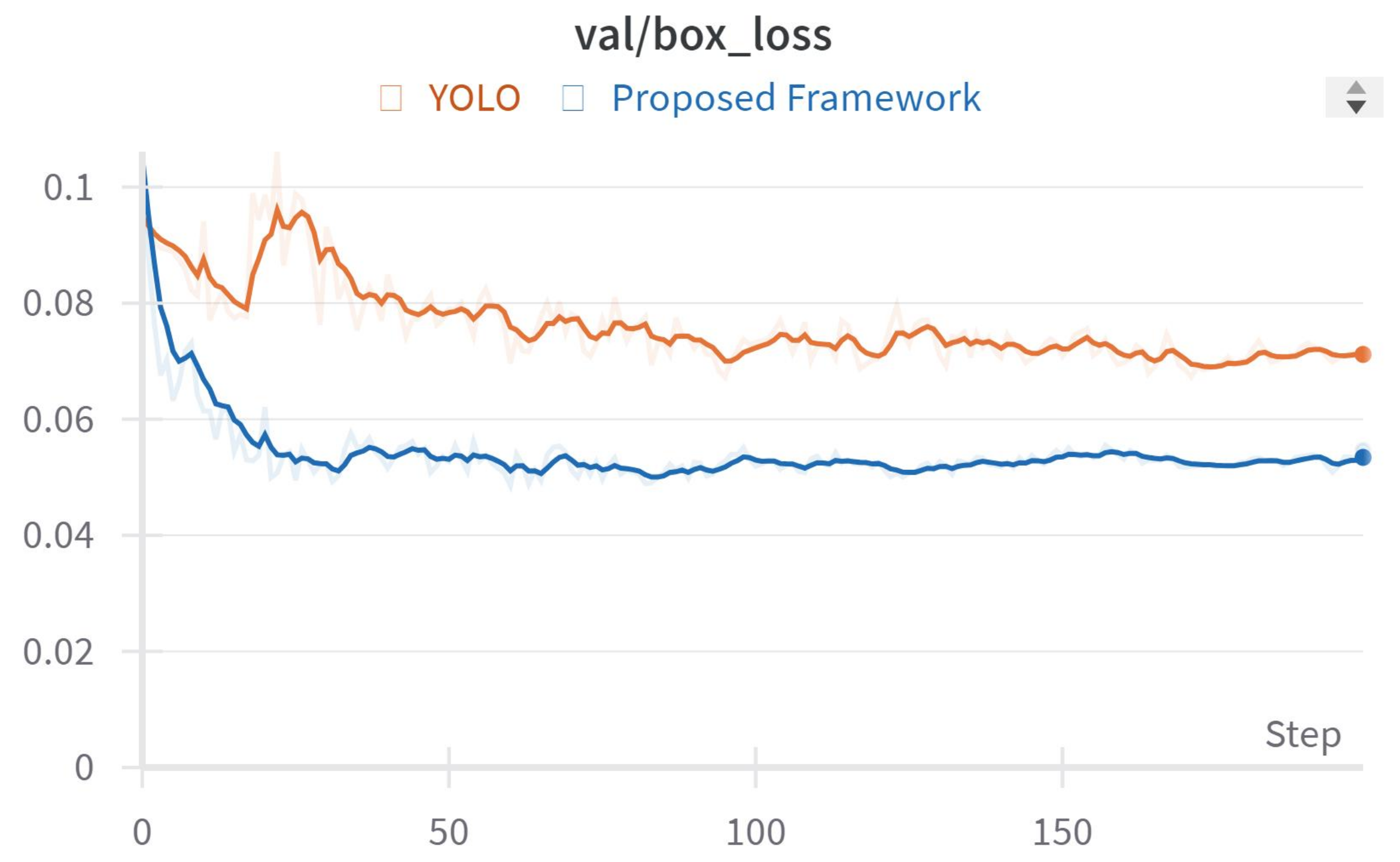

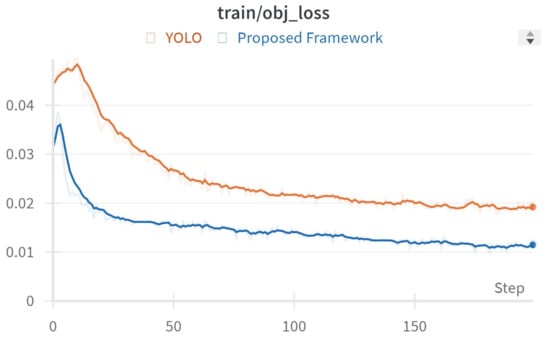

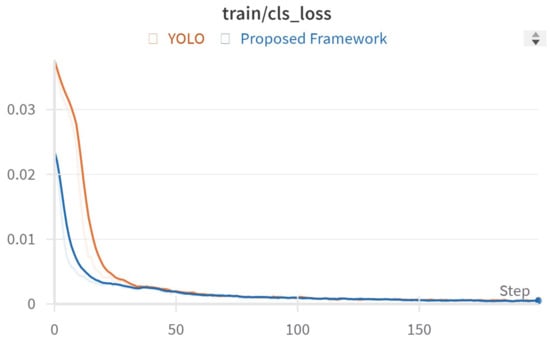

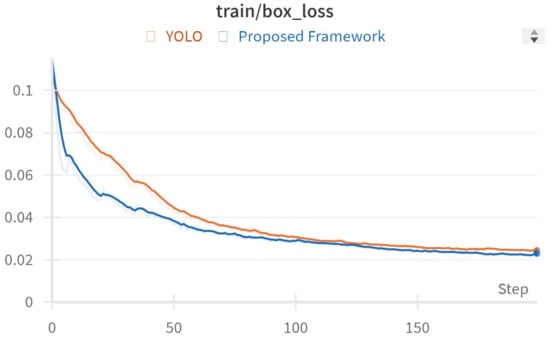

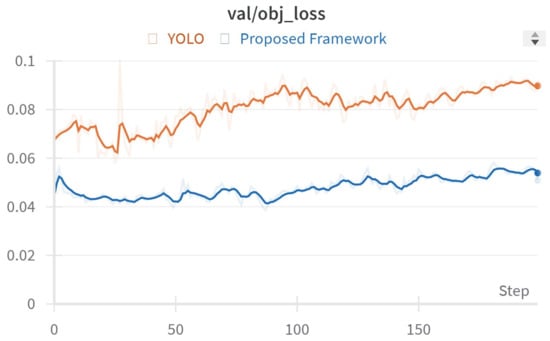

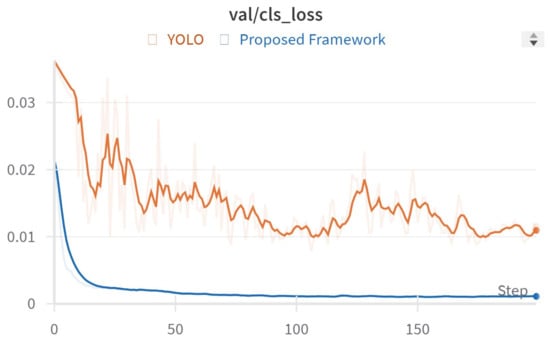

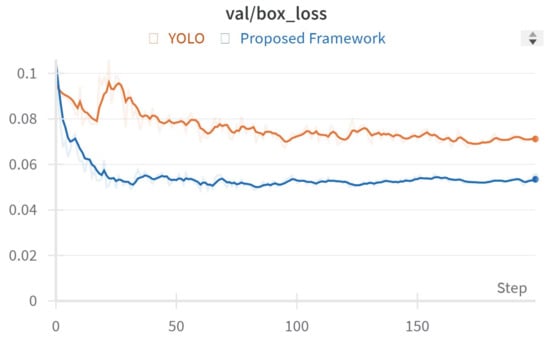

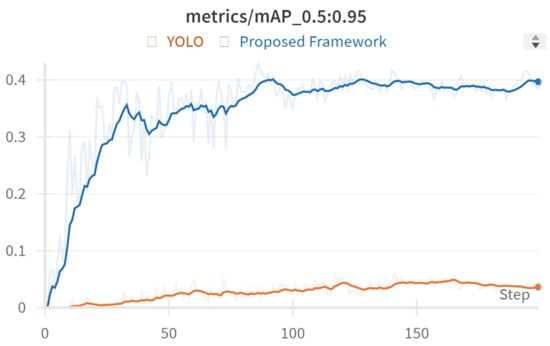

Figure 17, Figure 18 and Figure 19 show the training results of our framework for the three loss terms used in neural network training:

- Objectness loss measures how well the network predicts whether a bounding box contains an object, which helps it select the box that best covers the object.

- Classification loss measures how accurately the network identifies the type of object within the bounding box.

- Box regression loss measures how closely the predicted bounding box matches the object’s location and size, which matters especially when multiple boxes are predicted for one object in a grid cell.

Figure 17.

Objectness loss during training.

Figure 18.

Classification loss during training.

Figure 19.

Box loss during training.

Our training data showed the lowest loss values before 200 epochs, indicating effective training focused on refining the YOLOv3 model and our YOLOTransfer approach. We specifically designed the YOLOTransfer framework to work with custom simulation datasets, using data augmentation to train the model effectively for our simulated environment and object detection needs. The results highlight our framework’s success in reducing loss and optimizing the model, offering important insights for future improvements to increase accuracy and reliability in simulated object detection tasks.

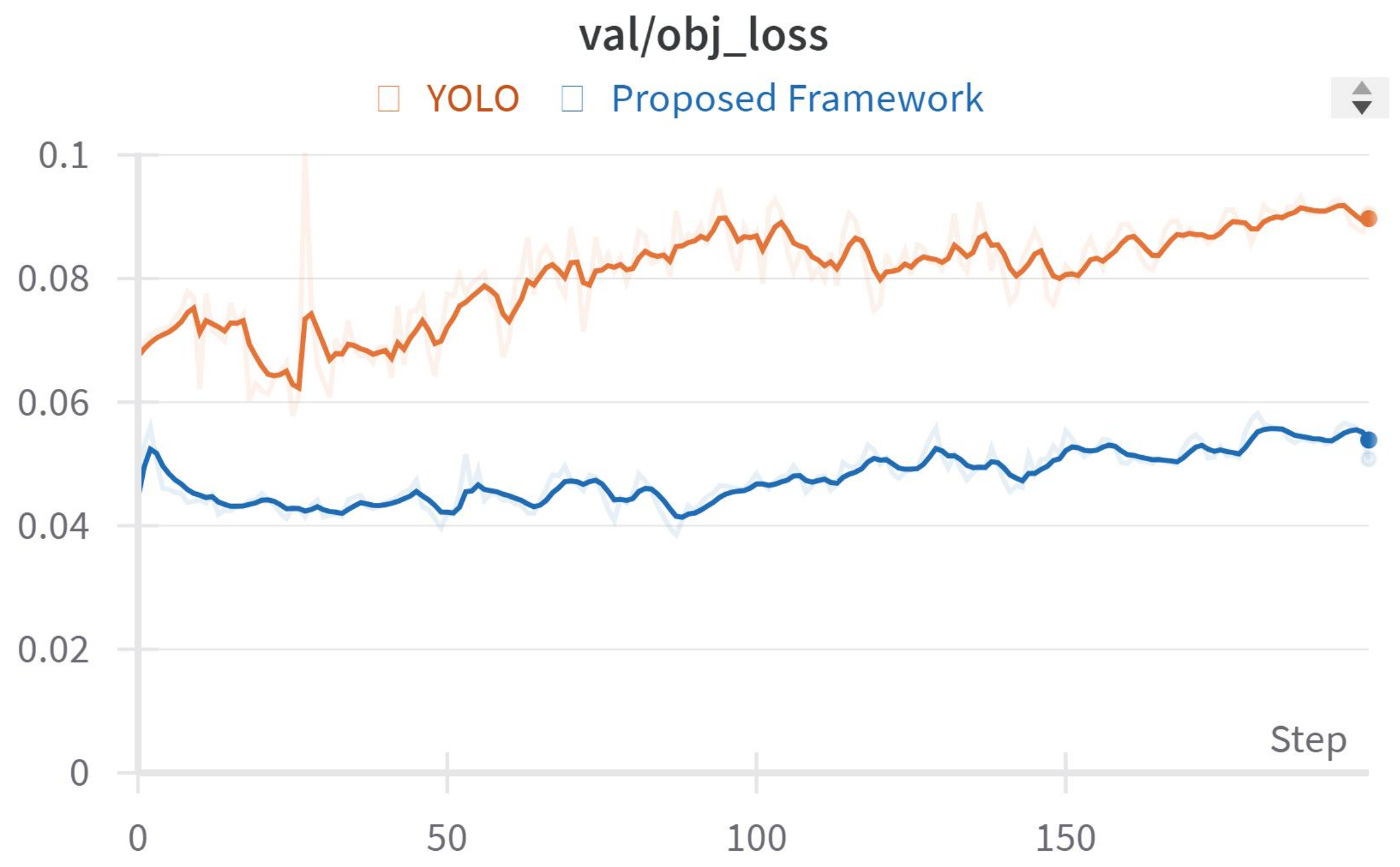

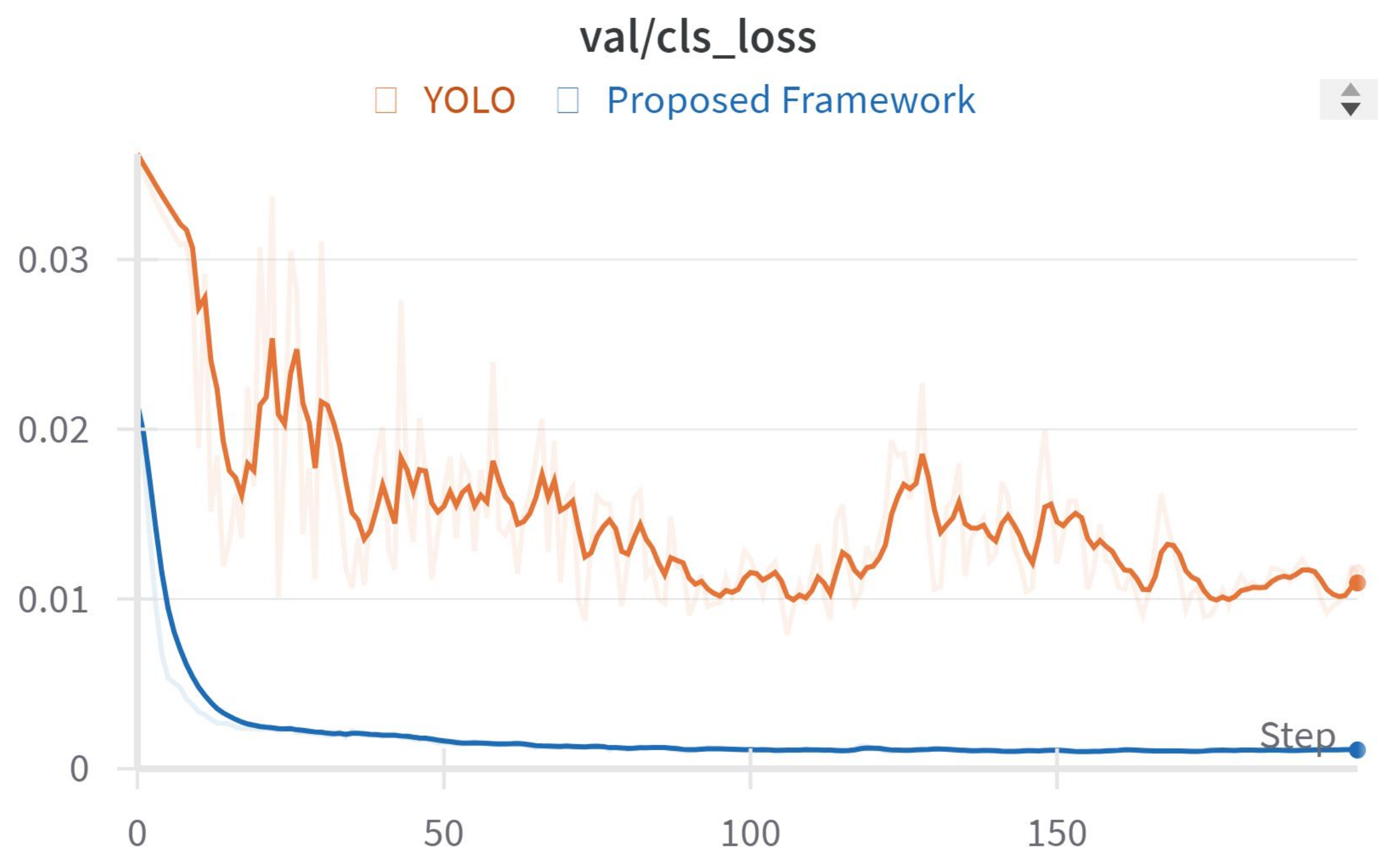

4.3. Digital–Physical Result Validation and Tests

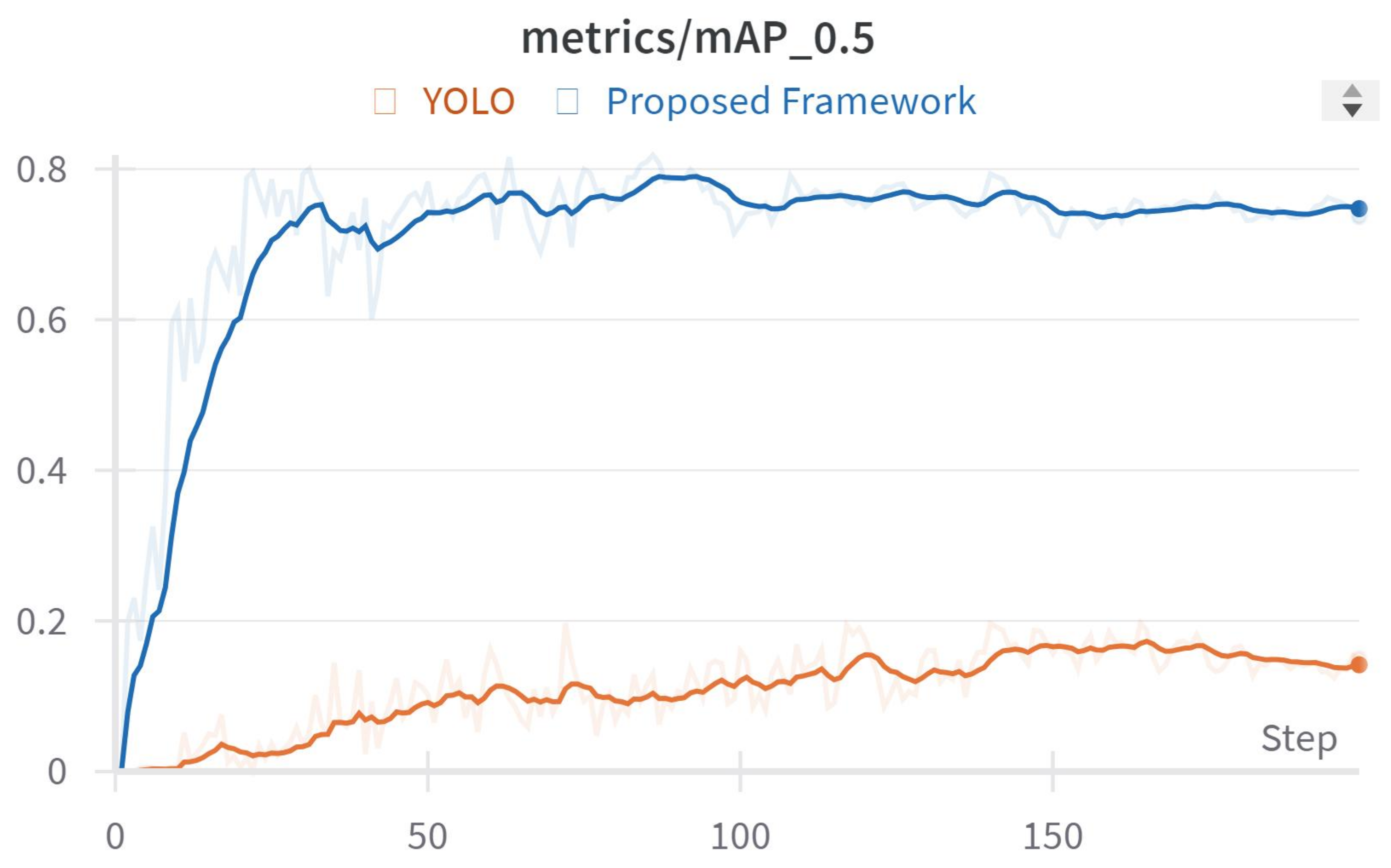

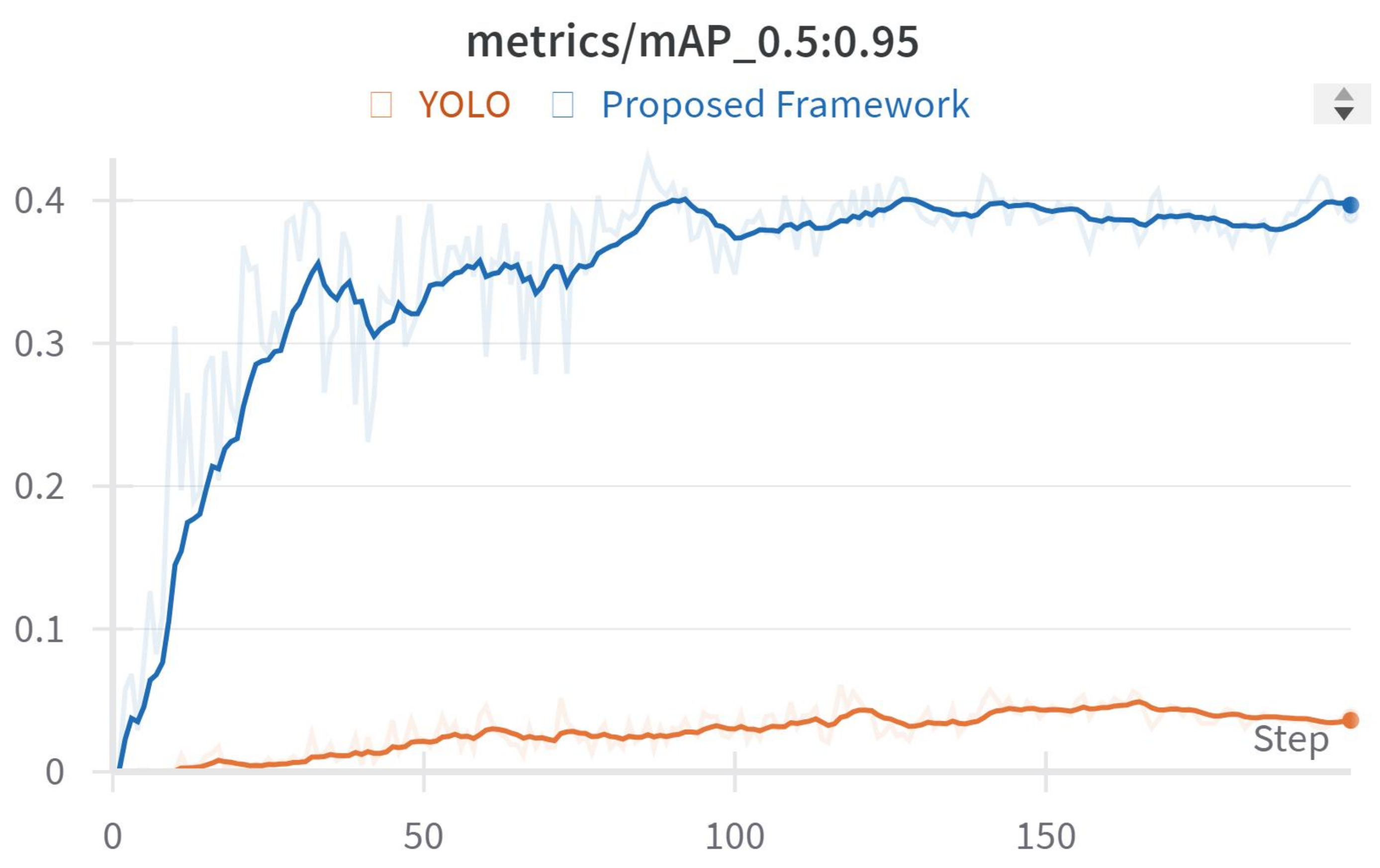

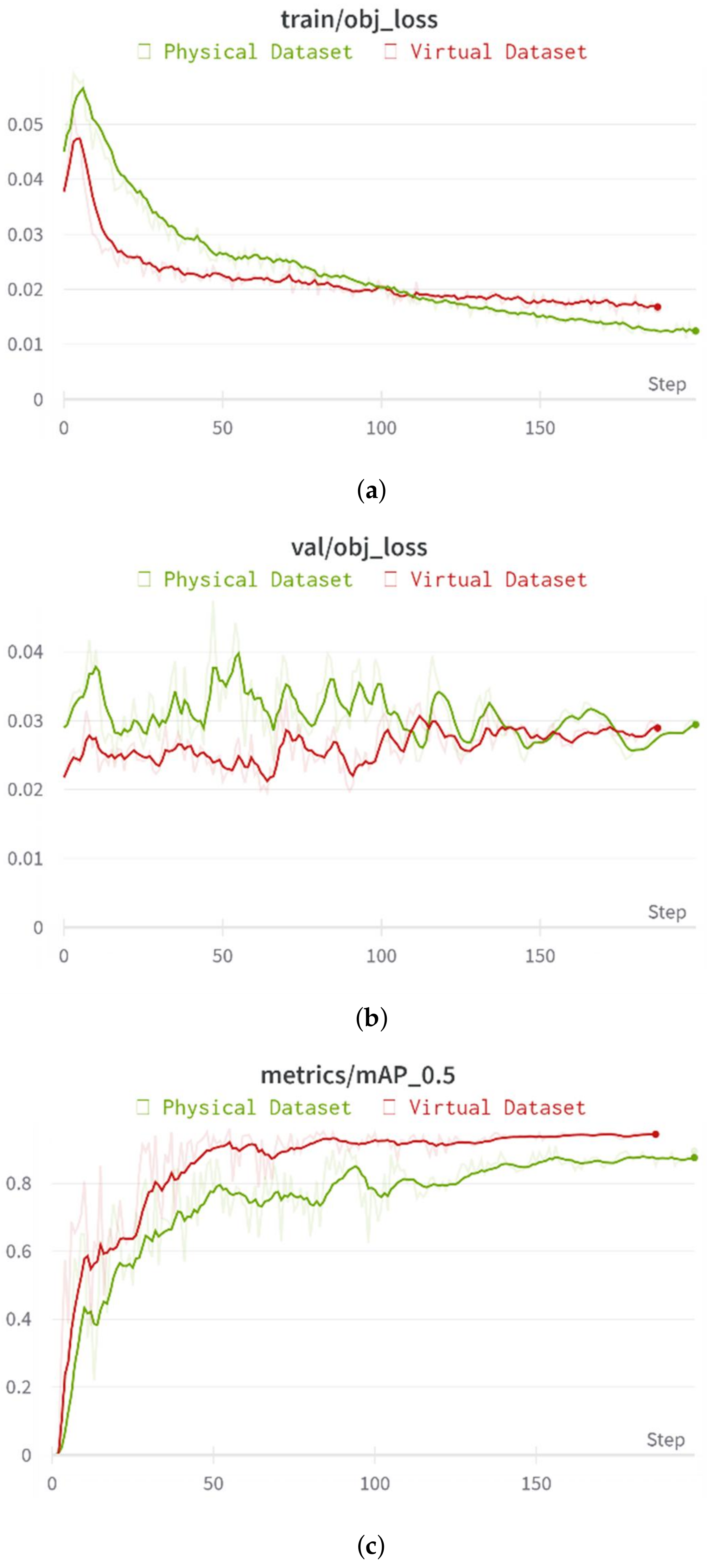

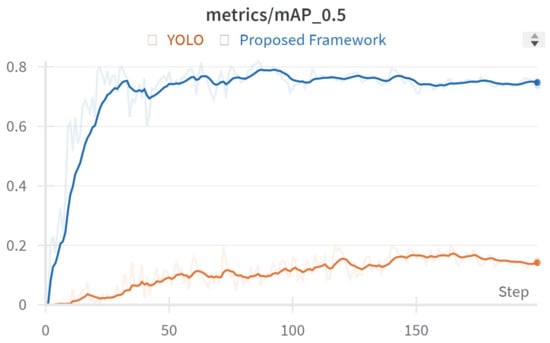

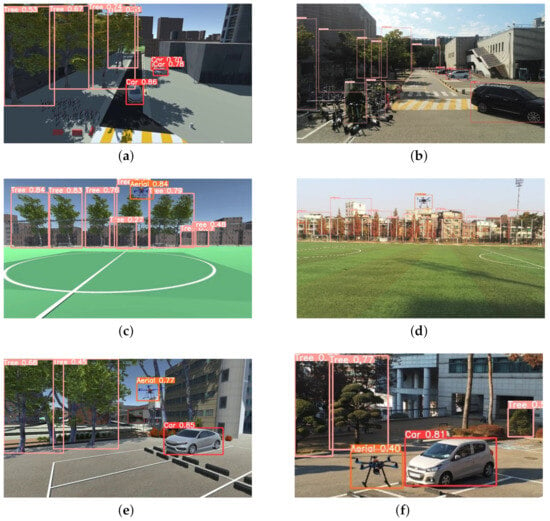

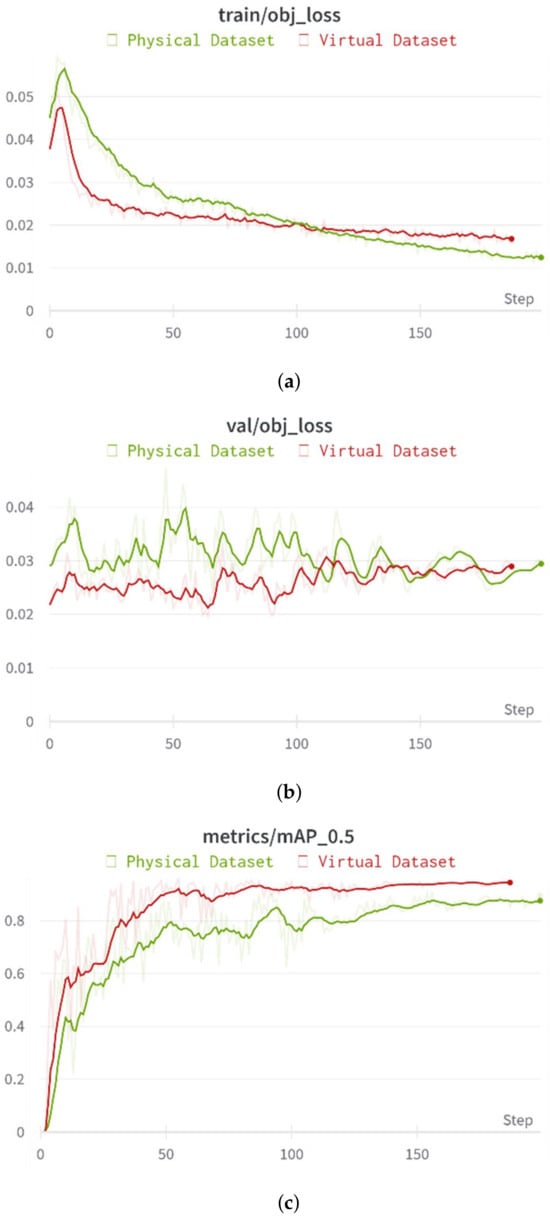

After finishing the training of the algorithm, we evaluate our model with a test dataset that includes three categories: Car, Tree, and Aerial. These categories are based on images taken by a drone in the real world. To check the model’s validity, we measure the validation loss using a validation dataset and apply metrics like precision, recall, and the F1 score. We also test the model with real-world data to create datasets that are effective both in virtual settings and in real-life applications. The object detector successfully recognizes and categorizes all items in the virtual dataset, including results from models that learned through transfer learning and the mix-up technique. The outcomes of this validation test are displayed in Figure 20, Figure 21, Figure 22, Figure 23 and Figure 24.

Figure 20.

Objectness loss for validation.

Figure 21.

Classification loss for validation.

Figure 22.

Box loss for validation.

Figure 23.

mAP score (threshold at 0.5).

Figure 24.

mAP score (threshold at 0.95).

Precision is computed from the model’s true-positive and false-positive predictions, and recall from its true-positive and false-negative predictions. A detection counts as a true positive when its IoU with a ground-truth box of the same class meets or exceeds the chosen threshold. The average precision (AP) of each class summarizes its precision–recall curve, and the mean average precision (mAP) is the mean of the AP values over all classes, as depicted in Figure 23 and Figure 24. This mAP score serves as an indicator of the model’s overall accuracy.
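One common way to compute these quantities is sketched below, assuming each detection of a class has already been marked as a true or false positive at a fixed IoU threshold. AP is taken as the area under the precision–recall curve after making precision non-increasing in recall, and mAP is the mean over classes. The evaluation code used in this study may use a different interpolation scheme, and the numbers in the usage example are illustrative only.

```python
def average_precision(scored_hits, num_ground_truth):
    """AP for one class from (confidence, is_true_positive) pairs."""
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p      # rectangle under the envelope
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)


if __name__ == "__main__":
    # Illustrative detections for one class with two ground-truth objects.
    car_ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
    print(mean_average_precision({"Car": car_ap, "Tree": 1.0, "Aerial": 0.5}))
```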

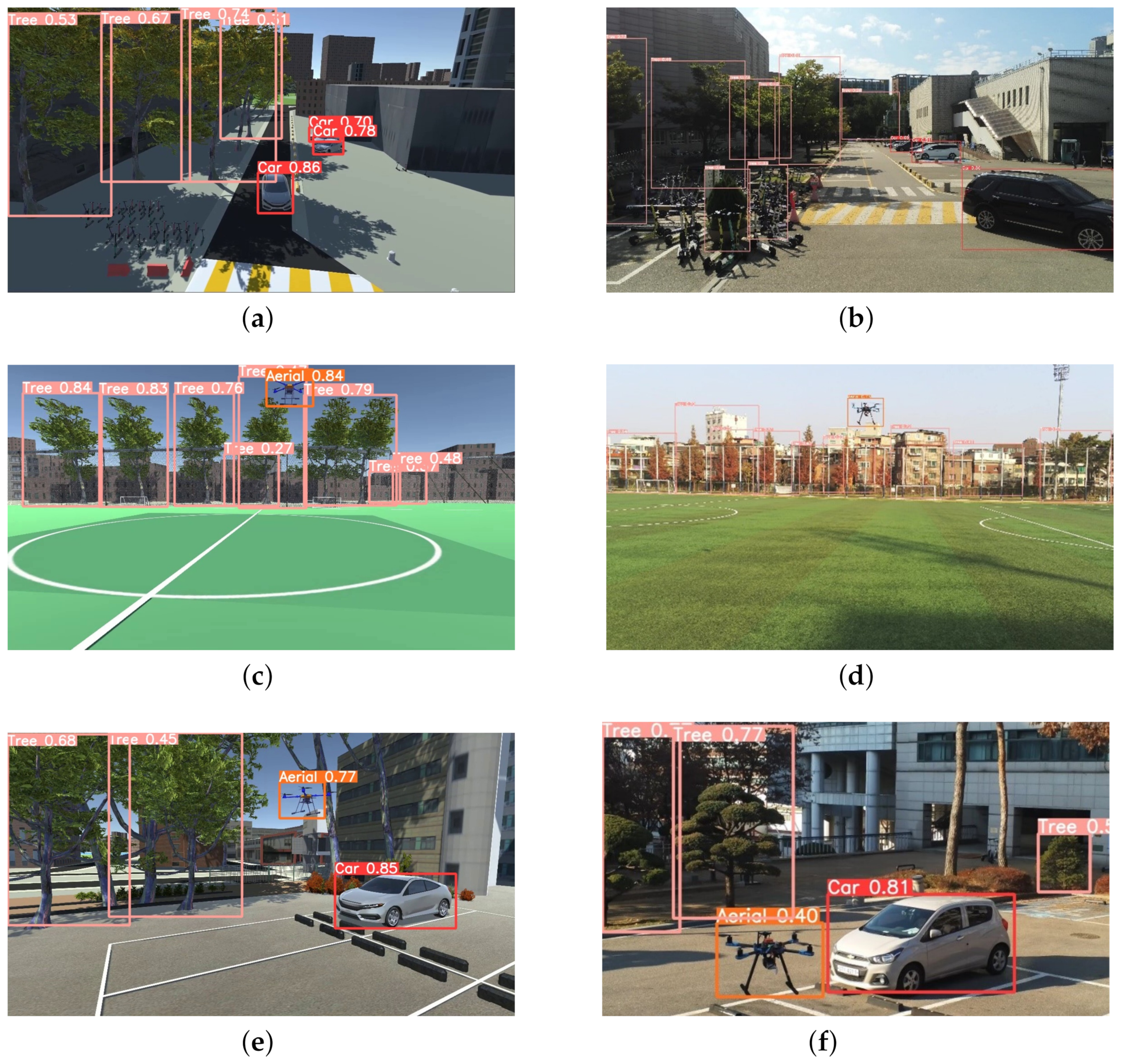

4.4. Case Studies

In this study, we show our results by drawing colored boxes around objects in images and identifying what each object is. We tested our system using special virtual datasets made with the AirSim-Unity simulator. Each type of object is marked with a different color box: red for cars, pink for trees, and orange for objects in the air. We checked how well our system can recognize objects by using it in three different real-world situations, as shown in Figure 25. These images not only prove our system’s ability to correctly identify objects but also highlight its usefulness in various real-life settings.

Figure 25.

Validation on situation awareness framework for digital twin (https://youtu.be/ByTFSfT0MQM, 16 December 2022): (a) virtual environment for Scenario One, (b) physical environment for Scenario One, (c) virtual environment for Scenario Two, (d) physical environment for Scenario Two, (e) virtual environment for Scenario Three, (f) physical environment for Scenario Three.

In this paper, we present a case study on creating and using a deep learning digital twin for AI training in a simulated environment, which is then applied to real-world situations. As shown in Figure 26, we tested various scenarios including ground obstacles, aerial challenges, and situations with both types of obstacles. The main goal was to see if virtual datasets could be used to check real-world objects and situations within our situation awareness framework. We compared results from real and virtual datasets and found similar accuracy in object detection between them. This outcome suggests that digital twins can effectively mimic real-life conditions, and virtual datasets can serve as reliable substitutes for real-world tests in training AI for situation awareness.

Figure 26.

Validation on digital twin: (a) training for objectness loss between physical dataset and virtual dataset, (b) validation for objectness loss between physical dataset and virtual dataset, (c) mAP score (threshold at 0.5) between physical dataset and virtual dataset.

4.5. Discussions

4.5.1. Justification of Methodology

In our research focused on UAM operations, we integrated the YOLOv3 and DeepSORT algorithms—renowned for their real-time object detection and tracking capabilities, respectively—into a digital twin framework designed to enhance situational awareness and safety. YOLOv3, notable for its swift processing and accuracy in object identification, combined with DeepSORT’s ability to track object movements over time, form the technological backbone of our study. These algorithms, deeply rooted in complex deep neural networks and machine learning techniques, involve intricate mathematical computations, though specific equations are embedded within the algorithms and not explicitly outlined. Our study also delves into key modeling principles essential for digital twin technology, emphasizing the digitalization of physical assets and the importance of high fidelity in replicating both static and dynamic aspects of the environment. By employing the AirSim environmental model and a detailed Konkuk University map refined through drone mapping for high fidelity, we created a realistic simulation environment. This environment includes lifelike graphics and both static and dynamic obstacles, enhanced by AirSim’s weather system for simulating real-world weather conditions, thus ensuring a comprehensive testing ground for our framework’s detection capabilities. The trustworthiness of our model, particularly for Detect and Avoid (DAA) systems, is underscored by its ability to accurately identify and alert to safety concerns, demonstrating a high degree of fidelity and reliability essential for practical DAA applications.

4.5.2. Offline Dataset Validation and Real-Time Simulation Scenarios

In this section, we discuss the evaluation of our framework, which encompasses both offline dataset validation and real-time simulation scenarios. Our evaluation approach aims to comprehensively assess the performance and adaptability of the framework in various settings, including controlled environments and dynamic digital twin scenarios. The validation of our framework using offline datasets is presented in Figure 24. This figure illustrates the results obtained from rigorous evaluation using offline datasets, providing insights into the accuracy and robustness of our algorithms. Through meticulous analysis and validation against ground-truth data, we demonstrate the efficacy of our framework in accurately detecting and avoiding obstacles in simulated UAM environments. Figure 25 illustrates the distinction between real-flight testing in physical environments and real-time simulation scenarios. The right side of the figure represents real-flight tests conducted in physical environments, while the left side depicts real-time simulation scenarios. This figure highlights the synchronization process between real-flight tests and real-time simulations, showcasing the integration of our framework into real-world operational environments and its adaptability to dynamic digital twin scenarios. Our evaluation approach, which includes both offline dataset validation and real-time simulation scenarios, provides a comprehensive understanding of the performance and adaptability of our framework. By leveraging offline datasets for rigorous validation and conducting real-time simulations to assess responsiveness and adaptability, we demonstrate the effectiveness of our framework in diverse UAM operational environments.

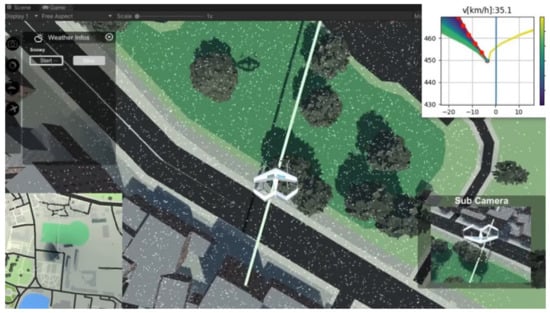

4.5.3. Various Simulation Scenarios

In addition to replicating various weather conditions such as rain and fog within our simulation environment, we are actively testing our framework’s performance in snowy conditions (Figure 27). This includes assessing the impact of snowfall on visibility and sensor performance, as well as evaluating the framework’s ability to detect and navigate obstacles effectively in snowy weather. By incorporating these diverse weather scenarios into our testing regimen, we aim to ensure the robustness and reliability of our framework across a range of real-world conditions.

Figure 27.

Weather simulation environment.

4.5.4. Impact of Real-World Factors

Real-world conditions can affect the effectiveness of the operational digital twin framework for collision detection, particularly with respect to environment and sensor modeling. We therefore integrated existing sensor models, such as AirSim’s camera sensor model, to improve accuracy in simulating real-world scenarios. However, external factors such as adverse weather can still affect sensor performance; for example, heavy snowfall may obscure the camera lens and reduce detection accuracy. To narrow the gap between simulation and physical reality, the MAVLink router connects the two sides, enabling a system that mirrors real-world conditions by estimating states from sensor inputs and generating actuator signals through the flight control process. Integrating the flight dynamics simulation with the flight control firmware through the SITL interface creates a digital system that operates like a single aircraft system. We are actively exploring strategies to make the framework more robust in adverse environmental conditions, including adaptive algorithms and additional sensor fusion techniques. Continued refinement of the modeling approaches and the use of advanced sensor technologies aim to improve the resilience of the digital twin framework across diverse real-world scenarios. Although certain elements of UAM operations, such as traffic or other urban dynamics, may not be perfectly replicated in our simulation environment, it consistently provides an essential training and evaluation environment. Our hybrid approach addresses concerns about simulation realism and applicability by training the model in a digital environment and validating it in real-world conditions. Because the model’s performance is tested in real-life situations as part of our validation, its applicability is evaluated thoroughly even though the simulation environment might not capture all real-world complexities.

4.5.5. Computational Performance

This analysis aims to demonstrate the operational viability of our framework in practical UAM scenarios. The framework was implemented and evaluated on a standard Personal Computer (PC), with a GPU (Graphics Processing Unit) used to optimize computational performance and enhance real-time responsiveness. The PC has the following specifications:

- Processor: AMD Ryzen 7 5800X 8-Core Processor 3.80 GHz.

- Memory: 32 GB DDR4 RAM.

- Graphics Card: NVIDIA GeForce GTX 1660.

- Storage: 1 TB SSD.

- Operating System: Windows 10.

For the drone used in our experiments, we selected hardware with specifications tailored to meet the computational demands of our system. The hardware configuration of the drone is given below for transparency and reproducibility. Our framework was also tested and evaluated on the drone platform to assess its performance in real-world UAM scenarios.

The specification of Pixhawk 5x is presented in Table 4.

Table 4.

Specification of Pixhawk 5x.

The specification of the companion computer (Raspberry Pi 4) is presented in Table 5.

Table 5.

Specification of Companion Computer (Raspberry Pi 4).

5. Conclusions

This paper introduces a new simulation and communication framework that uses a digital twin to connect virtual agents with their real-world equivalents, improving data gathering, predictive analysis, and simulation capabilities. We developed a virtual training space with Unity and AirSim to teach obstacle detection skills, applicable in real-life scenarios. Our research focuses on the YOLOTransfer-DT framework, which employs deep and transfer learning within a simulator to train a digital twin. We conducted a case study to prove the effectiveness of this framework in identifying objects, demonstrating how it merges the digital twin’s physical and virtual elements. The experiments show that deep learning models can accurately identify objects in real-time situations based on simulated data, which reduces the computational demands while keeping high accuracy. Future research will aim at creating a more precise detection system that uses both real and simulated data to improve situational awareness through both static and dynamic data. This will include developing a sophisticated digital twin model for virtual training and testing in the aviation sector, potentially advancing digital twin technology in autonomous systems significantly.

Author Contributions

Conceptualization, J.-W.L.; methodology, N.L.Y. and A.A.M.; software, N.L.Y.; validation, N.L.Y., A.A.M. and T.A.N.; formal analysis, N.L.Y.; investigation, T.A.N.; resources, N.L.Y. and A.A.M.; data curation, N.L.Y.; writing—original draft preparation, N.L.Y.; writing—review and editing, A.A.M. and T.A.N.; visualization, N.L.Y.; supervision, A.A.M. and T.A.N.; project administration, A.A.M.; funding acquisition, J.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. 2020R1A6A1A03046811).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Thipphavong, D.P.; Apaza, R.; Barmore, B.; Battiste, V.; Burian, B.; Dao, Q.; Feary, M.; Go, S.; Goodrich, K.H.; Homola, J.; et al. Urban Air Mobility Airspace Integration Concepts and Considerations. In Proceedings of the 2018 Aviation Technology, Integration, and Operations Conference, Reston, VA, USA, 25 June 2018. [Google Scholar] [CrossRef]

- Pons-Prats, J.; Živojinović, T.; Kuljanin, J. On the understanding of the current status of urban air mobility development and its future prospects: Commuting in a flying vehicle as a new paradigm. Transp. Res. Part E Logist. Transp. Rev. 2022, 166, 102868. [Google Scholar] [CrossRef]

- Hill, B.P.; DeCarme, D.; Metcalfe, M.; Griffin, C.; Wiggins, S.; Metts, C.; Bastedo, B.; Patterson, M.D.; Mendonca, N.L. Urban Air Mobility (UAM) Vision Concept of Operations (ConOps) UAM Maturity Level (UML)-4 Overview. In UAM UML-4 Vision ConOps Workshops; NASA: Greenbelt, MD, USA, 2020. [Google Scholar]

- Song, K.; Yeo, H. Development of optimal scheduling strategy and approach control model of multicopter VTOL aircraft for urban air mobility (UAM) operation. Transp. Res. Part C Emerg. Technol. 2021, 128, 103181. [Google Scholar] [CrossRef]

- Garrow, L.A.; German, B.J.; Leonard, C.E. Urban air mobility: A comprehensive review and comparative analysis with autonomous and electric ground transportation for informing future research. Transp. Res. Part C Emerg. Technol. 2021, 132, 103377. [Google Scholar] [CrossRef]

- Barmpounakis, E.N.; Vlahogianni, E.I.; Golias, J.C. Unmanned Aerial Aircraft Systems for transportation engineering: Current practice and future challenges. Int. J. Transp. Sci. Technol. 2016, 5, 111–122. [Google Scholar] [CrossRef]

- Duvall, T.; Green, A.; Langstaff, M.; Miele, K. Unmanned Air Mobility: The Challenges Ahead for Drone Infrastructure, 15 March 2021. Available online: https://www.mckinsey.com/industries/travel-logistics-and-infrastructure/our-insights/air-mobility-solutions-what-theyll-need-to-take-off (accessed on 18 December 2023).

- Rimjha, M.; Hotle, S.; Trani, A.; Hinze, N. Commuter demand estimation and feasibility assessment for Urban Air Mobility in Northern California. Transp. Res. Part A Policy Pract. 2021, 148, 506–524. [Google Scholar] [CrossRef]

- Jian, L.; Xiao-Min, L. Vision-based navigation and obstacle detection for UAV. In Proceedings of the 2011 International Conference on Electronics, Communications and Control (ICECC), Ningbo, China, 9–11 September 2011; pp. 1771–1774. [Google Scholar] [CrossRef]

- Badrloo, S.; Varshosaz, M.; Pirasteh, S.; Li, J. Image-Based Obstacle Detection Methods for the Safe Navigation of Unmanned Vehicles: A Review. Remote Sens. 2022, 14, 3824. [Google Scholar] [CrossRef]

- Chandran, N.K.; Sultan, M.T.H.; Łukaszewicz, A.; Shahar, F.S.; Holovatyy, A.; Giernacki, W. Review on Type of Sensors and Detection Method of Anti-Collision System of Unmanned Aerial Vehicle. Sensors 2023, 23, 6810. [Google Scholar] [CrossRef]

- Ben Atitallah, A.; Said, Y.; Ben Atitallah, M.A.; Albekairi, M.; Kaaniche, K.; Boubaker, S. An effective obstacle detection system using deep learning advantages to aid blind and visually impaired navigation. Ain Shams Eng. J. 2024, 15, 102387. [Google Scholar] [CrossRef]

- Pang, D.; Guan, Z.; Luo, T.; Su, W.; Dou, R. Real-time detection of road manhole covers with a deep learning model. Sci. Rep. 2023, 13, 16479. [Google Scholar] [CrossRef]

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95. [Google Scholar] [CrossRef]

- Unlu, E.; Zenou, E.; Riviere, N.; Dupouy, P.E. Deep learning-based strategies for the detection and tracking of drones using several cameras. IPSJ Trans. Comput. Vis. Appl. 2019, 11, 7. [Google Scholar] [CrossRef]

- Aydin, B.; Singha, S. Drone Detection Using YOLOv5. Eng 2023, 4, 416–433. [Google Scholar] [CrossRef]

- Lindenheim-Locher, W.; Świtoński, A.; Krzeszowski, T.; Paleta, G.; Hasiec, P.; Josiński, H.; Paszkuta, M.; Wojciechowski, K.; Rosner, J. YOLOv5 Drone Detection Using Multimodal Data Registered by the Vicon System. Sensors 2023, 23, 6396. [Google Scholar] [CrossRef]

- Yeon, H.; Eom, T.; Jang, K.; Yeo, J. DTUMOS, digital twin for large-scale urban mobility operating system. Sci. Rep. 2023, 13, 5154. [Google Scholar] [CrossRef] [PubMed]

- Tuchen, S.; LaFrance-Linden, D.; Hanley, B.; Lu, J.; McGovern, S.; Litvack-Winkler, M. Urban Air Mobility (UAM) and Total Mobility Innovation Framework and Analysis Case Study: Boston Area Digital Twin and Economic Analysis. In Proceedings of the 2022 IEEE/AIAA 41st Digital Avionics Systems Conference (DASC), Portsmouth, VA, USA, 18–22 September 2022; pp. 1–14. [Google Scholar] [CrossRef]

- Bachechi, C. Digital Twins for Urban Mobility. In New Trends in Database and Information Systems; Chiusano, S., Cerquitelli, T., Wrembel, R., Nørvåg, K., Catania, B., Vargas-Solar, G., Zumpano, E., Eds.; Springer: Cham, Switzerland, 2022; pp. 657–665. [Google Scholar]

- Kapteyn, M.G.; Pretorius, J.V.R.; Willcox, K.E. A probabilistic graphical model foundation for enabling predictive digital twins at scale. Nat. Comput. Sci. 2021, 1, 337–347. [Google Scholar] [CrossRef] [PubMed]

- Souanef, T.; Al-Rubaye, S.; Tsourdos, A.; Ayo, S.; Panagiotakopoulos, D. Digital Twin Development for the Airspace of the Future. Drones 2023, 7, 484. [Google Scholar] [CrossRef]

- Costa, J.; Matos, R.; Araujo, J.; Li, J.; Choi, E.; Nguyen, T.A.; Lee, J.W.; Min, D. Software Aging Effects on Kubernetes in Container Orchestration Systems for Digital Twin Cloud Infrastructures of Urban Air Mobility. Drones 2023, 7, 35. [Google Scholar] [CrossRef]

- Agrawal, A.; Fischer, M.; Singh, V. Digital Twin: From Concept to Practice. J. Manag. Eng. 2022, 38, 06022001. [Google Scholar] [CrossRef]

- Boje, C.; Guerriero, A.; Kubicki, S.; Rezgui, Y. Towards a semantic Construction Digital Twin: Directions for future research. Autom. Constr. 2020, 114, 103179. [Google Scholar] [CrossRef]

- Glaessgen, E.H.; Stargel, D.S. The Digital Twin Paradigm for Future NASA and U.S. Air Force Vehicles. In Proceedings of the 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference, Honolulu, HI, USA, 23–26 April 2012. [Google Scholar]

- Kim, S.H. Receding Horizon Scheduling of on-Demand Urban Air Mobility with Heterogeneous Fleet. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 2751–2761. [Google Scholar] [CrossRef]

- Brunelli, M.; Ditta, C.C.; Postorino, M.N. A Framework to Develop Urban Aerial Networks by Using a Digital Twin Approach. Drones 2022, 6, 387. [Google Scholar] [CrossRef]

- Botín-Sanabria, D.M.; Mihaita, A.S.; Peimbert-García, R.E.; Ramírez-Moreno, M.A.; Ramírez-Mendoza, R.A.; Lozoya-Santos, J.D.J. Digital Twin Technology Challenges and Applications: A Comprehensive Review. Remote Sens. 2022, 14, 1335. [Google Scholar] [CrossRef]

- Luo, J.; Liu, P.; Cao, L. Coupling a Physical Replica with a Digital Twin: A Comparison of Participatory Decision-Making Methods in an Urban Park Environment. ISPRS Int. J. -Geo-Inf. 2022, 11, 452. [Google Scholar] [CrossRef]

- Tao, F.; Sui, F.; Liu, A.; Qi, Q.; Zhang, M.; Song, B.; Guo, Z.; Lu, S.C.Y.; Nee, A.Y.C. Digital twin-driven product design framework. Int. J. Prod. Res. 2019, 57, 3935–3953. [Google Scholar] [CrossRef]

- Austin, M.; Delgoshaei, P.; Coelho, M.; Heidarinejad, M. Architecting Smart City Digital Twins: Combined Semantic Model and Machine Learning Approach. J. Manag. Eng. 2020, 36, 04020026. [Google Scholar] [CrossRef]

- Dembski, F.; Wössner, U.; Letzgus, M.; Ruddat, M.; Yamu, C. Urban Digital Twins for Smart Cities and Citizens: The Case Study of Herrenberg, Germany. Sustainability 2020, 12, 2307. [Google Scholar] [CrossRef]

- Chen, X.; Kang, E.; Shiraishi, S.; Preciado, V.M.; Jiang, Z. Digital Behavioral Twins for Safe Connected Cars. In Proceedings of the 21th ACM/IEEE International Conference on Model Driven Engineering Languages and Systems, New York, NY, USA, 14–19 October 2018; pp. 144–153. [Google Scholar] [CrossRef]

- Li, L.; Aslam, S.; Wileman, A.; Perinpanayagam, S. Digital Twin in Aerospace Industry: A Gentle Introduction. IEEE Access 2022, 10, 9543–9562. [Google Scholar] [CrossRef]

- Aldao, E.; González-de Santos, L.; González-Jorge, H. LiDAR Based Detect and Avoid System for UAV Navigation in UAM Corridors. Drones 2022, 6, 185. [Google Scholar] [CrossRef]

- Lv, Z.; Chen, D.; Feng, H.; Lou, R.; Wang, H. Beyond 5G for digital twins of UAVs. Comput. Netw. 2021, 197, 108366. [Google Scholar] [CrossRef]

- Fraser, B.; Al-Rubaye, S.; Aslam, S.; Tsourdos, A. Enhancing the Security of Unmanned Aerial Systems using Digital-Twin Technology and Intrusion Detection. In Proceedings of the 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 October 2021; pp. 1–10. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238. [Google Scholar] [CrossRef] [PubMed]

- Kotwicz Herniczek, M.T.; German, B.J. Nationwide Demand Modeling for an Urban Air Mobility Commuting Mission. J. Air Transp. 2023, 1–15. [Google Scholar] [CrossRef]

- Fu, M.; Straubinger, A.; Schaumeier, J. Scenario-Based Demand Assessment of Urban Air Mobility in the Greater Munich Area. J. Air Transp. 2022, 30, 125–136. [Google Scholar] [CrossRef]

- Hearn, T.A.; Kotwicz Herniczek, M.T.; German, B.J. Conceptual Framework for Dynamic Optimal Airspace Configuration for Urban Air Mobility. J. Air Transp. 2023, 31, 68–82. [Google Scholar] [CrossRef]

- Campbell, N.H.; Gregory, I.M.; Acheson, M.J.; Ilangovan, H.S.; Ranganathan, S. Benchmark Problem for Autonomous Urban Air Mobility. In Proceedings of the AIAA SCITECH 2024 Forum, Orlando, FL, USA, 8–12 January 2024. [Google Scholar] [CrossRef]

- Ayyalasomayajula, S.K.; Ryabov, Y.; Nigam, N. A Trajectory Generator for Urban Air Mobility Flights. In Proceedings of the AIAA AVIATION 2021 FORUM, Virtual Event, 2–6 August 2021. [Google Scholar] [CrossRef]

- Wright, E.; Gunady, N.; Chao, H.; Li, P.C.; Crossley, W.A.; DeLaurentis, D.A. Assessing the Impact of a Changing Climate on Urban Air Mobility Viability. In Proceedings of the AIAA AVIATION 2022 Forum, Chicago, IL, USA, 27 June–1 July 2022. [Google Scholar] [CrossRef]

- Perez, D.G.; Diaz, P.V.; Yoon, S. High-Fidelity Simulations of a Tiltwing Vehicle for Urban Air Mobility. In Proceedings of the AIAA SCITECH 2023 Forum, National Harbor, MD, USA, 23–27 January 2023. [Google Scholar] [CrossRef]

- Wang, X.; Balchanos, M.G.; Mavris, D.N. A Feasibility Study for the Development of Air Mobility Operations within an Airport City (Aerotropolis). In Proceedings of the AIAA SCITECH 2023 Forum, National Harbor, MD, USA, 23–27 January 2023. [Google Scholar] [CrossRef]

- Chakraborty, I.; Comer, A.M.; Bhandari, R.; Mishra, A.A.; Schaller, R.; Sizoo, D.; McGuire, R. Flight Simulation Based Assessment of Simplified Vehicle Operations for Urban Air Mobility. In Proceedings of the AIAA SCITECH 2023 Forum, National Harbor, MD, USA, 23–27 January 2023. [Google Scholar] [CrossRef]

- Yedavalli, P.S.; Onat, E.B.; Peng, X.; Sengupta, R.; Waddell, P.; Bulusu, V.; Xue, M. SimUAM: A Toolchain to Integrate Ground and Air to Evaluate Urban Air Mobility’s Impact on Travel Behavior. In Proceedings of the AIAA AVIATION 2022 Forum, Chicago, IL, USA, 27 June–1 July 2022. [Google Scholar] [CrossRef]

- Nánásiová, O.; Pulmannová, S. S-map and tracial states. Inf. Sci. 2009, 179, 515–520. [Google Scholar] [CrossRef]

- Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles. arXiv 2017, arXiv:1705.05065. [Google Scholar]

- Kishor, K.; Rani, R.; Rai, A.K.; Sharma, V. 3D Application Development Using Unity Real Time Platform; Springer: Singapore, 2023; pp. 665–675. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Kai, L.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar] [CrossRef]

- Athanasiadis, I.; Mousouliotis, P.; Petrou, L. A Framework of Transfer Learning in Object Detection for Embedded Systems. arXiv 2018, arXiv:1811.04863. [Google Scholar]

- Zhang, Z.; He, T.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of Freebies for Training Object Detection Neural Networks. arXiv 2019, arXiv:1902.04103. [Google Scholar]

- Azhar, M.I.H.; Zaman, F.H.K.; Tahir, N.M.; Hashim, H. People Tracking System Using DeepSORT. In Proceedings of the 2020 10th IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 21–22 August 2020; pp. 137–141. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).