1. Introduction

Micro Air Vehicles (MAVs) are expected to enable many new applications, especially when they can navigate autonomously. They can reach places where larger unmanned air vehicles cannot go, and they pose significantly lower risks when operating close to people. Indoor navigation inside buildings brings out both advantages. However, the indoor autonomous operation of size-constrained MAVs is a major challenge in the field of robotics. While operating in complex environments, these MAVs need to rely on severely limited sensing and processing resources.

With larger vehicles, several studies have been performed that obtained impressive results by using small laser scanners [1,2], RGB-D devices [3,4] and stereo vision systems [5,6]. In these studies, vehicles typically had a weight of at least 500 g–1000 g.

Studies using smaller vehicles are typically restricted to either off-board processing or adaptation to a specific environment [7,8]. No study has shown autonomous exploration in unprepared rooms with flying robots under 50 g.

A specific group of MAVs is formed by Flapping Wing MAVs (FWMAVs). These vehicles have several advantageous characteristics: a fast transition between multiple flight regimes, high maneuverability, higher lift coefficients, high robustness to impacts and better performance at low Reynolds numbers. These advantages especially show up in smaller vehicles, and many FWMAVs found in the literature have a weight of less than 50 g. One example is the ‘Nano Hummingbird’, which weighs 19 g including a camera [9]. Another example is the exceptionally small 0.7 g ‘RoboBee’, which uses an external power source [10].

Despite the low weight of these vehicles, several studies have focused on autonomous control of FWMAVs and have obtained different levels of autonomy. Active attitude control was shown onboard the Nano Hummingbird [9]. The RoboBee was stabilized using an external motion tracking system [10]. Various studies performed experiments with visual information used in control loops, and different categories of tests can be identified. Some studies have used off-board sensing and processing [11,12,13,14,15,16], while others have used onboard sensing combined with off-board processing [11,17,18,19]. Finally, both sensing and processing have been performed onboard a 13-g flapping wing MAV [20]. Height control has also been studied in different ways: using external cameras [11,12,13,14], using onboard sensing and off-board processing [11,17] and using onboard sensing and processing [8].

Obstacle avoidance is currently the highest level of autonomy that has been studied for FWMAVs. Obstacle avoidance using an onboard camera and off-board optic flow processing [8,18] resulted in autonomous flights of up to 30 s. Using two cameras and off-board stereo processing, autonomous flights of up to 6 min were realized [19,21]. Previously, the DelFly Explorer was demonstrated with an onboard stereo vision and processing system. The onboard processing and algorithmic improvements led to autonomous flights of up to 9 min [22], a limit determined by the battery capacity.

Obstacle avoidance forms an important basis for autonomous navigation. However, pure obstacle avoidance methods, where continuation of flight is the only goal, result in rather random flight trajectories [22]. In contrast, other studies have demonstrated MAVs that are able to perform other aspects of autonomous navigation, such as path planning and waypoint navigation. SLAM (Simultaneous Localization and Mapping)-based systems are very successful in performing these tasks. Unfortunately, SLAM is still rather demanding in terms of processing power and memory and is most often found in systems with a weight of over 500 g [5,23]. Moreover, in many cases, there is a human operator in the loop who decides which places need to be visited. Mapping and map storage are not essential to perform navigation. Fast indoor maneuvering was demonstrated on a quadrotor MAV relying only on stereo vision cameras [5]. In that study, the autonomous navigation capability is restricted, since an operator needs to define waypoints and the system does not guarantee obstacle-free trajectories.

To enable fully autonomous flight of small FWMAVs, much lighter systems are needed that do not rely on high-accuracy measurements for navigation. The work in [24], for instance, developed and demonstrated a 46-g quadrotor that uses a small sensor board of 7.7 g for ego-motion estimation based on optic flow and inertial sensors. Their goal was to achieve obstacle avoidance using optic flow.

Autonomous missions in indoor environments require a combination of capabilities like obstacle avoidance, corridor following, door or window passage traversal, flying over stairs, etc. Many studies focus on only one or a few of these aspects. For instance, flying through a corridor based on optic flow measurements was demonstrated on a quadrotor MAV [25]. Several specific problems like corridor following were also demonstrated successfully in ground-based robots [26,27].

In this article, we demonstrate stereo vision-based navigation tasks on a 20-g FWMAV called “DelFly Explorer”. The system uses basic control routines to simultaneously perform the tasks of obstacle avoidance, door traversing and corridor following. The strength of this combination of sensors and processing is that the aforementioned tasks all rely on the same hardware and low-level routines. No adaptation to the environment is required, and the robot does not even need to adjust its behavior to a specific task. In Section 2, the design of the DelFly Explorer is discussed. The stereo vision method and control routines are discussed in Section 3. Several experiments in different environments are described and evaluated in Section 4. Conclusions follow in Section 5.

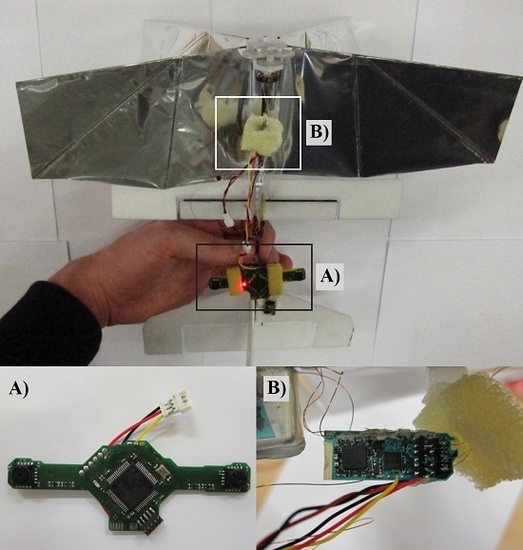

2. DelFly Explorer

The DelFly (http://www.delfly.nl) is an FWMAV with two pairs of wings on top of each other. The DelFly Explorer, as shown in Figure 1a, is the newest version in the DelFly project [22]. It is based on the DelFly II, which was first demonstrated in 2007 [28]. The DelFly Explorer has a wingspan of 28 cm and a weight of 20 g.

There are three main differences between the DelFly Explorer and the older DelFly II. First, the DelFly Explorer is equipped with a

-g autopilot board, which includes an ATmega328P-MLF28 micro-controller (Figure 1d). The board also features an MPU9050 IMU-sensor with three-axis gyroscopes, accelerometers and magnetometers, as well as a BMP180 absolute pressure sensor that serves as the altitude sensor. The motor controller for the brushless motor is also integrated into the autopilot board to save weight. Finally, the autopilot features a two-way radio control and telemetry link using a CYRF69103 chip that allows transmission of onboard telemetry data while receiving radio control commands. The radio control is used during the development phase to switch back to manual flight.

The second difference between earlier DelFly versions [28] and the DelFly Explorer is the camera system. Previous designs were equipped with analog cameras and analog video transmitters, while image processing was done on the ground. The video stream could be captured by a ground station and used for vision-based control. The DelFly Explorer is equipped with two digital cameras that are used as a stereo vision system with a baseline of 6 cm. The camera images are not transmitted, but processed onboard using a 168-MHz STM32F405 micro-controller that performs the stereo vision processing (Figure 1c). The stereo vision board uses TCM8230 camera sensors.

Finally, some modifications on the airframe have been made for better flight performance. The weight increase to 20 g (compared to 17 g in previous versions) required some increase in thrust from the flapping wings. This was achieved by reducing the number of coil windings of the electric motor, which in turn increases the current and power for a given battery voltage. This comes at the cost of slightly reduced efficiency. Nevertheless, in this configuration, the DelFly Explorer can fly in slow forward flight for around 10 min. For better directional control, the DelFly Explorer is equipped with aileron surfaces.

Figure 1e shows the definition of the body axes of the DelFly. The aileron surfaces provide a moment around the X-axis, which is referred to as rolling. However, due to the high pitch angle attitude of the DelFly Explorer in slow forward flight, a moment around the body X-axis mainly controls heading (see Figure 1b). Accurate control of this axis is therefore important. This is in contrast to previous DelFly designs where a vertical tail rudder was used for heading control. This rudder provides a yaw moment around the Z-axis, which gives indirect control of the heading through the vehicle bank dynamics. Both aileron surfaces in DelFly Explorer are actuated by a single servo. The tail of DelFly Explorer contains an elevator surface, which is also actuated by a servo. The elevator deflection regulates the pitch angle, and thereby the forward flight speed. All versions of DelFly have a stable slow forward flight with a center of gravity relatively far back. In DelFly Explorer, this is obtained by placing the relatively heavy stereo camera in between the main wing and the tail section. In this condition, DelFly is not stable at fast forward flight, but very stable in slow indoor flight. The maximum elevator deflection is kept small and is insufficient to transition to fast forward flight.

3. Vision and Control Algorithms

When using stereo vision as the basis for control, stereo matching is the most demanding part of the whole processing routine. Obtaining real-time stereo vision-based navigation onboard a very limited processor like the one on DelFly Explorer requires a very efficient algorithm. Given the 32-bit processor's clock speed of only 168 MHz and, even worse, the availability of only 196 kB of RAM, images are processed at a resolution of only 128 × 96 pixels.

This section discusses the implemented stereo vision algorithm and describes how the resulting disparity maps are used for control.

3.1. Stereo Vision Algorithm

Stereo vision algorithms are extensively studied in the field of image processing. These algorithms can be divided into two types. They provide either sparse or dense disparity maps. Sparse methods can be more efficient, since only specific image features are selected and further processed. However, for the purpose of obstacle detection and avoidance, these algorithms have the disadvantage that the relation/connection between the feature points is missing. In other words, they provide more restricted information.

In this study, a slightly modified implementation of the method proposed by [29] is used. This local stereo matching algorithm focuses on the trade-off between accuracy and computational complexity. According to the Middlebury stereo benchmark [30], it is one of the most effective local stereo algorithms.

To ensure real-time performance of at least 10 Hz, several modifications are applied. First, the algorithm is implemented such that it operates on individual image lines, which limits the amount of required memory. Furthermore, the stereo vision system only has access to grayscale values by design: to merge both camera feeds into a single DCMI (Digital Camera Media Interface) input of the memory-limited micro-controller, an Altera MAX II CPLD (Complex Programmable Logic Device) replaces the color information of one image with the intensity of the other. The resulting image stream contains alternating 8-bit grayscale values of both cameras.
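The interleaved stream described above can be separated again with simple strided slicing. The following is a minimal sketch rather than the onboard implementation: the function name is hypothetical, and the byte order (left camera first) is an assumption, since the text does not state which camera occupies which position.

```python
def split_interleaved_line(stream, width=128):
    """Split one interleaved image line into (left, right) gray-scale lines.

    Assumption: even byte positions hold the left camera and odd positions
    the right camera. The source text does not specify this order.
    """
    if len(stream) != 2 * width:
        raise ValueError("expected 2 * width bytes per interleaved line")
    # Strided slices pick every second byte, starting at offset 0 or 1.
    return stream[0::2], stream[1::2]
```

Because each line can be split on its own, only two lines of 128 bytes need to be held for matching at any time, which fits the line-based design.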

The algorithm itself is also changed. The first difference is in the cost calculation. The work in [29] based it on the Sum of Absolute Differences (SAD) and the CENSUS transform, which is a non-parametric local transform used in visual correspondence. Instead, we use the SAD calculation only, which is further reduced to a one-dimensional window (

in our implementation). The permeability weight calculation is also slightly changed: in our implementation, it is based only on the pixel intensity. The exponent calculation is implemented using a look-up table for efficiency. As a smoothing factor, we use

. Finally, the costs are aggregated based on horizontal support only, to keep the algorithm line-based. After aggregation, the disparity value corresponding to the minimum pixel cost is selected. For more details about the method, the reader is referred to [29].
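To make the sequence of steps concrete, the sketch below matches a single pair of image lines using a one-dimensional SAD cost, a look-up table for the exponential permeability weights, horizontal aggregation and minimum-cost selection. It is an illustration under stated assumptions, not the onboard code: the names `permeability_lut` and `match_line`, the window half-width, the smoothing factor `sigma` and the two-pass recursive form of the aggregation are all hypothetical choices made here.

```python
import math


def permeability_lut(sigma):
    """Precompute exp(-d / sigma) for every 8-bit intensity difference d."""
    return [math.exp(-d / sigma) for d in range(256)]


def match_line(left, right, max_disp, half_window=2, sigma=10.0):
    """Return one disparity per pixel for a pair of gray-scale image lines.

    half_window and sigma are hypothetical values, not taken from the source.
    """
    n = len(left)
    lut = permeability_lut(sigma)
    # perm[x] links pixel x-1 to pixel x, using left-image intensity only.
    perm = [0.0] + [lut[abs(left[x] - left[x - 1])] for x in range(1, n)]
    invalid_cost = 255.0 * (2 * half_window + 1)
    best_cost = [math.inf] * n
    best_disp = [0] * n
    for d in range(max_disp + 1):
        # One-dimensional SAD cost for candidate disparity d.
        raw = []
        for x in range(n):
            if x - d < 0:
                raw.append(invalid_cost)  # match would fall outside the line
                continue
            total = 0
            for k in range(-half_window, half_window + 1):
                xl = min(max(x + k, 0), n - 1)
                xr = min(max(x + k - d, 0), n - 1)
                total += abs(left[xl] - right[xr])
            raw.append(float(total))
        # Horizontal aggregation: one left-to-right and one right-to-left pass.
        lr = raw[:]
        for x in range(1, n):
            lr[x] += perm[x] * lr[x - 1]
        rl = raw[:]
        for x in range(n - 2, -1, -1):
            rl[x] += perm[x + 1] * rl[x + 1]
        # Keep the disparity with the minimum aggregated cost per pixel.
        for x in range(n):
            cost = lr[x] + rl[x] - raw[x]
            if cost < best_cost[x]:
                best_cost[x] = cost
                best_disp[x] = d
    return best_disp
```

Since every quantity is computed from one line pair only, memory use stays proportional to the line width, which is also why the results show streaking between lines.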

Figure 2 shows the result of our implementation on the Tsukuba image from the well-known Middlebury stereo benchmark. Note that there is a significant streaking effect because all calculations in our implementation are line-based.

Figure 3 shows the result of this algorithm on real images of the corridor used in further testing. These images are taken and processed by the DelFly Explorer stereo vision system. From these figures, it can be seen that our simplified version of [29] is still very effective at retrieving the overall depth structure.

3.2. Disparity Map Updating

The disparity maps from the stereo vision algorithm are used directly for control purposes. Tests with the algorithm showed that the matching quality is very poor when the cameras are close to a wall with poor texture. This is illustrated in Figure 4, which shows an image of a corridor and the corresponding disparity map. In this case, the wall is particularly difficult to process because it is curved. A solution to this problem is to use the matching certainty as a measure to detect image regions with very poor stereo correspondence. The matching certainty is defined as:

is the matching cost for a certain disparity. A pixel is considered reliable only if its certainty c is above a threshold. The disparity image is updated according to this certainty measure. For each image line, the algorithm checks which pixels, starting from both the left and right border, are regarded as unreliable. As soon as a reliable pixel is found, all disparities up to that point in the disparity image line are discarded.
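The border scan described above can be sketched as follows. This is an illustrative version under assumptions: the per-pixel certainty values are taken as given inputs, and the function name and the `invalid` marker used for discarded disparities are hypothetical.

```python
def discard_unreliable_borders(disparity_line, certainty_line, threshold,
                               invalid=-1):
    """Discard disparities from each border up to the first reliable pixel.

    The `invalid` marker is a hypothetical way to flag discarded pixels.
    """
    n = len(disparity_line)
    out = list(disparity_line)
    reliable = [c > threshold for c in certainty_line]
    # Scan inward from the left border until a reliable pixel is found.
    left = 0
    while left < n and not reliable[left]:
        out[left] = invalid
        left += 1
    # Scan inward from the right border, stopping where the left scan ended.
    right = n - 1
    while right >= left and not reliable[right]:
        out[right] = invalid
        right -= 1
    return out
```

Note that unreliable pixels lying between two reliable ones are kept in this sketch, because the scan stops at the first reliable pixel found from each side.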

Figure 5 shows the reliability of all pixels (left) from Figure 4 and which pixels are regarded as uncertain based on this threshold. A second method is used to cope with wall regions that are considered reliable but are actually wrong. Since the matching performance of stereo vision algorithms relies on image texture, a simplified form of linear edge detection is used. Edge detection is based on the absolute difference in intensity between two neighboring pixels in the x direction. Only the difference in the horizontal direction is taken into account, since it is already calculated for the permeability weight calculation in the stereo vision algorithm. Again, a threshold is used to decide whether a pixel difference corresponds to a line. The disparity map is updated in the same way as for the certainty measure.
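The horizontal edge measure described in words above can be written compactly. The symbols below are assumptions chosen here for illustration: $I(x, y)$ for the pixel intensity, $\Delta I_x$ for the horizontal difference and $\tau_e$ for the edge threshold. The choice of the right-hand neighbor is also an assumption.

```latex
\Delta I_x(x, y) = \left| I(x + 1, y) - I(x, y) \right|,
\qquad
\text{edge}(x, y) =
\begin{cases}
1 & \text{if } \Delta I_x(x, y) > \tau_e, \\
0 & \text{otherwise.}
\end{cases}
```

Under this reading, the same border scan used for the certainty measure applies, with edge pixels playing the role of reliable pixels.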

3.3. Control Logic

The updated disparity map is used to define the control input. The focus of this work is on heading control of the DelFly Explorer. As described in Section 2, the heading is controlled by the aileron surfaces. In forward flight, these control surfaces are trimmed such that the DelFly flies in a straight line. Feedback from the gyroscopes stabilizes the heading angle of the DelFly to counteract disturbances.

where p is the turn rate about the body X-axis, and the remaining terms are the desired turn rate and the aileron trim command. The navigation control is then selected based on the simple rule that the DelFly should always fly in the direction where the disparity values are the smallest. A saturated P controller is used for this purpose:

This guides the DelFly in the visible direction where obstacles are furthest away. The advantage of this choice is that it works both with doors and corridors. Furthermore, when approaching an obstacle, the region next to the obstacle will have a lower disparity and guides the DelFly away from the obstacle. While this guidance rule is sufficiently simple to fit onboard smaller processors and is particularly effective to traverse corridors, it does have some failure cases. For instance, when the direction of minimal disparity is very close to the edge of an obstacle, it can fail to add sufficient clearance to the obstacle.

The aiming point is the point in the disparity map with the lowest disparity values. It is found by calculating the average position of all pixels that have a certain disparity value. Again, only the horizontal position in the image is taken into account. It is computed by counting how many times each disparity value occurs and what the average horizontal coordinate of this group of pixels is. Then, the lowest disparity value is found that occurs more often than a selected threshold. The average horizontal position of this group of pixels is selected as the aiming point. The control input is then defined as the difference between the horizontal coordinate of the aiming point and the center coordinate of the image, divided by the horizontal field of view of the camera.
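The aiming-point search and the resulting control input can be sketched as below. This is a minimal illustration under assumptions: all function and parameter names are hypothetical, negative disparities are treated as discarded pixels, and the gain and saturation limit of the P controller are placeholders, since their values are not given in the text.

```python
def aiming_point(disparity_map, num_disparities, min_count):
    """Return the mean horizontal position of the lowest frequent disparity.

    Returns None when no disparity value occurs more than min_count times.
    """
    counts = [0] * num_disparities
    x_sums = [0] * num_disparities
    for row in disparity_map:
        for x, d in enumerate(row):
            if 0 <= d < num_disparities:  # skip discarded pixels (assumed < 0)
                counts[d] += 1
                x_sums[d] += x
    # The lowest disparity corresponds to the most distant visible region.
    for d in range(num_disparities):
        if counts[d] > min_count:
            return x_sums[d] / counts[d]
    return None


def control_input(x_aim, x_center, fov):
    """Offset of the aiming point from the image center, divided by the FOV."""
    return (x_aim - x_center) / fov


def saturated_p(u, gain, limit):
    """Proportional command clipped to the interval [-limit, +limit]."""
    return max(-limit, min(limit, gain * u))
```

For example, with an aiming point right of the image center, `control_input` is positive, and `saturated_p` turns it into a bounded command. Requiring a minimum pixel count keeps a few isolated low-disparity outliers from steering the vehicle.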

The stereo vision camera and the autopilot board are separate systems, as shown in Figure 1. The whole image processing routine, up to the calculation of the control input, is performed on the micro-controller of the stereo vision system. This signal is sent to the autopilot, which runs the gyroscope feedback from Equation (3) and drives the actuators.

5. Conclusions

In this paper, we present navigation experiments on an FWMAV that is equipped with an onboard stereo vision system. Based on real-time computed disparity maps, the best navigation direction is defined by searching for the furthest point in the disparity map. By following this rule, the MAV is guided along obstacles, through doors and through corridors.

Experiments on different tasks and in different environments show that the method is effective at guiding the MAV in many cases. However, several cases were encountered where the current method produced the wrong control inputs, leading to unexpected behavior and crashes. In some cases, the aiming point was selected too close to the borders of the door. In other cases, the resolution of the four-gram stereo vision system was too low to properly detect the texture. Finally, some hardware issues and wind disturbances played a role. Future work aims at solving these problems. Furthermore, the method will be expanded by integrating speed control based on obstacle proximity and by integrating height control.