Optimizable Image Segmentation Method with Superpixels and Feature Migration for Aerospace Structures

Abstract

1. Introduction

2. Materials and Methods

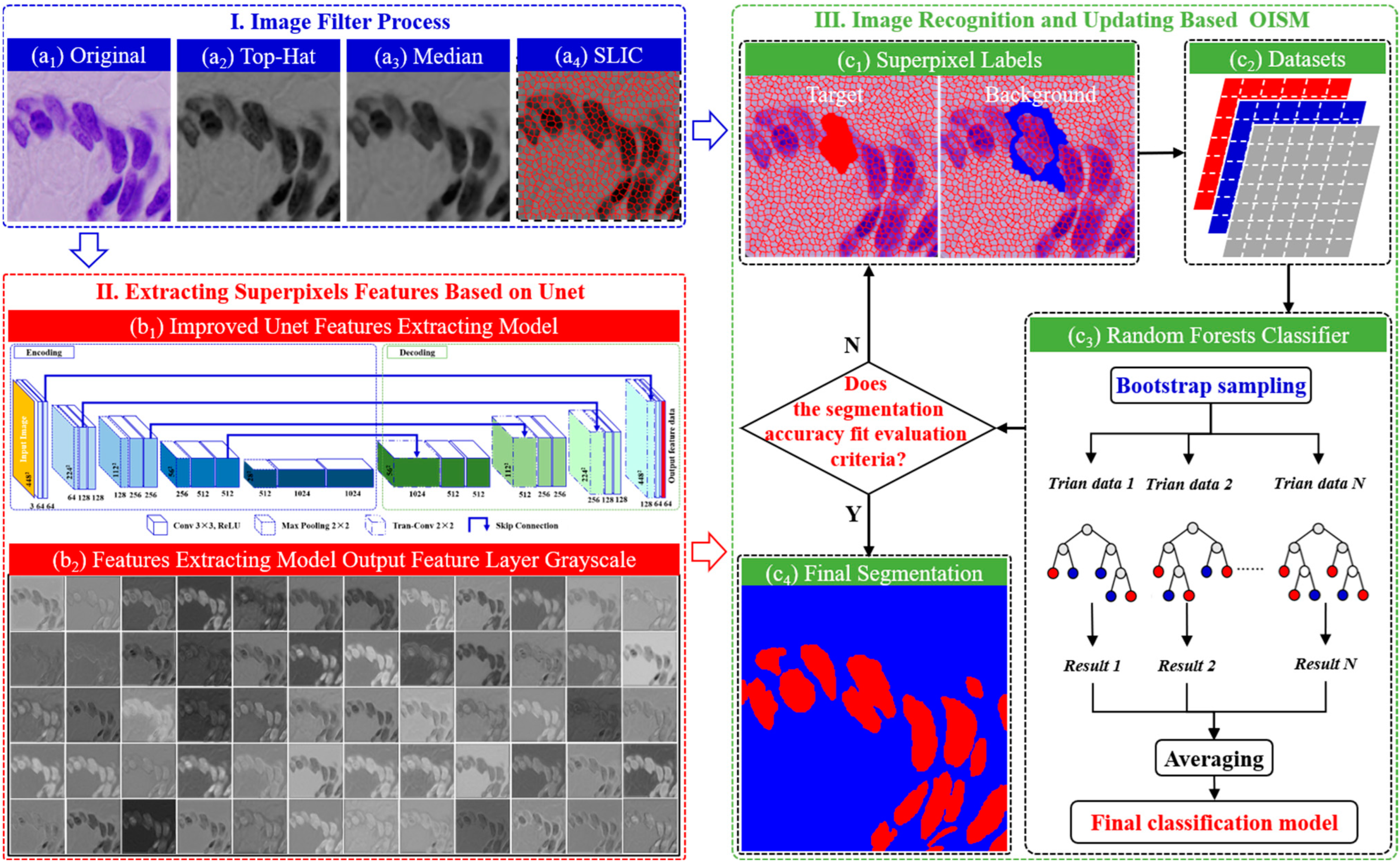

2.1. Image Filter Processing and Superpixels Partition

2.2. Superpixels Feature Extraction Algorithm with Improved Unet and SLIC

2.3. Optimizable Image Segmentation Method

- (1) Label the superpixels of interest with their categories (for example, cell superpixels and background superpixels) according to human prior knowledge;

- (2) Divide the superpixel feature data and label data into a training dataset (labeled superpixels) and a test dataset (unlabeled superpixels);

- (3) Train the RF classification model on the training dataset, and use the trained model to predict the categories of the test dataset;

- (4) If the evaluation indicators of the image segmentation are satisfactory, output the final segmentation map; otherwise, return to Step (1) and add new superpixel labels.
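The four steps can be sketched as a small labeling loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the feature matrix is synthetic rather than extracted with Unet and SLIC, the `truth` array stands in for the human annotator, plain accuracy over superpixels stands in for the evaluation indicators, and the name `run_oism_loop` and its parameters (`n_initial`, `batch`, `target`, `max_rounds`) are hypothetical. The classifier is scikit-learn's `RandomForestClassifier`.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

UNLABELED = -1  # marker for superpixels without a human label (assumption)


def run_oism_loop(features, truth, n_initial=4, batch=4,
                  target=0.95, max_rounds=10, seed=0):
    """Iteratively label, train, predict and evaluate, as in Steps (1)-(4).

    features: (n_superpixels, n_features) array of superpixel features.
    truth:    (n_superpixels,) array that plays the role of the annotator.
    """
    rng = np.random.default_rng(seed)
    labels = np.full(len(features), UNLABELED)

    # Step (1): label a few superpixels of every category.
    for category in np.unique(truth):
        candidates = np.flatnonzero(truth == category)
        chosen = rng.choice(candidates, size=n_initial, replace=False)
        labels[chosen] = category

    for _ in range(max_rounds):
        # Step (2): labeled superpixels train the model, the rest are the test set.
        train = labels != UNLABELED

        # Step (3): train the random forest and predict the unlabeled superpixels.
        model = RandomForestClassifier(n_estimators=100, random_state=seed)
        model.fit(features[train], labels[train])
        pred = labels.copy()
        if (~train).any():
            pred[~train] = model.predict(features[~train])

        # Step (4): evaluate; stop if good enough, otherwise add new labels.
        score = float(np.mean(pred == truth))
        wrong = np.flatnonzero(pred != truth)
        if score >= target or len(wrong) == 0:
            break
        new_ids = rng.choice(wrong, size=min(batch, len(wrong)), replace=False)
        labels[new_ids] = truth[new_ids]

    return pred, score, int((labels != UNLABELED).sum())


# Synthetic two-category example (placeholder data, not from the paper).
data_rng = np.random.default_rng(0)
X = np.vstack([data_rng.normal(0.0, 1.0, (100, 5)),
               data_rng.normal(4.0, 1.0, (100, 5))])
y = np.repeat([0, 1], 100)
pred, score, n_labeled = run_oism_loop(X, y)
print(f"accuracy={score:.3f} with {n_labeled} labeled superpixels")
```

In this sketch the newly labeled superpixels are drawn from the misclassified ones, which is only one possible way to return to Step (1); in practice the annotator chooses where to add labels.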

3. Results and Discussion

3.1. Method Validation

3.2. Engineering Application in Turbine Blade Image Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Ground Truth | Prediction: Positive | Prediction: Negative |
|---|---|---|
| Positive | True Positive | False Negative |
| Negative | False Positive | True Negative |
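PA, MPA, MIoU and FWIoU in the result tables are usually derived from a confusion matrix of this kind. The sketch below assumes the standard definitions (pixel accuracy, mean per-class pixel accuracy, mean intersection over union, and frequency-weighted intersection over union), which this section does not spell out. The function name `segmentation_metrics` and the example counts are illustrative only and are not the paper's data.

```python
def segmentation_metrics(cm):
    """Compute PA, MPA, MIoU and FWIoU from a square confusion matrix.

    cm[i][j] is the number of pixels whose ground-truth class is i and
    whose predicted class is j (rows: ground truth, columns: prediction).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    truth_counts = [sum(cm[i]) for i in range(n)]                        # row sums
    pred_counts = [sum(cm[i][j] for i in range(n)) for j in range(n)]    # column sums
    correct = [cm[i][i] for i in range(n)]

    # PA: fraction of all pixels that are classified correctly.
    pa = sum(correct) / total

    # MPA: per-class accuracy averaged over classes present in the ground truth.
    class_acc = [correct[i] / truth_counts[i] for i in range(n) if truth_counts[i] > 0]
    mpa = sum(class_acc) / len(class_acc)

    # IoU per class: intersection / (ground truth + prediction - intersection).
    ious, weights = [], []
    for i in range(n):
        union = truth_counts[i] + pred_counts[i] - correct[i]
        if union > 0:
            ious.append(correct[i] / union)
            weights.append(truth_counts[i] / total)

    miou = sum(ious) / len(ious)
    # FWIoU: IoU weighted by how often each class occurs in the ground truth.
    fwiou = sum(w * iou for w, iou in zip(weights, ious))

    return {"PA": pa, "MPA": mpa, "MIoU": miou, "FWIoU": fwiou}


# Illustrative counts: rows = ground truth (positive, negative),
# columns = prediction (positive, negative).
example_cm = [[50, 10],
              [5, 35]]
metrics = segmentation_metrics(example_cm)
for name, value in metrics.items():
    print(f"{name}: {value:.6f}")
```

Because FWIoU weights each class by its ground-truth frequency, it can sit well above MIoU when a dominant class is segmented well and a rare one poorly.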

| No. | Method | PA | MPA | MIoU | FWIoU |
|---|---|---|---|---|---|
| a | Unet | 0.834259 | 0.851848 | 0.679433 | 0.733431 |
| a | OISM (K = 0.8%) | 0.887360 | 0.902457 | 0.763478 | 0.808452 |
| a | OISM (K = 5.0%) | 0.957993 | 0.971772 | 0.899906 | 0.922028 |
| b | Unet | 0.838364 | 0.871663 | 0.673284 | 0.746117 |
| b | OISM (K = 0.8%) | 0.913635 | 0.943578 | 0.799415 | 0.852301 |
| b | OISM (K = 5.0%) | 0.951767 | 0.969375 | 0.876222 | 0.912522 |
| c | Unet | 0.762253 | 0.762783 | 0.576126 | 0.640748 |
| c | OISM (K = 0.8%) | 0.686020 | 0.776503 | 0.510159 | 0.548241 |
| c | OISM (K = 5.0%) | 0.941086 | 0.959623 | 0.865410 | 0.893312 |
| d | Unet | 0.834915 | 0.895797 | 0.617159 | 0.764119 |
| d | OISM (K = 0.8%) | 0.946335 | 0.964344 | 0.816673 | 0.908712 |
| d | OISM (K = 5.0%) | 0.979523 | 0.988267 | 0.917164 | 0.961958 |
| e | Unet | 0.806793 | 0.811859 | 0.632902 | 0.697995 |
| e | OISM (K = 0.8%) | 0.937119 | 0.958537 | 0.855316 | 0.887218 |
| e | OISM (K = 5.0%) | 0.957596 | 0.972039 | 0.897415 | 0.921520 |

| Type | K Value (%) | PA | MPA | MIoU | FWIoU |
|---|---|---|---|---|---|
| Crack | 0.8 | 0.874330 | 0.865002 | 0.762658 | 0.778716 |
| Crack | 1.6 | 0.875681 | 0.856561 | 0.761054 | 0.779182 |
| Crack | 5.0 | 0.947255 | 0.954521 | 0.894341 | 0.901013 |
| Void | 0.8 | 0.942570 | 0.945785 | 0.862369 | 0.895216 |
| Void | 1.6 | 0.932300 | 0.951606 | 0.844659 | 0.879264 |
| Void | 5.0 | 0.981622 | 0.985598 | 0.952157 | 0.964483 |
| Microstructure | 0.8 | 0.944552 | 0.899092 | 0.646619 | 0.922001 |
| Microstructure | 1.6 | 0.979505 | 0.893149 | 0.778808 | 0.964833 |
| Microstructure | 5.0 | 0.992388 | 0.971998 | 0.903307 | 0.985876 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fei, C.; Wen, J.; Han, L.; Huang, B.; Yan, C. Optimizable Image Segmentation Method with Superpixels and Feature Migration for Aerospace Structures. Aerospace 2022, 9, 465. https://doi.org/10.3390/aerospace9080465
