Abstract

An increasing emphasis on health professional competency in recent times has been matched by an increased prevalence of competency-based education models. Assessments can generate information on competence, and authentic, practice-based assessment methods are critical. Assessment reform has emerged as an academic response to the demands of the pharmacy profession and the need to equip graduates with the necessary knowledge, skills and attributes to face the challenges of the modern workforce. The objective of this review was to identify and appraise the range of assessment methods used in entry-level pharmacy education and examine current trends in health professional assessment. The initial search located 2854 articles. After screening, 36 sources were included in the review: 13 primary research studies, 12 non-experimental pharmacy research papers, and 11 standards and guidelines from the grey literature. Primary research studies were critically appraised using the Medical Education Research Study Quality Instrument (MERSQI). This review identified three areas in pharmacy practice assessment which provide opportunities for expansion and improvement of assessment approaches: (1) integrated approaches to performance assessment; (2) simulation-based assessment approaches; and (3) collection of validity evidence to support assessment decisions. Competency-based assessment shows great potential for expanded use in pharmacy, but further research and development are needed to ensure its appropriate and effective use.

1. Introduction

Assessment is a multi-faceted function that has a powerful influence on learning [1]. Assessment is widespread in health professional education, motivated by accountability to external sources such as accrediting authorities or institutions that wish to improve services and programs [2]. Huba and Freed define assessment as “the process of gathering and discussing information from multiple and diverse sources in order to develop a deep understanding of what students know, understand, and can do with their knowledge as a result of their educational experiences. This process is realised when assessment results are used to improve subsequent learning” [3]. This definition is particularly useful because it highlights important characteristics of quality assessment processes: (1) it is a systematic and continuous process; (2) it emphasises student learning, focusing on what students can do; and (3) it focuses on improving future performance, both of individual students and of educational programs [2,3]. Several documented reasons justify the increasing emphasis on the assessment of health professionals, as outlined in Table 1.

Table 1.

Reasons for change in focus on practice-based assessments in health professional education.

Traditionally, assessments have been used to make summative evaluation decisions, serving as a concrete and clear approach for educators to assure external stakeholders of the competence of students; this is often referred to as Assessment of Learning (AOL). While feedback has always been part of the assessment process, in the last decade educators have recognised the positive impact that formative assessments can have on student learning [11]. Indeed, there is now broad agreement among professional associations, accrediting agencies and educational institutions that student learning should be a primary goal of assessment [2,11]. This is evidenced by the increasing emphasis on Assessment for Learning (AFL), an approach that uses formative or informal assessment specifically to improve students’ learning [11,12]. Current literature argues that assessment should not be dichotomised into approaches that are either formative or summative [11]. Examples of assessment frameworks that combine both purposes include the Objective Structured Clinical Examination (OSCE), which may include an overall global assessment while also assessing different skill sets in a deconstructed manner that can be leveraged to give students feedback on their strengths and weaknesses [13,14].

A large body of evidence demonstrates the need for multiple assessments throughout a student’s learning trajectory, rather than a single high-stakes capstone assessment, to ensure students build towards a minimum acceptable level of knowledge or performance [12,15,16]. This has been reinforced through the evolution of the competency-based medical education literature [17]. While there is little argument about the importance of using competencies to frame educational outcomes, there is less certainty about how to translate competency statements and frameworks into relevant measures of professional practice [18]. Similarly, approaches for designing and developing trackable paths for students to ultimately reach independent and/or advanced practice are not widely established. Entrustable Professional Activities (EPAs) offer promise in this area and are currently a focus of educational research in pharmacy [19,20]. Furthermore, capstone courses with multiple comprehensive and integrated student assessments are emerging in degree programs and have been shown to provide faculty members with feedback, through robust assessments, on the curriculum outcomes attained [21].

In an attempt to maintain and improve the quality and safety of patient care, a framework for assessment of work-ready pharmacy graduates should promote the application of expertise and professional judgement of technical and non-technical skills in areas of practice that have the potential to impact patient safety, such as medicine dispensing [22]. However, despite the widespread interest in competency-based education programs and practice-based assessments, there is a scarcity of literature reporting on valid and reliable assessment instruments in pharmacy education. Here, we attempt to provide an overview of assessments used to evaluate the competence of pharmacists graduating from their degree program and entering the pharmacy profession.

2. Background

The medical education field has been using competency-based assessment approaches for several years [23], and these approaches are now beginning to be adapted and implemented in pharmacy education [24]. The competency-based approach is learner-centred: it enables students who demonstrate adequate knowledge and/or skills in assessments developed within the program of study to progress, while preventing students from graduating without demonstrating the required level of competence [24]. A formal competency-based education (CBE) model removes traditional semester timeframes as the yardstick for determining readiness and enables students to learn and progress at their own pace [24]. However, for practical reasons, attention is currently still focused on students demonstrating competencies within traditional time-limited, structured curricula. Both approaches highlight the increasing need for adequate assessment and help explain why the concept of EPAs is growing in popularity as a way to ensure students possess the required skills, knowledge and attitudes both before and beyond program completion.

Outcome-based education in pharmacy programs is evolving to embrace the competency-based assessment frameworks set forth by national and international governing bodies. These frameworks are increasingly used to describe the skills pharmacists require to effectively meet the health needs of patients. The International Pharmaceutical Federation (FIP) states that assessment and quality assurance are key to guaranteeing student capabilities, a recommendation that would be difficult to achieve without a competency-based approach [25]. For example, the Center for the Advancement of Pharmacy Education (CAPE) provides educational outcomes that focus on the knowledge, skills and attitudes that entry-level pharmacists require. The CAPE learning objectives provide a structured framework for measuring the outcomes of a pharmacy degree program, including those necessary for the safe and appropriate supply of medicines [26,27]. Other examples include the National Competency Standards Framework for Pharmacists in Australia [28] and the Standards for the initial education and training of pharmacists in Great Britain [29]. An international review of the use of competency standards in undergraduate pharmacy education showed that competency standards were reflected in pharmacy program assessments, particularly OSCEs and portfolios, and were also used to design, develop and review pharmacy curricula [9]. While this provides a quality assurance mechanism to enhance the quality and employability of graduates, when designing assessments we must be cognisant of the limitations of translating these competency frameworks into the tasks that make up daily pharmacy practice [18,30].

While competency-based education is evident in the field, the challenges associated with competence assessment have not been fully resolved. Broader cooperation in research and practice is required to ensure validity and authenticity of competence-based education and assessment internationally [2].

3. Review Methodology

3.1. Aim

The following review aims to identify opportunities for future research in assessment of entry-level pharmacists.

3.2. Methods

Medline, International Pharmaceutical Abstracts (IPA), EMBASE, CINAHL, PsycINFO and Scopus were searched for articles in English published between January 2000 and May 2019, to identify studies that reported on the use of assessments in pharmacy education. The literature search was performed iteratively, with broad search terms used initially, primarily focusing on teaching and assessment in health professional education. The following key search terms were used: clinical assessment [assessment OR evaluation OR measurement OR competence OR standard OR outcomes OR entrustment OR workplace OR preceptorship OR placement OR work-integrated learning], assess*, studen*, educ*, health professional student [students OR undergraduates]. Subsequently, additional terms and filters were added to increase the sensitivity and specificity of our search to pharmacy education. These included pharm*, “assessment, pharmacy”, “education, pharmacy” and “practice, pharmacy”.

Thirteen experimental research studies specific to pharmacy assessment approaches were identified; these are outlined in Table 2. Studies were predominantly data-based evaluations of assessment practice, including validity studies, with some comparisons of assessment practices. Quantitative research papers were appraised using the Medical Education Research Study Quality Instrument (MERSQI). This instrument assesses studies according to six domains: (1) design; (2) sampling; (3) type of data; (4) validity evidence for the evaluation instrument; (5) data analysis; and (6) outcome.
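As a rough illustration of how an overall MERSQI score is assembled, the sketch below sums six domain scores for one study and summarises totals across studies. It assumes only what the text states plus the instrument's published structure (each domain contributes at most 3 points, for a maximum of 18); the 0–3 range check is a simplification, since some domains have a minimum above zero, and the function names and example scores are hypothetical, not taken from the included studies.

```python
from statistics import mean
from typing import Dict, List

# The six MERSQI domains named in the text. Each domain contributes at most
# 3 points, so the maximum total score is 18.
DOMAINS = (
    "design",
    "sampling",
    "type_of_data",
    "validity_evidence",
    "data_analysis",
    "outcome",
)
DOMAIN_MAX = 3.0


def mersqi_total(domain_scores: Dict[str, float]) -> float:
    """Sum the six domain scores for one study (hypothetical helper)."""
    missing = set(DOMAINS) - set(domain_scores)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    for domain in DOMAINS:
        score = domain_scores[domain]
        # Simplified check: real domain minima differ, but none exceeds 3.
        if not 0.0 <= score <= DOMAIN_MAX:
            raise ValueError(f"{domain} score {score} outside 0-{DOMAIN_MAX}")
    return sum(domain_scores[d] for d in DOMAINS)


def summarise(totals: List[float]) -> Dict[str, float]:
    """Mean (to one decimal place) and range of totals across studies."""
    return {"mean": round(mean(totals), 1), "min": min(totals), "max": max(totals)}


# Hypothetical appraisal of a single study.
example_study = {
    "design": 1.0,
    "sampling": 1.5,
    "type_of_data": 3.0,
    "validity_evidence": 1.0,
    "data_analysis": 2.0,
    "outcome": 1.5,
}
print(mersqi_total(example_study))        # 10.0
print(summarise([10.0, 18.0, 5.0]))       # {'mean': 11.0, 'min': 5.0, 'max': 18.0}
```

Reporting the mean alongside the range, as the Results section does, matters because a single mean can hide a wide spread in study quality.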

Table 2.

Description of assessments used in pharmacy education.

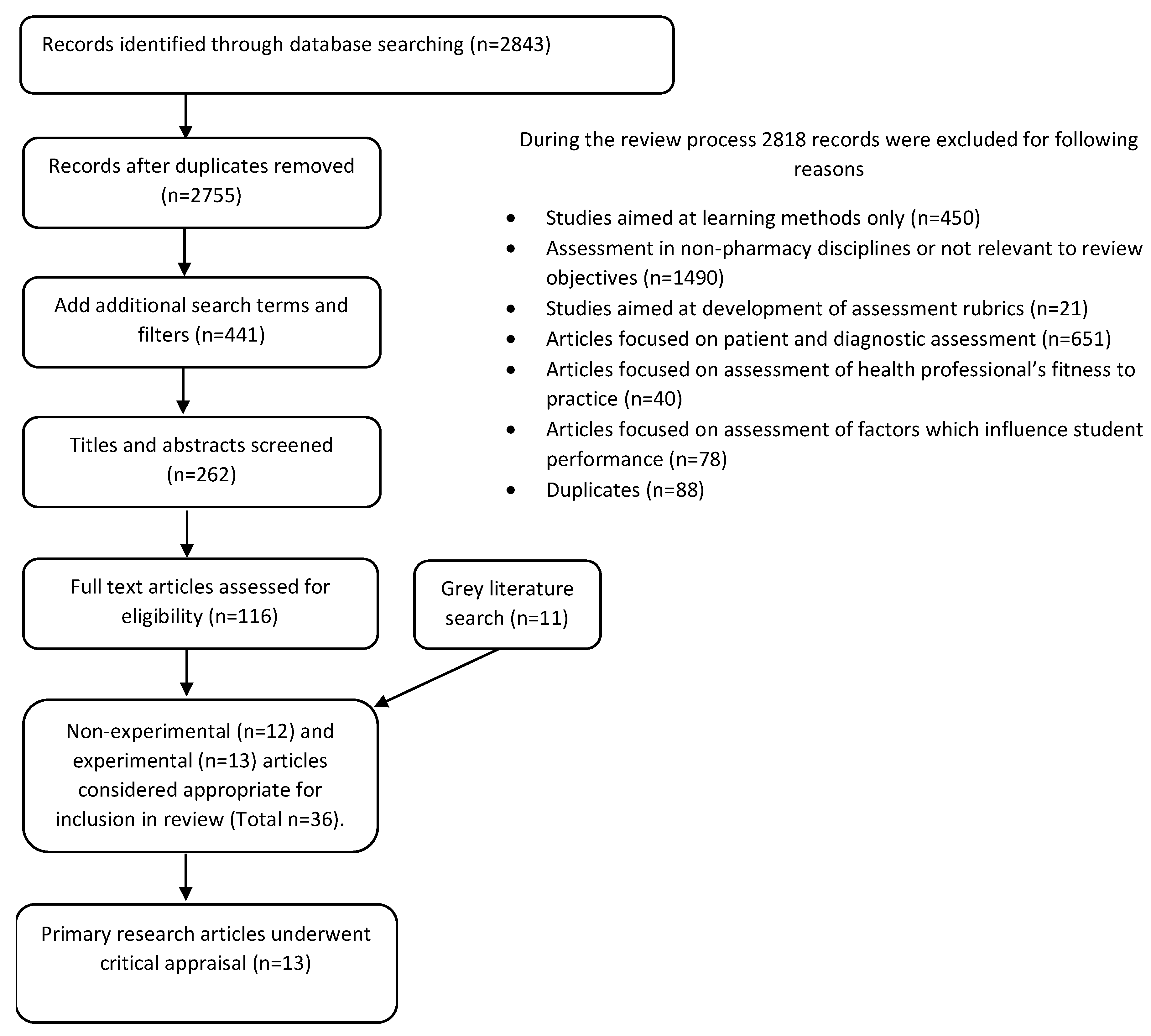

Further, 12 non-experimental pharmacy research papers (commentary, review, editorial, practice applications) were identified and drawn upon in the following discussion. A grey literature search was completed, and 11 relevant documents were identified, including competency frameworks, standards and policies that inform assessment practice. This gave a total of 36 relevant literature sources pertaining to assessment in pharmacy. Miller’s Pyramid has been used as a tool against which we compare the application of various assessment methods [15,31]. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) approach has been used to report the literature search, as shown in Figure 1. For completeness, we have drawn on research completed in other health disciplines to develop a wider appreciation of trends in health professional education. Therefore, in addition to the pharmacy literature, 49 relevant papers from other health professional fields were used to inform the analysis and interpretation of our findings.

Figure 1.

PRISMA Flow Diagram for the literature review search [32].

3.3. Inclusion/Exclusion Criteria

Articles were included if they were published in English and reported on assessment approaches used in pharmacy education. Reasons for exclusion are shown in Figure 1.

3.4. Method of Analysis

A data reduction process was used to extract, simplify and organise data from the articles. Each article was analysed to identify data relevant to the objective of this review, and this information was recorded. The data categories were refined iteratively as the literature was analysed. Thematic analysis was then used to capture ideas and concepts that recurred across the data set to develop themes.

4. Results

The results describe the variety of assessments used in pharmacy education, mapped to the levels of Miller’s Pyramid [15] (Table 2 and Table 3), and the included experimental research studies (n = 13) pertaining to assessment in pharmacy education (Table 4). Across the 13 studies, the mean overall MERSQI score was 10.6 (range 5–15, out of a possible 18).

Table 3.

Mapping of assessment approaches to Miller’s Pyramid.

Table 4.

Description of the included experimental research studies in pharmacy assessment (n = 13) and quality appraisal using the Medical Education Research Study Quality Instrument (MERSQI) [53].

The implications for current and future pharmacy education practice are then synthesised in a discussion of key themes emerging from the results, drawing on the wider literature on health professional assessment. Three main themes relating to competence assessment in pharmacy education were identified in this literature review: (1) integrated approaches to performance assessment; (2) authentic and simulation-based assessment approaches; and (3) collection of validity evidence to support assessment decisions.

The articles retrieved in this review collectively describe the pedagogical challenges educators face in evaluating pharmacists’ competence in the activities of daily practice, such as medicine dispensing, which is a core activity of daily pharmacy practice [63]. Dispensing is the process of preparing and supplying a medicine for use by a patient in a manner that optimises its effectiveness and safety [64,65]. Dispensing practice by pharmacists has traditionally taken a product-orientated approach but has more recently expanded into a more patient-focused clinical role that incorporates communication with patients and other health professionals, medicines reconciliation and review of clinical data [63,65,66]. Indeed, pharmacy practice has evolved to incorporate a variety of professional tasks in designing, implementing and monitoring a therapeutic plan to optimise therapeutic outcomes for the patient, and it is imperative that competence assessment evolves to meet these demands [66].

This review highlights six key challenges in the assessment of health professionals that impact evaluation of pharmacists’ professional skills, grouped under the themes identified from the literature review.

Theme 1.

Authentic, simulation-based assessment approaches.

4.1. Achieving Authenticity in Assessment Tasks and Activities

A shift from a predominantly behavioural pedagogy to a constructivist learning paradigm has placed much greater emphasis on authentic teaching and learning practices [67]. As a result, authentic assessment has become a focal strategy in higher education over the last two decades, aiming to connect performance with real-world work environments. However, there is not always consensus on what constitutes authenticity in assessment [54].

In the past two decades, there has been a significant increase in the use of simulation-based training and assessment as part of the education of competent and safe healthcare professionals [68,69], including pharmacists [70,71,72,73]. Simulation refers to an artificial representation of a real-world process to achieve educational goals through experiential learning [74]. Simulation-based training and assessment activities use simulation methodology to recreate a clinical scenario that replicates key aspects of an actual patient encounter, and provide opportunities for students to learn and practise skills in a safe environment with no risk of harm [75]. The increased use of simulation for teaching and assessment in healthcare education has been driven by a range of factors, including increased student numbers and changes in the structure of both academic programs and healthcare delivery. This has been compounded by a decrease in the availability of patients for educational opportunities and the need to standardise the clinical situations students face for assessment purposes [68,69,76]. Simulation applications in assessment range from high-fidelity technical simulations with manikins to role-plays with simulated patients [72,73]; however, reports of such approaches being used in pharmacy education to evaluate learner readiness for patient care in the clinical environment are limited.

Theme 2.

Collection of validity evidence.

4.2. Reporting on the Validity and Reliability of Assessment Methods

Educators and accrediting bodies for health professionals are required to use various types of assessment and to examine the validity and reliability of the evaluation methods used [77,78,79]. The validity and reliability of assessment methods are pivotal to consistently producing valid judgements based on assessment information [37]. For this reason, the selection and construction of appropriate metrics to evaluate competence, the minimisation of measurement error and validation procedures are currently major focus areas in health professional education [69,76]. What is less clear from our review is what measurement models require to enable assessors to move from inferences obtained simply by observing students’ actions and behaviours to clear evidence of a student’s skills, strategies and proficiencies, based on a valid and reliable framework; this represents an opportunity for future research.

The process of validation has evolved significantly, and our understanding of validity theory, as it relates to assessment in health professional education, has become increasingly complex. This is further complicated by ongoing transformations of health education curricula which support a move towards competency-based, programmatic assessment and authentic assessment methodologies [80]. As we move towards an increased reliance on performance-based assessments for decisions about health professionals’ readiness and ability to perform in the workplace, there is a need to defend the processes used and decisions made, particularly for high-stakes assessment.

4.3. Selection of Appropriate Assessment Metrics

Analytic and holistic scoring are two common approaches to assessment, although these assessment scales are not always mutually exclusive, in that analytic scoring may also influence an overall holistic rating. Checklists are one of the most common methods used for analytic scores [81,82,83,84,85,86,87], constructed with items that measure specific steps or processes across varying domains that include history taking, patient counselling and use of clinical devices (e.g., questions that should be asked, manoeuvres that should be performed). Although checklists provide modestly reproducible scores and have good internal validity, there are some drawbacks to this methodology [76,82]. A checklist-based assessment could alter the behaviour of the candidate, who may employ rote-learned behaviours or alter the sequence or timing for a task, in order to accrue more points. A checklist may also ignore important factors relating to the assessment such as the order and timing of actions, which may be critical where a series of patient management activities needs to be employed [76].

Conversely, holistic scoring can effectively measure complex and multidimensional constructs such as communication and teamwork. The psychometric properties of holistic scoring are often adequate [76], and a key benefit is that it allows the rater to evaluate implicit actions of the candidate, including actions that are unnecessary or that have a negative impact on patient care, which is difficult to achieve with a checklist. Global rating scales are the prevailing methodology for holistic scoring, whereby a multitude of performance factors are considered in an overall rating of health professional competence [86,88,89,90,91,92]. Challenges in applying global rating scales include inter-rater standardisation and appropriate consideration of the individual elements contributing to the global rating. Within pharmacy education, there is an absence of integrated assessment models that enable a student to be assessed holistically, with the entire performance rated.
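The order-blindness of analytic checklist scoring noted earlier in this section can be made concrete with a small sketch. The checklist items below are hypothetical dispensing steps invented for illustration, not items from any published tool, and `order_violations` is a hypothetical helper showing what an order-insensitive checklist score cannot see; it is not a validated scoring method.

```python
from typing import Dict, Sequence

# Hypothetical checklist for a dispensing station, listed in intended order.
# These item names are illustrative only.
CHECKLIST = (
    "verify_prescription",
    "review_medication_history",
    "label_medicine",
    "counsel_patient",
)


def checklist_score(observed: Sequence[str], checklist: Sequence[str] = CHECKLIST) -> float:
    """Analytic score: proportion of checklist items performed, in any order."""
    performed = set(observed)
    return sum(item in performed for item in checklist) / len(checklist)


def order_violations(observed: Sequence[str], checklist: Sequence[str] = CHECKLIST) -> int:
    """Count pairs of performed items done in the reverse of the intended order."""
    first_seen: Dict[str, int] = {}
    for position, action in enumerate(observed):
        first_seen.setdefault(action, position)  # keep the first occurrence only
    done = [item for item in checklist if item in first_seen]
    violations = 0
    for i, earlier in enumerate(done):
        for later in done[i + 1:]:
            if first_seen[earlier] > first_seen[later]:
                violations += 1
    return violations


safe = list(CHECKLIST)
# Labels and counsels before checking the prescription: unsafe, but every item is ticked.
unsafe = ["label_medicine", "counsel_patient", "verify_prescription", "review_medication_history"]
print(checklist_score(safe), checklist_score(unsafe))    # 1.0 1.0
print(order_violations(safe), order_violations(unsafe))  # 0 4
```

Both candidates receive a perfect checklist score even though the second sequence would be unsafe in practice, which is the kind of information a global rating by an expert observer is intended to capture.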

4.4. Accounting for Different Levels of Ability and Practice

Another challenge in competence assessment is avoiding a ‘one-size-fits-all’ approach [93]. Assessment tools are often standardised using competency standard frameworks and fail to take account of differing levels of knowledge, skills and experience in health practitioner development. For example, the same competency assessments may be used in an undergraduate pharmacy program, in postgraduate training, for newly employed pharmacists, for ongoing continuing professional development (CPD) and in advancing practice, without adequate adjustment of the level of expectation or the difficulty of the task. This ‘tick-box’ approach, in which the same competency tool is used for all pharmacists, is pedagogically unsound [93]. It raises questions about the validity and reliability of generic assessment tools that are expected to fulfil the assessment requirements of all health professionals across different clinical contexts.

Although they focus primarily on knowledge assessment, computer-adaptive tests (CATs) have increasingly been used to customise the assessment process using advances in technology [37]. CATs adjust their level of difficulty based on the responses provided, to match the knowledge and ability of the candidate, and are intended to be appropriately challenging for each student [94,95]. This approach may overcome issues associated with ‘one-size-fits-all’ standardised tests. For example, a candidate who answers a question correctly is then given a more difficult question, and scoring considers not only the percentage of correct responses but also the difficulty level of the items completed [37,95].
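The adaptive logic described above can be sketched as a simple up-and-down staircase: move one difficulty level up after a correct answer and one level down after an incorrect one, then weight credit by item difficulty. This is a deliberately simplified illustration under stated assumptions; operational CATs typically estimate ability with item response theory rather than a fixed staircase, and the 1–5 difficulty scale, the function name `run_adaptive_test` and the difficulty-weighted score are all hypothetical choices made for this example.

```python
from typing import Callable, List, Tuple


def run_adaptive_test(
    answer: Callable[[int], bool],
    n_items: int = 10,
    start_level: int = 3,
    min_level: int = 1,
    max_level: int = 5,
) -> Tuple[List[Tuple[int, bool]], float]:
    """Hypothetical staircase CAT: harder after a correct answer, easier after an error."""
    level = start_level
    history: List[Tuple[int, bool]] = []
    for _ in range(n_items):
        correct = answer(level)  # administer one item at the current difficulty
        history.append((level, correct))
        if correct:
            level = min(level + 1, max_level)
        else:
            level = max(level - 1, min_level)
    # Difficulty-weighted score: a correct answer earns credit proportional to
    # its difficulty, so the score reflects more than the percentage correct.
    earned = sum(lvl for lvl, ok in history if ok)
    possible = sum(lvl for lvl, _ in history)
    return history, earned / possible


# Simulated candidates who can answer items up to a fixed difficulty level.
stronger_history, stronger = run_adaptive_test(lambda level: level <= 4)
weaker_history, weaker = run_adaptive_test(lambda level: level <= 2)
print([lvl for lvl, _ in stronger_history])  # [3, 4, 5, 4, 5, 4, 5, 4, 5, 4]
print(round(stronger, 3), round(weaker, 3))  # 0.535 0.4
```

Each simulated candidate's item difficulty settles around the edge of their ability, so the stronger candidate spends most of the test on harder items than the weaker candidate, which is the sense in which the test is "appropriately challenging for each student".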

Theme 3.

Integrated approaches to assessment.

4.5. Integration of Competencies in Assessments

Medicine dispensing by pharmacists is commonly assumed to be a simple, routine process. However, the dispensing process is underpinned by multiple, parallel steps that include interpreting and evaluating a prescription, retrieving and reviewing a medication history, selection, preparation, packaging, labelling, record-keeping, and transfer of the prescribed medicine to the patient, including counselling as appropriate [63,96]. The dispensing process may also incorporate associated tasks such as communicating with the prescriber and providing more complex advice to the patient [96]. These steps are often assessed in isolation [55,56,58]; in the practice environment, however, they are frequently performed concurrently, with the pharmacist carrying out two or more actions simultaneously to complete the process as a single encounter, and elements are rarely performed in isolation [97].

OSCE/simulation hybrid assessments incorporate simulation methodology into one or more OSCE stations, where each station is built around a specific task or a specific component of a more extensive process. This generates information about several dimensions of competence, which may be integrated by combining stations so that one builds on another. This blended-simulation methodology has been used successfully in pharmacy education, most recently in a study evaluating patient care outcomes in first- and third-year pharmacy students and postgraduate pharmacy residents as an overall assessment of practice readiness [63]. It is unclear, however, how well such blended assessment approaches represent a student’s competency to perform the task as an integrated whole.

The OSCE is commonly used as an acceptable way of evaluating clinical skills in undergraduate medicine and pharmacy programs worldwide, as it can facilitate assessment across a wide range of clinical contexts [37,98]. This method of examination enables the rater to tease out deficiencies in specific competency areas, thus providing feedback on students’ progress, particularly during the early stages of learning. The emergence of ‘reductionism’ in assessment has led to the competencies we wish to evaluate being broken down into smaller, discrete tasks that are then assessed separately. However, mastery of the parts does not automatically imply a competent performance of the integrated whole process being examined. The traditional OSCE format tends to compartmentalise candidates’ skills and knowledge and fails to assess some important multidimensional domains of pharmacy practice, including integration of core knowledge into practice, clinical reasoning, multi-tasking and time-management.

EPAs are, by definition, statements of specific task-related activities that require the integration of multiple competencies [18,37], e.g., ‘gather a history and assess a patient’s current medication regimen to ensure medications are indicated, effective, safe, and convenient’ [18]. They therefore play an important role in ensuring that competence is viewed as the integration of knowledge, skills and attitudes in a practice-orientated situation. The most extensive application of the EPA model to pharmacy education is in the Doctor of Pharmacy curriculum in the USA, an entry-level pharmacy degree. The American Association of Colleges of Pharmacy (AACP) has published 15 core EPAs, identified by stakeholder groups as essential for all pharmacists to perform without supervision [99,100], and these EPAs have been used to develop an assessment framework across the Advanced Pharmacy Practice Experiences (APPE) curriculum in the United States [18]. The APPEs make up the fourth and final year of the Doctor of Pharmacy curriculum and are commonly referred to as ‘rotations’, during which students participate solely in experiential learning. While on APPE rotations, students can be assessed using the 15 core EPAs, and there are current efforts to include the same approach within the Introductory Pharmacy Practice Experiences (IPPEs) across the earlier years of the curriculum. Beyond this, despite the increasing momentum for EPAs as a novel framework for competency-based pharmacy education, including the attainment of educational outcomes [18,26], other examples are isolated small-scale trials or are limited to specific parts of a pharmacy program, such as capstone course components [101,102]. No evidence supporting the effectiveness of an EPA framework in pharmacy education has been published. Additionally, EPAs have not yet been used widely in undergraduate pharmacy education, and further research and collaboration are needed to implement this approach more extensively [20].

4.6. Assessment of Cognitive Processes

Assessment of medicine dispensing skills can be challenging because it involves complex clinical and inter-relational judgements, rather than simply a series of technical or psychomotor actions to determine clinical competence. Clinical judgements relating to the safety and appropriateness of medicine are complex and dynamic, underpinned by several cognitive processes including retrieving and reviewing information, processing information, identifying issues, collaborative planning, decision-making and reflection [63,103]. Therefore, practitioner competence can be difficult to measure. Similar pedagogical challenges are documented in other professions [93,104]. A holistic approach to competence assessment is gaining momentum due to its ability to blend a range of processes that underpin clinical reasoning, however further work to incorporate this approach into assessment is needed [2,105,106].

5. Discussion

This review investigated the utilisation of competency assessment tools in pharmacy education. While there is no gold-standard assessment methodology, three key themes have been identified which provide opportunities for future development and research: (1) integrated approaches to performance assessment; (2) authentic and simulation-based assessment approaches; and (3) collection of validity evidence to support assessment decisions.

The competency movement in medical education argues for an integrated approach to competence [16] and holds that an integrated skills assessment, which evaluates a candidate’s ability to view the patient and the dispensing process holistically, is more suitable during the advanced stages of training [37,44,107,108,109]. EPAs provide a means of integrated assessment in pharmacy education, and although there is a strong theoretical basis for using EPAs to assess performance during workplace-based training, less is known about how entrustment decisions can be incorporated into other assessment types and settings.

Our understanding of the utility of simulation-based assessments in offering an authentic and integrated skills assessment is increasing [77]. The most recent systematic review of simulation-based assessments in healthcare focused only on ‘technical’ skills, indicating a gap in the literature on the use of simulation to assess ‘non-technical’ skills, including interpersonal communication, teamwork and clinical decision-making [69]. There is general consensus, particularly among studies in nursing, on the need for further research into simulation-based assessment methods that focus on non-technical skills [90,91,110,111,112]. Most simulation in pharmacy uses blended simulation in an OSCE context. Outside the traditional OSCE format, there is a lack of studies specifically pertaining to integrated skills assessment of the competence of pharmacists or pharmacy students, and fully integrated simulation assessments have not been reported. An opportunity exists to develop and evaluate an integrated skills assessment that requires the candidate to manage all aspects of the patient encounter, incorporating important skills for clinical work such as multitasking and task switching [97].

Although there are examples of validated tools in pharmacy education [47], many have been validated on the basis of psychometric properties alone. There is increasing recognition that relying solely on such approaches to validate increasingly complex assessment models may give an incomplete evaluation of the quality of the assessment overall [80,113,114]. More work is needed to ensure that validation draws on multiple sources of evidence to support the proposed score interpretations, with the type of evidence collected depending on the assessment instrument in question and its intended application.

6. Conclusions

A dichotomy in the purpose of assessment is clear: accountability and student learning. While the concept of competency-based education is growing internationally, its future is not certain, and more work is required to ensure a valid and reliable measure of competency. This review of the literature on assessment in pharmacy education is particularly useful in identifying opportunities to work towards the development of valid and reliable assessment frameworks. The goal of such frameworks is to produce judgements that validly reflect the level of competence of pharmacy students and pharmacists. The review shows a need to explore key areas of assessment in pharmacy education, including: (1) integrated approaches to performance assessment; (2) authentic and simulation-based assessment approaches; and (3) collection of validity evidence to support assessment decisions. There is a scarcity of published literature demonstrating the use of truly integrated simulation-based assessments in pharmacy. The results of this review provide motivation for further developing integrated methods for assessing the competency of future pharmacists.

Author Contributions

H.C., R.R., C.G. and T.L.-J. conceived and designed the review; H.C. and C.G. completed the literature search and analysed the data; H.C., R.R., C.G., T.L.-J. and J.S. reviewed articles and wrote the paper.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Schuwirth, L.W.T.; van der Vleuten, C. How to design a useful test: The principles of assessment. In Understanding Medical Education: Evidence, Theory and Practice; Swanwick, T., Ed.; The Association for the Study of Medical Education: Edinburgh, UK, 2010.

- Anderson, H.; Anaya, G.; Bird, E.; Moore, D. Review of Educational Assessment. Am. J. Pharm. Educ. 2005, 69, 84–100.

- Huba, M.; Freed, J. Learner-Centered Assessment on College Campuses: Shifting the Focus from Teaching to Learning; Allyn and Bacon: Boston, MA, USA, 2000.

- Koster, A.; Schalekamp, T.; Meijerman, I. Implementation of Competency-Based Pharmacy Education (CBPE). Pharmacy 2017, 5, 10.

- Australian Qualifications Framework Council. Australian Qualifications Framework; Department of Industry, Science, Research and Tertiary Education: Adelaide, Australia, 2013.

- Croft, H.; Nesbitt, K.; Rasiah, R.; Levett-Jones, T.; Gilligan, C. Safe dispensing in community pharmacies: How to apply the SHELL model for catching errors. Clin. Pharm. 2017, 9, 214–224.

- Kohn, L.; Corrigan, J.; Donaldson, M. To Err Is Human: Building a Safer Health System; National Academy Press: Washington, DC, USA, 1999.

- Universities Australia; Australian Chamber of Commerce and Industry; Australian Industry Group; The Business Council of Australia and the Australian Collaborative Education Network. National Strategy on Work Integrated Learning in University Education. Available online: http:///D:/UNIVERSITY%20COMPUTER/Pharmacist%20Reports/National%20Strategy%20on%20Work%20Integrated%20Learning%20in%20University%20Education.pdf (accessed on 19 December 2018).

- Nash, R.; Chalmers, L.; Brown, N.; Jackson, S.; Peterson, G. An international review of the use of competency standards in undergraduate pharmacy education. Pharm. Educ. 2015, 15, 131–141.

- Australian Health Practitioner Regulation Agency. National Registration and Accreditation Scheme Strategy 2015–2020. Available online: https://www.ahpra.gov.au/About-AHPRA/What-We-Do/NRAS-Strategy-2015-2020.aspx (accessed on 6 March 2019).

- Peeters, M.J. Targeting Assessment for Learning within Pharmacy Education. Am. J. Pharm. Educ. 2016, 81, 5–9.

- Schuwirth, L.; van der Vleuten, C.P. Programmatic assessment: From assessment of learning to assessment for learning. Med. Teach. 2011, 33, 478–485.

- Salinitri, F.; O’Connell, M.B.; Garwood, C.; Lehr, V.T.; Abdallah, K. An Objective Structured Clinical Examination to Assess Problem-Based Learning. Am. J. Pharm. Educ. 2012, 76, 1–10.

- Sturpe, D. Objective Structured Clinical Examinations in Doctor of Pharmacy Programs in the United States. Am. J. Pharm. Educ. 2010, 74, 1–6.

- Miller, G. The assessment of clinical skills/competence/performance. Acad. Med. 1990, 65, S63–S67.

- Van der Vleuten, C.; Schuwirth, L.W. Assessing professional competence: From methods to programmes. Med. Educ. 2005, 39, 309–317.

- O’Brien, B.; May, W.; Horsley, T. Scholarly conversations in medical education. Acad. Med. 2016, 91, S1–S9.

- Pittenger, A.; Chapman, S.; Frail, C.; Moon, J.; Undeberg, M.; Orzoff, J. Entrustable Professional Activities for Pharmacy Practice. Am. J. Pharm. Educ. 2016, 80.

- Health and Social Care Information Centre. NHS Statistics Prescribing. In Key Facts for England; Health and Social Care Information Centre: Leeds, UK, 2014.

- Jarrett, J.; Berenbrok, L.; Goliak, K.; Meyer, S.; Shaughnessy, A. Entrustable Professional Activities as a Novel Framework for Pharmacy Education. Am. J. Pharm. Educ. 2018, 82, 368–375.

- Hirsch, A.; Parihar, H. A Capstone Course with a Comprehensive and Integrated Review of the Pharmacy Curriculum and Student Assessment as a Preparation for Advanced Pharmacy Practice Experience. Am. J. Pharm. Educ. 2014, 78, 1–8.

- Nestel, D.; Krogh, K.; Harlim, J.; Smith, C.; Bearman, M. Simulated Learning Technologies in Undergraduate Curricula: An Evidence Check Review for HETI; Health Education Training Institute: Melbourne, Australia, 2014. Available online: https://www.academia.edu/16527321/Simulated_Learning_Technologies_in_Undergraduate_Curricula_An_Evidence_Check_review_for_HETI (accessed on 20 April 2019).

- Van Zuilen, M.H.; Kaiser, R.M.; Mintzer, M.J. A competency-based medical student curriculum: Taking the medication history in older adults. J. Am. Geriatr. Soc. 2012, 60, 781–785.

- Medina, M. Does Competency-Based Education Have a Role in Academic Pharmacy in the United States? Pharmacy 2017, 5, 13.

- International Pharmaceutical Federation. FIP Statement of Policy on Good Pharmacy Education Practice, Vienna (Online); International Pharmaceutical Federation: Den Haag, The Netherlands, 2000.

- Medina, M.; Plaza, C.; Stowe, C.; Robinson, E.; DeLander, G.; Beck, D.; Melchert, R.; Supernaw, R.; Gleason, B.; Strong, M.; et al. Center for the Advancement of Pharmacy Education 2013 Educational Outcomes. Am. J. Pharm. Educ. 2013, 77, 162.

- Webb, D.D.; Lambrew, C.T. Evaluation of physician skills in cardiopulmonary resuscitation. J. Am. Coll. Emerg. Phys. Univ. Ass. Emerg. Med. Serv. 1978, 7, 387–389.

- Pharmaceutical Society of Australia. National Competency Standards Framework for Pharmacists in Australia; Pharmaceutical Society of Australia: Canberra, Australia, 2016; pp. 11–15.

- General Pharmaceutical Council. Future Pharmacists Standards for the Initial Education and Training of Pharmacists; General Pharmaceutical Council (online): London, UK, 2011.

- ten Cate, O. Entrustment as Assessment: Recognizing the Ability, the Right and the Duty to Act. J. Grad. Med. Educ. 2016, 8, 261–262.

- Cruess, R.; Cruess, S.; Steinert, Y. Amending Miller’s Pyramid to Include Professional Identity Formation. Acad. Med. 2016, 91, 180–185.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

- Considine, J.; Botti, M.; Thomas, S. Design, format, validity and reliability of multiple choice questions for use in nursing research and education. Collegian 2005, 12, 19–24.

- Case, S.; Swanson, D. Extended-matching items: A practical alternative to free-response questions. Teach. Learn. Med. 1993, 5, 107–115.

- McCoubrie, P. Improving the fairness of multiple-choice questions: A literature review. Med. Teach. 2004, 26, 709–712.

- Moss, E. Multiple choice questions: Their value as an assessment tool. Curr. Opin. Anaesthesiol. 2001, 14, 661–666.

- Australian Pharmacy Council. Intern Year Blueprint Literature Review; Australian Pharmacy Council Limited: Canberra, ACT, Australia, 2017.

- Cobourne, M. What’s wrong with the traditional viva as a method of assessment in orthodontic education? J. Orthod. 2010, 37, 128–133.

- Rastegarpanah, M.; Mafinejad, M.K.; Moosavi, F.; Shirazi, M. Developing and validating a tool to evaluate communication and patient counselling skills of pharmacy students: A pilot study. Pharm. Educ. 2019, 19, 19–25.

- Salas, E.; Wilson, K.; Burke, S.; Priest, H. Using Simulation-Based Training to Improve Patient Safety: What Does It Take? Jt. Comm. J. Qual. Patient Saf. 2005, 31, 363–371.

- Abdel-Tawab, R.; Higman, J.D.; Fichtinger, A.; Clatworthy, J.; Horne, R.; Davies, G. Development and validation of the Medication-Related Consultation Framework (MRCF). Patient Educ. Couns. 2011, 83, 451–457.

- Khan, K.; Ramachandran, S.; Gaunt, K.; Pushkar, P. The Objective Structured Clinical Examination (OSCE): AMEE Guide No. 81. Part I: An historical and theoretical perspective. Med. Teach. 2013, 35, e1437–e1446.

- Van der Vleuten, C.P.M.; Schuwirth, L.; Scheele, F.; Driessen, E.; Hodges, B. The assessment of professional competence: Building blocks for theory development. Best Pract. Res. Clin. Obstet. Gynaecol. 2010, 24, 703–719.

- Williamson, J.; Osborne, A. Critical analysis of case based discussions. Br. J. Med. Pract. 2012, 5, a514.

- Davies, J.; Ciantar, J.; Jubraj, B.; Bates, I. Use of multisource feedback tool to develop pharmacists in a postgraduate training program. Am. J. Pharm. Educ. 2013, 77.

- Patel, J.; Sharma, A.; West, D.; Bates, I.; Davies, J.; Abdel-Tawab, R. An evaluation of using multi-source feedback (MSF) among junior hospital pharmacists. Int. J. Pharm. Pract. 2011, 19, 276–280.

- Patel, J.; West, D.; Bates, I.; Eggleton, A.; Davies, G. Early experiences of the mini-PAT (Peer Assessment Tool) amongst hospital pharmacists in South East London. Int. J. Pharm. Pract. 2009, 17, 123–126.

- Archer, J.; Norcini, J.; Davies, H. Use of SPRAT for peer review of paediatricians in training. Br. Med. J. 2005, 330, 1251–1253.

- Tochel, C.; Haig, A.; Hesketh, A. The effectiveness of portfolios for post-graduate assessment and education: BEME Guide No. 12. Med. Teach. 2009, 31, 299–318.

- ten Cate, O. A primer on entrustable professional activities. Korean J. Med. Educ. 2018, 30, 1–10.

- ten Cate, O. Entrustment Decisions: Bringing the Patient Into the Assessment Equation. Acad. Med. 2017, 92, 736–738.

- Thompson, L.; Leung, C.; Green, B.; Lipps, J.; Schaffernocker, T.; Ledford, C.; Davis, J.; Way, D.; Kman, N. Development of an Assessment for Entrustable Professional Activity (EPA) 10: Emergent Patient Management. West. J. Emerg. Med. 2016, 18, 35–42.

- Cook, D.A.; Reed, D.A. Appraising the Quality of Medical Education Research Methods: The Medical Education Research Study Quality Instrument and the Newcastle-Ottawa Scale-Education. Acad. Med. 2015, 90, 1067–1076.

- Santos, S.; Manuel, J. Design, implementation and evaluation of authentic assessment experience in a pharmacy course: Are students getting it? In Proceedings of the 3rd International Conference in Higher Education Advances, Valencia, Spain, 21–23 June 2017.

- Mackellar, A.; Ashcroft, D.M.; Bell, D.; James, D.H.; Marriott, J. Identifying Criteria for the Assessment of Pharmacy Students’ Communication Skills With Patients. Am. J. Pharm. Educ. 2007, 71, 1–5.

- Kadi, A.; Francioni-Proffitt, D.; Hindle, M.; Soine, W. Evaluation of Basic Compounding Skills of Pharmacy Students. Am. J. Pharm. Educ. 2005, 69, 508–515.

- Kirton, S.B.; Kravitz, L. Objective Structured Clinical Examinations (OSCEs) Compared with Traditional Assessment Methods. Am. J. Pharm. Educ. 2011, 75, 111.

- Kimberlin, C. Communicating With Patients: Skills Assessment in US Colleges of Pharmacy. Am. J. Pharm. Educ. 2006, 70, 1–5.

- Aojula, H.; Barber, J.; Cullen, R.; Andrews, J. Computer-based, online summative assessment in undergraduate pharmacy teaching: The Manchester experience. Pharm. Educ. 2006, 6, 229–236.

- Kelley, K.; Beatty, S.; Legg, J.; McAuley, J.W. A Progress Assessment to Evaluate Pharmacy Students’ Knowledge Prior to Beginning Advanced Pharmacy Practice Experiences. Am. J. Pharm. Educ. 2008, 72, 88.

- Hanna, L.-A.; Davidson, S.; Hall, M. A questionnaire study investigating undergraduate pharmacy students’ opinions on assessment methods and an integrated five-year pharmacy degree. Pharm. Educ. 2017, 17, 115–124.

- Benedict, N.; Smithburger, P.; Calabrese, A.; Empey, P.; Kobulinsky, L.; Seybert, A.; Waters, T.; Drab, S.; Lutz, J.; Farkas, D.; et al. Blended Simulation Progress Testing for Assessment of Practice Readiness. Am. J. Pharm. Educ. 2017, 81, 14.

- World Health Organisation. Ensuring good dispensing practice. In Management Sciences for Health. MDS-3: Managing Access to Medicines and Health Technologies; Spivey, P., Ed.; World Health Organisation: Arlington, VA, USA, 2012.

- Pharmacy Board of Australia. Guidelines for Dispensing Medicines; Pharmacy Board of Australia: Canberra, Australia, 2015.

- The Pharmacy Guild of Australia. Dispensing Your Prescription Medicine: More Than Sticking A Label on A Bottle; The Pharmacy Guild of Australia: Canberra, Australia, 2016.

- Jungnickel, P.W.; Kelley, K.W.; Hammer, D.P.; Haines, S.T.; Marlowe, K.F. Addressing competencies for the future in the professional curriculum. Am. J. Pharm. Educ. 2009, 73, 156.

- Merriam, S.; Bierema, L. Adult Learning: Linking Theory and Practice; Jossey-Bass: San Francisco, CA, USA, 2014; Volume 1.

- Scalese, R.; Obeso, V.; Issenberg, S.B. Simulation Technology for Skills Training and Competency Assessment in Medical Education. J. Gen. Intern. Med. 2007, 23, 46–49.

- Ryall, T.; Judd, B.; Gordon, C. Simulation-based assessments in health professional education: A systematic review. J. Multidiscip. Healthc. 2016, 9, 69–82.

- Seybert, A.L.; Kobulinsky, L.R.; McKaveney, T.P. Human patient simulation in a pharmacotherapy course. Am. J. Pharm. Educ. 2008, 72, 37.

- Smithburger, P.L.; Kane-Gill, S.L.; Ruby, C.M.; Seybert, A.L. Comparing effectiveness of 3 learning strategies: Simulation-based learning, problem-based learning, and standardized patients. Simul. Healthc. 2012, 7, 141–146.

- Bray, B.S.; Schwartz, C.R.; Odegard, P.S.; Hammer, D.P.; Seybert, A.L. Assessment of human patient simulation-based learning. Am. J. Pharm. Educ. 2011, 75, 208.

- Seybert, A. Patient Simulation in Pharmacy Education. Am. J. Pharm. Educ. 2011, 75, 187.

- Abdulmohsen, H. Simulation-based medical teaching and learning. J. Fam. Community Med. 2010, 17, 35–40.

- Aggarwal, R.; Mytton, O.; Derbrew, M.; Hananel, D.; Heydenberg, M.; Barry Issenberg, S.; MacAulay, C.; Mancini, M.E.; Morimoto, T.; Soper, N.; et al. Training and simulation for patient safety. Qual. Saf. Health Care 2010, 19, i34–i43.

- Boulet, J. Summative Assessment in Medicine: The Promise of Simulation for High-stakes Evaluation. Acad. Emerg. Med. 2008, 15, 1017–1024.

- Sears, K.; Godfrey, C.; Luctkar-Flude, M.; Ginsberg, L.; Tregunno, D.; Ross-White, A. Measuring competence in healthcare learners and healthcare professionals by comparing self-assessment with objective structured clinical examinations: A systematic review. JBI Database Syst. Rev. 2014, 12, 221–229.

- Nursing and Midwifery Board of Australia. Framework for Assessing Standards for Practice for Registered Nurses, Enrolled Nurses and Midwives. Australian Health Practitioner Regulation Agency. 2017. Available online: http://www.nursingmidwiferyboard.gov.au/Codes-Guidelines-Statements/Frameworks/Framework-for-assessing-national-competency-standards.aspx (accessed on 14 April 2019).

- Nulty, D.; Mitchell, M.; Jeffrey, A.; Henderson, A.; Groves, M. Best Practice Guidelines for use of OSCEs: Maximising value for student learning. Nurse Educ. Today 2011, 31, 145–151.

- Marceau, M.; Gallagher, F.; Young, M.; St-Onge, C. Validity as a social imperative for assessment in health professions education: A concept analysis. Med. Educ. 2018, 52, 641–653.

- Morgan, P.; Cleave-Hogg, D.; DeSousa, S.; Tarshis, J. High-fidelity patient simulation: Validation of performance checklists. Br. J. Anaesth. 2004, 92, 388–392.

- Morgan, P.; Lam-McCulloch, J.; McIlroy, J.H.; Tarshis, J. Simulation performance checklist generation using the Delphi technique. Can. J. Anaesth. 2007, 54, 992–997.

- Bann, S.; Davis, I.M.; Moorthy, K.; Munz, Y.; Hernandez, J.; Khan, M.; Datta, V.; Darzi, A. The reliability of multiple objective measures of surgery and the role of human performance. Am. J. Surg. 2005, 189, 747–752.

- Boulet, J.; Murray, D.; Kras, J.; Woodhouse, J.; McAllister, J.; Ziv, A. Reliability and validity of a simulation-based acute care skills assessment for medical students and residents. Anesthesiology 2003, 99, 1270–1280.

- DeMaria, S., Jr.; Samuelson, S.T.; Schwartz, A.D.; Sim, A.J.; Levine, A.I. Simulation-based assessment and retraining for the anesthesiologist seeking reentry to clinical practice: A case series. Anesthesiology 2013, 119, 206–217.

- Gimpel, J.; Boulet, J.; Errichetti, A. Evaluating the clinical skills of osteopathic medical students. J. Am. Osteopath. Assoc. 2003, 103, 267–279.

- Lipner, R.; Messenger, J.; Kangilaski, R.; Baim, D.; Holmes, D.; Williams, D.; King, S. A technical and cognitive skills evaluation of performance in interventional cardiology procedures using medical simulation. Simul. Healthc. 2010, 5, 65–74.

- Tavares, W.; LeBlanc, V.R.; Mausz, J.; Sun, V.; Eva, K.W. Simulation-based assessment of paramedics and performance in real clinical contexts. Prehosp. Emerg. Care 2014, 18, 116–122.

- Isenberg, G.A.; Berg, K.W.; Veloski, J.A.; Berg, D.D.; Veloski, J.J.; Yeo, C.J. Evaluation of the use of patient-focused simulation for student assessment in a surgery clerkship. Am. J. Surg. 2011, 201, 835–840.

- Kesten, K.S.; Brown, H.F.; Meeker, M.C. Assessment of APRN Student Competency Using Simulation: A Pilot Study. Nurs. Educ. Perspect. 2015, 36, 332–334.

- Rizzolo, M.A.; Kardong-Edgren, S.; Oermann, M.H.; Jeffries, P.R. The National League for Nursing Project to Explore the Use of Simulation for High-Stakes Assessment: Process, Outcomes, and Recommendations. Nurs. Educ. Perspect. 2015, 36, 299–303.

- McBride, M.; Waldrop, W.; Fehr, J.; Boulet, J.; Murray, D. Simulation in pediatrics: The reliability and validity of a multiscenario assessment. Pediatrics 2011, 128, 335–343.

- Franklin, N.; Melville, P. Competency assessment tools: An exploration of the pedagogical issues facing competency assessment for nurses in the clinical environment. Collegian 2013, 22, 25–31.

- Thompson, N. A practitioner’s guide for variable-length computerised classification testing. Pract. Assess. Res. Eval. 2007, 12, 1–13.

- Kreiter, C.; Ferguson, K.; Gruppen, L. Evaluating the usefulness of computerized adaptive testing for medical in-course assessment. Acad. Med. 1999, 74, 1125–1128.

- Pharmaceutical Society of Australia. Dispensing Practice Guidelines; Pharmaceutical Society of Australia Ltd.: Canberra, ACT, Australia, 2017.

- Douglas, H.; Raban, M.; Walter, S.; Westbrook, J. Improving our understanding of multi-tasking in healthcare: Drawing together the cognitive psychology and healthcare literature. Appl. Ergon. 2017, 59, 45–55.

- Shirwaikar, A. Objective structured clinical examination (OSCE) in pharmacy education—A trend. Pharm. Pract. 2015, 13, 627.

- Haines, S.; Pittenger, A.; Stolte, S. Core entrustable professional activities for new pharmacy graduates. Am. J. Pharm. Educ. 2017, 81, S2.

- Pittenger, A.; Copeland, D.; Lacroix, M.; Masuda, Q.; Plaza, C.; Mbi, P.; Medina, M.; Miller, S.; Stolte, S. Report of the 2015–2016 Academic Affairs Standing Committee: Entrustable Professional Activities Implementation Roadmap. Am. J. Pharm. Educ. 2017, 81, S4.

- Marotti, S.; Sim, Y.T.; Macolino, K.; Rowett, D. From Entrustment of Competence to Entrustable Professional Activities. Available online: http://www.mm2018shpa.com/wp-content/uploads/2018/11/282_Marotti-S_From-assessment-of-competence-to-Entrustable-Professional-Activities-EPAs-.pdf (accessed on 14 April 2019).

- Al-Sallami, H.; Anakin, M.; Smith, A.; Peterson, A.; Duffull, S. Defining Entrustable Professional Activities to Drive the Learning of Undergraduate Pharmacy Students; School of Pharmacy, University of Otago: Dunedin, New Zealand, 2018.

- Croft, H.; Gilligan, C.; Rasiah, R.; Levett-Jones, T.; Schneider, J. Thinking in Pharmacy Practice: A Study of Community Pharmacists’ Clinical Reasoning in Medication Supply Using the Think-Aloud Method. Pharmacy 2017, 6, 1.

- Epstein, R.M. Assessment in medical education. N. Engl. J. Med. 2007, 356, 387–396.

- Cowan, T.; Norman, I.; Coopamah, V. Competence in nursing practice: A controversial concept—A focused literature review. Accid. Emerg. Nurs. 2007, 15, 20–26.

- Ilgen, J.; Ma, I.W.Y.; Hatala, R.; Cook, D.A. A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Med. Educ. 2015, 49, 161–173.

- Smith, V.; Muldoon, K.; Biesty, L. The Objective Structured Clinical Examination (OSCE) as a strategy for assessing clinical competence in midwifery education in Ireland: A critical review. Nurse Educ. Pract. 2012, 12, 242–247.

- Rushforth, H. Objective structured clinical examination (OSCE): Review of literature and implications for nursing education. Nurse Educ. Today 2007, 27, 481–490.

- Barman, A. Critiques on the objective structured clinical examination. Ann. Acad. Med. Singap. 2005, 34, 478–482.

- Malec, J.F.; Torsher, L.C.; Dunn, W.F.; Wiegmann, D.A.; Arnold, J.J.; Brown, D.A.; Phatak, V. The Mayo High Performance Teamwork Scale: Reliability and validity for evaluating key crew resource management skills. Simul. Healthc. 2007, 2, 4–10.

- Flin, R.; Patey, R. Improving patient safety through training in non-technical skills. Br. Med. J. 2009, 339.

- Forsberg, E.; Ziegert, K.; Hult, H.; Fors, U. Clinical reasoning in nursing, a think-aloud study using virtual patients—A base for an innovative assessment. Nurse Educ. Today 2014, 34, 538–542.

- Tavares, W.; Brydges, R.; Myre, P.; Prpic, J.; Turner, L.; Yelle, R.; Huiskamp, M. Applying Kane’s validity framework to a simulation based assessment of clinical competence. Adv. Health Sci. Educ. Theory Pract. 2018, 23, 323–338.

- Cizek, G. Progress on validity: The glass half full, the work half done. Assess. Educ. Princ. Policy Pract. 2016, 23, 304–308.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).