Applications of 3D Reconstruction in Virtual Reality-Based Teleoperation: A Review in the Mining Industry

Abstract

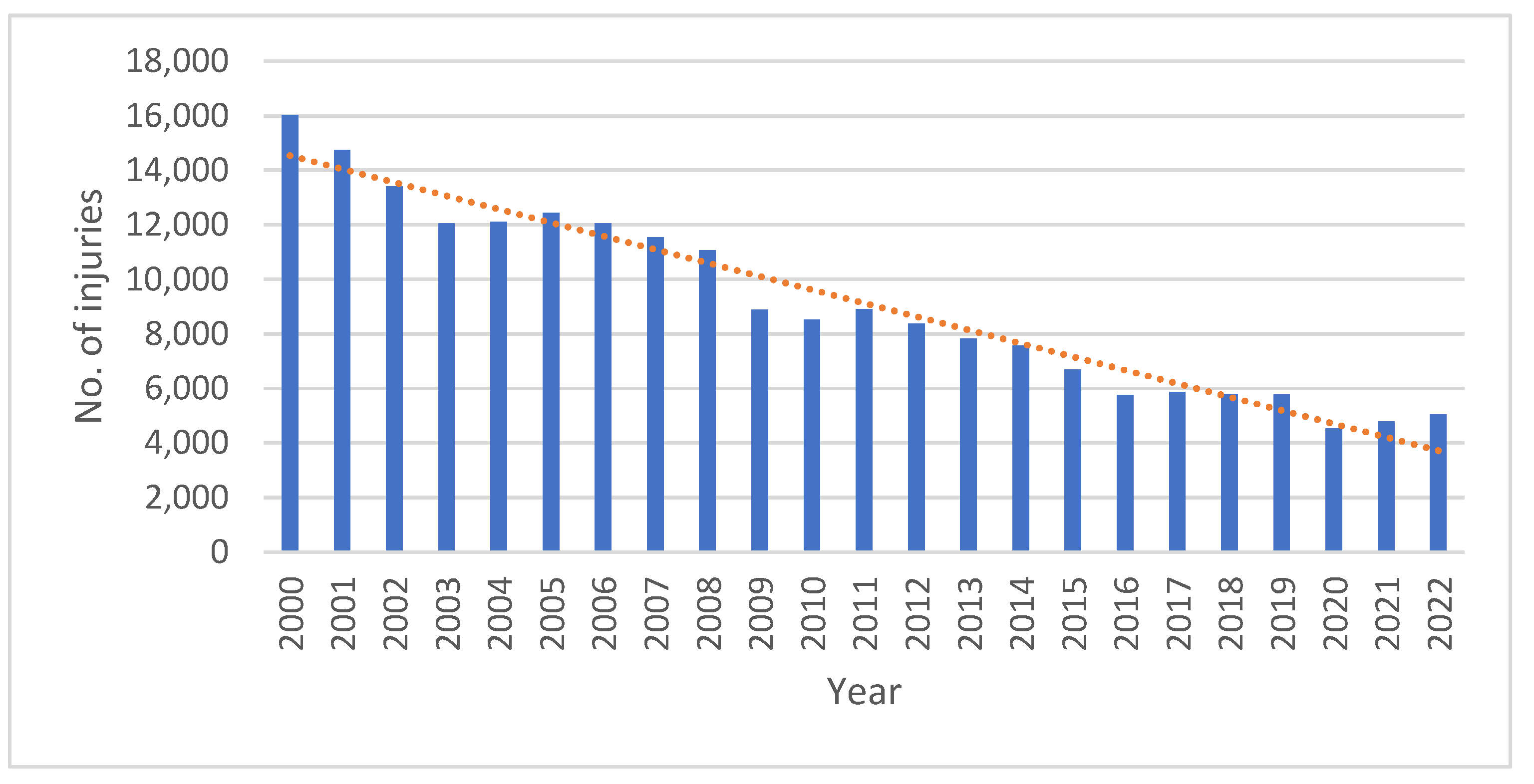

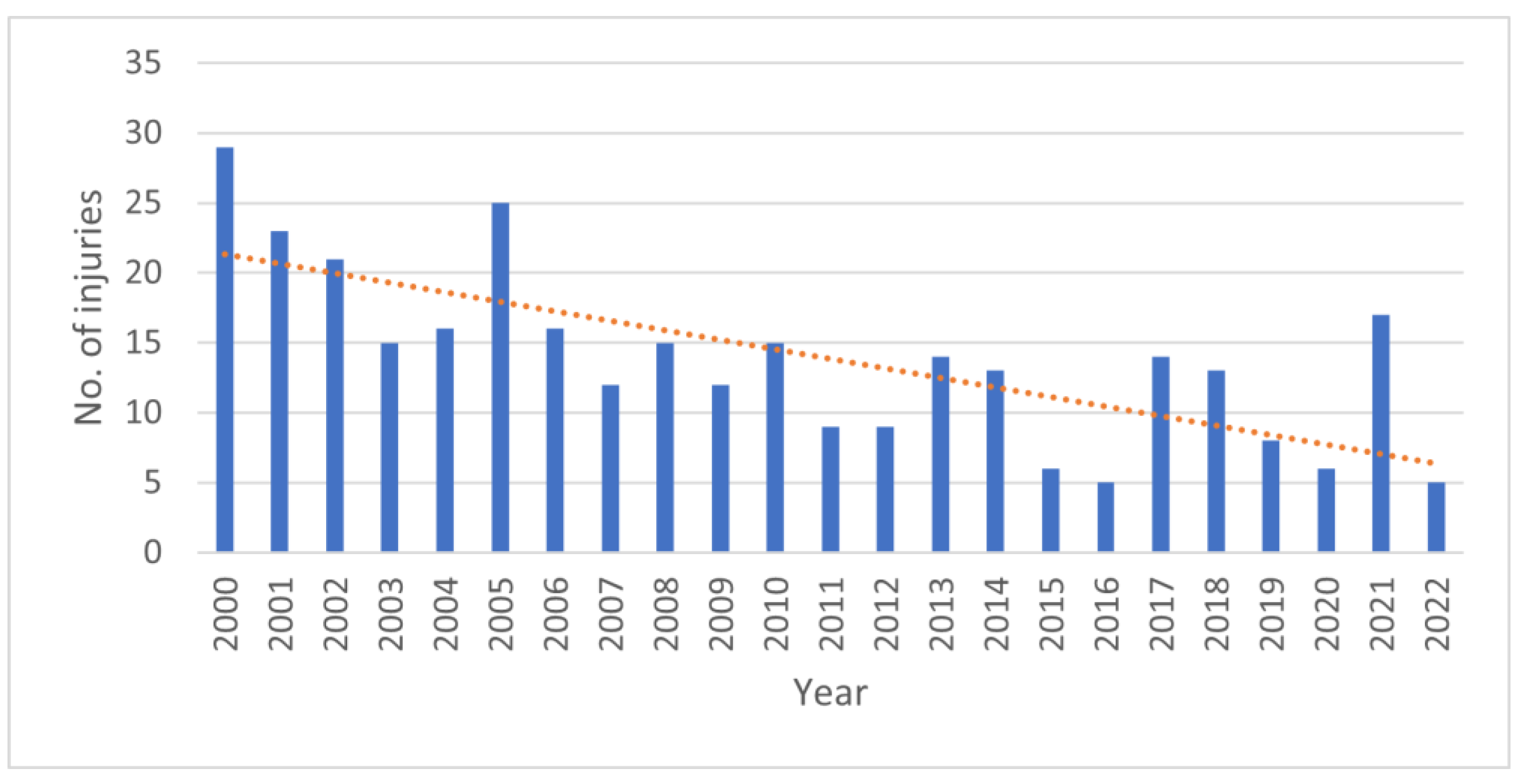

1. Introduction

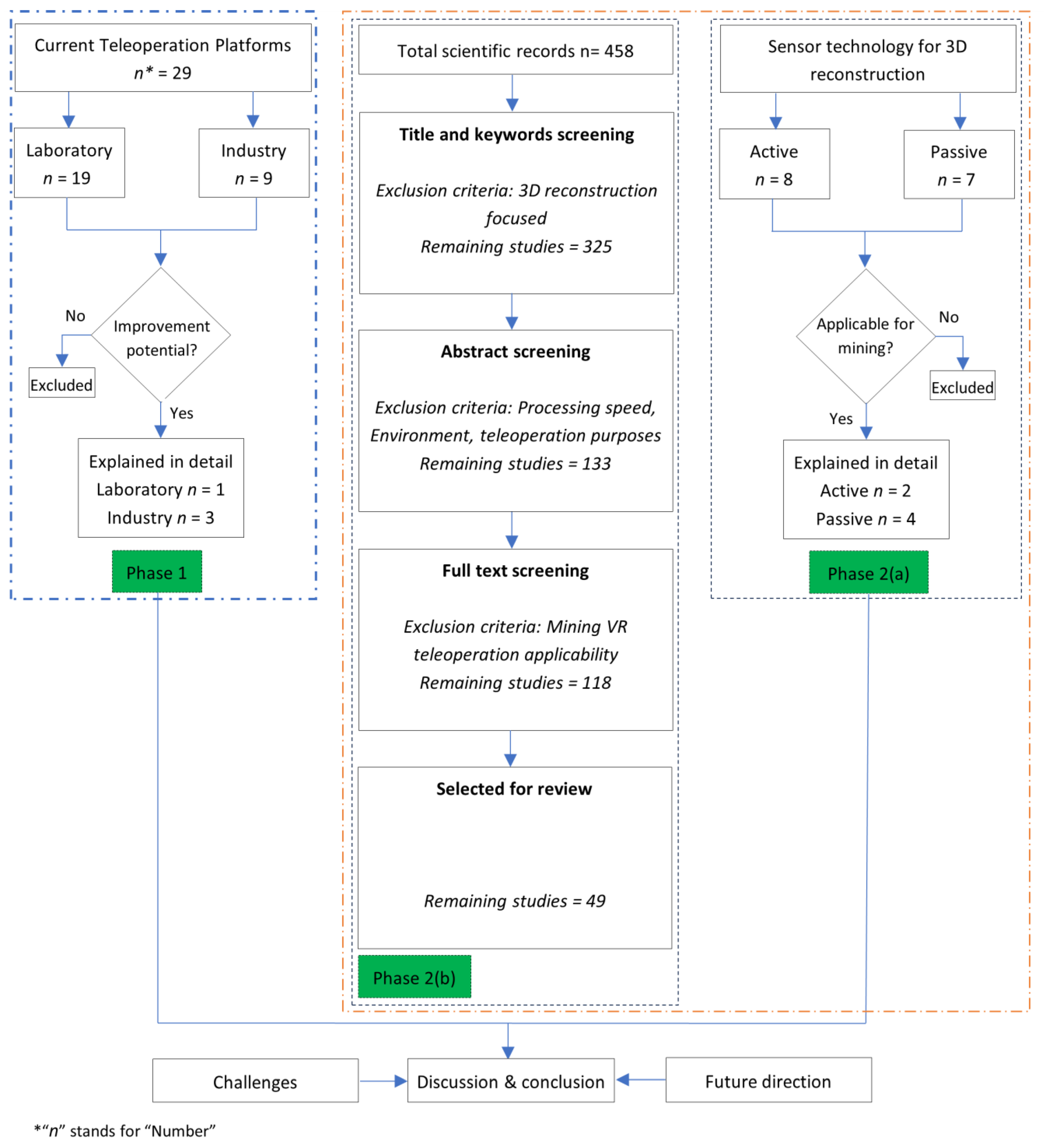

- The identification and evaluation of existing teleoperation platforms that could be adapted into VR-based teleoperation systems tailored for mining operations.

- The investigation and classification of sensor technologies that can enhance telepresence in mining operations.

- The analysis of 3D reconstruction studies and algorithms that can be adapted to surface and underground mining telepresence scenarios.

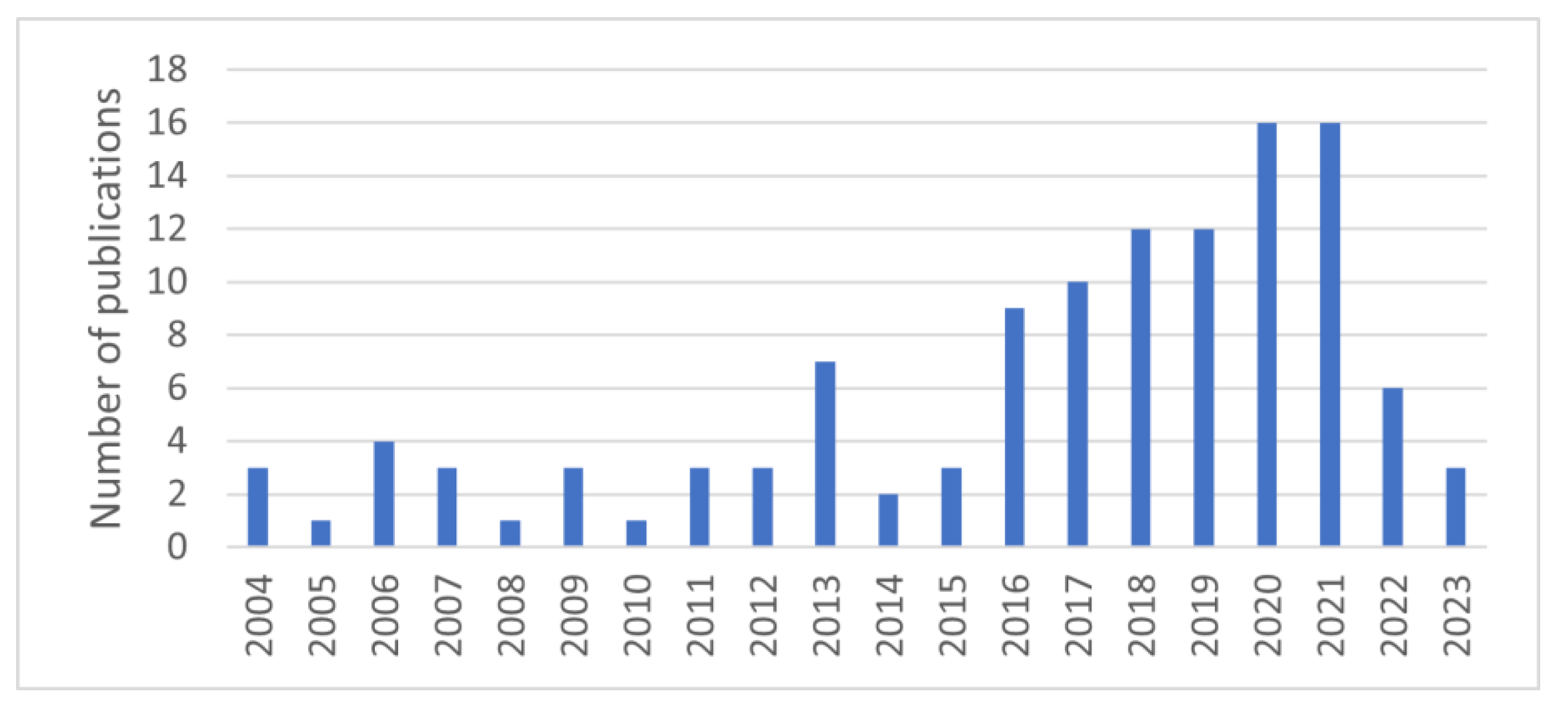

2. Materials and Methods

3. Results

3.1. Current Technologies in Teleoperation

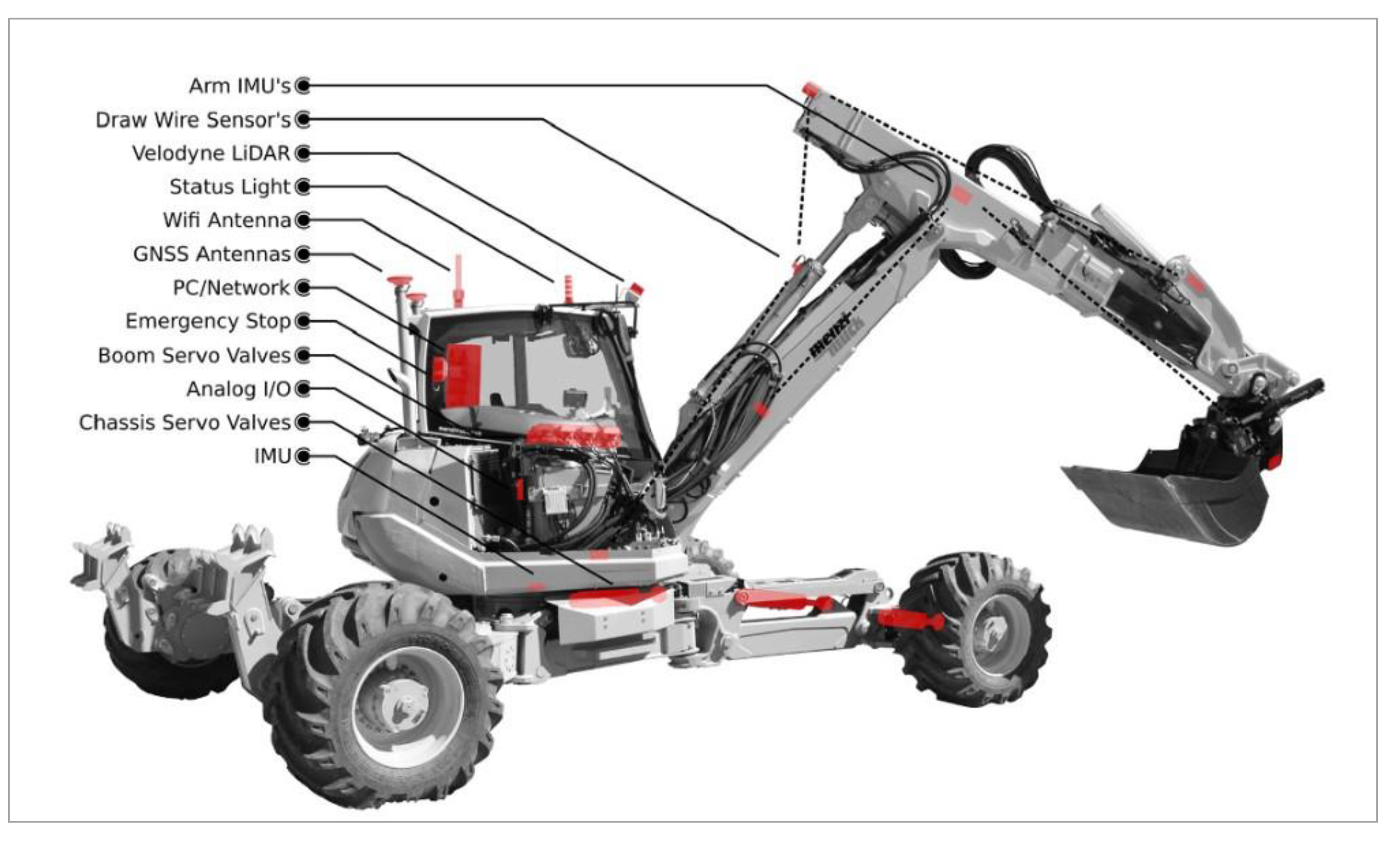

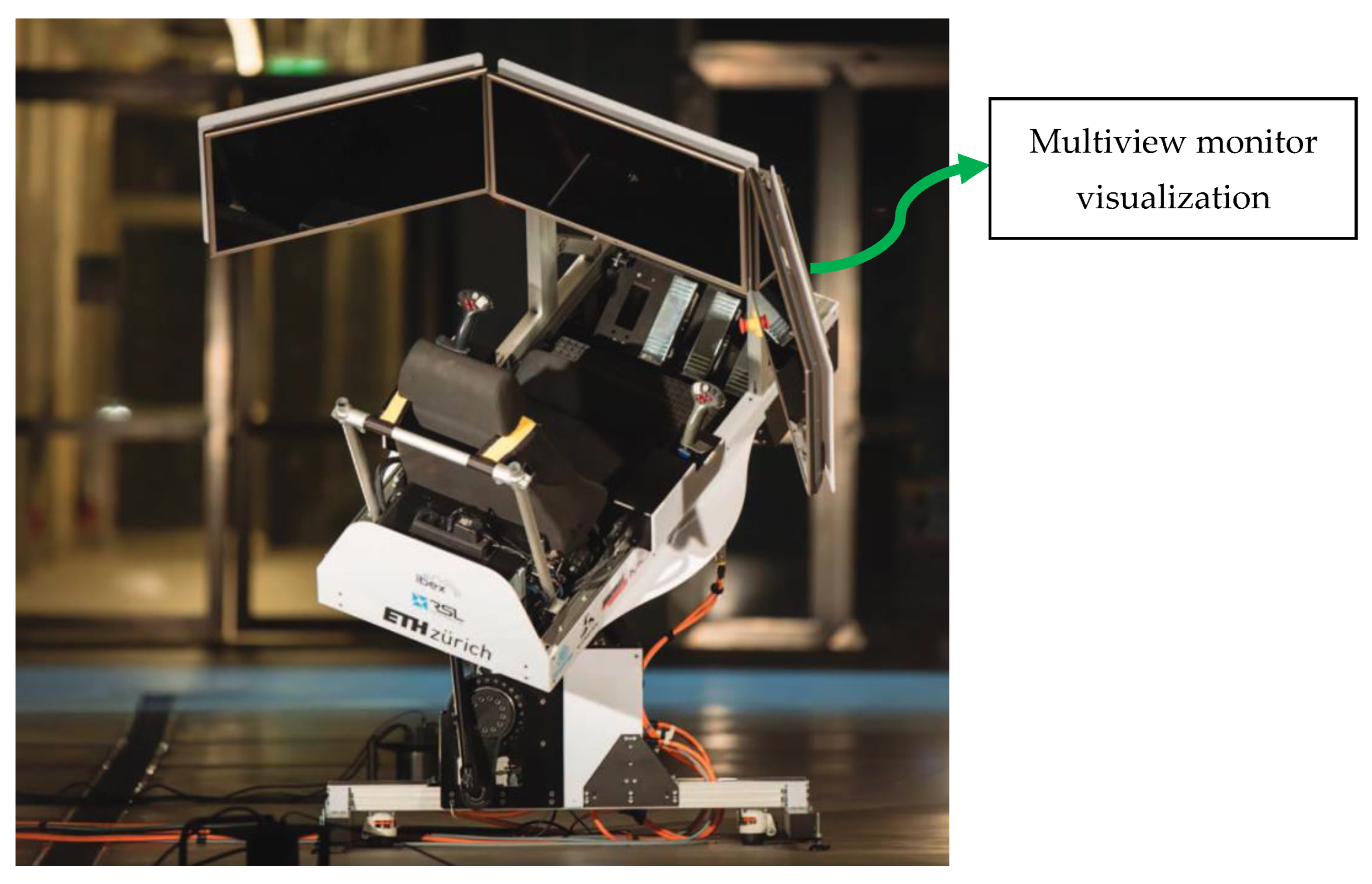

3.1.1. Research Laboratories

3.1.2. Heavy Equipment Manufacturers

- A 360° view monitor and machine display;

- The implementation of a semi-automated loading system;

- Real-time operator guidance and coaching through augmented reality (AR) technology.

- “Teleremote”—employed for bucket loading and unloading;

- “Copilot”—a semi-autonomous mode;

- “Autopilot”—facilitating autonomous machine operation.

3.2. 3D Reconstruction Approaches for Immersive Mining Teleoperation

3.2.1. Data Acquisition for 3D Reconstruction

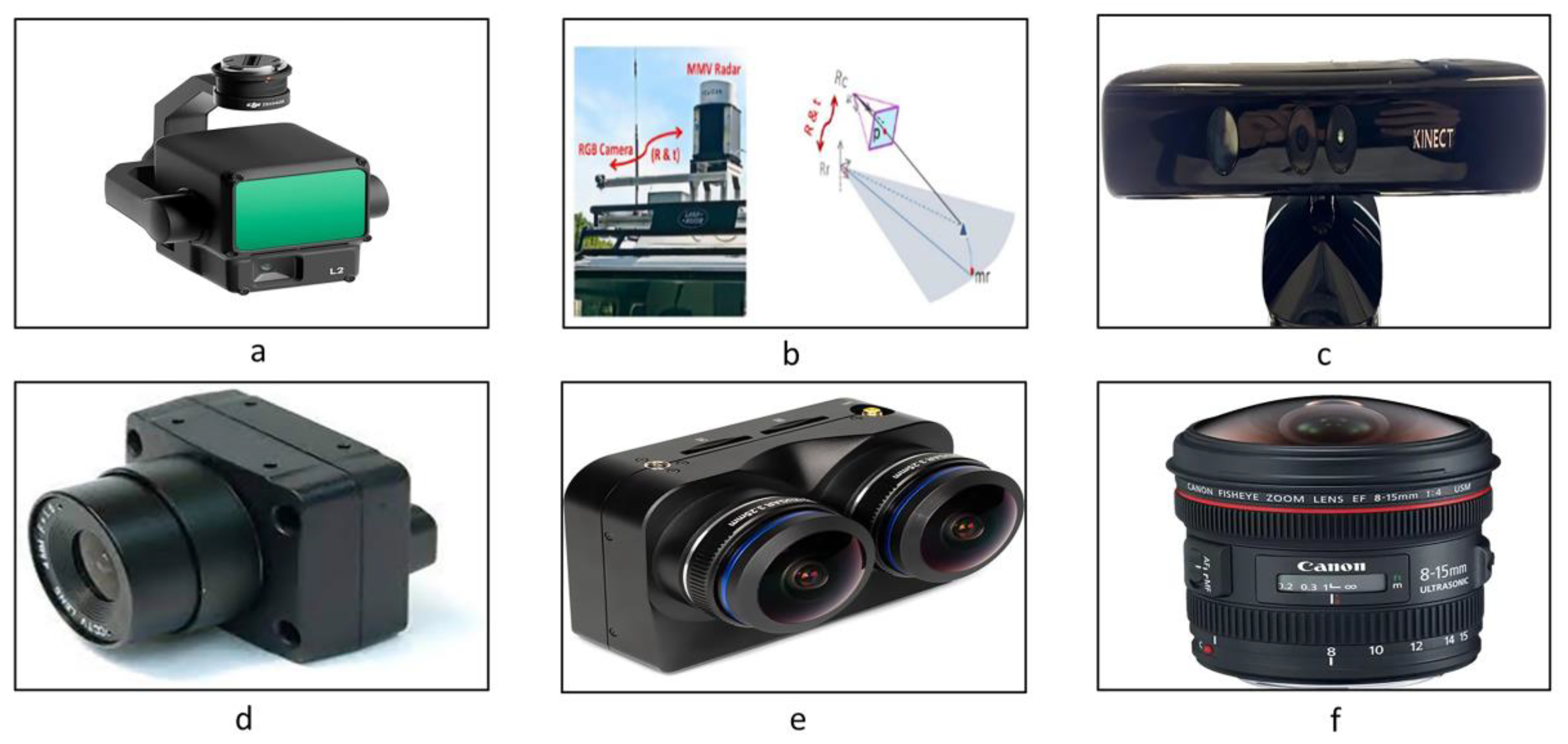

Active Sensors

- Lidar

- Radar

Passive Sensors

- RGB-D Camera

- Monocular Camera

- Binocular/Stereo Camera

- Fisheye Cameras

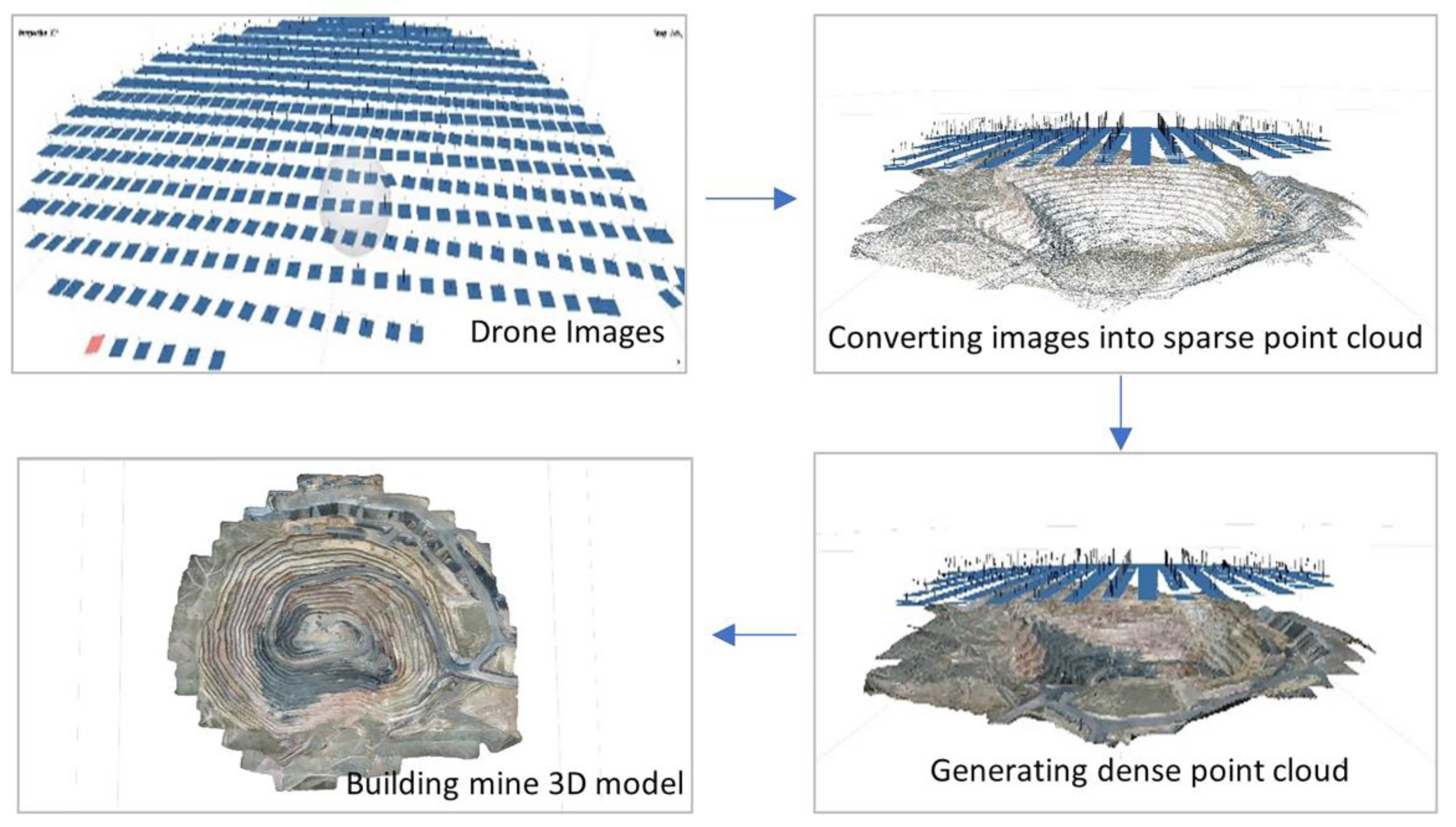

3.2.2. 3D Reconstruction in Surface Mines

Point Cloud Generation from Stereo Images

Stereo Camera Calibration

Feature Extraction

Feature Matching

Sparse 3D Reconstruction

Model Parameter Correction

Dense 3D Reconstruction

Point Cloud Segmentation

- Voxel Grid Based: This technique divides the point cloud into uniform cubes, known as voxels, and processes the points within each voxel together. It is widely used for analyzing, segmenting, and processing point cloud data in large-scale scenes, with applications in object recognition, augmented reality, and CAD modeling [80].

- Plane Detection: Detecting flat surfaces (planes) in point cloud data is a common step in 3D reconstruction. RANSAC and the Hough transform are among the most widely used algorithms for this task [81].

- Clustering: This method groups points into clusters and handles irregularly shaped objects well, making it suitable for reconstructing outdoor environments. K-means and DBSCAN are popular algorithms for this technique [82].

- Deep Learning Based: Convolutional neural networks have gained significant attention for their performance in 3D reconstruction. Techniques such as Mask R-CNN, FCN (Fully Convolutional Network), DeepLab, and PSPNet (Pyramid Scene Parsing Network) are notable in this domain [83].
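The first two segmentation families listed above can be illustrated with a minimal NumPy sketch. It is not taken from the reviewed studies: the function names `voxel_downsample` and `ransac_plane`, the voxel size, the distance threshold, and the synthetic "bench floor plus rock pile" cloud are all assumptions chosen for illustration. Production pipelines would more likely rely on a dedicated point cloud library.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same cubic voxel with their centroid."""
    # Integer voxel index of each point along x, y, z.
    idx = np.floor(points / voxel_size).astype(np.int64)
    # Group points by voxel; `inverse` maps each point to its voxel row.
    _, inverse, counts = np.unique(
        idx, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # shape differs across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]


def ransac_plane(points, threshold, iterations=200, seed=0):
    """Fit a plane n.x + d = 0 with RANSAC; return (normal, d, inlier_mask)."""
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    best_model = None
    for _ in range(iterations):
        # Three random points define a candidate plane.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        # Points closer to the plane than `threshold` count as inliers.
        mask = np.abs(points @ normal + d) < threshold
        if mask.sum() > best_mask.sum():
            best_mask, best_model = mask, (normal, d)
    if best_model is None:
        raise ValueError("no non-degenerate sample found")
    return best_model[0], best_model[1], best_mask


def make_synthetic_cloud(seed=42):
    """Hypothetical scene: a noisy flat floor with a box of 'rock' points."""
    rng = np.random.default_rng(seed)
    floor = np.column_stack([
        rng.uniform(0, 10, 2000),
        rng.uniform(0, 10, 2000),
        rng.normal(0, 0.01, 2000),
    ])
    rocks = rng.uniform([4, 4, 0.5], [6, 6, 2.0], size=(300, 3))
    return np.vstack([floor, rocks])


if __name__ == "__main__":
    cloud = make_synthetic_cloud()
    reduced = voxel_downsample(cloud, voxel_size=0.5)
    normal, d, inliers = ransac_plane(cloud, threshold=0.05)
    print("points before/after voxel grid:", len(cloud), len(reduced))
    print("plane normal:", normal, "inliers:", int(inliers.sum()))
```

In this sketch the points outside the fitted plane (`~inliers`) are the candidates that a clustering step such as DBSCAN would then group into individual objects.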

Point Cloud Modeling

3.2.3. 3D Reconstruction in Underground Mines

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANMS | Adaptive non-maximal suppression |
| AR | Augmented reality |
| BRIEF | Binary robust independent elementary features |
| CGAN | Conditional generative adversarial nets |
| CMVS | Clustering views for multiview stereo |
| CRF | Conditional random fields |
| DBSCAN | Density-based spatial clustering of applications with noise |
| DDA | Digital differential analyzer (Amanatides and Woo, 1987) |
| EKF | Extended Kalman filter |
| FEM | Finite element method |
| FRP | Free rigid priors |
| GLSL | OpenGL shading language |
| GMD-RDS | Gaussian mixture distribution–random down-sampling |
| ICP | Iterative closest point |
| IMU | Inertial measurement unit |
| MSCKF | Multi-state constraint Kalman filter |
| PEAC | Plane extraction in organized point clouds using agglomerative hierarchical clustering |
| VIO | Visual–inertial odometry |
| VR | Virtual reality |

References

- Moniri-Morad, A.; Pourgol-Mohammad, M.; Aghababaei, H.; Sattarvand, J. Production Capacity Insurance Considering Reliability, Availability, and Maintainability Analysis. ASCE ASME J. Risk Uncertain. Eng. Syst. A Civ. Eng. 2022, 8, 04022018. [Google Scholar] [CrossRef]

- Moniri-Morad, A.; Pourgol-Mohammad, M.; Aghababaei, H.; Sattarvand, J. Capacity-based performance measurements for loading equipment in open pit mines. J. Cent. South Univ. 2019, 6, 1672–1686. Available online: https://www.infona.pl/resource/bwmeta1.element.springer-doi-10_1007-S11771-019-4124-5 (accessed on 6 February 2024). [CrossRef]

- Mathew, R.; Hiremath, S.S. Control of Velocity-Constrained Stepper Motor-Driven Hilare Robot for Waypoint Navigation. Engineering 2018, 4, 491–499. [Google Scholar] [CrossRef]

- Dong, L.; Sun, D.; Han, G.; Li, X.; Hu, Q.; Shu, L. Velocity-Free Localization of Autonomous Driverless Vehicles in Underground Intelligent Mines. IEEE Trans. Veh. Technol. 2020, 69, 9292–9303. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Kantor, G.A.; Cheein, F.A.A. Human–robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48. Available online: https://www.sciencedirect.com/science/article/pii/S1537511017309625 (accessed on 20 October 2021). [CrossRef]

- Lichiardopol, S. A survey on teleoperation. DCT rapporten, no. 155, 2007. Available online: https://research.tue.nl/files/4419568/656592.pdf (accessed on 6 February 2024).

- Stassen, H.G.; Smets, G.J.F. Telemanipulation and telepresence. Control Eng. Pract. 1997, 5, 363–374. Available online: https://www.sciencedirect.com/science/article/pii/S0967066197000130 (accessed on 20 October 2021). [CrossRef]

- Mine Disasters, 1839–2022|NIOSH|CDC. Available online: https://wwwn.cdc.gov/NIOSH-Mining/MMWC/MineDisasters/Count (accessed on 19 January 2024).

- Mine Data Retrieval System|Mine Safety and Health Administration (MSHA). Available online: https://www.msha.gov/data-and-reports/mine-data-retrieval-system (accessed on 19 January 2024).

- Moniri-Morad, A.; Shishvan, M.S.; Aguilar, M.; Goli, M.; Sattarvand, J. Powered haulage safety, challenges, analysis, and solutions in the mining industry; a comprehensive review. Results Eng. 2024, 21, 101684. [Google Scholar] [CrossRef]

- Nielsen, C.W.; Goodrich, M.A.; Ricks, R.W. Ecological interfaces for improving mobile robot teleoperation. IEEE Trans. Robot. 2007, 23, 927–941. Available online: https://ieeexplore.ieee.org/abstract/document/4339541/ (accessed on 21 October 2021). [CrossRef]

- Meier, R.; Fong, T.; Thorpe, C.; Baur, C. Sensor fusion based user interface for vehicle teleoperation. In Field and Service Robotics; InfoScience: Dover, DE, USA, 1999; Available online: https://infoscience.epfl.ch/record/29985/files/FSR99-RM.pdf (accessed on 21 October 2021).

- Sato, R.; Kamezaki, M.; Niuchi, S.; Sugano, S.; Iwata, H. Cognitive untunneling multi-view system for teleoperators of heavy machines based on visual momentum and saliency. Autom. Constr. 2020, 110, 103047. [Google Scholar] [CrossRef]

- Marsh, E.; Dahl, J.; Pishhesari, A.K.; Sattarvand, J.; Harris, F.C. A Virtual Reality Mining Training Simulator for Proximity Detection. In Proceedings of the ITNG 2023 20th International Conference on Information Technology-New Generations, Las Vegas, NV, USA, 24 April 2023; pp. 387–393. [Google Scholar] [CrossRef]

- Ingale, A.K. Real-time 3D reconstruction techniques applied in dynamic scenes: A systematic literature review. Comput. Sci. Rev. 2021, 39, 100338. [Google Scholar] [CrossRef]

- Golparvar-Fard, M.; Peña-Mora, F.; Savarese, S. D4AR–a 4-dimensional augmented reality model for automating construction progress monitoring data collection, processing and communication. J. Inf. Technol. Constr. 2009, 14, 129–153. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.416.4584&rep=rep1&type=pdf (accessed on 25 October 2021).

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20. Available online: https://www.sciencedirect.com/science/article/pii/S1077314215001071 (accessed on 25 October 2021). [CrossRef]

- Lin, J.; Zhang, F. R3LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package. arXiv 2021, arXiv:2109.07982. [Google Scholar]

- Opiyo, S.; Zhou, J.; Mwangi, E.; Kai, W.; Sunusi, I. A Review on Teleoperation of Mobile Ground Robots: Architecture and Situation Awareness. Int. J. Control Autom. Syst. 2021, 19, 1384–1407. [Google Scholar] [CrossRef]

- XIMEA—Case Study: Remotely Operated Walking Excavator. Available online: https://www.ximea.com/en/corporate-news/excavator-remotely-operated-tele?responsivizer_template=desktop (accessed on 8 November 2021).

- Jud, D.; Kerscher, S.; Wermelinger, M.; Jelavic, E.; Egli, P.; Leemann, P.; Hottiger, G.; Hutter, M. HEAP—The autonomous walking excavator. Autom. Constr. 2021, 129, 103783. [Google Scholar] [CrossRef]

- Menzi Muck M545x Generation. Available online: https://menziusa.com/menzi-muck/ (accessed on 13 March 2024).

- XIMEA—Remotely Operated Walking Excavator: XIMEA Cameras Inside. Bauma 2019. Available online: https://www.ximea.com/en/exhibitions/excavator-munich-bauma-2019 (accessed on 8 November 2021).

- HEAP—Robotic Systems Lab|ETH Zurich. Available online: https://rsl.ethz.ch/robots-media/heap.html (accessed on 8 November 2021).

- MRTech SK|Image Processing. Available online: https://mr-technologies.com/ (accessed on 8 November 2021).

- Hutter, M.; Braungardt, T.; Grigis, F.; Hottiger, G.; Jud, D.; Katz, M.; Leemann, P.; Nemetz, P.; Peschel, J.; Preisig, J.; et al. IBEX—A tele-operation and training device for walking excavators. In Proceedings of the SSRR 2016—International Symposium on Safety, Security and Rescue Robotics, Lausanne, Switzerland, 23–27 October 2016; pp. 48–53. [Google Scholar]

- MINExpo INTERNATIONAL® 2021. Available online: https://www.minexpo.com/ (accessed on 8 November 2021).

- Immersive Technologies—Expect Results. Available online: https://www.immersivetechnologies.com/ (accessed on 8 November 2021).

- Doosan First to Use 5G for Worldwide ‘TeleOperation’|Doosan Infracore Europe. Available online: https://eu.doosanequipment.com/en/news/2019-28-03-doosan-to-use-5g-frombauma (accessed on 8 November 2021).

- Doosan First to Use 5G for Worldwide ‘TeleOperation’|Doosan Infracore Europe. Available online: https://eu.doosanequipment.com/en/news/2019-28-03-doosan-to-use-5g (accessed on 8 November 2021).

- Cat® Command|Remote Control|Cat|Caterpillar. Available online: https://www.cat.com/en_US/products/new/technology/command.html (accessed on 10 November 2021).

- Cat Command Remote Console and Station|Cat|Caterpillar. Available online: https://www.cat.com/en_US/products/new/technology/command/command/108400.html (accessed on 10 November 2021).

- Cat® Command for Loading & Excavation—Associated Terminals—YouTube. Available online: https://www.youtube.com/watch?v=sokQep1_7Gw (accessed on 10 November 2021).

- Cat Command for Underground|Cat|Caterpillar. Available online: https://www.cat.com/en_US/products/new/technology/command/command/102320.html (accessed on 10 November 2021).

- Andersen, K.; Gaab, S.J.; Sattarvand, J.; Harris, F.C. METS VR: Mining Evacuation Training Simulator in Virtual Reality for Underground Mines. Adv. Intell. Syst. Comput. 2020, 1134, 325–332. [Google Scholar] [CrossRef]

- Metashape V2 Software Package. Agisoft. 2024. Available online: https://www.agisoft.com/ (accessed on 24 January 2024).

- Xu, Y.; Stilla, U. Toward Building and Civil Infrastructure Reconstruction from Point Clouds: A Review on Data and Key Techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2857–2885. [Google Scholar] [CrossRef]

- Whitaker, R.T. A level-set approach to 3D reconstruction from range data. Int. J. Comput. Vis. 1998, 18, 203–231. Available online: https://link.springer.com/article/10.1023/A:1008036829907 (accessed on 6 February 2024). [CrossRef]

- Remondino, F.; El-Hakim, S. Image-based 3D modelling: A review. Photogramm. Rec. 2006, 21, 269–291. [Google Scholar] [CrossRef]

- De Reu, J.; De Smedt, P.; Herremans, D.; Van Meirvenne, M.; Laloo, P.; De Clercq, W. On introducing an image-based 3D reconstruction method in archaeological excavation practice. J. Archaeol. Sci. 2014, 41, 251–262. [Google Scholar] [CrossRef]

- Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the world from Internet photo collections. Int. J. Comput. Vis. 2008, 80, 189–210. [Google Scholar] [CrossRef]

- Zenmuse L2 LiDAR Sensor. DJI. 2024. Available online: https://enterprise.dji.com/zenmuse-l2 (accessed on 6 February 2024).

- LiDAR Remote Sensing and Applications—Pinliang Dong, Qi Chen—Google Books. Available online: https://books.google.com/books?hl=en&lr=&id=jXFQDwAAQBAJ&oi=fnd&pg=PP1&dq=lidar+&ots=j9QNyg8bWx&sig=LwzOQi2OiiUqRqSIYdtOqULRPOM#v=onepage&q=lidar&f=false (accessed on 20 January 2022).

- Lim, K.; Treitz, P.; Wulder, M.; St-Ongé, B.; Flood, M. LiDAR remote sensing of forest structure. Prog. Phys. Geogr. 2003, 27, 88–106. [Google Scholar] [CrossRef]

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in landslide investigations: A review. Nat. Hazards 2012, 61, 5–28. [Google Scholar] [CrossRef]

- Shahmoradi, J.; Talebi, E.; Roghanchi, P.; Hassanalian, M. A Comprehensive Review of Applications of Drone Technology in the Mining Industry. Drones 2020, 4, 34. [Google Scholar] [CrossRef]

- An Introduction to RADAR. Available online: https://helitavia.com/skolnik/Skolnik_chapter_1.pdf (accessed on 18 April 2022).

- El Natour, G.; Ait-Aider, O.; Rouveure, R.; Berry, F.; Faure, P. Toward 3D reconstruction of outdoor scenes using an MMW radar and a monocular vision sensor. Sensors 2015, 15, 25937–25967. [Google Scholar] [CrossRef]

- Safari, M.; Mashhadi, S.R.; Esmaeilzadeh, N.; Pour, A.B. Multisensor Remote Sensing of the Mountain Pass Carbonatite-Hosted REE Deposit. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS) 2023, Pasadena, CA, USA, 16–21 July 2023; pp. 3693–3695. [Google Scholar] [CrossRef]

- Endres, F.; Hess, J.; Sturm, J.; Cremers, D.; Burgard, W. 3-D Mapping with an RGB-D camera. IEEE Trans. Robot. 2014, 30, 177–187. [Google Scholar] [CrossRef]

- Da Silva Neto, J.G.; Da Lima Silva, P.J.; Figueredo, F.; Teixeira, J.M.X.N.; Teichrieb, V. Comparison of RGB-D sensors for 3D reconstruction. In Proceedings of the 2020 22nd Symposium on Virtual and Augmented Reality, SVR 2020, Porto de Galinhas, Brazil, 7–10 November 2020; pp. 252–261. [Google Scholar] [CrossRef]

- Darwish, W.; Li, W.; Tang, S.; Wu, B.; Chen, W. A Robust Calibration Method for Consumer Grade RGB-D Sensors for Precise Indoor Reconstruction. IEEE Access 2019, 7, 8824–8833. [Google Scholar] [CrossRef]

- Sensor Fusion of LiDAR and Camera—An Overview|by Navin Rahim|Medium. Available online: https://medium.com/@navin.rahim/sensor-fusion-of-lidar-and-camera-an-overview-697eb41223a3 (accessed on 29 October 2023).

- Calibrate a Monocular Camera—MATLAB & Simulink. Available online: https://www.mathworks.com/help/driving/ug/calibrate-a-monocular-camera.html (accessed on 18 April 2022).

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32. [Google Scholar] [CrossRef]

- Caselitz, T.; Steder, B.; Ruhnke, M.; Burgard, W. Monocular camera localization in 3D LiDAR maps. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems 2016, Daejeon, Republic of Korea, 9–14 October 2016; pp. 1926–1931. [Google Scholar] [CrossRef]

- Z CAM K1 Pro Cinematic VR180 Camera K2501 B&H Photo Video. Available online: https://www.bhphotovideo.com/c/product/1389667-REG/z_cam_k2501_k1_pro_cinematic_vr180.html/?ap=y&ap=y&smp=y&smp=y&smpm=ba_f2_lar&lsft=BI%3A514&gad_source=1&gclid=Cj0KCQiAr8eqBhD3ARIsAIe-buPD3axh-_L88vlywplW5WdiG8eAydVL5ma60kwnUmnKvluZ51mVUn4aAobyEALw_wcB (accessed on 12 November 2023).

- Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A comparison and evaluation of multi-view stereo reconstruction algorithms. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2006, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 519–526. [Google Scholar] [CrossRef]

- Sung, C.; Kim, P.Y. 3D terrain reconstruction of construction sites using a stereo camera. Autom. Constr. 2016, 64, 65–77. [Google Scholar] [CrossRef]

- EF 8–15mm f/4L Fisheye USM. Available online: https://www.usa.canon.com/shop/p/ef-8-15mm-f-4l-fisheye-usm?color=Black&type=New (accessed on 12 November 2023).

- Schöps, T.; Sattler, T.; Häne, C.; Pollefeys, M. Large-scale outdoor 3D reconstruction on a mobile device. Comput. Vis. Image Underst. 2017, 157, 151–166. [Google Scholar] [CrossRef]

- Battulwar, R.; Winkelmaier, G.; Valencia, J.; Naghadehi, M.Z.; Peik, B.; Abbasi, B.; Parvin, B.; Sattarvand, J. A practical methodology for generating high-resolution 3D models of open-pit slopes using UAVs: Flight path planning and optimization. Remote Sens. 2020, 12, 2283. [Google Scholar] [CrossRef]

- Battulwar, R.; Valencia, J.; Winkelmaier, G.; Parvin, B.; Sattarvand, J. High-resolution modeling of open-pit slopes using UAV and photogrammetry. In Mining Goes Digital; CRC Press: Boca Raton, FL, USA, 2019; Available online: https://www.researchgate.net/profile/Jorge-Valencia-18/publication/341192810_High-resolution_modeling_of_open-pit_slopes_using_UAV_and_photogrammetry/links/5eb2f992299bf152d6a1a603/High-resolution-modeling-of-open-pit-slopes-using-UAV-and-photogrammetry.pdf (accessed on 6 February 2024).

- Özyeşil, O.; Voroninski, V.; Basri, R.; Singer, A. A survey of structure from motion. Acta Numer. 2017, 26, 305–364. [Google Scholar] [CrossRef]

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314. [Google Scholar] [CrossRef]

- Pollefeys, M.; Van Gool, L.; Vergauwen, M.; Verbiest, F.; Cornelis, K.; Tops, J.; Koch, R. Visual Modeling with a Hand-Held Camera. Int. J. Comput. Vis. 2004, 59, 207–232. [Google Scholar] [CrossRef]

- Remondino, F.; Fraser, C. Digital camera calibration methods. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, XXXVI, 266–272. [Google Scholar] [CrossRef]

- Gao, Z.; Gao, Y.; Su, Y.; Liu, Y.; Fang, Z.; Wang, Y.; Zhang, Q. Stereo camera calibration for large field of view digital image correlation using zoom lens. Measurement 2021, 185, 109999. [Google Scholar] [CrossRef]

- Kwon, H.; Park, J.; Kak, A.C. A new approach for active stereo camera calibration. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3180–3185. [Google Scholar] [CrossRef]

- Feng, W.; Su, Z.; Han, Y.; Liu, H.; Yu, Q.; Liu, S.; Zhang, D. Inertial measurement unit aided extrinsic parameters calibration for stereo vision systems. Opt. Lasers Eng. 2020, 134, 106252. Available online: https://www.sciencedirect.com/science/article/pii/S014381662030302X (accessed on 25 December 2021). [CrossRef]

- Szeliski, R. Computer Vision: Algorithms and Applications. 2010. Available online: https://books.google.com/books?hl=en&lr=&id=bXzAlkODwa8C&oi=fnd&pg=PR4&dq=Computer+Vision+Algorithms+and+Applications+by+Richard+Szeliski&ots=g--35-pABK&sig=wswbPomq55aj9B3o5ya52kIScu4 (accessed on 1 January 2022).

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Morel, J.M.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469. [Google Scholar] [CrossRef]

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Harris, C.; Stephens, M. A combined corner and edge detector. Alvey Vis. Conf. 1988, 15, 10–5244. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.434.4816&rep=rep1&type=pdf (accessed on 1 January 2022).

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 3951 LNCS, pp. 430–443. [Google Scholar] [CrossRef]

- Brown, M.; Szeliski, R.; Winder, S. Multi-image matching using multi-scale oriented patches. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Available online: https://ieeexplore.ieee.org/abstract/document/1467310/ (accessed on 1 January 2022).

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 3951 LNCS, pp. 404–417. [Google Scholar] [CrossRef]

- Alahi, A.; Ortiz, R.; Vandergheynst, P. FREAK: Fast retina keypoint. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 510–517. [Google Scholar] [CrossRef]

- Xu, C.; Wu, B.; Wang, Z.; Zhan, W.; Vajda, P.; Keutzer, K.; Tomizuka, M. SqueezeSegV3: Spatially-Adaptive Convolution for Efficient Point-Cloud Segmentation. In Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 12373 LNCS, pp. 1–19. [Google Scholar]

- Li, L.; Yang, F.; Zhu, H.; Li, D.; Li, Y.; Tang, L. An Improved RANSAC for 3D Point Cloud Plane Segmentation Based on Normal Distribution Transformation Cells. Remote Sens. 2017, 9, 433. [Google Scholar] [CrossRef]

- Wang, Q.; Kim, M.K. Applications of 3D point cloud data in the construction industry: A fifteen-year review from 2004 to 2018. Adv. Eng. Inform. 2019, 39, 306–319. [Google Scholar] [CrossRef]

- Chen, J.; Kira, Z.; Cho, Y.K. Deep Learning Approach to Point Cloud Scene Understanding for Automated Scan to 3D Reconstruction. J. Comput. Civ. Eng. 2019, 33, 04019027. [Google Scholar] [CrossRef]

- Liao, Y.; Donné, S.; Geiger, A. Deep Marching Cubes: Learning Explicit Surface Representations. 2018, pp. 2916–2925. Available online: https://avg.is.tue.mpg.de/research (accessed on 22 October 2023).

- Murez, Z.; van As, T.; Bartolozzi, J.; Sinha, A.; Badrinarayanan, V.; Rabinovich, A. Atlas: End-to-End 3D Scene Reconstruction from Posed Images. In Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 12352 LNCS, pp. 414–431. [Google Scholar]

- Choi, S.; Zhou, Q.Y.; Koltun, V. Robust reconstruction of indoor scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 5556–5565. [Google Scholar] [CrossRef]

- Naseer, M.; Khan, S.; Porikli, F. Indoor Scene Understanding in 2.5/3D for Autonomous Agents: A Survey. IEEE Access 2019, 7, 1859–1887. [Google Scholar] [CrossRef]

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef]

- Whelan, T.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J.; Leutenegger, S. ElasticFusion: Real-time dense SLAM and light source estimation. Int. J. Robot. Res. 2016, 35, 1697–1716. [Google Scholar] [CrossRef]

- Lu, W.; Wan, G.; Zhou, Y.; Fu, X.; Yuan, P.; Song, S. DeepVCP: An End-to-End Deep Neural Network for Point Cloud Registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 12–21. [Google Scholar]

- Cavallari, T.; Golodetz, S.; Lord, N.A.; Valentin, J.; Prisacariu, V.A.; Di Stefano, L.; Torr, P.H. Real-Time RGB-D Camera Pose Estimation in Novel Scenes Using a Relocalisation Cascade. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2465–2477. [Google Scholar] [CrossRef]

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3D.net: A new Large-scale Point Cloud Classification Benchmark. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 91–98. [Google Scholar] [CrossRef]

- Brasoveanu, A.; Moodie, M.; Agrawal, R. BundleFusion: Real-time Globally Consistent 3D Reconstruction using On-the-fly Surface Re-integration. In CEUR Workshop Proceedings; CEUR-WS: Aachen, Germany, 2017; pp. 1–9. [Google Scholar]

- Petit, B.; Lesage, J.D.; Menier, C.; Allard, J.; Franco, J.S.; Raffin, B.; Boyer, E.; Faure, F. Multicamera real-time 3D modeling for telepresence and remote collaboration. Int. J. Digit. Multimed. Broadcast. 2010, 2010, 247108. [Google Scholar] [CrossRef]

- Zhao, C.; Sun, L.; Stolkin, R. A fully end-to-end deep learning approach for real-time simultaneous 3D reconstruction and material recognition. In Proceedings of the 2017 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; Available online: https://ieeexplore.ieee.org/abstract/document/8023499/?casa_token=avAyNhc_PMYAAAAA:ev-1i0fyy2rgQXugb03VvE6MQuOZBcEBrqZttzL6TA70czwQtvp3GWgRZwPFjyOAAXTsbQbO (accessed on 7 October 2023).

- Lin, J.; Wang, Y.; Sun, H. A Feature-adaptive Subdivision Method for Real-time 3D Reconstruction of Repeated Topology Surfaces. 3D Res. 2017, 8, 6. [Google Scholar] [CrossRef]

- Lin, J.H.; Wang, L.; Wang, Y.J. A Hierarchical Optimization Algorithm Based on GPU for Real-Time 3D Reconstruction. 3D Res. 2017, 8, 16. [Google Scholar] [CrossRef]

- Agudo, A.; Moreno-Noguer, F.; Calvo, B.; Montiel, J.M.M. Real-time 3D reconstruction of non-rigid shapes with a single moving camera. Comput. Vis. Image Underst. 2016, 153, 37–54. [Google Scholar] [CrossRef]

- Xu, Y.; Dong, P.; Lu, L.; Dong, J.; Qi, L. Combining depth-estimation-based multi-spectral photometric stereo and SLAM for real-time dense 3D reconstruction. In ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2018; pp. 81–85. [Google Scholar] [CrossRef]

- Runz, M.; Buffier, M.; Agapito, L. MaskFusion: Real-Time Recognition, Tracking and Reconstruction of Multiple Moving Objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2018, Munich, Germany, 16–20 October 2018; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019; pp. 10–20. [Google Scholar] [CrossRef]

- Lu, F.; Zhou, B.; Zhang, Y.; Zhao, Q. Real-time 3D scene reconstruction with dynamically moving object using a single depth camera. Vis. Comput. 2018, 34, 753–763. [Google Scholar] [CrossRef]

- Stotko, P.; Krumpen, S.; Hullin, M.B.; Weinmann, M.; Klein, R. SLAMCast: Large-Scale, Real-Time 3D Reconstruction and Streaming for Immersive Multi-Client Live Telepresence. IEEE Trans. Vis. Comput. Graph. 2019, 25, 2102–2112. [Google Scholar] [CrossRef]

- Laidlow, T.; Czarnowski, J.; Leutenegger, S. DeepFusion: Real-time dense 3D reconstruction for monocular SLAM using single-view depth and gradient predictions. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; Available online: https://ieeexplore.ieee.org/abstract/document/8793527/ (accessed on 20 April 2022).

- Li, C.; Yu, L.; Fei, S. Large-Scale, Real-Time 3D Scene Reconstruction Using Visual and IMU Sensors. IEEE Sens. J. 2020, 20, 5597–5605. [Google Scholar] [CrossRef]

- Gong, M.; Li, R.; Shi, Y.; Zhao, P. Design of a Quadrotor Navigation Platform Based on Dense Reconstruction. In Proceedings of the 2020 Chinese Automation Congress, CAC 2020, Shanghai, China, 6–8 November 2020; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2020; pp. 7262–7267. [Google Scholar] [CrossRef]

- Zhang, S.; Wang, T.; Li, G.; Dong, J.; Yu, H. MARS: Parallelism-based metrically accurate 3D reconstruction system in real-time. J. Real-Time Image Process. 2021, 18, 393–405. [Google Scholar] [CrossRef]

- He, Y.; Zheng, S.; Zhu, F.; Huang, X. Real-time 3D reconstruction of thin surface based on laser line scanner. Sensors 2020, 20, 534. [Google Scholar] [CrossRef]

- Fu, Y.; Yan, Q.; Liao, J.; Chow, A.L.H.; Xiao, C. Real-time dense 3D reconstruction and camera tracking via embedded planes representation. Vis. Comput. 2020, 36, 2215–2226. [Google Scholar] [CrossRef]

- Fei, C.; Ma, Y.; Jiang, S.; Liu, J.; Sun, B.; Li, Y.; Gu, Y.; Zhao, X.; Fang, J. Real-time dynamic 3D shape reconstruction with SWIR InGaAs camera. Sensors 2020, 20, 521. [Google Scholar] [CrossRef] [PubMed]

- Menini, D.; Kumar, S.; Oswald, M.R.; Sandström, E.; Sminchisescu, C.; Van Gool, L. A real-time online learning framework for joint 3d reconstruction and semantic segmentation of indoor scenes. IEEE Robot. Autom. Lett. 2022, 7, 1332–1339. Available online: https://ieeexplore.ieee.org/abstract/document/9664227/ (accessed on 20 April 2022). [CrossRef]

- Matsuki, H.; Scona, R.; Czarnowski, J.; Davison, A.J. CodeMapping: Real-Time Dense Mapping for Sparse SLAM using Compact Scene Representations. IEEE Robot. Autom. Lett. 2021, 6, 7105–7112. [Google Scholar] [CrossRef]

- Jia, Q.; Chang, L.; Qiang, B.; Zhang, S.; Xie, W.; Yang, X.; Sun, Y.; Yang, M. Real-Time 3D Reconstruction Method Based on Monocular Vision. Sensors 2021, 21, 5909. [Google Scholar] [CrossRef] [PubMed]

- Yu, S.; Chen, X.; Wang, S.; Pu, L.; Wu, D. An Edge Computing-Based Photo Crowdsourcing Framework for Real-Time 3D Reconstruction. IEEE Trans. Mob. Comput. 2022, 21, 421–432. [Google Scholar] [CrossRef]

- Sun, J.; Xie, Y.; Chen, L.; Zhou, X.; Bao, H. NeuralRecon: Real-Time Coherent 3D Reconstruction from Monocular Video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 15598–15607. [Google Scholar]

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the UIST’11—Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 559–568. [Google Scholar] [CrossRef]

- Keller, M.; Lefloch, D.; Lambers, M.; Izadi, S.; Weyrich, T.; Kolb, A. Real-time 3D reconstruction in dynamic scenes using point-based fusion. In Proceedings of the 2013 International Conference on 3D Vision, 3DV 2013, Seattle, WA, USA, 29 June–1 July 2013; pp. 1–8. [Google Scholar] [CrossRef]

- Nießner, M.; Zollhöfer, M.; Izadi, S.; Stamminger, M. Real-time 3D reconstruction at scale using voxel hashing. ACM Trans. Graph. (TOG) 2013, 32, 1–11. [Google Scholar] [CrossRef]

- Kim, H.; Leutenegger, S.; Davison, A.J. Real-time 3D reconstruction and 6-DoF tracking with an event camera. In Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 9910 LNCS, pp. 349–364. [Google Scholar]

- Geiger, A.; Ziegler, J.; Stiller, C. StereoScan: Dense 3d reconstruction in real-time. In Proceedings of the IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011; pp. 963–968. [Google Scholar] [CrossRef]

- Geiger, A.; Roser, M.; Urtasun, R. Efficient large-scale stereo matching. In Proceedings of the 10th Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Volume 6492 LNCS, pp. 25–38. [Google Scholar]

- Pradeep, V.; Rhemann, C.; Izadi, S.; Zach, C.; Bleyer, M.; Bathiche, S. MonoFusion: Real-time 3D reconstruction of small scenes with a single web camera. In Proceedings of the 2013 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2013, Adelaide, Australia, 1–4 October 2013; pp. 83–88. [Google Scholar] [CrossRef]

- Zeng, M.; Zhao, F.; Zheng, J.; Liu, X. Octree-based fusion for realtime 3D reconstruction. Graph. Models 2013, 75, 126–136. [Google Scholar] [CrossRef]

- Kim, H.K.; Park, J.; Choi, Y.; Choe, M. Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Appl. Ergon. 2018, 69, 66–73. [Google Scholar] [CrossRef]

- Gavgani, A.M.; Walker, F.R.; Hodgson, D.M.; Nalivaiko, E. A comparative study of cybersickness during exposure to virtual reality and ‘classic’ motion sickness: Are they different? J. Appl. Physiol. 2018, 125, 1670–1680. [Google Scholar] [CrossRef]

- Chang, E.; Kim, H.T.; Yoo, B. Virtual Reality Sickness: A Review of Causes and Measurements. Int. J. Hum. Comput. Interact. 2020, 36, 1658–1682. [Google Scholar] [CrossRef]

- Saredakis, D.; Szpak, A.; Birckhead, B.; Keage, H.A.D.; Rizzo, A.; Loetscher, T. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 2020, 14, 512264. [Google Scholar] [CrossRef]

| Sensor | Range | Accuracy | Environment Suitability | Real-Time Capability | Robustness in Dusty/Noisy Environments |
|---|---|---|---|---|---|
| Lidar | Long (up to 300 m+) | High (mm level) | Underground and surface | Moderate to high | Moderate to high |
| Radar | Medium to long | Moderate to high | Underground and surface | High | High |
| RGB-D | Short to medium | Moderate to high | Underground | High | Low to moderate |
| Monocular | Short | Low to moderate | Underground and surface | High | Low |
| Stereo | Short to medium | Moderate to high | Underground and surface | Moderate to high | Moderate to high |
| Fisheye | Wide field of view | Low to moderate | Underground and surface | High | Low to moderate |

| Feature Detector | Application: Object Recognition | Application: Image Matching | Application: Image Stitching | Application: Keypoint Detection | Process Speed: High | Process Speed: Mod * | Robustness to Scale: Robust | Robustness to Scale: Limited |
|---|---|---|---|---|---|---|---|---|
| FAST | - | - | ✓ | ✓ | ✓ | - | - | ✓ |
| Harris | ✓ | ✓ | - | - | ✓ | - | - | ✓ |
| ASIFT | ✓ | - | ✓ | - | - | ✓ | ✓ | - |
| SURF | ✓ | ✓ | - | - | ✓ | - | ✓ | - |
| SIFT | ✓ | - | ✓ | - | - | ✓ | ✓ | - |

| Feature Matcher | Process Speed: High | Process Speed: Med | Robustness to Scale: Mod | Robustness to Scale: Limited |
|---|---|---|---|---|
| FLANN | ✓ | - | - | ✓ |
| ANN | ✓ | - | - | ✓ |
| RANSAC | - | ✓ | ✓ | - |

| Algorithm | Scalability | Accuracy | Computational Efficiency | Applicability | Robustness |
|---|---|---|---|---|---|
| CMVS * | High | High in complex scenes | Efficient | Large-scale structures | Complex scenes |
| PMVS ** | Depends on patch size | High in challenging scenes | Depends on patch size | Complex geometry and occlusions | Occlusions |
| SGM *** | Moderate | High in structured environments | Moderate (depends on scene size) | Versatile | Generally robust |

| Algorithm | Accuracy | Robustness | Processing Speed | Common Applications |
|---|---|---|---|---|
| DBSCAN * | High in dense clusters | Robust to noise and outliers | Depends on number of points and density | Object recognition |
| K-means | Struggles with non-spherical or unevenly sized clusters | Sensitive to outliers | Fast, even for dense points | Clustering spherical structures |
| Hough Transform | High in detecting geometric shapes | Robust to noise | Computationally expensive | Line detection, circle detection |
| RANSAC ** | High in model fitting | Robust to outliers | Moderate to slow | Fitting models to geometric structures |
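
The contrast the table draws between outlier-sensitive and outlier-robust fitting can be illustrated with a minimal RANSAC sketch. The example below is not taken from any of the reviewed studies: it fits a 2D line instead of a 3D plane to keep the code short, and the function names, data points, threshold, and iteration count are all hypothetical choices made for illustration. An ordinary least-squares fit is included only as a point of comparison.

```python
import random


def fit_line(p, q):
    """Return (slope, intercept) of the line through two points, or None if vertical."""
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        return None
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def ransac_line(points, iterations=200, threshold=0.1, seed=0):
    """Keep the two-point line hypothesis supported by the most inliers.

    A point counts as an inlier when its vertical residual is within `threshold`.
    """
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = fit_line(*rng.sample(points, 2))
        if model is None:
            continue
        slope, intercept = model
        inliers = [(x, y) for x, y in points
                   if abs(y - (slope * x + intercept)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


def least_squares_line(points):
    """Ordinary least-squares fit over all points, outliers included."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    return slope, (sy - slope * sx) / n


# Hypothetical data: 20 points on y = 2x + 1 plus 4 gross outliers.
inlier_points = [(x, 2 * x + 1) for x in range(20)]
outlier_points = [(0, 50), (1, 48), (18, -30), (19, -35)]
points = inlier_points + outlier_points

(ransac_slope, ransac_intercept), inliers = ransac_line(points)
ls_slope, ls_intercept = least_squares_line(points)
print(f"RANSAC: slope={ransac_slope:.3f}, intercept={ransac_intercept:.3f}, "
      f"inliers={len(inliers)}")
print(f"Least squares: slope={ls_slope:.3f}, intercept={ls_intercept:.3f}")
```

In this toy setting the consensus step lets RANSAC ignore the four outliers, whereas the least-squares slope is pulled far from the true value. The repeated hypothesize-and-verify loop is also why the table rates its processing speed as moderate to slow.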

| Algorithm | Accuracy | Robustness to | Processing Speed | Common Applications |
|---|---|---|---|---|
| TSDF * | High | Noisy input | Computationally intensive | 3D reconstruction in structured environments |
| Marching Cubes | High for a wide range of surfaces | Complex shapes and topologies | Depends on voxel resolution | Terrain 3D modeling |
| Ball Pivoting | High for intricate structures | Noisy data | Depends on point cloud density | 3D reconstruction of irregular surfaces |
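
To make the TSDF entry more concrete, the following sketch shows why averaging truncated signed distances tends to suppress sensor noise. It is a deliberately simplified, one-dimensional illustration along a single camera ray, assuming a running average with unit weights in the style commonly associated with volumetric fusion. The function names, voxel size, truncation distance, and depth readings are hypothetical and do not come from the reviewed papers.

```python
def fuse_depths(depths, voxel_size=0.1, num_voxels=41, truncation=0.3):
    """Fuse depth readings along one ray into a 1D TSDF.

    Returns (tsdf, weight) lists indexed by voxel.
    """
    tsdf = [0.0] * num_voxels
    weight = [0] * num_voxels
    for depth in depths:
        for i in range(num_voxels):
            sdf = depth - i * voxel_size  # positive in front of the surface
            if sdf < -truncation:
                continue  # far behind the surface: treated as unobserved
            value = min(1.0, sdf / truncation)  # truncate free space to +1
            # Running average of all observations of this voxel so far.
            tsdf[i] = (weight[i] * tsdf[i] + value) / (weight[i] + 1)
            weight[i] += 1
    return tsdf, weight


def extract_surface(tsdf, weight, voxel_size=0.1):
    """Locate the zero crossing by linear interpolation between neighboring voxels."""
    for i in range(len(tsdf) - 1):
        if weight[i] and weight[i + 1] and tsdf[i] > 0.0 >= tsdf[i + 1]:
            fraction = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return (i + fraction) * voxel_size
    return None


# Hypothetical noisy readings of a surface about 2 m away (mean 2.004 m).
noisy_depths = [1.98, 2.03, 2.01, 1.97, 2.03]
tsdf, weight = fuse_depths(noisy_depths)
surface = extract_surface(tsdf, weight)
print(f"estimated surface depth: {surface:.3f}")
```

Within the truncation band the stored value is linear in the measured depth, so the interpolated zero crossing lands at the mean of the readings. A full 3D volume repeats this update for every voxel and every frame, which is consistent with the table describing the method as computationally intensive.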

| Author | Input Type: RGB | Input Type: RGB-D | Input Type: Other | Method: SLAM | Method: Volumetric | Method: Sparse | Method: Other | Scene: Indoor | Scene: Outdoor | Scalability: Small | Scalability: Large | Robustness to Dynamic Scenes/Objects: Robust | Robustness to Dynamic Scenes/Objects: Limited | Robustness to Deformable Objects: Robust | Robustness to Deformable Objects: Limited | Algorithms/Technique |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A. Brasoveanu et al. [93] | - | ✓ | - | - | ✓ | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | End-to-end, SIFT |
| B. Petit et al. [94] | ✓ | - | - | - | - | ✓ | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | EPVH |
| C. Zhao et al. [95] | - | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | CRF, CRF-RNN (recognition) |
| J. Lin et al. [96] | - | ✓ | - | ✓ | - | ✓ | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | Optimized Feature-adaptive |
| J. hua Lin et al. [97] | ✓ | - | - | ✓ | ✓ | - | Hybrid | ✓ | - | - | ✓ | - | ✓ | - | ✓ | CPU-to-GPU processing |
| A. Agudo et al. [98] | ✓ | - | - | - | - | ✓ | - | ✓ | ✓ | ✓ | - | - | ✓ | ✓ | - | EKF-FEM-FRP |
| Y. Xu et al. [99] | ✓ | - | - | ✓ | - | - | - | ✓ | ✓ | ✓ | - | - | ✓ | - | - | cGAN |
| M. Runz et al. [100] | - | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | Multi-model SLAM-based, Mask-RCNN |
| F. Lu et al. [101] | - | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | 6D pose prediction, GLSL |
| P. Stotko et al. [102] | - | ✓ | - | SLAMCast | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | Improved voxel block hashing |
| T. Laidlow et al. [103] | ✓ | - | - | CNN-SLAM | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | Improved U-Net |
| C. Li et al. [104] | ✓ | - | RGB+IMU | - | ✓ | - | - | ✓ | ✓ | ✓ | - | - | ✓ | - | ✓ | MSCKF, EKF |
| M. Gong et al. [105] | ✓ | - | Lidar | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | ✓ | - | ✓ | SLAM |
| S. Zhang et al. [106] | - | ✓ | - | - | - | - | Hybrid | ✓ | - | - | ✓ | - | ✓ | - | ✓ | Pyramid FAST, Rotated BRIEF, GMD-RDS, improved RANSAC |
| Y. He et al. [107] | - | - | Lidar | - | ✓ | - | - | ✓ | - | ✓ | - | - | ✓ | - | ✓ | Improved voxel block hashing |
| Y. Fu et al. [108] | - | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | PEAC, AHC, ICP |
| C. Fei et al. [109] | - | - | SWIR | - | - | - | FTP | ✓ | - | ✓ | - | ✓ | - | ✓ | - | Improved FTP |
| D. Menini et al. [110] | - | ✓ | - | - | - | - | NN | ✓ | - | - | ✓ | - | ✓ | - | ✓ | AdapNet++, SGD optimizer |
| H. Matsuki et al. [111] | - | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | ✓ | Sparse SLAM |
| Q. Jia et al. [112] | - | ✓ | - | - | - | - | YOLACT++ | ✓ | - | ✓ | - | - | ✓ | - | ✓ | YOLACT++, BCC-Drop, VJTR |
| S. Yu et al. [113] | ✓ | - | - | - | - | - | EC-PCS | - | ✓ | - | ✓ | - | ✓ | - | ✓ | Crowdsourcing (EC-PCS) |
| J. Sun et al. [114] | - | ✓ | - | ✓ | ✓ | - | Hybrid | ✓ | - | - | ✓ | - | ✓ | - | ✓ | FBV, GRU Fusion, ScanNet |
| S. Izadi et al. [115] | - | ✓ | - | - | ✓ | - | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | SLAM, TSDF, ICP |
| M. Keller et al. [116] | - | ✓ | - | - | ✓ | - | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | ICP |
| M. Niesner et al. [117] | - | ✓ | - | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | ✓ | - | ✓ | TSDF, ICP, Voxel Hashing, DDA |
| H. Kim et al. [118] | ✓ | - | - | ✓ | - | - | - | ✓ | ✓ | - | ✓ | ✓ | - | - | ✓ | EKF |
| A. Geiger et al. [119] | ✓ | - | - | - | - | ✓ | - | ✓ | ✓ | - | ✓ | ✓ | - | ✓ | - | SAD, NMS, KF, RANSAC, ELAS [120] |
| V. Pradeep et al. [121] | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ | - | - | ✓ | - | ✓ | ZNCC, RANSAC, SVD, FAST, SAD, SDF |
| M. Zeng et al. [122] | - | ✓ | - | - | ✓ | - | - | ✓ | - | - | ✓ | ✓ | - | - | ✓ | TSDF, ICP |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kamran-Pishhesari, A.; Moniri-Morad, A.; Sattarvand, J. Applications of 3D Reconstruction in Virtual Reality-Based Teleoperation: A Review in the Mining Industry. Technologies 2024, 12, 40. https://doi.org/10.3390/technologies12030040
