Abstract

Newborns cry intensely, and most parents struggle to understand why, because the baby cannot verbally express its needs. This makes it difficult for parents to know whether their child has an unmet need or a health issue. To address this problem, we present an embedded solution based on a Raspberry Pi. The module uses audio analysis techniques to capture, analyze, classify, and remotely monitor a baby’s cries. These techniques rely on prosodic and cepstral features, such as Mel frequency cepstral coefficients (MFCCs), which can help differentiate the reason behind a baby’s cry, such as hunger, stomach pain, or discomfort. A machine learning model was trained to predict the reason from these audio features. The embedded system includes a microphone to capture cries in real time and a display screen to show the predicted reason. In addition, the system sends the collected data to a web server for storage, enabling remote monitoring and more detailed data analysis. A mobile application has also been developed to notify parents in real time of why their baby is crying. This application enables parents to respond quickly and efficiently to their infant’s needs, even when they are not nearby.

1. Introduction

Infant communication relies primarily on crying, a natural means of signaling needs or discomfort. However, because babies cannot express their needs verbally, parents often must guess the reasons behind their cries. This can delay the response to the infant’s needs, causing stress for parents and prolonged discomfort for the child. Technology can help identify the reasons for crying more accurately, which requires distinguishing between cries with distinct meanings on the basis of their auditory features. Such a capability can help interpret a baby’s needs and suggest appropriate ways to soothe the child. It can also reduce stress for parents and caregivers by helping them avoid misinterpreting their baby’s cries, and it may help prevent mistreatment and neglect of infants. Furthermore, analyzing a baby’s cries offers a non-invasive way to assess their health. In this context, an automatic infant cry classification system can assist medical staff in monitoring a baby’s health and help new parents understand their baby’s needs from their cries.

Previous studies in this field have explored various techniques for classifying infant cries, using innovative approaches to improve the accuracy and robustness of detection systems. For instance, Maghfira et al. [1] proposed a CNN-RNN model that captures the acoustic and temporal features of cries, achieving reliable accuracy in classifying cries by need and emotion. Ji et al. [2] conducted an in-depth review of techniques in this field, covering acoustic features (MFCCs, spectrograms) and neural networks (CNNs and RNNs), and showed their effectiveness in identifying infant needs and pathological cries. Other studies have focused on improving the accuracy of pathological cry classification. Patil et al. [3] applied constant Q cepstral coefficients (CQCCs) with Gaussian mixture models (GMMs), reporting reliable accuracy in distinguishing between normal and pathological cries. To increase model robustness across datasets, Kachhi et al. [4] applied data augmentation to MFCC and CQCC features, improving accuracy in cross-dataset scenarios. More recently, Charola et al. [5] used Whisper Encoder features, which outperformed MFCCs in classifying pathological cries with CNNs and Bi-LSTM networks. In parallel, Singh et al. [6] compared CNN and Transformer models for cry classification and found that Transformers outperformed CNNs, especially with data augmentation. Finally, Kristian et al. [7] combined multimodal autoencoders to integrate acoustic and facial expression data, improving pain detection and achieving high F1 scores.

However, despite this progress, challenges remain. One of the main obstacles is the variability of acoustic characteristics from one baby to another, as well as variations in crying caused by the environment. It is therefore necessary to develop integrated, practical systems that can handle these variations while offering high classification accuracy and fast response.

This paper presents an embedded solution for real-time classification of infant crying, composed of a Raspberry Pi, a microphone to record the infant’s cries, a speaker to provide audio feedback to parents, and an OLED display to show the predicted reason for crying. The system combines audio signal processing with machine learning models to classify cries by their cause. In addition, a mobile application alerts parents in real time to the cause of their infant’s crying, helping them respond quickly to their baby’s needs when they are not present. The result is an integrated, cost-effective, and practical system for monitoring the causes of a baby’s crying, helping parents soothe the infant and reducing parental stress.

2. Materials and Methods

2.1. System Architecture

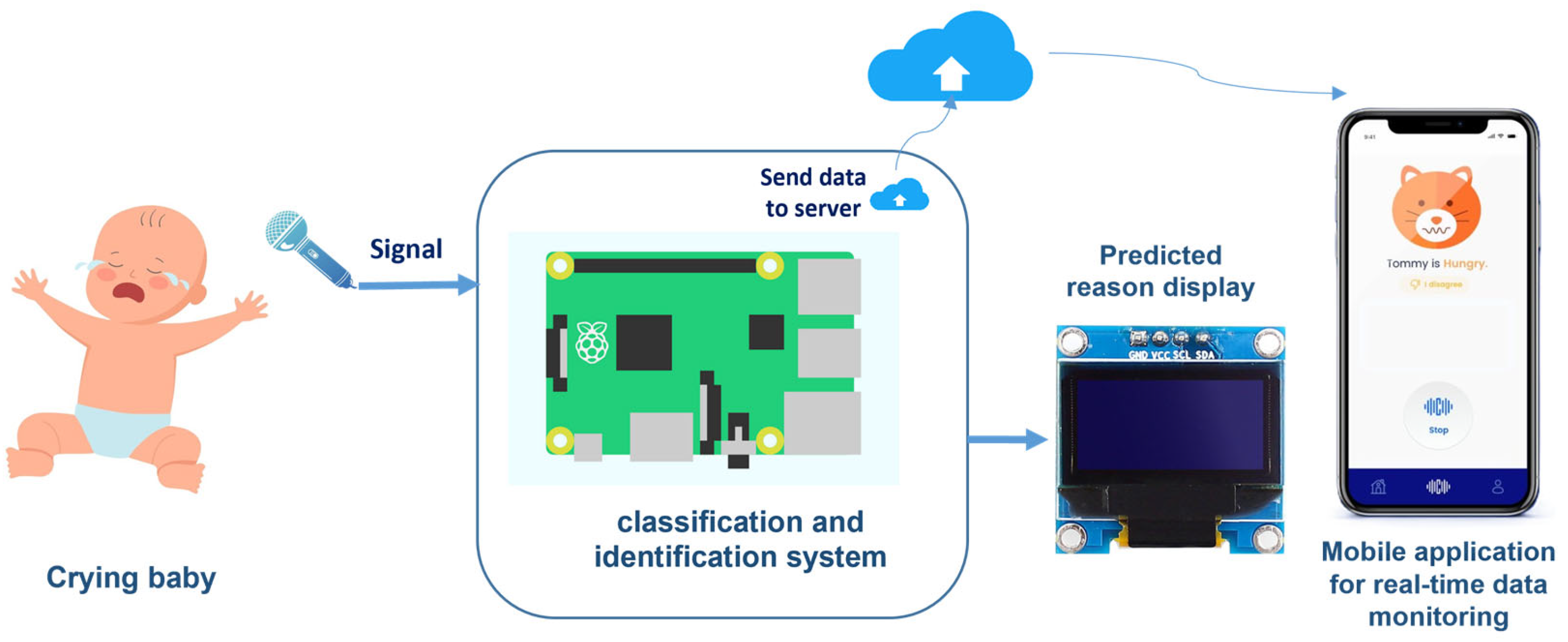

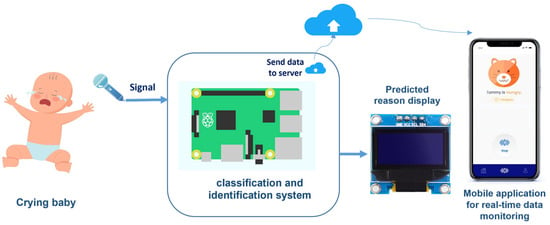

This article presents a novel approach to analyzing and managing infant crying, emphasizing its importance as an indicator of an infant’s needs and health status while addressing potential risks. The proposed solution is energy-efficient and integrates multiple stages and systems (Figure 1):

Figure 1.

The architecture of our intelligent system.

- Acquisition block: The acquisition system consists of a microphone and a temperature sensor. The microphone signal is recorded as a waveform audio format (WAV) file with a sampling frequency of 48 kHz and a resolution of 24 bits, then transmitted to the classification and identification block.

- Classification and identification block: This block uses audio analysis and machine learning techniques to filter and process the signals picked up by the microphone and to identify the reasons for crying, such as hunger, stomach pain, or discomfort. The identified reason and the temperature are then displayed locally on the OLED display next to the baby and transmitted to a web server, which feeds the parents’ mobile app and manages notifications.

- Supervision system: This is an intelligent mobile application that enables parents to monitor and interpret the baby’s needs remotely, with a notification system. In addition, analysis of the baby’s crying history enables an assessment of its state of health.
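As a minimal sketch of the acquisition format stated above (WAV, 48 kHz, 24-bit), the following Python code writes and reads 24-bit PCM using the standard-library `wave` module and NumPy. The mono channel count, the float-to-integer scaling, and all helper names are our assumptions; the paper does not describe its recording code.

```python
import io
import wave

import numpy as np

SAMPLE_RATE_HZ = 48_000   # sampling frequency stated for the acquisition block
SAMPLE_WIDTH_BYTES = 3    # 24-bit resolution
FULL_SCALE = 2**23 - 1    # largest positive 24-bit sample value


def float_to_pcm24(samples: np.ndarray) -> bytes:
    """Convert float samples in [-1, 1] to little-endian signed 24-bit PCM bytes."""
    ints = np.round(np.clip(samples, -1.0, 1.0) * FULL_SCALE).astype("<i4")
    # Each little-endian int32 is 4 bytes; its low 3 bytes hold the 24-bit two's-complement value.
    return ints.view(np.uint8).reshape(-1, 4)[:, :3].tobytes()


def pcm24_to_float(raw: bytes) -> np.ndarray:
    """Inverse of float_to_pcm24: unpack 3-byte samples and sign-extend them."""
    b = np.frombuffer(raw, dtype=np.uint8).reshape(-1, 3).astype(np.int32)
    ints = b[:, 0] | (b[:, 1] << 8) | (b[:, 2] << 16)
    ints = np.where(ints >= 2**23, ints - 2**24, ints)  # restore negative values
    return ints / FULL_SCALE


def write_wav24(target, samples: np.ndarray) -> None:
    """Write mono 48 kHz / 24-bit audio to a file path (str) or a binary file-like object."""
    with wave.open(target, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(SAMPLE_WIDTH_BYTES)
        wf.setframerate(SAMPLE_RATE_HZ)
        wf.writeframes(float_to_pcm24(samples))


def read_wav24(source):
    """Return (sample_rate, float samples) from a 24-bit mono WAV file."""
    with wave.open(source, "rb") as wf:
        if wf.getsampwidth() != SAMPLE_WIDTH_BYTES:
            raise ValueError("expected 24-bit samples")
        rate = wf.getframerate()
        raw = wf.readframes(wf.getnframes())
    return rate, pcm24_to_float(raw)


def roundtrip_in_memory(samples: np.ndarray):
    """Write then read a clip through an in-memory buffer (handy for quick checks)."""
    buf = io.BytesIO()
    write_wav24(buf, samples)
    buf.seek(0)
    return read_wav24(buf)
```

At this resolution, one second of mono audio occupies 48,000 × 3 = 144,000 bytes of sample data, and the round-trip quantization error is below 1/(2^23 − 1).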

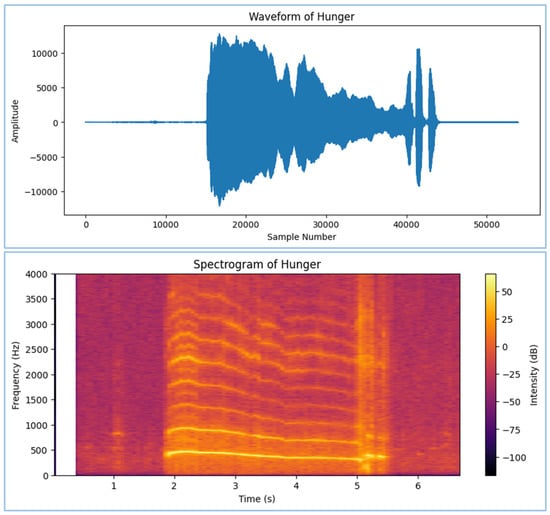

2.2. Infant’s Cry Characteristics

Infant crying is a non-stationary signal, i.e., its properties, such as the fundamental frequency (F0) and amplitude, vary over time. The signal is often quasi-periodic, with harmonic segments produced by the baby’s voice interspersed with periods of silence and breathing noises. The fundamental frequency of crying typically ranges between 200 and 600 Hz, with harmonics reaching up to 3000 Hz, which provide additional information on the intensity and nature of the cry [8]. The amplitude of the cry varies considerably with the baby’s emotional state: louder, higher-amplitude cries often indicate greater distress, such as hunger or pain. Visually, the waveform of a cry shows a succession of peaks corresponding to vocal sounds, alternating with silent sections, a quasi-periodic structure typical of human vocal signals.
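The F0 range above suggests a simple way to estimate the pitch of a voiced cry frame. The sketch below uses one common method, an autocorrelation peak search restricted to lags corresponding to 200 to 600 Hz; the frame length, the function name, and the choice of method are our assumptions, since the paper does not specify how F0 was measured.

```python
from typing import Optional

import numpy as np


def estimate_f0(frame: np.ndarray, sample_rate: int,
                fmin: float = 200.0, fmax: float = 600.0) -> Optional[float]:
    """Estimate F0 (Hz) of one voiced frame from its autocorrelation peak.

    Only lags corresponding to fmin..fmax are searched, mirroring the
    200-600 Hz range typically reported for infant cries. The frame should
    be longer than sample_rate / fmin samples.
    """
    frame = frame - np.mean(frame)                                  # remove DC offset
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]   # lags 0..N-1
    if ac[0] <= 0:                                                  # silent frame: no pitch
        return None
    lag_min = int(sample_rate // fmax)                              # shortest period considered
    lag_max = min(int(sample_rate // fmin), len(ac) - 1)            # longest period considered
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sample_rate / best_lag
```

For example, a 40 ms frame (1920 samples at 48 kHz) of a 400 Hz tone with an 800 Hz harmonic peaks at a lag of 120 samples, giving 48000 / 120 = 400 Hz.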

Table 1 shows the different types of baby crying, their characteristics, and the associated sounds.

Table 1.

Baby cry types and their characteristics [8].

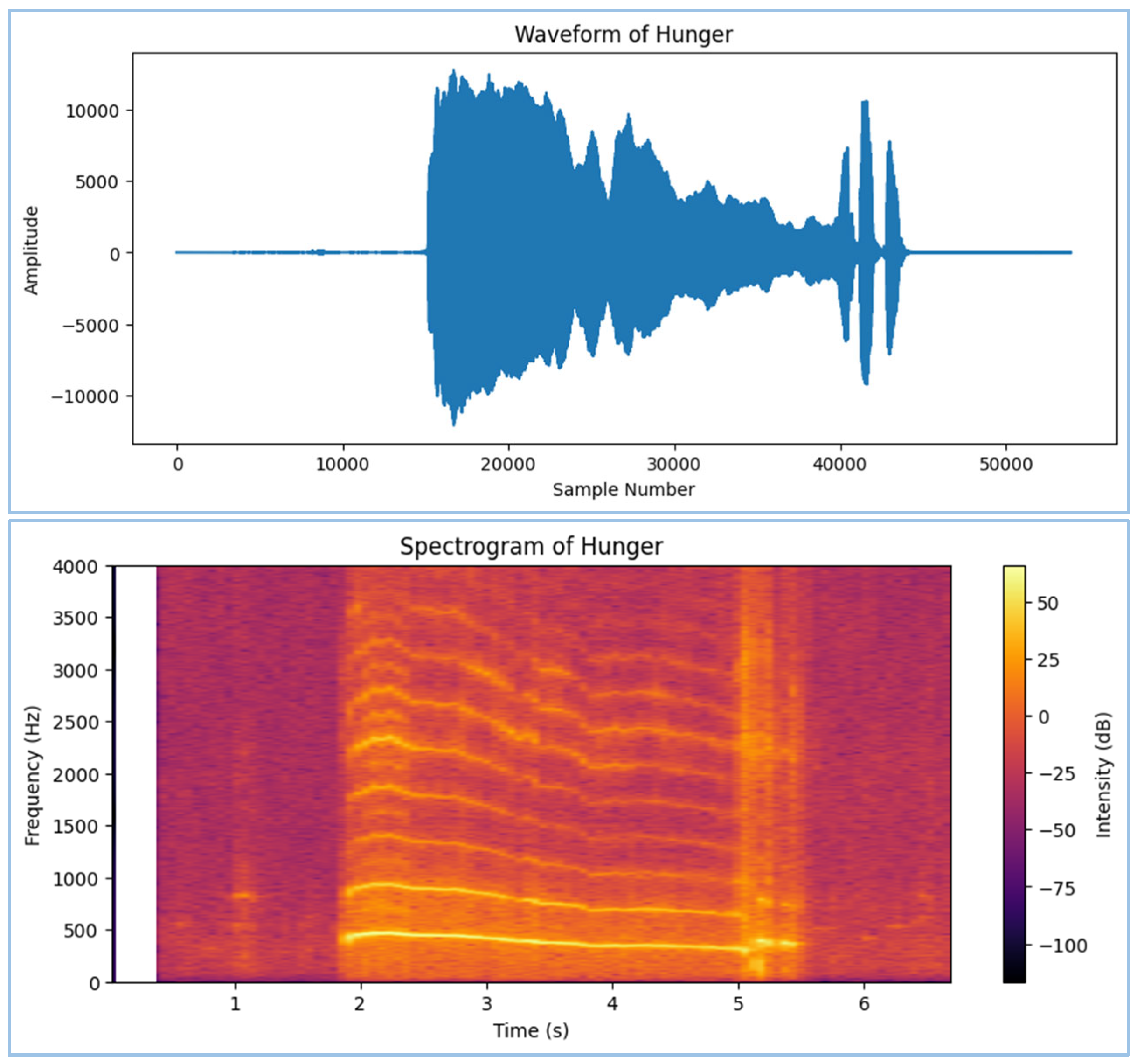

Figure 2 shows the waveform and spectrogram of a baby’s cry expressing hunger. The waveform illustrates the temporal variations of the signal, while the spectrogram highlights the frequency distribution over time, revealing harmonics and fluctuations linked to the intensity of the cry. These visualizations provide a better understanding of the acoustic structure of hungry cries.

Figure 2.

Waveform and spectrogram of a baby’s cry expressing hunger.

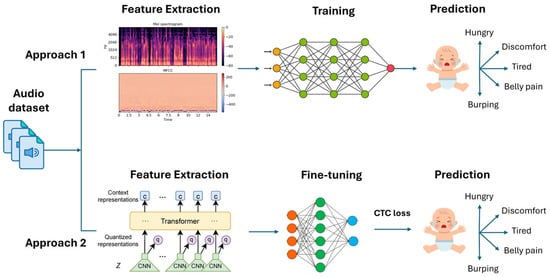

2.3. Classification

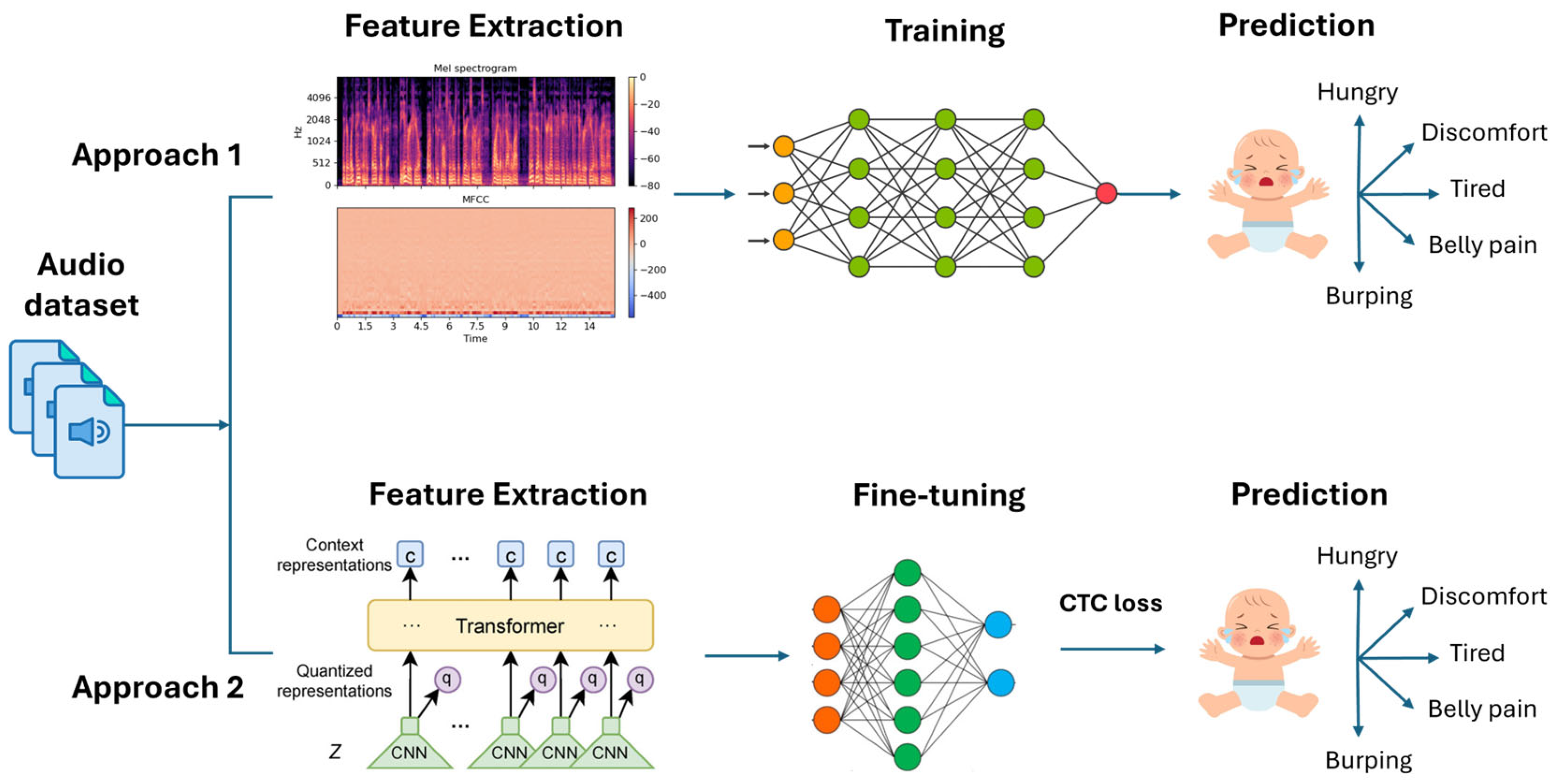

In this paper, as illustrated in Figure 3, we begin by gathering infant cry recordings from the Donate a Cry corpus [9]. The initial step is to convert the audio into spectrograms, which display the frequency spectrum of the audio signal over time. This preprocessing is crucial for feature extraction and prepares the data for deep learning analysis.

Figure 3.

Baby Cry Classification Approaches.

Our first approach uses a deep neural-network-based model. The spectrogram features are fed into a fully connected neural network, whose input nodes receive these features. The inputs are then processed through hidden layers, where weighted connections and activation functions transform the data so that the network learns meaningful patterns that support prediction. The information is progressively abstracted as it moves through successive layers, with each layer capturing increasingly complex patterns. Finally, the output layer applies a softmax function to classify the cry, assigning labels such as hunger, tiredness, discomfort, or belly pain based on the input features.
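A minimal NumPy sketch of this forward pass follows, assuming one hidden ReLU layer, He-style random initialization, and a flattened 64 × 64 spectrogram as input. The layer sizes, label strings, and function names are illustrative, not the authors’ configuration, and training is omitted: the point is how the softmax output becomes a label.

```python
import numpy as np

LABELS = ("belly_pain", "burping", "discomfort", "hungry", "tired")


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def init_params(n_in: int, n_hidden: int, n_out: int, seed: int = 0) -> dict:
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, np.sqrt(2.0 / n_hidden), size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def forward(params: dict, x: np.ndarray) -> np.ndarray:
    """x: (batch, n_in) features -> (batch, n_classes) class probabilities."""
    hidden = relu(x @ params["W1"] + params["b1"])        # weighted connections + activation
    return softmax(hidden @ params["W2"] + params["b2"])  # output layer


def predict_label(params: dict, spectrogram: np.ndarray):
    """Flatten one spectrogram, run the network, and return (label, probabilities)."""
    probs = forward(params, spectrogram.reshape(1, -1))[0]
    return LABELS[int(np.argmax(probs))], probs
```

With randomly initialized weights the predicted label is meaningless; after training, the argmax of the softmax output is the predicted cry reason.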

In our second approach, we adopt a Transformer-based model. First, the raw audio is processed by convolutional neural networks (CNNs), referred to as the “CNN Feature Extractor”, to obtain high-level feature representations. These features are quantized into discrete representations that capture key elements of the input signal. The representations are then passed through Transformer layers, which excel at capturing long-range dependencies within the audio and aggregate context-rich representations, improving the model’s ability to recognize and classify baby cries across longer sections of the signal. A linear layer then maps the high-dimensional contextual features to the target cry labels, converting the learned representations into a format suitable for classification. The model’s predictions are further refined using the connectionist temporal classification (CTC) loss function, which aligns predicted sequences with the ground truth labels without requiring an explicit alignment between the input and output sequences. This accommodates the variable length of the audio inputs, allowing the model to handle sequences of differing lengths. In the final step, the output layer uses a softmax function to generate the predicted labels.
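To make the role of the Transformer layers concrete, here is a single-head scaled dot-product self-attention layer in NumPy, followed by a classification head. This is a simplified stand-in rather than the fine-tuned model: instead of the CTC objective described above, it mean-pools the context vectors over time and applies a linear layer and softmax, a common way to obtain one label per clip. Dimensions, random weights, and names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(frames: np.ndarray, wq, wk, wv):
    """frames: (T, d_model) vectors from the CNN feature extractor.

    Every frame attends to every other frame, which is how the layer
    captures long-range dependencies across the recording.
    """
    q, k, v = frames @ wq, frames @ wk, frames @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (T, T) similarity of all frame pairs
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights


def classify_clip(frames: np.ndarray, params: dict) -> np.ndarray:
    context, _ = self_attention(frames, params["wq"], params["wk"], params["wv"])
    pooled = context.mean(axis=0)             # averaging over time accepts any length T
    return softmax(pooled @ params["w_out"] + params["b_out"])


def init_params(d_model=32, d_k=16, n_classes=5, seed=0) -> dict:
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(d_model)
    return {
        "wq": rng.normal(0, scale, (d_model, d_k)),
        "wk": rng.normal(0, scale, (d_model, d_k)),
        "wv": rng.normal(0, scale, (d_model, d_k)),
        "w_out": rng.normal(0, 1.0 / np.sqrt(d_k), (d_k, n_classes)),
        "b_out": np.zeros(n_classes),
    }
```

Because the pooled vector has a fixed size regardless of T, clips of different durations map to the same five-way output.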

2.4. Database

We used the Donate a Cry dataset, obtained from version 1.0 of the official GitHub repository [9]. Several studies have used this dataset, including [10,11,12]. It consists of 457 audio recordings in WAV format, each lasting 7 s, with five class labels: belly pain, burping, discomfort, hungry, and tired, as shown in Table 2.
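The sketch below indexes a local copy of the corpus and counts recordings per class, which is useful for checking the class balance summarized in Table 2. It assumes, and this is our assumption rather than something stated in the paper, that the recordings are `.wav` files stored in one subfolder per label; the folder names and function names are hypothetical and should be adapted to the actual repository layout.

```python
from collections import Counter
from pathlib import Path
from typing import Iterable, List, Tuple

# Hypothetical folder names, one per class label.
CLASS_NAMES = ("belly_pain", "burping", "discomfort", "hungry", "tired")


def index_corpus(root: str) -> List[Tuple[Path, str]]:
    """Return (path, label) pairs for every .wav file in the class subfolders."""
    items = []
    for label in CLASS_NAMES:
        class_dir = Path(root) / label
        if not class_dir.is_dir():
            continue                     # tolerate missing classes in partial copies
        for wav in sorted(class_dir.glob("*.wav")):
            items.append((wav, label))
    return items


def class_distribution(items: Iterable[Tuple[Path, str]]) -> Counter:
    """Count recordings per label."""
    return Counter(label for _, label in items)
```

Typical usage is `class_distribution(index_corpus("path/to/corpus"))`, whose total should match the 457 recordings reported above if the layout assumption holds.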

Table 2.

Donate a Cry corpus dataset distribution.

The audio samples were recorded in hospitals and homes and labeled by doctors, nurses, or parents, based on the reason for the infant’s cry. Digital recorders were placed near the infants to capture either individual cry signals or surrounding sound events over extended periods.

2.5. Model Training

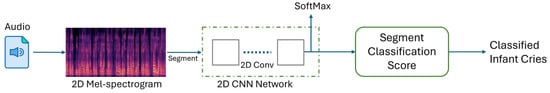

2.5.1. Convolutional Neural Network (CNN) with Mel Spectrograms

(a) Feature extraction

Mel spectrograms offer an effective way to represent audio features in a range of classification tasks [13,14]. A Mel spectrogram is a time–frequency representation of sound that maps the frequency spectrum of an audio signal onto the Mel scale. This scale is derived from psychoacoustic principles and models the non-linear sensitivity of the human auditory system, in which lower frequencies are perceived with greater resolution than higher frequencies. In the context of infant cries, these acoustic characteristics encode crucial information about the infant’s physiological and emotional states. Fundamentally, the Mel spectrogram represents the energy distribution across distinct frequency ranges, capturing both the temporal and spectral characteristics of a sound. Time is represented on the X-axis and frequency on the Y-axis, while color intensity corresponds to the amplitude or power of each frequency. The process starts by dividing the audio signal into short frames. The Fourier transform is then applied to each frame, converting the time-domain signal into its frequency-domain representation and revealing the frequency components within each segment. Finally, Mel-scale filters, which simulate the human ear’s response to different frequencies, divide the frequency spectrum into distinct bands. The Mel spectrogram conversion can be mathematically represented as:

$$
\mathrm{Mel}(t, m) = \sum_{f} \mathcal{M}_{m}(f)\, \left| \mathrm{STFT}(t, f) \right|, \qquad m = 1, \dots, N
$$

Here, $\mathrm{STFT}(t, f)$ denotes the short-time Fourier transform, where $t$ represents the time variable, $f$ corresponds to the frequency, and $\left| \mathrm{STFT}(t, f) \right|$ refers to the magnitude of the STFT. The term $\mathcal{M}_{m}(f)$ signifies the Mel filter bank transformation (the weight of the $m$-th Mel filter at frequency $f$), with $N$ representing the number of Mel filters applied. The resulting Mel spectrogram provides a representation of sound that aligns with human auditory perception by compressing higher frequencies and enhancing lower frequencies, which are more distinguishable to the human ear. This transformation highlights critical acoustic features, such as formants and harmonics, essential for analyzing and distinguishing different sounds, including infant cries.
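The conversion described above can be sketched in NumPy as follows. The settings are our assumptions, since the paper does not report them: a Hann window, 1024-sample frames with a 512-sample hop, 64 Mel filters, the common HTK-style Mel formula m = 2595 log10(1 + f/700), and unnormalized triangular filters. The filters are applied to the STFT magnitude, as in the text; many pipelines instead use the power |STFT|² followed by a logarithmic (dB) scale.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(sample_rate, n_fft, n_mels, fmin=0.0, fmax=None):
    """Triangular filter weights M_m(f): shape (n_mels, n_fft // 2 + 1)."""
    if fmax is None:
        fmax = sample_rate / 2.0
    # n_mels + 2 equally spaced points on the Mel scale give each filter's edges and center.
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    bin_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)
    bank = np.zeros((n_mels, bin_freqs.size))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (bin_freqs - left) / (center - left)
        falling = (right - bin_freqs) / (right - center)
        bank[m] = np.maximum(0.0, np.minimum(rising, falling))
    return bank


def mel_spectrogram(signal, sample_rate, n_fft=1024, hop=512, n_mels=64):
    """Return Mel(t, m) as an (n_mels, n_frames) array: frequency on Y, time on X."""
    if len(signal) < n_fft:
        raise ValueError("signal must contain at least n_fft samples")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))                  # |STFT(t, f)|
    mel = magnitude @ mel_filterbank(sample_rate, n_fft, n_mels).T   # sum over f of M_m(f) |STFT|
    return mel.T
```

For a one-second 1 kHz tone sampled at 16 kHz, this yields a 64 × 30 array whose strongest row is the filter centered closest to 1 kHz.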

(b) Training the CNN model

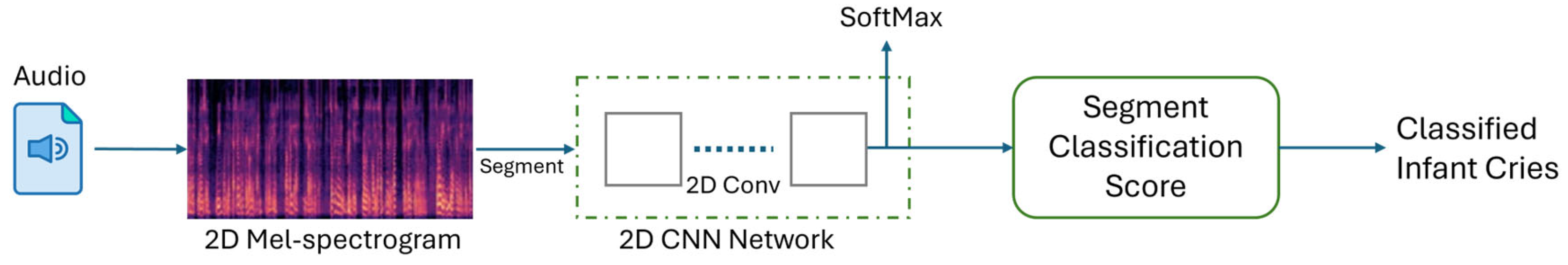

After extracting Mel spectrogram features from the Donate a Cry corpus, we applied a CNN model (Figure 4). Mel spectrograms represent the frequency content of audio signals in a way that mimics human auditory perception, which makes them well suited to analyzing infant cries. Convolutional neural networks (CNNs) were chosen for this study because of their proven capability to process complex audio signals. They extract hierarchical features from the Mel spectrograms: the first layers capture simple patterns, such as frequency edges, frequency bands, and amplitude variations, while deeper layers identify increasingly complex and abstract patterns, such as harmonic structures and temporal relationships. This hierarchy lets the model capture both the fundamental and the nuanced characteristics of the crying signals, which are essential for accurately distinguishing between different types of infant cries [14].
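The first-layer behavior can be illustrated with a single hand-set filter. The NumPy sketch below applies one convolution (implemented, as in CNN layers, as a cross-correlation), a ReLU, and 2 × 2 max pooling to a toy 64 × 64 “spectrogram” containing one bright frequency band. The 3 × 3 edge kernel and all names are hypothetical; in the actual model the kernels are learned, and each layer has many of them.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation of one channel with one kernel."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool2d(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = (x.shape[0] // size) * size, (x.shape[1] // size) * size
    return x[:h, :w].reshape(h // size, size, w // size, size).max(axis=(1, 3))


# Toy spectrogram: rows are frequency bands, columns are time frames.
spec = np.zeros((64, 64))
spec[20:30, :] = 1.0                       # one band with constant energy

# Positive where row i is bright and row i + 2 is dark, i.e. at the high-index
# edge of the band; ReLU then suppresses the opposite (negative) edge.
edge_kernel = np.array([[1.0, 1.0, 1.0],
                        [0.0, 0.0, 0.0],
                        [-1.0, -1.0, -1.0]])

feature_map = relu(conv2d_valid(spec, edge_kernel))   # (62, 62)
pooled = max_pool2d(feature_map)                      # (31, 31)
```

The feature map is non-zero only in rows 28 and 29, where the bright band ends, which is the kind of “frequency edge” the first layers detect.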

Figure 4.

Baby cry classification with CNN.

We resized the input to 64 × 64 and normalized it. Our model included two convolutional layers (with 64 and 128 filters, respectively) using ReLU activation, followed by a max pooling layer for dimensionality reduction. We added a dropout layer with a 20% rate (20% of the neurons are randomly deactivated at each training step) to prevent overfitting. The features were then flattened and passed through a random Fourier features layer, which provides a non-linear kernel approximation. Finally, we compiled the model using the AdamW optimizer and categorical cross-entropy loss for multiclass classification.
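Random Fourier features approximate a shift-invariant kernel with an explicit feature map, so a linear layer on top behaves like a kernel machine. The NumPy sketch below assumes a Gaussian (RBF) kernel, a fixed (non-trainable) random projection, and hypothetical output dimension and scale, since the paper does not report these settings.

```python
import numpy as np


class RandomFourierFeatures:
    """z(x) = sqrt(2/D) * cos(x W + b), with W ~ N(0, 1/scale^2) and b ~ U(0, 2*pi).

    For large D, z(x) . z(y) approximates exp(-||x - y||^2 / (2 * scale^2)).
    """

    def __init__(self, input_dim: int, output_dim: int, scale: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(0.0, 1.0 / scale, size=(input_dim, output_dim))
        self.b = rng.uniform(0.0, 2.0 * np.pi, size=output_dim)
        self.output_dim = output_dim

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return np.sqrt(2.0 / self.output_dim) * np.cos(x @ self.w + self.b)


def rbf_kernel(x: np.ndarray, y: np.ndarray, scale: float = 1.0) -> float:
    """Exact Gaussian kernel, for comparison with the random-feature estimate."""
    return float(np.exp(-np.sum((x - y) ** 2) / (2.0 * scale ** 2)))
```

With 20,000 features, the dot product of the mapped vectors for two points at squared distance 1 lands close to the exact kernel value exp(−0.5) ≈ 0.607; larger output dimensions reduce the approximation error at the cost of a wider layer.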

2.5.2. Fine-Tuning Transformers

We fine-tuned the pretrained speech recognition models Wav2Vec and DistilHuBERT for baby cry classification (BCC) to assess whether speech recognition features can be used effectively for BCC. In addition, self-supervised Transformer models pretrained on unlabeled speech data provide good representations for numerous speech processing tasks, perform better after fine-tuning, and require only a small increase in resources to reach high accuracy.

(a) Wav2Vec

We opted to concentrate on the Wav2Vec2-XLSR-53 architecture because of its strong performance in automatic speech recognition [15]. The Wav2Vec2 transformer, and specifically the Wav2Vec2-XLSR-53 model, has gained recognition for its effective use of attention mechanisms and self-supervised learning in speech recognition tasks [16].

The key concept behind Wav2Vec 2.0 is training the model to solve a contrastive task over masked latent speech representations. Spans of the latent feature sequence are randomly masked, and the model is trained to identify the true latent representation at each masked time step. By learning to distinguish the actual masked representation from a set of distractor samples, the model develops robust speech features [17].
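For one masked time step, this contrastive objective can be written as the negative log of a softmax over cosine similarities between the context vector and a set of candidates: the true quantized target plus distractors. The NumPy sketch below follows that form; the temperature value and function names are our assumptions, and the real model computes the loss for many masked steps and distractors in batch.

```python
from typing import Sequence

import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def contrastive_loss(context: np.ndarray, target: np.ndarray,
                     distractors: Sequence[np.ndarray], temperature: float = 0.1) -> float:
    """-log( exp(sim(c, q) / k) / sum over candidates q~ of exp(sim(c, q~) / k) ).

    context:     Transformer output c_t at a masked time step
    target:      true quantized latent q_t for that step
    distractors: quantized latents sampled from other masked steps
    """
    candidates = [target, *distractors]
    logits = np.array([cosine_similarity(context, q) for q in candidates]) / temperature
    logits -= logits.max()                              # stabilize exp()
    return float(np.log(np.exp(logits).sum()) - logits[0])
```

The loss approaches zero when the context vector points toward the true target and away from the distractors, and grows when a distractor is more similar than the target.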

The Cross-lingual Speech Representations (XLSR) extension of Wav2Vec 2.0 builds on this concept by pretraining on a diverse set of 53 languages. This cross-lingual pretraining enables the model to learn more universal audio representations that are not confined to the phonetic or linguistic features of any single language. The model also includes a quantization module shared across languages, which improves its ability to generalize to different types of audio input [18]. Because it learns from raw audio in a self-supervised, cross-lingual manner, the model is well suited to tasks such as baby cry classification [19].

- (b)

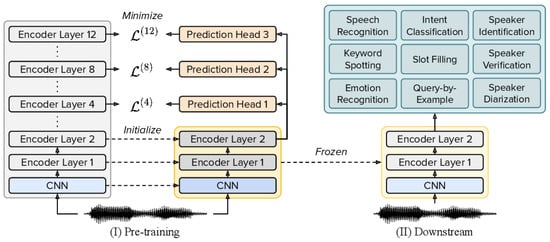

- DistilHuBERT

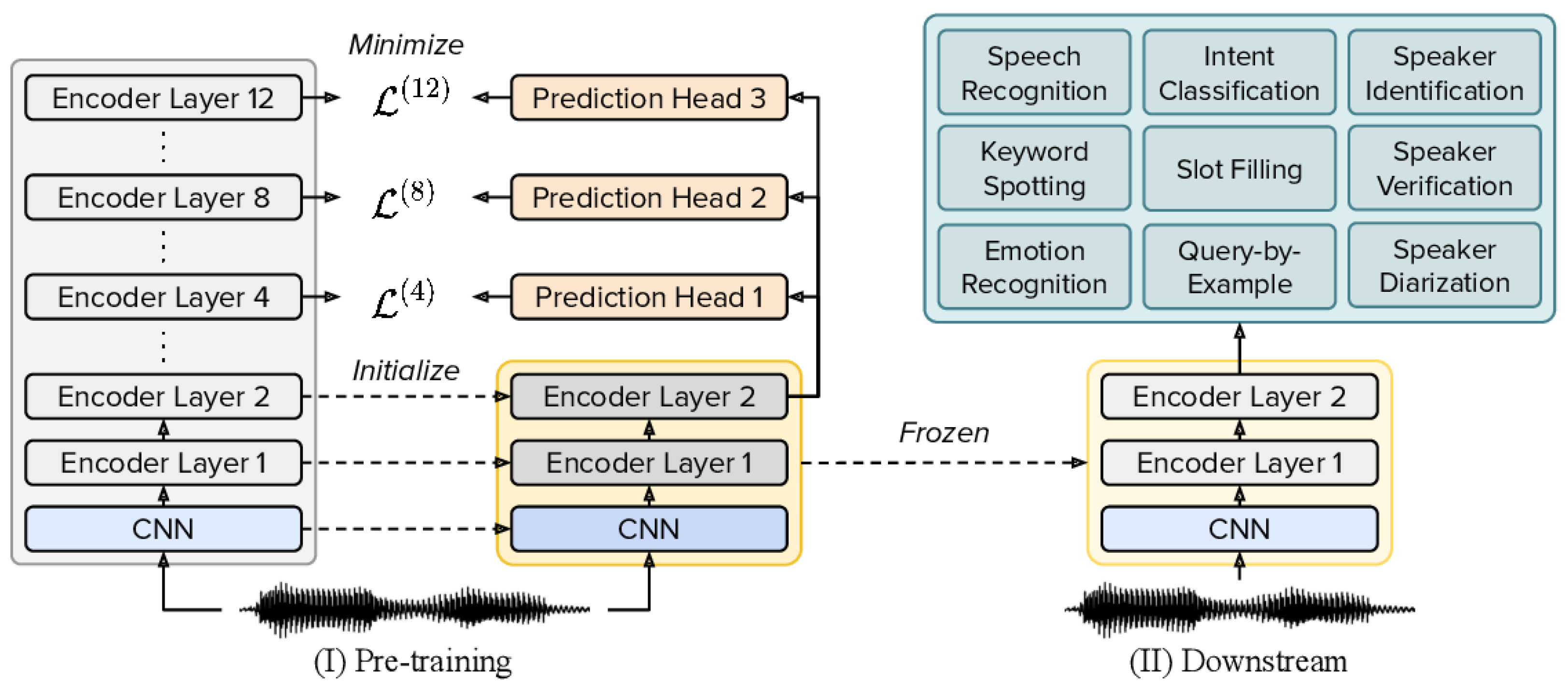

DistilHuBERT is a speech representation model obtained by layer-wise distillation of the larger hidden-unit BERT (HuBERT) model [20]: a smaller student model is trained to mimic the behavior of the larger teacher model, which reduces complexity. We chose DistilHuBERT for its lower computational overhead, which makes it efficient for real-time processing and for applications with limited computational resources. Despite its smaller architecture (Figure 5), DistilHuBERT retains most of HuBERT’s capacity to capture complex speech representations, with minimal loss in performance.
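The layer-wise distillation objective can be sketched for one teacher layer as an L1 distance between the student’s predicted hidden states and the teacher’s, minus λ times the log-sigmoid of their cosine similarity, summed over time. This follows our reading of the DistilHuBERT formulation [20]; the array shapes, λ = 1, and the function name are assumptions, and the full objective sums this term over several predicted teacher layers.

```python
import numpy as np


def log_sigmoid(x: np.ndarray) -> np.ndarray:
    """Numerically stable log(sigmoid(x)) = -log(1 + exp(-x))."""
    return -np.logaddexp(0.0, -x)


def layer_distillation_loss(student: np.ndarray, teacher: np.ndarray, lam: float = 1.0) -> float:
    """student, teacher: (T, D) hidden states for one teacher layer."""
    _, d = teacher.shape
    l1 = np.abs(student - teacher).sum(axis=1) / d                  # (1/D) * ||h_t - h_hat_t||_1
    cos = (student * teacher).sum(axis=1) / (
        np.linalg.norm(student, axis=1) * np.linalg.norm(teacher, axis=1) + 1e-12)
    return float(np.sum(l1 - lam * log_sigmoid(cos)))              # summed over time steps t
```

When the student reproduces the teacher exactly, the L1 term vanishes and each time step contributes log(1 + e⁻¹) ≈ 0.313 from the cosine term; any mismatch increases the loss.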

Figure 5.

DistilHuBERT architecture [20].

We fine-tuned the pretrained DistilHuBERT model on the Donate a Cry dataset to evaluate its effectiveness on the baby cry classification (BCC) downstream task.
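One way to set up this fine-tuning with the Hugging Face `transformers` library is sketched below. The checkpoint identifier `ntu-spml/distilhubert`, the 7 s maximum input length (matching the clip length in Section 2.4), and the helper names are our assumptions; the paper does not publish its training code. The `transformers` import is deferred into the function so that the label mappings can be inspected without the library installed.

```python
LABELS = ["belly_pain", "burping", "discomfort", "hungry", "tired"]
label2id = {label: idx for idx, label in enumerate(LABELS)}
id2label = {idx: label for label, idx in label2id.items()}


def build_model(checkpoint: str = "ntu-spml/distilhubert"):
    """Load a pretrained encoder with a freshly initialized 5-way classification head."""
    from transformers import AutoFeatureExtractor, AutoModelForAudioClassification

    feature_extractor = AutoFeatureExtractor.from_pretrained(checkpoint)
    model = AutoModelForAudioClassification.from_pretrained(
        checkpoint,
        num_labels=len(LABELS),
        label2id=label2id,
        id2label=id2label,
    )
    return feature_extractor, model


def preprocess(waveforms, feature_extractor, max_seconds: float = 7.0):
    """Turn 1-D float arrays (already at the extractor's sampling rate) into model inputs."""
    rate = feature_extractor.sampling_rate
    return feature_extractor(
        waveforms,
        sampling_rate=rate,
        max_length=int(rate * max_seconds),
        truncation=True,
    )
```

Calling `build_model` with a Wav2Vec2-XLSR-53 checkpoint such as `facebook/wav2vec2-large-xlsr-53` would set up the Wav2Vec experiment in the same way; the training loop itself (for example with `transformers.Trainer`) is omitted here.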

2.6. Training Setting

We fine-tuned our models using the Colab Pro plan, which provides access to three types of GPUs along with a generous allocation of disk space. The GPUs and their characteristics are as follows:

- NVIDIA Tesla T4:
    - CUDA cores: 2560;
    - GPU RAM: 15 GB.
- NVIDIA V100:
    - CUDA cores: 5120;
    - GPU RAM: 16 GB.
- NVIDIA A100:
    - CUDA cores: 6912;
    - GPU RAM: 40 GB.

This range of GPU options allowed for flexible and efficient fine-tuning of our models to achieve optimal performance.

3. Results

3.1. Machine Learning Results

In this study, we conducted a comparative analysis of the performance of CNN and Transformer-based architectures for accurately classifying infant crying sounds. Specifically, we tested three models described in Section 2.5: CNN, Wav2Vec 2.0, and DistilHuBERT. The results of these experiments are now presented, with each model assessed based on performance metrics such as precision, recall, F1 score, and overall accuracy. The hyperparameters used across all models include a learning rate of 0.001, which regulates the step size during gradient descent, and a batch size of 4, which determines the number of samples processed before updating the model.
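To show what the learning rate of 0.001 controls in the AdamW optimizer named in Section 2.5.1, the NumPy sketch below implements one AdamW update with decoupled weight decay. The β, ε, and weight-decay values are common defaults that we assume here; they are not reported in the paper.

```python
import numpy as np


def init_state(param: np.ndarray) -> dict:
    return {"t": 0, "m": np.zeros_like(param), "v": np.zeros_like(param)}


def adamw_step(param, grad, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
    """Return the updated parameter; `state` (step count and moments) is updated in place."""
    beta1, beta2 = betas
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad          # first moment (mean of gradients)
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad ** 2     # second moment (uncentered variance)
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])                # bias correction
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    param = param - lr * weight_decay * param                       # decoupled weight decay
    return param - lr * m_hat / (np.sqrt(v_hat) + eps)              # step size scales with lr
```

Because the update divides the gradient estimate by its own running magnitude, each step moves a parameter by roughly `lr`; with a learning rate of 0.001, 100 steps on a steady gradient move it by about 0.1.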

The results for each model across the five cry categories (belly pain, burping, discomfort, hungry, and tired) are detailed in Table 3, along with the overall accuracy for each model. The high accuracy achieved by all three models indicates their potential to enhance infant care and facilitate communication between parents and infants. By identifying the reasons for a baby’s cry, parents and caregivers can address the child’s needs, ensuring appropriate care and comfort.

Table 3.

The overall accuracy for the five cry labels across the three models.

In addition to overall accuracy, we used precision, recall, and F1 score to provide a more comprehensive evaluation of the models. These metrics are particularly important for datasets like Donate a Cry, where class imbalance and subtle differences between crying sounds may affect the model’s ability to identify certain categories. Precision measures how well a model avoids false positives, recall measures its ability to capture all relevant instances of each class, and the F1 score balances the trade-off between the two. These metrics help verify that the models not only achieve high overall accuracy but also perform well across all categories. DistilHuBERT outperformed the other models on all metrics, making it the most robust model for cry classification and the best suited to help caregivers respond accurately to the baby’s needs.
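The sketch below computes these metrics per class from lists of true and predicted labels, without external libraries. The macro average (unweighted mean of per-class F1) is one common summary for imbalanced data and is our assumption, since the paper does not state which averaging it used; classes that are never present or never predicted score 0 here by convention.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def per_class_metrics(y_true: Sequence[str], y_pred: Sequence[str], labels: Sequence[str]):
    """Return per-class (precision, recall, F1), overall accuracy, and macro-averaged F1."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1    # predicted this class, but the prediction was wrong
            fn[truth] += 1   # missed an instance of the true class
    report: Dict[str, Tuple[float, float, float]] = {}
    for label in labels:
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = (precision, recall, f1)
    accuracy = sum(tp.values()) / len(y_true)
    macro_f1 = sum(f1 for _, _, f1 in report.values()) / len(labels)
    return report, accuracy, macro_f1
```

For instance, if two “hungry” cries are classified as “hungry” and “tired”, one “tired” cry as “tired”, and one “burping” cry as “hungry”, the accuracy is 0.5, “hungry” gets precision, recall, and F1 of 0.5, and “burping” scores 0.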

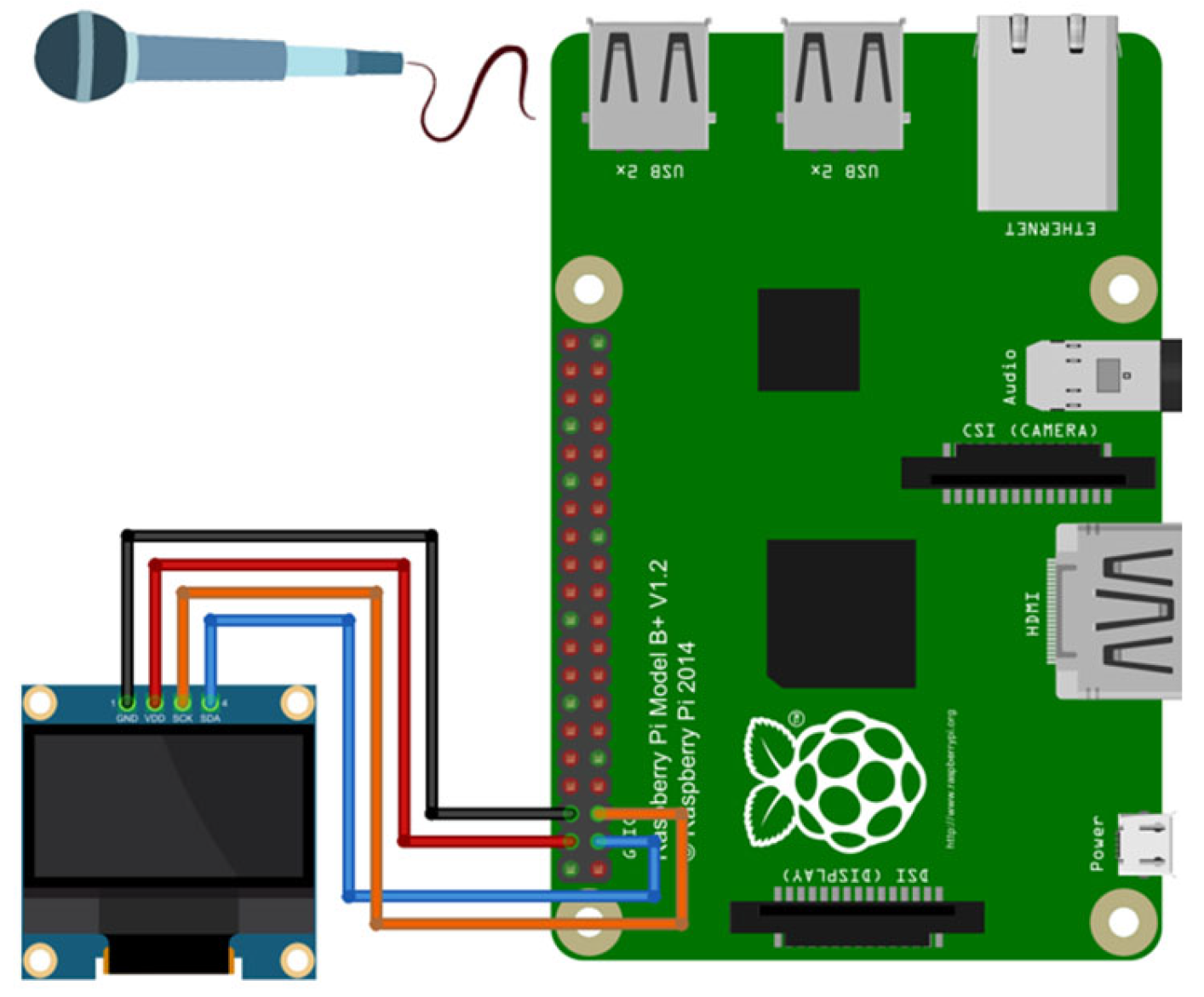

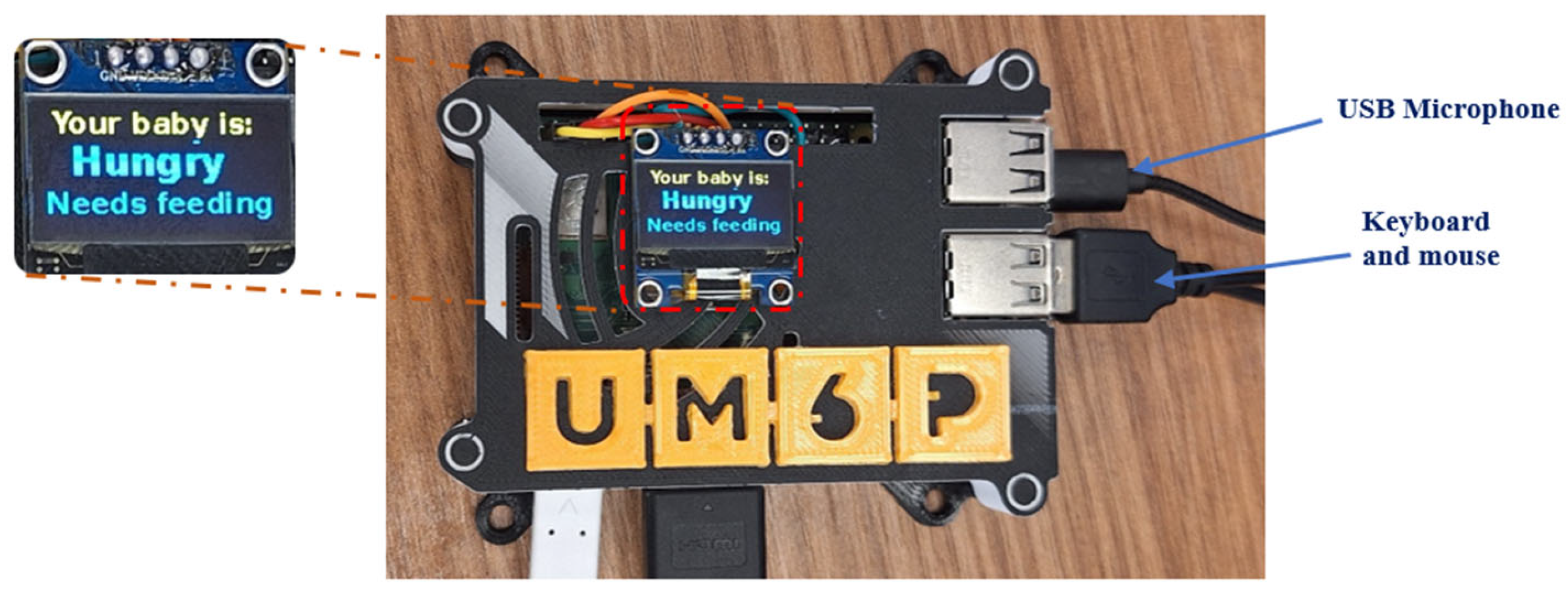

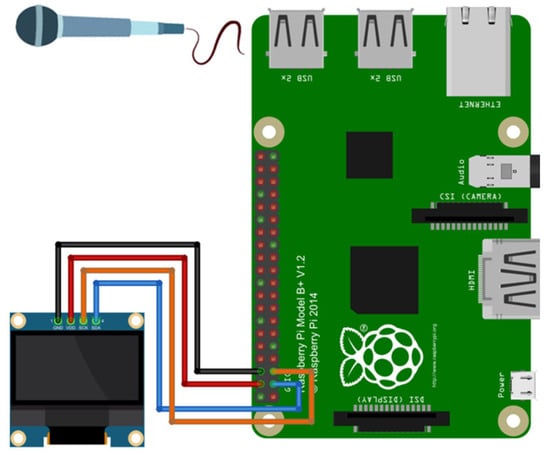

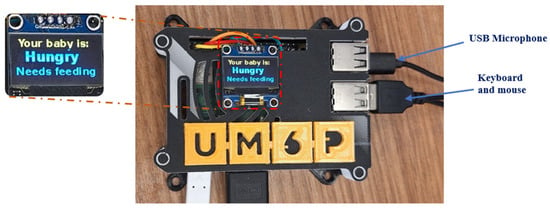

3.2. Hardware Implementation Results

The comparative analysis in the previous section identified DistilHuBERT as the most effective model for baby cry analysis. This model was therefore deployed on a Raspberry Pi board for real-time identification of crying. The board is connected to a microphone, a temperature sensor, and an OLED display; Figure 6 shows the corresponding wiring diagram.

Figure 6.

The general connections of the complete setup.

Once the model was embedded, we defined a series of use cases to assess the system’s performance on the target hardware. These tests exercise the model under real-world conditions, including environmental variations such as background noise and temperature changes, and the results are compared with the initial Colab Pro performance to evaluate the system’s reproducibility. The temperature sensor also provides further insight into the infant’s condition, enhancing overall monitoring.

When a sound is detected, a procedure is triggered that starts the system’s operational mode. The microphone records a one-second WAV audio file, and a filter is then applied to remove noise and improve the quality of the raw signal [21,22]. The filtered file is sent to a processing block, where the DistilHuBERT classification model determines the cause of the baby’s crying. A message is then displayed on the OLED screen, indicating the reason for the crying and suggesting ways to soothe the baby.
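The trigger and filtering steps could look like the SciPy sketch below. The paper does not specify the detection rule or the filter design, so the RMS threshold in dBFS, the fourth-order Butterworth band-pass, and the 200–3000 Hz passband (chosen to cover the F0 and harmonic ranges given in Section 2.2) are all assumptions, as are the function names.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def rms_dbfs(frame: np.ndarray) -> float:
    """Frame level in dB relative to full scale (samples assumed in [-1, 1])."""
    rms = float(np.sqrt(np.mean(np.square(frame))))
    return float(20.0 * np.log10(max(rms, 1e-12)))   # floor avoids log10(0) on silence


def sound_detected(frame: np.ndarray, threshold_dbfs: float = -40.0) -> bool:
    """Start the recording/classification cycle when the level exceeds the threshold."""
    return bool(rms_dbfs(frame) > threshold_dbfs)


def bandpass(signal: np.ndarray, sample_rate: int,
             low_hz: float = 200.0, high_hz: float = 3000.0, order: int = 4) -> np.ndarray:
    """Zero-phase Butterworth band-pass filter, designed as second-order sections for stability."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=sample_rate, output="sos")
    return sosfiltfilt(sos, signal)
```

Forward-backward filtering (`sosfiltfilt`) avoids phase distortion, which suits offline processing of a recorded one-second clip; a strictly causal stream would need `sosfilt` instead.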

In parallel, the results, along with the WAV audio file and temperature data, are transmitted to a Cloud Firestore database, from which the mobile application retrieves them to send parents real-time notifications. Figure 7 shows the system developed in our laboratory and outlines the different modules of the implemented system.
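A sketch of this upload step using the `firebase-admin` Python SDK is given below. The document fields, the `cry_events` collection name, and the choice to store a reference to the audio file rather than the audio bytes are our assumptions; the paper does not describe its data schema. The SDK import is deferred so that the payload builder can be used and tested on its own.

```python
import datetime as dt
from typing import Optional


def build_cry_event(reason: str, temperature_c: float, audio_file: str,
                    confidence: Optional[float] = None) -> dict:
    """Hypothetical Firestore document describing one detected cry."""
    return {
        "timestamp": dt.datetime.now(dt.timezone.utc).isoformat(),   # time-stamped in UTC
        "reason": reason,
        "temperature_c": round(float(temperature_c), 1),
        "audio_file": audio_file,
        "confidence": None if confidence is None else float(confidence),
    }


def upload_cry_event(event: dict, service_account_json: str,
                     collection: str = "cry_events") -> str:
    """Add the event to Firestore and return the new document ID."""
    import firebase_admin
    from firebase_admin import credentials, firestore

    try:
        firebase_admin.get_app()                 # reuse the default app if already initialized
    except ValueError:
        firebase_admin.initialize_app(credentials.Certificate(service_account_json))
    _, doc_ref = firestore.client().collection(collection).add(event)
    return doc_ref.id
```

A mobile client listening to the same collection would then receive each new document as it is written, which is what drives the real-time notifications.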

Figure 7.

The hardware setup for our system.

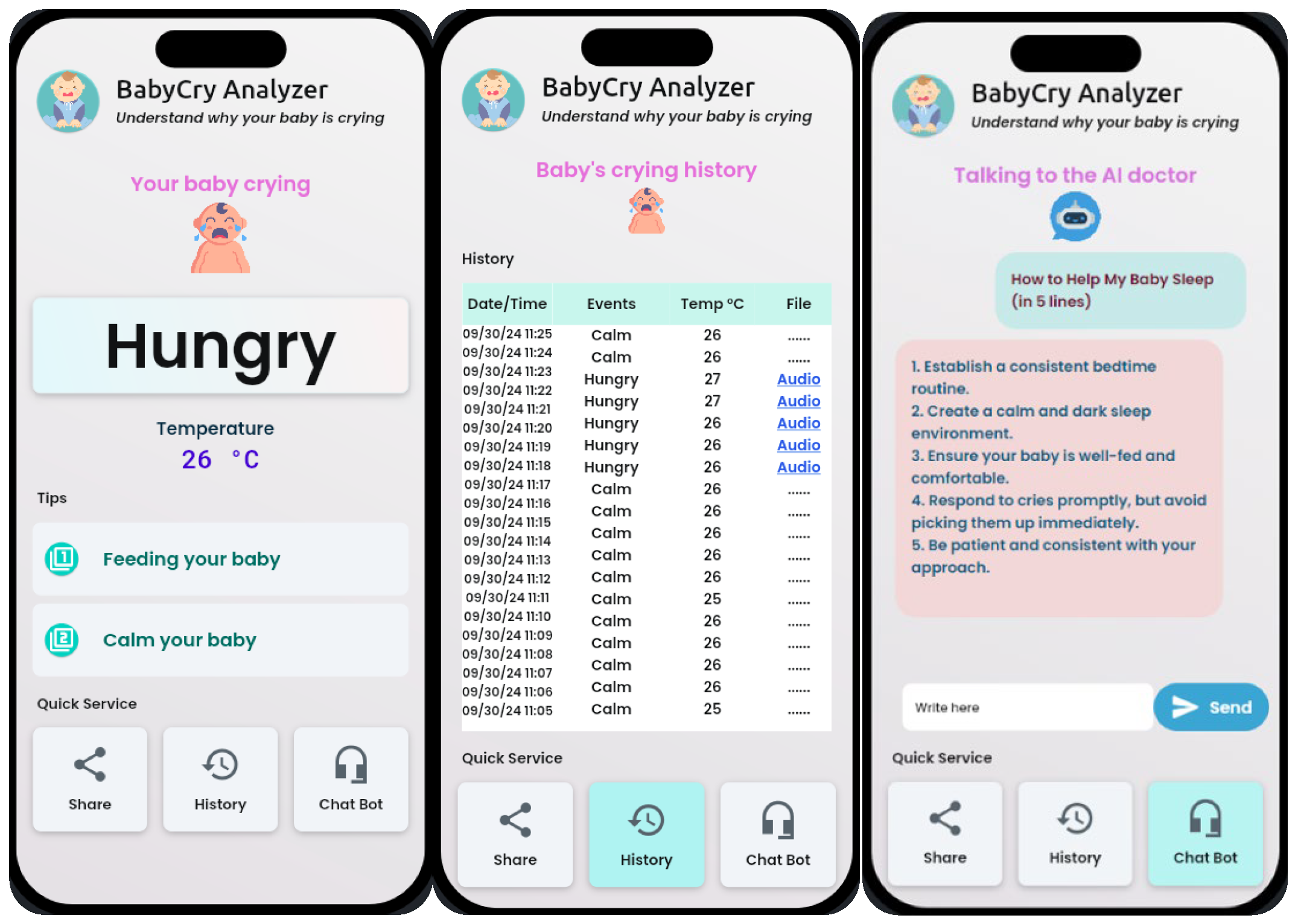

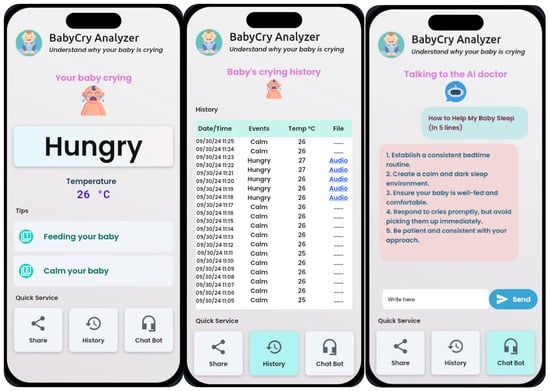

To make our data more accessible and easier to manage, and to enable real-time monitoring, we developed a mobile application compatible with both iOS and Android platforms [23]. This application was built using Dart with the Flutter framework and consists of three main pages.

- Authentication Page: The first page is dedicated to user authentication, requiring a login and password to access the application.

- Real-Time Monitoring Page: The second page displays real-time data related to the baby, including temperature and the reason for crying, if the baby is crying.

- History Page: The third page, titled “History”, shows the baby’s crying history over time.

Figure 8 illustrates the real-time monitoring of results on the mobile interface, which allows users to:

Figure 8.

Monitoring Results on the Mobile Interface.

- View and share data: All data related to the baby, including the audio file and crying history, can be viewed and shared with a doctor for consultation.

- Notifications for crying: When crying is detected, a notification is sent with the reason for the crying, along with some suggestions on how to soothe the baby.

- Interactive chatbot: The application includes a chatbot that provides additional advice and information on how to care for the baby.

The results show that the DistilHuBERT classification model outperformed the other architectures, especially in recognizing discomfort, hunger, and fatigue cries, reaching an accuracy of 86.95% at 42 dB. Its ability to extract features effectively from audio signals makes it a strong candidate for baby cry classification. To assess the system’s robustness, we also tested it under different background noise conditions. Accuracy remained reasonably high, at 78.61%, at 54 dB, confirming the system’s reliability in moderately noisy environments. Using a band-pass filter had a noticeable positive impact on performance, improving accuracy from 81.5% (without the filter) to 86.95% (with the filter) at 42 dB. The model was successfully integrated into a Raspberry Pi board, together with a microphone and a temperature sensor, and performed well in real-life conditions. In addition, the mobile application provided real-time alerts and easy data sharing with healthcare professionals, making the system practical and user-friendly for parents.

4. Conclusions

This paper presents an embedded system, implemented on a Raspberry Pi, that identifies, classifies, and monitors infant crying in real time. Using DistilHuBERT representations together with prosodic and cepstral features and state-of-the-art machine learning models, the system can differentiate between crying triggers such as hunger, abdominal pain, or discomfort. The deployed model classifies audio captured in real time by a microphone, the predicted cause of crying is shown on a local display, and a mobile application supports remote monitoring. The system’s ability to send time-stamped data to a remote web server demonstrates its feasibility for continuous monitoring and enables more in-depth analysis in the future. Parents or caregivers can thus receive guidance and respond to the baby’s needs even when they are away. This approach can alleviate stress for parents and support the baby’s well-being by enabling prompt responses to its needs. Future work will focus on improving identification accuracy in diverse environments and extending the system’s capabilities to more complex health-related diagnostics.

Author Contributions

Conceptualization, M.M.; methodology, M.M. and W.F.; software, M.M. and W.F.; validation, A.C. and T.B.; formal analysis, M.M., W.F., N.E.B. and O.L.; investigation, M.M., N.E.B., W.F. and A.S.; resources, M.M., W.F. and A.C.; data curation, M.M., W.F. and M.B.; writing—original draft preparation, M.M.; writing—review and editing, M.M., W.F., O.L. and N.E.B.; visualization, M.M., A.S. and F.E.H.; supervision, A.C. and T.B.; project administration, A.C.; funding acquisition, A.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Green Tech Institute of UM6P.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the GitHub repository referenced in [9].

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MFCC | Mel Frequency Cepstral Coefficient |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| OLED | Organic Light Emitting Diode |
| IoT | Internet of Things |
| CQCC | Constant Q Cepstral Coefficient |
| GMM | Gaussian Mixture Model |
| STFT | Short-Time Fourier Transform |
| ReLU | Rectified Linear Unit |
| XLSR | Cross-Lingual Speech Representations |
| BERT | Bidirectional Encoder Representations from Transformers |
| CTC | Connectionist Temporal Classification |

References

- Maghfira, T.N.; Basaruddin, T.; Krisnadhi, A. Infant cry classification using CNN—RNN. J. Phys. Conf. Ser. 2020, 1528, 012019. [Google Scholar] [CrossRef]

- Ji, C.; Mudiyanselage, T.B.; Gao, Y.; Pan, Y. A review of infant cry analysis and classification. EURASIP J. Audio Speech Music Process. 2021, 2021, 8. [Google Scholar] [CrossRef]

- Patil, H.A.; Patil, A.T.; Kachhi, A. Constant Q Cepstral coefficients for classification of normal vs. pathological infant cry. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings 2022, Singapore, 23–27 May 2022; Volume 2022, pp. 7392–7396. [Google Scholar] [CrossRef]

- Kachhi, A.; Chaturvedi, S.; Patil, H.A.; Singh, D.K. Data Augmentation for Infant Cry Classification. In Proceedings of the 2022 13th International Symposium on Chinese Spoken Language Processing ISCSLP 2022, Singapore, 11–14 December 2022; pp. 433–437. [Google Scholar] [CrossRef]

- Charola, M.; Kachhi, A.; Patil, H.A. Whisper Encoder features for Infant Cry Classification. Interspeech 2023, 2023, 1773–1777. [Google Scholar] [CrossRef]

- Singh, S.K.; Anand, B.S.; Bhatia, A.; Singh, G. A Novel Approach for Infant Cry Classification Using Transformer Models. In Proceedings of the 2023 IEEE 2nd International Conference on Industrial Electronics: Developments and Applications ICIDeA 2023, Imphal, India, 29–30 September 2023; pp. 631–638. [Google Scholar] [CrossRef]

- Kristian, Y.; Simogiarto, N.; Sampurna, M.T.A.; Hanindito, E. Ensemble of multimodal deep learning autoencoder for infant cry and pain detection. F1000Research 2022, 11, 359. [Google Scholar] [CrossRef]

- Limantoro, W.S.; Fatichah, C.; Yuhana, U.L. Application development for recognizing type of infant’s cry sound. In Proceedings of the 2016 International Conference on Information and Communication Technology and Systems ICTS 2016, Surabaya, Indonesia, 12 October 2016; pp. 157–161. [Google Scholar] [CrossRef]

- GitHub—Gveres/Donateacry-Corpus: An Infant Cry Audio Corpus That’s Being Built Through the Donate-a-Cry Campaign. Available online: https://github.com/gveres/donateacry-corpus (accessed on 7 October 2024).

- Ciric, D.; Peric, Z.; Nikolic, J.; Vucic, N. Audio Signal Mapping into Spectrogram-Based Images for Deep Learning Applications. In Proceedings of the 2021 20th International Symposium INFOTEH-JAHORINA INFOTEH 2021—Proceedings, Sarajevo, Bosnia and Herzegovina, 17–19 March 2021. [Google Scholar] [CrossRef]

- Yunida, R.; Faisal, M.R.; Muliadi; Indriani, F.; Abadi, F.; Budiman, I.; Prastya, S.E. LSTM and Bi-LSTM Models For Identifying Natural Disasters Reports From Social Media. J. Electron. Electromed. Eng. Med. Inform. 2023, 5, 241–249.

- Junaidi, R.F.; Faisal, M.R.; Farmadi, A.; Herteno, R.; Nugrahadi, D.T.; Ngo, L.D.; Abapihi, B. Baby Cry Sound Detection: A Comparison of Mel Spectrogram Image on Convolutional Neural Network Models. J. Electron. Electromed. Eng. Med. Inform. 2024, 6, 355–369.

- Fadel, W.; Araf, I.; Bouchentouf, T.; Buvet, P.A.; Bourzeix, F.; Bourja, O. Which French speech recognition system for assistant robots? In Proceedings of the 2022 2nd International Conference on Innovative Research in Applied Science, Engineering and Technology, IRASET 2022, Meknes, Morocco, 3–4 March 2022.

- Santaniello, D.; Casillo, M.; Lombardi, M.; Younis, S.A.; Sobhy, D.; Tawfik, N.S. Evaluating Convolutional Neural Networks and Vision Transformers for Baby Cry Sound Analysis. Future Internet 2024, 16, 242.

- Chaudhari, H.; Shah, A.J.; Patil, H.A. Cross Lingual Speech Representation for Infant Cry Classification. In Proceedings of the 2024 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Macau, Macao, 3–6 December 2024; pp. 1–5.

- Baevski, A.; Schneider, S.; Auli, M. vq-wav2vec: Self-Supervised Learning of Discrete Speech Representations. arXiv 2019, arXiv:1910.05453.

- Baevski, A.; Zhou, H.; Mohamed, A.; Auli, M. wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. Adv. Neural Inf. Process. Syst. 2020, 33, 12449–12460.

- Conneau, A.; Baevski, A.; Collobert, R.; Mohamed, A.; Auli, M. Unsupervised Cross-lingual Representation Learning for Speech Recognition. In Proceedings of the Annual Conference of the International Speech Communication Association INTERSPEECH, Dublin, Ireland, 20–24 August 2020; Volume 1, pp. 346–350.

- Fadel, W.; Bouchentouf, T.; Buvet, P.A.; Bourja, O. Adapting Off-the-Shelf Speech Recognition Systems for Novel Words. Information 2023, 14, 179.

- Chang, H.J.; Yang, S.W.; Lee, H.Y. DistilHuBERT: Speech Representation Learning by Layer-wise Distillation of Hidden-unit BERT. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing - Proceedings, Singapore, 23–27 May 2022; Volume 2022, pp. 7087–7091.

- Mekhfioui, M.; Elgouri, R.; Satif, A.; Moumouh, M.; Hlou, L. Implementation of least mean square algorithm using arduino & simulink. Int. J. Sci. Technol. Res. 2020, 9, 664–667.

- Mekhfioui, M.; Elgouri, R.; Satif, A.; Hlou, L. Real-time implementation of a new efficient algorithm for source separation using matlab & arduino due. Int. J. Sci. Technol. Res. 2020, 9, 531–535.

- Mekhfioui, M.; Benahmed, A.; Chebak, A.; Elgouri, R.; Hlou, L. The Development and Implementation of Innovative Blind Source Separation Techniques for Real-Time Extraction and Analysis of Fetal and Maternal Electrocardiogram Signals. Bioengineering 2024, 11, 512.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).