The Connected Life: Using Access Technology at Home, at School and in the Community

Abstract

1. Introduction

2. Factors Adversely Affecting Communication for Deaf Children

2.1. Environmental Factors

Distance and the Listening Bubble

2.2. Noise and Reverberation

3. Speaker Factors

3.1. Clear Speech and Message Complexity

3.2. Accented Speech

3.3. Access to Speechreading Cues

4. Listener Factors

Age

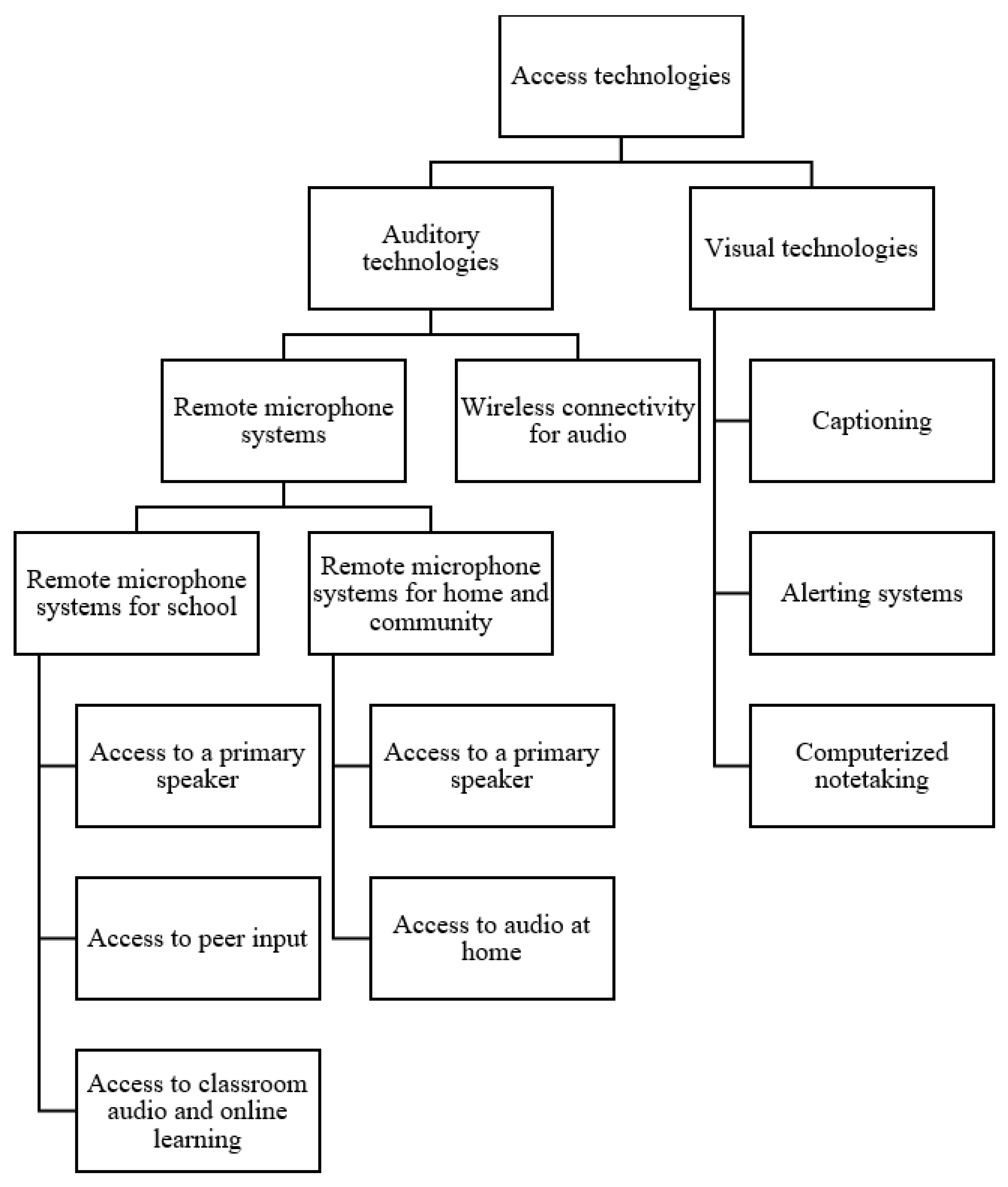

5. Purpose of Access Technologies

6. Auditory Technologies

6.1. Remote Microphone Systems for School

Access to a Primary Speaker

7. Access to Peer Input

8. Access to Classroom Audio and Online Learning

8.1. Remote Microphone Systems at Home and in the Community

8.2. Sports and Extracurricular Activities

8.3. Experiential Learning

8.4. Infants and Toddlers

8.5. Access to Audio at Home

9. Wireless Connectivity for Audio

10. Visual Access Technologies

Captioning

11. Communication Access Real-Time Translation (CART)

12. Automated Speech-to-Text Captioning

13. Computerized Notetaking

14. C-Print

15. Alerting Systems

16. Evaluating Benefits of Access Technologies

17. Challenges in the Implementation of Access Technologies

17.1. Cost and Availability

17.2. Insufficient Education on Use, Maintenance and Troubleshooting

17.3. Willingness and Ability to Use Technology

18. The Future of Access Technologies: Universal Design

18.1. Bluetooth LE Audio with Auracast

18.2. Captioning

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Millett, P. The Connected Life: Using Access Technology at Home, at School and in the Community. Educ. Sci. 2023, 13, 761. https://doi.org/10.3390/educsci13080761
