“FLIPPED ASSESSMENT”: Proposal for a Self-Assessment Method to Improve Learning in the Field of Manufacturing Technologies

Abstract

1. Introduction

1.1. Self-Assessment of Student Motivation

1.2. Student Motivation

1.3. Research Questions

2. Materials and Methods

2.1. Scenario of this Study

2.2. Implementation of the Methodology

2.2.1. Quantitative Data

2.2.2. Qualitative Data

3. Results

3.1. Students’ Self-Perceived Measurements of the Learning Experience

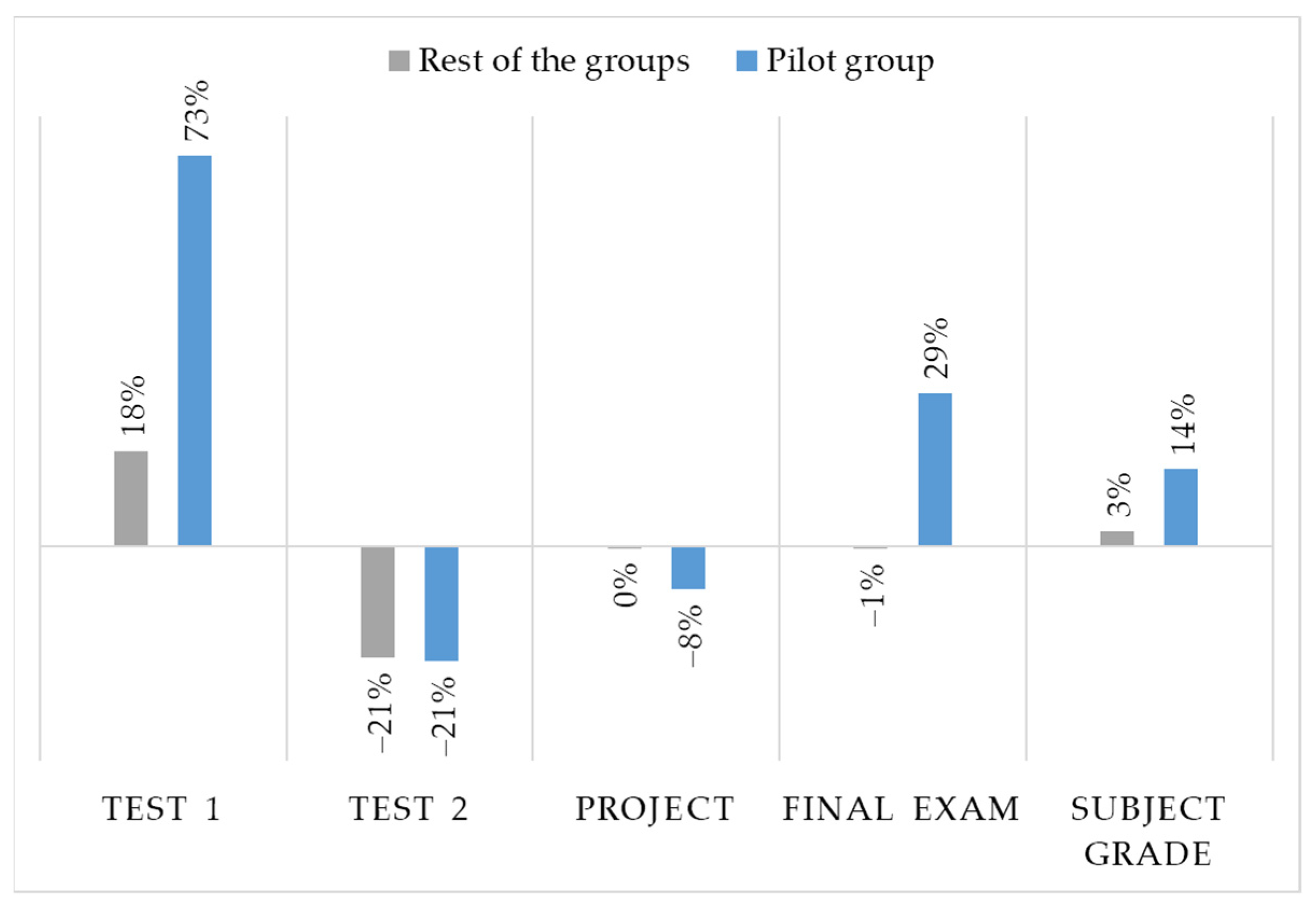

3.2. Students’ Grades

4. Discussion

4.1. Effect of Student Self-Assessment on Intrinsic Learning Motivation

4.2. The Contribution of Student Self-Assessment to Improving Their Perception of the Teaching Work and the Subject

4.3. Influence of Self-Assessment on Student Engagement and Classroom Dynamics

4.4. Relationship Between Self-Assessment and Better Academic Results

4.5. Limitations and Future Works

5. Conclusions

- The first hypothesis appears to be valid: self-assessment seems to help motivate students, even in the case presented here, in which students do not necessarily answer the questions they wrote themselves but those written by their classmates.

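- The second hypothesis also appears to be supported. On average, students seem more satisfied with the subject when the self-assessment system is implemented (a 22% increase in the pilot group between 2021 and 2022). Students rate the teacher’s work positively in all areas, particularly the way the teacher organizes classes, explains content, and resolves doubts (increases of 37% and 33% in the pilot group for the corresponding survey items). They also perceive that they have acquired more knowledge in less time: in the pilot group, the rated increase in knowledge rose by 22%, while the weekly hours devoted to the subject outside class fell by 32%.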

- Regarding classroom dynamics and student engagement, self-assessment might promote a better learning environment and greater camaraderie among students, which would support the third hypothesis.

- Finally, the hypothesis regarding academic results also seems to be valid. In quantitative terms, the self-assessment system appears to have increased student knowledge, yielding higher average grades than before its implementation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Survey item | 2021: pilot group with respect to all groups | 2022: pilot group with respect to all groups | Percentage of variation, 2022 with respect to 2021: pilot group | Percentage of variation, 2022 with respect to 2021: all groups |
|---|---|---|---|---|
| **Teacher performance** | | | | |
| The teacher stimulates learning appropriately. | −6% | −3% | 4% | 1% |
| The teacher plans and coordinates the class well and clearly presents the explanations. | −24% | 2% | 37% | 3% |
| The teacher adequately resolves doubts and guides students in the development of tasks. | −24% | −4% | 33% | 6% |
| In general, I am satisfied with the teaching provided by the subject’s teacher. | −13% | −6% | 8% | 1% |
| **Questions about the subject** | | | | |
| In general, I am satisfied with the subject. | −6% | 19% | 22% | −3% |
| Estimate the number of hours per week, excluding classes, you have devoted to the subject. | 35% | 1% | −32% | −9% |
| Rate the increase in knowledge, competencies and/or skills acquired. | −10% | 9% | 22% | 1% |
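The table does not state how its percentages were computed. Assuming each one is a relative difference, 100 × (x/y − 1), rounded to a whole percent, the four columns of every row are linked: with P and A denoting the mean values for the pilot group and for all groups (symbols introduced here for illustration), both (1 + r2021)(1 + v_pilot) and (1 + r2022)(1 + v_all) equal P2022/A2021. The minimal Python sketch below, with hypothetical helper names and abbreviated item labels, checks whether the published values satisfy this identity within rounding error.

```python
"""Sketch: check that the four percentage columns of the survey table agree.

Assumption (not stated in the source): each percentage is a relative
difference, 100 * (x / y - 1), rounded to the nearest whole percent.
Item labels are abbreviated; all names here are hypothetical.
"""

# (item, 2021 pilot vs all, 2022 pilot vs all,
#  pilot 2022 vs 2021, all groups 2022 vs 2021), values in percent
ROWS = [
    ("Stimulates learning", -6, -3, 4, 1),
    ("Plans class, explains clearly", -24, 2, 37, 3),
    ("Resolves doubts", -24, -4, 33, 6),
    ("Satisfied with teaching", -13, -6, 8, 1),
    ("Satisfied with subject", -6, 19, 22, -3),
    ("Weekly hours outside class", 35, 1, -32, -9),
    ("Knowledge gained", -10, 9, 22, 1),
]

HALF_STEP = 0.5  # largest rounding error of a whole-percent value, in points


def ratio_bounds(pct: float) -> tuple[float, float]:
    """Range of ratios x / y compatible with a rounded percentage change."""
    return 1 + (pct - HALF_STEP) / 100, 1 + (pct + HALF_STEP) / 100


def product_bounds(p1: float, p2: float) -> tuple[float, float]:
    """Range of the product of two positive ratios given as percentages."""
    lo1, hi1 = ratio_bounds(p1)
    lo2, hi2 = ratio_bounds(p2)
    return lo1 * lo2, hi1 * hi2


def is_consistent(r2021: float, r2022: float, v_pilot: float, v_all: float) -> bool:
    """Compare two routes to P2022 / A2021 (P: pilot mean, A: all-groups mean).

    (1 + r2021) * (1 + v_pilot) = (P2021 / A2021) * (P2022 / P2021)
    (1 + r2022) * (1 + v_all)   = (P2022 / A2022) * (A2022 / A2021)
    The row is consistent if the two rounding intervals overlap.
    """
    lo_a, hi_a = product_bounds(r2021, v_pilot)
    lo_b, hi_b = product_bounds(r2022, v_all)
    return lo_a <= hi_b and lo_b <= hi_a


if __name__ == "__main__":
    for item, *pcts in ROWS:
        status = "consistent" if is_consistent(*pcts) else "INCONSISTENT"
        print(f"{item}: {status}")
```

Under this assumption, all seven rows as transcribed pass the check, which is compatible with (but does not prove) the relative-difference reading of the table. Interval overlap is used instead of a fixed tolerance so that the allowance for rounding scales with the size of each ratio.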

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Díaz-Álvarez, J.; Díaz-Álvarez, A.; Mantecón, R.; Miguélez, M.H. “FLIPPED ASSESSMENT”: Proposal for a Self-Assessment Method to Improve Learning in the Field of Manufacturing Technologies. Educ. Sci. 2023, 13, 831. https://doi.org/10.3390/educsci13080831
