Instructors’ Perceptions of the Use of Learning Analytics for Data-Driven Decision Making

Abstract

1. Introduction

- RQ1: Characterizing perceptions of educational data

- RQ1a: Which types of educational data components are most important to instructors and TAs?

- RQ1b: Which aspects of student learning would instructors/TAs use educational data to promote?

- RQ1c: Which actions would instructors/TAs take upon viewing educational data?

- RQ2: What are the associations between educational data use in the course and the characteristics of instructors/TAs?

- RQ3: How can instructors’/TAs’ potential action-taking upon viewing educational data be predicted from their characteristics and perceptions?

2. Literature Review

2.1. Data-Driven Decision Making in Higher Education

2.2. Instructors’ Perceptions of Data-Driven Decisions

3. Development of the Theoretical Framework

3.1. Learners—Student Promotion

3.2. Data—Course Website Use

3.3. Intervention—Teacher Action

4. Methodology

4.1. Research Field and Research Population

4.2. Research Variables

4.2.1. Independent Variables

4.2.2. Dependent Variables

Aspects of student learning that data could be used to promote:

- Increasing engagement in learning;

- Increasing motivation for learning;

- Completing the course successfully;

- Enhancing learning skills (e.g., time management, collaboration, and self-regulated learning).

Types of educational data:

- Online participation (number of entrances, % participation, etc.);

- Communication (number of forum messages, extent of participation in online discussions, etc.);

- Assessment (grades on tasks and quizzes, % task submissions, submission attempts, etc.);

- Learning materials (number of accessed files, number of hyperlinks clicked, extent of video watching, extent of glossary use, etc.).

Potential actions upon viewing data:

- Promoting communication with the students (e.g., sending messages, holding office hours, and writing to a specific student);

- Adjusting the topics taught in the course;

- Adjusting the pedagogy (e.g., integrating hands-on activities and collaborative learning);

- Changing the course structure (e.g., extra lessons and practice);

- Adding self-practice opportunities for students (e.g., tasks or interactive activities);

- Changing assessment (e.g., task structure or number of tasks).

4.3. Research Tool and Procedure

4.4. Data Preprocessing and Analysis

5. Findings

5.1. Perceptions of Student Data (RQ1a-c)

5.1.1. Important Types of Data (RQ1a)

5.1.2. Intentions of Using Data to Promote Students’ Learning (RQ1b)

5.1.3. Potential Actions upon Viewing Data (RQ1c)

5.2. Associations Between Data Use and Independent Variables (RQ2)

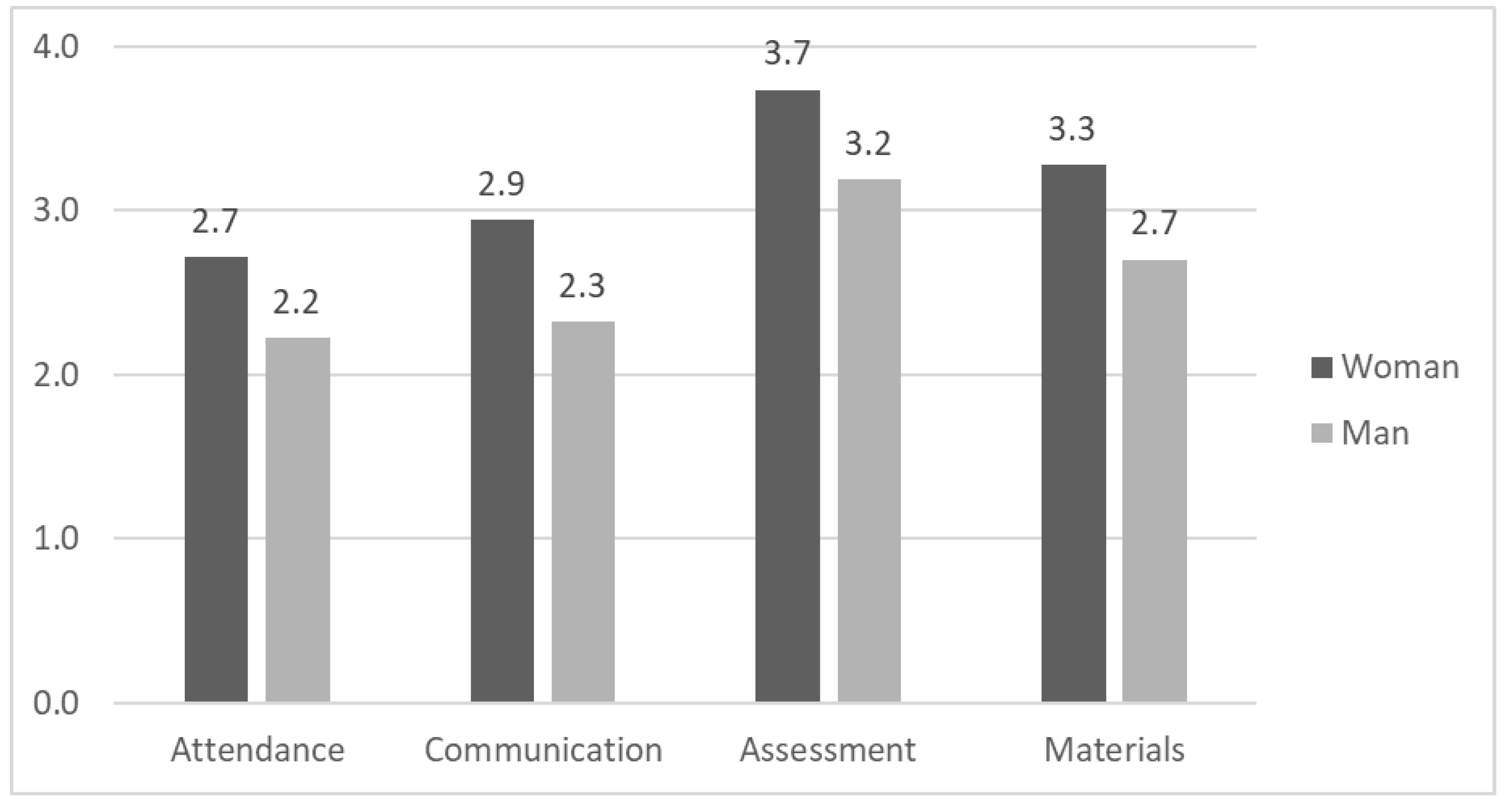

5.2.1. Important Types of Data

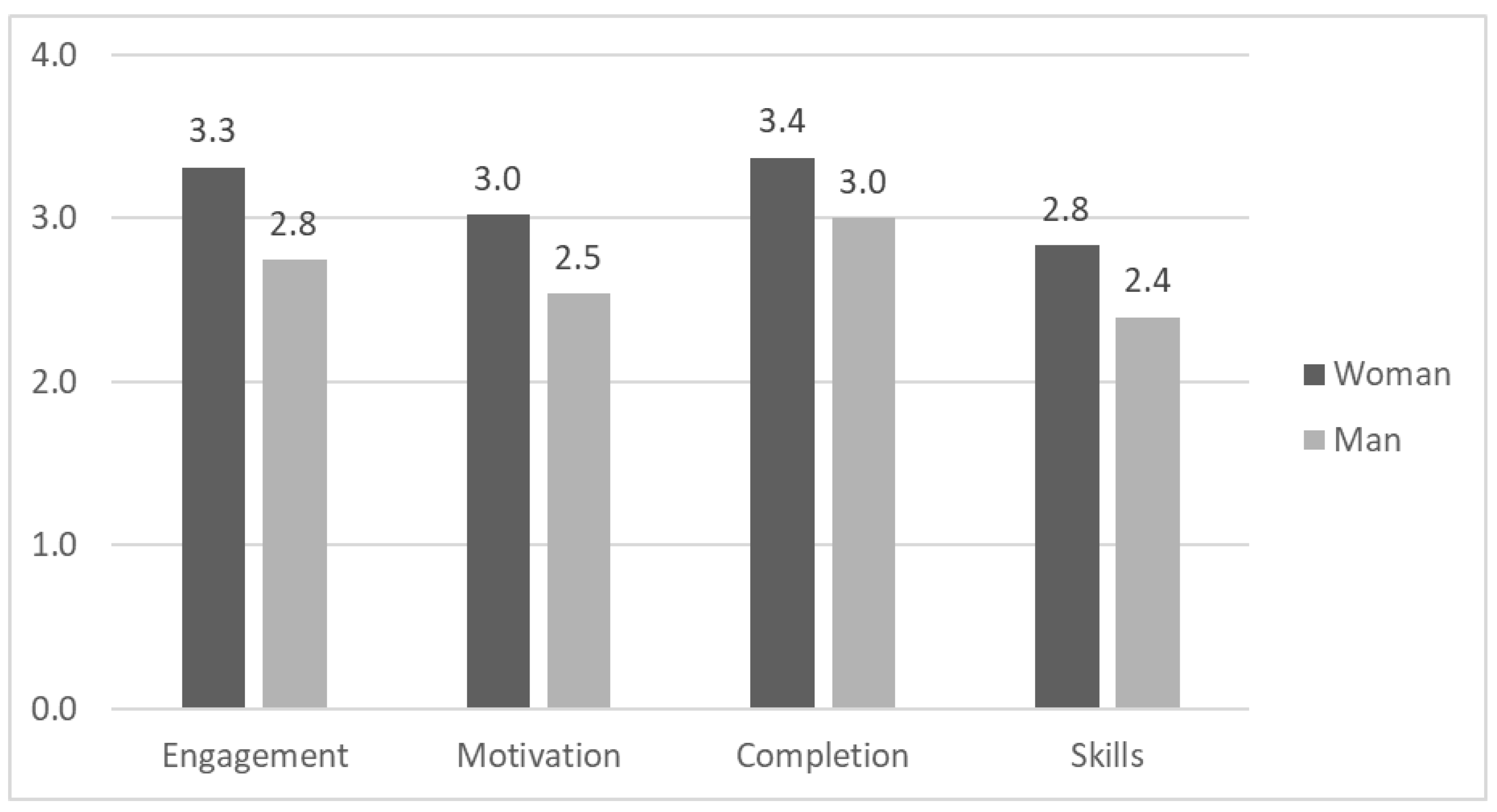

5.2.2. Intentions of Using Data to Promote Students’ Learning

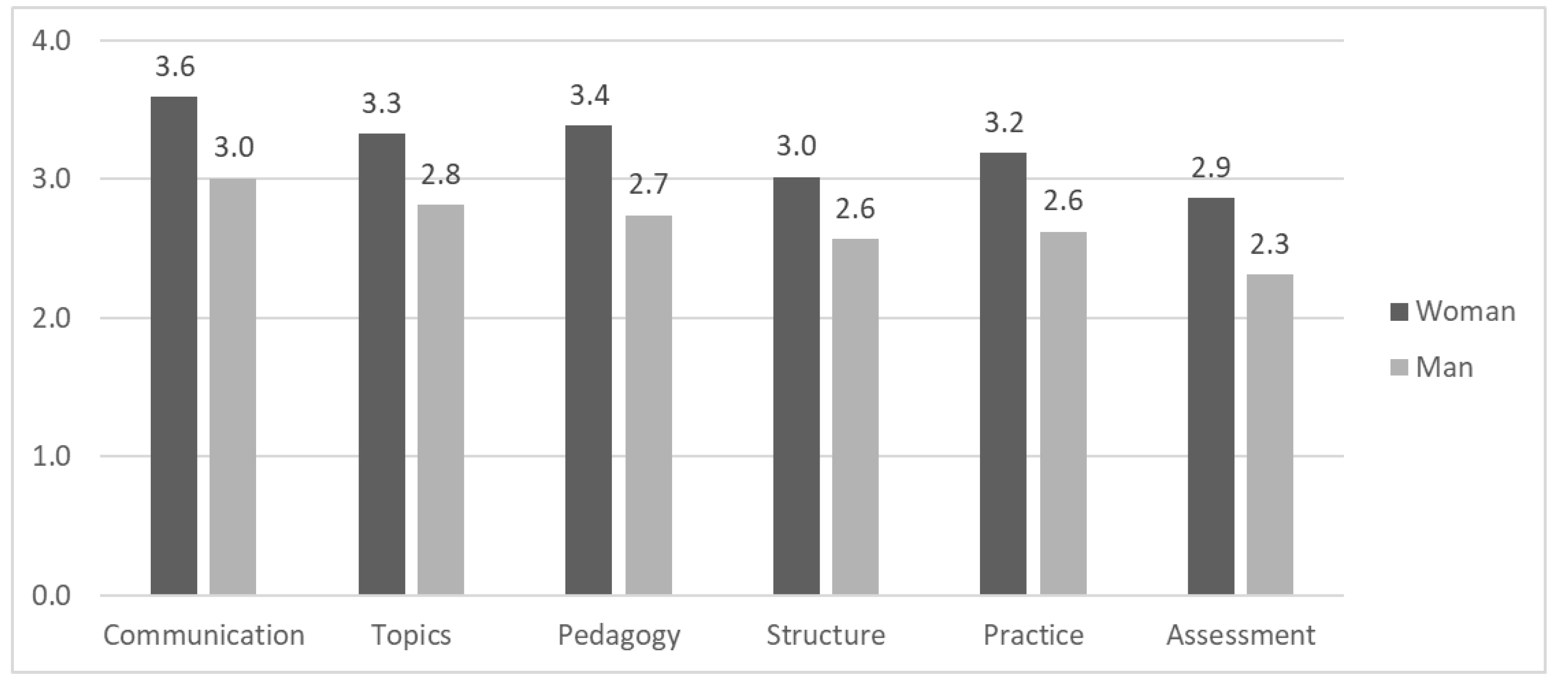

5.2.3. Potential Actions upon Viewing Data

5.3. Predicting Potential Action-Taking (RQ3)

5.3.1. Constructing a Factor Model

5.3.2. Predicting Action-Taking

6. Discussion

6.1. Global Patterns: Medium-High Scores, Women More Inclined than Men, Positive Associations with Experience with LMS

6.2. Perceptions of Instruction as Portrayed in the Findings

6.3. Importance and Use of Data as Predictors of Action-Taking

6.4. Contribution to the Understanding of Teachers’ Analytics Use

6.5. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gašević, D.; Dawson, S.; Pardo, A. How do we start? State and Directions of Learning Analytics Adoption. In Proceedings of the 2016 ICDE Presidents’ Summit, Sydney, Australia, 20–23 November 2016; pp. 1–24.

- Khalil, M.; Prinsloo, P.; Slade, S. The use and application of learning theory in learning analytics: A scoping review. J. Comput. High. Educ. 2022, 35, 573–594.

- Paulsen, L.; Lindsay, E. Learning analytics dashboards are increasingly becoming about learning and not just analytics—A systematic review. Educ. Inf. Technol. 2024, 29, 14279–14308.

- Kustitskaya, T.A.; Esin, R.V.; Kytmanov, A.A.; Zykova, T.V. Designing an education database in a higher education institution for the data-driven management of the educational process. Educ. Sci. 2023, 13, 947.

- Hora, M.T.; Bouwma-Gearhart, J.; Park, H.J. Data driven decision-making in the era of accountability: Fostering faculty data cultures for learning. Rev. High. Educ. 2017, 40, 391–426.

- Ndukwe, I.G.; Daniel, B.K. Teaching analytics, value and tools for teacher data literacy: A systematic and tripartite approach. Int. J. Educ. Technol. High. Educ. 2020, 17, 22.

- Sarmiento, J.P.; Wise, A.F. Participatory and co-design of learning analytics: An initial review of the literature. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, Online, 21–25 March 2022; ACM International Conference Proceeding Series. Association for Computing Machinery: New York, NY, USA, 2022; pp. 535–541.

- Gaftandzhieva, S.; Hussain, S.; Hilčenko, S.; Doneva, R.; Boykova, K. Data-driven decision making in higher education institutions: State-of-play. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 397–405.

- Asfaw, Z.; Alemneh, D.; Jimma, W. Data-driven decision-making and its impacts on education quality in developing countries: A systematic review. In Proceedings of the 2023 International Conference on Information and Communication Technology for Development for Africa (ICT4DA), Bahir Dar, Ethiopia, 26–28 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 198–203.

- Usher, M.; Hershkovitz, A. Data-driven decisions of higher education instructors in an era of a global pandemic. Online Learn. 2023, 27, 170–186.

- Wilcox, G.; Conde, C.F.; Kowbel, A. Using evidence-based practice and data-based decision making in inclusive education. Educ. Sci. 2021, 11, 129.

- Andrews, T.C.; Lemons, P.P. It’s personal: Biology instructors prioritize personal evidence over empirical evidence in teaching decisions. CBE Life Sci. Educ. 2015, 14, ar7.

- Herodotou, C.; Hlosta, M.; Boroowa, A.; Rienties, B.; Zdrahal, Z.; Mangafa, C. Empowering online teachers through predictive learning analytics. Br. J. Educ. Technol. 2019, 50, 3064–3079.

- Chan, C.K.Y.; Luo, J. Exploring teacher perceptions of different types of ‘feedback practices’ in higher education: Implications for teacher feedback literacy. Assess. Eval. High. Educ. 2022, 47, 61–76.

- Trinidad, J.E. Understanding student-centred learning in higher education: Students’ and teachers’ perceptions, challenges, and cognitive gaps. J. Furth. High. Educ. 2020, 44, 1013–1023.

- McKinley, J.; McIntosh, S.; Milligan, L.; Mikołajewska, A. Eyes on the enterprise: Problematising the concept of a teaching-research nexus in UK higher education. High Educ. 2021, 81, 1023–1041.

- Usher, M.; Hershkovitz, A. Interest in educational data and barriers to data use among massive open online course instructors. J. Sci. Educ. Technol. 2022, 31, 649–659.

- Clark, J.-A.; Tuffley, D. Enhancing higher education with Learning Analytics in the digital age. ASCILITE Publ. 2023, 56–65.

- Falcão, T.P.; Rodrigues, R.L.; Cechinel, C.; Dermeval, D.; de Oliveira, E.H.T.; Gasparini, I.; Araújo, R.D.; Primo, T.; Gasevic, D.; Mello, R.F. A penny for your thoughts: Students and instructors’ expectations about learning analytics in Brazil. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, Online, 21–25 March 2022; ACM: New York, NY, USA, 2022; pp. 186–196.

- Hilliger, I.; Ortiz-Rojas, M.; Pesántez-Cabrera, P.; Scheihing, E.; Tsai, Y.-S.; Muñoz-Merino, P.J.; Broos, T.; Whitelock-Wainwright, A.; Pérez-Sanagustín, M. Identifying needs for learning analytics adoption in Latin American universities: A mixed-methods approach. Internet High. Educ. 2020, 45, 100726.

- Ifenthaler, D.; Yau, J.Y.K. Utilising learning analytics to support study success in higher education: A systematic review. Educ. Technol. Res. Dev. 2020, 68, 1961–1990.

- Archer, E.; Barnes, G. Revisiting sensemaking: The case of the Digital Decision Network Application (DigitalDNA). Int. Rev. Res. Open Distance Learn. 2017, 18, 249–276.

- Clow, D. The learning analytics cycle: Closing the loop effectively. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; pp. 134–138.

- Chanthiran, M.; Ibrahim, A.B.; Rahman, M.H.A.; Kumar, S.; Dandage, R.V. A systematic literature review with bibliometric meta-analysis of AI technology adoption in education. EDUCATUM J. Sci. Math. Technol. 2022, 9, 61–71.

- Zhu, Y.; Areeprayolkij, W.; Thanyaphongphat, J.; Tumphasuwan, K. Literature review on influencing factors of university teachers’ attitude toward information and communication technology competence. In Proceedings of the 2021 IEEE 1st International Conference on Advanced Learning Technologies on Education & Research (ICALTER), Lima, Peru, 16–18 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4.

- Akram, H.; Abdelrady, A.H.; Al-Adwan, A.S.; Ramzan, M. Teachers’ perceptions of technology integration in teaching-learning practices: A systematic review. Front. Psychol. 2022, 13, 920317.

- Chun, T.W.; Yunus, M.M. Exploring teachers’ technology acceptance during COVID-19 pandemic: A systematic review (2020–2022). Int. J. Eval. Res. Educ. (IJERE) 2023, 12, 956.

- Aurangzeb, W.; Kashan, S.; Rehman, Z.U. Investigating technology perceptions among secondary school teachers: A systematic literature review on perceived usefulness and ease of use. Acad. Educ. Soc. Sci. Rev. 2024, 4, 160–173.

- Kaqinari, T. Facilitators and barriers to online teaching and educational technology use by university lecturers during COVID-19: A systematic review of qualitative evidence. Trends High. Educ. 2023, 2, 636–666.

- Kuh, G.D.; Kinzie, J.; Buckley, J.A.; Bridges, B.K.; Hayek, J.C. Piecing Together the Student Success Puzzle: Research, Propositions, and Recommendations; John Wiley & Sons: Hoboken, NJ, USA, 2007.

- Watson, W.R.; Watson, S.L. What are learning management systems, what are they not, and what should they become? TechTrends 2007, 51, 28–34.

- Brown, N.; Bower, M.; Skalicky, J.; Wood, L.; Donovan, D.; Loch, B.; Bloom, W.; Joshi, N. A professional development framework for teaching in higher education. In Research and Development in Higher Education: Reshaping Higher Education; Devlin, M., Nagy, J., Lichtenberg, A., Eds.; Higher Education Research and Development Society of Australasia (HERDSA): Canberra, Australia, 2010; pp. 133–143.

- Brown, G.; Atkins, M. Effective Teaching in Higher Education; Routledge: London, UK, 2002.

- Biglan, A. The characteristics of subject matter in different academic areas. J. Appl. Psychol. 1973, 57, 195–203.

- Gorsuch, R.L. Factor Analysis, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1983.

- Tsai, Y.-S.; Moreno-Marcos, P.M.; Jivet, I.; Scheffel, M.; Tammets, K.; Kollom, K.; Gašević, D. The SHEILA framework: Informing institutional strategies and policy processes of learning analytics. J. Learn. Anal. 2018, 5, 5–20.

- Gomes, T.C.S.; Falcão, T.P.; de Azevedo Restelli Tedesco, P.C. Exploring an approach based on digital games for teaching programming concepts to young children. Int. J. Child. Comput. Interact. 2018, 16, 77–84.

- Erdemci, H.; Karal, H. Examination of instructors’ experiences for the use of learning analytics. Int. J. Inf. Learn. Technol. 2021, 38, 21–31.

- Botvin, M.; Hershkovitz, A.; Forkosh-Baruch, A. Data-driven decision-making in emergency remote teaching. Educ. Inf. Technol. 2023, 28, 489–506.

- Abdella, A.S.; Fataar, A. Teaching styles of educators in higher education in Eritrea. J. High. Educ. Afr. 2021, 19, 45–62.

- Sabah, S.; Di, X. University faculty’s perceptions and practices of student centered learning in Qatar: Alignment or gap? J. Appl. Res. High. Educ. 2018, 10, 514–533.

- Soomro, K.A.; Kale, U.; Curtis, R.; Akcaoglu, M.; Bernstein, M. Digital divide among higher education faculty. Int. J. Educ. Technol. High. Educ. 2020, 17, 21.

- Scherer, R.; Howard, S.K.; Tondeur, J.; Siddiq, F. Profiling teachers’ readiness for online teaching and learning in higher education: Who’s ready? Comput. Human. Behav. 2021, 118, 106675.

- Banihashem, S.K.; Noroozi, O.; Khaneh, M.P.A. Gender differences in engagement and self-regulation in an online constructivist learning design and learning analytics environment. In Proceedings of the International Conference on Studies in Education and Social Sciences, Antalya, Turkey, 11–14 November 2021; Balint, G., Antala, B., Carty, C., Mabieme, J.-M.A., Amar, I.B., Kaplanova, A., Eds.; Uniwersytet Śląski, Wydział Matematyki, Fizyki i Chemii: Katowice, Poland, 2021; pp. 171–176. Available online: https://eric.ed.gov/?id=ED625290 (accessed on 25 October 2024).

- van Leeuwen, A.; Campen, C.A.N.K.-V.; Molenaar, I.; Rummel, N. How teacher characteristics relate to how teachers use dashboards: Results from two case studies in k–12. J. Learn. Anal. 2021, 8, 6–21.

- Kwon, S.; Kim, W.; Bae, C.; Cho, M.; Lee, S.; Dreamson, N. The identity changes in online learning and teaching: Instructors, learners, and learning management systems. Int. J. Educ. Technol. High. Educ. 2021, 18, 67.

- Kollom, K.; Tammets, K.; Scheffel, M.; Tsai, Y.-S.; Jivet, I.; Muñoz-Merino, P.J.; Moreno-Marcos, P.M.; Whitelock-Wainwright, A.; Calleja, A.R.; Gasevic, D.; et al. A four-country cross-case analysis of academic staff expectations about learning analytics in higher education. Internet High. Educ. 2021, 49, 100788.

- Li, Q.; Jung, Y.; d’Anjou, B.; Wise, A.F. Unpacking instructors’ analytics use: Two distinct profiles for informing teaching. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, Online, 21–25 March 2022; ACM: New York, NY, USA, 2022; pp. 528–534.

- Hagenauer, G.; Muehlbacher, F.; Ivanova, M. ‘It’s where learning and teaching begins—Is this relationship’—Insights on the teacher-student relationship at university from the teachers’ perspective. High Educ. 2023, 85, 819–835.

- Bartolini, A.C.; Running, C.L.; Duan, X.; Ambrose, G.A. Integrated closed-loop learning analytics scheme in a first-year engineering course. Presented at the ASEE Annual Conference and Exposition, Online, 22–26 June 2020.

- Whitelock-Wainwright, A.; Gašević, D.; Tejeiro, R.; Tsai, Y.S.; Bennett, K. The student expectations of Learning analytics questionnaire. J. Comput. Assist. Learn. 2019, 35, 633–666.

- Rogers, E.M. Diffusion of Innovations, 5th ed.; Free Press: New York, NY, USA, 2003.

- Assaf, M.; Spil, T.; Bruinsma, G. Supporting teachers adopting game-based learning in formal education: A systematic literature review. Proc. Eur. Conf. Games-Based Learn. 2021, 2021, 33–42.

- Ramadhan, M.A.; Daryati, D. Online learning innovation at vocational schools in Indonesia during Covid-19 pandemic: A literatur review. AIP Conf. Proc. 2022, 2489, 030016.

- Hershkovitz, A.; Daniel, E.; Klein, Y.; Shacham, M. Technology integration in emergency remote teaching: Teachers’ self-efficacy and sense of success. Educ. Inf. Technol. 2023, 28, 12433–12464.

- Mckee, H. An instructor learning analytics implementation model. Online J. 2017, 21, 87–102.

- Wise, A.F.; Jung, Y. Teaching with analytics: Towards a situated model of instructional decision-making. J. Learn. Anal. 2019, 6, 53–69.

- Muljana, P.S.; Luo, T. Utilizing learning analytics in course design: Voices from instructional designers in higher education. J. Comput. High. Educ. 2021, 33, 206–234.

- Caporarello, L.; Cirulli, F.; Milani, M. Design of a learning analytics framework proposal in academic context. Ital. J. Educ. Res. 2019, 23, 43–55.

- Prinsloo, P.; Khalil, M.; Slade, S. Learning analytics as data ecology: A tentative proposal. J. Comput. High. Educ. 2023, 36, 154–182.

- Syed, M.; Duan, X.; Anggara, T.; Ambrose, G.A.; Lanski, A.; Chawla, N.V. Integrated closed-loop learning analytics scheme in a first year experience course. In Proceedings of the 9th International Conference on Learning Analytics & Knowledge, Tempe, AZ, USA, 4–8 March 2019; ACM International Conference Proceeding Series. ACM: New York, NY, USA, 2019; pp. 521–530.

Learners—Dimensions of Student Promotion

| Aspects of Student Success [30] | Our Research Framework Dimension |
|---|---|
| Academic achievement | Completion of course successfully |
| Engagement in educationally purposeful activities | Increasing engagement |
| Satisfaction | Increasing motivation |
| Acquisition of desired knowledge, skills, and competencies | Enhancing learning skills |
| Persistence | Completion of course successfully |
| Attainment of educational objectives | Completion of course successfully |

Data—Course Website Use

| LMS Use [31] | Our Research Framework Dimension |
|---|---|
| Placement of course materials online | Learning materials |
| Associating students with courses | Online participation |
| Tracking student performance | Assessment |
| Storing student submissions | Online participation |
| Mediating communication between the students and their instructor | Communication |

Intervention—Teacher Action

| HE Teachers’ Areas of Activity [32] | Our Research Framework Dimension |
|---|---|
| Design and planning | Change course structure |
| Teaching | Changing pedagogy |
| Assessment | Change assessment |
| Developing effective environments | Communication with students; Adding self-practice opportunities (inspired by [33]) |
| Integration of scholarship with teaching | Changing topics taught |
| Self-evaluation | N/A |

| Dimension / Item | Mean (SD) | Difference from Next Item (Z ᵃ) |
|---|---|---|
| Data—Course Website Use | | |
| Assessment | 3.5 (1.5) | 4.4 *** |
| Learning materials | 3.0 (1.5) | 3.8 *** |
| Communication | 2.6 (1.4) | 2.1 * |
| Online participation | 2.5 (1.3) | N/A |
| Learners—Dimensions of Student Promotion | | |
| Completion of course successfully | 3.2 (1.3) | 2.3 * |
| Increasing engagement | 3.0 (1.3) | 4.6 *** |
| Increasing motivation | 2.8 (1.2) | 2.3 * |
| Enhancing learning skills | 2.6 (1.2) | N/A |
| Intervention—Teacher Action | | |
| Communication with students | 3.3 (1.2) | 2.9 ** |
| Change topics taught | 3.1 (1.2) | 0.01, p = 0.995 |
| Changing pedagogy | 3.0 (1.2) | 2.3 * |
| Adding self-practice opportunities | 2.9 (1.3) | 1.9 * |
| Change course structure | 2.8 (1.2) | 2.7 ** |
| Change assessment | 2.6 (1.2) | N/A |
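Each row above reports a Z statistic for the difference between an item and the next-ranked item in the same dimension. The table does not name the test; assuming it is a Wilcoxon signed-rank test on each respondent's paired ratings (a common choice for ordinal Likert items), the comparison can be sketched as below. The ratings are hypothetical, and the sketch uses the plain normal approximation without the tie correction that statistical packages usually apply, so its Z values may differ slightly from theirs.

```python
import math
from statistics import mean


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def wilcoxon_z(x, y):
    """Normal-approximation Z of the Wilcoxon signed-rank test for paired ratings.

    Zero differences are dropped; no tie or continuity correction is applied.
    A positive Z means x tends to be rated higher than y.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0
    ranks = rank_with_ties([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w_plus - expected) / sd


def adjacent_differences(ratings):
    """Sort items by mean rating (highest first) and test each against the next."""
    items = sorted(ratings, key=lambda name: mean(ratings[name]), reverse=True)
    return [(a, b, wilcoxon_z(ratings[a], ratings[b])) for a, b in zip(items, items[1:])]


# Hypothetical 1-5 ratings from six respondents (not the study's data).
ratings = {
    "Communication": [2, 3, 2, 3, 2, 3],
    "Assessment": [5, 4, 5, 3, 4, 5],
    "Learning materials": [4, 3, 4, 3, 3, 4],
}
for higher, lower, z in adjacent_differences(ratings):
    print(f"{higher} vs {lower}: Z = {z:.2f}")
```

Testing only adjacent pairs, rather than all pairs, mirrors the "difference from next item" layout of the table; it answers whether each step in the ranking is meaningful.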

| Item | Factor 1 (Act Upon Data) | Factor 2 (Use of Data) | Factor 3 (Importance of Data) | Uniqueness |
|---|---|---|---|---|
| Act Upon Data—Structure | 0.903 | | | 0.303 |
| Act Upon Data—Practice | 0.870 | | | 0.325 |
| Act Upon Data—Pedagogy | 0.786 | | | 0.259 |
| Act Upon Data—Assessment | 0.669 | | | 0.498 |
| Act Upon Data—Topics | 0.620 | | | 0.486 |
| Use of Data—Motivation | | 0.952 | | 0.207 |
| Use of Data—Engagement | | 0.825 | | 0.193 |
| Use of Data—Skills | | 0.753 | | 0.428 |
| Use of Data—Completion | | 0.709 | | 0.400 |
| Importance of Data—Attendance | | | 0.898 | 0.317 |
| Importance of Data—Materials | | | 0.779 | 0.409 |
| Importance of Data—Communication | | | 0.680 | 0.408 |
| Importance of Data—Assessment | | | | 0.760 |
| Act Upon Data—Communication | | | | 0.561 |
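The regression tables use factor-based predictors (F_Importance of Data, F_Use of Data) alongside a single item (F_Importance_Assessment). A minimal sketch of how items could be grouped from the loadings above and turned into unit-weighted composite scores follows. The 0.40 salience cutoff, the short item keys, and the use of simple item means are all assumptions; the source does not say whether regression-based factor scores or item means were used. The two items with a single printed value are read here as having no salient loading, which is consistent with Importance of Data (Assessment) entering the regressions as a separate predictor.

```python
from statistics import mean

SALIENCE_CUTOFF = 0.40  # assumed threshold; the source does not state one

# Loadings transcribed from the factor table, keyed by shortened item names
# (hypothetical keys). The last two items show a single value in the table,
# read here as uniqueness rather than a loading (an assumption).
LOADINGS = {
    "Act: Structure": {"Act Upon Data": 0.903},
    "Act: Practice": {"Act Upon Data": 0.870},
    "Act: Pedagogy": {"Act Upon Data": 0.786},
    "Act: Assessment": {"Act Upon Data": 0.669},
    "Act: Topics": {"Act Upon Data": 0.620},
    "Use: Motivation": {"Use of Data": 0.952},
    "Use: Engagement": {"Use of Data": 0.825},
    "Use: Skills": {"Use of Data": 0.753},
    "Use: Completion": {"Use of Data": 0.709},
    "Importance: Attendance": {"Importance of Data": 0.898},
    "Importance: Materials": {"Importance of Data": 0.779},
    "Importance: Communication": {"Importance of Data": 0.680},
    "Importance: Assessment": {},
    "Act: Communication": {},
}


def assign_items(loadings, cutoff=SALIENCE_CUTOFF):
    """Group each item under its strongest factor if that loading reaches the cutoff.

    Items without a salient loading are returned separately so they can be
    analysed as single items.
    """
    factors, standalone = {}, []
    for item, row in loadings.items():
        if row and max(abs(v) for v in row.values()) >= cutoff:
            strongest = max(row, key=lambda f: abs(row[f]))
            factors.setdefault(strongest, []).append(item)
        else:
            standalone.append(item)
    return factors, standalone


def composite_scores(responses, factors):
    """Unit-weighted composite per factor: the mean of the respondent's item ratings."""
    return {f: mean(responses[item] for item in items) for f, items in factors.items()}


factors, standalone = assign_items(LOADINGS)
# One hypothetical respondent's 1-5 ratings (not the study's data).
respondent = {item: 3 for item in LOADINGS}
respondent.update({"Use: Motivation": 5, "Use: Engagement": 4})
print(standalone)
print(composite_scores(respondent, factors))
```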

| Model | Predictor | Unstandardized | Standard Error | Standardized ᵃ | t | p |
|---|---|---|---|---|---|---|
| H0 | (Intercept) | 2.820 | 0.279 | | 10.124 | <0.001 |
| | Teaching Experience | −0.009 | 0.007 | −0.100 | −1.415 | 0.159 |
| | Course Size | −0.001 | 8.204 × 10⁻⁴ | −0.114 | −1.788 | 0.075 |
| | Use of Moodle Teaching Tools | 0.065 | 0.067 | 0.063 | 0.973 | 0.332 |
| | Use of Moodle Reports | 0.220 | 0.065 | 0.219 | 3.357 | <0.001 |
| | Faculty Category (Soft) | −0.082 | 0.130 | | −0.631 | 0.528 |
| | Gender (Male) | −0.510 | 0.126 | | −4.050 | <0.001 |
| | Role (TA) | −0.361 | 0.156 | | −2.311 | 0.022 |
| | Course Level (Undergraduate) | 0.107 | 0.147 | | 0.724 | 0.470 |
| H1 | (Intercept) | 1.006 | 0.242 | | 4.155 | <0.001 |
| | F_Importance of Data | 0.209 | 0.051 | 0.249 | 4.109 | <0.001 |
| | F_Use of Data | 0.406 | 0.056 | 0.438 | 7.250 | <0.001 |
| | Teaching Experience | −0.002 | 0.005 | −0.021 | −0.388 | 0.698 |
| | Course Size | −6.715 × 10⁻⁴ | 6.086 × 10⁻⁴ | −0.052 | −1.103 | 0.271 |
| | Use of Moodle Teaching Tools | −0.018 | 0.050 | −0.017 | −0.347 | 0.729 |
| | Use of Moodle Reports | −0.013 | 0.051 | −0.013 | −0.248 | 0.804 |
| | F_Importance_Assessment | 0.100 | 0.035 | 0.150 | 2.827 | 0.005 |
| | Faculty Category (Soft) | 0.062 | 0.098 | | 0.635 | 0.526 |
| | Gender (Male) | −0.180 | 0.096 | | −1.879 | 0.062 |
| | Role (TA) | −0.198 | 0.116 | | −1.701 | 0.090 |
| | Course Level (Undergraduate) | 0.075 | 0.109 | | 0.683 | 0.496 |
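Two columns in the table above follow from the others. The t statistic is the unstandardized coefficient divided by its standard error, and a standardized coefficient rescales b by the ratio of the predictor's and the outcome's standard deviations (the conventional definition, assumed here because the table's footnote ᵃ is missing). As a worked check with Gender (Male) in H0:

```latex
t_j = \frac{b_j}{\mathrm{SE}(b_j)}, \qquad
\beta_j = b_j \, \frac{s_{x_j}}{s_y}

t_{\text{Gender (Male)}} = \frac{-0.510}{0.126} \approx -4.05
```

This matches the reported t = −4.050; small discrepancies in other rows are expected because b and SE are printed after rounding.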

| Model | Predictor | Unstandardized | Standard Error | Standardized ᵃ | t | p |
|---|---|---|---|---|---|---|
| H0 | (Intercept) | 3.103 | 0.337 | | 9.208 | <0.001 |
| | Teaching Experience | −0.013 | 0.008 | −0.117 | −1.639 | 0.103 |
| | Course Size | −0.003 | 9.923 × 10⁻⁴ | −0.174 | −2.712 | 0.007 |
| | Use of Moodle Teaching Tools | 0.067 | 0.081 | 0.054 | 0.835 | 0.405 |
| | Use of Moodle Reports | 0.235 | 0.079 | 0.195 | 2.973 | 0.003 |
| | Faculty Category (Soft) | −0.141 | 0.157 | | −0.897 | 0.371 |
| | Gender (Male) | −0.521 | 0.152 | | −3.423 | <0.001 |
| | Role (TA) | 0.091 | 0.189 | | 0.481 | 0.631 |
| | Course Level (Undergraduate) | 0.279 | 0.178 | | 1.567 | 0.118 |
| H1 | (Intercept) | 1.293 | 0.329 | | 3.925 | <0.001 |
| | F_Importance of Data | 0.263 | 0.069 | 0.261 | 3.806 | <0.001 |
| | F_Use of Data | 0.315 | 0.076 | 0.282 | 4.133 | <0.001 |
| | Teaching Experience | −0.006 | 0.007 | −0.052 | −0.860 | 0.391 |
| | Course Size | −0.002 | 8.280 × 10⁻⁴ | −0.126 | −2.367 | 0.019 |
| | Use of Moodle Teaching Tools | −0.017 | 0.069 | −0.014 | −0.254 | 0.800 |
| | Use of Moodle Reports | −0.004 | 0.069 | −0.004 | −0.062 | 0.951 |
| | F_Importance_Assessment | 0.144 | 0.048 | 0.179 | 2.989 | 0.003 |
| | Faculty Category (Soft) | −0.005 | 0.133 | | −0.037 | 0.970 |
| | Gender (Male) | −0.179 | 0.130 | | −1.375 | 0.170 |
| | Role (TA) | 0.270 | 0.158 | | 1.708 | 0.089 |
| | Course Level (Undergraduate) | 0.224 | 0.149 | | 1.509 | 0.133 |
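Both regression tables compare a baseline model H0 (instructor and course characteristics) with a model H1 that adds the perception factors. A sketch of that nested comparison, using ordinary least squares via NumPy and an F test on the R² change, is shown below. The data, the number of controls, and the effect sizes are synthetic and chosen only for illustration; the tables report no R² values, so none are reproduced here.

```python
import numpy as np


def ols_r2(X, y):
    """Fit OLS with an intercept column; return (coefficients, R^2)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = residuals @ residuals
    ss_tot = ((y - y.mean()) ** 2).sum()
    return coef, 1 - ss_res / ss_tot


def r2_change_test(X0, X1, y):
    """F test for the R^2 gained by moving from nested model H0 (X0) to H1 (X1)."""
    n = len(y)
    _, r2_h0 = ols_r2(X0, y)
    _, r2_h1 = ols_r2(X1, y)
    df_num = X1.shape[1] - X0.shape[1]  # number of predictors added in H1
    df_den = n - X1.shape[1] - 1        # residual df of H1 (minus intercept)
    f_stat = ((r2_h1 - r2_h0) / df_num) / ((1 - r2_h1) / df_den)
    return r2_h0, r2_h1, f_stat, (df_num, df_den)


# Synthetic example (not the study's data): three control variables in H0,
# two perception scores added in H1.
rng = np.random.default_rng(0)
n = 200
controls = rng.normal(size=(n, 3))
perceptions = rng.normal(size=(n, 2))
y = 0.2 * controls[:, 0] + 0.5 * perceptions[:, 0] + 0.4 * perceptions[:, 1] + rng.normal(size=n)

r2_h0, r2_h1, f_stat, dfs = r2_change_test(controls, np.column_stack([controls, perceptions]), y)
print(f"R2(H0) = {r2_h0:.3f}, R2(H1) = {r2_h1:.3f}, F{dfs} = {f_stat:.1f}")
```

Because H1 contains every H0 predictor, its R² can never be lower; the F test asks whether the gain is larger than adding unrelated predictors would produce.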

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hershkovitz, A.; Ambrose, G.A.; Soffer, T. Instructors’ Perceptions of the Use of Learning Analytics for Data-Driven Decision Making. Educ. Sci. 2024, 14, 1180. https://doi.org/10.3390/educsci14111180
