Abstract

Recent studies highlight the potential of modern board games (MBGs) to foster computational thinking (CT) skills in students. This research explored the impact of integrating MBGs into a primary education classroom with 20 fourth-grade students from a school in Portugal, using an embedded concurrent mixed-methods approach: a pre-experimental design in the quantitative strand and content analysis in the qualitative strand. The students participated in 10 game sessions, each lasting 50 min, and their CT skills were assessed using Bebras tasks in a pre-test and a post-test. Statistical analysis, including the Shapiro–Wilk test for normality and a paired-samples t-test, revealed a significant improvement in overall CT scores, with the largest gains in abstraction, algorithmic thinking, and decomposition. Descriptive statistics were also calculated, and a content analysis of field notes conducted in NVivo corroborated the quantitative data. The results suggest that MBGs can serve as a valuable educational tool for developing CT skills in young learners. Beyond highlighting the effectiveness of MBGs, this study emphasises the need for further research using more robust experimental designs to enhance CT development in educational settings.

1. Introduction

In 2006, Wing [1] conceptualised the term ‘computational thinking’, arguing that it represents a set of skills and attitudes that can be applied universally and that are essential for any human being, regardless of their connection to computer science. In her view, CT involves problem solving, designing systems, and understanding human behaviour by drawing on the fundamental principles of computer science. However, Wing also pointed out that ‘technology and computers accelerate the spread of CT’ (p. 33), which may have contributed to the gradual growth of studies linking CT to computer science. A preconceived idea has even emerged that programming is a requirement for developing CT [2] or that CT develops only in technological and digital contexts [3]. Yet problem solving through CT, although it requires creating solutions and processing data, does not depend on the use of digital technologies [4]. In other words, CT is a fundamentally intellectual and creative skill that, through simplified representations of reality, supports problem solving, the design of systems, and a greater understanding of human behaviour, and its solutions can be implemented by humans or by machines [1,5,6]. Researchers exploring CT development in non-digital environments argue that this skill can be developed in a wide range of contexts, just like literacy or numeracy [7]. Limiting CT to the use of digital media, or equating it with thinking like a computer, can hinder understanding of its procedural nature [8]. Unplugged activities, which allow CT skills to be developed without digital technology, are one example of this broader view of the concept [9]. Bell and Vahrenhold [10] defined these unplugged activities as ‘a vast collection of activities and ideas used to engage a variety of audiences with big ideas about Computer Science, without having to learn programming or even use a digital device’ (p. 497). 
Among the many characteristics of unplugged activities, Nishida [11] highlighted that an unplugged activity is a playful, interesting, and motivating approach, since its exploration is mostly kinaesthetic, requiring the manipulation of varied physical objects, such as cards, stickers, papers, pens, markers, chalk, boards, strings, dice, etc. Interestingly, in the last decade, researchers have broadened the study of CT by using board games as an unplugged activity, as these games seem to provide spaces for the development of this cognitive skill outside the digital realm [7,12,13,14,15].

While previous studies have focused on digital and video games [16,17] and have also explored analogue games [18,19], a new wave of modern board games (MBGs) with innovative designs and mechanics has emerged as a promising resource for developing skills such as critical thinking, problem solving, communication, teamwork, and CT [7,12,13,15,20]. According to Rosa et al. [21], these games require players to engage in a continuous process of choices and decisions based on the game system. This feature has attracted the interest of the scientific community because of its potential for diverse learning outcomes [22,23,24,25]. These contemporary games offer a learning environment that differs from others, especially digital and video games, by placing the responsibility for understanding and executing the rules squarely on the players and promoting the development of various cognitive skills [26]. The works of Berland and Lee [12] and Berland and Duncan [13], key references in this field, highlight strategic and contemporary board games as a promising approach to CT development, with students demonstrating CT and reasoning during play. Beyond these authors, a recent literature review also stresses the need for further studies evaluating the effect of MBGs on CT development [27]. The originality and importance of this study lie in its focus on MBGs in primary education as an innovative, unplugged tool for fostering CT skills, an area that remains underexplored. Although many countries aim to integrate CT into their elementary school curricula, most research has focused on digital tools [28,29]. Our research contributes new empirical data by using game sessions with MBGs as an analogue experience to promote CT development. 
This unplugged approach aims to provide valuable insights into the potential of MBGs as pedagogical tools for teaching CT.

The research problem of our study was formulated through the following question: What is the impact of using modern board games on the development of computational thinking in elementary school students?

To guide our study, we divided the problem into the following research questions:

(RQ1) What is the effect of using MBGs on the CT skills of fourth-grade elementary school students?

(RQ2) How do the mechanics of MBGs influence the development of CT?

This article is structured as follows: we begin with the Introduction, which briefly presents the concepts of CT and unplugged activities as well as their synergistic relationship, the rise of modern board games and some of their characteristics, and a concise analysis of the current educational context in Portugal, which integrates CT into the learning of primary school students. Section 2 outlines the materials and methods, with a special focus on the embedded concurrent mixed-methods design used in our research. In Section 3, we present and analyse the empirical results obtained. Section 4 discusses these results in relation to the research questions. Finally, Section 5 presents the conclusions of this study.

1.1. Computational Thinking and Unplugged Activities

The growing interest in incorporating CT into schools stems from its potential contribution to student learning, particularly in problem solving, critical thinking, creativity, and collaboration [30]. CT is defined as a set of skills that allows individuals to formulate and solve problems efficiently and creatively using concepts and techniques from computer science [1]. Specifically, this study adopts Papert’s perspective [31], which defines CT as ‘procedural thinking’ and emphasises the active participation of students who, when reflecting on programming, describe the necessary steps to solve a problem.

According to the recent report ‘Reviewing Computational Thinking in Compulsory Education’ [30], 25 European countries already include initiatives to introduce programming and develop CT in their primary school curricula. Among other motivations, the report highlights the importance of fostering this skill as a ‘cognitive pillar’ for the future.

Consistent with the current literature, one way to develop CT is through ‘unplugged’ activities. Bell et al. [9], who originally defined these activities, describe them as successful strategies for developing CT without the need for any digital device. Nishida et al. [11] refer to ‘unplugged’ activities as playful, kinaesthetic, modular, and interactive games or challenges that enable peer collaboration and communication. MBGs fall within this spectrum.

Much like computer programs, especially video games, simulators, or automation systems, board games can be understood as closed systems represented in an organised and coherent manner through rules. Several authors have studied CT through such closed, rule-based systems [12,13].

This study explores whether MBGs, as ‘unplugged’ and entirely analogue activities, can provide a context for the initial development of CT in children. The results could contribute to understanding CT and developing new pedagogical approaches in primary education. In the next section, we explore the concept of MBGs and define some of its characteristics.

1.2. Modern Board Games

Board games have never been more successful than they are today [32]. In recent years, they have seen a substantial increase in popularity, supported by sales figures in what is already considered the ‘Golden Age’ of board games [33]. Factors such as the emergence of ‘geek’ culture, greater demand for activities with direct social interaction, and the COVID-19 pandemic, which gradually increased demand for new forms of entertainment, have contributed significantly to this growth [26].

According to Sousa and Bernardo [34], board games can be categorised into three types: (1) traditional and classic games, (2) mass-market games, and (3) hobby games. Based on the typological characteristics of each type, the authors consider a board game to be modern when it is a commercial product created in the last five decades; has original mechanics and themes, high-quality components, and one or more clearly identified authors; and was designed for a specific target audience. The growing popularity of modern board games has also attracted the interest of the educational community. However, it is their mechanics and mechanisms that make MBGs distinctive resources: they make a board game more interactive, creating systems with balanced rules and motivating challenges [35]. According to Sousa and Dias [26], exploring the elements that make up a game reveals the importance of mechanics and mechanisms.

Following these authors, we consider that games contain mechanisms, which generate their mechanics and dynamics. The mechanics, seen at a ‘macro’ level, correspond to the attributes of the game’s components and the relationships between them. The player, in turn, plays a fundamental role in activating these mechanisms, learning the rules, and gradually exploring the different layers of the game [32,36]. Although the use of digital and video games has increased in recent years, analogue games stand out as unique offline experiences, prioritising tangibility, the exploration of different game systems, constant communication and interaction, and collaboration [21,27,37]. They have long been used in various educational contexts, and some of them are preferred by teachers and educators [19]. Their considerable potential has led teachers and educators to explore these resources through new learning methodologies, such as ‘Gamification’, ‘Game-Based Learning’, and ‘Serious Games’ [37].

Similarly, researchers have also empirically studied the use of these resources in the classroom, since their renewed designs and distinctive mechanics turn them into educational tools with significant potential [12,13,38]. Even so, few studies have specifically investigated the use of MBGs as pedagogical tools for the development of CT in primary school contexts. The ‘unplugged’ nature of MBGs distances them from digital approaches, while the particularities of their mechanics differ from other types of board games, as well as from other types of unplugged activities, requiring students to internalize and apply rules without digital support, suggesting an open space for the development of CT [12,26,39]. The use of MBGs in the classroom, however, needs to be understood within the specific context of Basic Education in Portugal, where initiatives related to the development of CT have already begun to take shape. Below, we discuss the national educational context and the relevant curriculum to frame this research.

1.3. National Educational Context and Relevant Curriculum

The Portuguese educational system is structured into three main levels, as established by the Lei de Bases do Sistema Educativo (Law of the Educational System Framework), approved by Lei n.º 46/86, of October 14 [40]. These levels include Pre-School Education, Basic Education, and Secondary Education. Pre-School Education is optional, encompassing children from 3 to 6 years of age. Following this, Basic Education is compulsory, universal, and free, lasting a total of nine years, and organised into three sequential cycles each designed to complete and deepen the previous one, ensuring a global perspective.

The first cycle of Basic Education corresponds to the first four years of schooling (first to fourth grade). The second cycle covers the following two years (fifth and sixth grades), while the third cycle spans three years (seventh to ninth grade) [40]. Together, the first and second cycles constitute what is referred to as primary education in other educational systems [41]. In the context of this study, the participating students are part of primary education. The organisation and management of the primary education curriculum aim to provide all citizens with a fundamental, general, and common education, promoting the acquisition of essential knowledge and skills for further studies.

The integration of Information and Communication Technologies (ICT) into the primary education curriculum in Portugal was initially implemented through the inclusion of ICT as a subject. Additionally, ICT is incorporated transversally into other subjects, such as mathematics [42]. Approximately 40% of educational institutions complement the ICT subject with optional educational robotics clubs, accessible to any interested student. In these clubs, students develop various programming skills and engage in robotics projects linked to curricular themes and content [40].

In the 2022/2023 academic year, a restructured version of the curriculum document for the teaching of mathematics, called ‘Novas Aprendizagens Essenciais’ (New Essential Learning), came into effect. This updated version presents new guidelines for all primary education teachers and defines six transversal competencies in mathematics, with particular emphasis on CT. The inclusion of CT in the mathematics curriculum encompasses dimensions such as problem solving, decomposition, debugging, pattern recognition, algorithms, programming, and robotics [30].

Currently, some projects in Portugal stand out in promoting the development of competencies in the domains of CT (e.g., MatemaTIC Pilot Project, Programming and Robotics in Schools 2022 Initiative, UBBU, Bebras, Scratch-Day, Hour of Code, etc.).

However, although these projects reflect the Portuguese education system’s commitment to integrating CT into the school curriculum and its recognition of the importance of digital skills for students’ academic and professional development, there are no significant initiatives exploring the potential of MBGs in this regard. MBGs, which involve complex strategies, problem solving, and the application of rules without digital support, offer a valuable opportunity for developing CT. The results of this study can inform future educational practices and contribute to the development of CT through a valuable unplugged resource: the MBG.

2. Materials and Methods

This research used an embedded concurrent mixed-methods approach, with a pre-experimental design [43] in its quantitative strand and content analysis in its qualitative strand. A pre-experimental design is used when the researcher cannot resort to a quasi-experimental design or when the study is a precursor to a true experiment, as in a pilot test [44]. Pre-experimental designs are therefore mostly carried out as a first step to gather evidence for or against an intervention that is to be applied later. We used the most common pre-experimental design: a single experimental group with a pre-test and a post-test [43,44]. The experimental group was an intact fourth-grade class from an elementary school in Odivelas, Lisbon, taught by a teacher who also took on the role of researcher. The pre-experimental design served as a pilot for a quasi-experimental study to be conducted by the researcher in the future. It made it possible to examine the relationship between the use of MBGs (independent variable) and the students’ performance on the Bebras CT skills transfer test (dependent variable) (RQ1) [45,46,47], although not to establish causality. This type of design is more exposed to sources of error: even when the dependent variable changes, without a control group it is not possible to attribute that change with certainty to the treatment. To support the internal validity of the research, we sought homogeneity among the participants, who were all between 8 and 9 years old, had similar levels of knowledge, and had no previous experience with modern board games. In addition, the same experimental procedure was applied in all the game sessions. 
Internationally recognised assessment instruments were also used, applied in many countries as part of various investigations [48,49].

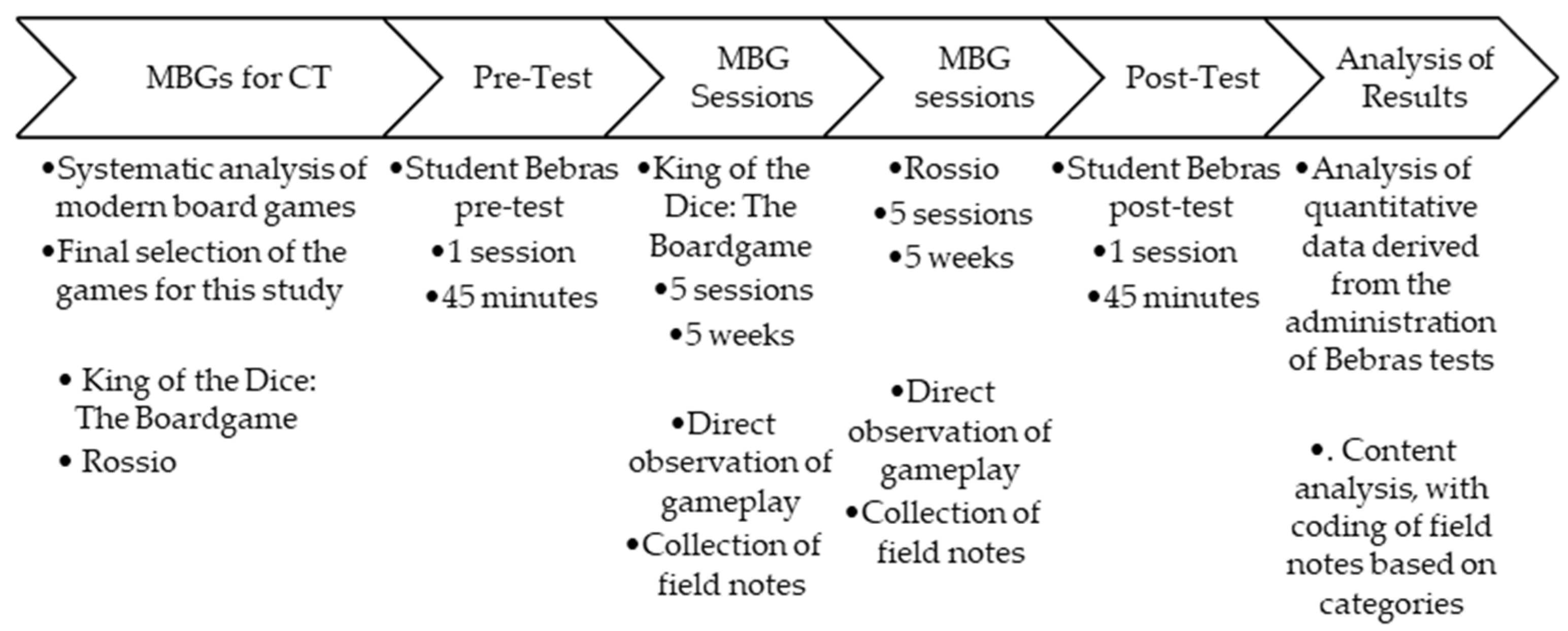

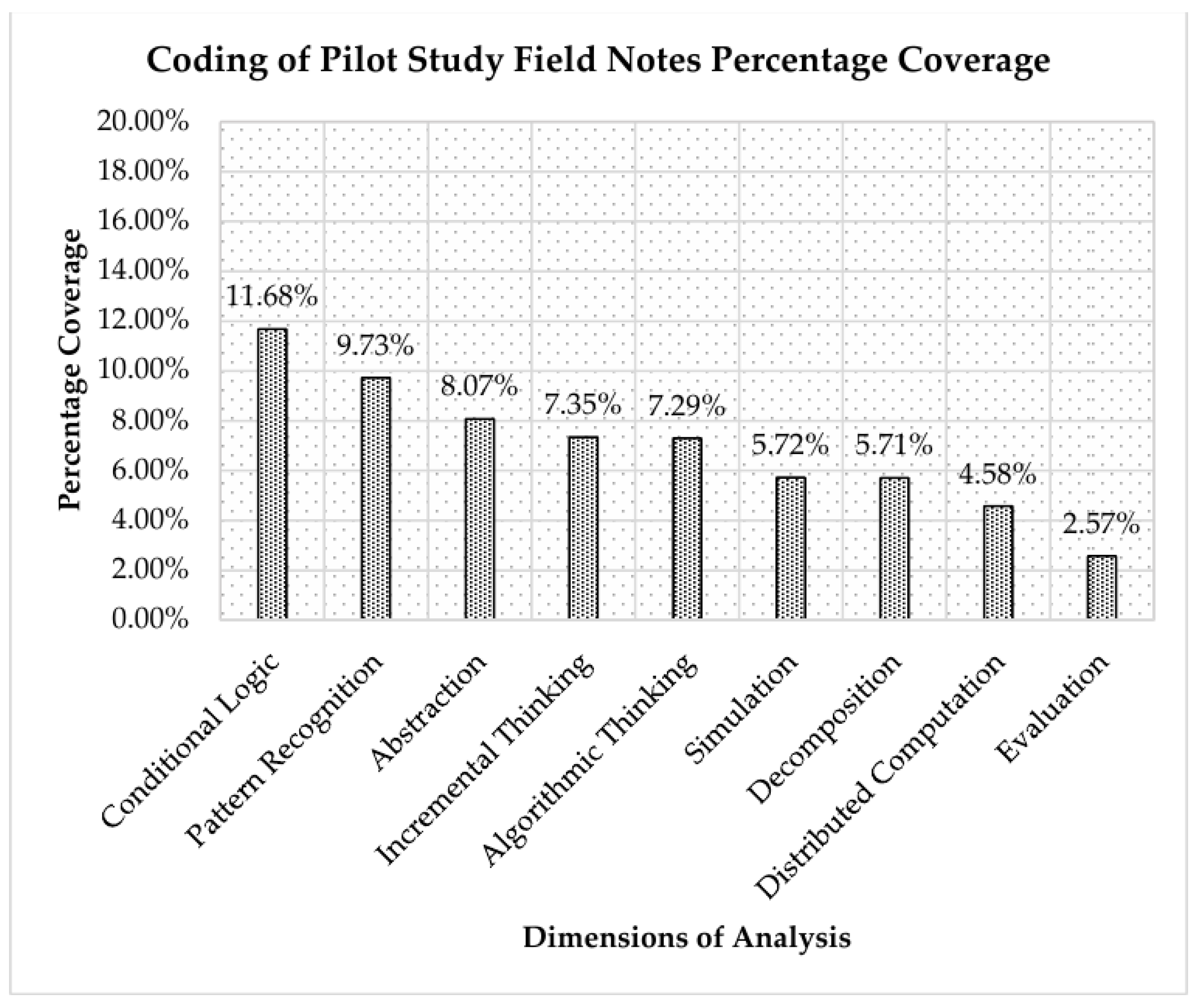

In addition, qualitative data were collected through direct observation of the participants and field notes taken during the game sessions in which the students explored MBGs, in order to understand how the mechanics of these games open up space for the development of CT (RQ2). Content analysis [50] made it possible to identify and categorise the CT strategies applied by the students while exploring the games, based on previous studies [12,13,45]. This qualitative strand lends greater validity to the pre-experimental design, as it provides deeper insight into the processes involved and mitigates the typical limitations of this type of intervention [43]. The methodological design followed the steps described in Figure 1.

Figure 1.

Study design overview.

2.1. Selection of Modern Board Games

2.1.1. Systematic Analysis of Modern Board Games

We started this study by identifying Portuguese and international board game publishers with a presence in the Portuguese market, as well as publishers with educational projects related to board games. Based on previous studies, we identified game mechanics and defined characteristics of MBGs that promote the development of CT. In October 2022, we consulted the BoardGameGeek (BGG) database to identify MBGs that included the suggested mechanics. The search returned 824 games, from which we selected two for this study using inclusion/exclusion criteria [38,51].
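In computational terms, the screening step above (824 candidates reduced to two) is a filter over game records. The sketch below illustrates that logic only; the records, the set of target mechanics, and the thresholds (student age, player count, availability in Portugal) are hypothetical and are not the study’s actual inclusion/exclusion criteria, which are those described in [38,51]. No BoardGameGeek API is used or implied.

```python
from dataclasses import dataclass

# Hypothetical set of CT-related mechanics used as a screening criterion.
TARGET_MECHANICS = frozenset(
    {"Dice Rolling", "Area Majority / Influence", "Tile Placement",
     "Pattern Building", "Hand Management"}
)


@dataclass(frozen=True)
class Game:
    name: str
    mechanics: frozenset
    min_age: int
    max_players: int
    sold_in_portugal: bool


def meets_criteria(game: Game, student_age: int = 9, min_overlap: int = 2) -> bool:
    """Hypothetical inclusion rule: suitable age, at least four players,
    available locally, and at least `min_overlap` target mechanics."""
    overlap = len(game.mechanics & TARGET_MECHANICS)
    return (
        game.sold_in_portugal
        and game.min_age <= student_age
        and game.max_players >= 4
        and overlap >= min_overlap
    )


def screen(candidates: list, **criteria) -> list:
    """Return the names of the candidates that pass every criterion."""
    return [g.name for g in candidates if meets_criteria(g, **criteria)]


candidates = [
    Game("Game A", frozenset({"Dice Rolling", "Tile Placement"}), 8, 4, True),
    Game("Game B", frozenset({"Dice Rolling"}), 8, 4, True),  # too few target mechanics
    Game("Game C", frozenset({"Pattern Building", "Hand Management"}), 12, 4, True),  # age 12+
]
print(screen(candidates))  # ['Game A']
```

Keeping each criterion as a separate boolean condition makes it easy to report how many candidates each criterion excluded, which is useful when documenting a screening procedure.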

2.1.2. Selection of the Modern Board Games for This Study

Previous studies have shown that MBGs can promote the development of CT during discursive interaction between players [7,12,13]. We identified 12 board game mechanics that favour the development of CT, which were related to the framework of Englestein and Shalev [36], authors who refer to mechanics as game ‘mechanisms’. These mechanics were grouped into thematic categories, each of which has variations. They support players’ actions, allowing them to understand the game system and the space for learning. The selected games were analysed using the CTLM-TM model to guide the development of CT through game mechanics [38]. Figure 2 and Figure 3 show the two modern board games used in this study.

Figure 2.

Modern board game (MBG) used—King of the Dice: The Board Game.

Figure 3.

Modern board game (MBG) used—Rossio.

- King of the Dice: The Board Game.

The King of the Dice board game is an MBG published by the German publisher ‘Haba’, in which 2 to 4 players, aged 8 and up, compete for territorial dominance in a fantasy world. The game uses the ‘Dice Rolling’ mechanic to acquire cards and thus expand territories by placing new tiles, acquiring gems, or reconquering castles attacked by dragons. The ‘Dice Rolling’ mechanic seems to support skills similar to those involved in computational data analysis, since the information shown on the physical dice is analysed and evaluated against certain conditions. The ‘Majority/Area Influence’ (Area Control) mechanic promotes algorithmic and heuristic thinking, as players evaluate their actions and decide which areas to control by placing castle pieces. Finally, through its ‘Pattern Recognition’ variant, the ‘Tile Placement’ mechanic requires certain conditions to be met with sequences of colours or numbers, increasing the probability of success.
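The parallel between reading dice and evaluating data against conditions can be made concrete with a toy model. The card requirements below are hypothetical and are not the published King of the Dice cards; the point is only that checking a roll against each requirement is a set of conditional tests over data.

```python
from collections import Counter
from typing import Callable

Roll = list[int]  # five six-sided dice, faces 1..6


def face_counts(roll: Roll) -> list[int]:
    """How many times each distinct face appears, in ascending order."""
    return sorted(Counter(roll).values())


# Hypothetical card requirements: each one is a condition (predicate) on the roll.
CARD_CONDITIONS: dict[str, Callable[[Roll], bool]] = {
    "three of a kind": lambda r: face_counts(r)[-1] >= 3,
    "full house": lambda r: face_counts(r) == [2, 3],
    "straight": lambda r: set(r) in ({1, 2, 3, 4, 5}, {2, 3, 4, 5, 6}),
    "low sum": lambda r: sum(r) <= 12,
}


def claimable_cards(roll: Roll) -> list[str]:
    """Evaluate every condition against the roll and list the cards it satisfies."""
    return sorted(name for name, condition in CARD_CONDITIONS.items() if condition(roll))


print(claimable_cards([3, 3, 3, 5, 5]))  # ['full house', 'three of a kind']
print(claimable_cards([1, 2, 3, 4, 5]))  # ['straight']
```

Representing each requirement as a separate predicate mirrors what a player does at the table: the same roll is inspected several times, once per condition.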

- Rossio.

Rossio is an MBG by the Portuguese publisher Phytagoras, in which 2 to 4 players, aged 8 and over, take on the role of pavers paving Rossio Square in Lisbon in the 19th century. The game ends when the last paving stone is laid, and the winner is the player who has best managed their pattern cards through the ‘Hand Management’ mechanic. The main mechanic, however, is ‘Pattern Building’, which creates space for abstraction by requiring players to identify and build specific patterns. The strategic positioning of the pattern tiles also suggests strong support for algorithmic and incremental thinking. Players mentally simulate the best strategy for placing their pattern tiles, expanding and improving the paved area of the board. The gameplay requires players to devise tactics for tile placement, evaluate decisions, and reformulate their thinking whenever their ability to abstract allows them to identify a more advantageous move. Decisions about a tile’s shape, colour, or position are analogous to control structures in programming, and these decisions become more complex as the game progresses.
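The analogy with control structures can be illustrated with a toy model of a tile-placement decision. Everything in it is hypothetical and simplified, not Rossio’s published rules: the board is a dictionary of paved cells, the ‘pattern card’ maps target cells to required colours, and a tile goes to the first free target cell that needs its colour. The loop plays the role of iteration over candidate positions, and the `if` tests play the role of selection.

```python
from typing import Optional

Cell = tuple[int, int]  # (row, column) on a simplified, hypothetical board


def choose_cell(board: dict, tile_colour: str, pattern: dict) -> Optional[Cell]:
    """Return the first free cell of the pattern that needs this tile's colour."""
    for cell, required_colour in pattern.items():  # iteration over candidate cells
        if cell in board:                           # selection: already paved, skip it
            continue
        if required_colour == tile_colour:          # selection: colour fits the pattern
            return cell
    return None                                     # no useful placement for this tile


def pattern_complete(board: dict, pattern: dict) -> bool:
    """The pattern is complete when every target cell holds the required colour."""
    return all(board.get(cell) == colour for cell, colour in pattern.items())


# A hypothetical 2x2 chequered pattern card, with one stone already laid.
pattern = {(0, 0): "white", (0, 1): "black", (1, 0): "black", (1, 1): "white"}
board = {(0, 0): "white"}

for tile in ["black", "white", "black"]:  # sequence: tiles drawn in turn
    cell = choose_cell(board, tile, pattern)
    if cell is not None:
        board[cell] = tile

print(pattern_complete(board, pattern))  # True
```

The three basic control structures (sequence, selection, iteration) all appear in this small decision, which is the sense in which the comparison with programming is drawn.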

2.2. Participants and Data Collection

The school where the investigation was conducted is part of a cluster of schools overseen by the Ministry of Education of Portugal. It is notable for the many nationalities and the significant linguistic diversity of its students, reflecting the large immigrant community that lives and works within the school’s catchment area. In this context, 20 students took part in this study, 11 boys and 9 girls, forming a single experimental group in a pre-experimental design. The participants were fourth-grade students aged 9 years, in a class that had been formed at the start of the school year, as is common in many socio-educational contexts [43]. The study was implemented in the classroom, with game sessions integrated into the ‘Complementary Offer’ subject. Each game session lasted 45 min and was scheduled within the school timetable, allowing students to participate naturally in the study. The classroom was equipped with tables and chairs suitable for the game sessions. As the researcher was also the class teacher, they observed and monitored the students’ interactions during the game sessions, fulfilling a dual role [52]. Ethical guidelines, institutional protocols, and informed consent procedures were applied to ensure data protection and the well-being of all participants. This study was approved by the Ethics Committee of the Institute of Education of the University of Lisbon, and informed consent was obtained from the students’ parents.

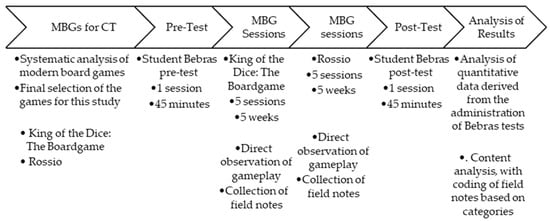

A pre-experimental pre-test and post-test design was used to assess the impact of the intervention on the students (see Figure 1). Because it does not require prior knowledge of CT, the assessment tool selected was the Bebras CT skills transfer test [45]. Widely recognised in the scientific community, Bebras tests are organised by age group and consist of multiple-choice questions that assess students’ ability to apply CT skills to various everyday problems. For this study, Bebras tasks from the Portuguese competition were selected because materials suitable for students in the 3rd and 4th years of primary school were readily accessible. The two tests had a comparable structure, with 12 questions divided into three levels of difficulty (easy, medium, and difficult), so that the results of the two administrations were comparable while the students did not take the same test twice [53]. These challenges, designed to stimulate students’ problem-solving skills, are aligned with the classification guide by Dagiene, Sentance, and Stupuriene [45]: (CT1) abstraction, (CT2) algorithmic thinking, (CT3) decomposition, (CT4) evaluation, and (CT5) generalisation.

Table 1 presents the relationship, adapted by the researcher from the Bebras Solution Guides [54], between the Bebras tasks and these CT competencies (one or more).

Table 1.

Relationship between the Bebras items and CT skills in the pre-test and post-test. Where the skill was observed, it was marked with ‘+’, while where there are elements of the skill but in an ambiguous fashion, it was marked with ‘~’ [45,54].

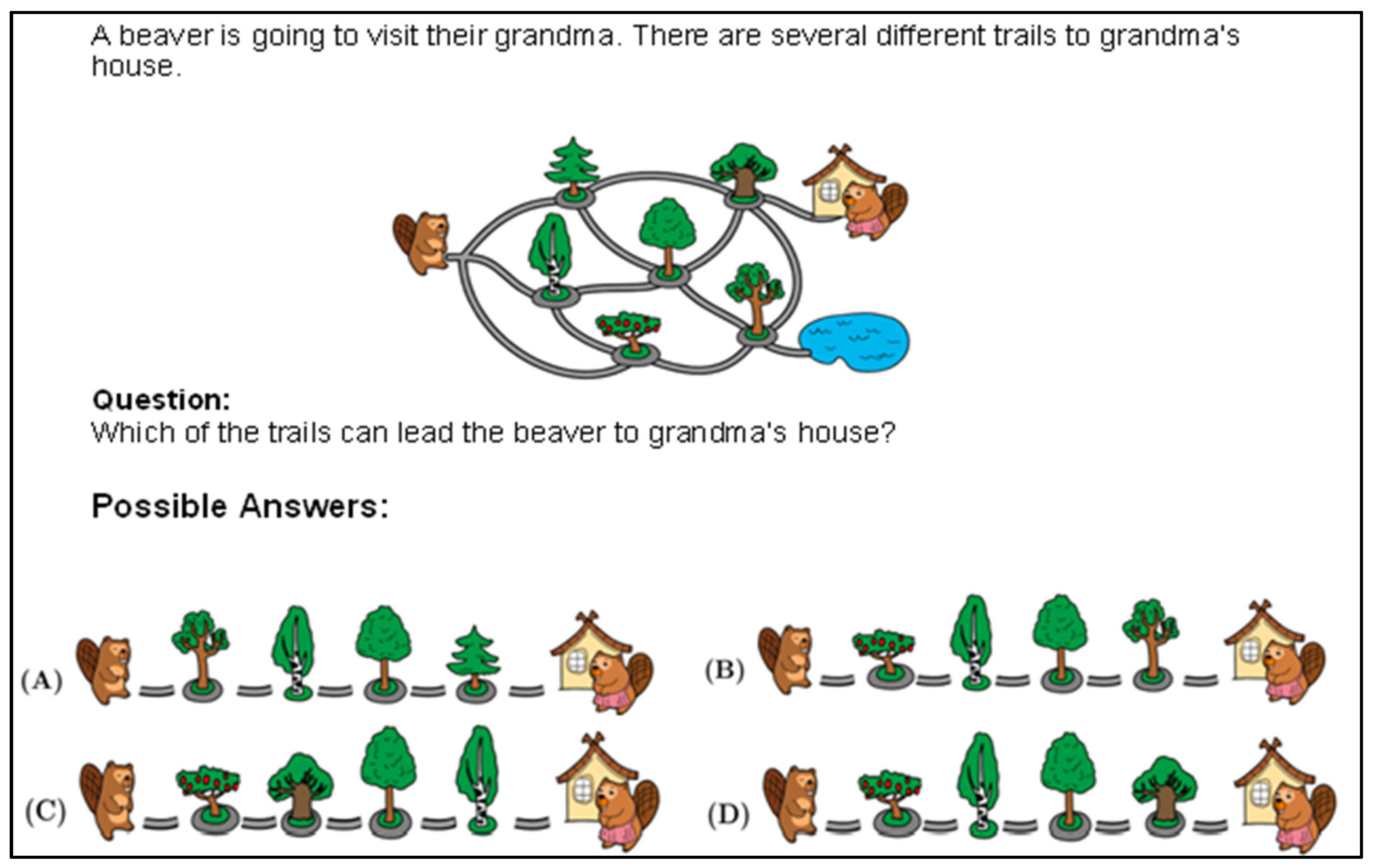

Points were assigned according to the difficulty level of each Bebras question, increasing from easy to difficult (see Table 2). Figure 4 illustrates one of the items from the Bebras test used.

Table 2.

The scores assigned to the questions in the Bebras tests. Own creation.

Figure 4.

Adapted item from the CT Skills Transfer Test (Bebras) [37].

2.2.1. Statistical Analysis

The Shapiro–Wilk test was used to assess the normality of the distributions because it is well suited to small samples such as ours [55]. This check is essential because parametric tests such as the t-test assume normally distributed data (for the paired t-test, normally distributed differences). Once this assumption was confirmed, we applied the paired-samples t-test to determine whether the difference between pre-test and post-test scores was statistically significant. We also calculated descriptive measures, such as means and standard deviations, to obtain an overview of the data. All analyses were carried out in Jamovi [56].
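The core computation of the paired t-test can be written in a few lines of standard-library Python. The sketch below uses hypothetical scores, not the study’s data, and computes the t statistic, the degrees of freedom, and Cohen’s d_z (mean difference divided by the standard deviation of the differences); treating d_z as the effect size of interest is our assumption. It does not compute p-values or the Shapiro–Wilk test, which need the relevant distributions; in SciPy these are available as `scipy.stats.ttest_rel` and `scipy.stats.shapiro`.

```python
import math
import statistics


def paired_t(pre: list, post: list) -> dict:
    """Paired-samples t statistic and Cohen's d_z for pre/post scores of the same students."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]  # post minus pre, per student
    n = len(diffs)
    mean_d = statistics.fmean(diffs)
    sd_d = statistics.stdev(diffs)              # sample SD (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("differences have zero variance")
    t = mean_d / (sd_d / math.sqrt(n))          # mean difference over its standard error
    return {"n": n, "df": n - 1, "mean_diff": mean_d, "sd_diff": sd_d,
            "t": t, "d_z": mean_d / sd_d}       # note: d_z == t / sqrt(n)


# Hypothetical scores for four students (not the study's data).
result = paired_t(pre=[10, 12, 14, 16], post=[13, 14, 18, 19])
print(result["df"], round(result["t"], 3), round(result["d_z"], 3))  # 3 7.348 3.674
```

Working from the per-student differences is what distinguishes the paired test from an independent-samples test: each student serves as their own control.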

2.2.2. Content Analysis

Given the exploratory nature of this study, qualitative data were also collected through direct participant observation and the recording of field notes [44]. Within participant observation, this study adopted moderate participation [52], in which the researcher alternated between the positions of ‘insider’ and ‘outsider’. Field notes are a written account of what the researcher saw and experienced, together with the researcher’s reflections on those records [57]. The notes were transcribed and organised into dedicated forms using a word processor. Content analysis was then carried out, allowing the researcher to make interpretative inferences from the content once it had been broken down into ‘categories’ [50], so that it could be explained and understood. The coding was conducted collaboratively by both authors: the first author coded the data, and the second author independently reviewed the coding. Differences in interpretation were discussed until consensus was reached, supporting the reliability of the coding. The categories were defined before the fieldwork and grouped into dimensions of analysis directly related to CT. The field notes were imported into NVivo and coded according to the dimensions of analysis in Table 3; NVivo supported the clear organisation and systematic coding of the data, strengthening the rigour of the analysis. The categories in Table 3 followed the methodological procedures of Bardin [50] and the theoretical framework of Berland and Lee [12] and Berland and Duncan [13]. The field notes also served as reflective tools, capturing insights and observations critical to interpreting the data and thus enhancing the transparency and validity of the findings.

Table 3.

Summary of categories (CAT) and respective dimensions of analysis (DIM) with description (DES), accompanied by a rationale from the literature (RAT).

3. Results

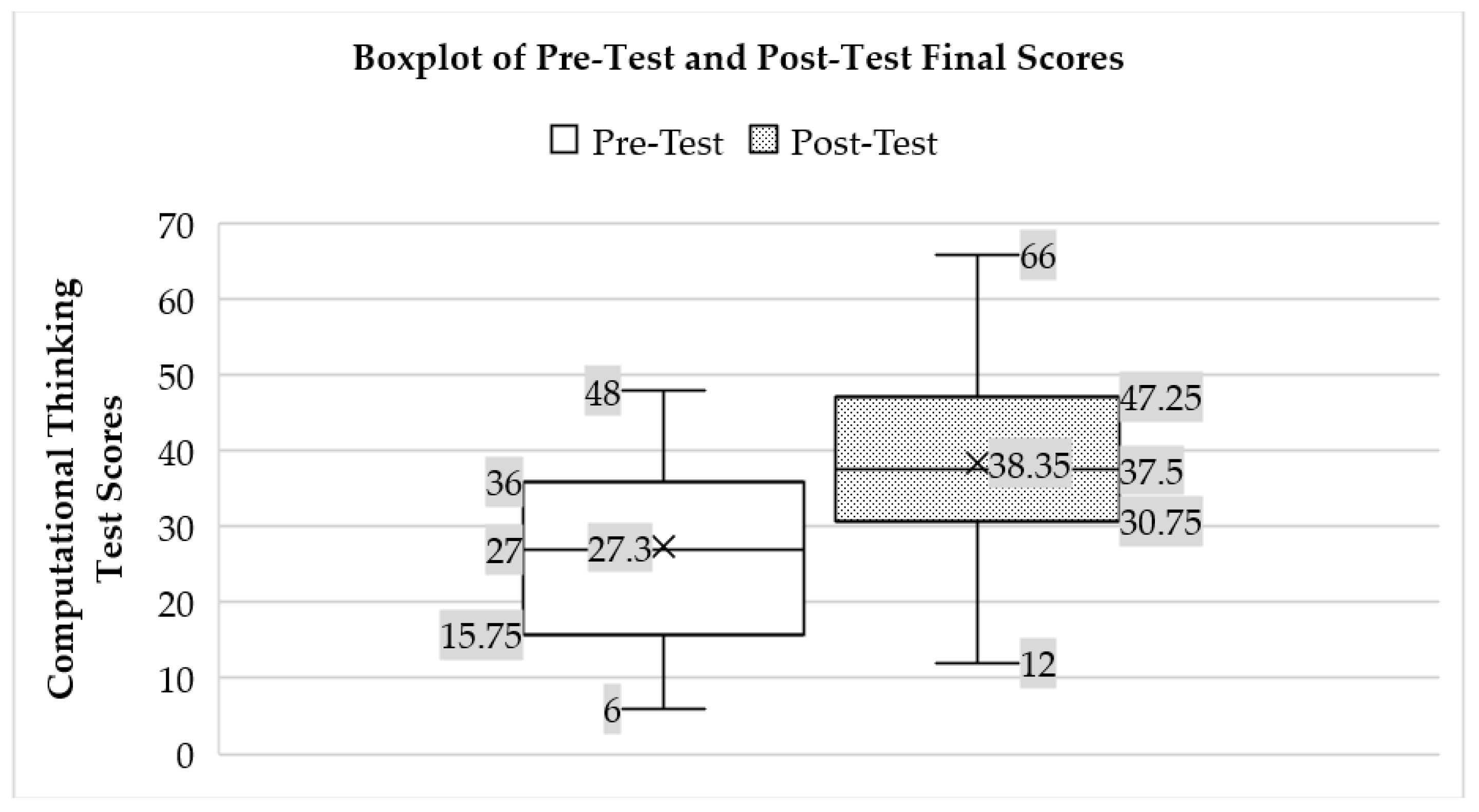

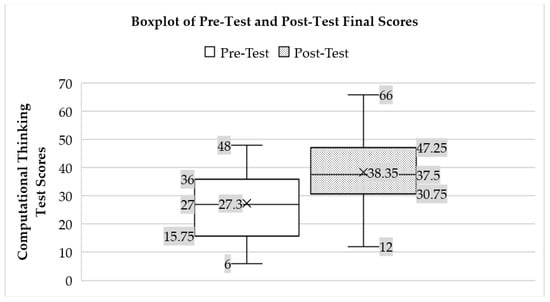

First, the overall results of the participants on the Bebras tasks are presented using several descriptive statistics (Table 4). In the pre-test, the mean score, calculated according to the scoring rules described above, was M = 27.3; the median (the second quartile) was Md = 27; and the mode, the most frequently observed total score, was Mo = 36. Regarding dispersion, the pre-test showed a standard deviation of SD = 11.7 and a variance of Var = 137, indicating considerable spread around the mean. The pre-test scores did not depart significantly from normality according to the Shapiro–Wilk test (W = 0.93, p = 0.36), with skewness sk = −0.09 and kurtosis ku = −1.10. Despite some flattening (a platykurtic shape), the skewness value indicates a nearly symmetrical distribution [48]. Since the total scores of most students fell within the first and second quartiles (Figure 5), the average results were considered not very satisfactory. After the intervention, students achieved a higher mean in the post-test (M = 38.4), with a median of Md = 37.5 and a mode of Mo = 33. On average, participants gained 11.1 points in the post-test, and the rise in the median from 27 to 37.5 confirms the trend observed in the mean, suggesting that most students improved their performance. A marked shift in the mode value (Mo = 51) was observed in line with these improvements, indicating that the data became concentrated at higher values. Nevertheless, dispersion around the mean increased slightly, as shown by the standard deviation (SD = 13) and variance (Var = 170). The post-test scores were also consistent with a normal distribution, as indicated by the Shapiro–Wilk test (W = 0.99, p = 1.00) and by the skewness (sk = 0.0296) and kurtosis (ku = 0.204) values [55]. 
Thus, the assumptions for applying the parametric paired Student’s t-test to assess the statistical significance of the differences between the students’ mean scores on the two tests were met.
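Measures of the kind reported in Table 4 can be reproduced with a short standard-library function. As a quick consistency check on the reported values, the variance is the square of the standard deviation: 11.7² ≈ 137, and 13² = 169, which matches the reported 170 once rounding of the SD is allowed for. The sketch assumes the bias-adjusted sample skewness (G1) and excess kurtosis (G2) formulas used by many statistics packages; we have not verified that these are exactly Jamovi’s formulas, and the test data are hypothetical.

```python
import math
import statistics


def describe(scores: list) -> dict:
    """Mean, median, mode(s), SD, variance, skewness, and excess kurtosis of total scores."""
    n = len(scores)
    if n < 4:
        raise ValueError("need at least 4 scores for sample kurtosis")
    mean = statistics.fmean(scores)
    # Central moments with denominator n, used by the skewness/kurtosis formulas.
    m2 = sum((x - mean) ** 2 for x in scores) / n
    m3 = sum((x - mean) ** 3 for x in scores) / n
    m4 = sum((x - mean) ** 4 for x in scores) / n
    if m2 == 0:
        raise ValueError("all scores are identical")
    g1 = m3 / m2 ** 1.5
    g2 = m4 / m2 ** 2 - 3
    # Bias-adjusted sample skewness (G1) and excess kurtosis (G2).
    skewness = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    kurtosis = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    return {
        "n": n,
        "mean": mean,
        "median": statistics.median(scores),
        "modes": statistics.multimode(scores),  # every value tied for most frequent
        "sd": statistics.stdev(scores),         # sample SD (n - 1 denominator)
        "variance": statistics.variance(scores),
        "skewness": skewness,
        "kurtosis": kurtosis,
    }


print(describe([1, 2, 3, 4, 5])["kurtosis"])  # approximately -1.2 (a flat, platykurtic shape)
```

With small samples several scores can tie for the most frequent value, so `statistics.multimode` returns every tied value rather than a single mode.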

Table 4.

Descriptive statistics for the mean score of participants in the test (N = 20).

Figure 5.

Box plot illustrating the distribution of results from the pre- and post-test. Own creation.

3.1. Student Learning as a Result of Sessions with MBGs (RQ1)

Next, a paired Student’s t-test was conducted to analyse whether the change in students’ CT scores after the sessions with MBGs was statistically significant. The normality assumption was supported by the Shapiro–Wilk test (W = 0.916; p = 0.084). Post-test scores (M = 38.35) were significantly higher than pre-test scores (M = 27.3) (t(19) = 3.25, p = 0.002). The effect size, estimated with Cohen’s d, was d = 0.73, which by Cohen’s [64] benchmarks denotes a moderate effect. No significant effects of age or sex on the students’ outcomes were found.
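The reported effect size can be checked against the t statistic. Assuming Cohen’s d was computed as d_z, the mean of the post-minus-pre differences (\bar{d}) divided by their standard deviation (s_d), which is a common choice for paired designs but is not stated in the text, the two quantities are linked through the number of pairs n = 20:

```latex
t = \frac{\bar{d}}{s_d / \sqrt{n}}, \qquad
d_z = \frac{\bar{d}}{s_d} = \frac{t}{\sqrt{n}}, \qquad
d_z = \frac{3.25}{\sqrt{20}} \approx \frac{3.25}{4.472} \approx 0.73
```

This reproduces the reported d = 0.73. Since the mean difference is 38.35 − 27.3 = 11.05 points, the same relation implies a standard deviation of the paired differences of roughly 11.05 / 0.727 ≈ 15 points; the figure is approximate because t is reported rounded.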

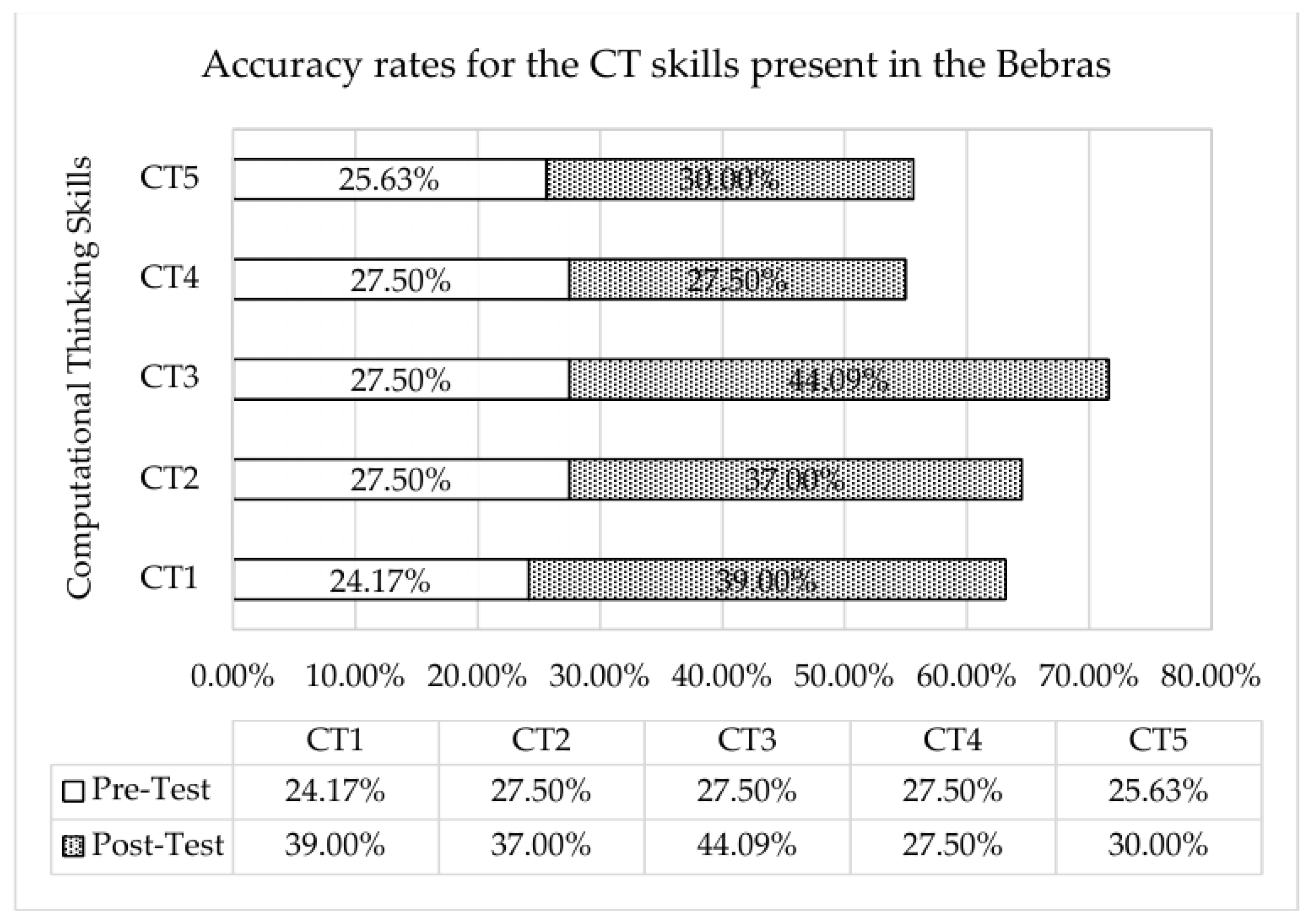

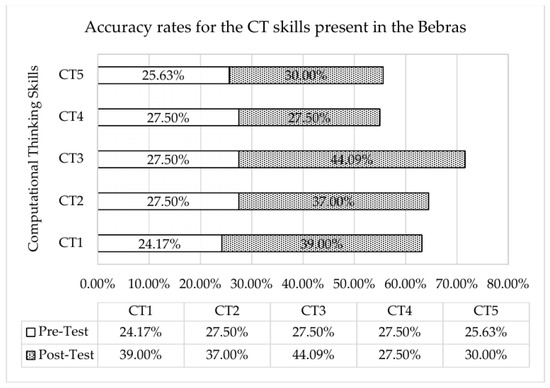

The accuracy rates for the CT skills assessed in the Bebras tests (Figure 6) indicate that, after the intervention, students progressed in four of the five CT skills: abstraction (CT1, from 24% to 39% correct responses), algorithmic thinking (CT2, from 28% to 37%), decomposition (CT3, from 28% to 44%), and generalisation (CT5, from 26% to 30%). Interestingly, there was no progress in evaluation (CT4), which remained at 28%; this may indicate the need for other MBGs with mechanics that foster this dimension.
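The gains listed above are differences in percentage points. A short sketch that tabulates them from the reported accuracy rates, alongside the relative change (gain divided by the pre-test rate), makes the ranking explicit; the dictionary layout and the function name `gains` are our own.

```python
# Pre- and post-test accuracy rates (% correct) as reported for each CT skill
accuracy = {
    "CT1 abstraction": (24, 39),
    "CT2 algorithmic thinking": (28, 37),
    "CT3 decomposition": (28, 44),
    "CT4 evaluation": (28, 28),
    "CT5 generalisation": (26, 30),
}

def gains(rates):
    """(skill, percentage-point gain, relative gain in %) sorted by largest gain."""
    rows = [(skill, post - pre, 100 * (post - pre) / pre)
            for skill, (pre, post) in rates.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)

for skill, pp, rel in gains(accuracy):
    print(f"{skill}: {pp:+d} pp ({rel:.1f}% relative)")
```

Decomposition shows the largest absolute gain (16 points), closely followed by abstraction (15 points), which has the largest relative gain (62.5%).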

Figure 6.

Accuracy rates for the CT skills present in the Bebras tests.

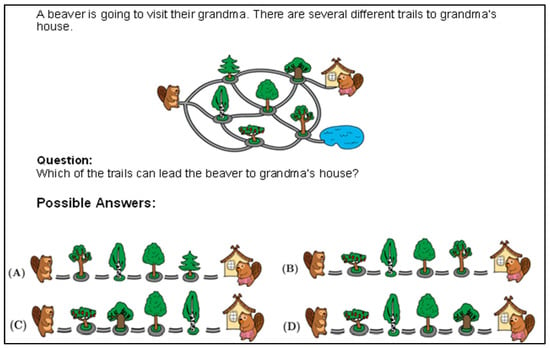

3.2. Content Analysis as a Result of Coding Field Notes (RQ2)

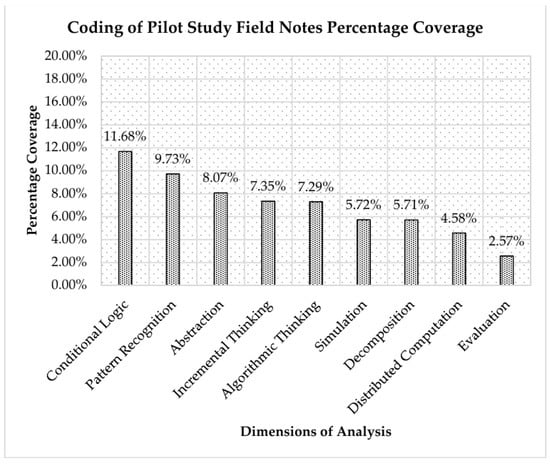

In addition, field notes were taken on the students’ verbal interactions. Our aim was to identify how often aspects linked to the CT categories were evident in the students’ discourse. In total, 143 references were identified. It is important to note that conversation between the students was not always continuous, and the analysable discourse amounted to less than a quarter of the total time of the game sessions. In many cases, the students did not express their thoughts directly, merely confirming their actions with simple expressions such as ‘OK’, ‘Yes’, or ‘Got it’ [12]. Another important aspect is that the coding categories in Table 3 are interdependent [65]: although a category may have been more evident in specific game actions, categories rarely appeared in isolation. Note also that the first 30 min of the first and sixth sessions were dedicated to explaining the rules of the games.

In the discourse analysed, conditional logic, which belongs to the category of algorithmic thinking (CT2), stood out as the most prominent dimension of CT, followed by pattern recognition, which belongs to generalisation (CT5), and abstraction (CT1). These results align with the evolution shown in Figure 6, which presents the accuracy rates on questions involving these specific dimensions. Moreover, Figure 7, which shows the percentage coverage of the coded field notes from the pilot study, further highlights the prevalence of certain CT dimensions in the students’ discourse. Surprisingly, however, the highest post-test accuracy rate was found in questions that called for decomposition (CT3), even though few verbal interactions reflected this process. In our view, this is because students tend to decompose problems mentally: when they have no doubts about how to solve a problem, they simply act without explaining their reasoning, which makes the process harder to record. Berland and Lee [12] may not have faced this constraint because the game they used, Pandemic, is collaborative, so the game system itself ‘forced’ students to expose their thoughts before acting. In the strategy games we used, the system and game dynamics encourage interaction but do not make it a condition for progressing in the game.

Figure 7.

Percentage coverage of each code relative to the total coded data. Source: NVivo.

Finally, it should be noted that evaluation (CT4) had the fewest records. This skill is related to debugging. Berland and Lee [12] obtained excellent results in this respect, recording debugging episodes whenever students checked the rules of the game in the rule book. However, their participants were between 17 and 19 years old. In our case, the primary school students were always very proactive but also rash in many of their moves, eager to show off their knowledge of the game. As Aguiar [66] points out, children need to learn to control their personal will so that the game runs smoothly, and in some cases they only realise that they misjudged a move after making it. The names used in the following examples are pseudonyms.

3.2.1. Example of Conditional Logic (Related to CT2)

Conditional logic, which is directly related to the construction of algorithms, requires students to think about or anticipate moves at specific points in the game. Consider the following example, observed during Sierra.VG’s turn in King of the Dice: The Board Game:

Sierra.VG: ‘(…) I’m going to take two fours and two fives to try to make this sequence and take the merchant…no… I’d rather go and get the dragon…it’s almost impossible on the last turn to take a four and a five and then I can’t go and get anything (the merchant card required three fours and three fives).’

Sierra.VG’s statements may reflect the use of ‘if–then–else’ conditions when he says, ‘it’s almost impossible on the last turn to take a four and a five and then I can’t go and get anything’, as he refers to the condition that determines the action chosen during his turn. Note that the student considers the possibility of not obtaining the desired dice combination on the last roll and plans what he will do in that situation. Anticipating scenarios and developing appropriate algorithms also depend on conditional thinking, since several possible scenarios must be considered.
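Sierra.VG’s reasoning can be written as an explicit if–then–else rule. The sketch below is a deliberately simplified, hypothetical model rather than the rules of King of the Dice: The Board Game. It assumes fair six-sided dice and that he would still need at least one four and one five from his final roll; the function names, the number of dice, and the 1/4 risk threshold are illustrative. The condition uses inclusion–exclusion: P(at least one 4 and one 5 with n dice) = 1 − 2(5/6)^n + (4/6)^n, which for two dice gives 2/36 = 1/18.

```python
from fractions import Fraction

def p_four_and_five(n_dice):
    """P(at least one 4 and at least one 5) when rolling n fair six-sided dice.

    Inclusion-exclusion: 1 - P(no 4) - P(no 5) + P(neither 4 nor 5).
    """
    return 1 - 2 * Fraction(5, 6) ** n_dice + Fraction(4, 6) ** n_dice

def choose_target(n_dice_left, threshold=Fraction(1, 4)):
    """Hypothetical rule mirroring Sierra.VG's if-then-else reasoning."""
    if p_four_and_five(n_dice_left) >= threshold:
        return "merchant"   # the missing four and five are reasonably likely
    else:
        return "dragon"     # too risky: fall back to the safer card

print(p_four_and_five(2))  # 1/18
print(choose_target(2))    # dragon
```

With two dice the chance is about 5.6%, so the rule falls back to the dragon, matching his ‘almost impossible’ judgement.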

3.2.2. Example of Abstraction (Related to CT1)

Abstraction, dubbed ‘global logic’ by Berland and Lee [12], highlights the reasoning carried out by students at a higher-order level (Wing 2011). Abstraction requires students to predict not only their own decision-making process but also that of their opponents. Consider the following example from November.LA’s turn during a game of King of the Dice: The Board Game:

November.LA: ‘My intention is to get this thief card, making the condition red > blue > green and being able to get two castles with it. Since I got it, I can take two (terrain tiles) and put them into play. I think I’ll choose this one. It’s the flower field, so I’m going to put it here (it has a diamond). I score more points here.’

In this excerpt, the student shows abstraction when working out which tile yields the best outcome and choosing the flower field. He separates ‘content’ from ‘form’, understanding that his reward goes beyond obtaining another tile. He uses abstraction to extract information from reality (the game board) and to judge his chances of a higher score from placing that tile, either because it is adjacent to another of his tiles or because the flower field carries a bonus, in this case a diamond. With either tile, the player would obtain two adjacent territories on the board, earning three points per tile; if he chose the flower field, however, he would also earn three points for its golden diamond.
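Abstraction here amounts to reducing the board to the few features that matter for the score. Using only the scoring described in this paragraph (three points per adjacent territory tile, plus three for a golden diamond), the comparison can be sketched as follows; the constants, the function names, and the assumption that these are the only relevant scoring features are simplifications of ours, not the full rules of King of the Dice: The Board Game.

```python
# Simplified scoring assumed from the excerpt (not the complete game rules)
POINTS_PER_TILE = 3   # each adjacent territory tile obtained
DIAMOND_BONUS = 3     # extra points for a golden diamond on the tile

def tile_score(adjacent_tiles, has_diamond):
    """Points earned by placing a tile, given only the features that matter."""
    return adjacent_tiles * POINTS_PER_TILE + (DIAMOND_BONUS if has_diamond else 0)

def best_tile(options):
    """Name of the highest-scoring option (the first one listed wins ties)."""
    return max(options, key=lambda name: tile_score(*options[name]))

# Each option: (adjacent territory tiles obtained, has a golden diamond)
options = {
    "other tile": (2, False),
    "flower field": (2, True),
}
choice = best_tile(options)
print(choice, tile_score(*options[choice]))  # flower field 9
```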

3.2.3. Example of Decomposition (Related to CT3)

In decomposition, students ‘break down’ a problem into smaller parts and concentrate on solving these smaller problems in isolation. The context in which the larger problem arises dictates which type of decomposition the student carries out. The following extract shows a case of dependency decomposition by Romeo.SC during a turn of the Rossio MBG:

Romeo.SC: ‘I place this active card now (card with 4 yellow tiles worth 4 points), paying 3 coins. By my reckoning, I’ll score twelve points… because when I look at the tile area, I’ll be able to place enough tiles to make the card pattern at least once more (they were already visible twice). So that’s three times…3 × 4, that’s 12 points’.

Here, Romeo.SC divides the larger problem into interdependent parts based on the specific information available in the game, namely the current layout of the tiles. His analysis and calculations depend directly on this information to determine how often he can replicate the pattern on his cobbler’s card and thus estimate a possible score.
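Romeo.SC’s estimate can be sketched as a chain of dependent sub-problems, each using the result of the previous one. In this hypothetical sketch, the function name `plan_activation` and the coin balance are our own; the cost (3 coins), the two visible patterns, the one additional pattern, and the card value (4 points) come from the excerpt.

```python
def plan_activation(coins, cost, visible_patterns, extra_patterns, points_per_pattern):
    """Estimate a card's score by solving dependent sub-problems in order.

    Returns None when the card cannot be paid for.
    """
    # Sub-problem 1: can the card be paid for at all?
    if coins < cost:
        return None
    # Sub-problem 2: how many copies of the card pattern will be on the board?
    occurrences = visible_patterns + extra_patterns
    # Sub-problem 3: combine that count with the card's value.
    return occurrences * points_per_pattern

# Values from the excerpt; the balance of 5 coins is hypothetical
print(plan_activation(coins=5, cost=3, visible_patterns=2,
                      extra_patterns=1, points_per_pattern=4))  # 12
```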

3.2.4. Example of Generalisation (Related to CT5)

The literature suggests that generalisation is closely associated with pattern recognition, which requires students to find similarities between actions and moves in order to solve complex problems on the game board more efficiently. The following example from India.FM was observed during a turn of Rossio:

India.FM: ‘I’m not going to play this card; I’m going to throw it away…because I have less chance of getting it…I’d rather activate it downwards and get a coin. Now here (in the tile zone), I’m going to place this one here (placing her tile in the playing area) because I have a pattern like it in my yard… Now I’m going to play again (the tile placed was adjacent to another one like it), and I’m going to block someone because you can make this pattern here (a pattern of two yellows, one of the most common and most easily reached).’

The student looked for elements similar to the pattern on her cobbler’s card (three reds in a horizontal row). Although the pattern on her card was represented horizontally, it also scored when formed vertically on the board. Moreover, each tile could count towards only one pattern, which required India.FM not only to concentrate harder on pattern recognition but also to work extra hard on her abstraction skills. If India.FM places a red tile adjacent to another red tile, she earns the chance to play again. She knows that this action opens up space for consecutive moves, allowing her to generalise similar moves and efficiently maximise her score.
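India.FM’s task of spotting patterns that may run horizontally or vertically, with each tile used only once, can be sketched as a small search problem. This is a hypothetical model, not Rossio’s scoring rules: we assume a pattern is a straight run of three same-coloured tiles, represent the board as strings with `R` for red and `.` for any other tile, and count the largest set of runs that share no tile, using exhaustive search, which is adequate for small boards.

```python
from itertools import product

def find_lines(grid, colour, length=3):
    """All horizontal or vertical runs of `length` cells of `colour`, as cell sets."""
    rows, cols = len(grid), len(grid[0])
    lines = []
    for r, c in product(range(rows), range(cols)):
        if c + length <= cols and all(grid[r][c + k] == colour for k in range(length)):
            lines.append(frozenset((r, c + k) for k in range(length)))
        if r + length <= rows and all(grid[r + k][c] == colour for k in range(length)):
            lines.append(frozenset((r + k, c) for k in range(length)))
    return lines

def max_disjoint(lines, used=frozenset()):
    """Largest number of lines that share no cell (exhaustive search)."""
    if not lines:
        return 0
    first, rest = lines[0], lines[1:]
    best = max_disjoint(rest, used)          # option 1: skip this line
    if not (first & used):                   # option 2: use it if no tile is reused
        best = max(best, 1 + max_disjoint(rest, used | first))
    return best

board = [
    "RRR.",
    "R...",
    "R...",
    "RRRR",
]
print(max_disjoint(find_lines(board, "R")))  # 3
```

A greedy count that keeps the first run it finds can miss a better combination when horizontal and vertical runs cross, which is why the sketch searches exhaustively.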

3.2.5. Example of Evaluation (Related to CT4)

Although evaluation was the dimension with the least favourable results, we also analysed some excerpts to understand the importance of debugging during the game flow. Here, evaluating or verifying solutions refers to solving problems on the game board through trial and error [30].

(After placing tiles in the Rossio square area, Lima.IB chooses a cobbler card to complete a hand of three cards.)

Lima.IB: ‘I think I’ll go for this one with 7 coins (The card had 3 yellow tiles worth 4 points).’

Quebec.SH: ‘You can’t go for the 7-coin one. You only have 6. I mean, you can, but you’ll be wasting a turn at this stage because if you want to activate it next round, you won’t be able to.’

Lima.IB: ‘Then I don’t know…’

Quebec.SH: ‘Get the one that costs 5 coins… you already have 6… so you can activate it on your next turn…’

Lima.IB: ‘But the pattern is the same, and I’m winning by one point less.’

Quebec.SH: ‘Yes… but you’re also spending two coins less and making the move now’.

At this point in the game, the students already knew the rules of Rossio well, but Quebec.SH realised that Lima.IB was reasoning incorrectly by wanting to obtain a cobbler’s card without having the coins to do so. This prompted Quebec.SH to comment, ‘You can’t go for the 7-coin one’. Faced with his colleague’s comment, Lima.IB looked at the board, and Quebec.SH added, ‘You only have 6. I mean, you can, but you’ll be wasting a turn at this stage because if you want to activate it next round, you won’t be able to’. Quebec.SH identified the problem and acted as a debugger, alerting his colleague to a better course of action, namely acquiring the card costing five coins. Under the rules of the game, this was the only way for Lima.IB’s move to succeed this turn: despite being worth one point less, the five-coin card allowed him to spend two coins less and start scoring sooner instead of wasting a turn.
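Quebec.SH’s intervention works like a validation step that rejects an infeasible plan before it is executed. The sketch below assumes, based on the dialogue, that a cobbler card’s coin cost must be available to activate it on the next turn; the 7-coin card is worth 4 points per the excerpt and the 5-coin card one point less, while the class and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Card:
    name: str
    cost: int     # coins needed to activate the card
    points: int   # points per completed pattern

def check_choice(coins, card):
    """Debugging step: describe the problem if the card cannot be activated."""
    if card.cost > coins:
        return f"{card.name}: needs {card.cost} coins but only {coins} available"
    return None

def best_activatable(coins, cards):
    """Highest-value card that passes the check, or None if none does."""
    options = [c for c in cards if check_choice(coins, c) is None]
    return max(options, key=lambda c: c.points, default=None)

cards = [Card("7-coin card", 7, 4), Card("5-coin card", 5, 3)]
print(check_choice(6, cards[0]))        # 7-coin card: needs 7 coins but only 6 available
print(best_activatable(6, cards).name)  # 5-coin card
```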

4. Discussion and Implications

4.1. Discussion

First, it is important to note that the MBGs used in the gaming sessions were carefully selected, using the CTLM-TM framework [38], for their potential to foster CT skills.

To investigate the effect of MBGs on the development of students’ CT skills, the gaming sessions with King of the Dice: The Board Game and Rossio were analysed after their implementation, and the results of the Bebras tests administered as pre-test and post-test were examined. Qualitative data from field notes were also collected, analysed, and integrated with the quantitative results, providing a triangulation that strengthened this study’s conclusions.

The findings revealed a statistically significant improvement in CT after the use of these two modern board games, with a medium effect size (d = 0.73). This improvement through analogue resources is consistent with previous studies that employed similar methodologies [12,13]. Triangulating the qualitative and quantitative findings suggested that the strategies students developed during gameplay, as observed in the field notes, were consistent with the improvements seen in the Bebras tests.

Considering the specific CT skills delineated by Dagiene, Sentance, and Stupuriene [45], discernible improvements were observed in abstraction (CT1), algorithmic thinking (CT2), decomposition (CT3), and generalisation (CT5). These results underscore the potential of MBGs as a pedagogical tool for CT skill development. Nonetheless, further interventions are warranted to strengthen these dimensions and to address evaluation (CT4), in which no improvement was observed.

The notable improvement in abstraction is particularly compelling in the case of Rossio, whose ‘Drafting’ (Hand Management) mechanic requires players to have high levels of abstraction. Machuqueiro and Piedade [38] observe that ‘the characteristics of each pattern card, as well as the need to fulfil certain conditions for selecting the pavement workers, require players to have high levels of abstraction’ (p. 23). Similarly, the gains in algorithmic thinking could be attributed to the ‘Fixed Turn Order’ mechanic that is prevalent in this MBG. In this context, players continually adapt their strategies to turn-specific variables, such as available resources or market dynamics, devising new paths to success with each successive turn.

On the other hand, the ‘Dice Selection’ mechanic in King of the Dice: The Board Game may have contributed to the improvement in decomposition, as ‘the dice-rolling mechanism seems to support the development of skills similar to those observed in computational data analysis processes, as the information present in the game’s physical dice is analysed and evaluated based on certain conditions’ [38] (p. 24); players who decompose the problem may find efficient plays more readily.

Though somewhat modest, the improvement in generalisation might have been shaped by the ‘Tile Laying’ mechanic found in both MBGs. This mechanic, which is particularly conducive to pattern recognition, comes into play when players mentally construct sequences of moves. This may involve either deducing patterns already present on the board in Rossio or meeting specific colour or numerical criteria associated with the dice in King of the Dice: The Board Game.

Before moving on to the study’s limitations and implications, it is worth noting that, as several studies suggest, CT development in schools is mainly pursued through robotics and programming [67,68]. However, ‘unplugged’ activities have long been recognised as a valid option for developing CT, especially at early ages [65,69]. Recently, for example, the ABC Thinking program proposed a set of gamified activities for CT development [70]. Nevertheless, using MBGs in the classroom to develop CT is still relatively uncommon. The implementation of King of the Dice: The Board Game and Rossio in this study, together with the subsequent analysis of the results, supports considering the regular use of this type of resource as a strategy for CT development. Integrating qualitative data, such as field notes, with the quantitative test results strengthens the validity of these conclusions, as both approaches point to a positive impact of MBGs on CT development. Furthermore, as suggested by Berland and Duncan [13], using other contemporary strategy games with different game mechanics also seems essential to enhance this development.

4.2. Limitations and Implications

This study has some important limitations. The absence of a control group prevents the observed improvements from being attributed exclusively to the use of MBGs, as other variables, such as elements of the mathematics curriculum aimed at learning CT, may have influenced the results. Without a control group, it is also difficult to determine whether the changes in scores stem from the intervention or can be explained by the students’ natural development over time, familiarity with test-taking (for example, getting used to the test format), or learning in other academic subjects. These factors may have contributed independently or in combination to the observed results, making it difficult to isolate the specific effect of the MBGs. Bebras scores were low, suggesting that students found the tasks difficult, possibly owing to the difficulty of the stimuli and the complexity of the questions [71,72]. A larger sample would have allowed a more robust generalisation of the results. The time allocated to each game session and to the intervention as a whole seemed short, indicating the need for more time to prepare and explain the rules. Students’ CT progress seemed to vary across the different concepts, suggesting the need for more targeted interventions. A greater variety of MBGs and mechanics could further enhance CT development. In addition, the students’ initial exposure to MBGs raises questions about their behavioural attitudes towards this experience, highlighting the importance of considering not only cognitive aspects but also the communicative and collaborative skills stimulated by MBGs [22].

5. Conclusions

The use of MBGs in the classroom with students in the first cycle of primary education remains relatively limited. However, given that ‘unplugged’ activities are recognised as a promising strategy for promoting CT skills in young learners, including those with special educational needs [73], MBGs could become a valuable pedagogical resource in this domain.

Following the implementation of MBGs in the classroom and the analysis of the Bebras tests administered as pre-test and post-test, we conclude that this type of resource appears to make a significant contribution to the development of CT in students in the first cycle of primary education. However, despite the improvements observed in four of the five skills assessed in the Bebras tests adapted from Dagiene [45,74], the scores remained low compared with the maximum possible score in each test, suggesting that longer interventions may lead to clearer improvements. Indeed, an educational approach encompassing various dimensions of CT, combining several distinct MBGs with teaching strategies and appropriate assessment, would be worth exploring. As this was an exploratory study, more research is needed to establish clear links between game mechanics and CT development. Nevertheless, we consider this a first step towards establishing a new approach to developing this cognitive skill.

Author Contributions

Conceptualization, F.M. and J.P.; methodology, F.M. and J.P.; software, J.P.; validation, F.M. and J.P.; formal analysis, F.M.; investigation, F.M.; resources, F.M.; data curation, F.M.; writing—original draft preparation, F.M.; writing—review and editing, J.P.; visualization, F.M.; supervision, J.P.; project administration, F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by National Funds through FCT-Portuguese Foundation for Science and Technology, I.P., under the scope of UIDEF-Unidade de Investigação e Desenvolvimento em Educação e Formação, UIDB/04107/2020, https://doi.org/10.54499/UIDB/04107/2020.

Institutional Review Board Statement

The research received a favourable decision from the Ethics Committee of the Instituto de Educação da Universidade de Lisboa.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available only on request from the corresponding author due to ethical reasons.

Acknowledgments

We would like to express our gratitude to the publishers for providing us with the analogue games.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wing, J. Computational Thinking. Commun. ACM 2006, 49, 33–35.

- Yadav, A.; Stephenson, C.; Hong, H. Computational Thinking for Teacher Education. Commun. ACM 2017, 60, 55–62.

- Angeli, C.; Voogt, J.; Fluck, A.; Webb, M.; Cox, M.; Malyn-Smith, J.; Zagami, J. A K-6 Computational Thinking Curriculum Framework: Implications for Teacher Knowledge. Educ. Technol. Soc. 2016, 19, 47–57. Available online: https://www.jstor.org/stable/jeductechsoci.19.3.47 (accessed on 25 July 2024).

- Caeli, E.N.; Yadav, A. Unplugged Approaches to Computational Thinking: A Historical Perspective. TechTrends 2020, 64, 29–36.

- Aho, A.V. Computation and Computational Thinking. Comput. J. 2012, 55, 833–835.

- Martins, A.R.Q.; Miranda, G.L.; Eloy, A.d.S. Uma Revisão Sistemática de Literatura Sobre Autoavaliação de Pensamento Computacional de Jovens. Renote 2020, 18, 2.

- Bayeck, R.Y. Understanding Computational Thinking in the Gameplay of the African Songo Board Game. Br. J. Educ. Technol. 2024, 55, 259–276.

- Yasar, O. Viewpoint a New Perspective on Computational Thinking: Addressing Its Cognitive Essence, Universal Value, and Curricular Practices. Commun. ACM 2018, 61, 33–39.

- Bell, T.; Witten, I.; Fellows, M. Off-line activities and games for all ages. Comput. Sci. Unplug. 1998, 1, p. 1. Available online: https://classic.csunplugged.org/documents/books/english/unplugged-book-v1.pdf (accessed on 25 July 2024).

- Bell, T.; Vahrenhold, J. CS Unplugged—How Is It Used, and Does It Work? Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 11011, ISBN 9783319983554.

- Nishida, T.; Kanemune, S.; Idosaka, Y.; Namiki, M.; Bell, T.; Kuno, Y. A CS Unplugged Design Pattern. SIGCSE Bull. Inroads 2009, 41, 231–235.

- Berland, M.; Lee, V.R. Collaborative Strategic Board Games as a Site for Distributed Computational Thinking. Int. J. Game-Based Learn. 2011, 1, 65–81.

- Berland, M.; Duncan, S. Computational Thinking in the Wild: Uncovering Complex Collaborative Thinking Through Gameplay. Educ. Technol. 2016, 56, 29–35. Available online: https://www.jstor.org/stable/44430490 (accessed on 2 June 2024).

- Scirea, M.; Valente, A. Boardgames and Computational Thinking: How to Identify Games with Potential to Support CT in the Classroom. In Proceedings of the 15th International Conference on the Foundations of Digital Games (FDG ’20), Bugibba, Malta, 15–18 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–8.

- Morais, A.; Sousa, H.; Joaquim, A.; Aires, A.; Catarino, P. Desenvolver o Pensamento Computacional Através Do Jogo de Mesa Tichu. 2023. Available online: https://www.researchgate.net/publication/377500165 (accessed on 25 June 2024).

- Chiazzese, G.; Fulantelli, G.; Pipitone, V.; Taibi, D. Engaging Primary School Children in Computational Thinking: Designing and Developing Videogames. Educ. Knowl. Soc. 2018, 19, 63–81.

- Zhao, W.; Shute, V.J. Can Playing a Video Game Foster Computational Thinking Skills? Comput. Educ. 2019, 141, 103633.

- Clarke, D.; Roche, A. The Power of a Single Game to Address a Range of Important Ideas in Fraction Learning. Aust. Prim. Math. Classr. 2010, 15, 18–24. Available online: https://files.eric.ed.gov/fulltext/EJ898705.pdf (accessed on 2 October 2024).

- Russo, J.A.; Roche, A.; Russo, T.; Kalogeropoulos, P. Examining Primary School Educators’ Preferences for Using Digital versus Non-Digital Games to Support Mathematics Instruction. Int. J. Math. Educ. Sci. Technol. 2024, 1–26.

- Sousa, C.; Rye, S.; Sousa, M.; Torres, P.J.; Perim, C.; Mansuklal, S.A.; Ennami, F. Playing at the School Table: Systematic Literature Review of Board, Tabletop, and Other Analog Game-Based Learning Approaches. Front. Psychol. 2023, 14, 1160591.

- Rosa, M.; Gordo, S.; Sousa, M.; Pocinho, R. Critical Thinking, Empathy and Problem Solving Using a Modern Board Game. In Proceedings of the Ninth International Conference on Technological Ecosystems for Enhancing Multiculturality, Barcelona, Spain, 27–29 October 2021; pp. 624–628.

- Martinho, C.; Sousa, M. CSSII: A Player Motivation Model for Tabletop Games. In Proceedings of the Foundations of Digital Games 2023 (FDG 2023), Lisbon, Portugal, 12–14 April 2023; Volume 1.

- Castronova, E.; Knowles, I. Modding Board Games into Serious Games: The Case of Climate Policy. Int. J. Serious Games 2015, 2, 63–75.

- Olympio, P.C.d.A.P.; Alvim, N.A.T. Board Games: Gerotechnology in Nursing Care Practice. Rev. Bras. Enferm. 2018, 71, 818–826.

- Moya-Higueras, J.; Solé-Puiggené, M.; Vita-Barrull, N.; Estrada-Plana, V.; Guzmán, N.; Arias, S.; Garcia, X.; Ayesa-Arriola, R.; March-Llanes, J. Just Play Cognitive Modern Board and Card Games, It’s Going to Be Good for Your Executive Functions: A Randomized Controlled Trial with Children at Risk of Social Exclusion. Children 2023, 10, 1492.

- Sousa, M.; Dias, J. From Learning Mechanics to Tabletop Mechanisms: Modding Steam Board Game to Be a Serious Game. In Proceedings of the 21st International Conference on Intelligent Games and Simulation, GAME-ON 2020, Aveiro, Portugal, 23–25 September 2020; pp. 41–48.

- Machuqueiro, F.; Piedade, J. Development of Computational Thinking Using Board Games: A Systematic Literature Review Based On Empirical Studies. Rev. Prism. Soc. 2022, 38, 5–36. Available online: https://revistaprismasocial.es/article/view/4766 (accessed on 25 September 2023).

- Rao, T.S.S.; Bhagat, K.K. Computational Thinking for the Digital Age: A Systematic Review of Tools, Pedagogical Strategies, and Assessment Practices; Springer: New York, NY, USA, 2024; Volume 72. Available online: https://link.springer.com/article/10.1007/s11423-024-10364-y (accessed on 25 July 2024).

- Su, J.; Yang, W. A Systematic Review of Integrating Computational Thinking in Early Childhood Education. Comput. Educ. Open 2023, 4, 100122.

- Bocconi, S.; Chioccariello, A.; Kampylis, P.; Wastiau, P.; Engelhardt, K.; Earp, J.; Horvath, M.; Malagoli, C.; Cachia, R.; Giannoutsou, N.; et al. Reviewing Computational Thinking; Publications Office of the European Union: Luxembourg, 2022; ISBN 9789276472087.

- Papert, S. The Children’s Machine; BasicBooks: New York, NY, USA, 1993.

- Sousa, M.; Zagalo, N.; Oliveira, P. Mechanics or Mechanisms: Defining Differences in Analog Games to Support Game Design. In Proceedings of the 2021 IEEE Conference on Games (CoG) 2021, Copenhagen, Denmark, 17–20 August 2021.

- Booth, P. Game Play: Paratextuality in Contemporary Board Games; Bloomsbury Publishing (USA): New York, NY, USA, 2015; ISBN 9781628927443.

- Sousa, M.; Bernardo, E. Back in the Game Modern Board Games. Commun. Comput. Inf. Sci. 2019, 1164, 72–85.

- Salen, K.; Zimmerman, E. Rules of Play: Game Design Fundamentals; The MIT Press: London, UK, 2004; ISBN 0-262-24045-9.

- Engelstein, G.; Shalev, I. Building Blocks of Tabletop Game Design; CRC Press: Boca Raton, FL, USA; Taylor & Francis Group: Abingdon, UK, 2022; ISBN 9781138365490.

- Sousa, M. Mastering Modern Board Game Design to Build New Learning Experiences: The MBGTOTEACH Framework. Int. J. Games Soc. Impact 2023, 1, 68–93.

- Machuqueiro, F.; Piedade, J. Exploring the Potential of Modern Board Games to Support Computational Thinking. In Proceedings of the XXV Simpósio Internacional de Informática Educativa, Setúbal, Portugal, 16–18 November 2023.

- Somma, R. Coding in the Classroom: Why You Should Care About Teaching Computer Science; No Starch Press, Inc.: San Francisco, CA, USA, 2020; ISBN 9781718500358.

- European Commission Eurydice. Available online: https://eurydice.eacea.ec.europa.eu/national-education-systems (accessed on 26 March 2024).

- Bocconi, S.; Chioccariello, A.; Dettori, G.; Ferrari, A.; Engelhardt, K.; Kampylis, P.; Punie, Y. Developing Computational Thinking: Approaches and Orientations in K-12 Education. In Proceedings of the EdMedia+ Innovate Learning, Association for the Advancement of Computing in Education (AACE), Vancouver, BC, Canada, 28–30 June 2016; pp. 1–7. Available online: https://publications.jrc.ec.europa.eu/repository/handle/JRC102384 (accessed on 26 March 2024).

- Matos, J.F.; Pedro, A.; Piedade, J. Integrating Digital Technology in the School Curriculum. Int. J. Emerg. Technol. Learn. 2019, 14, 4–15.

- Coutinho, C.P. Metodologia de Investigação Em Ciências Sociais e Humanas; Edições Almedina SA: Coimbra, Portugal, 2015.

- Creswell, J. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 3rd ed.; Sage Publications: Thousand Oaks, CA, USA, 2009; ISBN 9781412965569.

- Dagiene, V.; Sentance, S.; Stupuriene, G. Developing a Two-Dimensional Categorization System for Educational Tasks in Informatics. Informatica 2017, 28, 23–44.

- Cartelli, A.; Dagiene, V.; Futschek, G. Bebras Contest and Digital Competence Assessment. Int. J. Digit. Lit. Digit. Competence 2010, 1, 24–39.

- Dagiene, V.; Stupuriene, G. Bebras—A Sustainable Community Building Model for the Concept Based Learning of Informatics and Computational Thinking. Inform. Educ. 2016, 15, 25–44.

- Musaeus, L.H.; Musaeus, P. Computational Thinking and Modeling: A Quasi-Experimental Study of Learning Transfer. Educ. Sci. 2024, 14, 980.

- Izu, C.; Mirolo, C.; Settle, A.; Mannila, L.; Stupuriene, G. Exploring Bebras Tasks Content and Performance: A Multinational Study. Inform. Educ. 2017, 16, 39–59.

- Bardin, L. Análise de Conteúdo; Edições 70: São Paulo, Brazil, 2016.

- Machuqueiro, F.; Piedade, J. Modern Board Games and Computational Thinking: Results of a Systematic Analysis Process. EMI Educ. Media Int. 2024, 61, 161–183.

- Mónico, L.; Alferes, V.; Castro, P.; Parreira, P. A Observação Participante Enquanto Técnica de Investigação Qualitativa. Pensar Enferm. 2017, 13, 30–36.

- NDTAC. A Brief Guide to Selecting and Using Pre-Post Assessments; American Institutes for Research: Arlington, VA, USA, 2006.

- Australian Maths Trust Bebras Solutions Guides. Available online: https://www.amt.edu.au/bebras-solutions-guides (accessed on 15 July 2024).

- Marôco, J. Análise Estatística Com o SPSS Statistics, 8th ed.; ReportNumber: Lisboa, Portugal, 2021.

- The Jamovi Project. Jamovi, Version 2.5; Software for Statistical Analysis; The Jamovi Project: Sydney, Australia, 2023. Available online: https://www.jamovi.org/ (accessed on 15 July 2024).

- Bogdan, R.; Biklen, S. Investigação Qualitativa em Educação: Uma Introdução à Teoria e Aos Métodos; Porto Editora: Porto, Portugal, 1994; ISBN 0205132669.

- Shin, N.; Bowers, J.; Roderick, S.; McIntyre, C.; Stephens, A.L.; Eidin, E.; Krajcik, J.; Damelin, D. A Framework for Supporting Systems Thinking and Computational Thinking through Constructing Models. Instr. Sci. 2022, 50, 933–960.

- Grover, S.; Pea, R. Computational Thinking: A Competency Whose Time Has Come. Comput. Sci. Educ. 2017, 19, 1997–2004.

- Brennan, K.; Resnick, M. New Frameworks for Studying and Assessing the Development of Computational Thinking. In Proceedings of the Annual Meeting of the American Educational Research Association, Vancouver, BC, Canada, 13–17 April 2012; Available online: http://scratched.gse.harvard.edu/ct/files/AERA2012.pdf (accessed on 15 July 2024).

- Rich, P.J.; Egan, G.; Ellsworth, J. A Framework for Decomposition in Computational Thinking. In Proceedings of the 2019 ACM Conference on Innovation and Technology in Computer Science Education, ITiCSE 2019, Aberdeen, UK, 5–8 July 2019; pp. 416–421.

- Grover, S.; Pea, R. Computational Thinking in K-12: A Review of the State of the Field. Educ. Res. 2013, 42, 38–43.

- Lee, T.Y.; Mauriello, M.L.; Ahn, J.; Bederson, B.B. CTArcade: Computational Thinking with Games in School Age Children. Int. J. Child. Comput. Interact. 2014, 2, 26–33.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates, Publishers: Mahwah, NJ, USA, 1988; ISBN 0805802835.

- Brackmann, C. Desenvolvimento Do Pensamento Computacional Através de Atividades Desplugadas Na Educação Básica. Ph.D. Thesis, Universidade Federal do Rio Grande do Sul, Porto Alegre, Brazil, 2017. Available online: https://lume.ufrgs.br/handle/10183/172208 (accessed on 15 July 2024).

- Aguiar, A. O Impacto do Jogo de Regras na Aprendizagem e na Motivação do 1.º CEB. Master’s Thesis, Escola Superior de Educação Paula Frassinetti, Porto, Portugal, 2019; pp. 12–15. Available online: http://repositorio.esepf.pt/bitstream/ (accessed on 15 July 2024).

- Constantinou, V.; Ioannou, A. Development of Computational Thinking Skills through Educational Robotics. In Proceedings of the European Conference on Technology Enhanced Learning, Leeds, UK, 3–6 September 2018; Volume 2193, pp. 1–11.

- Boom, K.D.; Bower, M.; Siemon, J.; Arguel, A. Relationships between Computational Thinking and the Quality of Computer Programs. Educ. Inf. Technol. 2022, 27, 8289–8310.

- Rodriguez, B.; Kennicutt, S.; Rader, C.; Camp, T. Assessing Computational Thinking in CS Unplugged Activities. In Proceedings of the 2017 ACM SIGCSE Technical Symposium on Computer Science Education, SIGCSE 2017, Seattle, WA, USA, 8–11 March 2017; pp. 501–506.

- Zapata-Cáceres, M.; Marcelino, P.; El-Hamamsy, L.; Martín-Barroso, E. A Bebras Computational Thinking (ABC-Thinking) Program for Primary School: Evaluation Using the Competent Computational Thinking Test. Educ. Inf. Technol. 2024, 29, 14969–14998.

- Leong, S.C. On Varying the Difficulty of Test Items. In Proceedings of the International Association for Educational Assessment 32nd Annual Conference, Singapore, 21–26 May 2006; pp. 1–6.

- Lonati, V.; Monga, M.; Malchiodi, D.; Morpurgo, A. How Presentation Affects the Difficulty of Computational Thinking Tasks: An IRT Analysis. In Proceedings of the 17th Koli Calling International Conference on Computing Education Research, Koli, Finland, 16–19 November 2017; pp. 60–69.

- Zapata-Ros, M. Computational Thinking Unplugged. Educ. Knowl. Soc. 2019, 20, 1–29.

- Dagiene, V. Information Technology Contests—Introduction to Computer Science in an Attractive Way. Inform. Educ. 2006, 5, 37–46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).