Abstract

Students’ comprehension of ethical principles and their application in the realm of AI technology play a crucial role in shaping the efficacy and morality of assessment procedures. This study seeks to explore students’ viewpoints on ethical principles within the context of AI-driven assessment activities to illuminate their awareness, attitudes, and practices concerning ethical considerations in educational environments. A systematic review of articles on this topic was conducted using scientometric methods within the Web of Science database. This review identified a research gap in the specialized literature regarding studies that delve into students’ opinions. Subsequently, a questionnaire was administered to students at Transilvania University of Brasov as part of the Information Literacy course. Statistical analysis was performed on the obtained results. Ultimately, students expressed a desire for the Information Culture course to incorporate a module focusing on the ethical use of AI in academic publishing.

1. Introduction

Information literacy is a creative process that essentially involves the production of an original and authentic work of scientific significance. Traditionally, writing a scientific paper requires study and is exclusively the result of human effort. Artificial intelligence (AI) has transformed the world and brought significant changes to the academic environment through learning and data analysis algorithms, revolutionizing the research field. The use of AI has fundamentally changed research methodologies and has proven to be extremely useful in academic work, as AI can adapt to support the needs and abilities of the author [1]. The ability to extract, manipulate, and process information at scale significantly speeds up research and innovation, and AI has brought clear benefits in terms of knowledge, perspectives, and writing opportunities.

However, the ease with which information can be accessed and used by anyone interested can raise issues of research ethics. Research ethics regulate the standards of conduct for researchers. Essentially, they imply that, in their activities, authors of information literacy must be governed by the principles of honesty and academic integrity. Adhering to these principles ensures the protection of the dignity, rights, and well-being of researchers [2].

Research activity, materialized in information literacy, revolves around the idea of intellectual honesty, a notion that involves a commitment to truth, ethical principles, and transparency [3]. In a modern world dominated or even driven by AI, the use of AI technologies in the research process is inevitable. AI makes it possible to access, use, and process data and information from scientific works that belong to their authors.

Generative AI is also trained on data and information derived from such scientific works, meaning that material newly created with AI tools is based on works belonging to others. When these works are used in any way without authorization and proper attribution [4], this constitutes plagiarism. Attribution is a form of intellectual appreciation [5] and a way to show respect to the author of the work.

Plagiarism committed through a lack of attribution and recognition of the source is a form of copyright infringement. Considered an act of intellectual dishonesty, plagiarism involves stealing and deception [6], and it is one of the most serious and frequent forms of academic dishonesty because it conflicts with the sense of justice, rightness, and moral obligation [3]. The unauthorized use of others’ works through AI constitutes a severe breach of intellectual honesty, calls into question the authenticity and originality of works, and undermines the very foundations of scientific competence. In this case, “distinguishing between inspiration and imitation becomes a complex endeavour” [7]. Commitment to intellectual honesty, ethics, and transparency means academic integrity. Academic integrity is based on six values that form the pillars of ethical academic practices: honesty, trust, fairness, respect, responsibility, and courage [8]. Upright academic behavior is the only way to ensure the authenticity and originality of the work and to meet quality standards.

AI technologies provide data and information that can be used in information literacy, and they also contribute to enhancing the quality of research. However, depending on the algorithms they use and on their sources, which may not be known to be accurate, reliable, and impartial, AI systems can generate erroneous data, raising issues of bias, accuracy, relevance, and reasoning [9]. Incorporating such data lowers the quality of work, calls research activities into question, and undermines the credibility of authors. The academic environment has not escaped AI’s influence, making its use inevitable in information literacy. However, authors must learn to maintain the delicate balance between ethics and the temptation of AI technologies.

This balance can be achieved by creating Ethical AI in line with our traditional moral values [10] that have an impact on individuals and societies, and by raising awareness of and respecting the principles of research ethics. “Balancing the innovative contributions of AI with the imperatives of maintaining authentic and original scientific discourse is pivotal for the harmonious advancement of AI-integrated scientific writing” [7]. AI technology is challenging but, at the same time, dangerous because it can alter the sets of rules on which our society is built; it can promote new values and rules that are concerning and will have a considerable role in the future [11]. Therefore, we are vulnerable in its presence, and it must be used cautiously and responsibly. The interaction between humans and intelligent technologies must be a two-way process and relationship that prioritizes the values, norms, and principles of society [12]. “Failure to meet legal and ethical criteria means that AI usage risks the society as a result of innovation and expediency” [13].

In this context, the need for an international legal standard on the responsible use of AI has been felt at the European level. Thus, on 17 May 2024, the Council of Europe adopted its Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law [14]. The aim of this Convention is to ensure that intelligent tools operate in accordance with fundamental rights, democracy, and the rule of law.

2. Materials and Methods

The main aim of this study was to investigate students’ perceptions of the ethical use of artificial intelligence in academic publishing. The research design utilized in this study is a mixed qualitative–quantitative design.

The qualitative approach comprised a scientometric analysis of studies investigating ethical principles in the use of artificial intelligence in scholarly publishing and an investigation of students’ views on the criteria for the ethical and responsible use of artificial intelligence in scholarly publishing. Its results formed the basis of the quantitative study, which had the following objectives:

- To explore the relationship between the program of study and student perceptions of the ethical use of artificial intelligence in scholarly publishing.

- To explore the relationships between students’ degree of trust in artificial intelligence systems and their perceptions of the ethical use of artificial intelligence in publishing.

- To analyze the relationships between students’ prior experience with artificial intelligence and their perceptions of its ethical use in publishing.

Scientometric Analysis of the Field

Scientometric studies are investigations that analyze and measure the scientific output and impact of published articles and research [15,16]. The authors selected the Web of Science database for their scientometric study because of its extensive coverage of scientific publications. VOSviewer is a no-cost and easy-to-use application designed for visualizing and examining scientific networks [17].

The research questions that the authors wanted to address were related to “Artificial Intelligence”, “Ethical Principles”, and academics.

The research team conducted scientometric research to synthesize the most recent advances in the field, current topics, and future development trends. Relevant literature and journals were downloaded from the Web of Science Core Collection, using the search phrase “Artificial Intelligence” AND “Ethical Principles” AND academics. This approach led to the identification of 35 articles in the field, four of which are review articles.

The research team downloaded the records of the 35 articles and, using VOSviewer, mapped the key terms and their associated terms as a network, thus providing a deeper understanding of the themes and relationships within this network.

3. Results

The results included 171 keywords, and the criterion for inclusion in the study was that each keyword appeared at least two times. Thus, 18 keywords were identified and distributed into five distinct groups.
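The inclusion rule described above (keep only keywords that occur at least twice, then relate the retained keywords by co-occurrence) can be illustrated with a minimal Python sketch. The keyword lists, the function names, and the choice to count a keyword once per article are assumptions made for illustration; they are not the study's data, and the sketch does not reproduce VOSviewer's own clustering.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one per article (not the study's data).
articles = [
    ["artificial intelligence", "ethics", "education"],
    ["artificial intelligence", "ethical principles", "large language model"],
    ["ai ethics", "artificial intelligence", "robots"],
    ["ethics", "education", "automation"],
]

MIN_OCCURRENCES = 2  # inclusion threshold stated in the text


def frequent_keywords(keyword_lists, min_occurrences):
    """Count each keyword once per article and keep those meeting the threshold."""
    counts = Counter(kw for kws in keyword_lists for kw in set(kws))
    return {kw: n for kw, n in counts.items() if n >= min_occurrences}


def cooccurrences(keyword_lists, kept):
    """Count how often two retained keywords appear in the same article."""
    pairs = Counter()
    for kws in keyword_lists:
        present = sorted(set(kws) & set(kept))
        pairs.update(combinations(present, 2))
    return pairs


kept = frequent_keywords(articles, MIN_OCCURRENCES)
links = cooccurrences(articles, kept)
print(kept)
print(links.most_common(3))
```

In this toy input only three keywords survive the threshold, which mirrors how a large raw keyword set can shrink to a much smaller set of network nodes.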

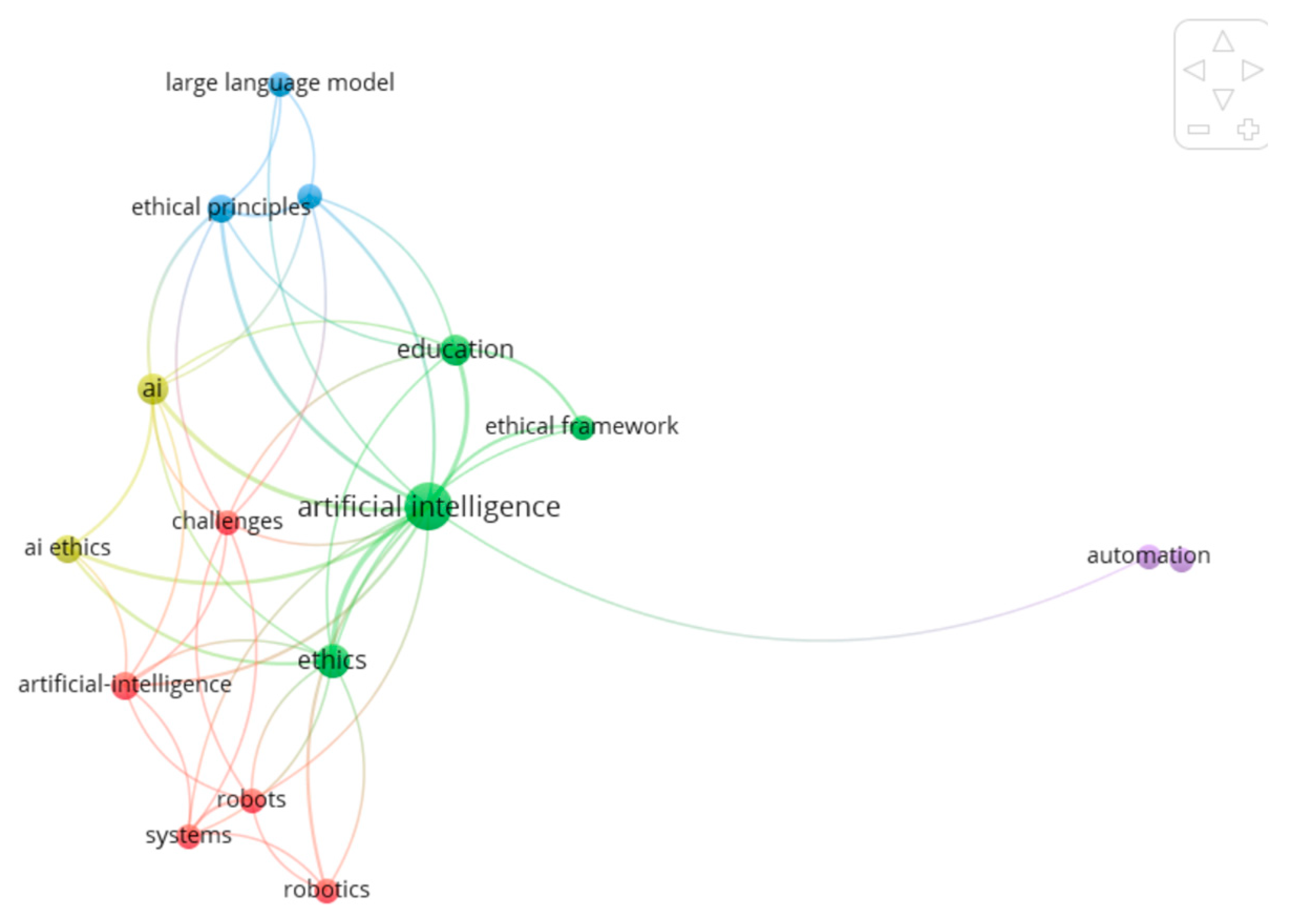

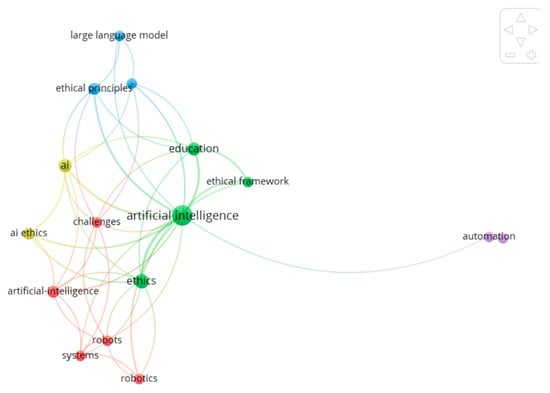

For a better visualization of the clusters and the relationships between terms, see Figure 1.

Figure 1. Visualization of clusters.

Figure 1 is a network visualization related to artificial intelligence (AI) and its associated concepts. The visualization represents the connections and occurrences of various terms and concepts within a specific dataset. It utilizes different colors to indicate clusters of related topics.

Key elements in the visualization include:

- Central Node: “artificial intelligence” is the major central node, with several lines branching out to related concepts.

- Clusters: The clusters are color-coded and highlight various themes.

  - Green Cluster: Includes terms like “artificial intelligence”, “ethical framework”, “education”, and “ethics”.

  - Blue Cluster: Encompasses “large language model” and “ethical principles”.

  - Yellow Cluster: Contains “ai” and “ai ethics”.

  - Red Cluster: Pertains to “artificial intelligence”, “challenges”, “robots”, “robotics”, and “systems”.

3.1. Concepts

Terms such as “ethical principles”, “education”, “challenges”, “robots”, and “automation” are linked directly to artificial intelligence, showing the multifaceted implications and areas of study within AI.

The research team assigned 65 articles to the five clusters, according to the topic developed in each cluster. There was a review process of the articles associated with each cluster with the aim of developing and refining the identified research directions. In the review process it was found that 55 papers did not fit within the established research directions and were subsequently excluded from the analysis. Thus, 10 articles relevant to the analysis and synthesis of information in the identified research directions remained in the search.

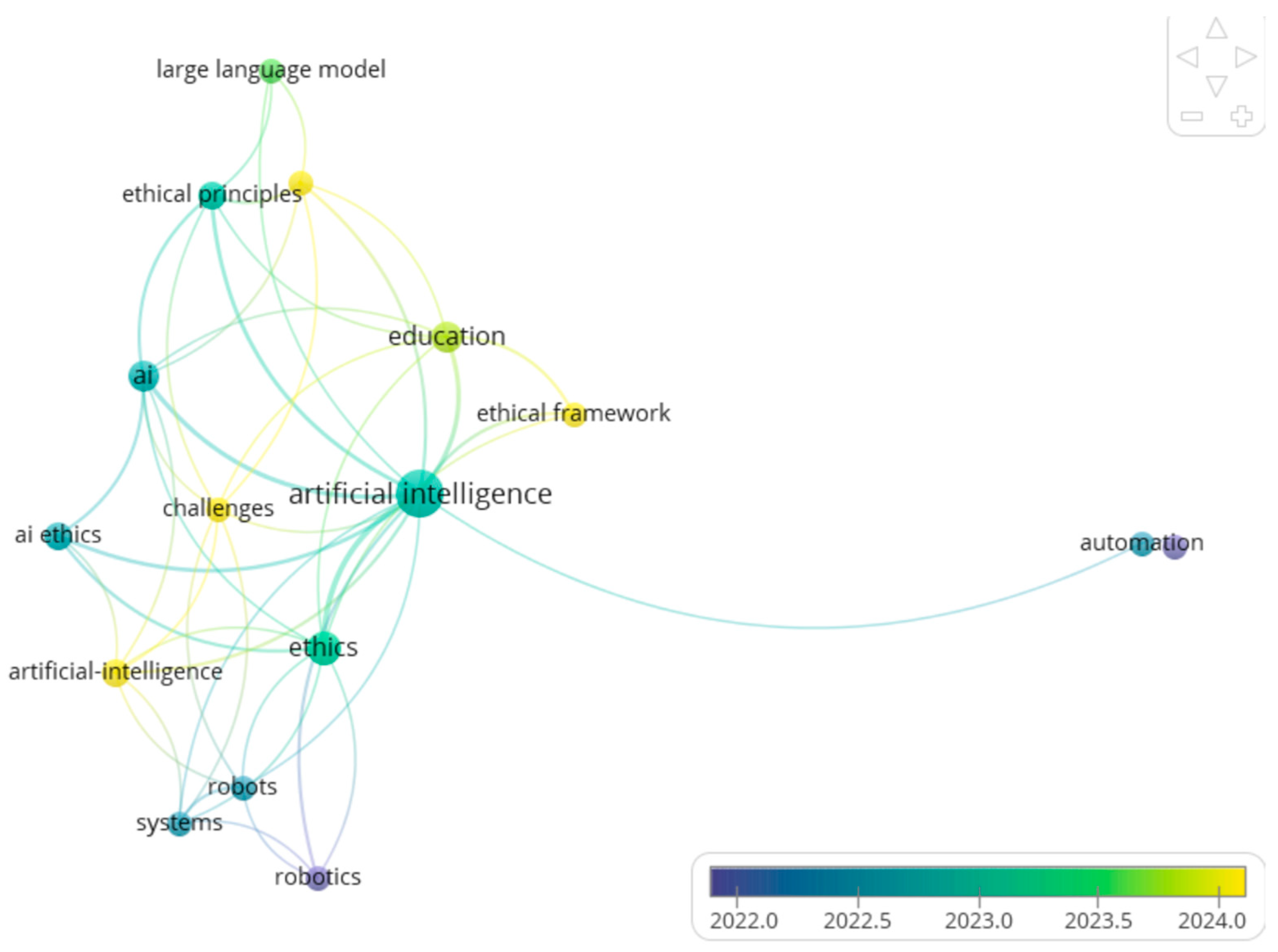

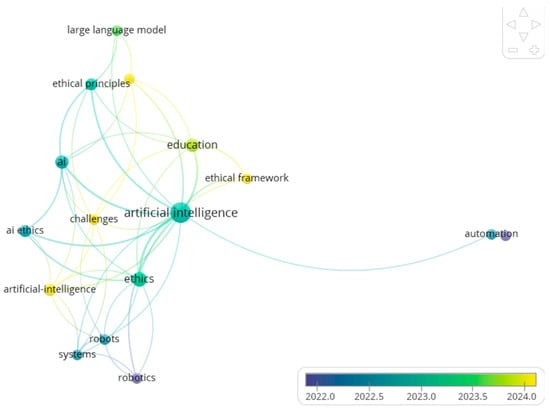

Figure 2 is a network visualization generated by VOSviewer, a software tool for constructing and visualizing bibliometric networks. This network represents the relationships and co-occurrences of terms related to ethical implications and frameworks in artificial intelligence (AI).

Figure 2. Network graph.

Here is what can be observed from the visualization:

Main Nodes and Connections:

- The central and most significant node is “artificial intelligence”, indicating that it is the primary focus of the data.

- Other prominent nodes connected to “artificial intelligence” include “ethics”, “ethical framework”, “education”, and “automation”.

3.2. Clusters

Terms are grouped into clusters, identified by different colors, representing their relevance and relationships. Terms in the same cluster are more closely related to each other. The clusters and links’ colors also appear to have a time gradient from 2022 to 2024.

Time Scale: A color scale at the bottom indicates the timeline from 2022 to 2024. It shows that the terms progress over time, suggesting trends and changes in publications or discussions around these topics.

Additional Terms: Other significant terms include “ethical principles”, “challenges”, “ai ethics”, “robots”, “robotics”, and “systems”.

Layout: The layout of the graph is structured to show the interconnectedness of topics with each other, where nodes closer to each other have stronger or more frequent co-occurrences.

Figure 3 is a diagram showing different authors and their associated years of publication, illustrating a network of connections. This map focuses on academic references related to AI ethics, and its elements are described below.

Figure 3. Sources.

Nodes (circles with names):

Nodes represent authors and years of publication. For example, “ryan (2021)” signifies a work by an author named Ryan published in 2021.

Lines (edges between nodes):

Lines indicate relationships or co-occurrences between the works of these authors.

3.3. Colors

Different colors represent different clusters, which correspond to different topics, themes, or communities within the AI ethics literature.

Labels:

- Ryan (2021) [18]

- Verma (2023) [19]

- Correa (2023) [20]

- Textor (2022) [21]

- Omari (2016) [22]

- Noain-Sanchez (2022) [23]

- Molnar-Gabor (2020) [24]

- Cole (2022) [25]

- Coates (2019) [26]

- Barreiro-Ares (2023) [27]

We identified a research gap. Existing studies often focus on the ethical implications of AI in education from a broader institutional or political standpoint, overlooking the nuanced experiences and perspectives of students who directly interact with AI technology in assessment tasks.

By exploring students’ knowledge and practices regarding ethical principles in the use of AI, this study aims to address this gap in the literature and provide a more comprehensive understanding of the ethical challenges and opportunities presented by AI technology in education.

3.4. Investigating Students’ Views on Criteria for Ethical and Responsible Use of Artificial Intelligence in Academic Publishing

This study seeks to bridge the gap between theoretical discussions of ethics in AI and the practical realities faced by students in educational settings.

By exploring students’ practices in relation to ethical principles in AI-based assessment tasks, this research can help identify potential gaps in students’ ethical decision-making processes.

By examining students’ knowledge and practices regarding ethical principles in the context of AI-driven assessment tasks, this research aims to contribute to the development of ethical guidelines and best practices for the responsible use of AI in education.

The quantitative study had the following hypotheses:

- There are significant differences between students’ perceptions of the ethical use of artificial intelligence in scholarly publishing depending on the program of study followed.

- Students’ degree of trust in artificial intelligence systems influences their perceptions of its ethical use in publishing.

- Students’ previous experience with artificial intelligence influences their perceptions of its ethical use in publishing.

3.5. Description of Participants

The research was conducted in March–April 2024 using an online questionnaire.

We aimed to collect students’ perceptions and opinions on the ethical use of artificial intelligence (AI) in various fields. On the front page of the questionnaire, we informed the participants about the following aspects:

- Data confidentiality: All responses to this questionnaire will be treated confidentially and used for research purposes only. The data collected will be anonymized and will not be associated with the identity of the participant.

- Voluntary participation: Participation in this survey is completely voluntary. There is no obligation to answer all questions and you can decide to withdraw at any time without repercussions.

- Participant Rights: You have the right to express your opinions and perceptions honestly. If you have any questions or concerns, please contact us for further clarification.

The link https://www.surveymonkey.com/r/VGNHXTG (accessed on 31 March 2024) was sent to students at Transilvania University of Brasov: those in the Communication and Public Relations and the Digital Media specializations within the Faculty of Sociology and Communication, and engineering students within the Faculty of Product and Environmental Design. As part of the Information Culture course, these students watched a film, https://www.youtube.com/watch?v=qDhx5HVJQwI (accessed on 31 March 2024), produced within the European RespectNET project, which explores the risks and opportunities of artificial intelligence in academic writing.

A total of 192 students participated (male = 57, 29.7%; female = 134, 69.8%). Of these, 108 (56.2%) were enrolled in technical/engineering programs of study and 84 (43.8%) in social-humanities programs. Informed consent was obtained before the start of the study. Information on the nature and purpose of the research and details of data confidentiality were presented at the top of the first page of the online survey.

The instrument had 16 items, 4 of which referred to socio-demographic indicators (such as age, gender, education level, and field of study) to contextualize responses. The questionnaire was constructed to collect both general and specific information about students’ views on the ethical use of artificial intelligence. The remaining items investigated students’ opinions on the ethical use of artificial intelligence in academic publishing. The issues considered were familiarity with the application of AI in academic writing, current use of AI in academic work, ethical attitudes and stances on the use of AI in academic writing, and criteria for the responsible and ethical use of AI in academic writing. The items were formulated following a review of the relevant literature. The questionnaire was presented to a panel of experts from the university. Following their feedback, the items were revised to ensure clarity and relevance, with the aim of capturing a comprehensive understanding of students’ ethical considerations related to AI. The internal consistency coefficient (Cronbach’s alpha) obtained for the final form of the instrument was 0.78, which allowed for further statistical analysis.
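For readers unfamiliar with the internal consistency coefficient mentioned above, the following minimal Python sketch computes Cronbach's alpha from a respondents-by-items matrix using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The response matrix and the function name are hypothetical and serve only as an illustration; the study's coefficient of 0.78 was obtained from the actual questionnaire data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers: 6 respondents x 4 Likert items (not the study's data).
responses = [
    [4, 5, 4, 4],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 3],
    [4, 4, 5, 5],
    [1, 2, 2, 1],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together; a common rule of thumb treats values of about 0.7 or higher as acceptable for further analysis.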

3.6. Statistical Analyses

All statistical analyses (descriptive, correlations, and non-parametric tests for comparison of means) were performed with SPSS 23. The results reported in Table 1 and Table 2 suggest that there are significant differences between students’ perceptions of the ethical use of artificial intelligence in scholarly publishing depending on the program of study followed (U = 3370, z = −3.219, p = 0.01).

Table 1. Ranks.

Table 2. Test statistics.
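The U and z values reported above are of the kind produced by a Mann-Whitney U test. The text does not name the test, so that reading is an assumption. Under that assumption, the following minimal Python sketch shows how U and its normal-approximation z (with a correction for tied ranks) can be computed for two independent groups. The Likert answers and function names are hypothetical, not the study's data.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney(group_a, group_b):
    """Return (U, z), where U is the smaller of the two U statistics."""
    n1, n2 = len(group_a), len(group_b)
    pooled = list(group_a) + list(group_b)
    ranks = average_ranks(pooled)
    u_a = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u_a, n1 * n2 - u_a)
    n = n1 + n2
    # Tie correction: each group of t tied values reduces the variance.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        raise ValueError("all observations are identical; z is undefined")
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    return u, z


# Hypothetical 5-point Likert answers for two programs of study.
engineering = [2, 3, 3, 4, 2, 3, 1, 3]
social_humanities = [4, 5, 3, 4, 5, 4, 3, 5]
u, z = mann_whitney(engineering, social_humanities)
print(u, round(z, 3))
```

As a rough consistency check, and assuming that all 108 engineering and 84 social-humanities respondents answered the item, the uncorrected normal approximation for U = 3370 gives a z of about −3.05. A tie correction reduces the variance and moves z further from zero, which is the direction of the reported −3.219.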

Thus, students from the socio-human field considered, to a greater extent than those from the engineering field, that AI overuse can compromise the quality of academic work. The results also show no significant differences between groups regarding the statement that the use of AI in information literacy can lead to unethical bias in academic assessment. Students in both groups believed that information literacy is linked to the responsible use of AI and that transparency in the development and use of AI in academic contexts is important.

As shown in Table 3, students who did not make much use of AI in their academic work believed that the use of AI in information literacy can lead to bias that disregards ethics in academic evaluation (those who have access to AI being favored or evaluated more highly than those who do not) (r = −0.237 **, p ≤ 0.01). Likewise, those who had ever used AI to assist in their information literacy or research did not believe that using AI in information literacy can lead to unethical bias in academic evaluation (r = −0.199 **, p ≤ 0.01).

Table 3. Correlations.

Moreover, the results suggest that students who did not make much use of AI in their academic work believed that a reliance on it may compromise the quality of academic work (r = −0.167 *, p ≤ 0.05). Likewise, those who considered transparency in the development and use of AI in academic contexts to be important stated that they did not make much use of AI in academic projects (r = −0.164 *, p ≤ 0.05).
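To make the reported coefficients easier to interpret, the following minimal Python sketch computes a correlation coefficient and the t value used to test it against zero. The text does not state which coefficient was used, so Pearson's r is shown here as an assumption; a Spearman coefficient would apply the same formula to ranked answers. The data and function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical 5-point Likert answers from eight respondents (not study data).
ai_use = [1, 2, 2, 3, 4, 4, 5, 5]        # "I use AI in my academic work"
bias_concern = [5, 4, 5, 3, 3, 2, 2, 1]  # "AI can lead to unethical bias"

r = pearson_r(ai_use, bias_concern)
print(round(r, 3), round(t_statistic(r, len(ai_use)), 3))
```

A negative r means that higher values on one item tend to accompany lower values on the other. Because the t value grows with sample size, a small coefficient can still be statistically significant in a sample of nearly 200 respondents.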

4. Discussion

The present study aimed to investigate students’ perceptions of the ethical use of artificial intelligence in academic publishing.

The qualitative approach consisted of a scientometric analysis of studies investigating ethical principles in the use of artificial intelligence in academic publishing. The results of this analysis showed the current concerns in the literature regarding the application of AI in education. They also highlighted the attention that recent studies in this area give to its ethical use.

The students’ views on criteria for ethical and responsible use of artificial intelligence in scholarly publishing were assessed in the qualitative study. The main issues that students considered important were the need for education on the use of AI, the need for transparency in the use of AI, the need for academic standards in its use, the need for verification of the products produced with AI, copyright issues, source credibility issues, and data privacy issues.

They also believed that the role of AI in academic activity should be clearly established as that of a tool that helps with organization and inspiration, not a complete substitute for the author in producing the final product.

Quantitative analysis of the results revealed the existence of differences in opinions among students depending on the degree program in which they are enrolled. Thus, students in the socio-human field considered to a greater extent that AI overuse may compromise the quality of academic work than those in the engineering field.

Another interesting finding of the study is that, regardless of profile, students believed that information literacy is deeply intertwined with the responsible use of AI.

By investigating students’ perspectives on ethical principles in the context of AI-driven assessment tasks, this study aims to shed light on the awareness, attitudes, and behaviors of students towards ethical considerations in educational settings. Understanding how students navigate the ethical challenges posed by AI technology in assessments can provide valuable insights for educators, policymakers, and researchers to develop guidelines and strategies that promote ethical AI use in education.

Students’ understanding of these ethical principles and their practices in relation to AI technology are essential factors that can influence the effectiveness and ethicality of assessment processes. AI has become increasingly prevalent in various aspects of education, including assessment tasks, offering new opportunities and challenges for both educators and students. The use of AI in assessment tasks has the potential to streamline the evaluation process, provide personalized feedback, and enhance learning outcomes. In the context of AI-driven assessment tasks, ethical principles play a critical role in ensuring fairness, transparency, and accountability.

The existing correlations between students’ prior experience with artificial intelligence, students’ degree of trust in artificial intelligence systems and their perceptions of its ethical use in publishing are significant; therefore, hypotheses 2 and 3 are confirmed. However, we note the low values of the correlation coefficients, which invites the investigation of other possible factors involved in this topic.

While there is a growing call for “ethical artificial intelligence”, current guidelines and frameworks for AI ethics tend to focus narrowly on issues such as privacy, transparency, governance, and non-discrimination within a liberal political framework. One of the main challenges highlighted is the need to translate high-level ethical principles into actual technology design, development, and use in the labor process. Organizations often interpret ethics in an ad hoc manner, treating it as just another technological problem with technical solutions, and regulations have not adequately addressed the impact of AI on workers.

The idea of bridging the gap between abstract ethical principles and practical implementation in AI technology is crucial for ensuring that AI systems are developed and used in a responsible manner. By proposing principles and accountability mechanisms, Cole [25] aims to provide a framework that guides the development and deployment of AI systems while prioritizing fairness and ethical considerations in the workplace. This approach can help address potential biases, discrimination, and other ethical issues that may arise when AI is used in various industries. It is important to consider how these principles can be effectively implemented and enforced to create a more ethical and equitable AI ecosystem.

In a recent study, Noain-Sánchez [23] discussed the increasing prevalence of artificial intelligence (AI) in mass media and news agency newsrooms over the past decade, sparking debates about its potential negative impacts on journalism, particularly in terms of quality standards and ethical principles. The study aimed to explore the application of AI in newsrooms, focusing on its impact on news-making processes, media routines, and professional profiles, while also highlighting both the benefits and shortcomings of AI technology and addressing the ethical dilemmas that arise.

The study collected insights from a diverse international sample, including individuals from the United States, the United Kingdom, Germany, and Spain, through 15 detailed interviews conducted in 2019 and 2021 with journalists, media professionals, academics, industry experts, and technology providers at the forefront of AI efforts. Interviewees generally agreed that AI has the potential to enhance journalists’ capabilities by saving time, improving efficiency in news production processes, and boosting productivity in the mass media industry.

However, the study also identified a need for a shift in mindset within the media environment and emphasized the importance of training to ensure proper utilization of AI tools, given the observed lack of knowledge in this area. Furthermore, the emergence of ethical issues surrounding AI implementation underscores the necessity for ongoing monitoring and supervision of AI processes to address potential ethical concerns.

5. Limitations

This study used a quantitative design to investigate students’ perceptions of AI. However, this aspect is one of the limitations of this research, as complementary investigation through qualitative endeavors would have provided additional valuable data to complement the chosen model. We suggest this as a proposal for future research.

Another limitation is the limited diversity of the sample. A larger and more diverse sample could have increased the generalizability of the findings.

6. Conclusions

This study highlights the dual potential of AI to benefit and challenge academics. It emphasizes the importance of embracing AI technology while prioritizing training, ethical considerations, and oversight in order to navigate the evolving AI landscape effectively, and of teaching students to use AI to their advantage, as a helpful tool and not as a means of evasion. Education on the use of AI is therefore very important. This research reveals a significant gap between the perspectives highlighted by the scientometric analysis of ethics in AI and the practical challenges faced by students. While the current literature provides ethical guidance applicable to the institutional context, the quantitative findings highlight that students often face complex, context-specific issues that are not fully addressed by existing ethical discussions. This gap suggests the importance of developing academic frameworks that emphasize actionable, context-based ethical practices, thereby preparing students to engage responsibly and effectively with AI technology in educational contexts. The present study also highlights differing views among students depending on their field of study, as well as a consensus among them on using AI responsibly and with respect for ethical considerations.

Although a quantitative research design does not provide sufficient depth of information, it is nevertheless a starting point for new approaches in academic teaching. It would be interesting to trace the impact of actionable educational interventions to support educators in teaching AI ethics, such as the use of case studies based on AI applications, simulations, role-playing in which students interpret different points of view (of AI developers, researchers, or educational policy makers) on the use of AI in research labs.

Author Contributions

Conceptualization, A.M.L., D.P., D.G.I., A.I.D. and A.R.; methodology, A.M.L., D.P. and A.R.; validation, D.P. and A.R.; formal analysis, D.P.; investigation, A.R.; resources, D.G.I.; data curation, A.I.D.; writing—original draft preparation, D.P. and A.R.; writing—review and editing, D.P. and A.R.; visualization, A.M.L. and A.R.; supervision, A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in [ASPECKT DSPACE] at http://aspeckt.unitbv.ro/jspui/handle/123456789/2729 (accessed on 4 July 2024) (Reference Number 123456789/2729).

Acknowledgments

In this manuscript, DeepL Translator, developed by DeepL SE, was used to check the accuracy of the text, and Mendeley Reference Manager (Version 2.125.2) was used exclusively for the generation of the bibliography. The authors are fully responsible for the originality, validity, and integrity of the content of the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Nguyen, Q.H. AI and Plagiarism: Opinion from Teachers, Administrators and Policymakers. Proc. Asiacall Int. Conf. 2023, 4, 75–85. [Google Scholar] [CrossRef]

- Ensuring Ethical Standards and Procedures for Research with Human Beings. Available online: https://www.who.int/activities/ensuring-ethical-standards-and-procedures-for-research-with-human-beings (accessed on 21 July 2024).

- Prashar, A.; Gupta, P.; Dwivedi, Y.K. Plagiarism awareness efforts, students’ ethical judgment and behaviors: A longitudinal experiment study on ethical nuances of plagiarism in higher education. Stud. High. Educ. 2023, 49, 929–955. [Google Scholar] [CrossRef]

- Devlin, M.; Gray, K. In their own words: A qualitative study of the reasons Australian university students plagiarize. High. Educ. Res. Dev. 2007, 26, 181–198. [Google Scholar] [CrossRef]

- Eaton, S.E. Postplagiarism: Transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. Int. J. Educ. Integr. 2023, 19, 23. [Google Scholar] [CrossRef]

- Montoneri, B. Plagiarism and Ethical Issues: A Literature Review on Academic Misconduct. Available online: https://www.researchgate.net/publication/357298979 (accessed on 3 July 2024).

- Carobene, A.; Padoan, A.; Cabitza, F.; Banfi, G.; Plebani, M. Rising adoption of artificial intelligence in scientific publishing: Evaluating the role, risks, and ethical implications in paper drafting and review process. Clin. Chem. Lab. Med. 2023, 62, 835–843. [Google Scholar] [CrossRef] [PubMed]

- Bozkurt, A. GenAI et al.: Cocreation, Authorship, Ownership, Academic Ethics and Integrity in a Time of Generative AI. Open Praxis 2024, 16, 1–10.

- Hosseini, M.; Rasmussen, L.M.; Resnik, D.B. Using AI to write scholarly publications. Account. Res. 2023, 31, 715–723.

- Borg, J.S. The AI field needs translational Ethical AI research. AI Mag. 2022, 43, 294–307.

- Rip, A. Pervasive Normativity and Emerging Technologies. In Ethics on the Laboratory Floor; Palgrave Macmillan: London, UK, 2013; pp. 191–212.

- Schintler, L.A. A Critical Examination of the Ethics of AI-Mediated Peer Review. Available online: https://arxiv.org/abs/2309.12356 (accessed on 3 July 2024).

- Anshari, M.; Hamdan, M.; Ahmad, N.; Ali, E.; Haidi, H. COVID-19, artificial intelligence, ethical challenges and policy implications. AI Soc. 2022, 38, 707–720.

- Council of Europe. Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law; Council of Europe: Strasbourg, France, 2024.

- Pantea, I.; Repanovici, A.; Cocuz, M.E. Analysis of Research Directions on the Rehabilitation of Patients with Stroke and Diabetes Using Scientometric Methods. Healthcare 2022, 10, 773.

- Pantea, I.; Roman, N.; Repanovici, A.; Drugus, D. Diabetes Patients’ Acceptance of Injectable Treatment, a Scientometric Analysis. Life 2022, 12, 2055.

- VOSviewer. VOSviewer—Visualizing Scientific Landscapes. Available online: https://www.vosviewer.com/ (accessed on 16 May 2023).

- Ryan, M.; Stahl, B.C. Artificial intelligence ethics guidelines for developers and users: Clarifying their content and normative implications. J. Inf. Commun. Ethics Soc. 2020, 19, 61–86.

- Verma, S.; Garg, N. The trend and future of techno-ethics: A bibliometric analysis of three decades. Libr. Hi Tech 2023, ahead of print.

- Corrêa, N.K.; Galvão, C.; Santos, J.W.; Del Pino, C.; Pinto, E.P.; Barbosa, C.; Massmann, D.; Mambrini, R.; Galvão, L.; Terem, E.; et al. Worldwide AI ethics: A review of 200 guidelines and recommendations for AI governance. Patterns 2023, 4, 100857.

- Textor, C.; Zhang, R.; Lopez, J.; Schelble, B.G.; McNeese, N.J.; Freeman, G.; Pak, R.; Tossell, C.; de Visser, E.J. Exploring the Relationship Between Ethics and Trust in Human–Artificial Intelligence Teaming: A Mixed Methods Approach. J. Cogn. Eng. Decis. Mak. 2022, 16, 252–281.

- Omari, R.M.; Mohammadian, M. Rule based fuzzy cognitive maps and natural language processing in machine ethics. J. Inf. Commun. Ethics Soc. 2016, 14, 231–253.

- Noain-Sánchez, A. Addressing the Impact of Artificial Intelligence on Journalism: The perception of experts, journalists and academics. Commun. Soc. 2022, 35, 105–121.

- Molnár-Gábor, F. Artificial Intelligence in Healthcare: Doctors, Patients and Liabilities. In Regulating Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2020; pp. 337–360.

- Cole, M.; Cant, C.; Spilda, F.U.; Graham, M. Politics by Automatic Means? A Critique of Artificial Intelligence Ethics at Work. Front. Artif. Intell. 2022, 5, 869114.

- Coates, D.L.; Martin, A. An instrument to evaluate the maturity of bias governance capability in artificial intelligence projects. IBM J. Res. Dev. 2019, 63, 7:1–7:15.

- Barreiro-Ares, A.; Morales-Santiago, A.; Sendra-Portero, F.; Souto-Bayarri, M. Impact of the Rise of Artificial Intelligence in Radiology: What Do Students Think? Int. J. Environ. Res. Public Health 2023, 20, 1589.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).