A Systematic Review of Generative AI for Teaching and Learning Practice

Abstract

1. Introduction

- RQ1. What is the evolutionary productivity in the field in terms of the most influential journals, most cited articles, and authors, including geographical distribution of authorship?

- RQ2. What are the main trends and core themes emerging from the extant literature?

- This review provides a comprehensive overview of the current state of research on GenAI for teaching and learning in HE, which helps researchers identify the evolutionary progression (most influential journals, articles, and authors, including the geographical distribution of authorship), prevailing topics, and research directions within the field;

- This review synthesises the findings to generate insights into a holistic perspective on the potential, effectiveness, and limitations of GenAI use for teaching and learning in HE;

- This review identifies research gaps that require further investigation, guiding future research work.

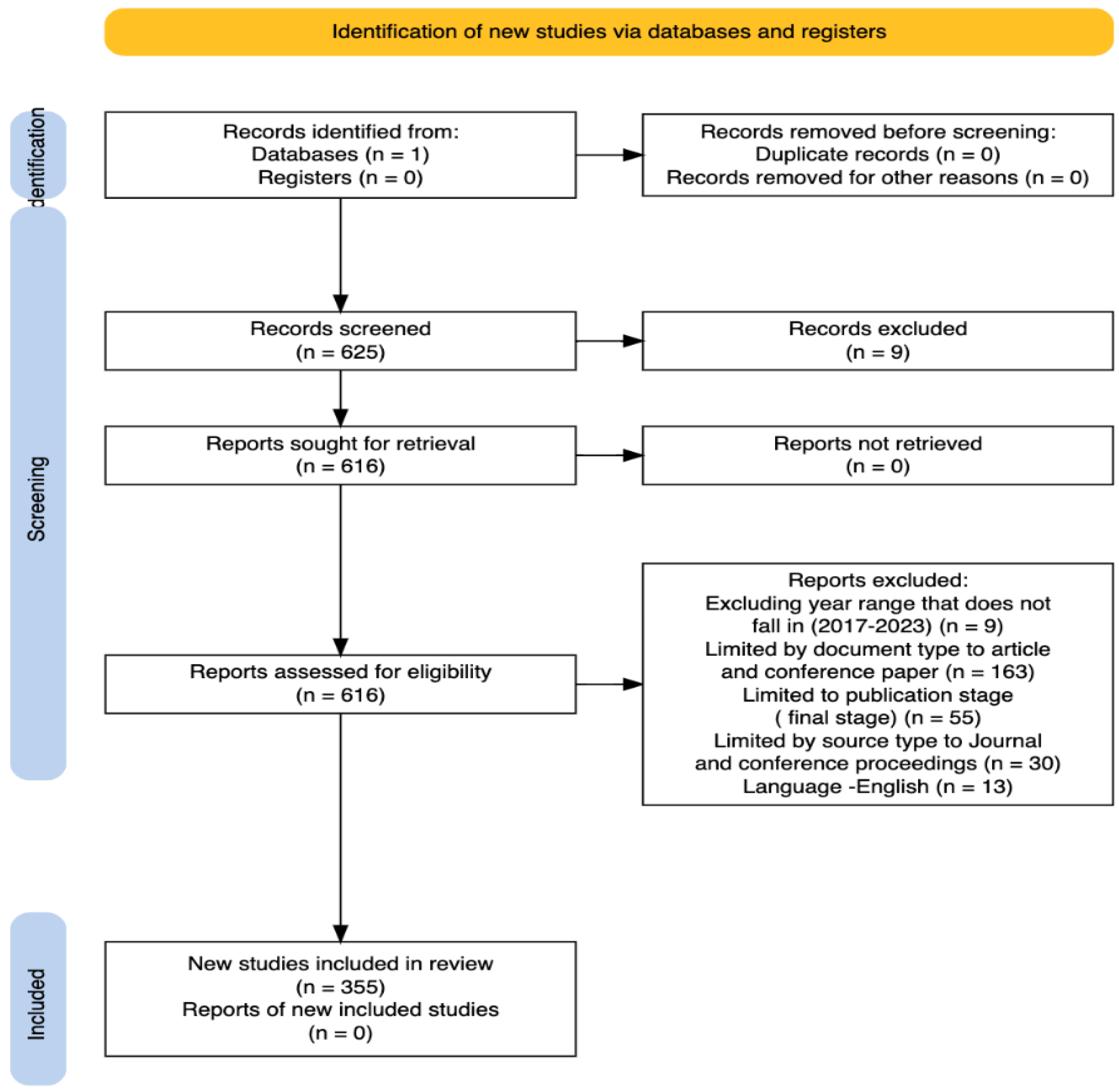

2. Methodology

2.1. Database Search and Eligibility Criteria

2.2. Data Quality Assessment

2.3. Bibliometric Approach

2.4. Topic Modelling Approach

3. Results and Discussion

3.1. Bibliometric Analysis Results

3.1.1. Documents by Publication Type

3.1.2. Publications per Year

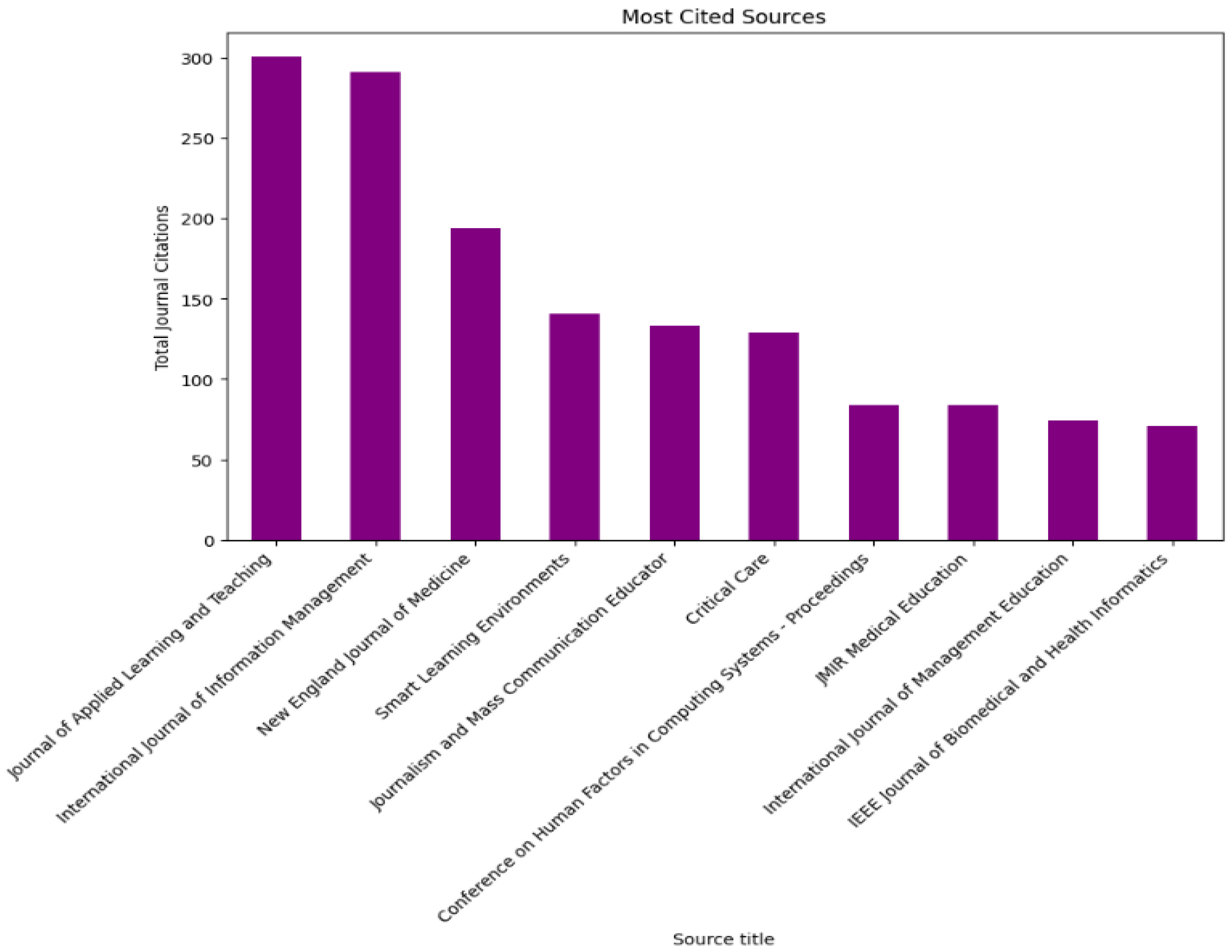

3.1.3. Citation per Source Title

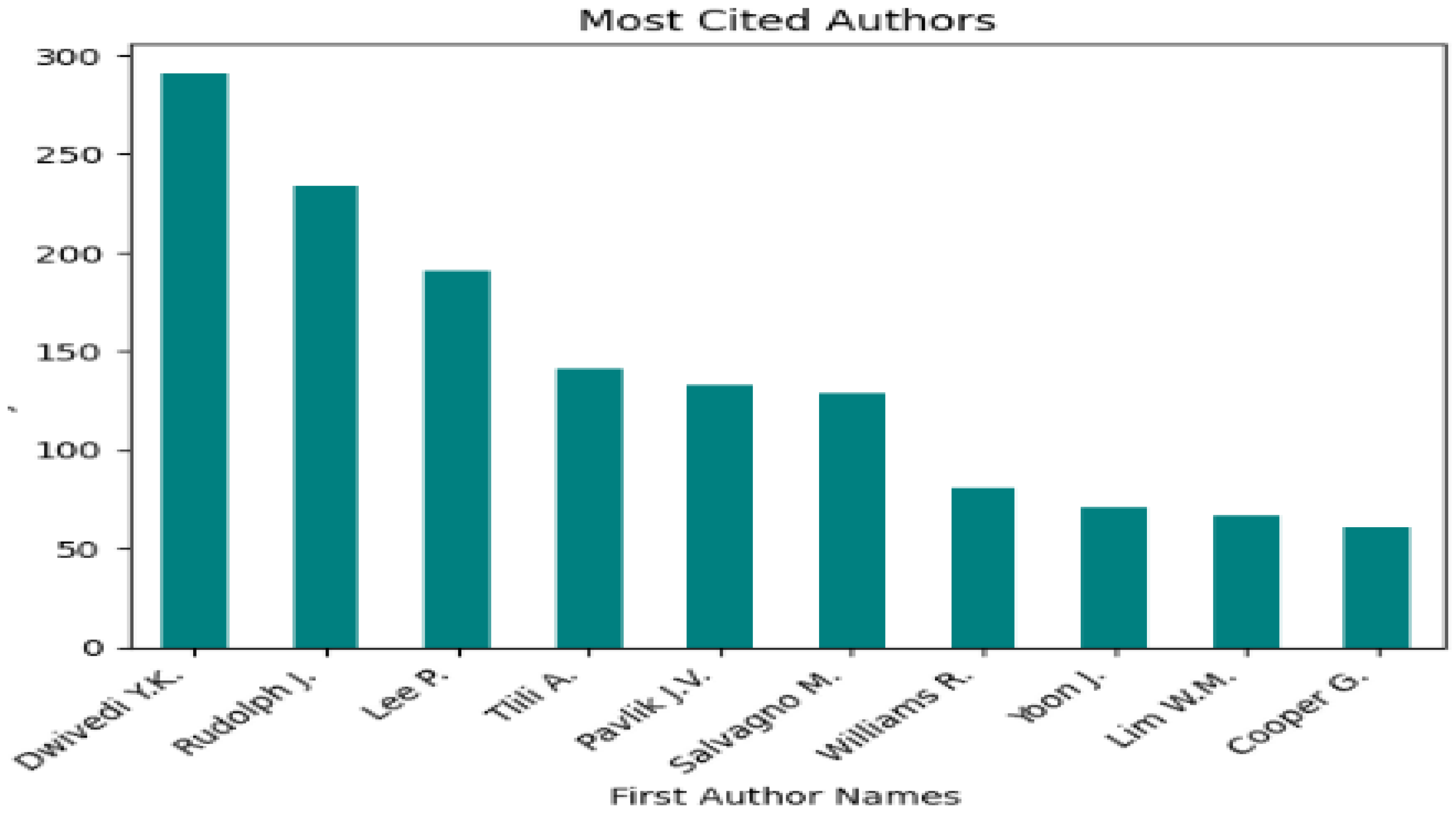

3.1.4. Citations per First Authors

3.1.5. Publications/Citations per Year

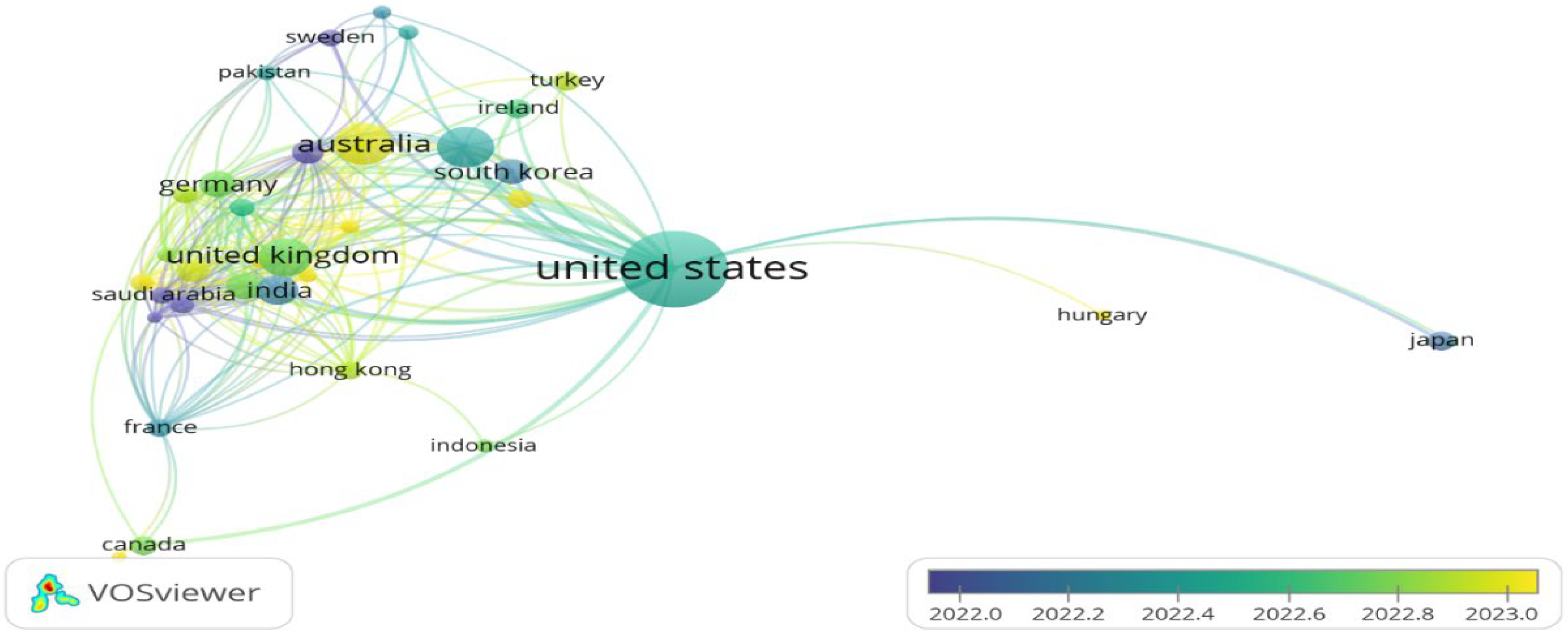

3.1.6. Co-Authorship

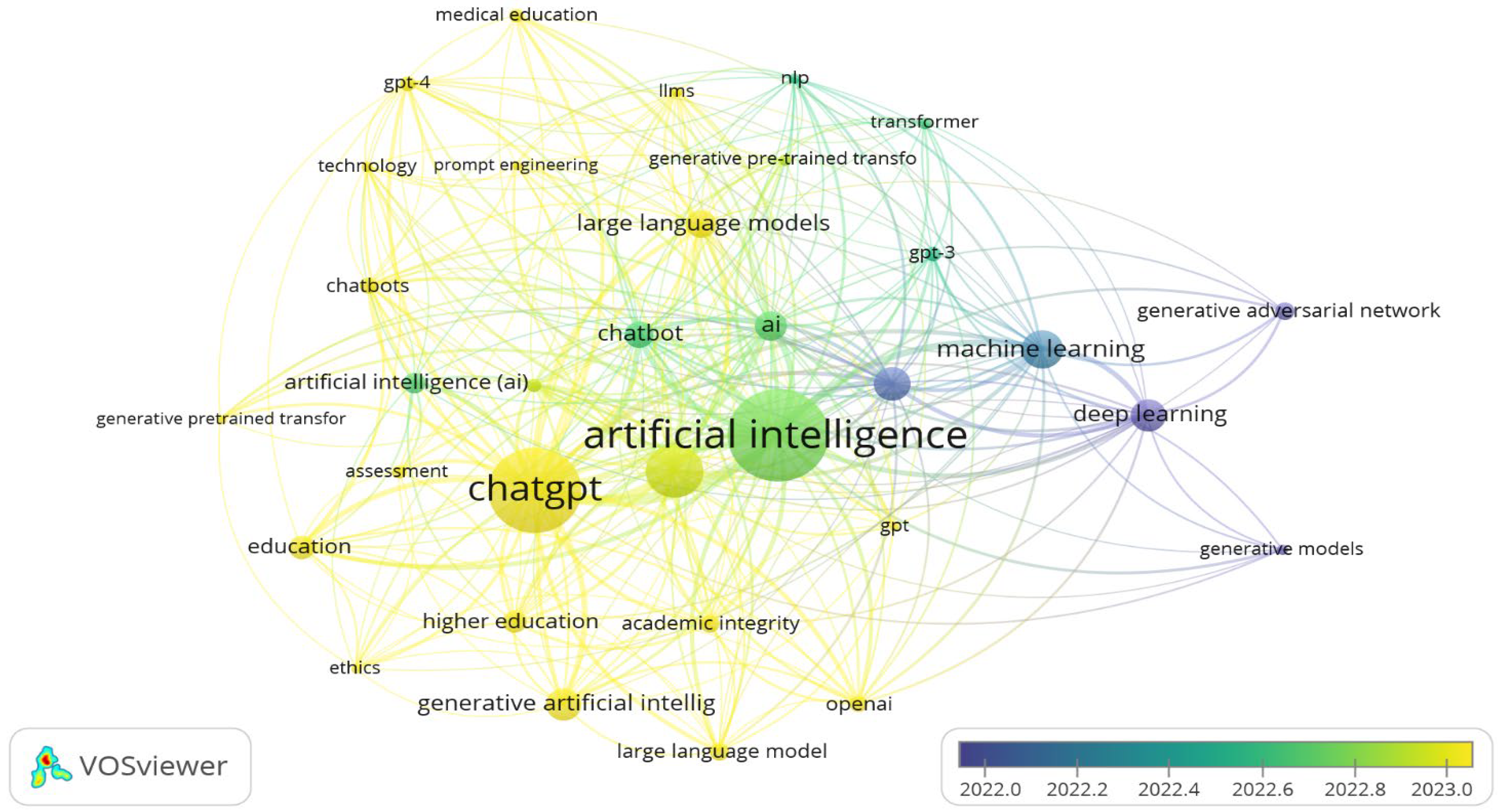

3.1.7. Co-Occurrence

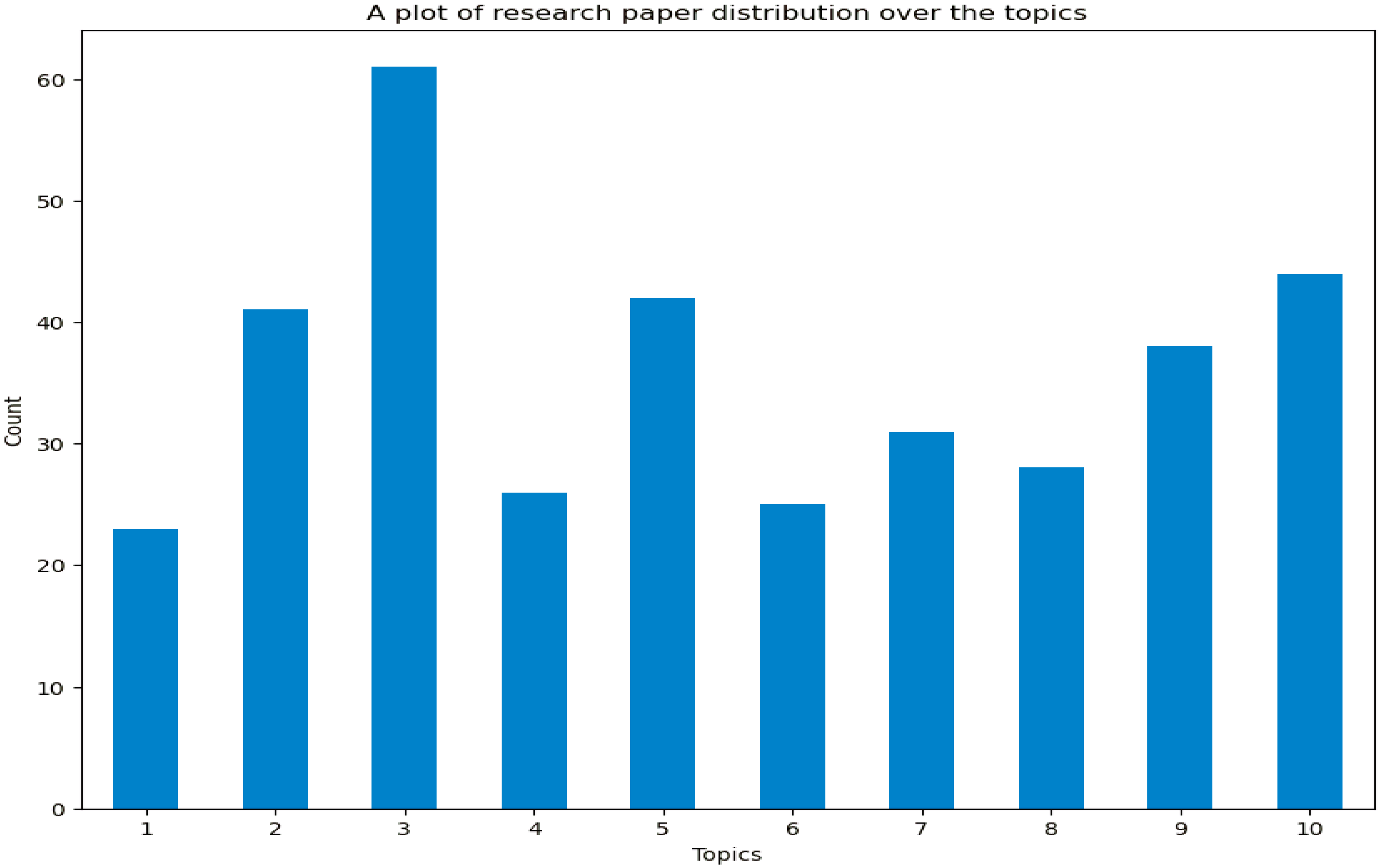

3.2. Topic Modelling Results

- Topic 1: Implications of GenAI (23 research papers)

- Topic 2: GenAI for education and research (40 research papers)

- Topic 3: Support system (60 research papers)

- Topic 4: Bias and inclusion (26 research papers)

- Topic 5: Intelligent tutoring system (42 research papers)

- Topic 6: Machine learning/AI applications (25 research papers)

- Topic 7: Performance evaluation on exam questions (30 research papers)

- Topic 8: GenAI for writing (28 research papers)

- Topic 9: Ethical and regulatory considerations (37 research papers)

- Topic 10: Deep learning/AI models (44 research papers)
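
The topic sizes listed above can be tallied to see how the corpus is split across topics. The snippet below is a minimal sketch for checking that arithmetic; the names `topic_sizes` and `topic_shares` are illustrative and do not come from the review.

```python
# Paper counts per topic, copied from the list above.
topic_sizes = {
    "Implications of GenAI": 23,
    "GenAI for education and research": 40,
    "Support system": 60,
    "Bias and inclusion": 26,
    "Intelligent tutoring system": 42,
    "Machine learning/AI applications": 25,
    "Performance evaluation on exam questions": 30,
    "GenAI for writing": 28,
    "Ethical and regulatory considerations": 37,
    "Deep learning/AI models": 44,
}


def topic_shares(sizes):
    """Return each topic's share of the corpus as a percentage (one decimal)."""
    total = sum(sizes.values())
    return {label: round(100 * n / total, 1) for label, n in sizes.items()}


total_papers = sum(topic_sizes.values())
shares = topic_shares(topic_sizes)
largest = max(topic_sizes, key=topic_sizes.get)

print(f"{total_papers} papers; largest topic: {largest} ({shares[largest]}%)")
```

The total matches the sum of the yearly publication counts reported in the bibliometric results.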

4. Conclusions

Implications, Limitations, and Recommendations for Future Work

- Further to the keyword, title, and abstract analyses, studies that examine the performance of GenAI tools abound in the medical and healthcare disciplines; comparable studies across other disciplines in HE are recommended. Such multidisciplinary and interdisciplinary studies would inform agreed guidelines on the use of GenAI systems in HE and help to situate the debate on GenAI;

- This study revealed the countries with the largest number of publications, with few or no publications from developing countries. We therefore recommend that future research on GenAI be carried out collaboratively, especially with researchers in the Global South;

- Academics need to understand the issues surrounding GenAI and develop strategies that minimise its weaknesses while making the most of its opportunities. Students also need to be aware of GenAI’s limitations, the risks of its unethical use, and the implications for critical and analytical thinking, as well as the impairment of other soft skills. With this in mind, both tutors’ and students’ inputs will need to be incorporated into GenAI tools for pedagogical practice;

- The development of LLM-based chatbots is growing. More recently, Gemini has been released and is said to outperform ChatGPT-4 in most NLP tasks. This is yet to be ascertained in the HE domain. Thus, we recommend an experimental comparison of these GenAI tools for teaching, learning, and assessment in terms of pedagogical practice;

- Successfully incorporating GenAI tools into teaching and learning practice requires users’ input and perspectives across disciplines. Research synthesising the perspectives of students and academic tutors is therefore needed to shape the use of GenAI tools in pedagogical practice;

- Plagiarism detection systems such as Turnitin have integrated AI content detectors. However, the performance of these detectors is not yet known. There is a need to examine the performance of Turnitin (and similar systems) to understand how well they distinguish AI-generated from human-written text across several disciplines in HE;

- The education curriculum needs updating [69]. First, however, the potential impact of GenAI tools on the current curriculum must be properly understood. At this stage, it is not yet known whether including modules like an “Introduction to GenAI” in the curriculum will provide a balance between knowledge, usage, and ethics;

- To conclude, future research should focus on interdisciplinary studies to develop guidelines for GenAI usage in HE. Experimental comparisons of advanced GenAI tools such as Gemini, and evaluations of the AI content detectors in plagiarism systems, should be undertaken. Comparative studies should accurately assess the effectiveness of GenAI tools in educational settings. Updating curricula and assessments to include GenAI topics, while assessing their impact on education, will be crucial for balanced knowledge and ethical usage.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Metric | Score |
|---|---|
| Coherence score (c_v) | 0.3605 |
| Coherence score (UMass) | −2.0841 |
| Perplexity | −5.1338 |
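
To make the UMass coherence figure above easier to interpret, the sketch below computes the UMass score for a single topic from document co-occurrence counts, following the log-ratio form given by Mimno et al. (listed in the References). It is an illustration under stated assumptions: the function name `umass_coherence`, the smoothing argument `eps`, and the toy corpus are hypothetical, and library implementations may aggregate pairs and topics differently, so this is not expected to reproduce the reported value.

```python
import math


def umass_coherence(top_terms, documents, eps=1.0):
    """UMass coherence for one topic.

    top_terms: topic terms ordered from most to least probable.
    documents: iterable of token lists (the corpus).
    eps: smoothing constant added to the co-document frequency.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*terms):
        # Number of documents containing all of the given terms.
        return sum(1 for d in doc_sets if all(t in d for t in terms))

    score = 0.0
    for m in range(1, len(top_terms)):
        for l in range(m):
            denom = doc_freq(top_terms[l])
            if denom == 0:
                continue  # conditioning term never occurs; skip the pair
            joint = doc_freq(top_terms[m], top_terms[l])
            score += math.log((joint + eps) / denom)
    return score


# Toy corpus (hypothetical, for illustration only).
docs = [
    ["student", "tool", "study"],
    ["student", "tool"],
    ["student", "feedback"],
    ["image", "network"],
]

related = umass_coherence(["student", "tool"], docs)
unrelated = umass_coherence(["student", "image"], docs)
print(related, unrelated)
```

Terms that rarely share a document pull the score further below zero, which is why a more negative UMass value is usually read as a less coherent topic.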

References

- Ogunleye, B.; Zakariyyah, K.I.; Ajao, O.; Olayinka, O.; Sharma, H. Higher education assessment practice in the era of generative AI tools. J. Appl. Learn. Teach. JALT 2024, 7, 1–11. [Google Scholar] [CrossRef]

- Daun, M.; Brings, J. How ChatGPT Will Change Software Engineering Education. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education, Turku, Finland, 7–12 July 2023; Volume 1, pp. 110–116. [Google Scholar]

- Brennan, J.; Durazzi, N.; Sene, T. Things We Know and Don’t Know about the Wider Benefits of Higher Education: A Review of the Recent Literature; BIS Research Paper (URN BIS/13/1244); Department for Business, Innovation and Skills: London, UK, 2013. Available online: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/251011/bis-13-1244-things-we-know-and-dont-know-about-the-wider-benefits-of-higher-education.pdf (accessed on 22 March 2024).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774. [Google Scholar]

- Ogunleye, B.; Maswera, T.; Hirsch, L.; Gaudoin, J.; Brunsdon, T. Comparison of topic modelling approaches in the banking context. Appl. Sci. 2023, 13, 797. [Google Scholar] [CrossRef]

- Ogunleye, B.; Dharmaraj, B. The Use of a Large Language Model for Cyberbullying Detection. Analytics 2023, 2, 694–707. [Google Scholar] [CrossRef]

- Wei, J.; Kim, S.; Jung, H.; Kim, Y.H. Leveraging large language models to power chatbots for collecting user self-reported data. Proc. ACM Hum.-Comput. Interact. 2024, 8, 1–35. [Google Scholar] [CrossRef]

- Pochiraju, D.; Chakilam, A.; Betham, P.; Chimulla, P.; Rao, S.G. Extractive summarization and multiple choice question generation using XLNet. In Proceedings of the 7th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 17–19 May 2023; pp. 1001–1005. [Google Scholar]

- Grassini, S. Shaping the future of education: Exploring the potential and consequences of AI and ChatGPT in educational settings. Educ. Sci. 2023, 13, 692. [Google Scholar] [CrossRef]

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887. [Google Scholar] [CrossRef] [PubMed]

- Michel-Villarreal, R.; Vilalta-Perdomo, E.; Salinas-Navarro, D.E.; Thierry-Aguilera, R.; Gerardou, F.S. Challenges and Opportunities of Generative AI for Higher Education as Explained by ChatGPT. Educ. Sci. 2023, 13, 856. [Google Scholar] [CrossRef]

- Ruiz-Rojas, L.I.; Acosta-Vargas, P.; De-Moreta-Llovet, J.; Gonzalez-Rodriguez, M. Empowering Education with Generative Artificial Intelligence Tools: Approach with an Instructional Design Matrix. Sustainability 2023, 15, 11524. [Google Scholar] [CrossRef]

- Xames, M.D.; Shefa, J. ChatGPT for research and publication: Opportunities and challenges. J. Appl. Learn. Teach. 2023, 6, 1. [Google Scholar] [CrossRef]

- Chaudhry, I.S.; Sarwary, S.A.M.; El Refae, G.A.; Chabchoub, H. Time to Revisit Existing Student’s Performance Evaluation Approach in Higher Education Sector in a New Era of ChatGPT—A Case Study. Cogent Educ. 2023, 10, 2210461. [Google Scholar] [CrossRef]

- Cotton, D.R.E.; Cotton, P.A.; Shipway, J.R. Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innov. Educ. Teach. Int. 2023, 61, 228–239. [Google Scholar] [CrossRef]

- Farrokhnia, M.; Banihashem, S.K.; Noroozi, O.; Wals, A. A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 2023, 60, 1–15. [Google Scholar] [CrossRef]

- Halaweh, M. ChatGPT in education: Strategies for responsible implementation. Contemp. Educ. Technol. 2023, 15, ep421. [Google Scholar] [CrossRef]

- Hung, J.; Chen, J. The Benefits, Risks and Regulation of Using ChatGPT in Chinese Academia: A Content Analysis. Soc. Sci. 2023, 12, 380. [Google Scholar] [CrossRef]

- Rasul, T.; Nair, S.; Kalendra, D.; Robin, M.; de Oliveira Santini, F.; Ladeira, W.J.; Heathcote, L. The role of ChatGPT in higher education: Benefits, challenges, and future research directions. J. Appl. Learn. Teach. 2023, 6, 41–56. [Google Scholar]

- Kurtz, G.; Amzalag, M.; Shaked, N.; Zaguri, Y.; Kohen-Vacs, D.; Gal, E.; Barak-Medina, E. Strategies for Integrating Generative AI into Higher Education: Navigating Challenges and Leveraging Opportunities. Educ. Sci. 2024, 14, 503. [Google Scholar] [CrossRef]

- Atlas, S. ChatGPT for Higher Education and Professional Development: A Guide to Conversational AI. 2023. Available online: https://digitalcommons.uri.edu/cgi/viewcontent.cgi?params=/context/cba_facpubs/article/1547/&path_info=ChatGPTforHigherEducation_Atlas.pdf (accessed on 22 March 2024).

- Pesovski, I.; Santos, R.; Henriques, R.; Trajkovik, V. Generative AI for Customizable Learning Experiences. Sustainability 2024, 16, 3034. [Google Scholar] [CrossRef]

- Zhang, C.; Zhang, C.; Li, C.; Qiao, Y.; Zheng, S.; Dam, S.K.; Hong, C.S. One small step for generative AI, one giant leap for AGI: A complete survey on ChatGPT in AIGC era. arXiv 2023, arXiv:2304.06488. [Google Scholar]

- Jacques, L. Teaching CS-101 at the Dawn of ChatGPT. ACM Inroads 2023, 14, 40–46. [Google Scholar] [CrossRef]

- Baidoo-Anu, D.; Owusu Ansah, L. Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 2023, 7, 52–62. [Google Scholar] [CrossRef]

- Cooper, G. Examining science education in ChatGPT: An exploratory study of generative artificial intelligence. J. Sci. Educ. Technol. 2023, 32, 444–452. [Google Scholar] [CrossRef]

- Rajabi, P.; Taghipour, P.; Cukierman, D.; Doleck, T. Exploring ChatGPT’s impact on post-secondary education: A qualitative study. In Proceedings of the Western Canadian Conference on Computing Education (WCCCE’23), Vancouver, BC, Canada, 4–5 May 2023; Simon Fraser University: Burnaby, BC, Canada, 2023. [Google Scholar]

- Lozano, A.; Blanco Fontao, C. Is the Education System Prepared for the Irruption of Artificial Intelligence? A Study on the Perceptions of Students of Primary Education Degree from a Dual Perspective: Current Pupils and Future Teachers. Educ. Sci. 2023, 13, 733. [Google Scholar] [CrossRef]

- Sánchez-Ruiz, L.M.; Moll-López, S.; Nuñez-Pérez, A.; Moraño-Fernández, J.A.; Vega-Fleitas, E. ChatGPT Challenges Blended Learning Methodologies in Engineering Education: A Case Study in Mathematics. Appl. Sci. 2023, 13, 6039. [Google Scholar] [CrossRef]

- Bond, M.; Khosravi, H.; De Laat, M.; Bergdahl, N.; Negrea, V.; Oxley, E.; Pham, P.; Chong, S.W.; Siemens, G. A Meta Systematic Review of Artificial Intelligence in Higher Education: A call for increased ethics, collaboration, and rigour. Int. J. Educ. Technol. High. Educ. 2024, 21, 4. [Google Scholar] [CrossRef]

- Sullivan, M.; Kelly, A.; McLaughlan, P. ChatGPT in higher education: Considerations for academic integrity and student learning. J. Appl. Learn. Teach. 2023, 6, 1–11. [Google Scholar]

- Bahroun, Z.; Anane, C.; Ahmed, V.; Zacca, A. Transforming Education: A Comprehensive Review of Generative Artificial Intelligence in Educational Settings through Bibliometric and Content Analysis. Sustainability 2023, 15, 12983. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269. [Google Scholar] [CrossRef] [PubMed]

- Vagelas, I.; Leontopoulos, S. A Bibliometric Analysis and a Citation Mapping Process for the Role of Soil Recycled Organic Matter and Microbe Interaction due to Climate Change Using Scopus Database. AgriEngineering 2023, 5, 581–610. [Google Scholar] [CrossRef]

- Romanelli, J.P.; Gonçalves, M.C.P.; de Abreu Pestana, L.F.; Soares, J.A.H.; Boschi, R.S.; Andrade, D.F. Four challenges when conducting bibliometric reviews and how to deal with them. Environ. Sci. Pollut. Res. 2021, 28, 60448–60458. [Google Scholar] [CrossRef]

- Alhashmi, S.M.; Hashem, I.A.; Al-Qudah, I. Artificial Intelligence Applications in Healthcare: A Bibliometric and Topic Model-Based Analysis. Intell. Syst. Appl. 2023, 21, 200299. [Google Scholar] [CrossRef]

- Natukunda, A.; Muchene, L.K. Unsupervised title and abstract screening for systematic review: A retrospective case-study using topic modelling methodology. Syst. Rev. 2023, 12, 1–16. [Google Scholar] [CrossRef]

- Mendiratta, A.; Singh, S.; Yadav, S.S.; Mahajan, A. Bibliometric and Topic Modeling Analysis of Corporate Social Irresponsibility. Glob. J. Flex. Syst. Manag. 2022, 2022, 1–21. [Google Scholar] [CrossRef]

- Sheng, B.; Wang, Z.; Qiao, Y.; Xie, S.Q.; Tao, J.; Duan, C. Detecting latent topics and trends of digital twins in healthcare: A structural topic model-based systematic review. Digit. Health 2023, 9, 20552076231203672. [Google Scholar] [CrossRef]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Mimno, D.; Wallach, H.; Talley, E.; Leenders, M.; McCallum, A. Optimizing semantic coherence in topic models. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, Edinburgh, UK, 27–29 June 2011; pp. 262–272. [Google Scholar]

- Röder, M.; Both, A.; Hinneburg, A. Exploring the space of topic coherence measures. In Proceedings of the Eighth ACM International Conference on Web Search and Data Mining, Shanghai, China, 2–6 February 2015; pp. 399–408. [Google Scholar]

- Lim, J.P.; Lauw, H.W. Aligning Human and Computational Coherence Evaluations. Comput. Linguist. 2024, 1–58. [Google Scholar] [CrossRef]

- Hu, K. ChatGPT Sets Record for Fastest-Growing User Base—Analyst Note. 2023. Available online: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/ (accessed on 11 March 2024).

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Lee, P.; Bubeck, S.; Petro, J. Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine. N. Engl. J. Med. 2023, 388, 1233–1239. [Google Scholar] [CrossRef]

- Rudolph, J.; Tan, S.; Tan, S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 2023, 6, 342–363. [Google Scholar]

- Tlili, A.; Shehata, B.; Adarkwah, M.A.; Bozkurt, A.; Hickey, D.T.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15. [Google Scholar] [CrossRef]

- Pavlik, J.V. Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education. J. Mass Commun. Educ. 2023, 78, 84–93. [Google Scholar] [CrossRef]

- Salvagno, M.; Taccone, F.S.; Gerli, A.G. Can artificial intelligence help for scientific writing? Crit. Care 2023, 27, 75. [Google Scholar] [CrossRef]

- Rudolph, J.; Tan, S.; Tan, S. War of the chatbots: Bard, Bing Chat, ChatGPT, Ernie and beyond. The new AI gold rush and its impact on higher education. J. Appl. Learn. Teach. 2023, 6, 1. [Google Scholar]

- Lim, W.M.; Gunasekara, A.; Pallant, J.L.; Pallant, J.I.; Pechenkina, E. Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 2023, 21, 100790. [Google Scholar] [CrossRef]

- Crawford, J.; Cowling, M.; Allen, K.A. Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). J. Univ. Teach. Learn. Pract. 2023, 20, 02. [Google Scholar] [CrossRef]

- Baber, H.; Nair, K.; Gupta, R.; Gurjar, K. The beginning of ChatGPT-A systematic and bibliometric review of the literature. Inf. Learn. Sci. 2023. [Google Scholar] [CrossRef]

- Barrington, N.M.; Gupta, N.; Musmar, B.; Doyle, D.; Panico, N.; Godbole, N.; Reardon, T.; D’Amico, R.S. A Bibliometric Analysis of the Rise of ChatGPT in Medical Research. Med. Sci. 2023, 11, 61. [Google Scholar] [CrossRef]

- Farhat, F.; Silva, E.S.; Hassani, H.; Madsen, D.; Sohail, S.S.; Himeur, Y.; Alam, M.A.; Zafar, A. The scholarly footprint of ChatGPT: A bibliometric analysis of the early outbreak phase. Front. Artif. Intell. 2023, 6, 1270749. [Google Scholar] [CrossRef]

- Liu, J.; Wang, C.; Liu, Z.; Gao, M.; Xu, Y.; Chen, J.; Cheng, Y. A bibliometric analysis of generative AI in education: Current status and development. Asia Pac. J. Educ. 2024, 44, 156–175. [Google Scholar] [CrossRef]

- Oliński, M.; Krukowski, K.; Sieciński, K. Bibliometric Overview of ChatGPT: New Perspectives in Social Sciences. Publications 2024, 12, 9. [Google Scholar] [CrossRef]

- Zheltukhina, M.R.; Sergeeva, O.V.; Masalimova, A.R.; Budkevich, R.L.; Kosarenko, N.N.; Nesterov, G.V. A bibliometric analysis of publications on ChatGPT in education: Research patterns and topics. Online J. Commun. Media Technol. 2024, 14, e202405. [Google Scholar] [CrossRef]

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 6, 1169595. [Google Scholar] [CrossRef]

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How Does ChatGPT Perform on the United States Medical Licensing Examination (USMLE)? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med. Educ. 2023, 9, e45312. [Google Scholar] [CrossRef]

- Haddad, F.; Saade, J.S. Performance of ChatGPT on Ophthalmology-Related Questions Across Various Examination Levels: Observational Study. JMIR Med. Educ. 2024, 10, e50842. [Google Scholar] [CrossRef]

- Kaplan-Rakowski, R.; Grotewold, K.; Hartwick, P.; Papin, K. Generative AI and teachers’ perspectives on its implementation in education. J. Interact. Learn. Res. 2023, 34, 313–338. [Google Scholar]

- John, J.M.; Shobayo, O.; Ogunleye, B. An Exploration of Clustering Algorithms for Customer Segmentation in the UK Retail Market. Analytics 2023, 2, 809–823. [Google Scholar] [CrossRef]

- Van Eck, N.J.; Waltman, L. Citation-based clustering of publications using CitNetExplorer and VOSviewer. Scientometrics 2017, 111, 1053–1070. [Google Scholar] [CrossRef]

- Van Eck, N.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538. [Google Scholar] [CrossRef]

- D’ascenzo, F.; Rocchi, A.; Iandolo, F.; Vito, P. Evolutionary impacts of artificial intelligence in Healthcare Managerial Literature. A ten-year Bibliometric and Topic Modeling Review. Sustain. Futures 2024, 7, 100198. [Google Scholar] [CrossRef]

- Thurzo, A.; Strunga, M.; Urban, R.; Surovková, J.; Afrashtehfar, K.I. Impact of artificial intelligence on dental education: A review and guide for curriculum update. Educ. Sci. 2023, 13, 150. [Google Scholar] [CrossRef]

Search string: (generative AND artificial AND intelligence OR generative AND ai OR genai OR gai) AND (assessment OR pedagogic OR student OR teaching AND learning OR teaching OR teacher) OR (llms OR language AND model) OR (academia OR education OR he OR higher AND education)

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Published between 2017 and 2023 | Published before 2017 |
| Publication should be peer reviewed | Not peer reviewed |
| Published in English | Papers not published in English due to authors’ common language |
| Journal articles or conference papers | Editorials, meeting abstracts, workshop papers, posters, book reviews, and dissertations |
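
The eligibility criteria in the table above can be read as a simple screening predicate. The sketch below is one possible encoding, not the authors' screening procedure; the `Record` fields, the `ELIGIBLE_TYPES` set, and the sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Minimal bibliographic record (field names are illustrative)."""
    year: int
    peer_reviewed: bool
    language: str
    doc_type: str


# Document types admitted by the inclusion criteria.
ELIGIBLE_TYPES = {"journal article", "conference paper"}


def is_eligible(rec: Record) -> bool:
    """Apply the four inclusion criteria from the table."""
    return (
        2017 <= rec.year <= 2023
        and rec.peer_reviewed
        and rec.language.lower() == "english"
        and rec.doc_type.lower() in ELIGIBLE_TYPES
    )


records = [
    Record(2023, True, "English", "Journal article"),
    Record(2016, True, "English", "Journal article"),    # published before 2017
    Record(2022, True, "English", "Editorial"),          # excluded document type
    Record(2021, False, "English", "Conference paper"),  # not peer reviewed
]

screened = [r for r in records if is_eligible(r)]
print(len(screened), "of", len(records), "records retained")
```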

| Year | No. of Publications | Total Citations |
|---|---|---|
| 2018 | 3 | 49 |
| 2019 | 4 | 114 |
| 2020 | 14 | 196 |
| 2021 | 23 | 290 |
| 2022 | 38 | 196 |
| 2023 | 273 | 2078 |
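
A few derived figures make the yearly table above easier to read: the share of publications that appeared in 2023 and the average citations per publication in each year. The snippet below is a small sketch of that arithmetic using only the values in the table; the variable names are illustrative.

```python
# (publications, total citations) per year, copied from the table above.
yearly = {
    2018: (3, 49),
    2019: (4, 114),
    2020: (14, 196),
    2021: (23, 290),
    2022: (38, 196),
    2023: (273, 2078),
}

total_pubs = sum(pubs for pubs, _ in yearly.values())
total_cites = sum(cites for _, cites in yearly.values())

# Percentage of all reviewed publications that appeared in 2023.
share_2023 = round(100 * yearly[2023][0] / total_pubs, 1)

# Average citations per publication, by year of publication.
cites_per_pub = {
    year: round(cites / pubs, 1) for year, (pubs, cites) in yearly.items()
}

print(total_pubs, total_cites, share_2023)
for year, avg in cites_per_pub.items():
    print(year, avg)
```

Older papers have had more time to accumulate citations, so the per-publication averages are not directly comparable across years.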

| Authors | Year | Title | Citations |
|---|---|---|---|
| Dwivedi et al. [46] | 2023 | “So what if ChatGPT wrote it?” Multidisciplinary perspectives on the opportunities, challenges, and implications of generative conversational AI for research, practice, and policy | 291 |
| Lee et al. [47] | 2023 | Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine | 191 |
| Rudolph et al. [48] | 2023 | ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? | 152 |
| Tlili et al. [49] | 2023 | What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education | 141 |
| Pavlik [50] | 2023 | Collaborating With ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education | 133 |
| Salvagno et al. [51] | 2023 | Can artificial intelligence help with scientific writing? | 129 |
| Rudolph et al. [52] | 2023 | War of the chatbots: Bard, Bing Chat, ChatGPT, Ernie, and beyond. The new AI gold rush and its impact on higher education | 82 |
| Lim et al. [53] | 2023 | Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators | 67 |
| Cooper [27] | 2023 | Examining science education in ChatGPT: An exploratory study of generative artificial intelligence | 61 |
| Crawford et al. [54] | 2023 | Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI) | 53 |

| Topic | Terms | Topic Label |
|---|---|---|
| 1 | Study, health, student, design, technology, control, medium, platform, tool, creation, issue, language, attention, practice, building. | Implications of GenAI |
| 2 | System, article, data, technology, language, study, interaction, risk, experience, scenario, application, management, approach, implication, challenge. | GenAI for education and research |
| 3 | Language, design, system, task, approach, study, result, method, process, assessment, development, domain, engineering, generation, framework. | Support system |
| 4 | Problem, tool, language, bias, student, practice, transformer, study, ability, llm, scenario, society, material, skill, level. | Bias and inclusion |
| 5 | Student, tool, study, educator, researcher, technology, language, data, challenge, concern, work, experience, knowledge, development, feedback. | Intelligent tutoring system |
| 6 | Data, machine, learning, analysis, result, development, method, application, work, area, technology, network, image, datasets, field. | Machine learning/AI application |
| 7 | The question, response, performance, answer, accuracy, gpt4, result, study, information, examination, knowledge, conclusion, case, chatbot, background. | Performance evaluation on exam questions |
| 8 | Technology, language, article, process, application, business, world, work, knowledge, course, information, capability, text, innovation, create. | GenAI for writing |
| 9 | Student, technology, practice, opportunity, question, university, healthcare, tool, article, challenge, concern, chatbots, response, impact, study. | Ethical and regulatory considerations |
| 10 | Image, network, method, application, study, generation, performance, data, result, accuracy, detection, approach, text, field, generate. | Deep learning/AI model |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ogunleye, B.; Zakariyyah, K.I.; Ajao, O.; Olayinka, O.; Sharma, H. A Systematic Review of Generative AI for Teaching and Learning Practice. Educ. Sci. 2024, 14, 636. https://doi.org/10.3390/educsci14060636