Navigating the Evolving Landscape of Teaching and Learning: University Faculty and Staff Perceptions of the Artificial Intelligence-Altered Terrain

Abstract

1. Introduction

2. Artificial Intelligence in Higher Education

- RQ1: What aspects of AI are discussed among university faculty and staff members?

- RQ2: What challenges and opportunities related to AI do university faculty and staff recognize?

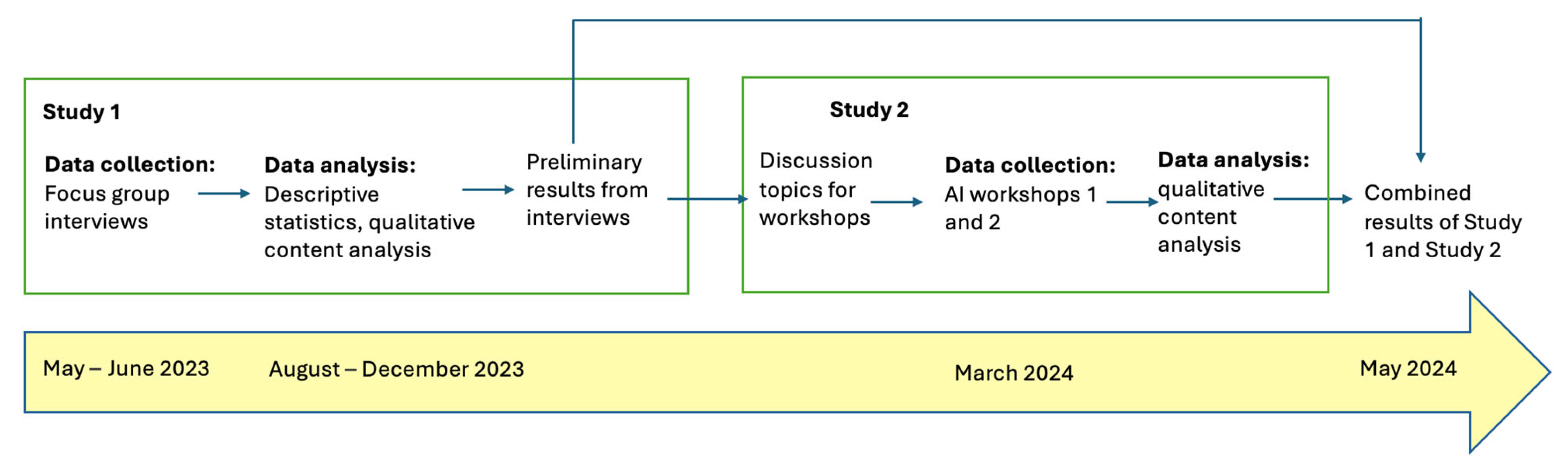

3. Materials and Methods

3.1. Context

3.2. Data Collection and Procedure

3.3. Analysis

4. Results

4.1. Results 1, Study 1: Main Categories

4.2. Results 2, Study 2: Enriched Categories

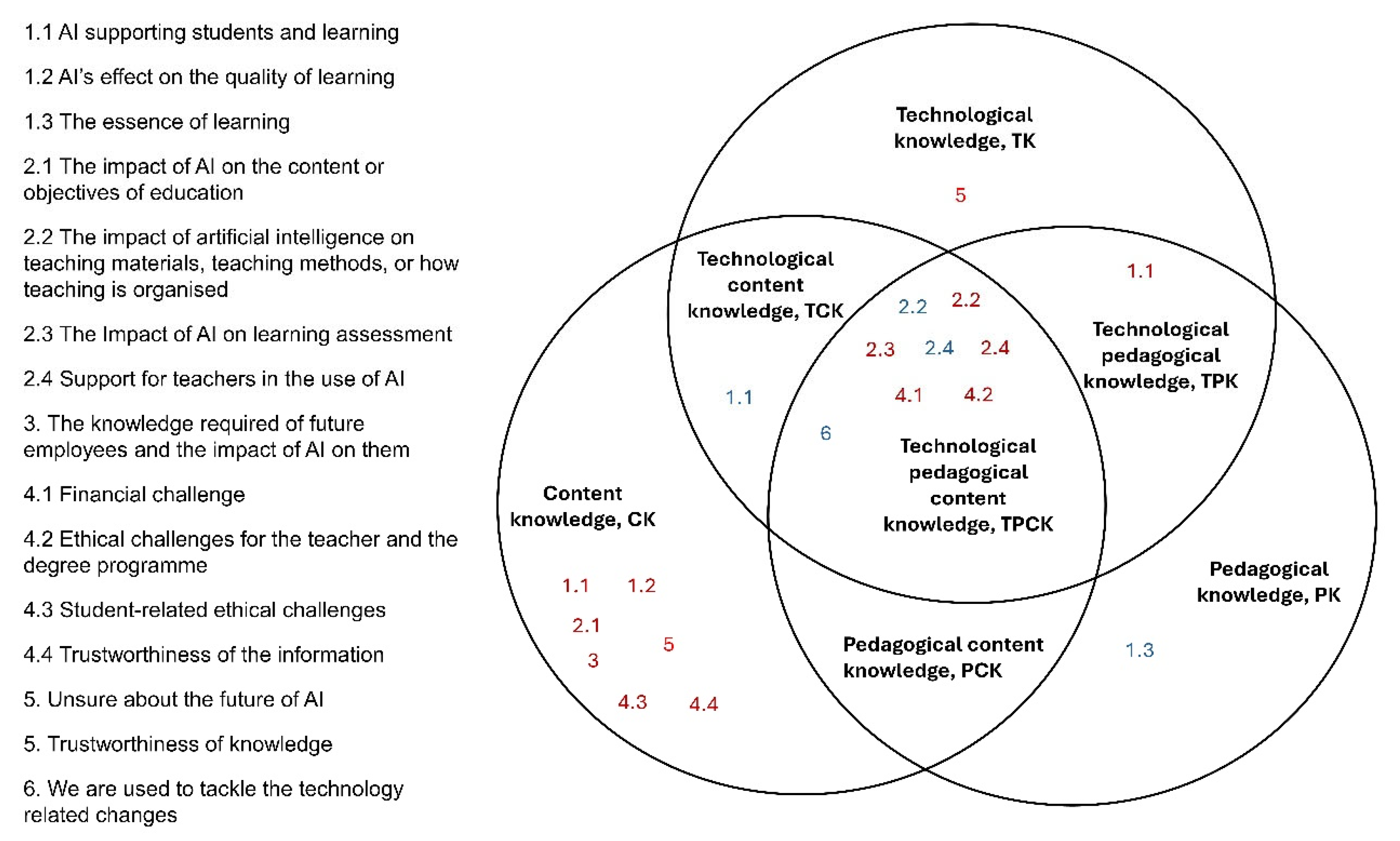

4.3. The Results through the TPCK Framework

5. Discussion

5.1. Findings and Implications

5.2. Strengths and Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Interview Guide for Focus Group Discussions

- Could you please introduce yourself and say what is the best thing about teaching?

- What is essential in teaching?

- What is essential in assessment of learning?

- What future opportunities do you see for university teaching?

- What future opportunities do you see for the assessment of learning at the university?

- What needs to happen for these opportunities to be realized?

- What future challenges do you see for university teaching?

- How should teaching change to meet future challenges?

- What future challenges do you see for the assessment of learning?

- How should assessment change to meet future challenges?

- What kind of support is needed to face these challenges?

- What challenges does the future pose for the role of the teacher?

- What skills should the future (university) teacher have?

- What challenges does the future pose for the role of the (university) student?

- What skills should the student have in the future?

Appendix B. Instructions for the Learning Café Discussions

- The impact of artificial intelligence on studying: What opportunities/challenges does artificial intelligence offer to university students?

- The impact of artificial intelligence on teaching content and teaching methods: What opportunities/challenges does AI offer to university teachers?

- Artificial intelligence and assessment: What opportunities/challenges does artificial intelligence offer to university teachers/students?

- Ethical and economic issues of artificial intelligence in teaching and studying

- How will the use of AI in teaching/studying develop in the future? (Think about a 5-year time span.)

| Original Quotation | Subcategory | Main Category |
|---|---|---|
| But my own kind of philosophy maybe is… that in the final stage of your studies you just have to accept the fact that the AI will be part of doing your master’s thesis or the like… (7:26) | 1.1. AI supporting students and learning | 1. The impact of AI on students’ learning processes |
| But then, I am worried about those big language models and AI… they write an essay in two seconds. How this influences student learning—is the upshot real learning or is it just a really nice text that has been tidied up so that it corresponds to the task that was given. (6:9) | 1.2. AI’s effect on the quality of learning | 1. The impact of AI on students’ learning processes |
| That they are all very simple right now, it is terrible copying. (5:15) | 1.3. The essence of learning | 1. The impact of AI on students’ learning processes |
| It is somehow troublesome, as it also elaborates anyway… those aims of the teaching…as we in a way mechanize things, then of course no need for them <aims> is left anymore…what ChatGTP is capable of doing, then it does not make any sense for me to examine, ask a student---(6:13) | 2.1. The impact of AI on the content or objectives of education | 2. The impact of AI on teaching |
| …add some empirical research to teaching, in that case you must really do…what I have already done, too, is that there are not only tasks that are based on literature but also an additional interview in which you have to apply… For instance, an interview with a relative (7:31) | 2.2. The impact of artificial intelligence on teaching materials, teaching methods, or how teaching is organized | 2. The impact of AI on teaching |
| AI and this ChatGPT and these…they are such …that we should now learn how to use them… that how we create such tasks for assessing learning in which one could use that ChatGPT and still learn and show what you have learnt, although you had utilized some of artificial intelligence… (3:16) | 2.3. The impact of AI on learning assessment | 2. The impact of AI on teaching |
| …if we for example want that artificial intelligence is utilized in one way or another in learning or in the planning of teaching, so how is our gang going to support it (1:36) | 2.4. Support for teachers in the use of AI | 2. The impact of AI on teaching |
| …also the needs in working life will change for the knowledge workers in the future… what kind of roles are left for human investigators in the future, if the part that is replaced grows larger and larger… in my view, perhaps, we’ll have more and more help from artificial intelligence. (6:14) | | 3. The knowledge required of future employees and the impact of AI on them |
| Who pays for the new tools and tech needed? (WS1) | 4.1. Financial challenge | 4. AI and ethical and economic issues |
| Where goes the line between what is considered self-produced material (teaching material and students’ text)… (WS2) | 4.2. Ethical challenges for the teacher and the degree program | 4. AI and ethical and economic issues |
| …they are good students, they are able to use it, because they understand what’s the point, so in that case it only makes their job more efficient and it’s not a problem. And the poor ones are not able <to use AI> and they get caught… (4:3) | 4.3. Student-related ethical challenges | 4. AI and ethical and economic issues |
| It becomes unclear what is true and what is not (images). (WS2) | 4.4. Trustworthiness of the information | 4. AI and ethical and economic issues |
| And it is certainly important… to see this kind of new things like ChatGPT as an opportunity rather than a threat. At least my first thought was that oh dear, this is going nowhere. But perhaps we should orient ourselves to possible ways of utilizing it, because you are forced to keep abreast of new developments. (3:18) | | 5. The development of AI or its use in the future |
| I do think that the biggest challenge of all is related to that artificial intelligence… My wild guess is that the next few years will see wild development and then it will perhaps become a bit steadier. It is my guess. And that you somehow manage to keep up to date—“OK, now the artificial intelligence is able to do this and that and that kind of thing could be done this way”—I think that it will be highly challenging for many people. (7:3) | | 6. The nature of the change brought about by artificial intelligence |

| Main Category | Percent |
|---|---|
| 1. The impact of AI on students’ learning processes | 16% |
| 2. The impact of AI on teaching | 37% |
| 3. The knowledge required of future employees and the impact of AI on them | 9% |
| 4. AI and ethical and economic issues | 9% |
| 5. The development of AI or its use in the future | 18% |
| 6. The nature of the change brought about by artificial intelligence | 11% |

| Main Category | Experienced Teachers, 3 FG, 9 Participants | Directors of Degree Program, 1 FG, 3 Participants | Young Teachers, 2 FG, 5 Participants | Educational Technology Experts, 1 FG, 4 Participants | Totals |
|---|---|---|---|---|---|
| 1. The impact of AI on students’ learning processes | 11 (23%) | - | 2 (8%) | 5 (14%) | 18 |
| 2. The impact of AI on teaching | 16 (34%) | 7 (70%) | 9 (38%) | 11 (31%) | 43 |
| 3. The knowledge required of future employees and the impact of AI on them | 3 (6%) | - | 3 (13%) | 4 (11%) | 10 |
| 4. AI and ethical and economic issues | 5 (11%) | - | 3 (13%) | 3 (9%) | 11 |
| 5. The development of AI or its use in the future | 9 (19%) | 1 (10%) | 4 (17%) | 7 (20%) | 21 |
| 6. The nature of the change brought about by artificial intelligence | 3 (6%) | 2 (20%) | 3 (13%) | 5 (14%) | 13 |
| Totals | 47 (100%) | 10 (100%) | 24 (100%) | 35 (100%) | 116 |
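
The percentage shares reported for the six main categories appear to follow from the row totals of the focus-group cross-tabulation, each divided by the 116 coded segments. The following is a minimal sketch of that arithmetic, not the authors' procedure: the names `totals` and `percentage_shares` are illustrative, and rounding to the nearest whole percent is an assumption about how the published figures were produced.

```python
# Row totals per main category, copied from the "Totals" column of the
# focus-group cross-tabulation (116 coded segments in all).
totals = {
    "1. The impact of AI on students' learning processes": 18,
    "2. The impact of AI on teaching": 43,
    "3. The knowledge required of future employees and the impact of AI on them": 10,
    "4. AI and ethical and economic issues": 11,
    "5. The development of AI or its use in the future": 21,
    "6. The nature of the change brought about by artificial intelligence": 13,
}


def percentage_shares(counts):
    """Return each count as a whole-number percentage of the grand total.

    Assumption: shares are rounded to the nearest whole percent.
    """
    grand_total = sum(counts.values())
    return {name: round(100 * n / grand_total) for name, n in counts.items()}


shares = percentage_shares(totals)
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```

Under these assumptions the computed shares match the published percentages, which suggests that the two tables describe the same set of coded segments.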

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kallunki, V.; Kinnunen, P.; Pyörälä, E.; Haarala-Muhonen, A.; Katajavuori, N.; Myyry, L. Navigating the Evolving Landscape of Teaching and Learning: University Faculty and Staff Perceptions of the Artificial Intelligence-Altered Terrain. Educ. Sci. 2024, 14, 727. https://doi.org/10.3390/educsci14070727
