Perceptions and Use of AI Chatbots among Students in Higher Education: A Scoping Review of Empirical Studies

Abstract

1. Introduction

- RQ1: Which disciplines, countries, and research methods are prevalent in studies of students’ perceptions and uses of AI chatbots in higher education?

- RQ2: What are the dominant themes and patterns in studies of students’ perceptions of AI chatbots in higher education?

- RQ3: What do studies reveal about AI chatbots’ role in students’ learning processes and task resolution?

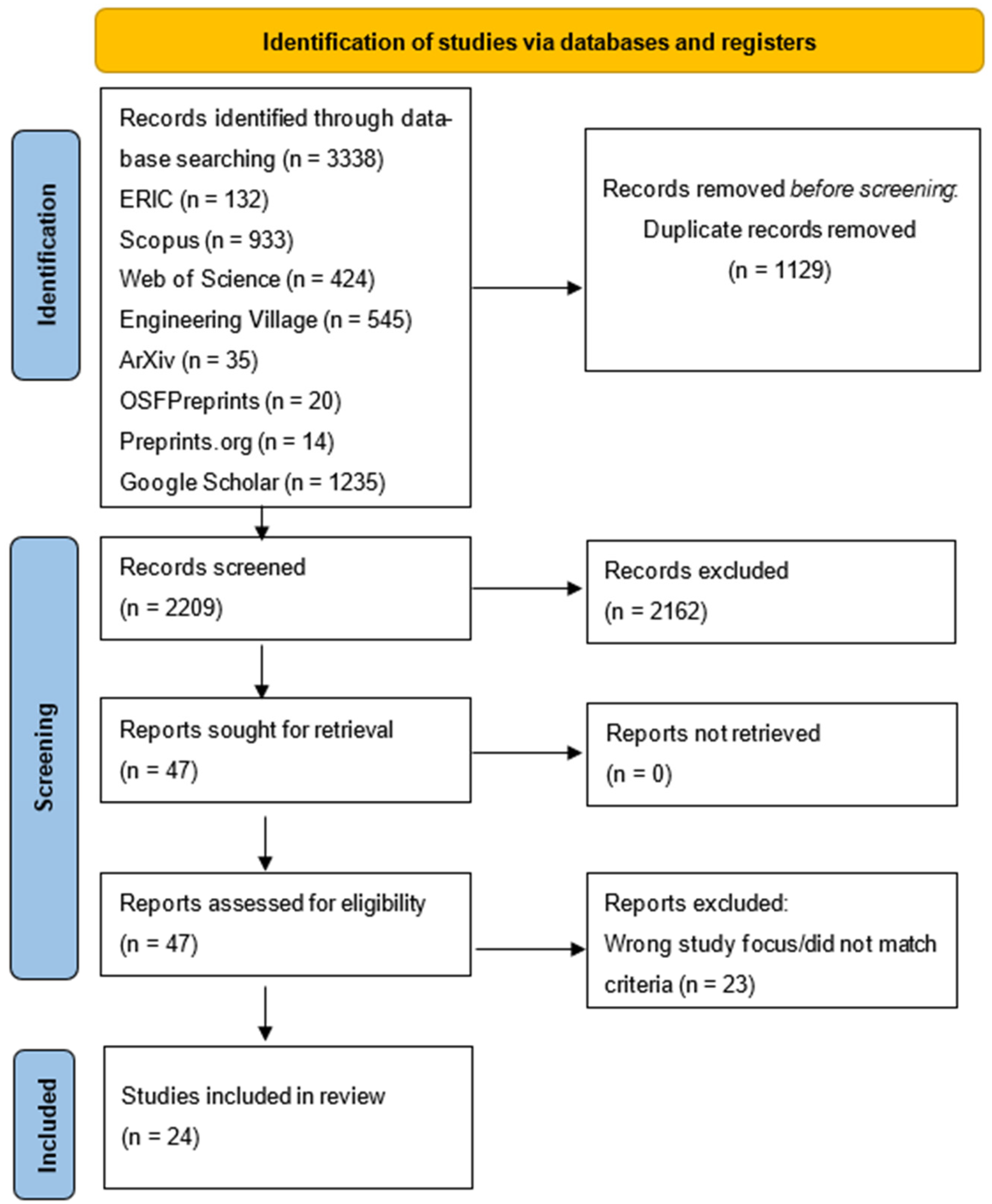

2. Materials and Methods

2.1. Step 1: Identifying the Review Questions

2.2. Step 2: Identifying the Relevant Studies

2.3. Step 3: Selecting the Studies

2.4. Step 4: Charting the Data

2.5. Step 5: Collating, Summarizing, and Reporting the Results

3. Findings and Discussion

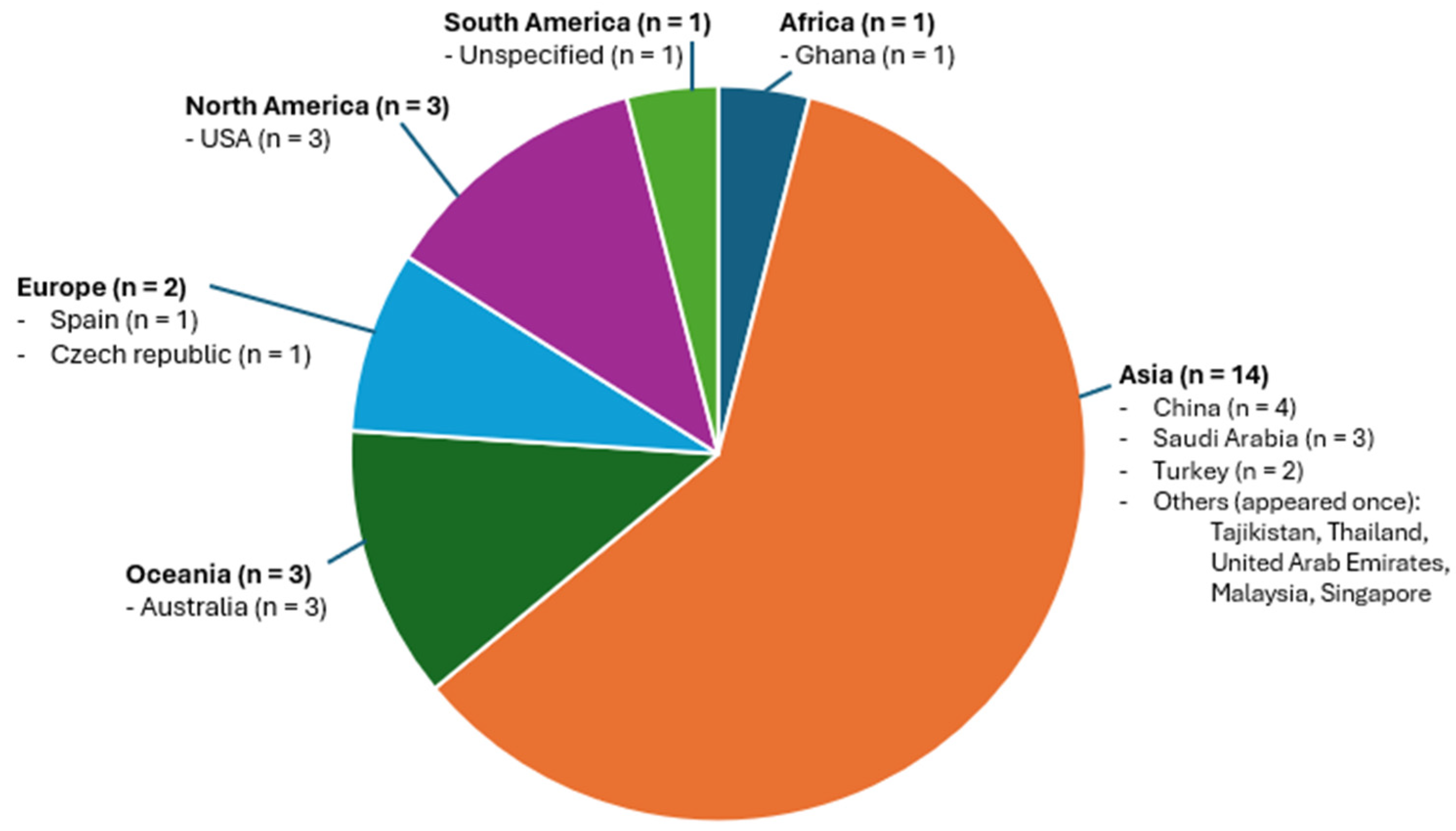

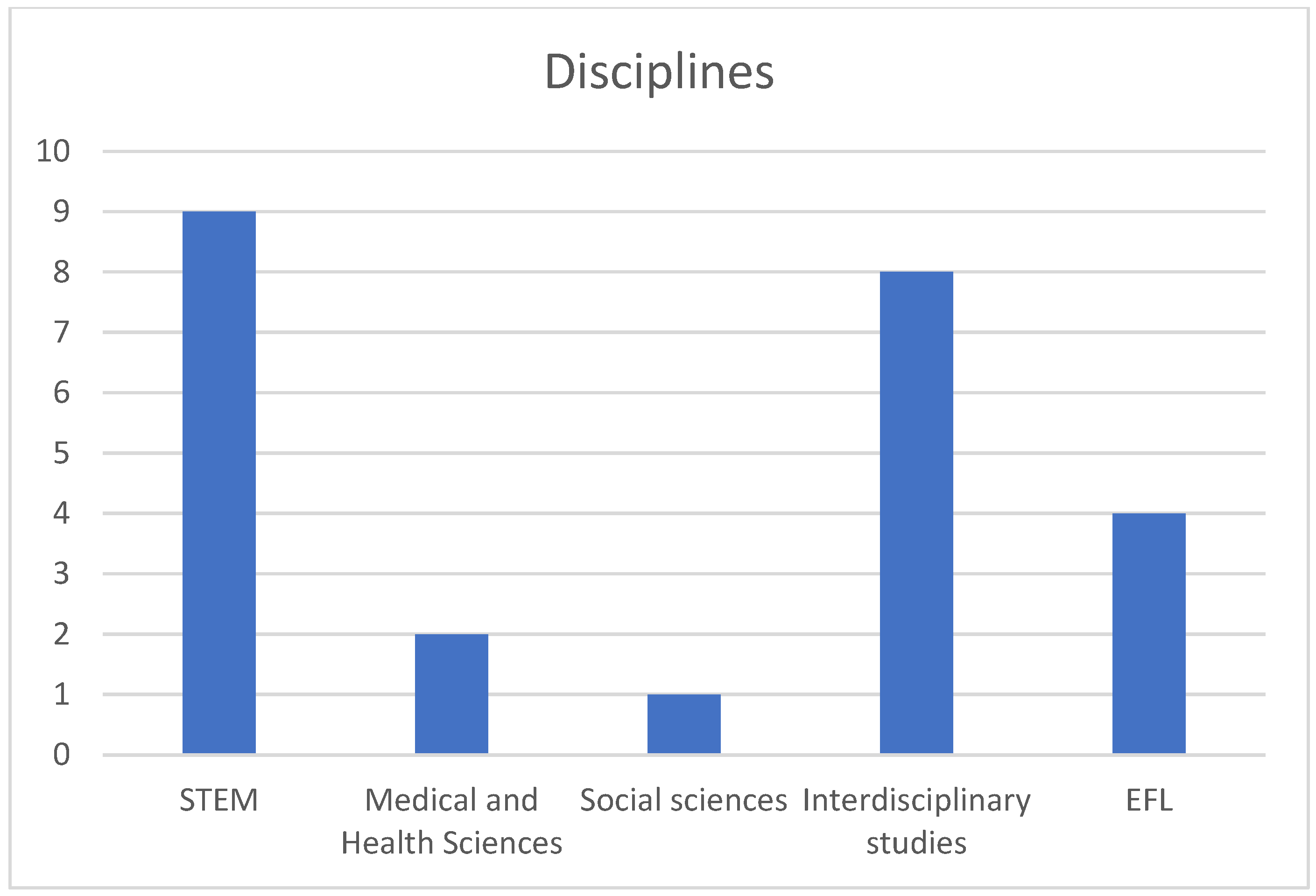

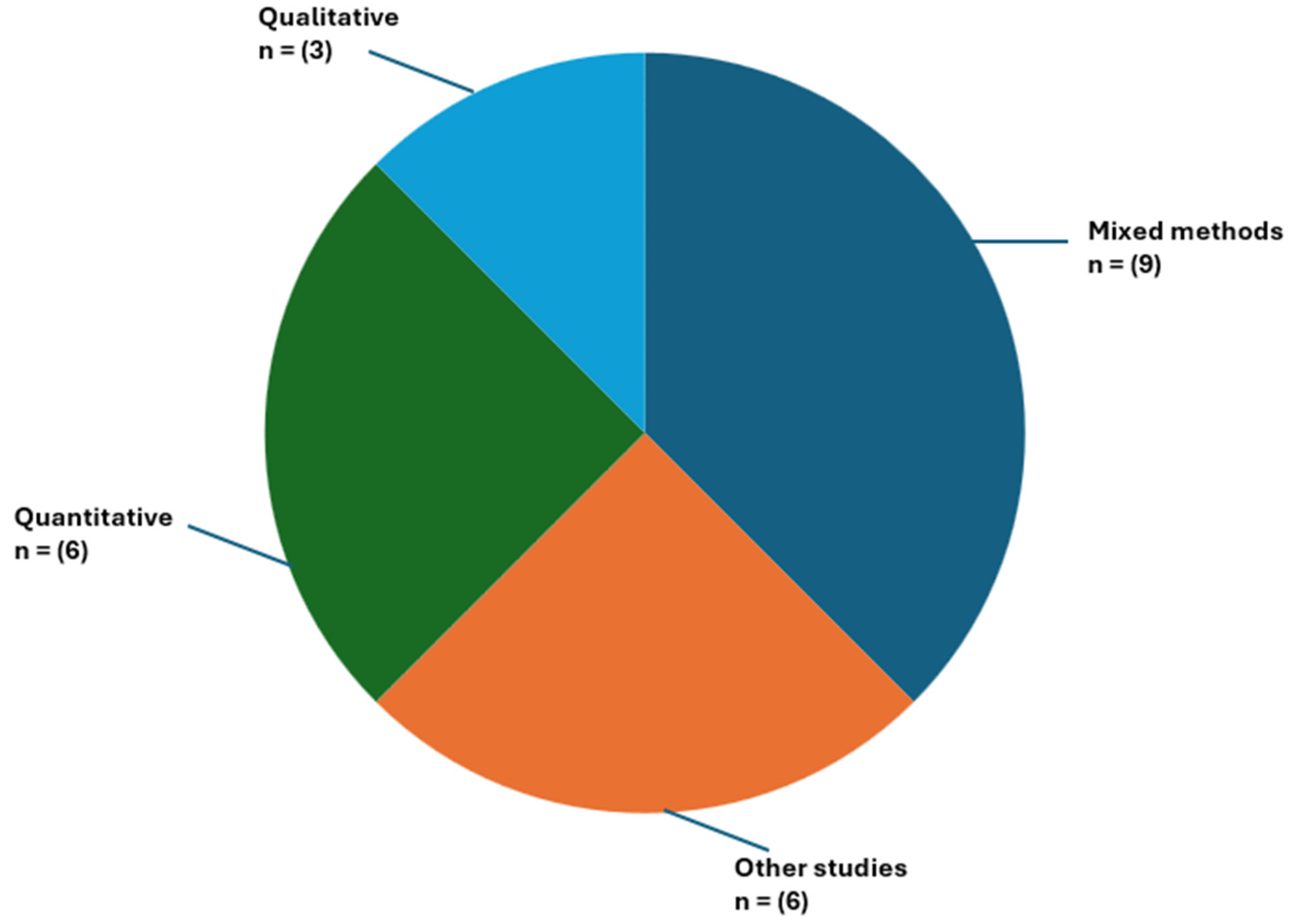

3.1. RQ1: Which Countries, Disciplines, and Research Methods Are Prevalent in Studies of Students’ Perceptions and Uses of AI Chatbots in Higher Education?

Countries, Disciplines, and Research Methods

3.2. RQ2: What Are Dominant Themes and Patterns in Students’ Perceptions of AI Chatbots in Higher Education?

3.2.1. Perceived Usefulness and Adoption of AI Chatbots

| Aspect | Function/Code | Representative Quotes | Other Representative Support |
|---|---|---|---|
| Perceived usefulness and adoption | Perceived usefulness | “Students reported that ChatGPT-3 helped them generate new ideas, save time in research, and enhance their writing skills. They appreciated the interactive nature of ChatGPT-3, which facilitated brainstorming and discussion” [46] (p. 360). “Students found ChatGPT’s responses accurate, insightful, logical, reasonable, unbiased, structured, comprehensive, and customized. One STEM student praised ChatGPT’s accuracy in the AI module, while a non-STEM student appreciated its ability to offer ‘a lot of insightful responses from a different perspective’” [62] (p. 19). | [42,43,44,48,52,53,54,55,56] |
| | Efficiency | “[ChatGPT] has been really fast in generating text. When you give it a topic, the application will return with a short essay of about 300 words in no time” [57] (p. 13). | [42,57,62] |
| | Feedback, interactivity, and availability | “When I have doubt and couldn’t find other people to help me out, ChatGPT seems like a good option.” [42] (p. 9) | [46,48,54,62] |
| | Interest | “The students expressed overall positive perceptions including interest … Almost 96% of the students find ChatGPT interesting or very interesting” [54] (p. 38810). | [42,46,53] |

3.2.2. Students’ Concerns about AI Chatbots in Higher Education

| Aspect | Function/Code | Representative Quotes | Other Representative Quotes |
|---|---|---|---|
| Perceived challenges and concerns | Academic integrity and plagiarism | “While students believe that AI has value in education (µ = 3.78), they also agree that AI could be dangerous for students (µ = 3.40) and using AI to complete coursework violates academic integrity” [51] (p. 12). | [46,49,62] |
| | Accuracy and reliability of information | “We cannot predict or accurately verify the accuracy or validity of AI generated information. Some people may be misled by false information” [42] (p. 11). “Students have concerns about the limitations of ChatGPT, with some citing its tendency to provide generic, vague, and superficial responses that lack context, specificity, and depth. One student noted that ‘ChatGPT doesn’t do well in terms of giving exact case studies, but only gives a generic answer to questions’” [62] (pp. 22–23). | [48,52,54] |
| | Ethical issues | “AI technologies are too strong so that they can obtain our private information easily.” [42] (p. 11) | [42,54,57] |
| | Impairing learning, critical thinking, and creativity | “The participants raised concerns about how ChatGPT negatively affected their critical thinking and effort to generate ideas. In the AI module, a STEM student noted, ‘ChatGPT gives concise answers which take away the need for critical thinking.’” [62] (p. 24) | [42,59] |
| | Changing teaching and assessment | “Over 50% of students in our study would not feel confident if the instructor used it to create a syllabus, and the number of students who did not feel confident having an AI grade was even higher.” [51] (p. 21) | [56,62] |

3.3. RQ3: What Does Current Research Reveal about AI Chatbots’ Role in Students’ Learning Processes and Task Resolution?

3.3.1. Students’ Use of AI Chatbots in Learning Processes

| Aspect | Function/Code | Representative Quotes | Other Representative Quotes |
|---|---|---|---|
| Learning processes | Helpful for learning processes | “… The majority of students think that ChatGPT can help them learn more effectively” [49] (p. 134). “ChatGPT uncovered information that I usually would not think of; it helps me to see other perspectives/applications. … ChatGPT provides a very broad answer that gives the users a good idea of the different information surrounding the questions they asked.” [62] (p. 20) | [42,43,52,54,59,60,61] |
| | Motivation and self-efficacy | “ChatGPT increased students’ enthusiasm and interest in learning. Independence and intrinsic motivation had the highest mean scores, averaging 4.03 and 4, respectively. This demonstrates that ChatGPT gave students a sense of empowerment and increased engagement” [50] (p. 16). “Participants with higher self-efficacy for resolving the task with ChatGPT assistance thought task resolution was both easier and more interesting and that ChatGPT was more useful. … However, it is important to note that there was no association between higher self-efficacy and actual task performance. The higher self-efficacy was on the other hand associated with overestimation of one’s performance” [56] (p. 22). | [40,44,55,60] |

3.3.2. Task Resolution, Critical Thinking Skills, and Problem Solving

| Aspect | Function/Code | Representative Quotes | Other Representative Quotes |
|---|---|---|---|
| Task resolution, creativity, and critical thinking | Improvement of critical thinking and problem-solving skills | “… the experimental group’s problem-solving posttest scores (M = 25.00, SD = 2.99) were significantly higher than those of the control group” [60] (p. 5). “… results indicate that the integration of ChatGPT-3 and AI literacy training significantly improved the students’ critical thinking and journalism writing skills” [46] (p. 359). | [53,56,59] |
| | Improvement of task resolution | “ChatGPT increased students’ enthusiasm and interest in learning. Independence and intrinsic motivation had the highest mean scores, averaging 4.03 and 4, respectively. This demonstrates that ChatGPT gave students a sense of empowerment and increased engagement” [50] (p. 16). “… the solutions of participants using ChatGPT were of higher quality (i.e., they more closely reflected the general objectives of the task). Moreover, these solutions were better elaborated and more original than the solutions of peers working on their own” [56] (p. 21). | [44,52,53,55] |

3.4. Knowledge Gaps and Implications

3.5. Practical Implications for Educators

3.6. Limitations

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| # | Query | Results |
|---|---|---|
| S1 | TI learn* OR AB learn* OR KW learn* | 490,892 |
| S2 | DE “Learning” | 9681 |
| S3 | S1 OR S2 | 492,607 |
| S4 | DE “Higher Education” | 516,243 |
| S5 | DE “Colleges” | 9105 |
| S6 | TI ((high* W0 educat*) or universit* or college*) OR AB ((high* W0 educat*) or universit* or college*) OR KW ((high* W0 educat*) or universit* or college*) | 419,407 |
| S7 | S4 OR S5 OR S6 | 637,434 |
| S8 | DE “Artificial Intelligence” | 3167 |
| S9 | TI ((artificial W0 intelligence) or ai) OR AB ((artificial W0 intelligence) or ai) OR KW ((artificial W0 intelligence) or ai) | 2392 |
| S10 | TI (chatbot* or chatgpt or (chat W0 gpt)) OR AB (chatbot* or chatgpt or (chat W0 gpt)) OR KW (chatbot* or chatgpt or (chat W0 gpt)) | 107 |
| S11 | TI large W0 language W0 model* OR AB large W0 language W0 model* OR KW large W0 language W0 model* | 5 |
| S12 | S8 OR S9 OR S10 OR S11 | 4132 |
| S13 | DE “Students” | 5308 |
| S14 | TI student* OR AB student* OR KW student* | 786,585 |
| S15 | S13 OR S14 | 788,039 |
| S16 | TI (impact* or effect* or influence* or outcome* or result* or consequence* or experience*) OR AB (impact* or effect* or influence* or outcome* or result* or consequence* or experience*) OR KW (impact* or effect* or influence* or outcome* or result* or consequence* or experience*) | 986,762 |
| S17 | S3 AND S7 AND S12 AND S15 AND S16 | 413 |
| S18 | S3 AND S7 AND S12 AND S15 AND S16 | 132 |
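The search in Appendix A builds concept blocks with OR (S3, S7, S12, S15) and then intersects them with AND (S17). The following is a minimal sketch of that logic using Python sets. The record IDs are hypothetical placeholders rather than actual database hits, and the helper names `combine_or` and `combine_and` are illustrative assumptions, not part of any database interface.

```python
# Sketch of the OR/AND block logic in Appendix A.
# All record IDs below are made up for illustration only.

def combine_or(*result_sets):
    """Union of result sets, mirroring 'Sx OR Sy'."""
    return set().union(*result_sets)

def combine_and(*result_sets):
    """Intersection of result sets, mirroring 'Sx AND Sy'."""
    return set.intersection(*result_sets)

# Concept block: learning (S3 = S1 OR S2)
s1, s2 = {1, 2, 3, 4, 5}, {5, 6}
s3 = combine_or(s1, s2)

# Concept block: higher education (S7 = S4 OR S5 OR S6)
s4, s5, s6 = {2, 3, 4}, {4, 7}, {5, 8}
s7 = combine_or(s4, s5, s6)

# Concept block: AI and chatbots (S12 = S8 OR S9 OR S10 OR S11)
s8, s9, s10, s11 = {3, 4}, {4, 5, 9}, {2}, set()
s12 = combine_or(s8, s9, s10, s11)

# Concept block: students (S15 = S13 OR S14)
s13, s14 = {1, 2}, {2, 3, 4, 5, 10}
s15 = combine_or(s13, s14)

# Outcome terms (S16) and the final combination (S17)
s16 = {3, 4, 5, 6, 11}
s17 = combine_and(s3, s7, s12, s15, s16)

print(sorted(s17))
```

Each OR block widens recall within one concept, while the final AND keeps only records that match every concept at once, which is why the combined count is far smaller than any single block.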

References

- Tlili, A.; Shehata, B.; Adarkwah, M.A.; Bozkurt, A.; Hickey, D.T.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15.

- Deng, X.; Yu, Z. A meta-analysis and systematic review of the effect of chatbot technology use in sustainable education. Sustainability 2023, 15, 2940.

- Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2022, 38, 237–257.

- Lo, C.K. What is the impact of ChatGPT on education? A rapid review of the literature. Educ. Sci. 2023, 13, 410.

- Kevian, D.; Syed, U.; Guo, X.; Havens, A.; Dullerud, G.; Seiler, P.; Qin, L.; Hu, B. Capabilities of Large Language Models in Control Engineering: A Benchmark Study on GPT-4, Claude 3 Opus, and Gemini 1.0 Ultra. arXiv 2024, arXiv:2404.03647.

- Gimpel, H.; Hall, K.; Decker, S.; Eymann, T.; Lämmermann, L.; Mädche, A.; Röglinger, M.; Ruiner, C.; Schoch, M.; Schoop, M. Unlocking the Power of Generative AI Models and Systems Such as GPT-4 and ChatGPT for Higher Education. 2023. Available online: https://www.econstor.eu/handle/10419/270970 (accessed on 10 January 2024).

- Lund, B.D.; Wang, T. Chatting about ChatGPT: How May AI and GPT Impact Academia and Libraries? Library Hi Tech News, 2023. Available online: https://ssrn.com/abstract=4333415 (accessed on 15 November 2023).

- Imran, A.A.; Lashari, A.A. Exploring the World of Artificial Intelligence: The Perception of the University Students about ChatGPT for Academic Purpose. Glob. Soc. Sci. Rev. 2023, VIII, 375–384.

- Crompton, H.; Burke, D. Artificial intelligence in higher education: The state of the field. Int. J. Educ. Technol. High. Educ. 2023, 20, 8.

- Labadze, L.; Grigolia, M.; Machaidze, L. Role of AI chatbots in education: Systematic literature review. Int. J. Educ. Technol. High. Educ. 2023, 20, 56.

- Wu, R.; Yu, Z. Do AI chatbots improve students learning outcomes? Evidence from a meta-analysis. Br. J. Educ. Technol. 2023, 55, 10–33.

- Ansari, A.N.; Ahmad, S.; Bhutta, S.M. Mapping the global evidence around the use of ChatGPT in higher education: A systematic scoping review. Educ. Inf. Technol. 2023, 29, 11281–11321.

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Vargas-Murillo, A.R.; de la Asuncion Pari-Bedoya, I.N.M.; de Jesús Guevara-Soto, F. Challenges and Opportunities of AI-Assisted Learning: A Systematic Literature Review on the Impact of ChatGPT Usage in Higher Education. Intl. J. Learn. Teach. Edu. Res. 2023, 22, 122–135.

- Farrell, W.C.; Bogodistov, Y.; Mössenlechner, C. Is Academic Integrity at Risk? Perceived Ethics and Technology Acceptance of ChatGPT; Association for Information Systems (AIS) eLibrary: Sacramento, CA, USA, 2023.

- Abbas, M.; Jam, F.A.; Khan, T.I. Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. Int. J. Educ. Technol. High. Educ. 2024, 21, 10.

- Baidoo-Anu, D.; Ansah, L.O. Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 2023, 7, 52–62.

- Biggs, J.B. Student Approaches to Learning and Studying. Research Monograph; ERIC: Budapest, Hungary, 1987.

- Biggs, J.; Tang, C.; Kennedy, G. Ebook: Teaching for Quality Learning at University 5e; McGraw-Hill Education (UK): London, UK, 2022.

- Richardson, J.T. Approaches to learning or levels of processing: What did Marton and Säljö (1976a) really say? The legacy of the work of the Göteborg Group in the 1970s. Interchange 2015, 46, 239–269.

- Marton, F.; Säljö, R. On qualitative differences in learning: I—Outcome and process. Br. J. Educ. Psychol. 1976, 46, 4–11.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

- Al-Abdullatif, A.M. Modeling Students’ Perceptions of Chatbots in Learning: Integrating Technology Acceptance with the Value-Based Adoption Model. Educ. Sci. 2023, 13, 1151.

- Duong, C.D.; Vu, T.N.; Ngo, T.V.N. Applying a modified technology acceptance model to explain higher education students’ usage of ChatGPT: A serial multiple mediation model with knowledge sharing as a moderator. Int. J. Manag. Educ. 2023, 21, 100883.

- Strzelecki, A. Students’ acceptance of ChatGPT in higher education: An extended unified theory of acceptance and use of technology. Innov. High. Educ. 2023, 49, 223–245.

- Menon, D.; Shilpa, K. “Chatting with ChatGPT”: Analyzing the factors influencing users’ intention to Use the Open AI’s ChatGPT using the UTAUT model. Heliyon 2023, 9, e20962.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Aldraiweesh, A.; Alturki, U.; Almutairy, S.; Shutaleva, A.; Soomro, R.B. Extended TAM based acceptance of AI-Powered ChatGPT for supporting metacognitive self-regulated learning in education: A mixed-methods study. Heliyon 2024, 10, e29317.

- Lin, Y.; Yu, Z. A bibliometric analysis of artificial intelligence chatbots in educational contexts. Interact. Technol. Smart Educ. 2024, 21, 189–213.

- Bearman, M.; Ryan, J.; Ajjawi, R. Discourses of artificial intelligence in higher education: A critical literature review. High. Educ. 2023, 86, 369–385.

- Baytak, A. The Acceptance and Diffusion of Generative Artificial Intelligence in Education: A Literature Review. Curr. Perspect. Educ. Res. 2023, 6, 7–18.

- Imran, M.; Almusharraf, N. Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Contemp. Educ. Technol. 2023, 15, ep464.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Papaioannou, D.; Sutton, A.; Booth, A. Systematic Approaches to a Successful Literature Review; SAGE Publications Ltd.: Washington, DC, USA, 2016; pp. 1–336.

- Nightingale, A. A guide to systematic literature reviews. Surgery 2009, 27, 381–384.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int. J. Surg. 2021, 88, 105906.

- Abouammoh, N.; Alhasan, K.; Raina, R.; Malki, K.A. Exploring perceptions and experiences of ChatGPT in medical education: A qualitative study among medical College faculty and students in Saudi Arabia. medRxiv 2023.

- Ali, J.K.M.; Shamsan, M.A.A.; Hezam, T.A. Impact of ChatGPT on learning motivation: Teachers and students’ voices. J. Engl. Stud. Arab. Felix 2023, 2, 41–49.

- Bonsu, E.; Baffour-Koduah, D. From the consumers’ side: Determining students’ perception and intention to use ChatGPT in Ghanaian higher education. J. Educ. Soc. Multicult. 2023, 4, 1–29.

- Chan, C.K.Y.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

- Chan, C.K.Y.; Lee, K.K.W. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and Millennial Generation teachers? Smart Learn. Environ. 2023, 10, 60.

- Elkhodr, M.; Gide, E.; Wu, R.; Darwish, O. ICT students’ perceptions towards ChatGPT: An experimental reflective lab analysis. STEM. Educ. 2023, 3, 70–88.

- Exintaris, B.; Karunaratne, N.; Yuriev, E. Metacognition and Critical Thinking: Using ChatGPT-Generated Responses as Prompts for Critique in a Problem-Solving Workshop (SMARTCHEMPer). J. Chem. Educ. 2023, 100, 2972–2980.

- Irfan, M.; Murray, L.; Ali, S. Integration of Artificial Intelligence in Academia: A Case Study of Critical Teaching and Learning in Higher Education. Glob. Soc. Sci. Rev. 2023, VIII, 352–364.

- Kelly, A.; Sullivan, M.; Strampel, K. Generative artificial intelligence: University student awareness, experience, and confidence in use across disciplines. J. Univ. Teach. Learn. Pr. 2023, 20, 12.

- Limna, P.; Kraiwanit, T.; Jangjarat, K. The use of ChatGPT in the digital era: Perspectives on chatbot implementation. Appl. Learn. Teach. 2023, 6, 64–74.

- Liu, B. Chinese University Students’ Attitudes and Perceptions in Learning English Using ChatGPT. Int. J. Educ. Humanit. 2023, 3, 132–140.

- Muñoz, S.A.S.; Gayoso, G.G.; Huambo, A.C. Examining the Impacts of ChatGPT on Student Motivation and Engagement. Soc. Space 2023, 23, 1–27.

- Petricini, T.; Wu, C.; Zipf, S.T. Perceptions about Generative AI and ChatGPT Use by Faculty and College Students. 2023. Available online: https://osf.io/preprints/edarxiv/jyma4 (accessed on 10 January 2024).

- Qureshi, B. Exploring the use of chatgpt as a tool for learning and assessment in undergraduate computer science curriculum: Opportunities and challenges. arXiv 2023, arXiv:2304.11214.

- Sánchez-Ruiz, L.M.; Moll-López, S.; Nuñez-Pérez, A. ChatGPT Challenges Blended Learning Methodologies in Engineering Education: A Case Study in Mathematics. Appl. Sci. 2023, 13, 6039.

- Shoufan, A. Exploring Students’ Perceptions of CHATGPT: Thematic Analysis and Follow-Up Survey. IEEE Access 2023, 11, 38805–38818.

- Uddin, S.M.J.; Albert, A.; Ovid, A.; Alsharef, A. Leveraging ChatGPT to Aid Construction Hazard Recognition and Support Safety Education and Training. Sustainability 2023, 15, 7121.

- Urban, M.; Děchtěrenko, F.; Lukavský, J.; Hrabalová, V. Can ChatGPT Improve Creative Problem-Solving Performance in University Students? Comput. Educ. 2024, 215, 105031.

- Yan, D. Impact of ChatGPT on learners in a L2 writing practicum: An exploratory investigation. Educ. Inf. Technol. 2023, 28, 13943–13967.

- Yifan, W.; Mengmeng, Y.; Omar, M.K. “A Friend or A Foe” Determining Factors Contributed to the Use of ChatGPT among University Students. Int. J. Acad. Res. Progress. Educ. Dev. 2023, 12, 2184–2201.

- Yilmaz, R.; Yilmaz, F.G.K. Augmented intelligence in programming learning: Examining student views on the use of ChatGPT for programming learning. Comput. Hum. Behav. Artif. Hum. 2023, 1, 100005.

- Yilmaz, R.; Karaoglan Yilmaz, F.G. The effect of generative artificial intelligence (AI)-based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 2023, 4, 100147.

- Zheng, Y. ChatGPT for Teaching and Learning: An Experience from Data Science Education. arXiv 2023, arXiv:2307.16650.

- Zhu, G.; Fan, X.; Hou, C.; Zhong, T.; Seow, P. Embrace Opportunities and Face Challenges: Using ChatGPT in Undergraduate Students’ Collaborative Interdisciplinary Learning. arXiv 2023, arXiv:2305.18616.

- Elo, S.; Kyngäs, H. The qualitative content analysis process. J. Adv. Nurs. 2008, 62, 107–115.

- Creswell, J.W. Educational Research; Pearson: London, UK, 2012.

- Li, D.; Tong, T.W.; Xiao, Y. Is China emerging as the global leader in AI. Harv. Bus. Rev. 2021, 18.

- Chu, H.-C.; Hwang, G.-H.; Tu, Y.-F.; Yang, K.-H. Roles and research trends of artificial intelligence in higher education: A systematic review of the top 50 most-cited articles. Australas. J. Educ. Technol. 2022, 38, 22–42.

- Mládková, L. Learning habits of generation Z students. In European Conference on Knowledge Management; Academic Conferences International Limited: Reading, UK, 2017; pp. 698–703.

- Hampton, D.C.; Keys, Y. Generation Z students: Will they change our nursing classrooms. J. Nurs. Educ. Pract. 2017, 7, 111–115.

- Trigwell, K.; Prosser, M. Improving the quality of student learning: The influence of learning context and student approaches to learning on learning outcomes. High. Educ. 1991, 22, 251–266.

- Bandura, A. Self-Efficacy: The Exercise of Control; Macmillan: London, UK, 1997.

- Zimmerman, B.J. Self-efficacy: An essential motive to learn. Contemp. Educ. Psychol. 2000, 25, 82–91.

- Smolansky, A.; Cram, A.; Raduescu, C.; Zeivots, S.; Huber, E.; Kizilcec, R.F. Educator and Student Perspectives on the Impact of Generative AI on Assessments in Higher Education; Association for Computing Machinery, Inc.: New York, NY, USA, 2023.

- Tang, T.; Vezzani, V.; Eriksson, V. Developing critical thinking, collective creativity skills and problem solving through playful design jams. Think. Ski. Creat. 2020, 37, 100696.

- Lai, E.R. Critical thinking: A literature review. Pearson’s Res. Rep. 2011, 6, 40–41.

- Essel, H.B.; Vlachopoulos, D.; Essuman, A.B.; Amankwa, J.O. ChatGPT effects on cognitive skills of undergraduate students: Receiving instant responses from AI-based conversational large language models (LLMs). Comput. Educ. Artif. Intell. 2024, 6, 100198.

- Kim, J.; Lee, S.-S. Are Two Heads Better than One?: The Effect of Student-AI Collaboration on Students’ Learning Task Performance. TechTrends Link. Res. Pract. Improv. Learn. 2023, 67, 365–375.

- Biggs, J. What the student does: Teaching for enhanced learning. High. Educ. Res. Dev. 1999, 18, 57–75.

- Bransford, J.D.; Stein, B.S. The IDEAL Problem Solver: A Guide for Improving Thinking, Learning, and Creativity; W.H. Freeman: New York, NY, USA, 1993.

- Jonassen, D. Supporting problem solving in PBL. Interdiscip. J. Probl.-Based Learn. 2011, 5, 95–119.

| Criteria | Inclusion | Exclusion |
|---|---|---|
| Article topic/study focus | Empirical studies that discuss: AI chatbots’ effect/impact/influence on students’ learning processes or outcomes in the field of higher education (HE); students’ perceptions of AI chatbots in their learning processes; students’ use of AI chatbots in their learning processes; assessment and feedback directly relating to students’ learning with AI | Non-empirical articles; online learning related to AI; overviews, predictions, and strategies about AI in HE; teaching in HE; specialized AI tools (created) for specific fields of education |
| Population | Students in HE | Teachers, general public, and pupils in K12 |
| Publication type | Peer-reviewed publications in academic journals and pre-prints | All other types of publications, including books, grey literature, and conference papers |
| Study type | Primary studies | Secondary studies |
| Time period | Studies conducted between 1 January 2022 and 5 September 2023 | Articles outside the time period |
| Language | English, Norwegian, Swedish, and Danish | Non-English, non-Norwegian, non-Swedish, and non-Danish |
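The mechanical criteria in the table above (population, publication type, study type, time period, and language) could be applied as a simple filter before manual screening. The following is a minimal sketch under stated assumptions: the `Record` fields, the label strings, and the `meets_criteria` function are hypothetical and do not describe the authors' actual screening tooling. The topic criterion is left out on purpose, since it requires human judgment.

```python
from dataclasses import dataclass
from datetime import date

# Values taken from the criteria table; the label strings are assumptions.
WINDOW_START = date(2022, 1, 1)
WINDOW_END = date(2023, 9, 5)
LANGUAGES = {"English", "Norwegian", "Swedish", "Danish"}
PUBLICATION_TYPES = {"peer-reviewed journal article", "preprint"}

@dataclass(frozen=True)
class Record:
    """Hypothetical metadata for one candidate study."""
    title: str
    population: str
    publication_type: str
    is_primary_study: bool
    study_date: date
    language: str

def meets_criteria(record: Record) -> bool:
    """Check the mechanical inclusion criteria; topic relevance is not checked."""
    return (
        record.population == "HE students"
        and record.publication_type in PUBLICATION_TYPES
        and record.is_primary_study
        and WINDOW_START <= record.study_date <= WINDOW_END
        and record.language in LANGUAGES
    )

# Two made-up records: one that passes and one excluded as a conference paper.
candidate = Record("Example survey", "HE students", "preprint",
                   True, date(2023, 3, 1), "English")
excluded = Record("Example proceedings", "HE students", "conference paper",
                  True, date(2023, 3, 1), "English")

print(meets_criteria(candidate), meets_criteria(excluded))
```

Records that pass such a filter would still need a reader to judge the topic criterion in the first row of the table.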

| Ref. | Study | Discipline | Country | Method | Data Collection | Type |
|---|---|---|---|---|---|---|
| [39] | Abouammoh et al. (2023) | Medicine | Saudi Arabia | Qualitative | Focus group interviews | Preprint |
| [40] | Ali et al. (2023) | EFL students | Saudi Arabia | Quantitative | Questionnaire | Peer-reviewed |
| [41] | Bonsu and Koduah (2023) | Multiple disciplines | Ghana | Mixed | Questionnaire and interviews | Peer-reviewed |
| [42] | Chan and Hu (2023) | Multiple disciplines | China | Mixed | Closed-ended and open-ended survey | Peer-reviewed |
| [43] | Chan and Lee (2023) | Multiple disciplines | China | Mixed | Closed-ended and open-ended questionnaire | Peer-reviewed |
| [44] | Elkhodr et al. (2023) | Information Technology | Australia | Mixed | Examination of students’ responses, instructors’ notes, and quantitative analysis of performance metrics | Peer-reviewed |
| [45] | Exintaris et al. (2023) | Pharmaceutical science | Australia | Workshop | Problem solving and evaluation of solutions | Peer-reviewed |
| [46] | Irfan et al. (2023) | Journalism | Tajikistan | Mixed | Intervention (pre-test, post-test) and interviews | Peer-reviewed |
| [47] | Kelly et al. (2023) | Multiple disciplines | Australia | Quantitative | Questionnaire | Peer-reviewed |
| [48] | Limna et al. (2023) | Multiple disciplines | Thailand | Qualitative | In-depth interviews | Peer-reviewed |
| [49] | Liu (2023) | EFL students | China | Quantitative | Questionnaire | Peer-reviewed |
| [50] | Munoz et al. (2023) | EFL students | Not specified (South America) | Quantitative | Questionnaire | Peer-reviewed |
| [51] | Petricini et al. (2023) | Multiple disciplines | USA | Quantitative | Survey | Preprint |
| [52] | Qureshi (2023) | Computer science | Saudi Arabia | Quasi-experimental design | Problem-solving and evaluation of solutions | Preprint |
| [53] | Sánchez-Ruiz et al. (2023) | Mathematics (Engineering) | Spain | Blended | Flipped teaching, gamification, problem-solving, laboratory sessions, and exams with a computer algebraic system | Peer-reviewed |
| [54] | Shoufan (2023) | Computer engineering | United Arab Emirates | Mixed | Open-ended and closed-ended questionnaire | Peer-reviewed |
| [55] | Uddin et al. (2023) | Construction hazard | USA | Experimental design | Pre- and post-intervention activity and questionnaire | Peer-reviewed |
| [56] | Urban et al. (2023) | Multiple disciplines | Czech Republic | Experimental design | Results from experiments and survey | Preprint |
| [57] | Yan (2023) | EFL students | China | Multi-method qualitative approach | Interview, case-by-case observation, and thematic analysis | Peer-reviewed |
| [58] | Yifan et al. (2023) | Multiple disciplines | Malaysia | Quantitative | Questionnaire | Peer-reviewed |
| [59] | Yilmaz and Yilmaz (2023a) | Programming | Turkey | Case study and Mixed | Closed-ended and open-ended survey | Peer-reviewed |
| [60] | Yilmaz and Yilmaz (2023b) | Programming | Turkey | Experimental design | Computational thinking scale, computer programming self-efficacy scale, and motivation scale | Peer-reviewed |
| [61] | Zheng (2023) | Data science | USA | Mixed | Experiments and questionnaire | Peer-reviewed |
| [62] | Zhu et al. (2023) | STEM and non-STEM | Singapore | Quasi-experiment | Weekly surveys and online written self-reflections | Preprint |
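For RQ1, the country column of the study table above can be tallied with a few lines of code. The following is a minimal sketch in which the country values are copied from the table in order, [39] to [62]. The variable names are illustrative, and this is not presented as the authors' own charting procedure.

```python
from collections import Counter

# Country of each included study, copied from the table above ([39] to [62]).
COUNTRIES = [
    "Saudi Arabia", "Saudi Arabia", "Ghana", "China", "China", "Australia",
    "Australia", "Tajikistan", "Australia", "Thailand", "China",
    "Not specified (South America)", "USA", "Saudi Arabia", "Spain",
    "United Arab Emirates", "USA", "Czech Republic", "China", "Malaysia",
    "Turkey", "Turkey", "USA", "Singapore",
]

# Frequency of each country across the included studies.
country_counts = Counter(COUNTRIES)

# Print countries from most to least frequent.
for country, count in country_counts.most_common():
    print(f"{country}: {count}")
```

The same pattern would work for the Discipline, Method, or Type columns by swapping in the corresponding values.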

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schei, O.M.; Møgelvang, A.; Ludvigsen, K. Perceptions and Use of AI Chatbots among Students in Higher Education: A Scoping Review of Empirical Studies. Educ. Sci. 2024, 14, 922. https://doi.org/10.3390/educsci14080922

Chicago/Turabian Style: Schei, Odin Monrad, Anja Møgelvang, and Kristine Ludvigsen. 2024. "Perceptions and Use of AI Chatbots among Students in Higher Education: A Scoping Review of Empirical Studies" Education Sciences 14, no. 8: 922. https://doi.org/10.3390/educsci14080922