AI Eye-Tracking Technology: A New Era in Managing Cognitive Loads for Online Learners

Abstract

1. Introduction

Eye-Tracking Case Studies: Examples in Education

2. Materials and Methods

3. Results

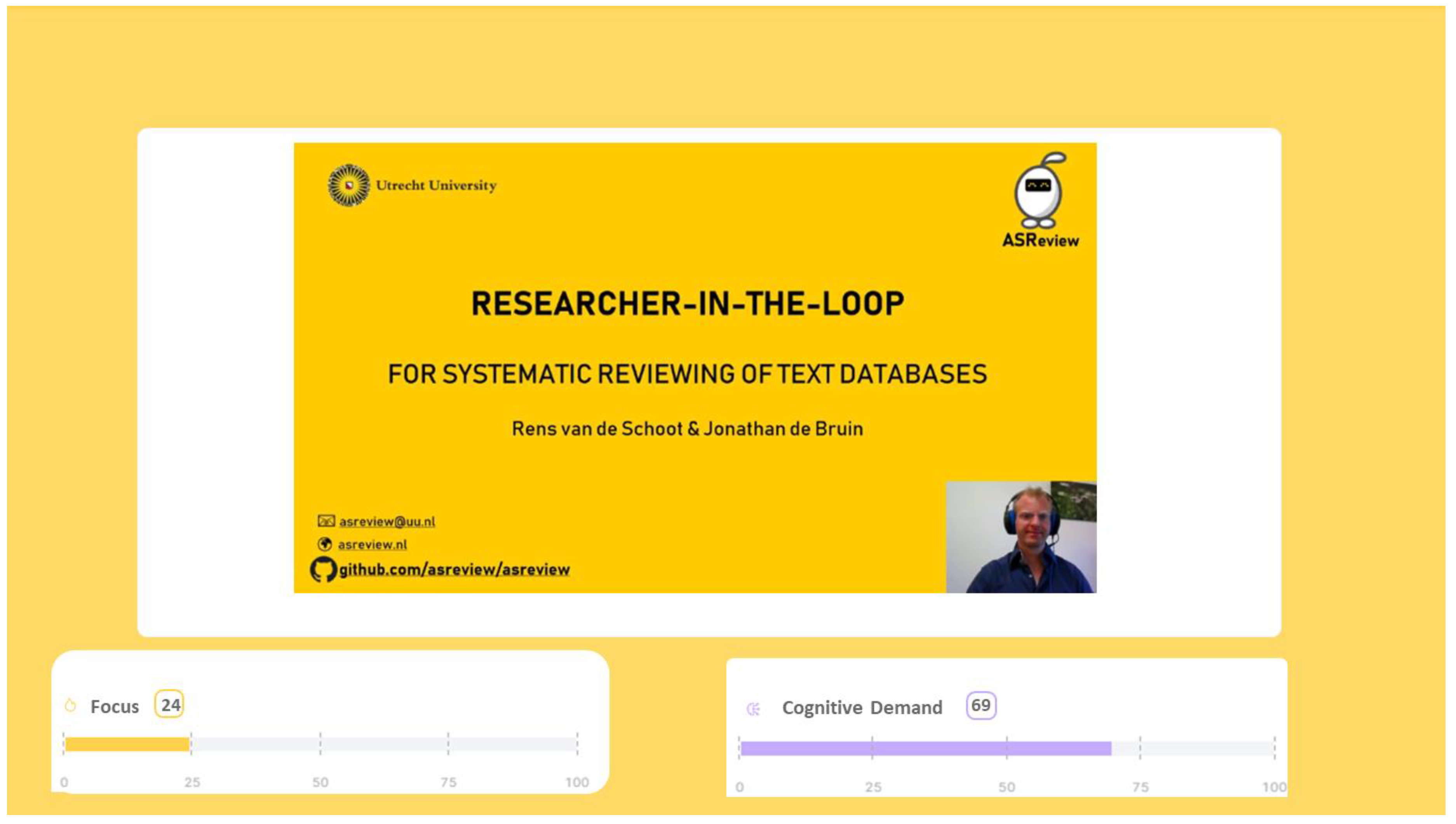

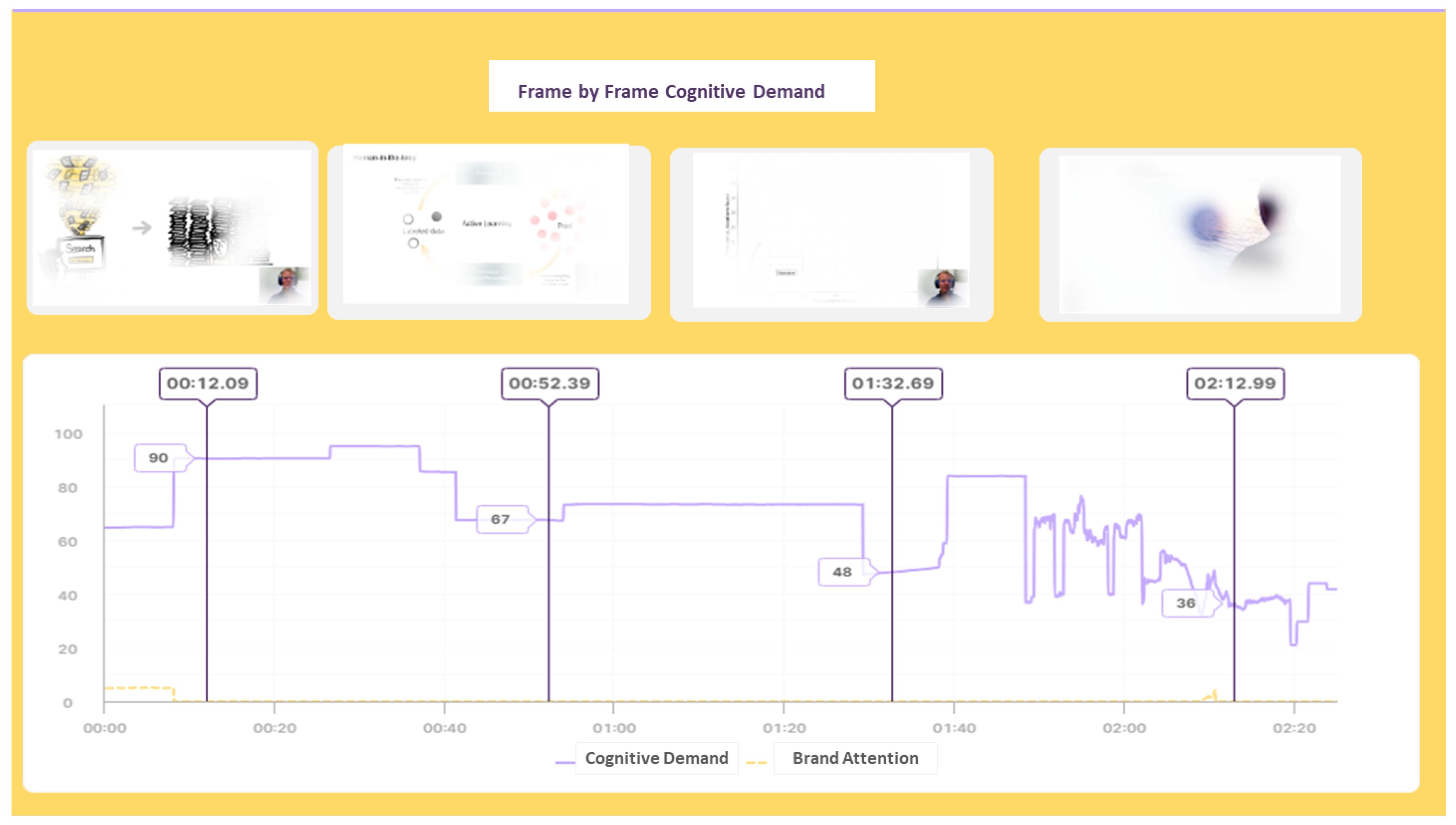

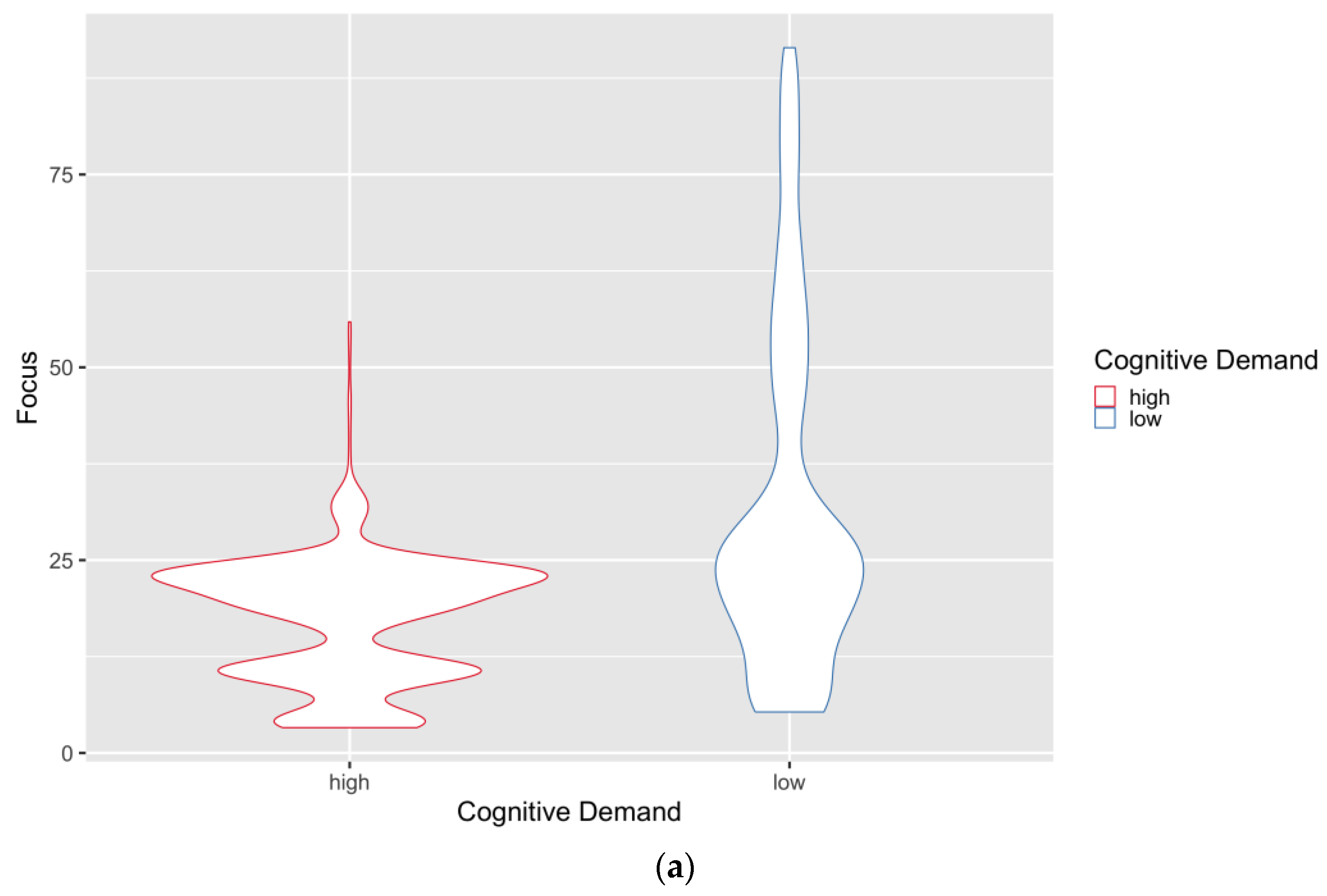

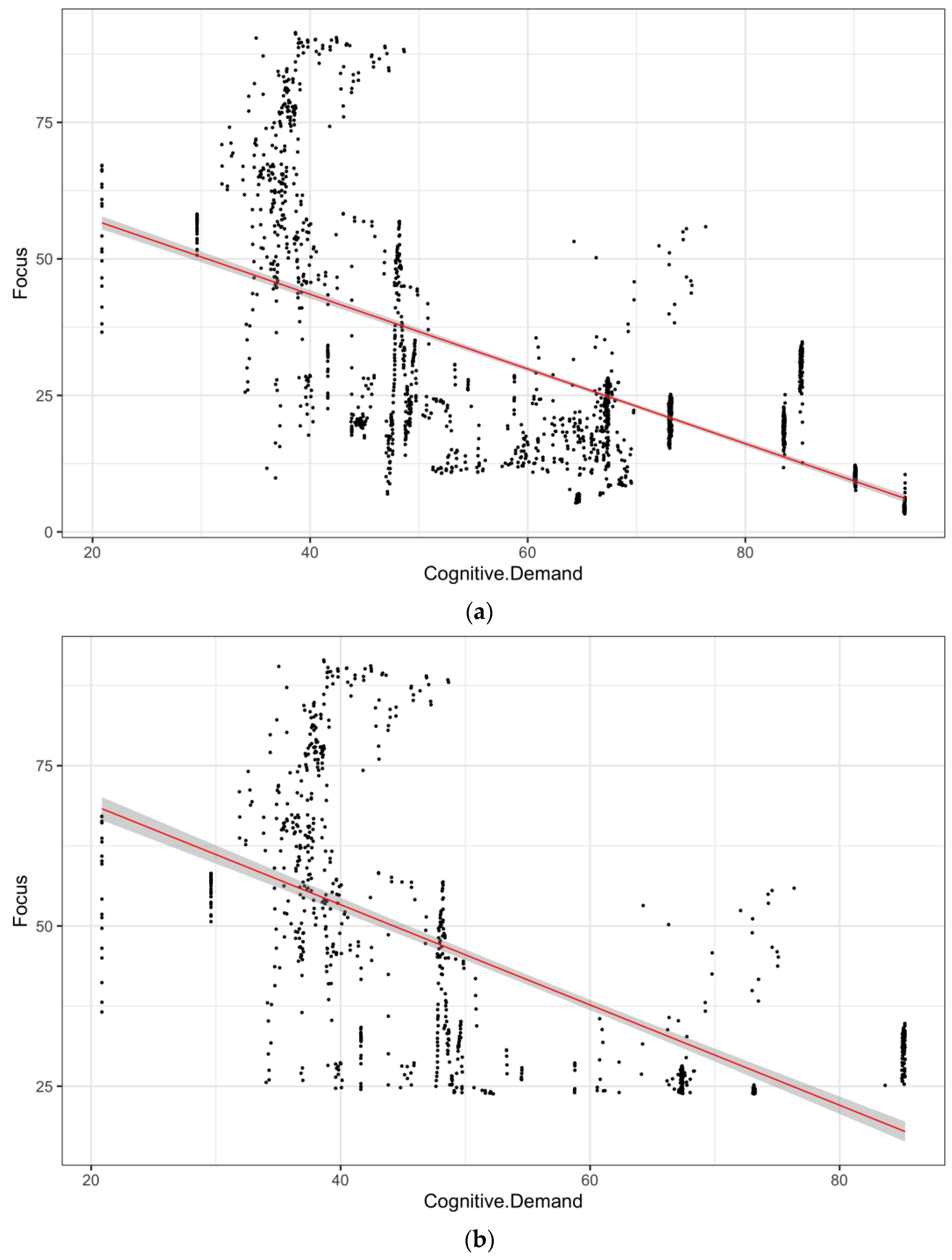

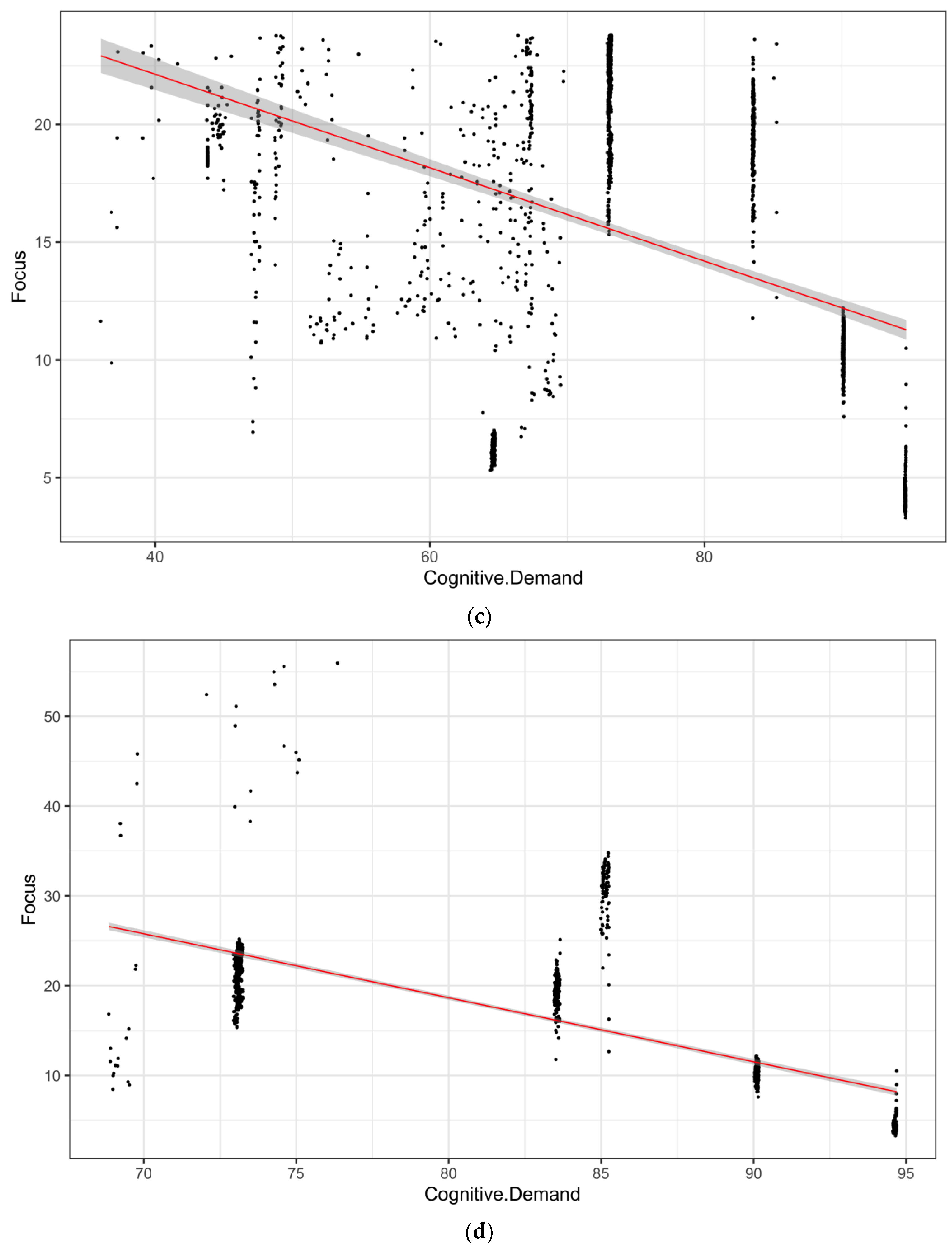

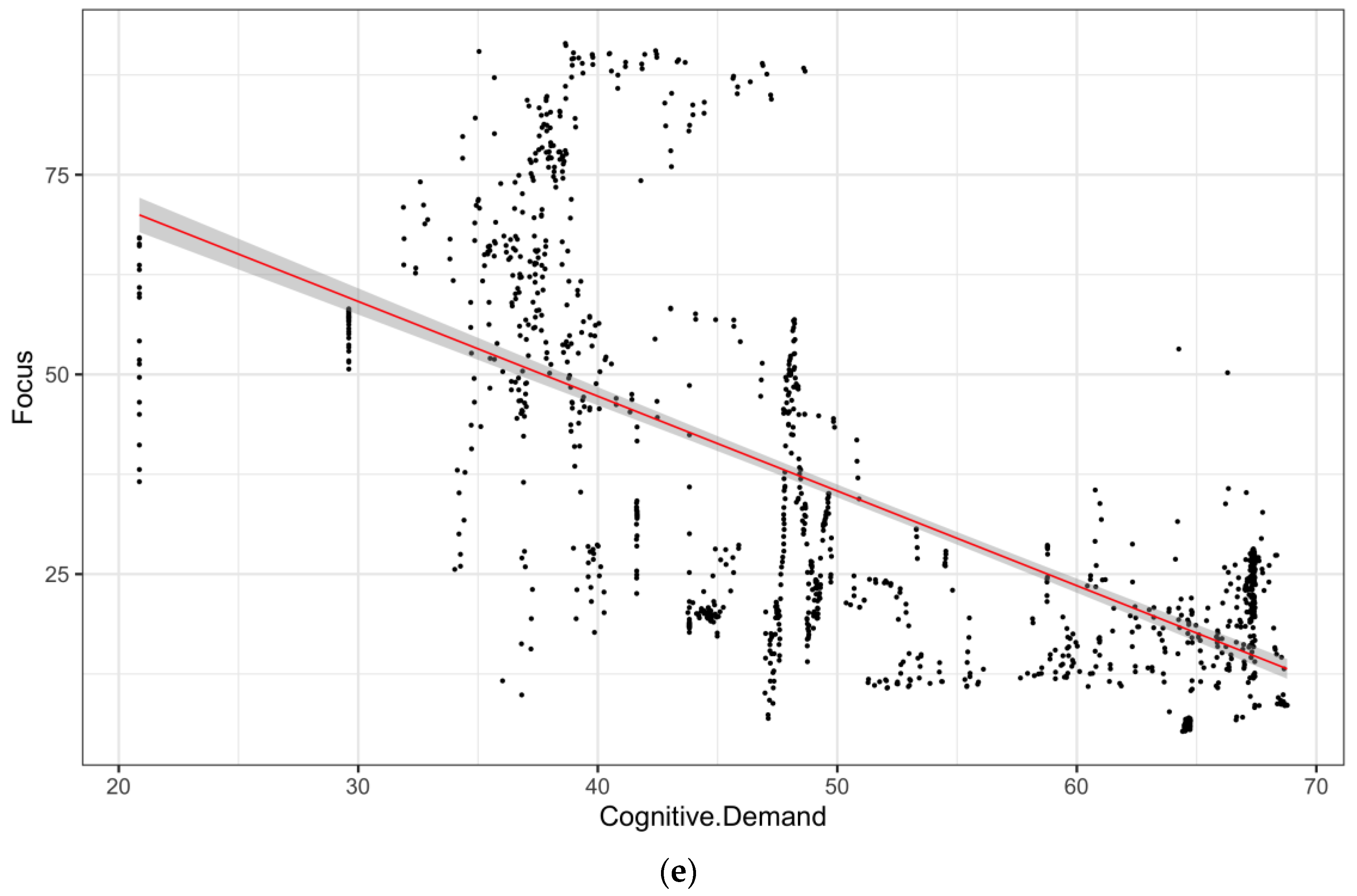

3.1. Utrecht University Video Lecture Insights

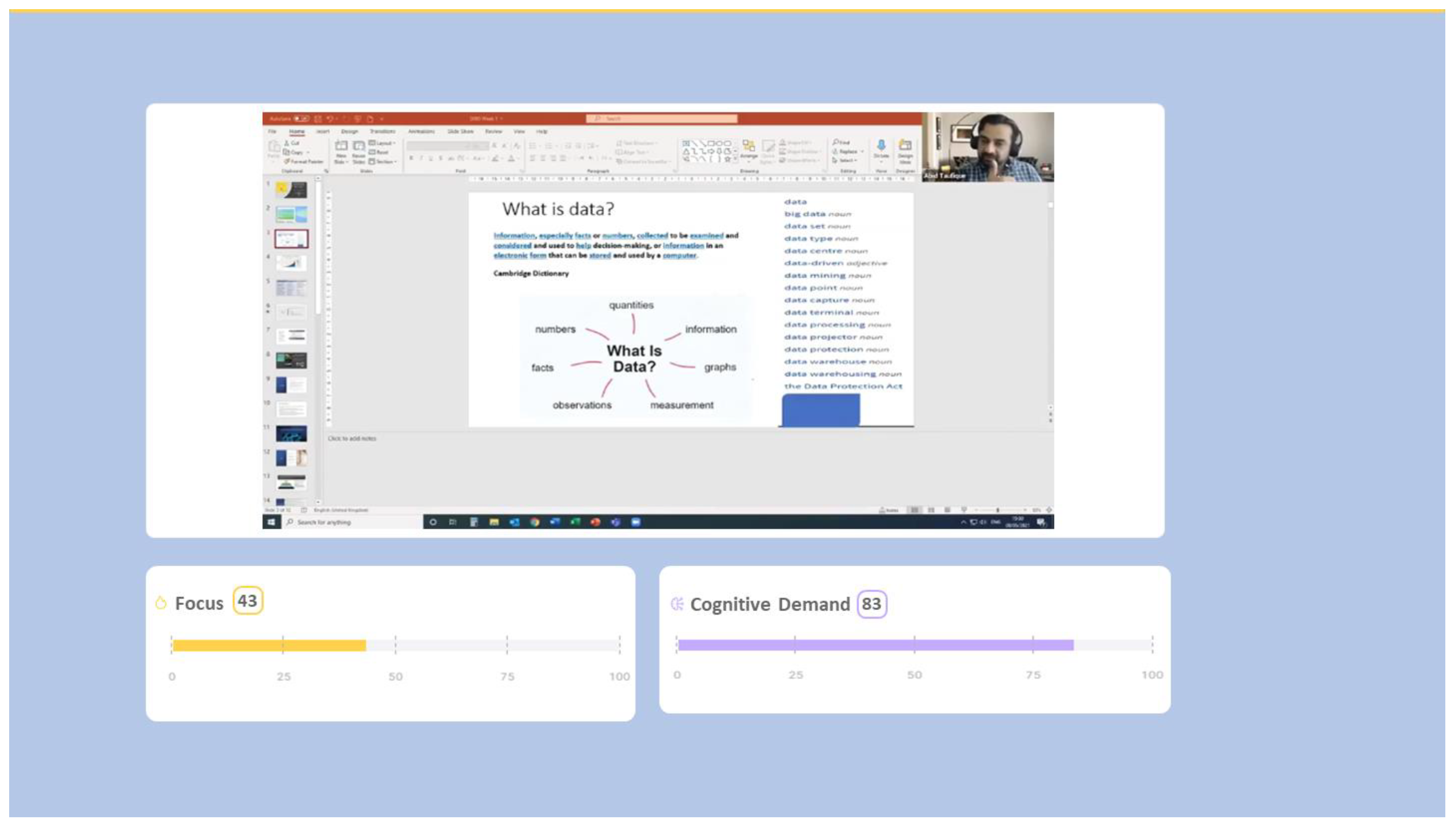

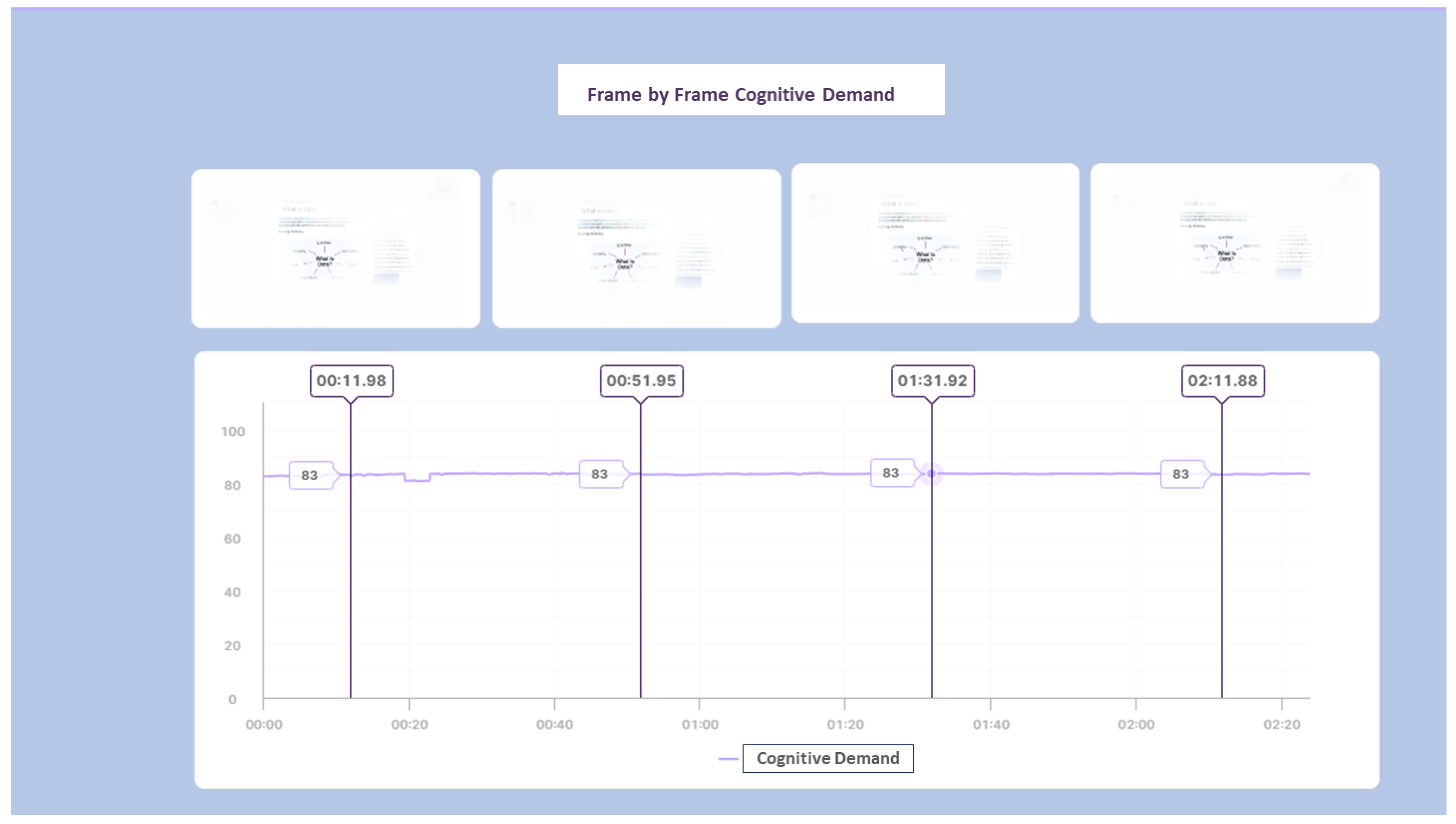

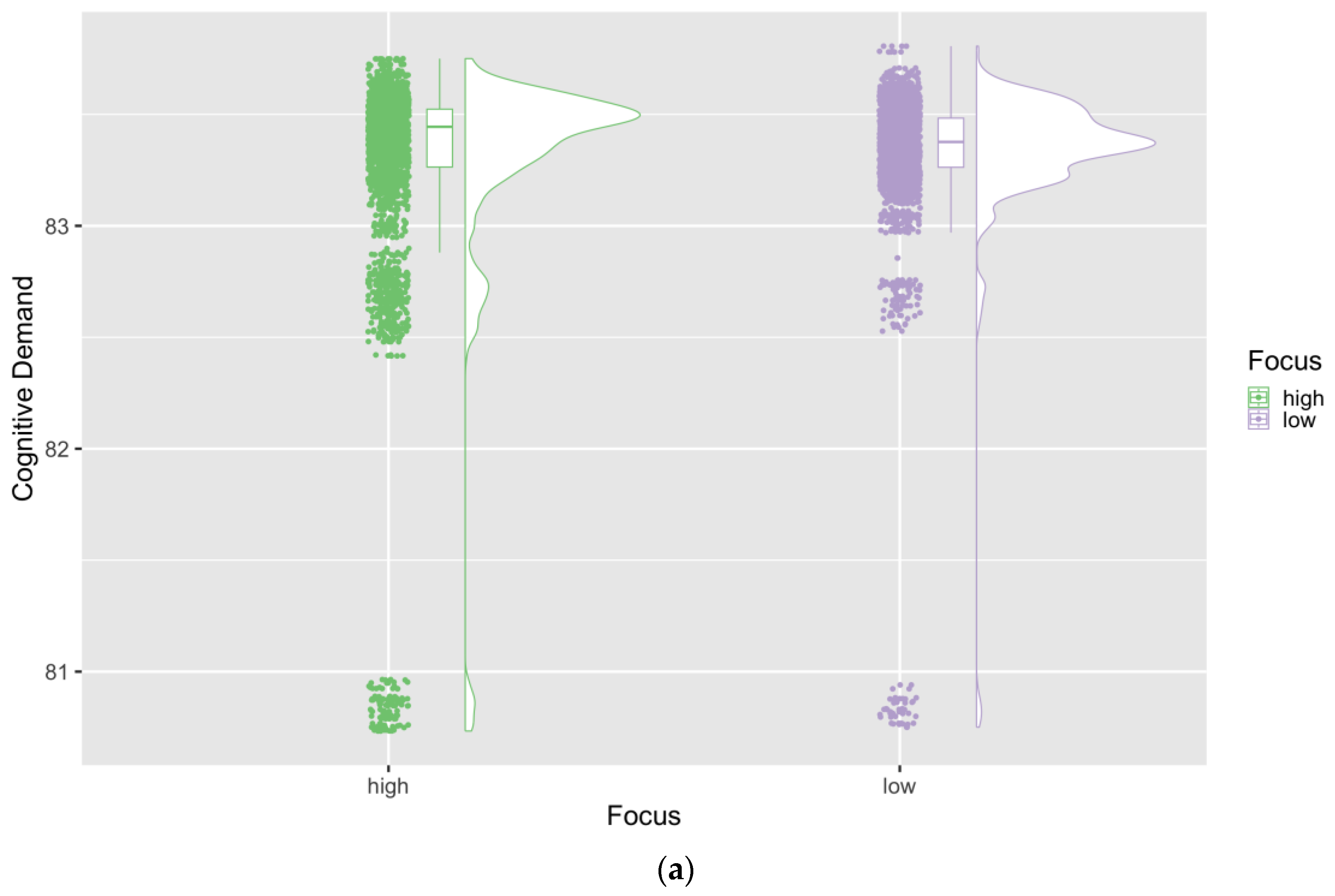

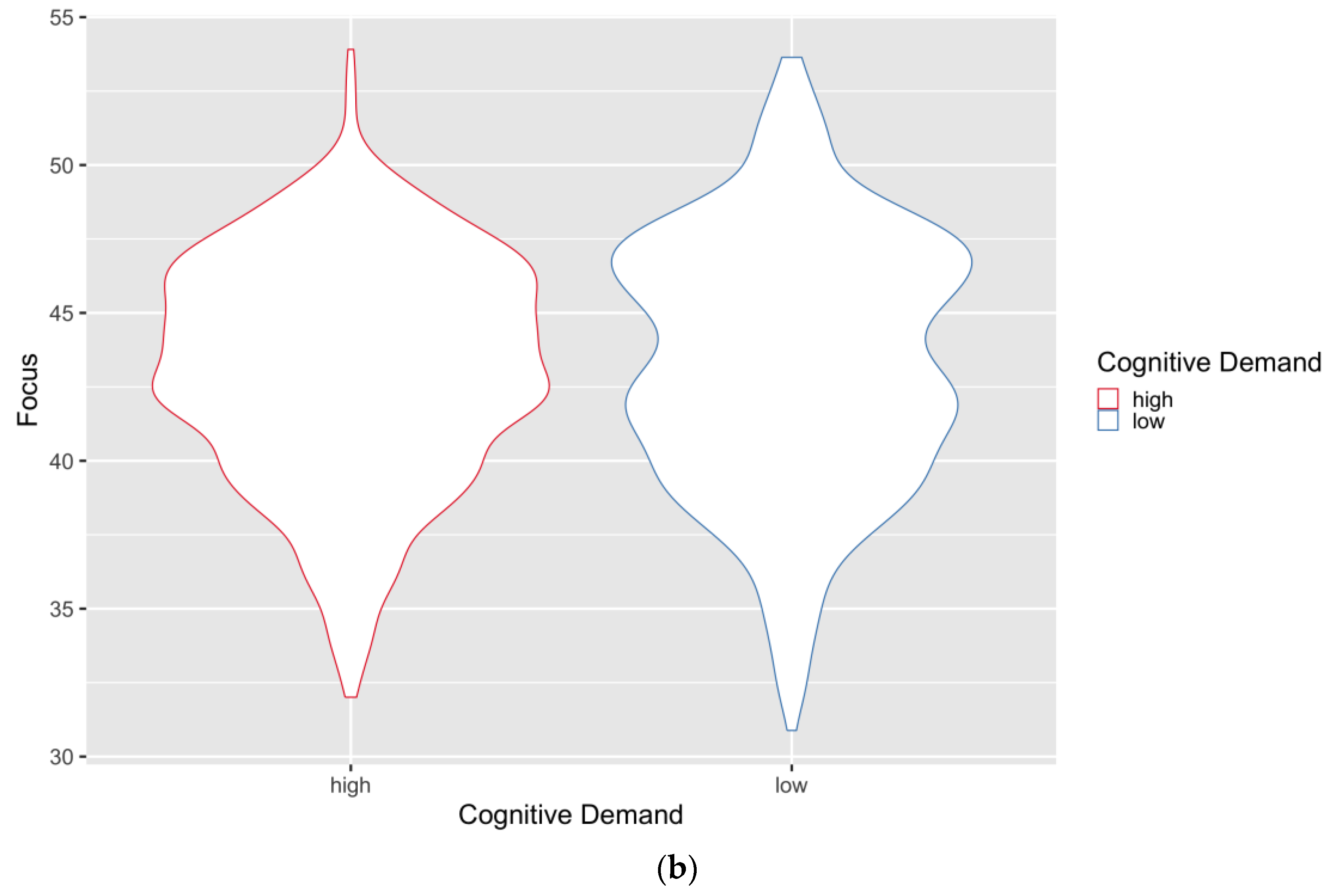

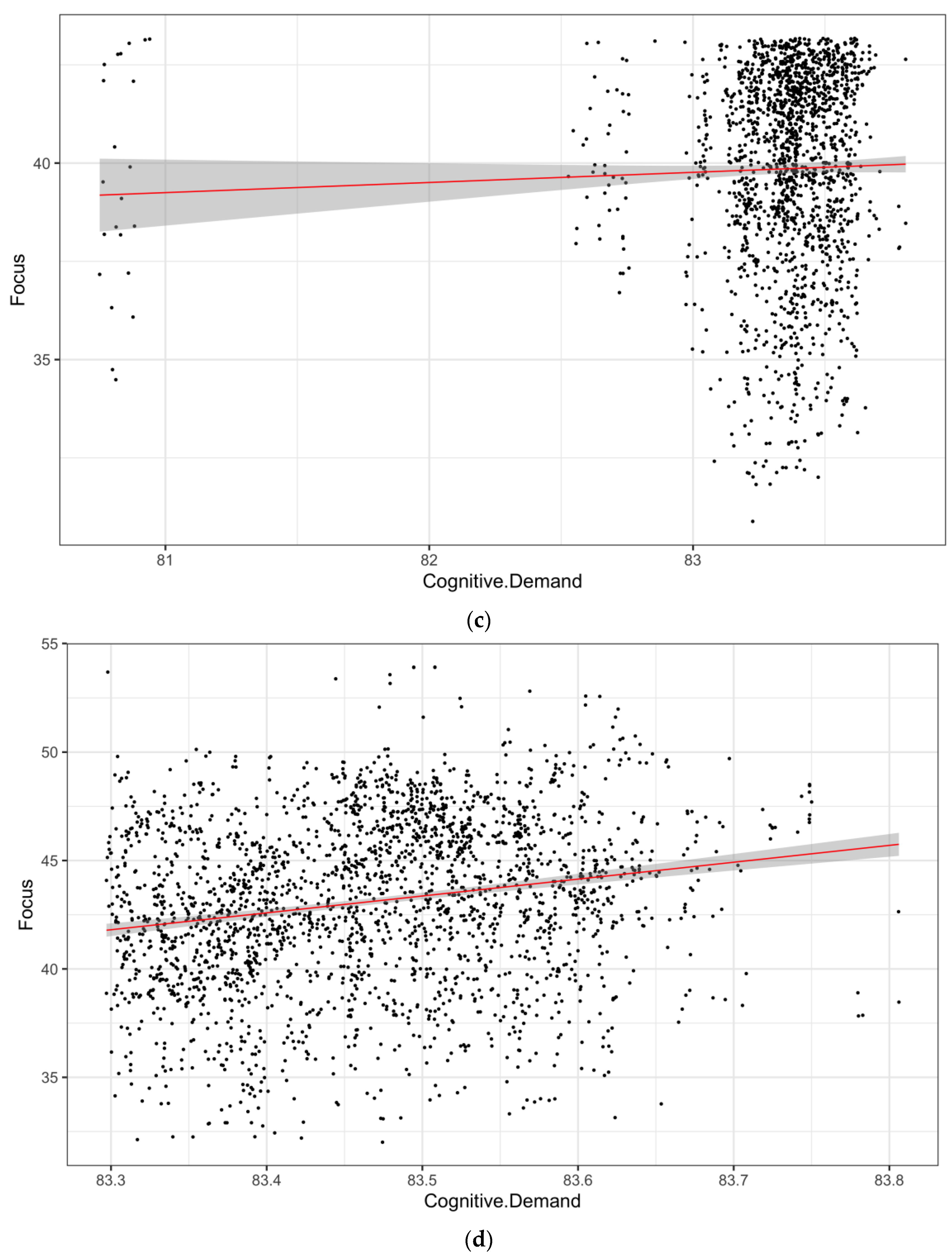

3.2. OBC Video Lecture Insights

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| OBC | Oxford Business College |
| AAS | Attention-Aware Systems |
| HCI | Human–Computer Interactions |
| AMAM | Attention Monitoring and Alarm Mechanism |
| AVRLM | Attention-Based Video Lecture Review Mechanism |
| CD | Cognitive Demand |
| AOI | Area of Interest |

Appendix A. Neuromarketing Results for Utrecht University

Appendix B. Neuromarketing Results for Oxford Business College


| Video Lecture | Condition | Focus Mean | Focus SD | Focus Range | CD Mean | CD SD | CD Range |
|---|---|---|---|---|---|---|---|
| Utrecht University | High Focus | 40.77 | 19.34 | (23.803, 91.44) | 56.04 | 17.19 | (20.86, 85.25) |
| Utrecht University | Low Focus | 15.12 | 6.64 | (3.29, 23.8) | 75.37 | 14.09 | (36.02, 94.69) |
| Utrecht University | High CD | 17.38 | 8.09 | (3.29, 55.89) | 81.78 | 8.64 | (68.85, 94.69) |
| Utrecht University | Low CD | 31.501 | 21.903 | (5.32, 91.44) | 53.3 | 12.67 | (20.86, 68.78) |
| OBC | High Focus | 46.35 | 2.05 | (43.18, 53.91) | 83.27 | 0.53 | (80.73, 83.75) |
| OBC | Low Focus | 39.85 | 2.54 | (30.88, 43.17) | 83.33 | 0.34 | (80.75, 83.81) |
| OBC | High CD | 43.11 | 3.83 | (32.01, 53.91) | 83.47 | 0.098 | (83.297, 83.81) |
| OBC | Low CD | 43.32 | 4.34 | (30.88, 53.64) | 82.88 | 0.66 | (80.73, 83.3) |
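The Mean, SD and Range columns above summarise the score distribution within each condition. As a minimal sketch, assuming each video lecture yields paired per-frame Focus and Cognitive Demand scores on a 0–100 scale, and assuming conditions are formed by splitting at a focus cutoff, such a summary could be computed as follows. The sample scores, the cutoff value and the helper names (`summarise`, `split_by_focus`, `describe`) are hypothetical and are not the authors' data or procedure; the source also does not state whether SD was computed with an n − 1 or n denominator, so the sample SD here is an assumption.

```python
import statistics
from typing import Dict, List, Tuple

# Hypothetical paired (focus, cognitive_demand) scores on a 0-100 scale.
# Illustrative values only, NOT data from the study.
SAMPLES: List[Tuple[float, float]] = [
    (12.0, 80.0), (18.5, 76.0), (25.0, 60.0), (40.0, 55.0), (31.0, 70.0),
]


def summarise(values: List[float]) -> Dict[str, object]:
    """Mean, sample SD (n - 1 denominator, an assumption) and (min, max) range."""
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # requires at least two values
        "range": (min(values), max(values)),
    }


def split_by_focus(
    samples: List[Tuple[float, float]], cutoff: float
) -> Dict[str, List[Tuple[float, float]]]:
    """Assign samples to conditions using a hypothetical focus cutoff."""
    return {
        "High Focus": [s for s in samples if s[0] > cutoff],
        "Low Focus": [s for s in samples if s[0] <= cutoff],
    }


def describe(
    samples: List[Tuple[float, float]], cutoff: float
) -> Dict[str, Dict[str, Dict[str, object]]]:
    """Build one row per condition with Focus and Cognitive Demand summaries."""
    table = {}
    for condition, rows in split_by_focus(samples, cutoff).items():
        focus = [f for f, _ in rows]
        demand = [c for _, c in rows]
        table[condition] = {
            "focus": summarise(focus),
            "cognitive_demand": summarise(demand),
        }
    return table


if __name__ == "__main__":
    for condition, stats in describe(SAMPLES, cutoff=22.0).items():
        print(condition, stats)
```

High CD and Low CD rows would follow the same pattern, splitting on the second element of each pair instead of the first.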

| Condition | Pearson’s Correlation Score |
|---|---|
| Overall | −0.697 |
| High Focus | −0.695 |
| Low Focus | −0.421 |
| High CD | −0.761 |
| Low CD | −0.686 |
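The scores above are Pearson product-moment correlation coefficients, r = Σ(x − x̄)(y − ȳ) / √(Σ(x − x̄)² · Σ(y − ȳ)²). As an illustrative sketch, assuming paired per-frame Focus (x) and Cognitive Demand (y) scores, r can be computed directly from this definition. The function name `pearson_r` and the sample values are hypothetical and do not reproduce the authors' pipeline or data; on Python 3.10 and later, `statistics.correlation` returns the same coefficient.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's product-moment correlation between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length, with at least two points")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations (the covariance numerator).
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each series.
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a series is constant")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical frame-level scores, not the study's data.
    focus = [12.0, 18.5, 25.0, 40.0, 31.0]
    cognitive_demand = [80.0, 76.0, 60.0, 55.0, 70.0]
    print(round(pearson_r(focus, cognitive_demand), 3))
```

A negative r, as in every row of the table above, means that frames with higher focus tend to coincide with lower cognitive demand.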

| Condition | Pearson’s Correlation Score |
|---|---|
| Overall | −0.07 |
| High Focus | −0.07 |
| Low Focus | 0.04 (not significant) |
| High CD | 0.2 |
| Low CD | −0.19 |
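The table above marks the Low Focus coefficient of 0.04 as not significant. A common way to test whether r differs from zero is the statistic t = r√((n − 2)/(1 − r²)), which follows a t distribution with n − 2 degrees of freedom under the null hypothesis. The sketch below assumes this test (the source does not state which test or sample sizes were used) and, to stay within the standard library, approximates the two-sided p-value with the standard normal distribution, which is reasonable only for large n. The sample sizes and function names are hypothetical.

```python
import math


def r_to_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    if n < 3:
        raise ValueError("need at least three paired observations")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt((n - 2) / (1.0 - r * r))


def approx_two_sided_p(t: float) -> float:
    """Two-sided p-value via a standard-normal approximation (large n only)."""
    # For a standard normal Z, P(|Z| > |t|) = erfc(|t| / sqrt(2)).
    return math.erfc(abs(t) / math.sqrt(2.0))


if __name__ == "__main__":
    # Hypothetical sample sizes; the source does not report n for these correlations.
    for r, n in [(0.04, 500), (-0.19, 500)]:
        t = r_to_t(r, n)
        p = approx_two_sided_p(t)
        print(f"r={r:+.2f}, n={n}: t={t:.2f}, approx p={p:.4f}, significant at 0.05: {p < 0.05}")
```

For small samples, the exact t distribution (for example via a statistics package) should replace the normal approximation.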

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Šola, H.M.; Qureshi, F.H.; Khawaja, S. AI Eye-Tracking Technology: A New Era in Managing Cognitive Loads for Online Learners. Educ. Sci. 2024, 14, 933. https://doi.org/10.3390/educsci14090933
