Abstract

When General Chemistry at West Point switched from interactive lectures to guided inquiry, it provided an opportunity to examine what was expected of students in classrooms and on assessments. Learning objectives and questions on mid-term exams for four semesters of General Chemistry I (two traditional semesters and two guided inquiry semesters) were analyzed by the Cognitive Process and Knowledge dimensions of Bloom’s revised taxonomy. The results of this comparison showed the learning objectives for the guided inquiry semesters had a higher proportion of Conceptual and Understand objectives, with a corresponding decrease in Factual, Procedural, Remember, and Apply objectives. On mid-term exams, the proportion of Remember, Understand, Analyze/Evaluate, Factual, and Conceptual questions increased. We found that learning objectives and mid-term exam questions in guided inquiry semesters are more conceptual than those in traditional semesters, which may help explain how active learning improves equity in introductory chemistry.

1. Introduction

The 2023 ACS (American Chemical Society) Guidelines for Undergraduate Chemistry Programs state that programs should “use effective pedagogies” and “teach their courses in a challenging, engaging, and inclusive manner that helps improve learning for all students”. In fact, the list of “effective pedagogies” includes “inquiry-based learning” [1] (p. 28), and evidence is building that active learning makes courses more equitable [2,3,4,5,6]. Some evidence indicates that equity in STEM courses may be especially problematic [7,8,9], while other evidence reveals that higher-order thinking in the classroom is linked to increases in learning [10,11] or equity [12,13,14]. Furthermore, studies show that what or how students learn is linked to how they are tested [15,16,17,18,19]. Therefore, information on the extent of higher-order thinking in classrooms and assessments is valuable in providing equitable, inclusive learning environments for students.

The United States Military Academy at West Point is an intensive, immersive experience that graduates approximately 1000 lieutenants for the United States Army each year [20]. West Point aspires to have each graduating class reflect the composition of soldiers of the United States, which requires us to admit and graduate students from all 50 states and varied demographics. Teaching effectively and inclusively is essential for us to meet our goals.

Within these goals of efficacy and inclusion, all students are required to complete or validate one semester of general or introductory chemistry. Teaching 500–600 students in General Chemistry I each semester in sections of 16–18 students requires 25–30 sections. The transitory nature of many of our faculty [21] and the large number of sections prompt us to structure General Chemistry I to provide similar content and opportunities across all sections of the course. General Chemistry has common learning objectives for each class meeting, and students take common mid-term and final exams. Generally, learning objectives for the course persist from year to year with minor changes in content and phrasing. Changes to General Chemistry I learning objectives are usually caused by changes in textbooks that use different vocabulary (for example, molar mass instead of molecular weight).

Traditional teaching at West Point emphasizes student preparation before class and practice on learning objectives during class. Students are expected to come to class having read assigned material from the textbook and attempted practice problems. When students have questions based on their work, they note these questions and bring them to the class meeting. Faculty often open class by asking, “What are your questions?” Once students’ questions are answered, they are told to “Take Boards”, and students spend the balance of class working on problems or answering questions on chalkboards around the classroom [20,22].

After a review of the General Chemistry program, the department implemented Process Oriented Guided Inquiry Learning (POGIL) [23,24]. Guided inquiry activities often approach information differently from many traditional textbooks. Having students draw conclusions from data, figures, and text in self-managed teams allowed them to practice leadership, teamwork, and communication skills, all of which are especially relevant to preparing our students to become Army officers. This pedagogical change required assessment, which included investigating how learning objectives and mid-term exams had changed.

Bloom’s revised taxonomy [25] offers a framework to assess the complexity of learning objectives and exam questions. The taxonomy has two dimensions: Cognitive Process and Knowledge. The Cognitive Process dimension organizes learning from simplest to most complex, using categories of Remember, Understand, Apply, Analyze, Evaluate, and Create. The Knowledge dimension sorts learning from concrete to abstract, using the categories Factual, Conceptual, Procedural, and Metacognitive. For a more detailed summary of Bloom’s revised taxonomy, see Asmussen [26].

Previous studies used Bloom’s revised taxonomy to explore assessments in physiology [27], biology [28], and natural sciences [29]. Learning objectives for chemistry in Czechia, Finland, and Turkey were compared to one another [30]. Learning objectives in introductory chemistry were also compared to faculty beliefs and exams [31]. Momsen and coworkers studied learning objectives and exams in undergraduate biology classes, noting that objectives and exam questions were not always at the same Bloom’s level [28]. Undergraduate biology exams were also often shown to be lower level than MCAT questions [32]. Spindler used Bloom’s taxonomy to study modeling projects in a differential equations class [33]. Similar work has been done by researchers who developed alternate frameworks to Bloom’s taxonomy [34,35,36,37,38,39]. To our knowledge, no one has used Bloom’s taxonomy to compare learning objectives and mid-term exam questions in a transition to guided inquiry. We aim here to use both dimensions of Bloom’s revised taxonomy to examine General Chemistry I learning objectives and mid-term exam questions before and during the transition from traditional to guided inquiry teaching.

We posed three research questions:

- How do learning objectives in traditional semesters and guided inquiry semesters compare in the Cognitive Process or Knowledge dimensions of Bloom’s revised taxonomy?

- How do mid-term exam questions in traditional semesters and guided inquiry semesters compare in the Cognitive Process or Knowledge dimensions of Bloom’s revised taxonomy?

- Did Bloom’s revised taxonomy levels for mid-term exam questions correspond to learning objectives?

2. Materials and Methods

The move from traditional teaching methods [22] to guided inquiry provided a natural experiment for comparing learning objectives and mid-term exam questions before and after the transition. The faculty member with primary responsibility for writing General Chemistry I objectives and exams during the transition held this position in Fall 2009, Fall 2014, Fall 2015, and Spring 2016. For this reason, Fall 2009 and Fall 2014 were chosen to represent semesters using traditional teaching. Fall 2015 and Spring 2016 were semesters using guided inquiry.

Three raters, each having significant teaching experience in STEM, coded all learning objectives and mid-term exam questions for the four semesters into subtypes of both the Cognitive Process and Knowledge dimensions of Bloom’s revised taxonomy. When a single learning objective or question contained tasks or concepts from more than one level in the taxonomy, the objective or question was split into separate items for coding. For example, the learning objective “Explain the three mass laws (mass conservation, definite composition, multiple proportions), and calculate the mass percent of an element in a compound” was split into “Explain the three mass laws” and “Calculate the mass percent of an element in a compound”. All learning objectives and coding are available in the Supporting Materials as Excel spreadsheets, Tables S1–S4. Mid-term exam questions and codes are Tables S7–S10.

All three raters compared their codes and discussed them until they came to an agreement at the major type level. That is, coders might disagree on whether an item was 1.1 Recognizing or 1.2 Retrieval, but consensus that an item was Level 1 or Remember in the Cognitive Process dimension was sufficient.
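The agreement rule described above can be illustrated with a minimal sketch. The item names and rater codes below are hypothetical and are not study data; the helper names `major_type` and `needs_discussion` are introduced here only for illustration. The sketch assumes subtype codes are written as strings such as "1.1", where the leading digit identifies the major type in the Cognitive Process dimension.

```python
# Major types of the Cognitive Process dimension, keyed by the leading digit
# of a subtype code such as "1.1" or "2.3".
COGNITIVE_LEVELS = {
    "1": "Remember",
    "2": "Understand",
    "3": "Apply",
    "4": "Analyze",
    "5": "Evaluate",
    "6": "Create",
}


def major_type(subtype_code):
    """Map a subtype code like "1.2" to its major type, e.g. "Remember"."""
    return COGNITIVE_LEVELS[subtype_code.split(".")[0]]


def needs_discussion(rater_codes):
    """Return True when the raters disagree at the major type level."""
    return len({major_type(code) for code in rater_codes}) > 1


# Hypothetical codes from three raters for three items (not study data).
items = {
    "item A": ["1.1", "1.2", "1.1"],  # subtypes differ, major type agrees
    "item B": ["2.3", "4.1", "2.3"],  # Understand vs. Analyze: discuss
    "item C": ["3.1", "3.1", "3.2"],  # subtypes differ, major type agrees
}

to_discuss = [name for name, codes in items.items() if needs_discussion(codes)]
print(to_discuss)
```

Under this rule, only items whose codes span more than one major type would return to the raters for discussion; disagreements confined to subtypes are treated as consensus.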

Codes for similar objectives and exam question topics from different semesters were reviewed for internal consistency. Due to the length of time (six months) required to completely analyze the objectives and exam questions, we looked back at our data to see if our analysis had changed over time. At least ten percent of codes from each major type in both dimensions were reviewed for consistency. When discrepancies were found, the three coders discussed the items again to reach a consensus.

Because the number of learning objectives in traditional semesters was different from the number of learning objectives in guided inquiry semesters, F-tests were done to check that the data sets were comparable. Data were analyzed using Pearson’s χ²-test for more than two categories [40] to identify differences within and between groups, as shown in Table 1. All categories in both dimensions of Bloom’s revised taxonomy were considered during coding. Because no learning objectives or exam questions were coded as Create or Metacognitive, these categories were not considered in the analysis once coding was complete. Analyze and Evaluate were combined so that fewer than 20% of cells in the contingency tables had expected values less than 5 and no expected values were less than 1, meeting the requirements for Pearson’s χ² analysis. Cramer’s V was chosen to measure effect sizes because the contingency tables were larger than 2 × 2 [41]. F-tests and Pearson’s χ² analyses were run in RStudio [42]. Cramer’s V was calculated using Microsoft Excel. Calculations are provided in the Supporting Material as Tables S5, S6, S11, and S12.

Table 1.

Data sets for within-group and between-group comparisons.
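The reason for combining Analyze and Evaluate can be shown with a short sketch. The counts below are hypothetical and are not taken from the study; the rule is read here as applying to expected cell counts, which is the usual form of this requirement and is an assumption about how it was applied. The function names are illustrative only.

```python
def expected_counts(table):
    """Expected cell counts under independence for a list-of-rows table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def meets_expected_count_rule(table):
    """Rule of thumb for Pearson's chi-squared test: fewer than 20% of
    expected counts below 5, and no expected count below 1."""
    cells = [e for row in expected_counts(table) for e in row]
    share_small = sum(e < 5 for e in cells) / len(cells)
    return share_small < 0.20 and min(cells) >= 1


def merge_columns(table, i, j):
    """Return a new table with column j added into column i, then removed."""
    merged = []
    for row in table:
        new_row = list(row)
        new_row[i] += new_row[j]
        del new_row[j]
        merged.append(new_row)
    return merged


# Hypothetical counts (not study data). Columns are Remember, Understand,
# Apply, Analyze, Evaluate; rows are two groups of learning objectives.
counts = [
    [20, 15, 30, 4, 2],
    [10, 25, 22, 5, 3],
]

print(meets_expected_count_rule(counts))    # Analyze and Evaluate separate
combined = merge_columns(counts, 3, 4)      # Analyze/Evaluate merged
print(meets_expected_count_rule(combined))
```

With these illustrative counts, the sparse Analyze and Evaluate columns fail the rule when kept separate and pass once merged, which is the situation the combined Analyze/Evaluate category is meant to address.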

Course averages and aggregated grades for the four semesters under investigation were also collected. Two-tailed t-tests were done to identify statistical differences between semesters. Enrollment in Fall 2015 was lower than in previous years because West Point began offering General Chemistry I in both fall and spring that year. Grades for Fall 2015 and Spring 2016 were therefore combined to facilitate comparison to Fall 2009 and Fall 2014.

This study was a subset of a larger project conducted under protocol approval number CA17-016-17 through West Point’s Collaborative Academic Institutional Review Board. This was a natural experiment rather than an intervention. No personally identifiable information was collected.

3. Results

3.1. Qualitative Comparison among Learning Objectives

The two dimensions of Bloom’s revised taxonomy, Cognitive Process and Knowledge, were used to categorize learning objectives from both traditional and guided inquiry introductory chemistry courses. To illustrate the categories for different learning objectives, a few coded examples are provided, first for the Cognitive Process dimension and second for the Knowledge dimension.

3.1.1. Cognitive Process Dimension

The Cognitive Process dimension has levels termed Remember, Understand, Apply, Analyze, Evaluate, and Create. As an example of Remember, consider the learning objective: “Identify the defining features of the states of matter”. The Remember learning objectives involve locating knowledge in long-term memory that is consistent with presented material. The Fall 2014 learning objective: “Distinguish between physical and chemical properties and changes”, is an example of the Understand Cognitive Process dimension because it involves constructing meaning from classification. The Apply Cognitive Process dimension is illustrated in the learning objective: “Use the formula of a compound to predict the number of moles and concentration of ions in solution”, as it represents applying a procedure to a familiar task. For the Cognitive Process dimension Analyze, the learning objective: “Compare the relative strengths of intermolecular forces (IMFs) and identify which IMF is predominant in a given substance” is a relevant example because it involves determining how elements fit or function within a structure. As an example of Evaluate, the learning objective: “Use formal charges to select the most important resonance structure of a molecule or ion”, illustrates a judgment based on imposed criteria or standards. As mentioned previously, no learning objectives were coded to belong to the Create category.

3.1.2. Knowledge Dimension

The same learning objectives can be used to illustrate the Knowledge dimension, which sorts learning from concrete to abstract, using the categories Factual, Conceptual, Procedural, and Metacognitive. In this study, no learning objectives were identified in the Metacognitive category, so results are provided for the first three categories. With respect to the Factual category, the learning objective “Identify the defining features of the states of matter” illustrates knowledge of specific details and elements. The learning objective, “Distinguish between physical and chemical properties and changes”, is an example of the Conceptual category because it involves knowledge of classifications and categories. For the Procedural category, the learning objective of “Use the formula of a compound to predict the number of moles and concentration of ions in solution” is an example because it involves knowledge of subject-specific skills and algorithms.

3.2. Quantitative Comparisons for Learning Objectives

General Chemistry I learning objectives for traditional and inquiry teaching were coded for both the Cognitive Process and Knowledge dimensions of Bloom’s revised taxonomy. Two cases were examined: a comparison of Fall 2009 and Fall 2014 (for traditional) and a comparison of Fall 2015 and Spring 2016 (for guided inquiry). Statistical tests performed for within-group comparisons were the F-test, Pearson’s χ²-test, and Cramer’s V.

Statistical comparison of the category assignments between the traditional semesters’ learning objectives can be seen in Table 2. After performing an F-test and a χ²-test, no significant difference was found for either the Cognitive Process or Knowledge dimensions between the traditional semesters.

Table 2.

Statistical comparison of Cognitive Process and Knowledge dimensions for learning objectives between the traditional semesters.

The learning objectives for the Fall 2015 and Spring 2016 semesters were essentially unchanged, with only minor word editing. No statistical tests were done to compare these two sets of objectives.

Table 3 shows the statistical comparison of learning objectives between traditional and inquiry semesters. After performing an F-test, the χ² (and p values) for the Cognitive Process and Knowledge dimensions were found to be 28.263 (3.199 × 10⁻⁶) and 12.794 (0.001667), respectively, indicating that there was a significant change between traditional and inquiry semesters. Furthermore, Cramer’s V calculations for both the Cognitive Process and Knowledge dimensions revealed a medium effect size. The combination of these results indicates that any difference should be largely attributed to the difference between traditional and inquiry teaching.

Table 3.

Statistical comparison of Cognitive Process and Knowledge dimensions between learning objectives of traditional and inquiry semesters.
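As a check on how the reported p values follow from the reported χ² statistics, the sketch below evaluates the upper tail of the χ² distribution in closed form. It assumes the degrees of freedom are (2 groups − 1) × (number of categories − 1), that is, 3 for the Cognitive Process dimension (Remember, Understand, Apply, Analyze/Evaluate) and 2 for the Knowledge dimension (Factual, Conceptual, Procedural). The function name `chi2_sf` is illustrative, and the closed forms cover only these two cases.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of the chi-squared distribution.

    Closed forms are used, so only df = 2 and df = 3 are supported.
    """
    if df == 2:
        return math.exp(-x / 2)
    if df == 3:
        return (math.erfc(math.sqrt(x / 2))
                + math.sqrt(2 * x / math.pi) * math.exp(-x / 2))
    raise ValueError("only df = 2 or df = 3 are implemented in this sketch")


# Assumed degrees of freedom: (2 groups - 1) * (categories - 1).
p_cognitive = chi2_sf(28.263, df=3)  # four Cognitive Process categories
p_knowledge = chi2_sf(12.794, df=2)  # three Knowledge categories

print(f"Cognitive Process: p = {p_cognitive:.3e}")
print(f"Knowledge:         p = {p_knowledge:.6f}")
```

Under these assumed degrees of freedom, the computed tail probabilities should agree closely with the p values quoted in the text.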

Further comparison of the traditional and inquiry learning objectives was performed by examining the number of assigned learning objectives of each dimension’s category relative to the total number of learning objectives in each teaching style. For the Knowledge dimension, Table 4 illustrates the distribution of learning objectives belonging to the categories Factual, Conceptual, and Procedural, with respect to both the traditional and inquiry General Chemistry I courses. From this distribution, it is apparent that the greatest percentage of learning objectives belonging to the Knowledge dimension are Conceptual for the inquiry-based class of introductory chemistry (43.9%), while for the traditional introductory chemistry course, the greatest percentage is Procedural (48.6%).

Table 4.

Assignment of learning objectives of traditional and inquiry lessons based on Knowledge dimensions of Bloom’s revised taxonomy.

In a similar fashion, Table 5 contrasts the statistical distribution of learning objectives among traditional and inquiry-based introductory chemistry courses with respect to the Cognitive Process. Here, we see that the greatest percentage of learning objectives for the inquiry-based introductory chemistry course is Understand (41.5%), while that of the traditional is Apply (43.7%). Interestingly, the proportion of learning objectives that belonged to Remember was cut in half with the change from traditional to inquiry teaching, 30.3% to 14.6%, while Understand and Analyze/Evaluate were approximately doubled, 21.8% to 41.5% and 4.2% to 8.5%, respectively.

Table 5.

Assignment of learning objectives of traditional and inquiry lessons based on Cognitive Process dimensions of Bloom’s revised taxonomy.
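The shifts described for the Cognitive Process dimension can be restated as percentage-point changes and ratios. The sketch below uses only the percentages reported in this paper; the inquiry value for Apply (35.4%) is the remainder to 100% and matches the figure quoted in the Discussion.

```python
# Percentages of learning objectives in each Cognitive Process category,
# as reported in the text for traditional and inquiry semesters.
traditional = {"Remember": 30.3, "Understand": 21.8,
               "Apply": 43.7, "Analyze/Evaluate": 4.2}
inquiry = {"Remember": 14.6, "Understand": 41.5,
           "Apply": 35.4, "Analyze/Evaluate": 8.5}

changes = {}
for category in traditional:
    before, after = traditional[category], inquiry[category]
    changes[category] = {
        "point_change": round(after - before, 1),  # percentage points
        "ratio": round(after / before, 2),         # inquiry / traditional
    }
    print(f"{category:17s} {before:5.1f}% -> {after:5.1f}%  "
          f"({after - before:+.1f} points, x{after / before:.2f})")
```

A ratio near 0.5 for Remember and ratios near 2 for Understand and Analyze/Evaluate correspond to the halving and approximate doubling noted above.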

3.3. Quantitative Comparison among Assigned Exam Points

Assigning specific Bloom’s revised taxonomy categories to exam questions was used to compare assessments in traditional and inquiry semesters. Exam questions were weighted by points. Because topics were covered in a different order in traditional and guided inquiry semesters, data were pooled for all three mid-term exams for each semester. Copies of the exams are provided in the Supporting Material as Figures S1–S4. Tables S7–S10 show coding for the individual exams. Unlike the learning objectives, which differed in total number, each semester had three mid-term exams worth 200 points each. The total number of points was the same across traditional and inquiry semesters. Therefore, there was no need to perform an F-test to show variance across different semesters.

Statistical comparison of exam points category assignments on traditional exams can be seen in Table 6. The χ² (and p values) for the Cognitive Process and Knowledge dimensions were found to be 43.060 (2.389 × 10⁻⁹) and 12.719 (0.00173), respectively. These values for both the Cognitive Process and Knowledge dimensions indicate that the exams had slightly different distributions of questions, even though the exams were written by the same person. Although the difference between the traditional exams is significant, the Cramer’s V test found that the effect sizes for both the Cognitive Process and Knowledge dimensions were small.

Table 6.

Statistical comparison of Cognitive Process and Knowledge dimensions for mid-term exam questions, weighted by points, between the traditional semesters.

Statistical comparison of the category assignments of exam points on the inquiry exams can be seen in Table 7. The χ² (and p values) for the Cognitive Process and Knowledge dimensions were 24.808 (1.694 × 10⁻⁵) and 2.443 (0.2948), respectively. From these results, the Cognitive Process dimension was found to be different between inquiry exams, but the Knowledge dimension was not. A Cramer’s V test performed on the Cognitive Process dimension showed the effect size was small.

Table 7.

Statistical comparison of Cognitive Process and Knowledge dimensions for mid-term exam questions, weighted by points, between the inquiry semesters.

Statistical comparison of the category assignments of exam points between traditional and inquiry exams can be seen in Table 8. The χ² (and p values) for the Cognitive Process and Knowledge dimensions were found to be 152.28 (<2.2 × 10⁻¹⁶) and 219.120 (<2.2 × 10⁻¹⁶), respectively. These results show that there was a significant difference between the traditional and inquiry exams. Additionally, when compared to the differences within each pedagogy, traditional vs. traditional and inquiry vs. inquiry, the difference between traditional and inquiry exams was much larger. The Cramer’s V test also found the effect size was much larger for the comparison between traditional and inquiry exams than for either individual teaching style comparison.

Further comparison of the traditional and inquiry-based exams was performed by examining the point distribution of each dimension category relative to the total number of points on all exams. Table 9 shows a breakdown of the assigned points for each type of exam question with respect to the Knowledge dimension. The traditional semesters had a much higher percentage of points assigned to Procedural questions, 64.2%, compared to the inquiry-based exams, 35.8%. While the inquiry-based exams had the highest percentage of points assigned to Conceptual questions, 43.8%, the traditional exams had only 18.8%. The percentage of points assigned to Factual questions was similar between the traditional and inquiry-based exams, with 17.0% and 20.4%, respectively.

Table 8.

Statistical comparison of Cognitive Process and Knowledge dimensions between mid-term exam points of traditional and inquiry semesters.

Table 9.

Assignment of points to questions of traditional and inquiry mid-term exams based on the Knowledge dimension of Bloom’s revised taxonomy. Each semester had three mid-term exams worth 200 points each.

Table 10 shows a breakdown of the assigned points for each type of exam with respect to the Cognitive Process dimension. The traditional exams had a higher percentage of points assigned to Apply questions (60%), compared to the inquiry-based exams (36%). However, the inquiry-based exams had a more even distribution of points to questions of all Cognitive Process dimension categories compared to the traditional exams. Of the four categories that had questions in each type, Remember, Understand, Apply, and Analyze/Evaluate, the traditional exams had a percentage breakdown of 16.5%, 19.0%, 60.0%, and 4.5%, respectively. For the same four categories, the inquiry exams had a percentage breakdown of 22.2%, 32.7%, 35.7%, and 9.4%, respectively.

Table 10.

Assignment of points to questions of traditional and inquiry mid-term exams based on the Cognitive Process dimension of Bloom’s revised taxonomy. Each semester had three mid-term exams worth 200 points each.
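The between-style comparison can be approximated from the percentages reported above. The sketch below is not the authors' calculation (those are in Tables S11 and S12); it assumes the contingency tables were built from exam points, so that each teaching style contributes 1200 points (two semesters × three exams × 200 points), and it reconstructs point totals from rounded percentages. The function names are illustrative.

```python
import math


def chi_squared(table):
    """Pearson's chi-squared statistic and total count for a list-of-rows table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for row, r_total in zip(table, row_totals):
        for observed, c_total in zip(row, col_totals):
            expected = r_total * c_total / n
            stat += (observed - expected) ** 2 / expected
    return stat, n


def cramers_v(stat, n, n_rows, n_cols):
    """Cramer's V effect size for an n_rows x n_cols contingency table."""
    return math.sqrt(stat / (n * min(n_rows - 1, n_cols - 1)))


POINTS = 1200  # assumed: two semesters x three exams x 200 points per style


def to_points(percentages):
    """Convert reported percentages into approximate point totals."""
    return [p / 100 * POINTS for p in percentages]


# Rows: traditional, inquiry. Percentages are those reported in the text.
knowledge = [to_points([17.0, 18.8, 64.2]),        # Factual, Conceptual, Procedural
             to_points([20.4, 43.8, 35.8])]
cognitive = [to_points([16.5, 19.0, 60.0, 4.5]),   # Remember, Understand, Apply,
             to_points([22.2, 32.7, 35.7, 9.4])]   # Analyze/Evaluate

for name, table in (("Knowledge", knowledge), ("Cognitive Process", cognitive)):
    stat, n = chi_squared(table)
    v = cramers_v(stat, n, len(table), len(table[0]))
    print(f"{name}: chi-squared ~ {stat:.1f}, Cramer's V ~ {v:.2f}")
```

Because the inputs are rounded percentages, the statistics land within a few units of the reported 219.120 and 152.28 rather than matching them exactly; the corresponding Cramer’s V values fall between roughly 0.25 and 0.30 under these assumptions.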

3.4. Comparison of Course Grades

Average course grades and grade distributions were compared for each of the semesters of this study, shown in Table 11. The course average for Fall 2009, 84.9%, was slightly higher than the other three semesters; however, t-tests found no significant differences between semesters. The Fall 2014, Fall 2015, and Spring 2016 course averages were 81.9%, 82.0%, and 80.8%, respectively. After combining Fall 2015 and Spring 2016 course data to account for enrollment changes, the difference in course averages between Fall 2014 and the combined Fall 2015 and Spring 2016 semesters is less than half a percent.

Table 11.

Course averages with standard deviations and enrollment for each of the four semesters in this study.

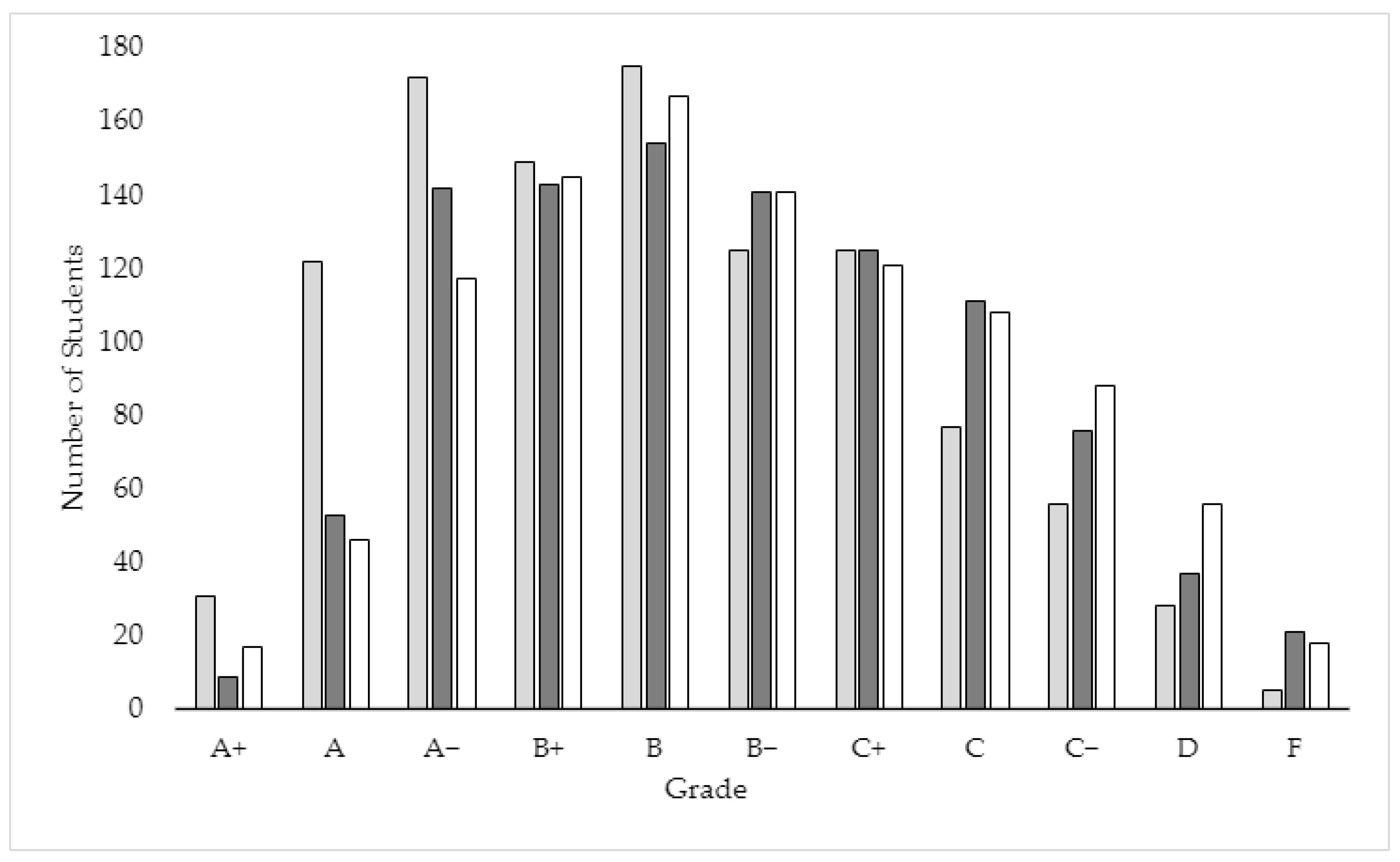

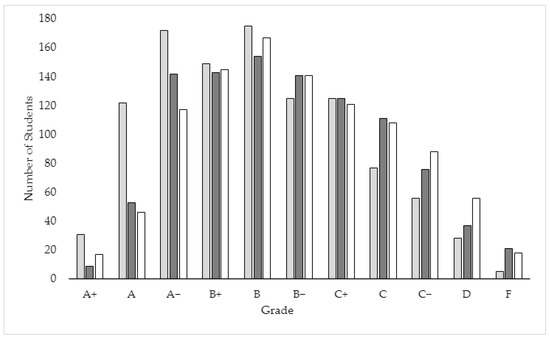

Further breakdown of the course grades by letter in a histogram can be seen in Figure 1. The higher average of the Fall 2009 semester may be related to a larger portion of A grades. However, a comparison of the grades from the Fall 2014 semester to the combined scores of the Fall 2015 and Spring 2016 semesters showed a similar distribution.

Figure 1.

Histogram of grades for Fall 2009 (light gray), Fall 2014 (dark gray), and Fall 2015/Spring 2016 (white). Fall 2015 and Spring 2016 data were aggregated to facilitate comparison to earlier semesters.
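The combination of two semesters and the comparison with an earlier semester can be sketched from summary statistics. Only the course averages below come from the text; the standard deviations and enrollments are hypothetical placeholders rather than the values in Table 11, so the output illustrates the method and does not reproduce the reported tests. Welch's t statistic and a normal approximation to the p value are used here as assumptions, since the specific t-test variant is not stated.

```python
import math


def combine(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Pool two groups' summary statistics into one mean, SD, and size."""
    n = n_a + n_b
    mean = (n_a * mean_a + n_b * mean_b) / n
    # Total sum of squares = within-group part + between-group part.
    ss = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
          + n_a * (mean_a - mean) ** 2 + n_b * (mean_b - mean) ** 2)
    return mean, math.sqrt(ss / (n - 1)), n


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic for two independent groups."""
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_a - mean_b) / se


def two_tailed_p_normal(t):
    """Two-tailed p value using the normal approximation (large samples)."""
    return math.erfc(abs(t) / math.sqrt(2))


# (mean, SD, enrollment). Means are from the text; SDs and enrollments
# are hypothetical placeholders chosen only for illustration.
fall_2014 = (81.9, 8.0, 550)
fall_2015 = (82.0, 8.0, 300)
spring_2016 = (80.8, 8.0, 250)

guided = combine(*fall_2015, *spring_2016)
t = welch_t(*fall_2014, *guided)
p = two_tailed_p_normal(t)
print(f"combined mean = {guided[0]:.2f}, t = {t:.2f}, p ~ {p:.2f}")
```

Whatever the actual enrollments, the combined average must lie between the two semester averages (80.8% and 82.0%), since it is an enrollment-weighted mean.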

4. Discussion

We set out to answer three specific research questions. We now summarize our findings.

4.1. How Do Learning Objectives in Traditional Semesters and Guided Inquiry Semesters Compare in the Cognitive Process or Knowledge Dimensions of Bloom’s Revised Taxonomy?

The statistical comparison of learning objectives in traditional and inquiry semesters seen in Table 3 shows significant results and a corresponding medium effect. The proportion of learning objectives classified according to Cognitive Process and Knowledge categories in the inquiry semesters illustrates the preponderance of objectives belonging to Conceptual (43.9%) and Understand (41.5%). This is in stark contrast to the traditional course, which revealed a greater proportion of learning objectives belonging to Procedural (48.6%) and Apply (43.7%). While there is a concern among educators that a dominance of Conceptual and Understand categories will arise at the expense of problem-solving ability, this does not seem to be the case for the inquiry-based semesters as a substantial percentage of learning objectives still belong to Procedural (40.2%) and Apply (35.4%).

These changes are even more surprising since, during the transition to guided inquiry, the course director made the deliberate choice to retain the content and phrasing of learning objectives as much as possible (personal communication). Partly, this was motivated by simplicity; fewer changes streamlined the overall process. A second motivation was an “if it ain’t broke, don’t fix it” philosophy. Despite these efforts to minimize changes, the data suggest that learning objectives in traditional and guided-inquiry semesters were at different levels in both the Cognitive Process and Knowledge dimensions.

4.2. How Do Mid-Term Exam Questions in Traditional Semesters and Guided Inquiry Semesters Compare in the Cognitive Process or Knowledge Dimensions of Bloom’s Revised Taxonomy?

During traditional semesters, the Apply and Procedural exam questions comprised a large portion of the points on the exams, 60.3% and 64.2%, respectively. After adopting guided inquiry, the Apply and Procedural exam questions both decreased to ~35% of the total points. The remaining Cognitive Process and Knowledge dimension categories all increased their share of points, from about 3 percentage points for Factual (17.0% to 20.4%) to 25 percentage points for Conceptual (18.8% to 43.8%), the largest increase.

The proportions of Remember and Factual questions were similar for both traditional and guided inquiry semesters. This is likely attributable to concerns that free-response questions might be long or difficult to grade. The exam author made a deliberate choice to include a page of easy-to-grade questions (fill-in-the-blank or multiple choice, often Remember and/or Factual) to allow more time to grade free response questions asking students to sketch or provide rationale for Understand or Conceptual questions.

4.3. Did Bloom’s Revised Taxonomy Levels for Exam Questions Correspond to Learning Objectives?

Comparison of the Knowledge dimension for the traditional semesters shows an emphasis on Procedural learning objectives (Table 4, 48.6%) and exams (Table 9, 64.2%). Factual and Conceptual remained relatively equal in the proportions of learning objectives and exam points.

For the Knowledge dimension in inquiry semesters, learning objectives and exam points placed nearly identical emphasis on Conceptual (~44%) items. There was a slight increase (~5%) in Factual questions on exams compared to the learning objectives. The increased proportion of Factual questions over Procedural can be attributed to the deliberate choice to include a page of easy-to-grade questions.

In the Cognitive Process dimension, learning objectives (Table 5) and exam questions (Table 10) for the traditional semesters emphasized Apply items. Both Remember and Understand decreased in proportion going from learning objectives to exam points, with Remember having the larger decrease (30.3% to 16.3%). Analyze/Evaluate remained constant for learning objectives and exam questions.

For the inquiry semesters’ learning objectives, Understand (41.5%) was emphasized the most, followed by Apply (35.4%), Remember (14.6%), and then Analyze/Evaluate (8.5%). The exam questions favored Apply (35.7%), then Understand (32.7%), Remember (22.2%), and Analyze/Evaluate (9.4%). The increased emphasis on Remember questions on the exams, similar to Factual for the Knowledge dimension, was an effect of the deliberate choice to include a page of easy-to-grade questions.

4.4. Comparison of Grades

In addition to answering the above research questions, a comparison of average grades and grade distributions from the studied semesters found small fluctuations between the assigned grades regardless of each semester’s teaching approach. Therefore, despite having more conceptual learning objectives and mid-term exam questions in Fall 2015 and Spring 2016, asking more complex questions did not reduce course grades or hinder below-average students, contrary to faculty expectations [43,44,45].

4.5. Future Work

Analyzing objectives and exams from more recent semesters could identify trends in Bloom’s levels over time. Future work could also include analyzing learning objectives and mid-term exam questions using other frameworks [34,35,36,37,38,39]. 3D-LAP’s [35] focus on science and engineering practices, crosscutting concepts, and disciplinary core ideas might yield different results from a framework organized around definitions, concepts, and algorithms [37]. Conducting a similar analysis using Fink’s Taxonomy of Significant Learning [46] might also yield valuable insights.

4.6. Limitations

The Cognitive Process and Knowledge dimensions of Bloom’s revised taxonomy are not totally independent of each other. Remembering Factual knowledge was more common than remembering other types of knowledge in an analysis of 940 biology assessment items [47]. Similarly, Apply and Procedural tended to occur together. Also, the taxonomy provides a list of action verbs that can indicate the level of Cognitive Process. These can be a good starting place, but they are not sufficient [47]. For example, “distinguish” is listed under 4.1 Differentiating, a subtype of Analyze, but “distinguish metals from nonmetals” is 2.3 Classifying, a subtype of Understand. Properly assigning each item to an appropriate category requires considering how the objective or exam question is implemented in teaching and learning.

Learning objectives or exam questions categorized by Bloom’s revised taxonomy only provide opportunities for thinking at that level. Students may have chosen to work at a lower level. For example, objectives or exam questions intended to be higher level might be answered by recalling factual information [24] (p. 5). It is also possible that instructors taught at a lower level, perhaps inadvertently [48]. In particular, calculations that are Apply and Procedural often do not require Conceptual Understanding [49,50,51].

These findings may apply only to this context. Further work at other universities would be required to generalize these findings. Guided inquiry’s emphasis on analyzing information may be especially suited to supporting higher levels of Bloom’s taxonomy. Other active learning strategies, such as think-pair-share or jigsaw, may not show changes in Bloom’s levels.

5. Conclusions

Using Bloom’s revised taxonomy, we coded and analyzed learning objectives and general chemistry exams for a traditional and a guided-inquiry class over four different semesters (Fall 2009, Fall 2014, Fall 2015, and Spring 2016). Analysis of learning objectives for the guided-inquiry semesters (Fall 2015 and Spring 2016) revealed more Understand, Analyze/Evaluate, and Conceptual objectives, with significantly fewer Remember and Factual objectives.

In addition to the analysis of learning objectives, similar coding and statistical analyses were performed for exams. These data show more Understand, Analyze, and Conceptual questions, with fewer Procedural and Apply questions. Small increases in Remember and Factual reflected a choice to facilitate grading. Despite this, guided inquiry semesters generally gave students many opportunities to consider complex questions. Several studies indicate that faculty choose lower-level assessments from concerns that only a few students could successfully reason at higher levels [43,44,45]. This approach may be counterproductive, as more conceptual, less algorithmic classes have been shown to be more equitable [4,16].

All semesters showed some correspondence between exam questions and learning objectives within Bloom’s revised taxonomy. Learning objectives and mid-term exam questions in traditional semesters had similar proportions of Factual and Conceptual items, but Procedural questions on mid-term exams were overrepresented relative to learning objectives. Guided inquiry semesters showed strong correspondence between Bloom’s taxonomy levels in learning objectives and mid-term exam questions. The closer alignment of learning objectives and mid-term exam questions in the guided inquiry semesters is consistent with a literature recommendation for equitable teaching [52].
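One simple way to make this kind of correspondence concrete is to compare the proportion of items in each category between learning objectives and exam questions. The Python sketch below does this with hypothetical counts (not the study’s data) and summarizes the mismatch with the total variation distance, which is an illustrative choice here and not a measure the study reports. It assumes both sets of counts use the same categories.

```python
def proportions(counts):
    """Convert a dict of category counts into a dict of proportions."""
    total = sum(counts.values())
    return {category: value / total for category, value in counts.items()}


def alignment_gaps(objectives, exam_questions):
    """Exam proportion minus objective proportion for each category.

    A positive gap means the category is overrepresented on exams
    relative to the learning objectives.
    """
    p_objectives = proportions(objectives)
    p_exams = proportions(exam_questions)
    return {c: p_exams[c] - p_objectives[c] for c in objectives}


def total_variation(gaps):
    """Half the sum of absolute gaps: 0 means identical distributions."""
    return 0.5 * sum(abs(gap) for gap in gaps.values())


# HYPOTHETICAL counts for one course type (not the study's data).
objectives = {"Factual": 20, "Conceptual": 50, "Procedural": 30}
exam_questions = {"Factual": 10, "Conceptual": 20, "Procedural": 20}

gaps = alignment_gaps(objectives, exam_questions)
distance = total_variation(gaps)

for category, gap in gaps.items():
    print(f"{category}: {gap:+.2f}")
print(f"total variation distance = {distance:.2f}")
```

In this made-up example, Procedural items are overrepresented on exams relative to objectives, which mirrors the pattern described for the traditional semesters only qualitatively.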

Guided inquiry has the capacity to enrich complexity and enhance learning. Making learning accessible to a wide variety of learners is paramount in any classroom, yet especially important for introductory STEM courses in which problems of retention and persistence are all too common [7,8,9,53]. The environment of the guided inquiry classroom promotes and encourages participation from all learners, regardless of course prerequisites and prior STEM experiences, thus making it an environment of equity, belonging, and inclusivity. Guided inquiry then becomes the catalyst to tailor learning objectives and to assess them in a way that ensures mastery of concepts at a deeper level.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/educsci14090943/s1, Figure S1: Fall 2009 Mid-Term Exams Solutions; Figure S2: Fall 2014 Mid-Term Exams Solutions; Figure S3: Fall 2015 Mid-Term Exams Solutions; Figure S4: Spring 2016 Mid-Term Exams Solutions; Table S1: 2009 Learning Objectives Coded; Table S2: 2014 Learning Objectives Coded; Table S3: 2015 Learning Objectives Coded; Table S4: 2016 Learning Objectives Coded; Table S5: Analysis of Cognitive Learning Objectives; Table S6: Analysis of Knowledge Learning Objectives; Table S7: 2009 Exam Questions Coded; Table S8: 2014 Exam Questions Coded; Table S9: 2015 Exam Questions Coded; Table S10: 2016 Exam Questions Coded; Table S11: Analysis of Cognitive Dimension Mid-Term Exams; Table S12: Analysis of Knowledge Dimension Mid-Term Exams.

Author Contributions

Conceptualization, E.M.K.; methodology, E.M.K., C.K. and K.J.M.; formal analysis, E.M.K., C.K. and K.J.M.; investigation, E.M.K., C.K. and K.J.M.; resources, E.M.K.; data curation, E.M.K., C.K. and K.J.M.; writing—original draft preparation, E.M.K., C.K. and K.J.M.; writing—review and editing, E.M.K., C.K. and K.J.M.; visualization, E.M.K., C.K. and K.J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was a subset of a larger project conducted under protocol approval number CA17-016-17 through West Point’s Collaborative Academic Institutional Review Board. This was a natural experiment rather than an intervention. No personally identifiable information was collected.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Acknowledgments

We thank the reviewers and the academic editor for their thorough reading of this manuscript and for helpful suggestions to improve it.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Committee on Professional Training. 2023 ACS Guidelines for Undergraduate Chemistry Programs; American Chemical Society: Washington, DC, USA, 2023; p. 32. Available online: https://www.acs.org/education/policies/acs-approval-program.html (accessed on 22 May 2024).

- Theobald, E.J.; Hill, M.J.; Tran, E.; Agrawal, S.; Arroyo, E.N.; Behling, S.; Chambwe, N.; Cintrón, D.L.; Cooper, J.D.; Dunster, G.; et al. Active Learning Narrows Achievement Gaps for Underrepresented Students in Undergraduate Science, Technology, Engineering, and Math. Proc. Natl. Acad. Sci. USA 2020, 117, 6476–6483.

- Casey, J.R.; Supriya, K.; Shaked, S.; Caram, J.R.; Russell, A.; Courey, A.J. Participation in a High-Structure General Chemistry Course Increases Student Sense of Belonging and Persistence to Organic Chemistry. J. Chem. Educ. 2023, 100, 2860–2872.

- Lin, Q.; Kirsch, P.; Turner, R. Numeric and Conceptual Understanding of General Chemistry at a Minority Institution. J. Chem. Educ. 1996, 73, 1003.

- Haak, D.C.; HilleRisLambers, J.; Pitre, E.; Freeman, S. Increased Structure and Active Learning Reduce the Achievement Gap in Introductory Biology. Science 2011, 332, 1213–1216.

- Eddy, S.L.; Hogan, K.A. Getting under the Hood: How and for Whom Does Increasing Course Structure Work. CBE—Life Sci. Educ. 2014, 13, 453–468.

- Harris, R.B.; Mack, M.R.; Bryant, J.; Theobald, E.J.; Freeman, S. Reducing Achievement Gaps in Undergraduate General Chemistry Could Lift Underrepresented Students into a “Hyperpersistent Zone”. Sci. Adv. 2020, 6, eaaz5687.

- Riegle-Crumb, C.; King, B.; Irizarry, Y. Does STEM Stand Out? Examining Racial/Ethnic Gaps in Persistence Across Postsecondary Fields. Educ. Res. 2019, 48, 133–144.

- Hatfield, N.; Brown, N.; Topaz, C.M. Do Introductory Courses Disproportionately Drive Minoritized Students out of STEM Pathways? PNAS Nexus 2022, 1, pgac167.

- Agarwal, P.K. Retrieval Practice & Bloom’s Taxonomy: Do Students Need Fact Knowledge before Higher Order Learning? J. Educ. Psychol. 2019, 111, 189–209.

- Barikmo, K.R. Deep Learning Requires Effective Questions During Instruction. Kappa Delta Pi Rec. 2021, 57, 126–131.

- Clark, T.M. Narrowing Achievement Gaps in General Chemistry Courses with and without In-Class Active Learning. J. Chem. Educ. 2023, 100, 1494–1504.

- Schwarz, C.E.; DeGlopper, K.S.; Esselman, B.J.; Stowe, R.L. Tweaking Instructional Practices Was Not the Answer: How Increasing the Interactivity of a Model-Centered Organic Chemistry Course Affected Student Outcomes. J. Chem. Educ. 2024, 101, 2215–2230.

- Ralph, V.R.; Scharlott, L.J.; Schwarz, C.E.; Becker, N.M.; Stowe, R.L. Beyond Instructional Practices: Characterizing Learning Environments That Support Students in Explaining Chemical Phenomena. J. Res. Sci. Teach. 2022, 59, 841–875.

- Crooks, T.J. The Impact of Classroom Evaluation Practices on Students. Rev. Educ. Res. 1988, 58, 438–481.

- Ralph, V.R.; Scharlott, L.J.; Schafer, A.G.L.; Deshaye, M.Y.; Becker, N.M.; Stowe, R.L. Advancing Equity in STEM: The Impact Assessment Design Has on Who Succeeds in Undergraduate Introductory Chemistry. JACS Au 2022, 2, 1869–1880.

- Scouller, K. The Influence of Assessment Method on Students’ Learning Approaches: Multiple Choice Question Examination versus Assignment Essay. High. Educ. 1998, 35, 453–472.

- Stowe, R.L.; Scharlott, L.J.; Ralph, V.R.; Becker, N.M.; Cooper, M.M. You Are What You Assess: The Case for Emphasizing Chemistry on Chemistry Assessments. J. Chem. Educ. 2021, 98, 2490–2495.

- Stowe, R.L.; Cooper, M.M. Assessment in Chemistry Education. Isr. J. Chem. 2019, 59, 598–607.

- United States Military Academy West Point Web Page. 2024. Available online: https://www.westpoint.edu/ (accessed on 22 May 2024).

- Koleci, C.; Kowalski, E.M.; McDonald, K.J. The STEM Faculty Experience at West Point. J. Coll. Sci. Teach. 2022, 51, 29–34.

- Ertwine, D.R.; Palladino, G.F. The Thayer Concept vs. Lecture: An Alternative to PSI. J. Coll. Sci. Teach. 1987, 16, 524–528.

- Farrell, J.J.; Moog, R.S.; Spencer, J.N. A Guided-Inquiry General Chemistry Course. J. Chem. Educ. 1999, 76, 570.

- Simonson, S.R. (Ed.) POGIL: An Introduction to Process Oriented Guided Inquiry Learning for Those Who Wish to Empower Learners, 1st ed.; Stylus: Sterling, VA, USA, 2019.

- Bloom, B.S. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives, Complete ed.; Anderson, L.W., Krathwohl, D.R., Eds.; Longman: New York, NY, USA, 2001.

- Asmussen, G.; Rodemer, M.; Bernholt, S. Blooming Student Difficulties in Dealing with Organic Reaction Mechanisms—An Attempt at Systemization. Chem. Educ. Res. Pract. 2023, 24, 1035–1054.

- Casagrand, J.; Semsar, K. Redesigning a Course to Help Students Achieve Higher-Order Cognitive Thinking Skills: From Goals and Mechanics to Student Outcomes. Adv. Physiol. Educ. 2017, 41, 194–202.

- Momsen, J.L.; Long, T.M.; Wyse, S.A.; Ebert-May, D. Just the Facts? Introductory Undergraduate Biology Courses Focus on Low-Level Cognitive Skills. Life Sci. Educ. 2010, 9, 435–440.

- Momsen, J.; Offerdahl, E.; Kryjevskaia, M.; Montplaisir, L.; Anderson, E.; Grosz, N. Using Assessments to Investigate and Compare the Nature of Learning in Undergraduate Science Courses. Life Sci. Educ. 2013, 12, 239–249.

- Elmas, R.; Rusek, M.; Lindell, A.; Nieminen, P.; Kasapoğlu, K.; Bílek, M. The Intellectual Demands of the Intended Chemistry Curriculum in Czechia, Finland, and Turkey: A Comparative Analysis Based on the Revised Bloom’s Taxonomy. Chem. Educ. Res. Pract. 2020, 21, 839–851.

- Sanabria-Ríos, D.; Bretz, S.L. Investigating the Relationship between Faculty Cognitive Expectations about Learning Chemistry and the Construction of Exam Questions. Chem. Educ. Res. Pract. 2010, 11, 212–217.

- Zheng, A.Y.; Lawhorn, J.K.; Lumley, T.; Freeman, S. Application of Bloom’s Taxonomy Debunks the “MCAT Myth”. Science 2008, 319, 414–415.

- Spindler, R. Aligning Modeling Projects with Bloom’s Taxonomy. Probl. Resour. Issues Math. Undergrad. Stud. 2020, 30, 601–616.

- Mac an Bhaird, C.; Nolan, B.C.; O’Shea, A.; Pfeiffer, K. A Study of Creative Reasoning Opportunities in Assessments in Undergraduate Calculus Courses. Res. Math. Educ. 2017, 19, 147–162.

- Laverty, J.T.; Underwood, S.M.; Matz, R.L.; Posey, L.A.; Carmel, J.H.; Caballero, M.D.; Fata-Hartley, C.L.; Ebert-May, D.; Jardeleza, S.E.; Cooper, M.M. Characterizing College Science Assessments: The Three-Dimensional Learning Assessment Protocol. PLoS ONE 2016, 11, e0162333.

- Reed, Z.; Tallman, M.A.; Oehrtman, M.; Carlson, M.P. Characteristics of Conceptual Assessment Items in Calculus. Probl. Resour. Issues Math. Undergrad. Stud. 2022, 32, 881–901.

- Smith, K.C.; Nakhleh, M.B.; Bretz, S.L. An Expanded Framework for Analyzing General Chemistry Exams. Chem. Educ. Res. Pract. 2010, 11, 147–153.

- Tallman, M.A.; Carlson, M.P.; Bressoud, D.M.; Pearson, M. A Characterization of Calculus I Final Exams in U.S. Colleges and Universities. Int. J. Res. Undergrad. Math. Educ. 2016, 2, 105–133.

- Zoller, U. Algorithmic, LOCS and HOCS (Chemistry) Exam Questions: Performance and Attitudes of College Students. Int. J. Sci. Educ. 2002, 24, 185–203.

- Hazra, A.; Gogtay, N. Biostatistics Series Module 4: Comparing Groups—Categorical Variables. Indian J. Dermatol. 2016, 61, 385.

- Sharpe, D. Chi-Square Test Is Statistically Significant: Now What? Pract. Assess. Res. Eval. 2015, 20, 8.

- R Core Team. R: A Language and Environment for Statistical Computing. 2022. Available online: https://www.R-project.org/ (accessed on 28 May 2024).

- Bergqvist, T. How Students Verify Conjectures: Teachers’ Expectations. J. Math. Teach. Educ. 2005, 8, 171–191.

- Bergqvist, E. University Mathematics Teachers’ Views on the Required Reasoning in Calculus Exams. Math. Enthus. 2012, 9, 371–408.

- Fleming, K.; Ross, M.; Tollefson, N.; Green, S.B. Teachers’ Choices of Test-Item Formats for Classes with Diverse Achievement Levels. J. Educ. Res. 1998, 91, 222–228.

- Fink, L.D. Creating Significant Learning Experiences: An Integrated Approach to Designing College Courses, Revised and Updated ed.; Jossey-Bass Higher and Adult Education Series; Jossey-Bass: San Francisco, CA, USA, 2013.

- Larsen, T.M.; Endo, B.H.; Yee, A.T.; Do, T.; Lo, S.M. Probing Internal Assumptions of the Revised Bloom’s Taxonomy. Life Sci. Educ. 2022, 21, ar66.

- Schafer, A.G.L.; Kuborn, T.M.; Schwarz, C.E.; Deshaye, M.Y.; Stowe, R.L. Messages about Valued Knowledge Products and Processes Embedded within a Suite of Transformed High School Chemistry Curricular Materials. Chem. Educ. Res. Pract. 2022, 24, 71–88.

- Nakhleh, M.B. Are Our Students Conceptual Thinkers or Algorithmic Problem Solvers? Identifying Conceptual Students in General Chemistry. J. Chem. Educ. 1993, 70, 52.

- Pickering, M. Further Studies on Concept Learning versus Problem Solving. J. Chem. Educ. 1990, 67, 254.

- Stamovlasis, D.; Tsaparlis, G.; Kamilatos, C.; Papaoikonomou, D.; Zarotiadou, E. Conceptual Understanding versus Algorithmic Problem Solving: Further Evidence from a National Chemistry Examination. Chem. Educ. Res. Pract. 2005, 6, 104–118.

- Salehi, S.; Ballen, C.J.; Trujillo, G.; Wieman, C. Inclusive Instructional Practices: Course Design, Implementation, and Discourse. Front. Educ. 2021, 6, 602639.

- Seymour, E.; Hunter, A.-B.; Thiry, H.; Weston, T.J.; Harper, R.P.; Holland, D.G.; Koch, A.K.; Drake, B.M. Talking about Leaving Revisited: Persistence, Relocation, and Loss in Undergraduate STEM Education; Springer International Publishing AG: Cham, Switzerland, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).