The Impact of AI-Generated Instructional Videos on Problem-Based Learning in Science Teacher Education

Abstract

1. Introduction

2. Background

2.1. Integrating Instructional Videos into PBL Contexts

- Introduction: Videos can introduce essential background knowledge and concepts necessary for understanding the problem context.

- During problem-solving: Videos can offer just-in-time support by demonstrating specific skills or procedures relevant to particular stages of the problem-solving process.

- Post-problem reflection: Videos can provide expert commentary or alternative solutions, facilitating deeper reflection and learning.

- Curating authentic and relevant content: Instructional videos should simulate real-world scenarios that resonate with learners’ experiences and challenge their problem-solving skills.

- Promoting multimedia-supported learning: Video triggers and productions foster participatory and interactive learning environments that cater to diverse student preferences.

- Addressing challenges: Science teacher education programs and adequate technological support are essential to overcome barriers such as resource limitations and educator readiness.

- Leveraging AI and animation: Advanced technologies like AI chatbots and animated videos enhance adaptability, interactivity, and engagement, especially in addressing complex or abstract topics.

2.2. Observational and Demonstration Learning in Video Training

- Clear objectives: The preview should clearly state the instructional video’s learning goals.

- Conversational style: The narrative should be engaging and easy to follow, resembling a conversation.

- Introduction of key concepts: Critical or novel concepts should be briefly introduced, priming learners for deeper understanding during the demonstration.

2.3. Integrating AI-Generated Instructional Videos into PBL Contexts

3. Materials and Methods

3.1. Research Purpose and Questions

- RQ1: Do AI-generated instructional videos, with and without a preview feature, affect pre-service science teachers’ ability to apply scientific concepts?

- RQ2: Do AI-generated instructional videos, with and without a preview feature, influence pre-service science teachers’ immediate and delayed problem-solving performance?

- RQ3: Do AI-generated instructional videos, with and without a preview feature, impact pre-service science teachers’ self-efficacy in addressing complex scientific problems?

3.2. Research Design

- Pre-test: A 10-item multiple-choice and 2-item short-answer test assessed baseline knowledge (e.g., ‘Which of Newton’s laws explains why an object in motion stays in motion unless acted upon by an external force?’).

- Post-test: Administered immediately after the intervention, the post-test used a parallel format with reworded or scenario-based questions assessing the same concepts.

- Transfer test: Transfer was assessed by requiring participants to apply Newton’s laws to a novel, real-world context—analyzing a video of a complex mechanical system (e.g., a bicycle braking system)—distinct from the training examples (e.g., simple projectile motion problems). This ensured measurement of genuine knowledge application rather than simple recall.

3.3. Participants

3.4. Instruments

3.4.1. Training Materials

- Interpret: Participants are guided to interpret and understand natural phenomena by observing and analyzing scientific events such as the phases of the moon, changes in shadow length, and the motion of objects under various forces. Teachers provide context for these tasks, helping students identify key observational goals and comprehend the significance of the phenomena. Newton’s laws are introduced through AI-generated scenarios, such as a ball rolling on different surfaces to demonstrate inertia and force. AI tools offer culturally and contextually relevant visual prompts and scenarios to scaffold understanding. This focus on interpretation laid the groundwork for the transfer task, which required participants to interpret a complex mechanical system.

- Design: Participants collaborate with AI tools to develop detailed observation plans. For instance, they may design templates to measure and record motion data, such as acceleration or force diagrams. These tools support multi-lingual and multimodal interactions, allowing students to generate text-based or visual representations of their observations.

- Evaluate: Participants critically analyze their observation data against Newtonian principles. AI feedback systems help highlight inaccuracies or areas of improvement, promoting reflection. Peer discussion sessions, guided by AI-generated insights, enable collaborative evaluation of findings, fostering deeper understanding of concepts such as inertia and action-reaction pairs.

- Articulate: Participants provide their interpreted observations and reasoning to peers through presentations or reports. AI assistance ensures clarity and precision, offering real-time feedback and generating culturally relevant explanations to support their arguments, particularly around Newton’s laws.

- Interpret: Participants are introduced to experimental scenarios, such as testing the effects of mass on acceleration or analyzing the forces acting on an inclined plane. Teachers guide students to understand the purpose, methodology, and significance of these experiments. AI-generated videos include dynamic animations and visual overlays to enhance comprehension of experimental setups and protocols involving Newton’s laws.

- Design: Using AI tools, each participant designs experimental workflows, including hypotheses, variables, and data collection methods. AI offers real-time assistance by suggesting modifications to ensure safety and experimental validity, tailoring feedback to students’ cultural and educational contexts. Experiments emphasize key principles such as force, mass, and acceleration.

- Evaluate: Participants evaluate their experimental results by comparing their findings with expected outcomes derived from Newton’s laws. AI-powered analytics tools guide this process, identifying trends and highlighting discrepancies. Peer assessment sessions, supported by AI-generated discussion prompts, further refine their understanding of concepts such as force interactions and equilibrium.

- Articulate: Participants can articulate their experimental findings through interactive presentations, supported by AI-generated visual aids such as graphs, tables, and infographics. These outputs enable effective communication of results to diverse audiences, fostering scientific literacy with a focus on Newtonian mechanics.

- Interpret: The main researcher introduces complex scientific challenges, such as designing a water filtration system or analyzing the dynamics of a pendulum. AI helps students interpret these problems by breaking them into manageable components, using simulations to visualize underlying principles, including Newton’s laws.

- Design: Participants design solutions using AI-generated tools to create and refine prototypes or simulate outcomes. For example, they might model a pendulum’s motion to study forces and acceleration or simulate vehicle collisions to understand action-reaction pairs. AI tools provide iterative feedback, ensuring designs are scientifically sound and feasible.

- Evaluate: Participants test their solutions using AI-driven simulations and evaluate the results. They reflect on the success of their approaches and identify areas for improvement. AI-generated comparisons with best-practice solutions help them understand gaps in their designs and how Newton’s principles apply.

- Articulate: Participants can present their problem-solving processes and outcomes to peers and instructors, supported by AI-generated explanatory content. Real-time AI translation and contextual feedback help them effectively communicate complex ideas, particularly those related to Newtonian mechanics.

- Interpret: The main researcher guides students to understand the importance of using digital tools for effective learning. The interactive features of the AI-generated videos, such as pausing, rewinding, and adjusting playback, are demonstrated to help students optimize their learning experience. Videos include real-time examples of Newton’s laws in action to reinforce understanding.

- Design: Participants design their learning schedules and practices around these interactive features. AI provides personalized suggestions, such as recommending specific playback settings for segments illustrating complex Newtonian principles.

- Evaluate: Participants reflect on the utility of the interactive features in improving their comprehension and retention of Newton’s laws. Peer feedback and self-assessment help identify which features are most effective for different learning styles.

- Articulate: Participants articulate their experiences with the interactive features through written or verbal feedback, supported by AI prompts. This phase encourages reflection on how these tools enhance their learning of fundamental physics concepts.

- Preview videos: The preview videos provide concise overviews of the scientific phenomena or experimental techniques covered in each chapter. The narration begins conversationally, posing a problem or question to engage learners (e.g., “Have you ever wondered why a rolling ball eventually stops?” or “What happens when you push a stationary object?”). These videos include brief demonstrations of critical steps, enhanced by animations and zooming to emphasize key details. For example, a preview video might show a ball rolling across different surfaces (carpet vs. smooth floor) to illustrate the concept of friction and how it relates to Newton’s First Law (inertia). Another example could show a brief animation of a rocket launching to introduce the concept of action-reaction pairs (Newton’s Third Law). The average duration of preview videos is 1.30 min (range 1.15−1.60), and each video ends with a five-second pause to help students process the information. These previews served as initial exposure to the concepts tested in the pre- and post-tests.

- Demonstration videos: Demonstration videos deliver detailed step-by-step instructions for conducting experiments or solving scientific problems. Narration is structured to explain both actions and outcomes (e.g., “We will now measure the acceleration of a cart as we apply different forces, demonstrating Newton’s Second Law. First, we’ll measure the mass of the cart…” or “Observe how the force applied to the cart directly affects its acceleration.”). These videos incorporate AI-enhanced visuals, such as showing force vectors acting on an object or graphing the relationship between force and acceleration, to reinforce learning. For instance, a video could demonstrate how changing the mass of an object affects its acceleration when a constant force is applied. Another example could showcase the collision of two objects, demonstrating the conservation of momentum and Newton’s Third Law. Each step is followed by a brief pause to allow reflection, and the entire video concludes with a five-second pause. Demonstration videos have an average length of 1.77 min. These demonstrations directly addressed the content assessed in the post-test and provided concrete examples that aided in conceptual understanding relevant to the transfer task.

- Final video files: Accompanying the video tutorials are practice files, designed to replicate the scenarios presented in the videos. These files include step-by-step instructions for conducting experiments, paired with before-and-after images or data tables to guide learners through each task. For example, a file might provide instructions for calculating the force required to accelerate an object at a given rate (applying Newton’s Second Law) or analyzing the forces acting on an object on an inclined plane. Another example could involve analyzing the motion of a projectile, calculating its trajectory based on initial velocity and launch angle (incorporating concepts from Newton’s laws). These tasks allow students to apply what they have learned from the videos, with the option of re-visiting the segments for guidance. These practice files offered opportunities for applying learned concepts, reinforcing learning and preparing participants for the application-focused aspects of the post-test and the transfer task.
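The two practice-file calculations mentioned above (the force needed for a given acceleration, and a projectile's trajectory from initial velocity and launch angle) can be illustrated with a minimal Python sketch. The function names, the sample values, and the simplifying assumptions (level ground, no air resistance, g = 9.81 m/s²) are illustrative and are not taken from the study's materials.

```python
import math

G = 9.81  # m/s^2, standard gravity (assumed value, not from the study)


def required_force(mass_kg: float, acceleration_ms2: float) -> float:
    """Newton's Second Law: F = m * a, returned in newtons."""
    return mass_kg * acceleration_ms2


def projectile_range(speed_ms: float, angle_deg: float, g: float = G) -> float:
    """Horizontal range on level ground, ignoring air resistance."""
    angle = math.radians(angle_deg)
    return speed_ms ** 2 * math.sin(2 * angle) / g


# Hypothetical practice values: a 2 kg cart accelerated at 3 m/s^2,
# and a projectile launched at 10 m/s and 45 degrees.
force_n = required_force(2.0, 3.0)
range_m = projectile_range(10.0, 45.0)
print(f"Force: {force_n:.1f} N, range: {range_m:.2f} m")
```

A practice task of this kind lets learners vary mass, acceleration, or launch angle and compare the computed values with what they observe in the corresponding video segment.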

3.4.2. Self-Efficacy Scale

- “I interpret a graph of velocity versus time to determine the acceleration of an object.” (Relates to understanding motion and Newton’s First Law/Inertia if the velocity is constant, and to Newton’s Second Law if the velocity changes).

- “I identify the forces acting on a stationary object.” (Relates to Newton’s First Law and the concept of balanced forces).

- “I describe the force required to accelerate an object given its mass and acceleration.” (Directly relates to Newton’s Second Law: F = ma).

- “I analyze the forces acting on a roller coaster car as it moves along an inclined track, including gravity, normal force, and friction.” (Combines Newton’s First and Second Laws in a realistic scenario).

- “I predict the speed of a roller coaster car at different points on the track using the principles of energy conservation and Newton’s Laws.” (Integrates multiple concepts and laws within the PBL context).

- “I explain how Newton’s Third Law (action-reaction) affects the interaction between the roller coaster car and the track, especially during turns.” (Directly relates to a key aspect of roller coaster design).
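The speed-prediction item above rests on conservation of mechanical energy. As a worked illustration only, and under the simplifying assumption (not stated in the source) that friction and air resistance are negligible, the speed $v$ of a car at height $h$ follows from its initial speed $v_0$ at height $h_0$:

```latex
\frac{1}{2} m v_0^2 + m g h_0 = \frac{1}{2} m v^2 + m g h
\quad\Longrightarrow\quad
v = \sqrt{v_0^2 + 2 g \,(h_0 - h)}
```

For example, a car released from rest ($v_0 = 0$) at $h_0 = 20\ \text{m}$ reaches $v = \sqrt{2 \cdot 9.81 \cdot 15} \approx 17.2\ \text{m/s}$ at $h = 5\ \text{m}$. The mass cancels, which is why the predicted speed does not depend on how heavily the car is loaded in this idealized case.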

3.4.3. Task Performance Tests

- A. Pre-test, immediate post-test, and delayed post-test: The pre-test, conducted before the tutorial, consisted of seven tasks designed to evaluate participants’ initial understanding and skills related to scientific phenomena. These tasks were carefully aligned with the content of the AI-generated instructional videos, focusing on key scientific concepts and processes, including:

- Adjusting variables in a simulation to observe changes in a scientific phenomenon.

- Identifying relationships between dependent and independent variables in an experiment.

- Interpreting data from graphs or tables representing scientific phenomena.

- Organizing observations into structured formats, such as diagrams or flowcharts.

- Creating and labeling a model to represent a specific science concept.

- B. Transfer test: The transfer test evaluated participants’ ability to apply their knowledge and skills to scientific phenomena that were not explicitly addressed in the AI-generated instructional videos. This test aimed to measure adaptability, critical thinking, and independent problem-solving skills. The transfer test included a separate instruction file and four new tasks:

- Adjusting environmental variables in a simulation to observe changes in system behavior.

- Reorganizing data within a visual representation, such as a graph or chart.

- Identifying patterns in new experimental results.

- Creating a hypothesis and explaining its implications using unfamiliar scientific data.

3.5. Procedure

- Evaluating existing tools: Analyzing the effectiveness of current AI-powered educational applications like ChatGPT, Claude, and Gemini to identify areas for improvement.

- Designing integration frameworks: Developing frameworks for seamlessly integrating AI into existing digital literacy curriculums.

- Interactive multimedia content: Designing and developing educational videos and images enriched with interactive digital elements to enhance learners’ digital literacy across various subjects.

- AI-generated video production: Utilizing platforms like Sudowrite (https://www.sudowrite.com, accessed on 8 December 2024), Visla (https://www.visla.us, accessed on 8 December 2024), or Jasper (https://www.jasper.ai, accessed on 8 December 2024) for creating engaging educational video content through AI assistance.

- Pre-test: Participants completed the pre-test, which assessed their baseline knowledge of Newtonian mechanics and related problem-solving skills. They also completed the PrSSES, measuring their perceived self-efficacy in problem-solving.

- Intervention (Video conditions): Participants were exposed randomly to two conditions of AI-generated instructional videos:

- Condition 1: Video with preview: Participants watched AI-generated instructional videos on Newtonian mechanics with an embedded preview feature (video-with-preview condition).

- Condition 2: Video without preview: Participants watched the same AI-generated instructional videos on Newtonian mechanics without the embedded preview feature (video-without-preview condition).

- Immediate post-test: After viewing both video conditions, participants completed the immediate post-test, which assessed their understanding and application of the concepts presented in the videos. They also completed the PrSSES again to measure any changes in self-efficacy.

- Delayed post-test: Seven days after the intervention, participants completed the delayed post-test to assess retention of the learned material over time.

- Transfer test: Immediately following the delayed post-test, participants completed the transfer test. This assessed their ability to apply the learned concepts to novel problems, specifically a roller coaster design PBL activity.
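The source does not describe how the random allocation to the two video conditions was carried out. One common approach, shown here as a hedged sketch, is balanced random assignment with a fixed seed for reproducibility. The function name `assign_conditions`, the participant IDs, and the seed are hypothetical.

```python
import random


def assign_conditions(participant_ids, seed=None):
    """Randomly split participants into two groups of (near-)equal size."""
    rng = random.Random(seed)  # local generator so the global state is untouched
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "video_with_preview": sorted(shuffled[:half]),
        "video_without_preview": sorted(shuffled[half:]),
    }


# Hypothetical example with ten participant IDs.
groups = assign_conditions(range(1, 11), seed=42)
for condition, members in groups.items():
    print(condition, members)
```

Recording the seed alongside the allocation makes the assignment auditable, which matters when baseline differences between conditions need to be examined afterwards.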

3.6. Data Collection and Data Analysis

3.7. Ethical Considerations

4. Results

4.1. Descriptive Analysis

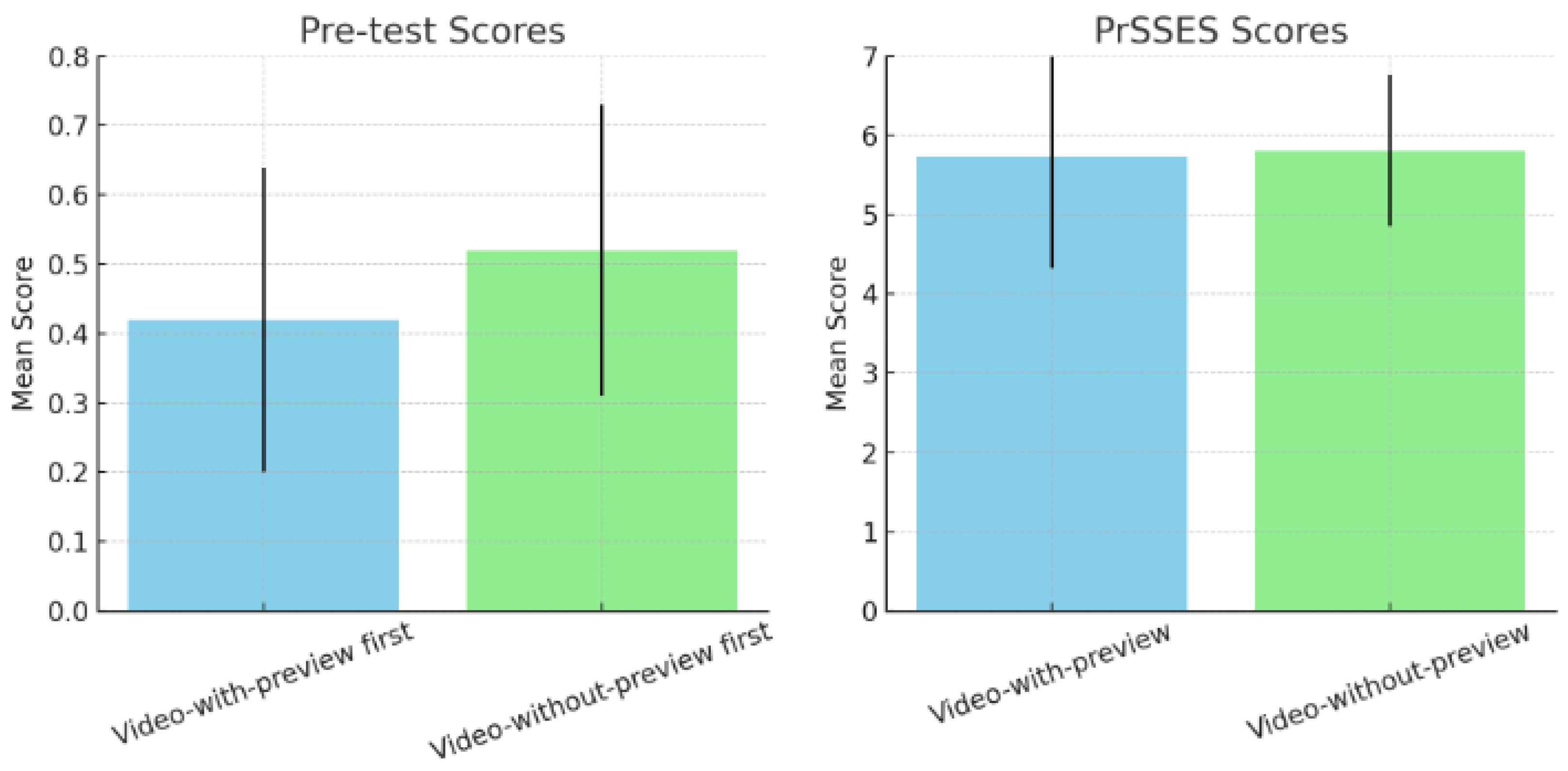

- Baseline differences in performance: Participants exhibited significantly higher pre-test scores when starting with the video-without-preview condition compared to the video-with-preview condition, indicating a disparity in initial task performance capabilities. This suggests that further investigation is needed to address such baseline differences and ensure equivalent starting points for all participants, regardless of the order of conditions.

- Consistency in self-efficacy perceptions: Despite differences in baseline performance, the PrSSES scores were comparable across the video-with-preview and video-without-preview conditions. This finding suggests that the AI-generated instructional videos were perceived similarly in terms of their ability to support self-efficacy across conditions, regardless of the order in which participants experienced the videos.

- Implications for instructional design: The significant difference in pre-test scores suggests that the preview feature alone may not be sufficient to ensure equivalent learning conditions. It is important, therefore, to incorporate additional scaffolding strategies or tailored previews to accommodate the diverse starting points of learners, as observed in the baseline performance differences.

- Generalizability of AI-generated tutorials: Participants showed similar levels of self-efficacy across both conditions, highlighting the broad potential of AI-generated instructional tools to enhance participant confidence, regardless of specific instructional design elements, such as the inclusion or exclusion of a preview feature.

4.2. Self-Efficacy Before and After Training

- Overall self-efficacy improvement: Participants significantly improved their self-efficacy after training, with a large effect size.

- Initial confidence: The pre-training self-efficacy scores were already above average, suggesting that the task was perceived as relatively manageable.

- Condition comparison: There was no significant difference between the video-without-preview and video-with-preview conditions, implying that participants in both conditions benefited similarly from the training.

4.3. Task Performances and Learning

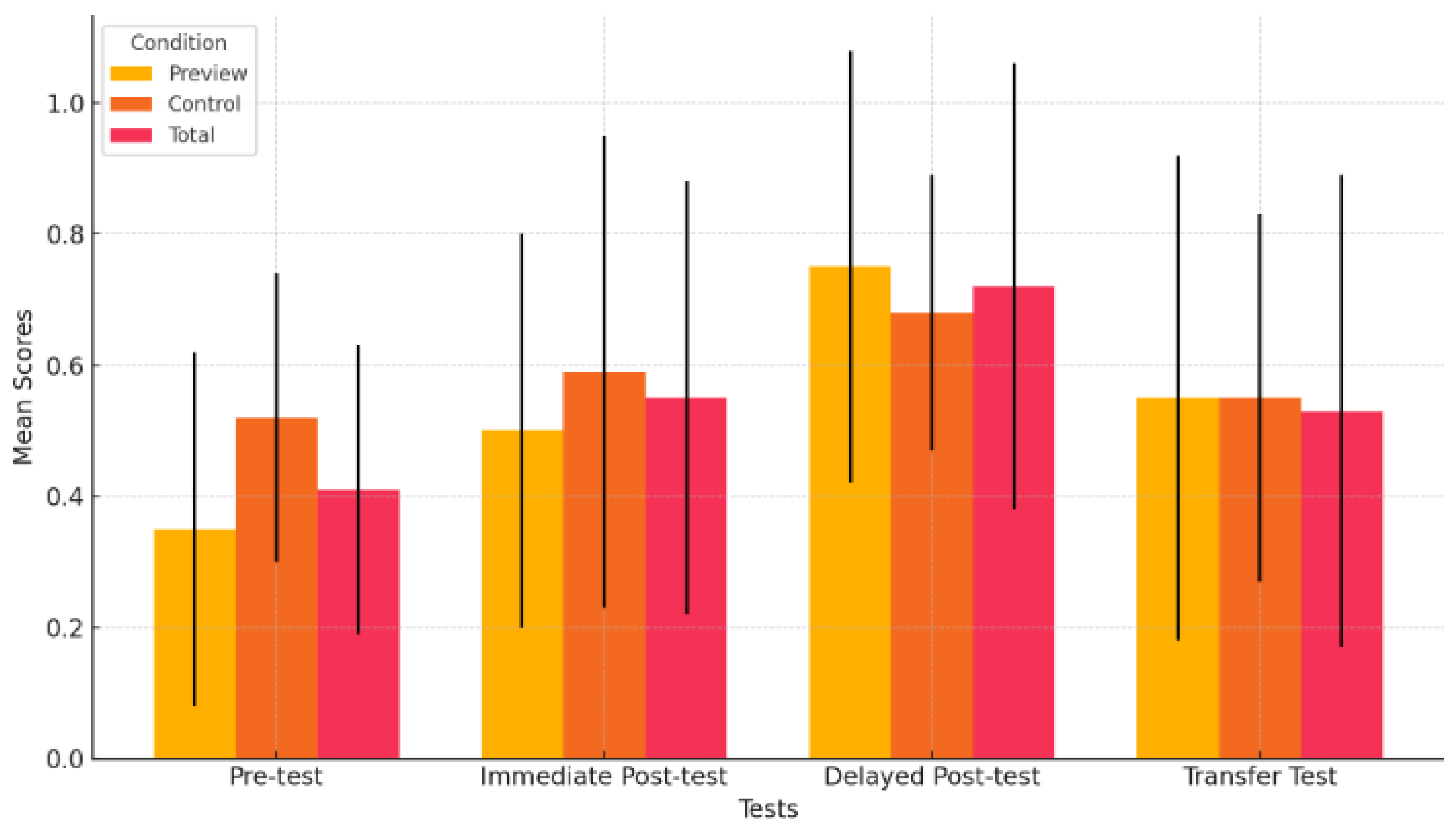

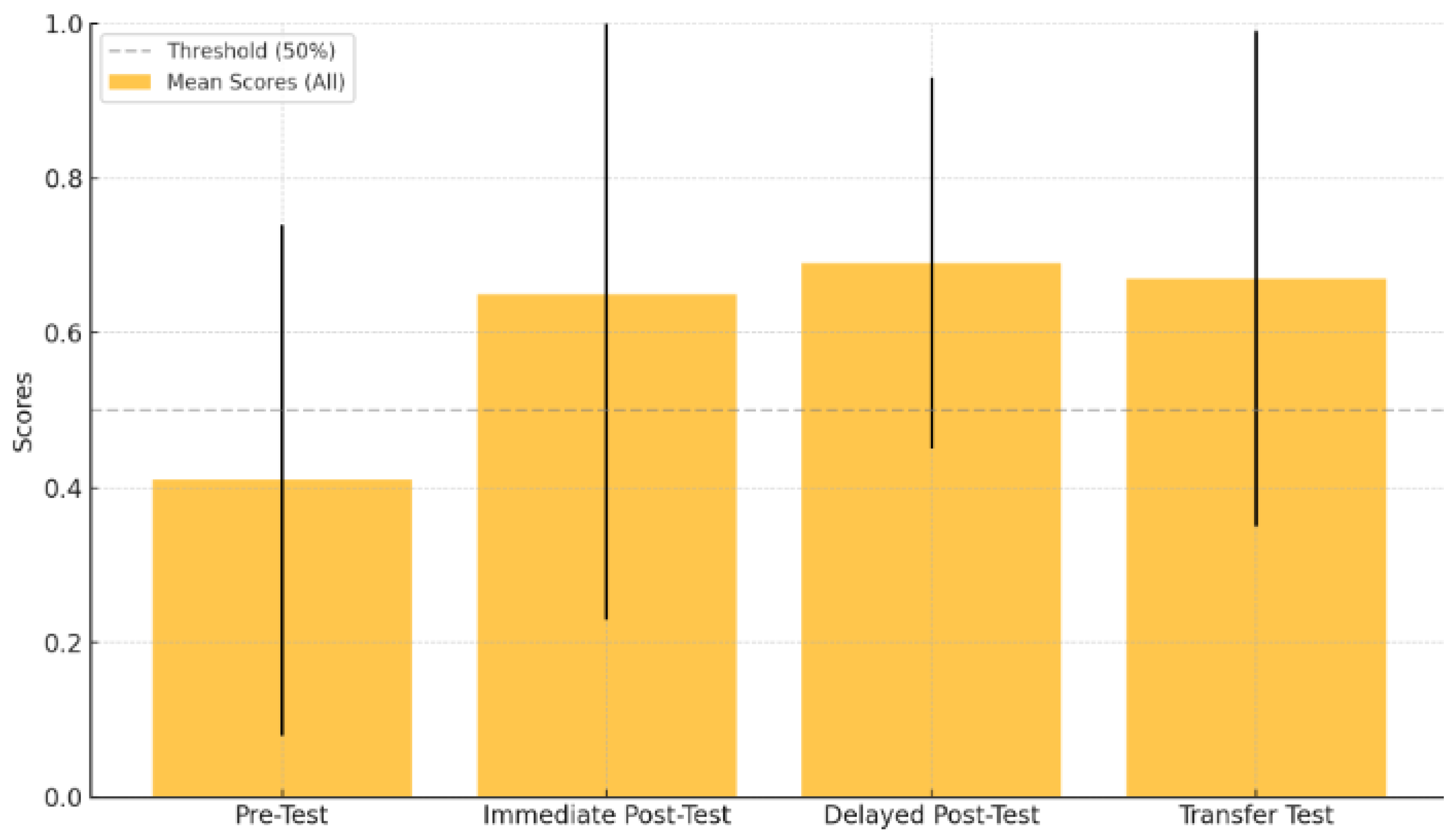

- Delayed post-test performance: The delayed post-test scores are the highest across all conditions, indicating a strong retention of the material over time.

- Pre-test comparison of participants: (a) the pre-test scores are the lowest overall, showing significant room for improvement before the intervention, and (b) participants in the video-without-preview condition scored higher than those in the video-with-preview condition in the pre-test, indicating an initial disparity in baseline performance.

- Post-test insights: Participants in both conditions improved from the pre-test to the immediate post-test. The delayed post-test scores of participants in the video-with-preview condition surpassed those of their counterparts, suggesting a longer-term benefit of the preview condition.

- Transfer test: Scores in the transfer test are consistent across conditions, suggesting that the intervention equally prepared both groups for applying learned concepts to new contexts.

- For the immediate post-test, the preview condition (M = 0.68, SD = 0.36) did not score significantly higher than the video-without-preview condition (M = 0.62, SD = 0.41), F(1, 54) = 0.003, p = 0.54.

- For the delayed post-test, the preview condition (M = 0.70, SD = 0.21) did not score significantly higher than the video-without-preview condition (M = 0.71, SD = 0.22), F(1, 54) = 0.04, p = 0.51.

- For the transfer test, the preview condition (M = 0.53, SD = 0.28) also did not score significantly higher than the video-without-preview condition (M = 0.51, SD = 0.29), F(1, 54) = 0.22, p = 0.73.

- Improvement across all tests: The immediate post-test, delayed post-test, and transfer test scores were all significantly higher than the pre-test scores. This suggests that the intervention (AI-generated video tutorial) had a positive impact on participant task performance, improving their ability to complete tasks both immediately and over time. Specifically, the large effect size in the delayed post-test (d = 1.65) and the moderate effect size in the transfer test (d = 0.67) emphasize the sustained benefits of the intervention. These results indicate that the video tutorial facilitated learning and retention of knowledge, extending beyond the immediate learning phase.

- No significant difference between conditions: Although the preview condition showed slight improvements in scores across the immediate post-test, delayed post-test, and transfer test, these improvements were not statistically significant when compared to the video-without-preview condition. This suggests that the inclusion of a preview in the AI-generated instructional video did not have a notable additional effect on performance relative to the video-without-preview condition. Other factors, such as the video content itself or individual differences, might be influencing task performance more than the preview alone.

- Effectiveness of AI-generated instructional videos: Despite the lack of significant differences between conditions, the overall improvement in task performance from the pre-test to the subsequent tests (immediate, delayed, and transfer) supports the effectiveness of the AI-generated video tutorials in enhancing participants’ understanding and task execution. The video tutorials, whether with or without a preview, appear to be a useful tool for promoting learning outcomes.

- Potential limitations of the preview effect: The absence of significant findings regarding the preview conditions may point to potential limitations in how the preview was implemented. While previews are generally helpful in setting up context and expectations, it seems that the format, content, or delivery of the preview might not have been optimized in this case to enhance task performance. Future research could explore different approaches to delivering previews or investigate other factors that could interact with the preview to better support learning.
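To put the between-condition comparisons above on a common scale, a standardized mean difference can be computed from the reported means and standard deviations. The sketch below uses Cohen's d with a pooled standard deviation and assumes equal group sizes, because the per-condition sample sizes are not given here. It is not necessarily the formula used for the effect sizes reported in the study, and the function name `cohens_d` is illustrative.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d from summary statistics, assuming equal group sizes."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# (preview M, preview SD, no-preview M, no-preview SD) as reported above.
reported = {
    "immediate post-test": (0.68, 0.36, 0.62, 0.41),
    "delayed post-test": (0.70, 0.21, 0.71, 0.22),
    "transfer test": (0.53, 0.28, 0.51, 0.29),
}

effect_sizes = {name: cohens_d(*stats) for name, stats in reported.items()}
for name, d in effect_sizes.items():
    print(f"{name}: d = {d:.2f}")
```

Under the equal-group-size assumption, all three values fall below the conventional threshold of 0.2 for a small effect in absolute terms, which is consistent with the non-significant condition comparisons.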

5. Discussion

6. Implications

7. Limitations and Directions for Future Research

8. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Aidoo, B. (2023). Teacher educators’ experience adopting problem-based learning in science education. Education Sciences, 13(11), 1113. [Google Scholar] [CrossRef]

- Akçay, B. (2009). Problem-based learning in science education. Journal of Turkish Science Education, 6(2), 26–36. Available online: https://www.tused.org/index.php/tused/article/view/104 (accessed on 3 December 2024).

- Al-Zahrani, A. M., & Alasmari, T. M. (2024). Exploring the impact of artificial intelligence on higher education: The dynamics of ethical, social, and educational implications. Humanities and Social Sciences Communications, 11, 912. [Google Scholar] [CrossRef]

- Arkün-Kocadere, S., & Çağlar-Özhan, Ş. (2024). Video lectures with AI-generated instructors: Low video engagement, same performance as human instructors. The International Review of Research in Open and Distributed Learning, 25(3), 350–369. [Google Scholar] [CrossRef]

- Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares, & T. C. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 307–337). Information Age Publishing. [Google Scholar]

- Bewersdorff, A., Hartmann, C., Hornberger, M., Seßler, K., Bannert, M., Kasneci, E., & Nerdel, C. (2024). Taking the next step with generative artificial intelligence: The transformative role of multimodal large language models in science education. arXiv, arXiv:2401.00832. [Google Scholar]

- Blummer, B. A., & Kritskaya, O. (2009). Best practices for creating an online tutorial: A literature review. Journal of Web Librarianship, 3(3), 199–216. [Google Scholar] [CrossRef]

- Brame, C. J. (2016). Effective educational videos: Principles and guidelines for maximizing student learning from video content. CBE Life Sciences Education, 15, es6. [Google Scholar] [CrossRef] [PubMed]

- Brislin, R. W. (1970). Back-translation for cross-cultural research. Journal of Cross-Cultural Psychology, 1(3), 185–216. [Google Scholar] [CrossRef]

- Bush, A., & Grotjohann, N. (2020). Collaboration in teacher education: A cross-sectional study on future teachers’ attitudes towards collaboration, their intentions to collaborate and their performance of collaboration. Teaching and Teacher Education, 88, 102968. [Google Scholar] [CrossRef]

- Cortina, J. M. (1993). What is the coefficient alpha? An examination of theory and applications. Journal of Applied Psychology, 78(1), 98. [Google Scholar] [CrossRef]

- Costley, J., Fanguy, M., Lange, C., & Baldwin, M. (2020). The effects of video lecture viewing strategies on cognitive load. Journal of Computing in Higher Education, 33, 19–38. [Google Scholar] [CrossRef]

- Ester, P., Morales, I., & Herrero, L. (2023). Micro-videos as a learning tool for professional practice during the post-COVID era: An educational experience. Sustainability, 15, 5596. [Google Scholar] [CrossRef]

- Göltl, K., Ambros, R., Dolezal, D., & Motschnig, R. (2024). Pre-service teachers’ perceptions of their digital competencies and ways to acquire those through their studies and self-organized learning. Education Sciences, 14, 951. [Google Scholar] [CrossRef]

- Greenwald, A. G. (1976). Within-subjects designs: To use or not to use? Psychological Bulletin, 83(2), 314. [Google Scholar] [CrossRef]

- Gumisirizah, N., Nzabahimana, J., & Muwonge, C. M. (2024). Supplementing problem-based learning approach with video resources on students’ academic achievement in physics: A comparative study between government and private schools. Education and Information Technologies, 29, 13133–13153. [Google Scholar] [CrossRef]

- Hamad, Z. T., Jamil, N., & Belkacem, A. N. (2024). ChatGPT’s impact on education and healthcare: Insights, challenges, and ethical considerations. IEEE Access. [Google Scholar] [CrossRef]

- Hurzlmeier, M., Watzka, B., Hoyer, C., Girwidz, R., & Ertl, B. (2021). Visual cues in a video-based learning environment: The role of prior knowledge and its effects on eye movement measures. In E. De Vries, Y. Hod, & J. Ahn (Eds.), Proceedings of the 15th International Conference of the Learning Sciences—ICLS 2021 (pp. 3–10). International Society of the Learning Sciences. [Google Scholar] [CrossRef]

- Johnson, C., Hill, L., Lock, J., Altowairiki, N., Ostrowski, C., Da Rosa Dos Santos, L., & Liu, Y. (2017). Using design-based research to develop meaningful online discussions in undergraduate field experience courses. International Review of Research in Open and Distributed Learning, 18(6), 36–53. [Google Scholar] [CrossRef]

- Koumi, J. (2013). Pedagogic design guidelines for multimedia materials: A call for collaboration between practitioners and researchers. Journal of Visual Literacy, 32(2), 85–114. [Google Scholar] [CrossRef]

- Kulgemeyer, C. (2018). A framework of effective science explanation videos informed by criteria for instructional explanations. Research in Science Education, 50, 2441–2462. [Google Scholar] [CrossRef]

- Kumar, D. D. (2010). Approaches to interactive video anchors in problem-based science learning. Journal of Science Education and Technology, 19(1), 13–19. [Google Scholar] [CrossRef]

- Lange, C., & Costley, J. (2020). Improving online video lectures: Learning challenges created by media. International Journal of Educational Technology in Higher Education, 17(16), 1–18. [Google Scholar] [CrossRef]

- Li, B., Wang, C., Bonk, C. J., & Kou, X. (2024). Exploring inventions in self-directed language learning with generative AI: Implementations and perspectives of YouTube content creators. TechTrends, 68, 803–819. [Google Scholar] [CrossRef]

- Lim, J. (2024). The potential of learning with AI-generated pedagogical agents in instructional videos. In Extended abstracts of the CHI conference on human factors in computing systems (pp. 1–6). ACM: USA. [Google Scholar] [CrossRef]

- Lin, C., Zhou, K., Li, L., & Sun, L. (2025). Integrating generative AI into digital multimodal composition: A study of multicultural second-language classrooms. Computers and Composition, 75, 102895. [Google Scholar] [CrossRef]

- Magaji, A., Adjani, M., & Coombes, S. (2024). A systematic review of preservice science teachers’ experience of problem-based learning and implementing it in the classroom. Education Sciences, 14(3), 301. [Google Scholar] [CrossRef]

- Marshall, E. (2024). Examining the effectiveness of high-quality lecture videos in an asynchronous online criminal justice course. Journal of Criminal Justice Education, 1–18. [Google Scholar] [CrossRef]

- Martin, N. A., & Martin, R. (2015). Would you watch it? Creating effective and engaging video tutorials. Journal of Library & Information Services in Distance Learning, 9(1–2), 40–56. [Google Scholar] [CrossRef]

- Newbold, N., & Gillam, L. (2010). Text readability within video retrieval applications: A study on CCTV analysis. Journal of Multimedia, 5(2), 123–141. [Google Scholar] [CrossRef]

- Pellas, N. (2023a). The effects of generative AI platforms on undergraduates’ narrative intelligence and writing self-efficacy. Education Sciences, 13(11), 1155. [Google Scholar] [CrossRef]

- Pellas, N. (2023b). The influence of sociodemographic factors on students’ attitudes toward AI-generated video content creation. Smart Learning Environments, 10, 276. [Google Scholar] [CrossRef]

- Pellas, N. (2024). The role of students’ higher-order thinking skills in the relationship between academic achievements and machine learning using generative AI chatbots. Research and Practice in Technology Enhanced Learning, 20, 36. [Google Scholar] [CrossRef]

- Pi, Z., Zhang, Y., Zhu, F., Xu, K., Yang, J., & Hu, W. (2019). Instructors’ pointing gestures improve learning regardless of their use of directed gaze in video lectures. Computers & Education, 128(1), 345–352. [Google Scholar] [CrossRef]

- Qu, Y., Tan, M. X. Y., & Wang, J. (2024). Disciplinary differences in undergraduate students’ engagement with generative artificial intelligence. Smart Learning Environments, 11, 51. [Google Scholar] [CrossRef]

- Rasi, P. M., & Poikela, S. (2016). A review of video triggers and video production in higher education and continuing education PBL settings. Interdisciplinary Journal of Problem-Based Learning, 10(1), 7. [Google Scholar] [CrossRef]

- Reeves, T. C., Herrington, J., & Oliver, R. (2005). Design research: A socially responsible approach to instructional technology research in higher education. Journal of Computing in Higher Education, 16(2), 96–115.

- Rismark, M., & Sølvberg, A. M. (2019). Video as a learner scaffolding tool. International Journal of Learning, Teaching and Educational Research, 18(1), 62–75.

- Saqr, M., & López-Pernas, S. (2024). Why explainable AI may not be enough: Predictions and mispredictions in decision making in education. Smart Learning Environments, 11, 343.

- Savinainen, A., Scott, P., & Viiri, J. (2004). Using a bridging representation and social interactions to foster conceptual change: Designing and evaluating an instructional sequence for Newton’s third law. Science Education, 89(2), 175–195.

- Schacter, D. L., & Szpunar, K. K. (2015). Enhancing attention and memory during video-recorded lectures. Scholarship of Teaching and Learning in Psychology, 1(1), 60–71.

- Semeraro, A., & Vidal, L. T. (2022, April 30–May 5). Visualizing instructions for physical training: Exploring visual cues to support movement learning from instructional videos. CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA.

- Shahzad, M. F., Xu, S., & Asif, M. (2024). Factors affecting generative artificial intelligence, such as ChatGPT, use in higher education: An application of technology acceptance model. British Educational Research Journal.

- Shaukat, S., Wiens, P., & Garza, T. (2024). Development and validation of the Standards Self-Efficacy Scale (SSES) for US pre-service teachers. Educational Research: Theory and Practice, 35, 71–87.

- Simanjuntak, L. Y. A., Perangin-Angin, R. B., & Saragi, D. (2019). Development of scientific-based learning video media using problem-based learning (PBL) model to improve student learning outcomes in fourth grade students of elementary school Parmaksian, Kab. Sambair Toba. Budapest International Research and Critics in Linguistics and Education Journal, 2(4), 297–304.

- Son, T. (2024). Noticing classes of preservice teachers: Relations to teaching moves through AI chatbot simulation. Education and Information Technologies.

- Sullivan, G. M., & Feinn, R. (2012). Using effect size—Or why the p value is not enough. Journal of Graduate Medical Education, 4(3), 279–282.

- Taningrum, N., Kriswanto, E. S., Pambudi, A. F., & Yulianto, W. D. (2024). Improving critical thinking skills using animated videos based on problem-based learning. Retos, 57, 692–696.

- Thornton, R. K., & Sokoloff, D. R. (1998). Assessing student learning of Newton’s laws: The force and motion conceptual evaluation and the evaluation of active learning laboratory and lecture curricula. American Journal of Physics, 66(4), 338–352.

- Van Der Meij, H. (2017). Reviews in instructional video. Computers & Education, 114, 164–174.

- Van Der Meij, H., Rensink, I., & Van Der Meij, J. (2018a). Effects of practice with videos for software training. Computers in Human Behavior, 89, 439–445.

- Van Der Meij, H., & Van Der Meij, J. (2014). A comparison of paper-based and video tutorials for software learning. Computers & Education, 78, 150–159.

- Van Der Meij, H., Van Der Meij, J., & Voerman, T. (2018b). Supporting motivation, task performance, and retention in video tutorials for software training. Educational Technology Research and Development, 66(3), 597–614.

- Wang, X., Lin, L., Han, M., & Spector, J. M. (2020). Impacts of cues on learning: Using eye-tracking technologies to examine the functions and designs of added cues in short instructional videos. Computers in Human Behavior, 107, 106279.

- Zhang, C., Wang, Z., Fang, Z., & Xiao, X. (2024). Guiding student learning in video lectures: Effects of instructors’ emotional expressions and visual cues. Computers & Education, 218, 105062.

| Phase | Activity | Data Collected | Time Point |
|---|---|---|---|
| Pre-Intervention | Completion of pre-test and Pre-Self-Efficacy Questionnaire (PrSSES) | Pre-test scores, PrSSES scores | Before intervention |
| Intervention | Viewing AI-generated videos (with/without preview, counterbalanced) | Video viewing data (completion, time spent) | During intervention |
| Immediate Post-Test | Completion of immediate post-test and Post-Self-Efficacy Questionnaire (PsSSES) | Immediate post-test scores, PsSSES scores | Immediately after intervention |
| Delayed Post-Test | Completion of delayed post-test | Delayed post-test scores | 7 days after intervention |
| Transfer Test | Completion of transfer test | Transfer test scores | After delayed post-test |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pellas, N. The Impact of AI-Generated Instructional Videos on Problem-Based Learning in Science Teacher Education. Educ. Sci. 2025, 15, 102. https://doi.org/10.3390/educsci15010102
