Inquiring in the Science Classroom by PBL: A Design-Based Research Study

Abstract

1. Introduction

2. Theoretical Framework

2.1. Problem-Based Learning

2.2. Design-Based Research

3. Materials and Methods

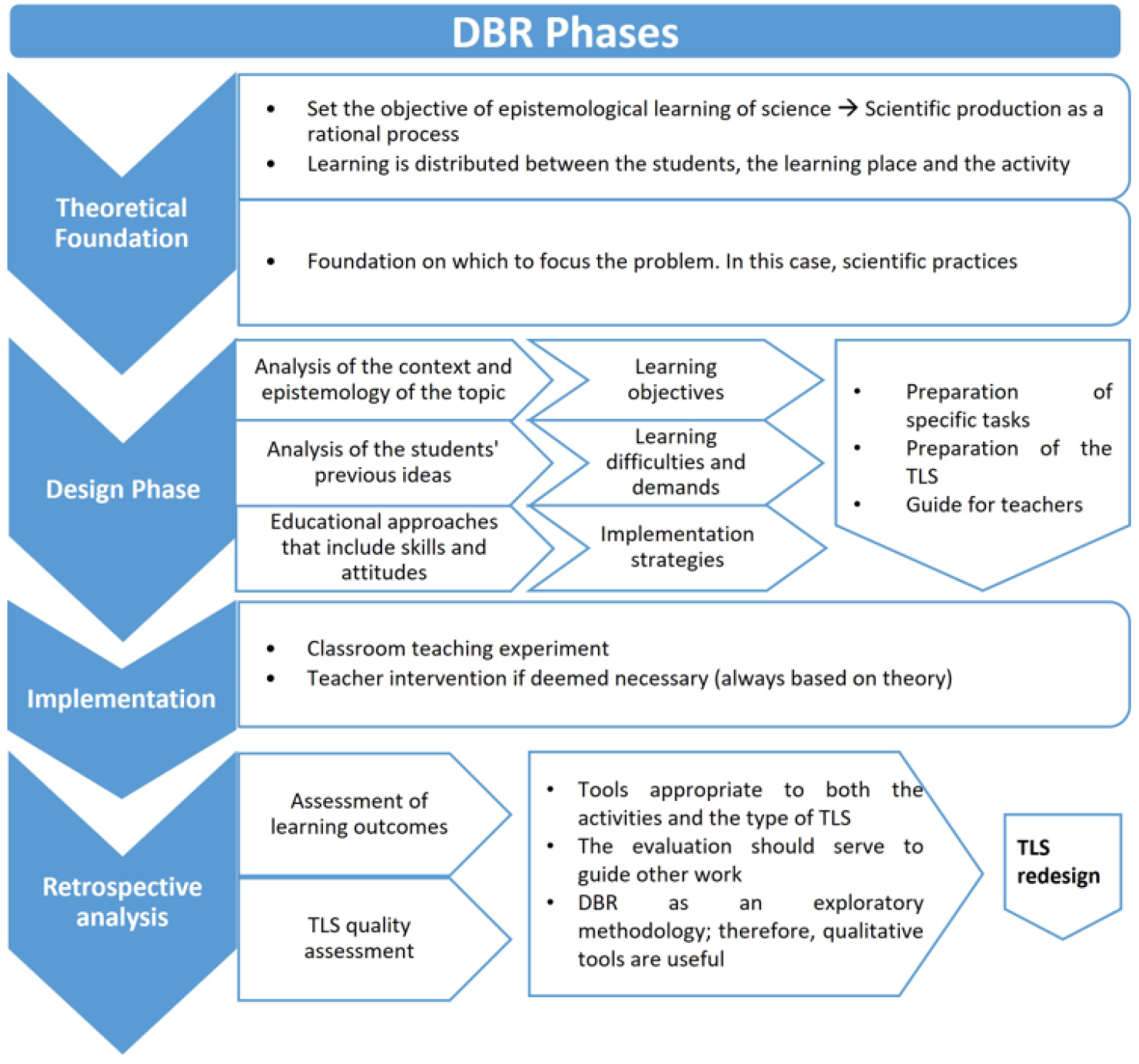

3.1. Stages of Design-Based Research

3.1.1. Theoretical Foundations for Research

3.1.2. Design Phase

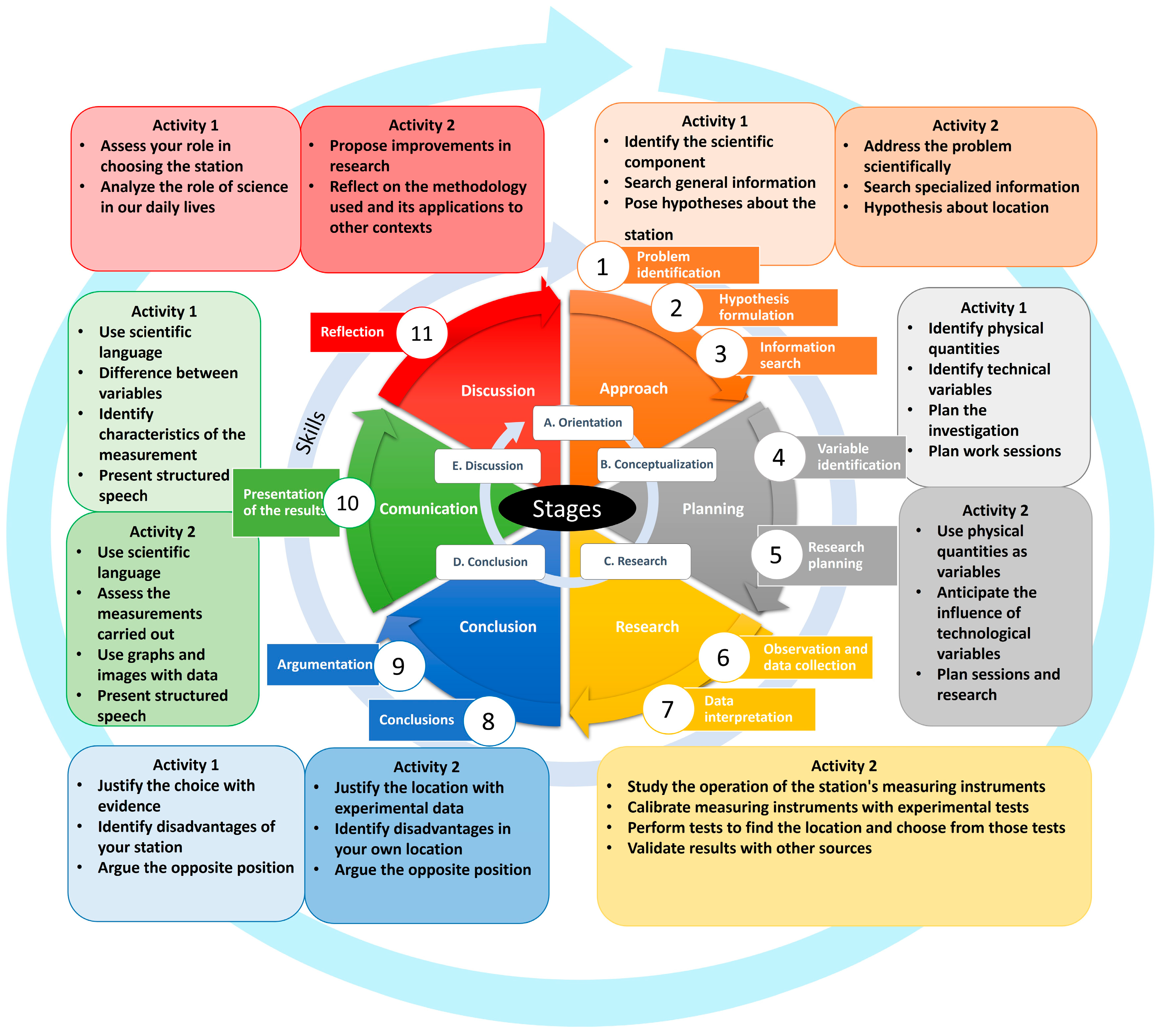

3.1.3. Implementation of the Activity

3.1.4. Retrospective Analysis: Evaluation and Redesign

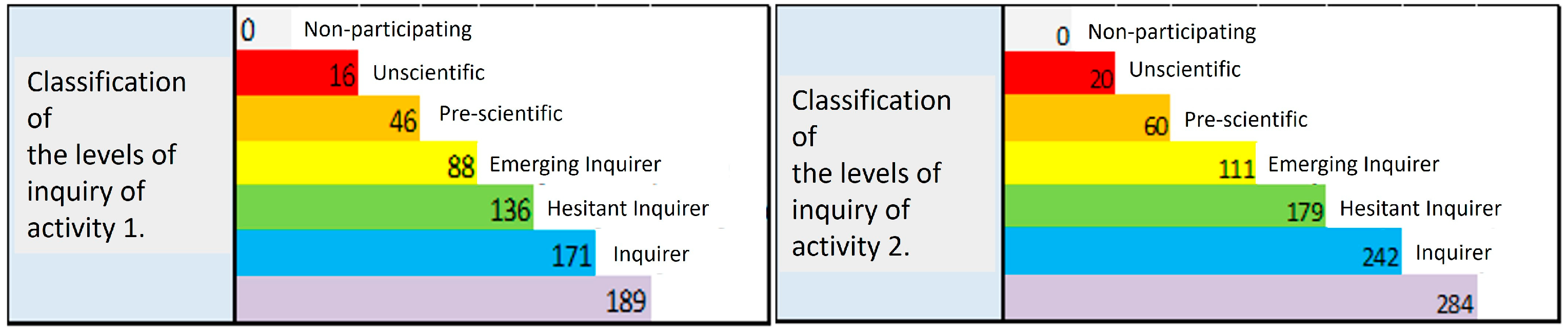

3.2. Instruments

3.3. Sample

4. Results

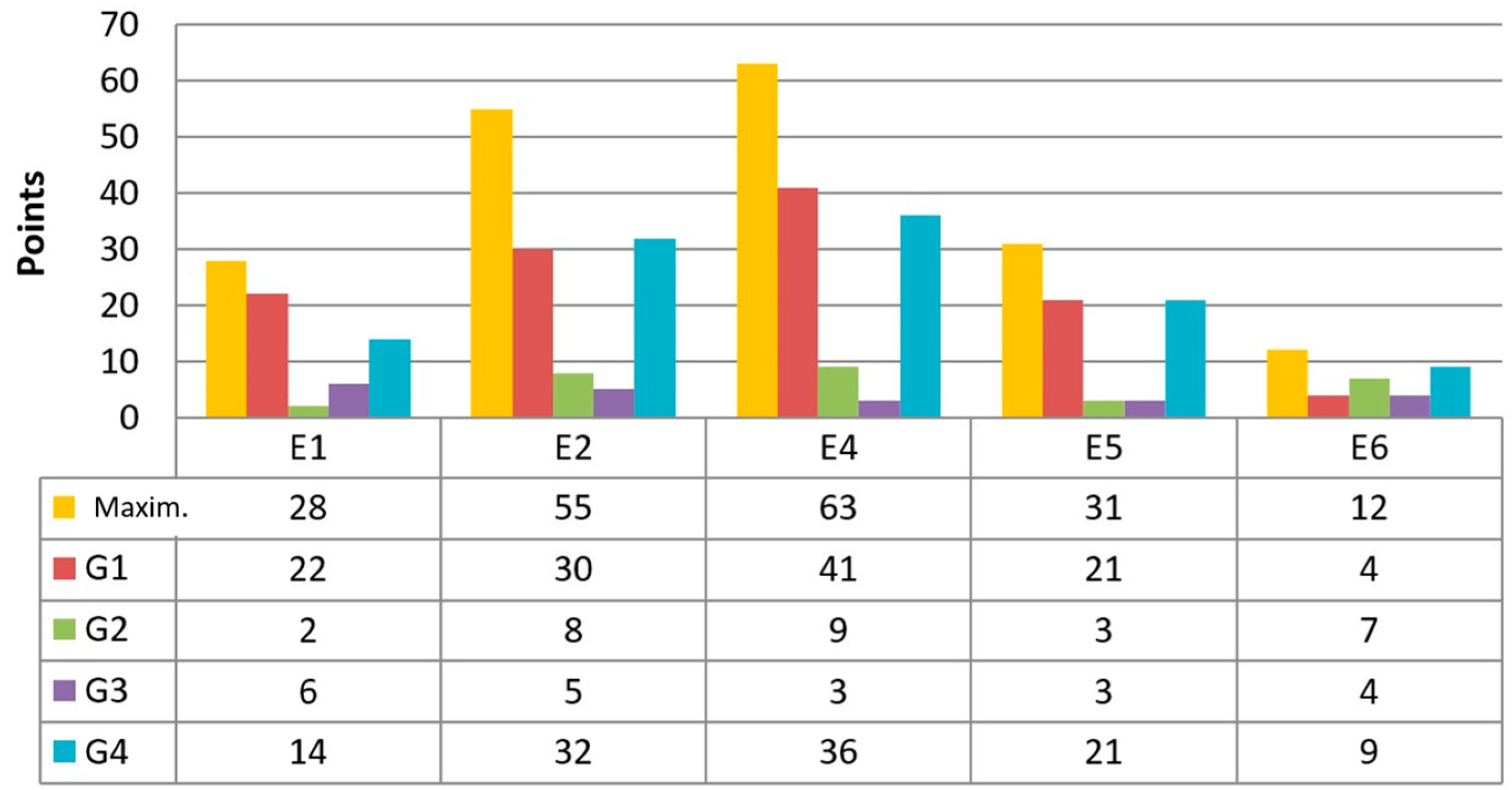

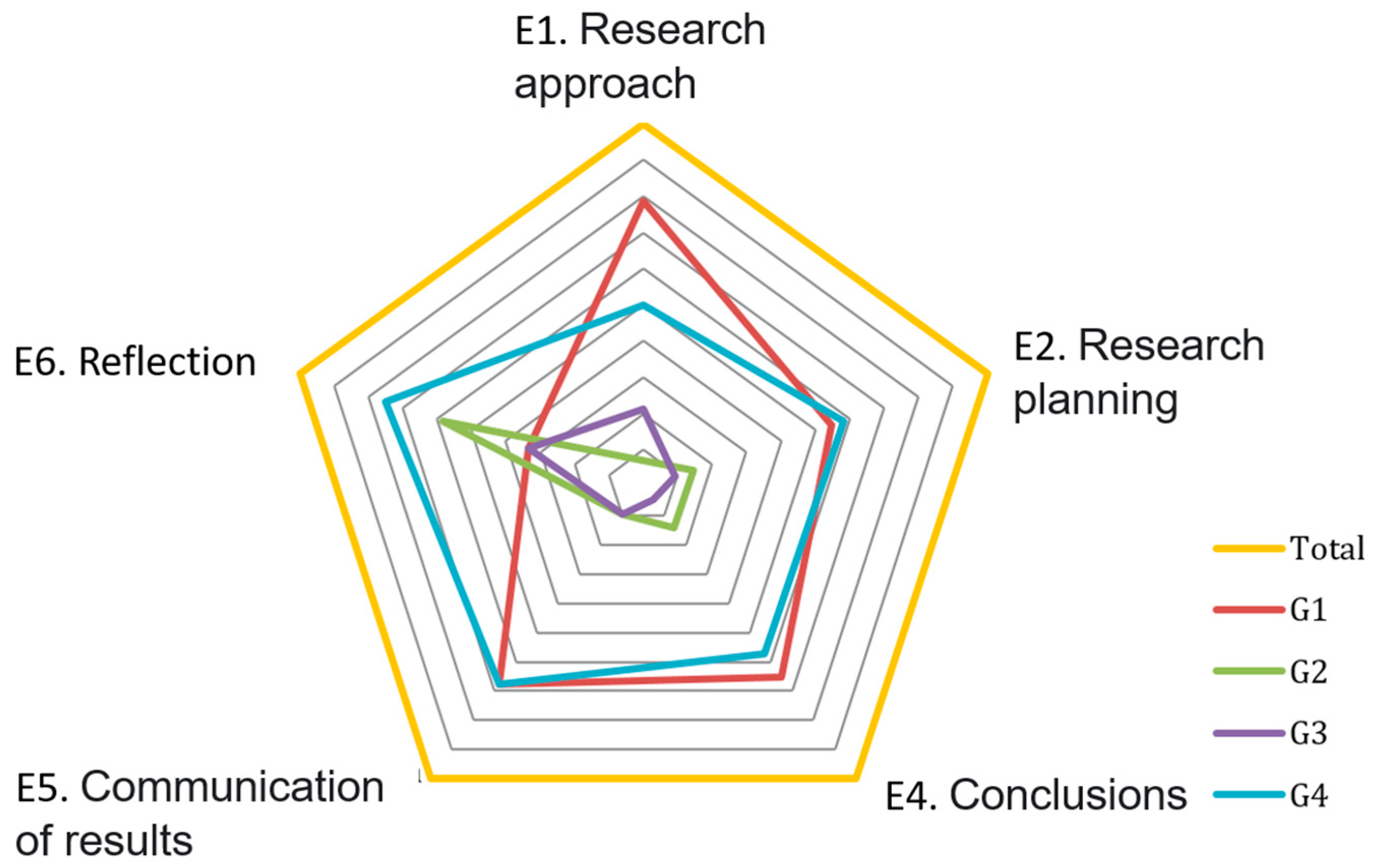

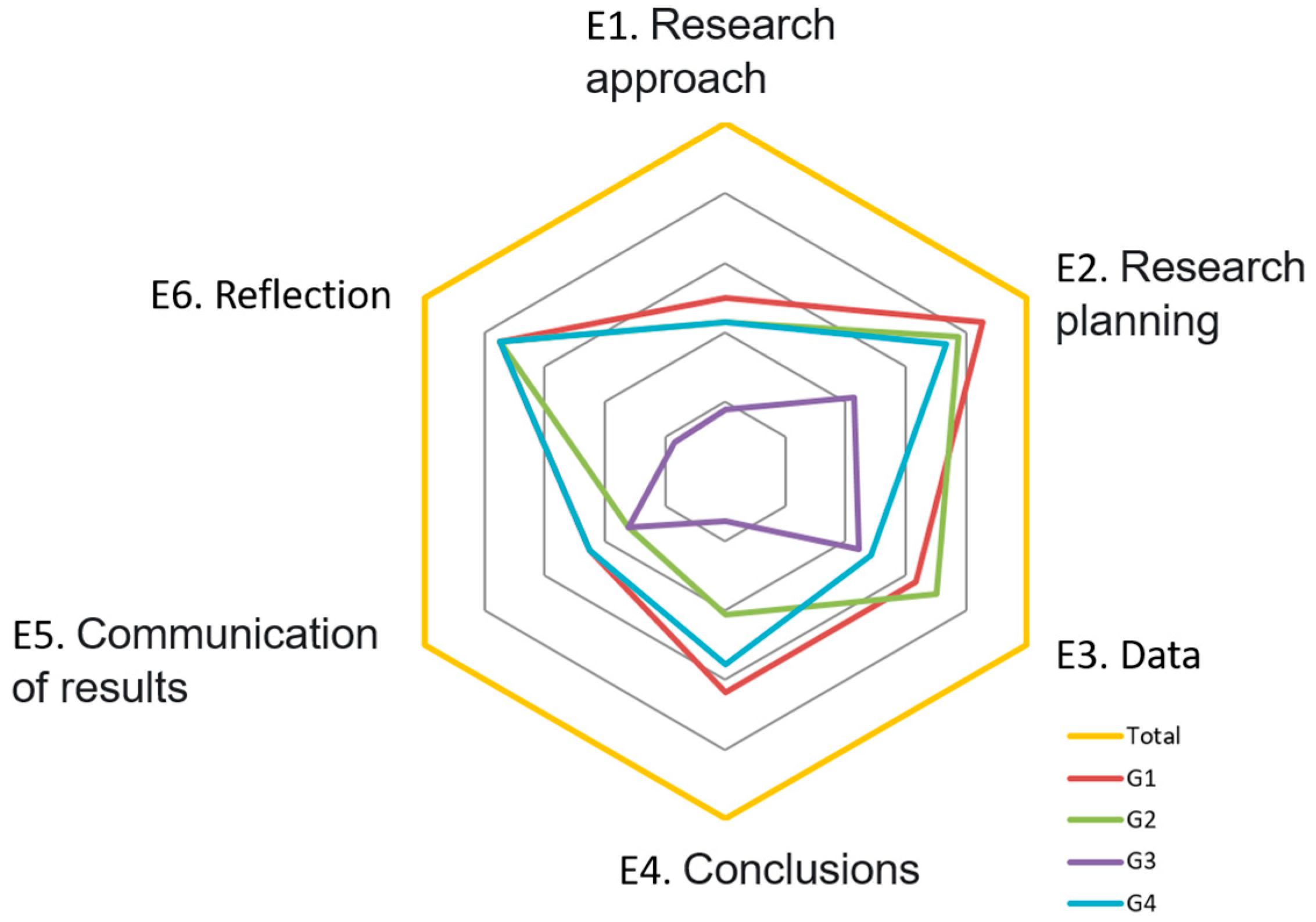

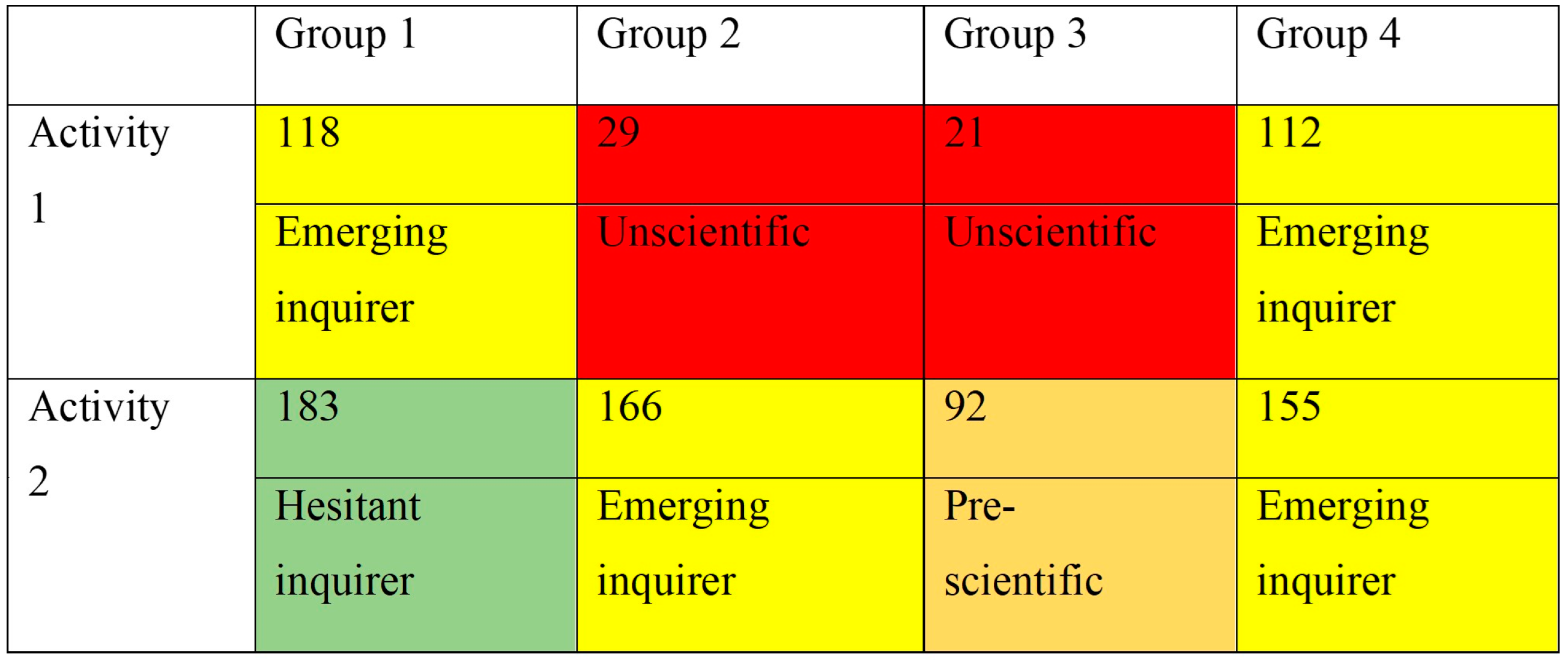

4.1. Activity 1: Choice of Weather Station

4.2. Activity 2: Choice of Weather Station Location

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Activity 1: Choosing a Weather Station. Columns CD 0 to CD 6 correspond to cognitive demand levels 0 to 6.

| Stage | Skill | Sub-skill | CD 0 | CD 1 | CD 2 | CD 3 | CD 4 | CD 5 | CD 6 | Skill total | Stage total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E1: Research approach | Identification of researchable problems | | 0 | 1 | 2 | | | | | 3 | 28 |
| | Hypothesis formulation | | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| | Information search | | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| E2: Research planning | Identification of variables | Recognize types | 0 | 1 | 2 | 3 | | | | 6 | 55 |
| | | Technological | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| | | Physics | 0 | 1 | 2 | 3 | 8 | 5 | | 19 | |
| | Research planning | Long term | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| | | Each session | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| E3: Data | Observation and data collection | | 0 | | | | | | | 0 | 0 |
| | Interpretation of results | | 0 | | | | | | | 0 | |
| E4: Conclusions | Conclusion and argument | Reference to evidence | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | 63 |
| | | Disadvantages | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | |
| | | Opposite position | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | |
| E5: Communication of results | Results presentation | Clarity | 0 | 1 | | | | | | 1 | 31 |
| | | Graphics and images | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| | | Language | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| E6: Reflection | Reflection | Science and technology | 0 | 1 | 2 | 3 | | | | 6 | 12 |
| | | Self-assessment | 0 | 1 | 2 | 3 | | | | 6 | |
| Total | | | 0 | 16 | 30 | 42 | 48 | 35 | 18 | | 189 |

Activity 2: Choosing a Weather Station Location. Columns CD 0 to CD 6 correspond to cognitive demand levels 0 to 6.

| Stage | Skill | Sub-skill | CD 0 | CD 1 | CD 2 | CD 3 | CD 4 | CD 5 | CD 6 | Skill total | Stage total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E1: Research approach | Problem identification | | 0 | 1 | 2 | | | | | 3 | 28 |
| | Hypothesis formulation | | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| | Information search | | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| E2: Research planning | Variable identification | Recognize types | 0 | 1 | 2 | | | | | 3 | 49 |
| | | Technological | 0 | 1 | 4 | | | | | 5 | |
| | | Physics | 0 | 1 | 2 | 6 | 12 | | | 21 | |
| | Research planning | Long term | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| | | Each session | 0 | 1 | 2 | 3 | 4 | | | 10 | |
| E3: Data | Observation and data collection | Functioning | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | 101 |
| | | Instruments | 0 | 1 | 2 | 3 | 4 | 12 | 6 | 28 | |
| | | Location | | 1 | 2 | 3 | 4 | 6 | 6 | 22 | |
| | Interpretation of results | About data | | 1 | 2 | 3 | 4 | 5 | 6 | 21 | |
| | | Validation | 0 | | | | 4 | 5 | 6 | 15 | |
| E4: Conclusions | Conclusion (argumentation) | Reference to evidence | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | 63 |
| | | Disadvantages | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | |
| | | Opposite position | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 21 | |
| E5: Communication of results | Results presentation | Clarity | 0 | 1 | | | | | | 1 | 31 |
| | | Graphics and images | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| | | Language | 0 | 1 | 2 | 3 | 4 | 5 | | 15 | |
| E6: Reflection | Reflection | Science and technology | 0 | 1 | 2 | 3 | | | | 6 | 12 |
| | | Self-assessment | 0 | 1 | 2 | 3 | | | | 6 | |
| Total | | | 0 | 20 | 40 | 51 | 68 | 63 | 42 | | 284 |
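The two rubric tables above aggregate their scores consistently: each skill total is the sum of the values in its cognitive demand cells, each stage total is the sum of its skills, and the per-level column totals add up to the activity total (189 for Activity 1, 284 for Activity 2). The short Python sketch below rechecks that arithmetic. It is an illustration under stated assumptions, not the authors' scoring procedure: the cell transcription, the shortened skill labels, and the helper names `row`, `up_to` and `summarize` are hypothetical, and empty table cells are encoded as `None`.

```python
from collections import defaultdict

LEVELS = 7  # cognitive demand levels 0..6, one table column each


def row(*cells):
    """Pad a row of cell values to LEVELS columns; None marks an empty cell."""
    return list(cells) + [None] * (LEVELS - len(cells))


def up_to(n):
    """Shorthand for the common pattern whose cells read 0, 1, ..., n."""
    return row(*range(n + 1))


# (stage, skill, cells) transcribed from the Activity 1 table (labels shortened).
ACTIVITY_1 = [
    ("E1", "Identification of researchable problems", row(0, 1, 2)),
    ("E1", "Hypothesis formulation", up_to(5)),
    ("E1", "Information search", up_to(4)),
    ("E2", "Variables: recognize types", up_to(3)),
    ("E2", "Variables: technological", up_to(4)),
    ("E2", "Variables: physics", row(0, 1, 2, 3, 8, 5)),
    ("E2", "Planning: long term", up_to(4)),
    ("E2", "Planning: each session", up_to(4)),
    ("E3", "Observation and data collection", row(0)),
    ("E3", "Interpretation of results", row(0)),
    ("E4", "Reference to evidence", up_to(6)),
    ("E4", "Disadvantages", up_to(6)),
    ("E4", "Opposite position", up_to(6)),
    ("E5", "Clarity", row(0, 1)),
    ("E5", "Graphics and images", up_to(5)),
    ("E5", "Language", up_to(5)),
    ("E6", "Science and technology", up_to(3)),
    ("E6", "Self-assessment", up_to(3)),
]

# (stage, skill, cells) transcribed from the Activity 2 table (labels shortened).
ACTIVITY_2 = [
    ("E1", "Problem identification", row(0, 1, 2)),
    ("E1", "Hypothesis formulation", up_to(5)),
    ("E1", "Information search", up_to(4)),
    ("E2", "Variables: recognize types", row(0, 1, 2)),
    ("E2", "Variables: technological", row(0, 1, 4)),
    ("E2", "Variables: physics", row(0, 1, 2, 6, 12)),
    ("E2", "Planning: long term", up_to(4)),
    ("E2", "Planning: each session", up_to(4)),
    ("E3", "Observation: functioning", up_to(5)),
    ("E3", "Observation: instruments", row(0, 1, 2, 3, 4, 12, 6)),
    ("E3", "Observation: location", row(None, 1, 2, 3, 4, 6, 6)),
    ("E3", "Interpretation: about data", row(None, 1, 2, 3, 4, 5, 6)),
    ("E3", "Interpretation: validation", row(0, None, None, None, 4, 5, 6)),
    ("E4", "Reference to evidence", up_to(6)),
    ("E4", "Disadvantages", up_to(6)),
    ("E4", "Opposite position", up_to(6)),
    ("E5", "Clarity", row(0, 1)),
    ("E5", "Graphics and images", up_to(5)),
    ("E5", "Language", up_to(5)),
    ("E6", "Science and technology", up_to(3)),
    ("E6", "Self-assessment", up_to(3)),
]


def summarize(rubric):
    """Sum cell values per stage and per cognitive demand level."""
    stage_totals = defaultdict(int)
    level_totals = [0] * LEVELS
    for stage, _skill, cells in rubric:
        for level, value in enumerate(cells):
            if value is not None:  # skip empty cells
                stage_totals[stage] += value
                level_totals[level] += value
    return dict(stage_totals), level_totals, sum(level_totals)


if __name__ == "__main__":
    for name, rubric in (("Activity 1", ACTIVITY_1), ("Activity 2", ACTIVITY_2)):
        stages, levels, total = summarize(rubric)
        print(f"{name}: stages={stages} levels={levels} total={total}")
```

With the cells transcribed as above, the script reproduces the tables' published stage, level and grand totals; for instance, stage E3 contributes 0 points in Activity 1 but 101 in Activity 2.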

| Presented Idea | Associated Data or Phrase |
|---|---|
| Related to Scientific Practice and Inquiry | |
| They understand the activity as buying a weather station online and then placing it in the school | “So we looked online for a station to buy and then we put it in the school.” |
| They justify the scientific component because it is conducted in the subject of Scientific Culture | “It’s a scientific project because otherwise, we wouldn’t do it in the Scientific Culture subject.” |
| They justify the scientific component because a weather station, an instrument associated with measuring atmospheric phenomena, is purchased | “It’s a meteorological project because it’s used to measure the weather.” |
| They do not see the usefulness of the weather station, although they do recognize the importance of knowing the weather | “And why do we need a weather station if, in the end, the mobile phone tells you the weather forecast?” |
| Related to Physical and Meteorological Content Linked to the Project | |
| Physical quantities | |
| They do not know the meteorological quantities measured by a weather station | “All weather stations will be more or less the same.” “It will measure heat, cold, wind, storms, lightning, and all that.” “Lightning cannot be predicted or measured.” |
| The only quantities clearly mentioned are temperature and wind | “It won’t measure heat and cold, just temperature.” |
| The weather station is not really understood as a measuring instrument but rather as a weather forecasting tool | “The station will be used to know if the weather will be good or if there will be a storm.” |
| Measurements | |
| Only the thermometer and the weather vane are named as meteorological instruments. The units of measurement are known, but not the name of the instrument that measures wind speed | “Temperature is measured with a thermometer and wind with a weather vane.” “Temperature is measured in degrees or Kelvin degrees.” “Wind speed is measured in km/h.” |
| Regarding the station’s characteristics, students mention only that it should have no errors; they do not specify how this is achieved or what must be taken into account | “It is important that the station measures accurately”… “That means the station should not have measurement errors.” |
| Location | |
| Two characteristics are mentioned: there should be no obstacles that interfere with the wind, and the station should be placed in the sun (without justification) | “It should not be placed behind a wall, for example, because then the weather vane won’t move.” “And it should also be in the sun” (the reason is not provided). |
| Related to Technological Implications and Project Aspects | |
| They believe that all weather stations are the same or very similar in characteristics | “All weather stations will be more or less the same; there won’t be a difference between the ones each group chooses.” |
| They are unaware of how the station operates (connection formats, parts, etc.) | “But the station runs on batteries.” “And we need to check it to know what it is measuring.” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pozuelo-Muñoz, J.; de Echave Sanz, A.; Cascarosa Salillas, E. Inquiring in the Science Classroom by PBL: A Design-Based Research Study. Educ. Sci. 2025, 15, 53. https://doi.org/10.3390/educsci15010053

Pozuelo-Muñoz J, de Echave Sanz A, Cascarosa Salillas E. Inquiring in the Science Classroom by PBL: A Design-Based Research Study. Education Sciences. 2025; 15(1):53. https://doi.org/10.3390/educsci15010053

Chicago/Turabian style: Pozuelo-Muñoz, Jorge, Ana de Echave Sanz, and Esther Cascarosa Salillas. 2025. "Inquiring in the Science Classroom by PBL: A Design-Based Research Study" Education Sciences 15, no. 1: 53. https://doi.org/10.3390/educsci15010053

APA style: Pozuelo-Muñoz, J., de Echave Sanz, A., & Cascarosa Salillas, E. (2025). Inquiring in the Science Classroom by PBL: A Design-Based Research Study. Education Sciences, 15(1), 53. https://doi.org/10.3390/educsci15010053