Using a Modified Gower Distance Measure to Assess Supplemental Learning Supporting an Online Social Science Graduate Course

Abstract

1. Introduction

2. SI Program Overview

3. Materials and Methods

3.1. Gower Distance Matching (GDM)
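
As a rough illustration of the idea behind Gower distance matching, the sketch below computes the standard (unmodified) Gower distance over mixed-type covariates and pairs each SI student with the nearest non-SI student. The covariate names mirror the demographic tables in this article. The records, the range normalisation, and the greedy one-to-one matching without replacement are assumptions made for illustration; this is not the authors' modified measure or their matching tool.

```python
from typing import Dict, List, Tuple

Record = Dict[str, float]

# Hypothetical covariate names, chosen to mirror the demographic tables.
NUMERIC = ("age", "sch_attempted")
CATEGORICAL = ("hispanic", "female")


def numeric_ranges(records: List[Record]) -> Dict[str, float]:
    """Range (max - min) of each numeric covariate over all records."""
    return {
        key: max(r[key] for r in records) - min(r[key] for r in records)
        for key in NUMERIC
    }


def gower_distance(a: Record, b: Record, ranges: Dict[str, float]) -> float:
    """Standard Gower distance: mean of per-variable dissimilarities in [0, 1]."""
    total = 0.0
    for key in NUMERIC:
        span = ranges[key]
        # Range-normalised absolute difference; 0 if the variable is constant.
        total += abs(a[key] - b[key]) / span if span > 0 else 0.0
    for key in CATEGORICAL:
        # Simple mismatch: 0 when the categories agree, 1 otherwise.
        total += 0.0 if a[key] == b[key] else 1.0
    return total / (len(NUMERIC) + len(CATEGORICAL))


def greedy_match(
    treated: List[Record], controls: List[Record], ranges: Dict[str, float]
) -> List[Tuple[int, int, float]]:
    """Pair each treated record with its nearest unused control (assumed scheme)."""
    available = set(range(len(controls)))
    pairs = []
    for t_idx, t in enumerate(treated):
        if not available:
            break
        best = min(
            available,
            key=lambda c: (gower_distance(t, controls[c], ranges), c),
        )
        pairs.append((t_idx, best, gower_distance(t, controls[best], ranges)))
        available.remove(best)
    return pairs


# Made-up records, for illustration only.
si_students = [
    {"age": 32, "sch_attempted": 9, "hispanic": 1, "female": 1},
    {"age": 25, "sch_attempted": 6, "hispanic": 0, "female": 0},
]
non_si_students = [
    {"age": 24, "sch_attempted": 6, "hispanic": 0, "female": 0},
    {"age": 33, "sch_attempted": 9, "hispanic": 1, "female": 1},
    {"age": 45, "sch_attempted": 3, "hispanic": 1, "female": 0},
]

ranges = numeric_ranges(si_students + non_si_students)
pairs = greedy_match(si_students, non_si_students, ranges)
for t_idx, c_idx, dist in pairs:
    print(f"SI student {t_idx} -> non-SI student {c_idx} (distance {dist:.4f})")
```

Greedy matching depends on the order in which SI students are processed; an optimal-assignment matcher would avoid that at the cost of more computation.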

3.2. Academic Variables Used to Gauge SI Efficacy

3.3. Descriptive Statistics

4. Results

5. Discussion

5.1. Instructor Effect on the Impact of Supplemental Instruction

5.2. Effect of Frequency of Attendance on the Impact of Supplemental Instruction

5.3. Study Limitations

6. Conclusions

- Post-course evaluations improved over pre-course evaluations at a level exceeding the worthwhile intervention threshold (Cohen’s d ≈ 0.47). The magnitude of this effect was consistent regardless of how many SI sessions students attended.

- For one outlier instructor, SI significantly increased the course pass rate and course grade, with a medium-to-large effect. The more SI sessions students attended, the greater the impact on these variables.

- For all other instructors, course retention and course grade increased, but not significantly; the increase grew slightly with SI attendance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bowles, T. J., McCoy, A. C., & Bates, S. C. (2008). The effect of supplemental instruction on timely graduation. College Student Journal, 42, 853–859.

- Bowman, N. S., Preschel, S., & Martinez, D. (2023). Does Supplemental Instruction improve grades and retention? A propensity score analysis approach. The Journal of Experimental Education, 91(2), 205–229.

- Burkholder, E., Salehi, S., & Wieman, C. E. (2021). Mixed results from a multiple regression analysis of supplemental instruction courses in introductory physics. PLoS ONE, 16(4), e0249086.

- Cheng, D., & Walters, M. (2009). Peer-assisted learning in mathematics: An observational study of student success. Journal of Peer Learning, 2, 23–39.

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge.

- Congos, D., & Mack, A. (2005). Supplemental instruction’s impact in two freshman chemistry classes: Research, modes of operation, and anecdotes. Research & Teaching in Developmental Education, 21(2), 43–64.

- Dawson, P., van der Meer, J., Skalicky, J., & Cowley, K. (2014). On the effectiveness of Supplemental Instruction: A systematic review of Supplemental Instruction and Peer-Assisted Study Sessions literature between 2001 and 2010. Review of Educational Research, 84(4), 609–639.

- Deom, G. (2023). Distance based matching program assessment tool [computer code]. Available online: https://github.com/iu-ia-research-analytics/distance-based-matching-program-assessment-tool/blob/main/Matching_Application.R (accessed on 14 November 2023).

- Dettmann, E., Becker, C., & Schmeißer, C. (2011). Distance functions for matching in small samples. Computational Statistics and Data Analysis, 55(5), 1942–1960.

- D’Orazio, M. (2021). Distances with mixed type variables some modified Gower’s coefficients. arXiv, arXiv:2101.02481.

- Doubleday, K. F., & Townsend, S. A. (2018). Supplemental Instruction as a resource for graduate student pedagogical development. Yearbook of the Association of Pacific Coast Geographers, 80, 134–156.

- Fayowski, V., & MacMillan, P. D. (2008). An evaluation of the supplemental instruction programme in a first year calculus course. International Journal of Mathematical Education in Science and Technology, 39, 843–855.

- Feldon, D. F., Timmerman, B. C., Stowe, K. A., & Showman, R. (2010). Translating expertise into effective instruction: The impacts of Cognitive Task Analysis (CTA) on lab report quality and student retention in the Biological Sciences. Journal of Research in Science Teaching, 47(10), 1165–1185.

- Garcia, G. A., Núñez, A.-M., & Sansone, V. A. (2019). Toward a Multidimensional Conceptual Framework for Understanding “Servingness” in Hispanic-Serving Institutions: A Synthesis of the Research. Review of Educational Research, 89(5), 745–784.

- Gasiewski, J. A., Eagan, M. K., Garcia, G. A., Hurtado, S., & Chang, M. J. (2012). From gatekeeping to engagement: A multicontextual, mixed method study of student academic engagement in introductory STEM courses. Research in Higher Education, 53(2), 229–261.

- Grillo, M. C., & Leist, C. W. (2013). Academic support as a predictor of retention to graduation: New insights on the role of tutoring, learning assistance, and supplemental instruction. Journal of College Student Retention: Research, Theory & Practice, 15(3), 387–408.

- Guarcello, M. A., Levine, R. A., Beemer, J., Frazee, J. P., Laumakis, M. A., & Schellenberg, S. A. (2017). Balancing student success: Assessing Supplemental Instruction through coarsened exact matching. Technology, Knowledge and Learning, 22, 335–352.

- Hattie, J. (2009). Visible learning: A synthesis of 800+ meta-analyses on achievement. Routledge.

- Hensen, K. A., & Shelley, M. C. (2003). The impact of supplemental instruction: Results from a large, public, Midwestern university. Journal of College Student Development, 44, 250–259.

- Ho, D. E., Imai, K., King, G., & Stuart, E. A. (2011). MatchIt: Nonparametric preprocessing for parametric causal inference. Journal of Statistical Software, 42, 8.

- Iacus, S. M., King, G., & Porro, G. (2012). Causal inference without balance checking: Coarsened exact matching. Political Analysis, 20, 1–24.

- Kalsbeek, D. H. (2013). Framing retention for institutional improvement: A 4 Ps framework. In D. H. Kalsbeek (Ed.), Reframing retention strategy for institutional improvement (pp. 5–14). New Directions for Higher Education, no. 161. Jossey-Bass.

- Koch, A. K. (2017). It’s about the gateway courses: Defining and contextualizing the issue. In A. K. Koch (Ed.), Improving teaching, learning, equity, and success in gateway courses (pp. 11–17). New Directions for Higher Education, no. 180. Jossey-Bass.

- Kochenour, E., Jolley, D., Kaup, J., Patrick, D., Roach, K., & Wenzler, L. (1997). Supplemental instruction: An effective component of student affairs programming. Journal of College Student Development, 38, 577–586.

- Mack, A. C. (2007). Differences in academic performance and self-regulated learning based on level of student participation in Supplemental Instruction [Doctoral Dissertation, University of Central Florida].

- Malm, J., Bryngfors, L., & Fredriksson, J. (2018). Impact of Supplemental Instruction on dropout and graduation rates: An example from 5-year engineering programs. Journal of Peer Learning, 11(1), 76–88.

- Martin, D. C., & Arendale, D. R. (1992). Supplemental instruction: Improving first-year student success in high-risk courses [Monograph] (Monograph Series Number 7). The National Resource Center for The Freshman Year Experience, University of South Carolina.

- Mayhew, M. J., Rockenbach, A. N., Bowman, N. A., Seifert, T. A., Wolniak, G. C., Pascarella, E. T., & Terenzini, P. T. (2016). How college affects students (Vol. 3): 21st century evidence that higher education works. Jossey-Bass.

- McCarthy, A., Smuts, B., & Cosser, M. (1997). Assessing the effectiveness of supplemental instruction: A critique and a case study. Studies in Higher Education, 22, 221–231.

- Ogden, P., Thompson, D., Russell, A., & Simons, C. (2003). Supplemental Instruction: Short and long-term impact. Journal of Developmental Education, 3(26), 2–8.

- Oja, M. (2012). Supplemental instruction improves grades but not persistence. College Student Journal, 46(2), 344–349.

- Paloyo, A., Rogan, S., & Siminski, P. (2016). The effect of supplemental instruction on academic performance: An encouragement design experiment. Economics of Education Review, 55, 57–69.

- Ramirez, M. (1997). Supplemental Instruction: Long-term impact. Journal of Developmental Education, 1(21), 2–6.

- Rath, K. A., Peterfreund, A. R., Xenos, S. P., Bayliss, F., & Carnal, N. (2007). Supplemental instruction in Introductory Biology I: Enhancing the performance and retention of underrepresented minority students. CBE Life Sciences Education, 6(3), 203–216.

- Wlodkowski, R. J., & Ginsberg, M. B. (2010). Teaching intensive and accelerated courses: Instruction that motivates learning. Jossey-Bass.

| Semester | Students Enrolled | SI Attendants | Average Course Grade | Instructor | Pre- and Post-Evaluation |
|---|---|---|---|---|---|
| Spring 2020 | 36 | 9 | 2.438 | A | No |
| Summer 2020 | 14 | 5 | 3.462 | B | No |
| Fall 2020 | 38 | 14 | 2.257 | A | No |
| Spring 2021 | 33 | 29 | 2.774 | A | No |
| Summer 2021 | 23 | 16 | 3.136 | B | Yes |
| Fall 2021 | 30 | 6 | 3.037 | C | No |
| Spring 2022 | 25 | 6 | 3.542 | C | No |
| Summer 2022 | 27 | 5 | 3.320 | D | Yes |
| Fall 2022 | 31 | 4 | 3.379 | C | Yes |
| Spring 2023 | 34 | 14 | 3.719 | E | Yes |
| Summer 2023 | 18 | 10 | 3.778 | E | Yes |

| Student Populations | N | % Hispanic | % Female | Average Age | Average SCHs Attempted |
|---|---|---|---|---|---|
| Total | 309 | 68.9 | 61.8 | 30.9 | 7.86 |
| Non-SI Students | 191 | 71.2 | 56.5 | 29.9 | 7.81 |
| SI Students | 118 | 65.3 | 70.3 | 32.5 | 7.96 |
| p-Value | | 0.2798 | 0.0135 * | 0.0214 * | 0.662 |

| Student Populations | N | % Hispanic | % Female | Average Age | Average SCHs Attempted |
|---|---|---|---|---|---|
| Total | 236 | 64.8 | 70.3 | 31.8 | 7.96 |
| Non-SI Students | 118 | 64.4 | 70.3 | 31.1 | 7.96 |
| SI Students | 118 | 65.3 | 70.3 | 32.5 | 7.96 |
| p-Value | | 0.8921 | 1 | 0.2646 | 1 |

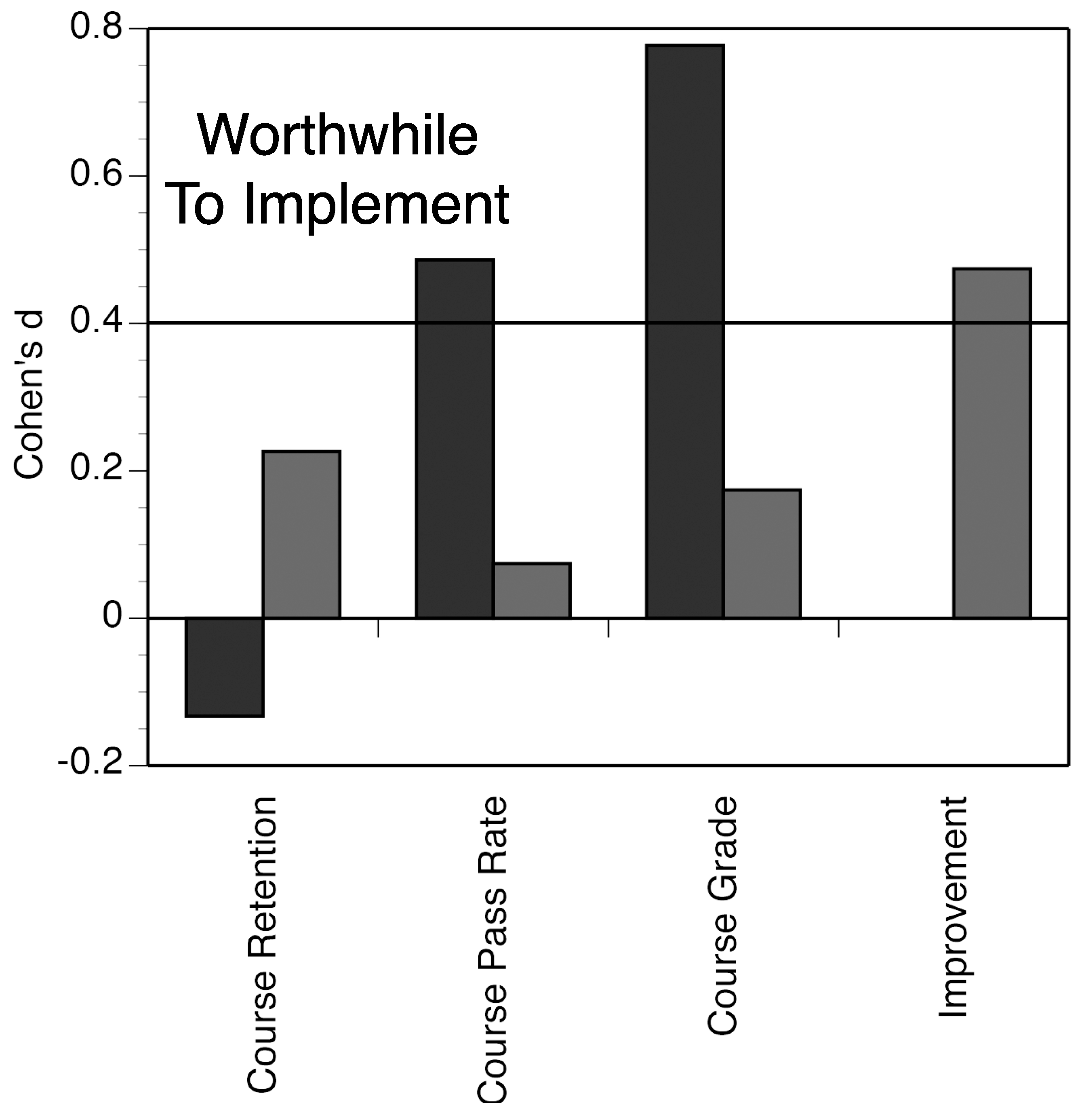

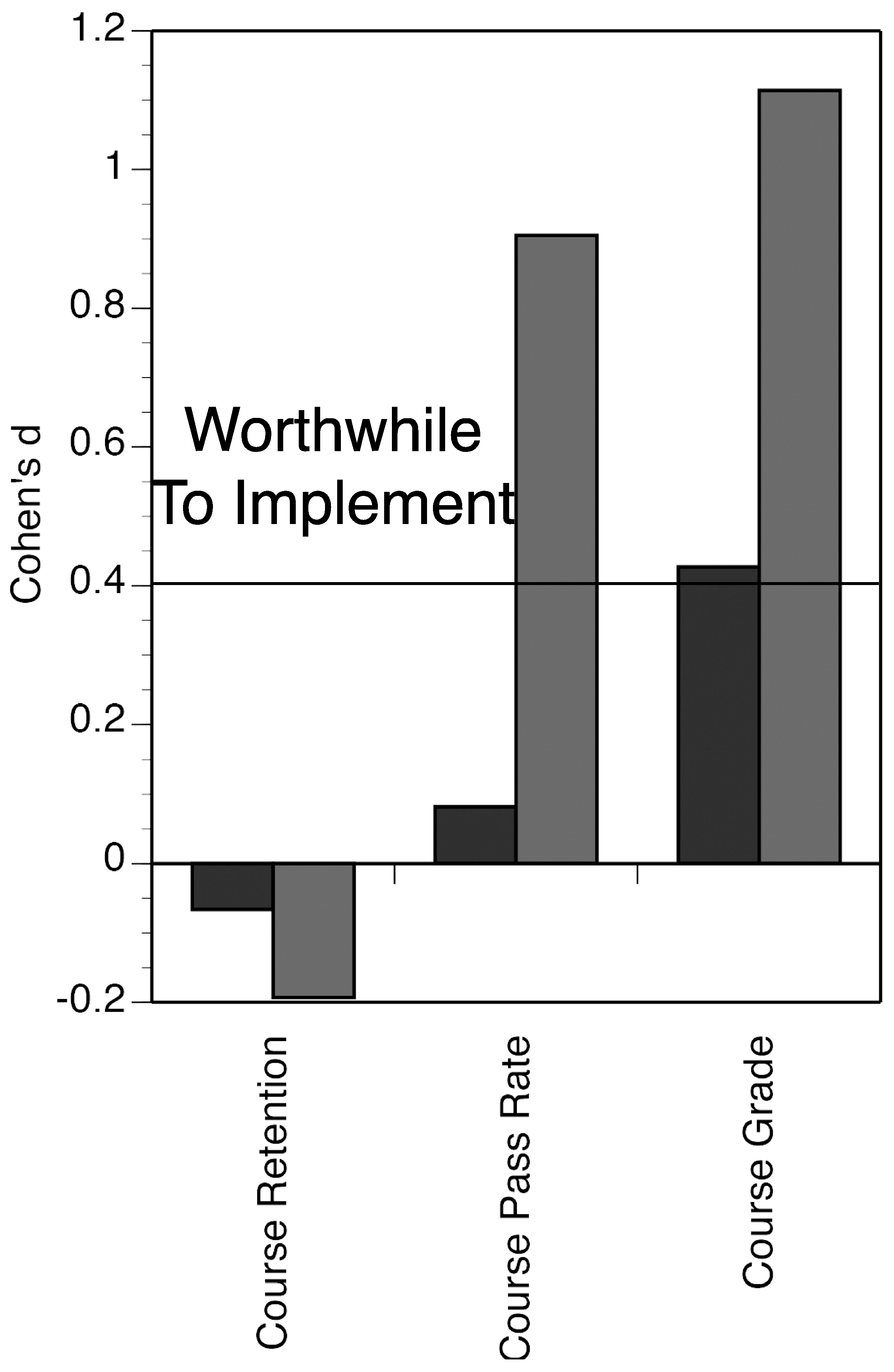

| Academic Variables | Non-SI Students | SI Students | p-Value | Cohen’s d |
|---|---|---|---|---|
| Course Retention | 0.949 (n = 118) | 0.966 (n = 118) | 0.5202 | 0.0839 |
| Course Pass Rate | 0.777 (n = 112) | 0.816 (n = 114) | 0.4690 | 0.0966 |
| Course Grade | 2.946 (n = 112) | 3.202 (n = 114) | 0.0580 | 0.2542 |
| Improvement | 0.489 (n = 47) | 0.717 (n = 46) | 0.0245 * | 0.4739 |
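
The Cohen’s d values reported for the proportion-type variables in this table can be approximated with a short calculation. The sketch below treats each outcome as a 0/1 variable and divides the difference in proportions by the pooled sample standard deviation. Two things are assumptions rather than statements from the article: that d was computed this way, and the underlying counts (112 and 114 retained students out of 118; 23 of 47 and 33 of 46 improved), which are inferred from the rounded proportions and group sizes in the table.

```python
from math import sqrt


def cohens_d_binary(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """Cohen's d for two groups with 0/1 outcomes, using the pooled sample SD.

    Group a is the comparison group (non-SI), group b the treated group (SI).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Unbiased sample variance of a 0/1 variable: n / (n - 1) * p * (1 - p).
    var_a = n_a / (n_a - 1) * p_a * (1 - p_a)
    var_b = n_b / (n_b - 1) * p_b * (1 - p_b)
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    return (p_b - p_a) / sqrt(pooled_var)


# Counts are inferred from the table (assumption), not reported directly:
# retention:   0.949 * 118 ~ 112 and 0.966 * 118 ~ 114
# improvement: 0.489 * 47 ~ 23   and 0.717 * 46 ~ 33
d_retention = cohens_d_binary(112, 118, 114, 118)
d_improvement = cohens_d_binary(23, 47, 33, 46)

print(f"Course Retention: d = {d_retention:.4f}")
print(f"Improvement:      d = {d_improvement:.4f}")
```

Under these assumptions the results should land very close to the tabulated 0.0839 and 0.4739. Course Grade is a continuous outcome, so it would need the group standard deviations, which the table does not report.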

| Academic Variables | Number of SI Sessions | Non-SI Students | SI Students | p-Value | Cohen’s d |
|---|---|---|---|---|---|
| Course Retention | 1 | 0.940 (n = 84) | 0.955 (n = 22) | 0.790 | 0.060 |
| Course Retention | 2 or more | 0.940 (n = 84) | 1.000 (n = 44) | - | - |
| Course Pass Rate | 1 | 0.937 (n = 79) | 1.000 (n = 21) | - | - |
| Course Pass Rate | 2 or more | 0.937 (n = 79) | 0.932 (n = 44) | 0.918 | −0.020 |
| Course Grade | 1 | 3.354 (n = 79) | 3.429 (n = 21) | 0.603 | 0.101 |
| Course Grade | 2 or more | 3.354 (n = 79) | 3.500 (n = 44) | 0.264 | 0.199 |
| Improvement | 1 | 0.489 (n = 47) | 0.714 (n = 14) | 0.136 | 0.452 |
| Improvement | 2 or more | 0.489 (n = 47) | 0.719 (n = 32) | 0.039 * | 0.472 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

De La Cruz Hernandez, J.; Tobin, K.J.; Kilburn, J.C.; Bennett, M.E. Using a Modified Gower Distance Measure to Assess Supplemental Learning Supporting an Online Social Science Graduate Course. Educ. Sci. 2025, 15, 371. https://doi.org/10.3390/educsci15030371
