Can Correct and Incorrect Worked Examples Supersede Worked Examples and Problem-Solving on Learning Linear Equations? An Examination from Cognitive Load and Motivation Perspectives

Abstract

1. Introduction

2. Cognitive Load and Element Interactivity

- Intrinsic cognitive load is the cognitive load imposed by the inherent complexity of learning materials (Chen et al., 2023), which is influenced by the level of element interactivity and varies with the learner’s expertise in a given domain (Sweller, 2024). Experts in a specific domain can treat multiple interacting elements as a single element, or schema, which reduces cognitive load. For instance, consider the linear equation 5x + 2(3 − x) = 12 (Appendix A). The first step in solving the equation involves expanding the bracket, resulting in 5x + 6 − 2x = 12. A learner with prior knowledge of bracket expansion can process 2(3 − x) as a single unit, thus reducing the element interactivity involved and lowering the cognitive load required to solve the equation.

- Germane cognitive load arises from the investment of cognitive resources to actively process and learn the essential aspects of the materials, which are intrinsic to the learning process (Sweller, 2010). For example, variable practice tasks can increase germane cognitive load by requiring learners to distinguish between different tasks that share a similar problem structure (Likourezos et al., 2019). As task variability increases, so does the element interactivity, and consequently, germane cognitive load. Thus, germane cognitive load does not act as an independent source of cognitive load; rather, it is considered a component of intrinsic cognitive load.

- Extraneous cognitive load is the cognitive load that does not contribute to learning and is typically caused by ineffective instructional design. It can be minimized by re-designing the instruction. For example, a problem-solving approach that requires learners to search for a solution path without guidance imposes a high level of element interactivity, which contributes to extraneous cognitive load. Replacing the problem-solving approach with worked examples can reduce extraneous cognitive load by providing learners with a clear solution path to understanding.
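As an illustration of the element interactivity discussed for intrinsic cognitive load, the equation 5x + 2(3 − x) = 12 can be worked through line by line. The derivation below is a sketch that follows the steps named in the Appendix A instruction sheet (expand the bracket, collect like terms, apply inverse operations); the intermediate lines are ordinary algebra rather than text quoted from the study materials.

```latex
\begin{align*}
5x + 2(3 - x) &= 12 \\
5x + 6 - 2x   &= 12     && \text{expand the bracket} \\
3x + 6        &= 12     && \text{collect like terms} \\
3x            &= 12 - 6 && \text{$+6$ becomes $-6$} \\
3x            &= 6 \\
x             &= 2      && \text{$\times 3$ becomes $\div 3$}
\end{align*}
```

A learner who already holds a schema for bracket expansion can treat the move from the first line to the second as a single element, whereas a novice must coordinate the multiplier, both terms inside the bracket, and the sign of each product at once.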

3. Worked Example Effect and Expertise Reversal Effect

4. Correct and Incorrect Worked Examples (CICWEs)

5. Motivation: Achieving Optimal Best

- Optimal best (L2): a person’s belief in their maximum capability to master a complex task, shaped by their learning experiences. For example, successfully learning to solve complex linear equations would influence a learner’s belief in their optimal best, or notional best functioning.

- Realistic best (L1): a person’s belief in their ability to master a simpler task, based on their learning experiences. For instance, successfully learning to solve simpler linear equations would influence a learner’s belief in their actual best, or realistic best.

6. The Target Domain: Linear Equations

- Type 1 equations: these involve only positive numbers and positive pronumerals (e.g., 2x + 3(1 + x) = 13).

- Type 2 equations: these involve positive numbers, a positive pronumeral, and a negative pronumeral (e.g., x + 2(3 − x) = 12).

- Type 3 equations: these include positive and negative numbers, as well as both positive and negative pronumerals (e.g., 4x − 2(1 − 2x) = 14) (Vlassis, 2002).
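The three example equations above share the form a·x + b(c + d·x) = e, so one short routine can solve all of them. The following Python sketch is illustrative only: the function name `solve_bracket_equation` and the coefficient encoding are assumptions made for this example, not part of the study materials. It mirrors the expand, collect, and inverse-operation steps used in the instruction sheet.

```python
from fractions import Fraction


def solve_bracket_equation(a, b, c, d, rhs):
    """Solve a*x + b*(c + d*x) = rhs for x (hypothetical helper for illustration)."""
    # Expand the bracket: a*x + b*c + b*d*x = rhs
    constant = b * c
    # Collect like terms: (a + b*d)*x + constant = rhs
    x_coeff = a + b * d
    if x_coeff == 0:
        raise ValueError("the pronumeral cancels out; no unique solution")
    # Inverse operations: move the constant across, then divide by the coefficient
    return Fraction(rhs - constant, x_coeff)


# Coefficients (a, b, c, d, rhs) for the example given with each equation type
examples = {
    "Type 1: 2x + 3(1 + x) = 13": (2, 3, 1, 1, 13),
    "Type 2: x + 2(3 - x) = 12": (1, 2, 3, -1, 12),
    "Type 3: 4x - 2(1 - 2x) = 14": (4, -2, 1, -2, 14),
}

solutions = {label: solve_bracket_equation(*args) for label, args in examples.items()}
for label, x in solutions.items():
    print(f"{label} -> x = {x}")
```

Encoding the signs in the coefficients makes the source of added difficulty visible: in Type 2 and Type 3 equations the product b·d carries a negative factor, which is the step where sign errors are most likely to occur.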

7. The Present Study

- Realistic best (i.e., realistic best subscale) would reflect a student’s belief in their ability to solve simple linear equations, which would not be a function of different instructional approaches.

- Optimal best (i.e., optimal best subscale) would reflect a student’s belief in their ability to solve complex linear equations, and this belief would be a function of different instructional approaches.

- (i) Does an appropriate instructional approach influence a student’s belief in achieving optimal achievement best?

- (ii) Does a sub-optimal instructional approach influence a student’s belief in achieving realistic achievement best?

Additionally, our inquiry examines the impact of learner expertise and instructional efficiency on learning linear equations of varying complexity.

8. Experiment 1

9. Methods

9.1. Participants

9.2. Materials

- I am content with what I have accomplished so far for the topic of solving equations.

- The practice exercise is not very effective in helping me learn how to solve equations.

- I can achieve much more for the topic of solving equations than I have indicated through my work so far.

- The practice exercise is very effective in helping me learn how to solve equations.

9.3. Procedure

9.4. Scoring

10. Results and Discussion

11. Experiment 2

12. Method

12.1. Participants

12.2. Materials, Procedure, and Scoring

13. Results and Discussion

14. General Discussion

14.1. Theoretical and Practical Contributions for Consideration

14.2. Limitations and Future Directions

15. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Instruction Sheet

Appendix A.1.1. Equations

- An equation is a number sentence in which one of the numbers is unknown and is represented by a pronumeral.

- Equations are sometimes called algebraic sentences.

Appendix A.1.2. Solving Equations

- Solving equations requires us to use inverse operations to work back to the pronumeral.

- We perform inverse operations to remove all other numbers associated with the pronumeral.

- For instance, we think of a positive number on one side of the equation as becoming a negative number when it moves to the other side of the equation.

| Step | Operation |
|---|---|
| 2x + 3(1 + x) = 13 | Expand the bracket |
| 2x + 3 + 3x = 13 | Collect like terms; + 3 becomes − 3 |
| 5x = 13 − 3 | |
| 5x = 10 | × 5 becomes ÷ 5 |
| x = 2 | |

| Step | Operation |
|---|---|
| 5x + 2(3 − x) = 12 | Expand the bracket |
| 5x + 6 − 2x = 12 | Collect like terms; + 6 becomes − 6 |
| 3x = 12 − 6 | |
| 3x = 6 | × 3 becomes ÷ 3 |
| x = 2 | |

| Step | Operation |
|---|---|
| 4x − 2(1 − 2x) = 14 | Expand the bracket |
| 4x − 2 + 4x = 14 | Collect like terms; − 2 becomes + 2 |
| 8x = 14 + 2 | |
| 8x = 16 | × 8 becomes ÷ 8 |
| x = 2 | |

Appendix B

Appendix B.1. Acquisition Equations

| CICWE approach | ||

| Correct worked example | ||

| Line 1: | ||

| Line 2: | ||

| Line 3: | ||

| Line 4: | ||

| Incorrect worked example | ||

| Line 1: | ||

| Line 2: | Can you explain why this step is incorrect? (hint: compare this step with Line 2 above) | |

| Line 3: | Explain (Write a short sentence): | |

| Line 4: | ||

| WE approach | ||

| Worked example 1 | ||

| Problem 1 | ||

| PS approach | ||

| Problem 1 | Problem 2 | |

Appendix B.2. Sample for the Pre-Test or the Post-Test

| Question 1 | Question 2 |

| Question 3 | Question 4 |

References

- Agostinho, S., Tindall-Ford, S., Ginns, P., Howard, S. J., Leahy, W., & Paas, F. (2015). Giving learning a helping hand: Finger tracing of temperature graphs on an iPad. Educational Psychology Review, 27(3), 427–443.

- Aldenderfer, M. S., & Blashfield, R. K. (1985). Cluster analysis. Sage Publications.

- Atkinson, R. K., Derry, S. J., Renkl, A., & Wortham, D. (2000). Learning from examples: Instructional principles from the worked examples research. Review of Educational Research, 70(2), 181–214.

- Ayres, P. L. (2001). Systematic mathematical errors and cognitive load. Contemporary Educational Psychology, 26(2), 227–248.

- Ayres, P. L. (2006). Using subjective measures to detect variations of intrinsic cognitive load within problems. Learning and Instruction, 16(5), 389–400.

- Ballheim, C. (1999). Readers respond to what’s basic. Mathematics Education Dialogues, 3, 11.

- Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman & Co.

- Bandura, A. (2002). Social cognitive theory in cultural context. Applied Psychology: An International Review, 51(2), 269–290.

- Barbieri, C. A., & Booth, J. L. (2016). Support for struggling students in algebra: Contributions of incorrect worked examples. Learning and Individual Differences, 48, 36–44.

- Barbieri, C. A., & Booth, J. L. (2020). Mistakes on display: Incorrect examples refine equation solving and algebraic feature knowledge. Applied Cognitive Psychology, 34(4), 862–878.

- Blayney, P., Kalyuga, S., & Sweller, J. (2016). The impact of complexity on the expertise reversal effect: Experimental evidence from testing accounting students. Educational Psychology, 36(10), 1868–1885.

- Bokosmaty, S., Sweller, J., & Kalyuga, S. (2015). Learning geometry problem solving by studying worked examples: Effects of learner guidance and expertise. American Educational Research Journal, 52(2), 307–333.

- Booth, J. L., Lange, K. E., Koedinger, K. R., & Newton, K. J. (2013). Using example problems to improve student learning in algebra: Differentiating between correct and incorrect examples. Learning and Instruction, 25, 24–34.

- Caglayan, G., & Olive, J. (2010). Eighth grade students’ representations of linear equations based on a cups and tiles model. Educational Studies in Mathematics, 74(2), 143–162.

- Cai, J., Lew, H. C., Morris, A., Moyer, J. C., Ng, S. F., & Schmittau, J. (2005). The development of students’ algebraic thinking in earlier grades: A cross-cultural comparative perspective. ZDM—The International Journal on Mathematics Education, 37, 5–15.

- Chen, O., Kalyuga, S., & Sweller, J. (2017). The expertise reversal effect is a variant of the more general element interactivity effect. Educational Psychology Review, 29(2), 393–405.

- Chen, O., Paas, F., & Sweller, J. (2023). A cognitive load theory approach to defining and measuring task complexity through element interactivity. Educational Psychology Review, 35(2), 63.

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum.

- Durkin, K., & Rittle-Johnson, B. (2012). The effectiveness of using incorrect examples to support learning about decimal magnitude. Learning and Instruction, 22(3), 206–214.

- Ericsson, K. A. (2006). The influence of experience and deliberate practice on the development of superior expert performance. In The Cambridge handbook of expertise and expert performance (pp. 683–703). Cambridge University Press.

- Evans, P., Vansteenkiste, M., Parker, P., Kingsford-Smith, A., & Zhou, S. (2024). Cognitive load theory and its relationships with motivation: A self-determination theory perspective. Educational Psychology Review, 36(1), 7.

- Fast, L. A., Lewis, J. L., Bryant, M. J., Bocian, K. A., Cardullo, R. A., Rettig, M., & Hammond, K. A. (2010). Does math self-efficacy mediate the effect of perceived classroom environment on standardized math performance? Journal of Educational Psychology, 102(3), 729–740.

- Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

- Feldon, D. F., Franco, J., Chao, J., Peugh, J., & Maahs-Fladung, C. (2018). Self-efficacy change associated with a cognitive load-based intervention in an undergraduate biology course. Learning and Instruction, 56, 64–72.

- Fraillon, J. (2004). Measuring student well-being in the context of Australian schooling: Discussion paper (E. Ministerial Council on Education, Training and Youth Affairs Ed.). The Australian Council for Research.

- Grammer, J. K., Coffman, J. L., Ornstein, P. A., & Morrison, F. J. (2013). Change over time: Conducting longitudinal studies of children’s cognitive development. Journal of Cognition and Development, 14(4), 515–528.

- Granero-Gallegos, A., Phan, H. P., & Ngu, B. H. (2023). Advancing the study of levels of best practice pre-service teacher education students from Spain: Associations with both positive and negative achievement-related experiences. PLoS ONE, 18(6), e0287916.

- Große, C. S., & Renkl, A. (2007). Finding and fixing errors in worked examples: Can this foster learning outcomes? Learning and Instruction, 17(6), 612–634.

- Grund, A., Fries, S., Nückles, M., Renkl, A., & Roelle, J. (2024). When is learning “effortful”? Scrutinizing the concept of mental effort in cognitively oriented research from a motivational perspective. Educational Psychology Review, 36(11), 11.

- Heemsoth, T., & Heinze, A. (2014). The impact of incorrect examples on learning fractions: A field experiment with 6th grade students. Instructional Science, 42(4), 639–660.

- Huang, X. (2017). Example-based learning: Effects of different types of examples on student performance, cognitive load and self-efficacy in a statistical learning task. Interactive Learning Environments, 25(3), 283–294.

- Huk, T., & Ludwigs, S. (2009). Combining cognitive and affective support in order to promote learning. Learning and Instruction, 19(6), 495–505.

- Humberstone, J., & Reeve, R. A. (2008). Profiles of algebraic competence. Learning and Instruction, 18(4), 354–367.

- Kalyuga, S., Ayres, P., Chandler, P., & Sweller, J. (2003). The expertise reversal effect. Educational Psychologist, 38(1), 23–31.

- Kalyuga, S., Chandler, P., Tuovinen, J., & Sweller, J. (2001). When problem solving is superior to studying worked examples. Journal of Educational Psychology, 93(3), 579–588.

- Kieran, C. (1992). The learning and teaching of school algebra. In D. Grouws (Ed.), Handbook of research on mathematics teaching and learning (pp. 390–419). Macmillan.

- Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). The Guilford Press.

- Krell, M., Xu, K. M., Rey, G. D., & Paas, F. (Eds.). (2022). Recent approaches for assessing cognitive load from a validity perspective. Frontiers Media SA.

- Likourezos, V., & Kalyuga, S. (2017). Instruction-first and problem-solving-first approaches: Alternative pathways to learning complex tasks. Instructional Science, 45(2), 195–219.

- Likourezos, V., Kalyuga, S., & Sweller, J. (2019). The variability effect: When instructional variability is advantageous. Educational Psychology Review, 31(2), 479–497.

- Loibl, K., Tillema, M., Rummel, N., & van Gog, T. (2020). The effect of contrasting cases during problem solving prior to and after instruction. Instructional Science, 48(2), 115–136.

- Markovits, H., Schleifer, M., & Fortier, L. (1989). Development of elementary deductive reasoning in young children. Developmental Psychology, 25(5), 787–793.

- Mascolo, M. F., College, M., & Fischer, K. W. (2010). The dynamic development of thinking, feeling and acting over the lifespan. In W. F. Overton (Ed.), Biology, cognition and methods across the life-span (Vol. 1). Wiley.

- Mayer, R. E. (1992). Thinking, problem solving, cognition (2nd ed.). W. H. Freeman and Company.

- Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81–97.

- Neroni, J., Meijs, C., Kirschner, P. A., Xu, K. M., & de Groot, R. H. M. (2022). Academic self-efficacy, self-esteem, and grit in higher online education: Consistency of interests predicts academic success. Social Psychology of Education, 25(4), 951–975.

- Ngu, B. H., & Phan, H. P. (2022). Advancing the study of solving linear equations with negative pronumerals: A smarter way from a cognitive load perspective. PLoS ONE, 17(3), e0265547.

- Ngu, B. H., Phan, H. P., Usop, H., & Hong, K. S. (2023). Instructional efficiency: The role of prior knowledge and cognitive load. Applied Cognitive Psychology, 37(6), 1223–1237.

- Ojose, B. (2008). Applying Piaget’s theory of cognitive development to mathematics instruction. The Mathematics Educator, 18(1), 26–30.

- Paas, F. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of Educational Psychology, 84(4), 429.

- Paas, F., Tuovinen, J. E., Tabbers, H., & Van Gerven, P. W. M. (2003). Cognitive load measurement as a means to advance cognitive load theory. Educational Psychologist, 38(1), 63–71.

- Pachman, M., Sweller, J., & Kalyuga, S. (2013). Levels of knowledge and deliberate practice. Journal of Experimental Psychology: Applied, 19(2), 108–119.

- Parker, M., & Leinhardt, G. (1995). Percent: A privileged proportion. Review of Educational Research, 65(4), 421–481.

- Phan, H. P., & Ngu, B. H. (2021). Introducing the concept of consonance-disconsonance of best practice: A focus on the development of ‘Student Profiling’. Frontiers in Psychology, 12, 557968.

- Phan, H. P., Ngu, B. H., Wang, H.-W., Shih, J.-H., Shi, S.-Y., & Lin, R.-Y. (2018). Understanding levels of best practice: An empirical validation. PLoS ONE, 13(6), e0198888.

- Phan, H. P., Ngu, B. H., & Williams, A. (2016). Introducing the concept of Optimal Best: Theoretical and methodological contributions. Education, 136(3), 312–322.

- Phan, H. P., Ngu, B. H., & Yeung, A. S. (2017). Achieving optimal best: Instructional efficiency and the use of cognitive load theory in mathematical problem solving. Educational Psychology Review, 29(4), 667–692.

- Phan, H. P., Ngu, B. H., & Yeung, A. S. (2019). Optimization: In-depth examination and proposition. Frontiers in Psychology, 10, 1398.

- Piaget, J. (1963). The psychology of intelligence. Littlefield Adams.

- Piaget, J. (1990). The child’s conception of the world. Littlefield Adams.

- Pillai, R. M., Loehr, A. M., Yeo, D. J., Hong, M. K., & Fazio, L. K. (2020). Are there costs to using incorrect worked examples in mathematics education? Journal of Applied Research in Memory and Cognition, 9(4), 519–531.

- Reed, S. K. (1987). A structure-mapping model for word problems. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13(1), 124–139.

- Renkl, A. (2017). Learning from worked-examples in mathematics: Students relate procedures to principles. ZDM, 49(4), 571–584.

- Renkl, A., Atkinson, R. K., Maier, U. H., & Staley, R. (2002). From example study to problem solving: Smooth transitions help learning. Journal of Experimental Education, 70(4), 293–315.

- Rey, G. D., & Buchwald, F. (2011). The expertise reversal effect: Cognitive load and motivational explanations. Journal of Experimental Psychology: Applied, 17(1), 33–48.

- Richland, L. E., & McDonough, I. M. (2010). Learning by analogy: Discriminating between potential analogs. Contemporary Educational Psychology, 35(1), 28–43.

- Rimfeld, K., Kovas, Y., Dale, P. S., & Plomin, R. (2016). True grit and genetics: Predicting academic achievement from personality. Journal of Personality and Social Psychology, 111(5), 780.

- Rittle-Johnson, B., & Star, J. R. (2007). Does comparing solution methods facilitate conceptual and procedural knowledge? An experimental study on learning to solve equations. Journal of Educational Psychology, 99(3), 561–574.

- Schmidt, W. H., McKnight, C. C., Houang, R. T., Wang, H., Wiley, D. E., Cogan, L. S., & Wolfe, R. G. (2001). Why schools matter: A cross-national comparison of curriculum and learning. Jossey-Bass.

- Schumacker, R. E., & Lomax, R. G. (2004). A beginner’s guide to structural equation modeling (2nd ed.). Lawrence Erlbaum Associates, Inc.

- Siegler, R. S. (2002). Microgenetic studies of self-explanations. In N. Granott, & J. Parziale (Eds.), Microdevelopment: Transition processes in development and learning (pp. 31–58). Cambridge University Press.

- Speece, D. L. (1994). Cluster analysis in perspective. Exceptionality, 5(1), 31–44.

- Stacey, K., & MacGregor, M. (1999). Learning the algebraic method of solving problems. The Journal of Mathematical Behavior, 18(2), 149–167.

- Stevenson, H. W., Lee, S.-y., Chen, C., Lummis, M., Stigler, J., Fan, L., & Ge, F. (1990). Mathematics achievement of children in China and the United States. Child Development, 61(4), 1053.

- Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educational Psychology Review, 22(2), 123–138.

- Sweller, J. (2012). Human cognitive architecture: Why some instructional procedures work and others do not. In K. Harris, S. Graham, & T. Urdan (Eds.), APA educational psychology handbook (Vol. 1, pp. 295–325). American Psychological Association.

- Sweller, J. (2024). Cognitive load theory and individual differences. Learning and Individual Differences, 110, 102423.

- Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive load theory. Springer.

- Sweller, J., van Merriënboer, J. J. G., & Paas, F. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296.

- Sweller, J., van Merriënboer, J. J. G., & Paas, F. (2019). Cognitive architecture and instructional design: 20 years later. Educational Psychology Review, 31(2), 261–292.

- Tan, C. Y. (2024). Socioeconomic status and student learning: Insights from an umbrella review. Educational Psychology Review, 36(4), 100.

- van Gog, T., Kester, L., & Paas, F. (2011). Effects of worked examples, example-problem, and problem-example pairs on novices’ learning. Contemporary Educational Psychology, 36(3), 212–218.

- van Gog, T., Rummel, N., & Renkl, A. (2019). Learning how to solve problems by studying examples. In The Cambridge handbook of cognition and education (pp. 183–208). Cambridge University Press.

- van Peppen, L. M., Verkoeijen, P. P. J. L., Heijltjes, A. E. G., Janssen, E., & van Gog, T. (2021). Enhancing students’ critical thinking skills: Is comparing correct and erroneous examples beneficial? Instructional Science, 49(6), 747–777.

- Vicki, S., Kaye, S., & Beth, P. (2022). Beyond accuracy: A process for analysis of constructed responses in large datasets and insights into students’ equation solving. Journal of Educational Research in Mathematics [수학교육학연구], 32(3), 201–228.

- Vlassis, J. (2002). The balance model: Hindrance or support for the solving of linear equations with one unknown. Educational Studies in Mathematics, 49(3), 341–359.

- Yap, J. B. K., & Wong, S. S. H. (2024). Deliberately making and correcting errors in mathematical problem-solving practice improves procedural transfer to more complex problems. Journal of Educational Psychology, 116(7), 1112–1128.

- Yu-Kang, T., & Law, G. (2009). Re-examining the associations between family backgrounds and children’s cognitive developments in early ages. Early Child Development and Care, 99999(1), 10.

| Instructional Approach | Type of Cognitive Load |
|---|---|
| Correct and incorrect worked examples (CICWEs). The incorrect example typically has one or more steps that differ from the correct example. The incorrect example encourages a learner to examine why a particular solution step of the incorrect example is wrong. | Comparing a pair of CICWEs simultaneously requires learners to invest germane cognitive load. Studying a pair of CICWEs simultaneously imposes about twice the level of element interactivity (i.e., intrinsic cognitive load) compared to studying worked examples sequentially. |
| Worked examples (WEs). The solution procedure of a WE encompasses principle knowledge and its application, thus scaffolding the development of schemas. | Studying WEs imposes a low level of element interactivity and thus low cognitive load because it directs learners’ attention to explicit solution steps, helping them to develop solution schemas. |
| Problem-solving (PS). | Searching for a solution path to solve practice problems imposes a high level of element interactivity, which contributes to extraneous cognitive load and thus interferes with schema acquisition. |

| | China Sample | Malaysia Sample |
|---|---|---|
| Mean age | 10.00 (SD = 0.26) | 14.07 (SD = 0.21) |
| Grade level | Year 4 | Form 1 students (equivalent to Year 8 students in Australia) |
| Gender | 24 girls, 23 boys | 38 girls, 30 boys |
| Ethnicity | Hokkian | Chinese (35%), Malay (45%), Indigenous Malaysian (30%) |
| Language of instruction | Mandarin | English for mathematics and science subjects |
| Mathematics curriculum | Mathematics curriculum standards | National curriculum for mathematics education |
| The topic of linear equations | Covered in elementary mathematics curriculum | Covered in junior secondary mathematics curriculum |
| Location of the school | Urban area | Rural area |
| Type of school | Public school | Public school |
| Prior knowledge | Basic knowledge of solving two-step linear equations | Basic knowledge of solving multi-step linear equations |

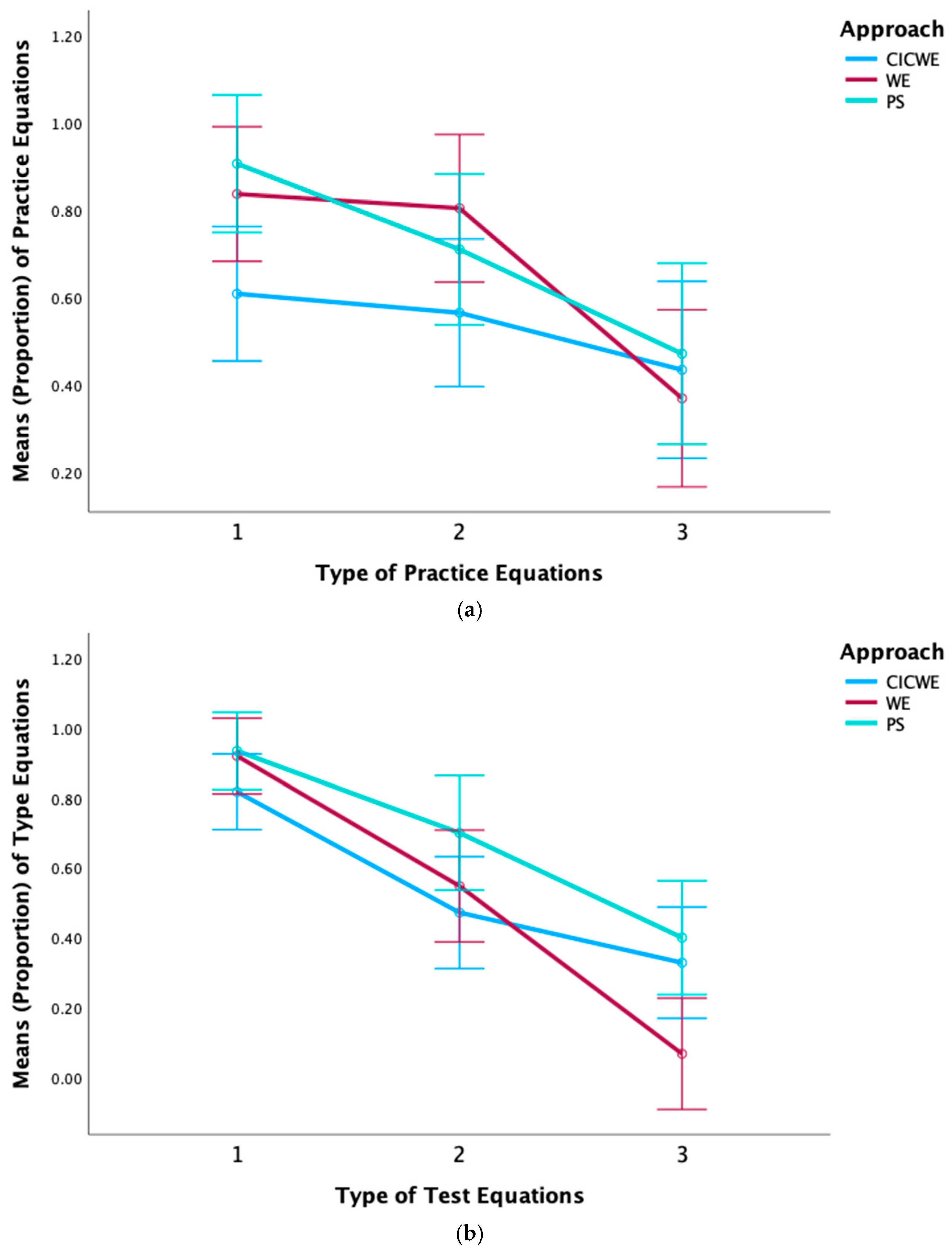

| | CICWE | | WE | | PS | |
|---|---|---|---|---|---|---|
| | M | (SD) | M | (SD) | M | (SD) |
| Experiment 1 | n = 14 | | n = 15 | | n = 14 | |
| Pre-test (proportion) | 0.18 | 0.15 | 0.21 | 0.17 | 0.20 | 0.25 |
| Practice equations (proportion) | | | | | | |
| Type 1 | 0.82 | 0.37 | 0.90 | 0.28 | 0.63 | 0.42 |
| Type 2 | 0.50 | 0.48 | 0.83 | 0.31 | 0.35 | 0.45 |
| Type 3 | 0.23 | 0.29 | 0.42 | 0.46 | 0.18 | 0.37 |
| Post-test (proportion) | | | | | | |
| Type 1 | 0.64 | 0.45 | 0.85 | 0.31 | 0.71 | 0.44 |
| Type 2 | 0.06 | 0.12 | 0.09 | 0.17 | 0.10 | 0.16 |
| Type 3 | 0.00 | 0.00 | 0.20 | 0.41 | 0.21 | 0.38 |
| Realistic best subscale | 2.53 | 0.51 | 2.34 | 0.51 | 2.72 | 0.44 |
| Optimal best subscale | 3.34 | 0.68 | 3.62 | 0.62 | 3.65 | 0.44 |
| Experiment 2 | n = 23 | | n = 23 | | n = 22 | |
| Pre-test (proportion) | 0.31 | 0.27 | 0.26 | 0.31 | 0.37 | 0.33 |
| Practice equations (proportion) | | | | | | |
| Type 1 | 0.61 | 0.45 | 0.84 | 0.37 | 0.91 | 0.25 |
| Type 2 | 0.57 | 0.46 | 0.80 | 0.35 | 0.71 | 0.40 |
| Type 3 | 0.43 | 0.48 | 0.37 | 0.48 | 0.47 | 0.49 |
| Post-test (proportion) | | | | | | |
| Type 1 | 0.82 | 0.31 | 0.92 | 0.24 | 0.93 | 0.22 |
| Type 2 | 0.47 | 0.39 | 0.55 | 0.38 | 0.70 | 0.38 |
| Type 3 | 0.33 | 0.41 | 0.07 | 0.22 | 0.40 | 0.48 |
| Mental effort | 5.17 | 1.61 | 4.52 | 1.86 | 4.86 | 2.19 |
| Realistic best subscale | 3.22 | 0.49 | 3.19 | 0.49 | 3.26 | 0.33 |
| Optimal best subscale | 3.54 | 0.45 | 3.79 | 0.55 | 3.90 | 0.40 |
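To help read the size of a group difference in the descriptive statistics, a standardized mean difference can be computed from the reported means, standard deviations, and group sizes. The sketch below uses the pooled-SD form of Cohen's d (Cohen, 1988) on the Experiment 2 Type 3 post-test scores for the PS and WE groups. It is an illustration only: the function name `cohens_d` is an assumption, and the calculation is not presented as the analysis the authors ran.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d using the pooled standard deviation of two independent groups."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


# Experiment 2, post-test Type 3 (values copied from the table above):
# PS: M = 0.40, SD = 0.48, n = 22; WE: M = 0.07, SD = 0.22, n = 23
d_type3 = cohens_d(0.40, 0.48, 22, 0.07, 0.22, 23)
print(f"Cohen's d (PS vs. WE, Type 3 post-test): {d_type3:.2f}")
```

Because the proportions are bounded between 0 and 1 and several groups sit at floor or ceiling, a value computed this way is best treated as a rough descriptive index rather than a substitute for the inferential tests.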

(a)

| Instructional Approach | Measure | Mean | Median | Minimum | Maximum |
|---|---|---|---|---|---|
| CICWE | Pre-test | 0.18 | 0.17 | 0.00 | 0.38 |
| | Practice equations | | | | |
| | Type 1 | 0.82 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.50 | 0.50 | 0.00 | 1.00 |
| | Type 3 | 0.23 | 0.00 | 0.00 | 0.75 |
| | Post-test | | | | |
| | Type 1 | 0.64 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.06 | 0.00 | 0.00 | 0.35 |
| | Type 3 | 0.00 | 0.00 | 0.00 | 0.00 |
| | Realistic best subscale | 2.53 | 2.50 | 1.58 | 3.67 |
| | Optimal best subscale | 3.34 | 3.17 | 2.33 | 4.67 |
| WE | Pre-test | 0.21 | 0.22 | 0.00 | 0.43 |
| | Practice equations | | | | |
| | Type 1 | 0.90 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.83 | 1.00 | 0.00 | 1.00 |
| | Type 3 | 0.42 | 0.25 | 0.00 | 1.00 |
| | Post-test | | | | |
| | Type 1 | 0.85 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.09 | 0.05 | 0.00 | 0.65 |
| | Type 3 | 0.20 | 0.00 | 0.00 | 1.00 |
| | Realistic best subscale | 2.34 | 2.17 | 1.42 | 3.17 |
| | Optimal best subscale | 3.62 | 3.67 | 2.42 | 4.92 |
| PS | Pre-test | 0.20 | 0.06 | 0.00 | 0.75 |
| | Practice equations | | | | |
| | Type 1 | 0.63 | 0.81 | 0.00 | 1.00 |
| | Type 2 | 0.35 | 0.09 | 0.00 | 1.00 |
| | Type 3 | 0.18 | 0.00 | 0.00 | 1.00 |
| | Post-test | | | | |
| | Type 1 | 0.71 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.10 | 0.00 | 0.00 | 0.50 |
| | Type 3 | 0.21 | 0.00 | 0.00 | 1.00 |
| | Realistic best subscale | 2.72 | 2.71 | 2.33 | 3.92 |
| | Optimal best subscale | 3.65 | 3.63 | 3.00 | 4.25 |

(b)

| Instructional Approach | Measure | Mean | Median | Minimum | Maximum |
|---|---|---|---|---|---|
| CICWE | Pre-test | 0.31 | 0.33 | 0.00 | 0.78 |
| | Practice equations | | | | |
| | Type 1 | 0.61 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.57 | 0.50 | 0.00 | 1.00 |
| | Type 3 | 0.43 | 0.00 | 0.00 | 1.00 |
| | Post-test | | | | |
| | Type 1 | 0.82 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.47 | 0.25 | 0.00 | 1.00 |
| | Type 3 | 0.33 | 0.20 | 0.00 | 1.00 |
| | Mental effort | 5.17 | 5.00 | 3.00 | 9.00 |
| | Realistic best subscale | 3.22 | 3.08 | 2.42 | 5.00 |
| | Optimal best subscale | 3.54 | 3.42 | 3.00 | 5.00 |
| WE | Pre-test | 0.26 | 0.10 | 0.00 | 1.00 |
| | Practice equations | | | | |
| | Type 1 | 0.84 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.80 | 1.00 | 0.00 | 1.00 |
| | Type 3 | 0.37 | 0.00 | 0.00 | 1.00 |
| | Post-test | | | | |
| | Type 1 | 0.92 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.55 | 0.55 | 0.00 | 1.00 |
| | Type 3 | 0.07 | 0.00 | 0.00 | 1.00 |
| | Mental effort | 4.52 | 5.00 | 1.00 | 9.00 |
| | Realistic best subscale | 3.19 | 3.33 | 2.33 | 4.25 |
| | Optimal best subscale | 3.80 | 3.83 | 2.58 | 4.67 |
| PS | Pre-test | 0.37 | 0.35 | 0.00 | 1.00 |
| | Practice equations | | | | |
| | Type 1 | 0.91 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.71 | 1.00 | 0.00 | 1.00 |
| | Type 3 | 0.47 | 0.22 | 0.00 | 1.00 |
| | Post-test | | | | |
| | Type 1 | 0.93 | 1.00 | 0.00 | 1.00 |
| | Type 2 | 0.70 | 0.93 | 0.00 | 1.00 |
| | Type 3 | 0.40 | 0.00 | 0.00 | 1.00 |
| | Mental effort | 4.86 | 4.50 | 1.00 | 9.00 |
| | Realistic best subscale | 3.26 | 3.25 | 2.58 | 3.92 |
| | Optimal best subscale | 3.90 | 3.92 | 3.25 | 4.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ngu, B.H.; Chen, O.; Phan, H.P.; Usop, H.; Anding, P.N. Can Correct and Incorrect Worked Examples Supersede Worked Examples and Problem-Solving on Learning Linear Equations? An Examination from Cognitive Load and Motivation Perspectives. Educ. Sci. 2025, 15, 504. https://doi.org/10.3390/educsci15040504
