Evaluating the Potential of Generative Artificial Intelligence to Innovate Feedback Processes

Abstract

1. Introduction

1.1. Feedback in Education

- Feed up: Explain what is expected in the activity and how it relates to the learning objectives.

- Feed back: Analyze the students’ work and indicate what is good and what needs improvement.

- Feed forward: Suggest concrete actions to improve future submissions or the understanding of the topic.

1.2. GenAI and Its Use in Education and Feedback

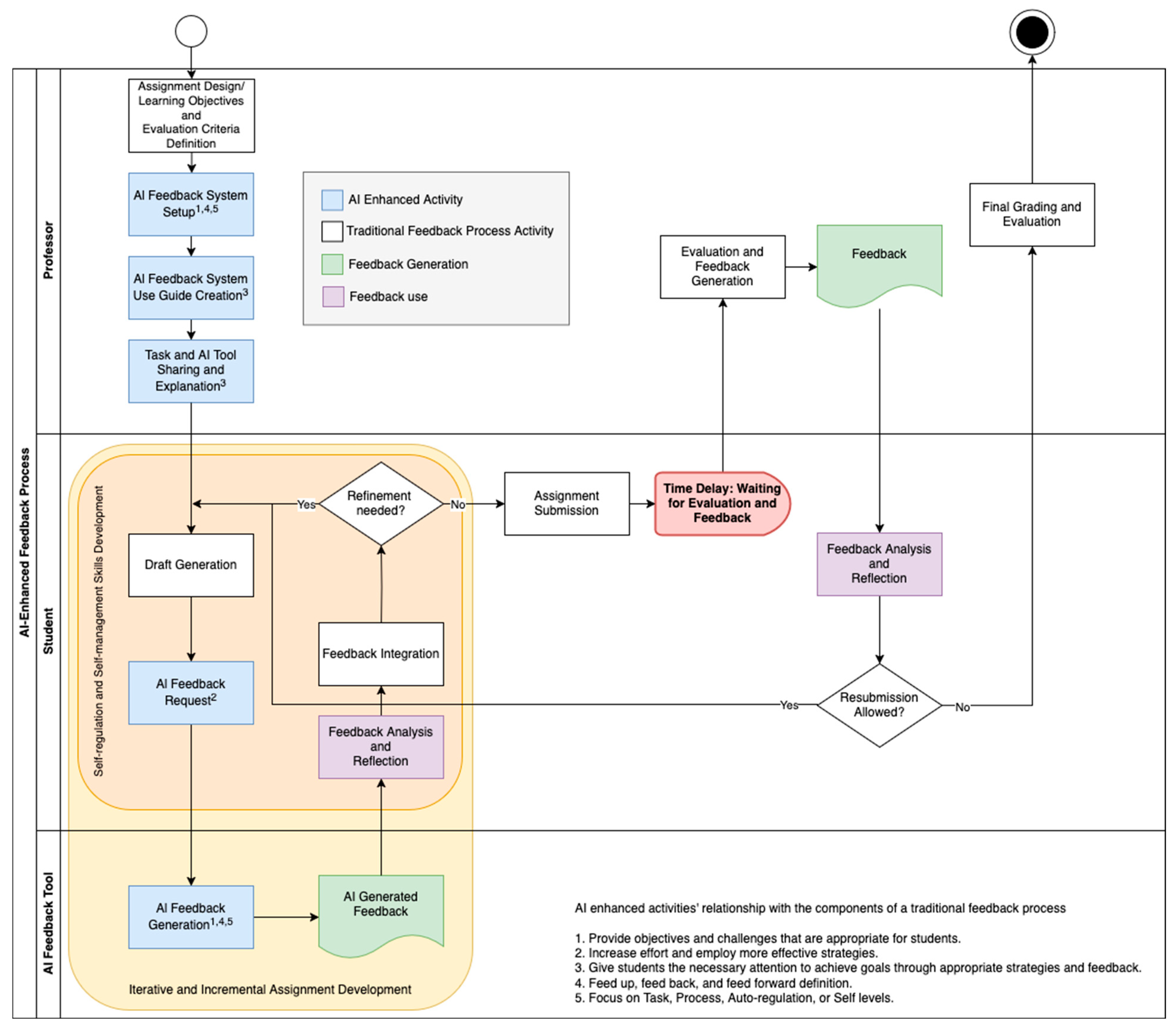

2. The Use of GenAI to Enhance the Feedback Process: A Proposed Methodology

- Intention and Context. This section describes the persona that the GenAI tool will adopt. The persona can be specified with the Persona Pattern for prompt engineering (White et al., 2023), using instructions such as “Act as a person who is an expert on topic x.” This section also presents the characteristics of the students. It is suggested to indicate the name of the course (to specify the domain in which the tool will be deployed) and the level of study (to indicate the depth of the responses). Other elements can be added to refine this context. The objective of these definitions is to give the AI tool an initial context.

- Task Description and Instructions. The activity instructions designed in the previous steps are provided here. Because very long prompts can confuse the tool, a precise summary of the instructions is recommended. The deliverables that students must produce should also be stated.

- Learning Objectives. This section describes the objectives to be achieved in the activity, which keeps the tool’s answers focused on what students are expected to achieve.

- Evaluation Criteria. The evaluation of the activity is based on previously defined criteria; rubrics or checklists are recommended to delimit the expected results. Clear criteria are essential to ensure that feedback is understandable and useful for students (Wesolowski, 2020; Brookhart, 2020). Evaluation, however, remains the exclusive responsibility of the professors. AI cannot replace the ethical and expert judgment of professors, who have the full context of each student’s performance, and only professors can take emotional and motivational factors into account in the final evaluation (Burner et al., 2025). Moreover, the tool cannot directly observe students’ actions or assess the development of attitudinal or behavioral components. This section therefore serves only as a reference for the tool.

- AI Behavior and Expectations. This section defines the type of interaction the tool must have with the students and what is and is not allowed within that interaction. For example, professors can instruct the tool not to provide a direct solution to the task. Patterns such as Question Refinement or Cognitive Verifier (White et al., 2023) can also be applied to make the tool’s answers more precise. An introductory message for the tool can also be defined.

- Feedback Format. A structured format is established to ensure that the tool’s answers are clear, organized, and aligned with the pedagogical principles of effective feedback. In this section of the prompt, professors can define elements for the three feedback components described by Hattie and Timperley (2007): feed up, feed back, and feed forward. The Context Manager pattern (White et al., 2023) can be applied here to maintain a fixed response structure and avoid redundant or disorganized information. Students’ perception of feedback influences their motivation and commitment, so structuring feedback in a balanced way is essential to maintain their confidence in the learning process (Mayordomo et al., 2022; Van Boekel et al., 2023). Therefore, the format should balance positive aspects with areas for improvement. This structure makes the feedback immediate, understandable, and actionable, and it helps avoid endless loops in the conversation.

- Additional Guidelines. Other elements can be added to fine-tune the tool’s interactions; for example, the tool can be instructed to use friendly and pleasant language throughout the conversation.
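The seven prompt components above can be assembled programmatically so that every course reuses the same structure. The following Python sketch is illustrative only: the `FeedbackPrompt` class, the `follows_format` helper, the Markdown-style headings, and the example strings are hypothetical and are not the prompt used in this study. It joins the components in the order listed, drops empty ones to keep the prompt short, and checks whether a reply follows the feed up, feed back, and feed forward structure.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FeedbackPrompt:
    """Hypothetical container for the seven prompt components described above."""

    intention_and_context: str
    task_description: str
    learning_objectives: list[str]
    evaluation_criteria: list[str]
    ai_behavior: list[str]
    feedback_format: list[str] = field(
        default_factory=lambda: ["Feed up", "Feed back", "Feed forward"]
    )
    additional_guidelines: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the components, in order, into a single system prompt."""

        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items)

        sections = [
            ("Intention and Context", self.intention_and_context),
            ("Task Description and Instructions", self.task_description),
            ("Learning Objectives", bullets(self.learning_objectives)),
            # Criteria are only a reference for the tool; grading stays with the professor.
            ("Evaluation Criteria (reference only)", bullets(self.evaluation_criteria)),
            ("AI Behavior and Expectations", bullets(self.ai_behavior)),
            (
                "Feedback Format",
                "Always answer with these headings, in this order:\n"
                + bullets(self.feedback_format),
            ),
            ("Additional Guidelines", bullets(self.additional_guidelines)),
        ]
        # Empty components are skipped so the prompt stays as short as possible.
        return "\n\n".join(
            f"## {title}\n{body}" for title, body in sections if body.strip()
        )


def follows_format(reply: str, headings: list[str]) -> bool:
    """Return True if every required heading appears in the reply, in order."""
    position = 0
    for heading in headings:
        index = reply.find(heading, position)
        if index == -1:
            return False
        position = index + len(heading)
    return True


if __name__ == "__main__":
    prompt = FeedbackPrompt(
        intention_and_context=(
            "Act as an expert in discrete mathematics. Students are undergraduates "
            "in programs related to computer science."
        ),
        task_description="Students write a report that solves a real-world problem.",
        learning_objectives=["Link the report explicitly to the course concepts"],
        evaluation_criteria=["The report is clearly organized"],
        ai_behavior=["Do not provide a direct solution to the task"],
        additional_guidelines=["Use friendly and pleasant language"],
    )
    print(prompt.render())
```

A check like `follows_format` could flag replies that drift from the agreed structure before a professor reviews them; it only verifies that the headings are present and in order, not the quality of the feedback itself.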

3. Hypothesis

4. Materials and Methods

- One group of a Discrete Mathematics course. This required course for undergraduate programs related to computer science was taught by a team of two professors in a virtual format. The group was selected as a focus group (n = 17). The methodology was implemented in a challenge that lasted 5 weeks (the duration of the course), in which students had to solve a real-world problem. The AI tool was configured to help students write a report by giving feedback on the organization of the document and on how to strengthen its links to the course concepts.

- Five groups of an Architecture course. This required course belongs to the Architecture undergraduate program, and all five groups were taught face-to-face by the same professor. Two groups were randomly designated as control groups (n = 27) and three as focus groups (n = 69). Students wrote a prompt to generate an image intended to inspire others to commit to fighting global warming and climate change by learning to design zero-carbon buildings. The activity lasted 4 weeks.

- One group of a Biomimicry course. This is an elective course open to any undergraduate program in the institution, and the group was taught by a team of two professors in a virtual format. Because of the high enrollment (n = 150) and because the course included students from different campuses of the institution, it was delivered as a single group, with no possibility of splitting students into separate control and focus groups. Instead, all students completed two separate learning activities. The first activity, treated as the control group implementation, was completed without any AI tool and covered a different topic, but it was equivalent to the second activity in scope, difficulty, and grading weight. The second activity, treated as the focus group implementation, included access to a customized AI tool that provided formative feedback. This approach ensured equitable access to the AI tool for all students, given the group’s diverse composition and large size. In the focus group activity, students analyzed a project certified under Leadership in Energy and Environmental Design (LEED), a globally recognized certification system for sustainable buildings developed by the U.S. Green Building Council. The AI tool was set up to support students in developing a written analysis and an infographic by providing feedback on structure, clarity, and coherence. It also helped them strengthen their sustainability analysis and improvement proposals, promoting a more robust and informed approach. Students had almost 4 weeks to complete the activity.

- Instrument validation. Experts were consulted, and the content validity ratio (CVR; Lawshe, 1975) was computed for each candidate item to decide which items to keep.

- A statistical analysis of the pretest results compared the focus and control groups’ perceptions of the feedback received, to determine whether the groups differed in their feedback experiences in previous courses.

- The difference in perception of the feedback received between the focus and control groups in the post-test results was analyzed with an ordinal logistic regression to test the hypothesis of this work.

- Students who decided not to participate in this research were not asked to answer the surveys, and their data were not included.

5. Results

5.1. Instrument Validation

- Would you recommend the use of the AI tool to your colleagues or friends? Answers: Yes, No, or I do not know.

- In general, how satisfied are you with the feedback you received for the activity?

- Did you use the AI tool to receive feedback during the activity?

- Did the feedback you received in this activity from the AI tool make you realize your areas of opportunity or improvement?

- Did you use the feedback the AI tool gave you to improve the delivery of your activity?

- Would you ask the AI tool for feedback again?

- Would you recommend the use of the AI tool to your colleagues and/or friends?

“In general, and in your experience, what is the average level of satisfaction you have with the feedback you have received on previous courses?”

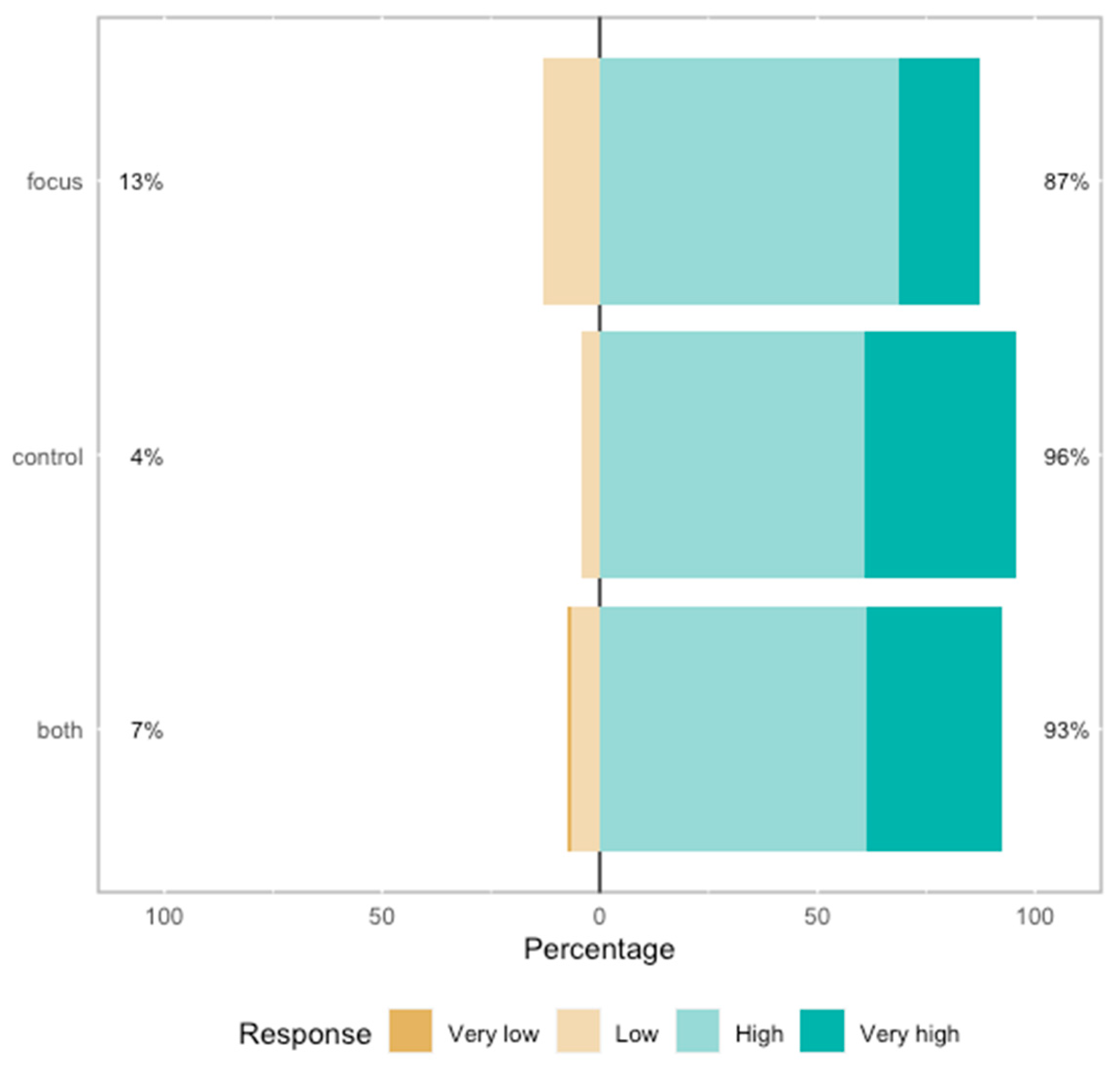

5.2. Statistical Analysis of the Difference in Perception of the Feedback Received on Previous Experiences (Pretest Results)

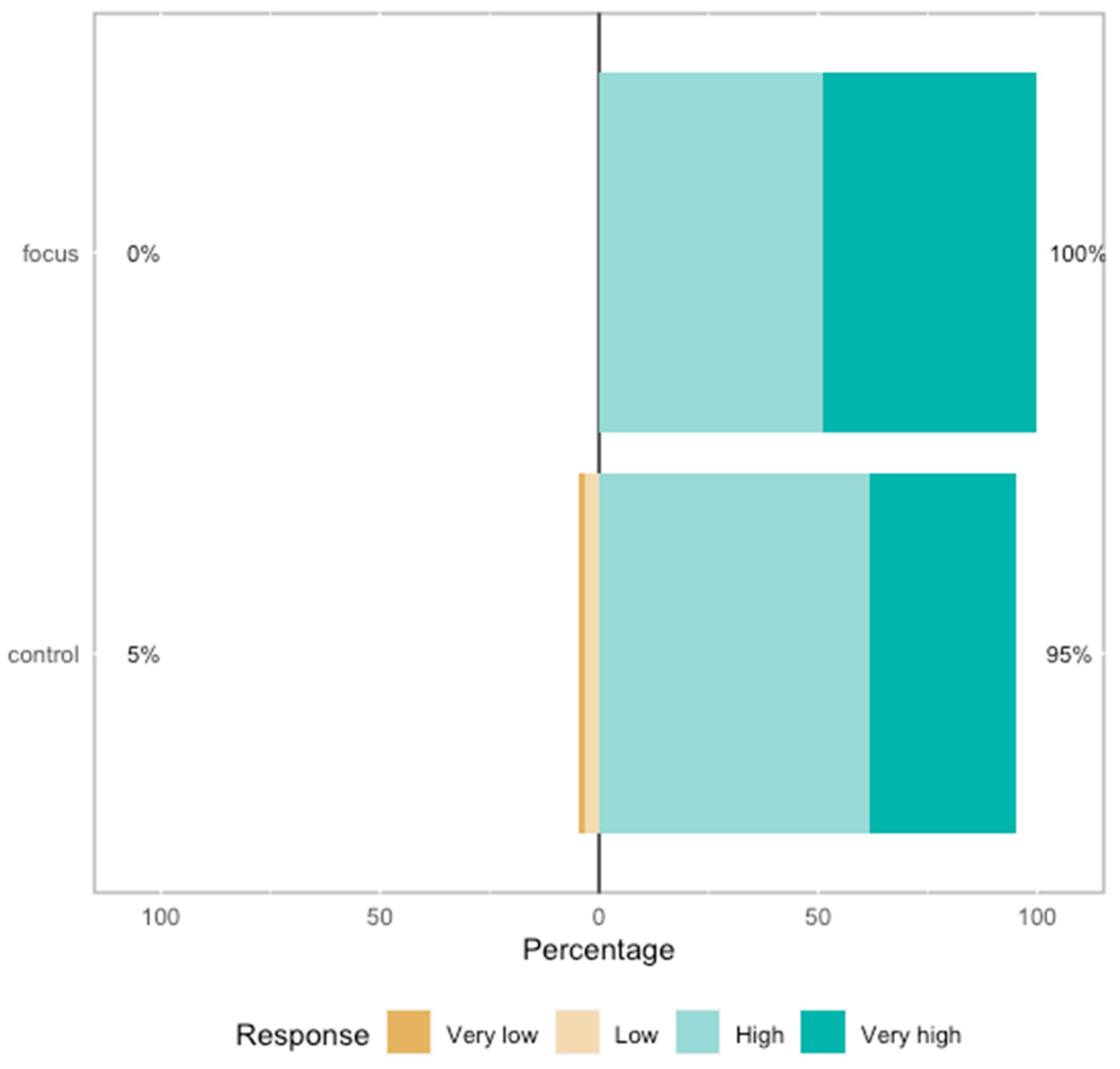

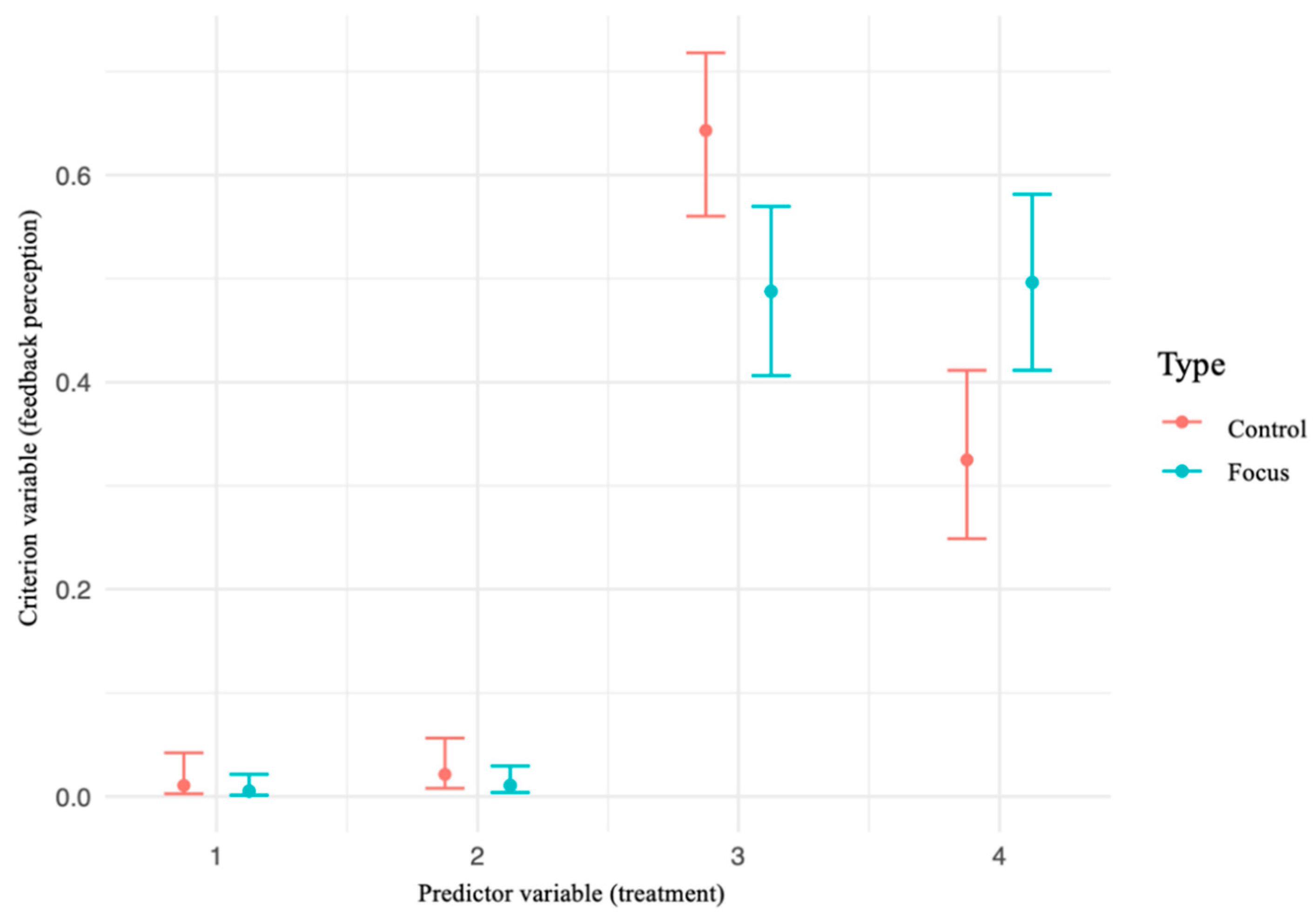
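This excerpt does not name the test used for the pretest comparison. Because the responses are ordinal (1 to 4) and the reference list includes the Kruskal-Wallis test (Ostertagova et al., 2014), the Python sketch below shows one plausible option: a tie-corrected Kruskal-Wallis H statistic for three groups, such as the focus, control, and both-treatments columns of the pretest summary table. It is a standard-library illustration under that assumption, not the authors’ analysis. The p-value uses the chi-square approximation, which has the closed form exp(-H/2) when there are exactly three groups (2 degrees of freedom).

```python
from __future__ import annotations

import math
from collections import Counter


def average_ranks(values: list[float]) -> dict[float, float]:
    """Map each distinct value to its average rank (tied values share a mid-rank)."""
    ranks: dict[float, float] = {}
    next_rank = 1
    for value, count in sorted(Counter(values).items()):
        ranks[value] = next_rank + (count - 1) / 2
        next_rank += count
    return ranks


def kruskal_wallis(*groups: list[float]) -> tuple[float, float | None]:
    """Return the tie-corrected Kruskal-Wallis H and, for three groups, its p-value."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    # Sum over groups of (rank sum)^2 / group size.
    rank_term = sum(sum(ranks[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Tie correction; ties are frequent with 4-point Likert-type answers.
    correction = 1 - sum(t**3 - t for t in Counter(pooled).values()) / (n**3 - n)
    if correction == 0:
        raise ValueError("all observations are tied; H is undefined")
    h /= correction
    # The chi-square survival function with 2 degrees of freedom is exp(-x / 2).
    p_value = math.exp(-h / 2) if len(groups) == 3 else None
    return h, p_value
```

In practice, each argument would be the list of coded pretest answers for one group; no study data are reproduced here. If SciPy is available, `scipy.stats.kruskal` computes the same tie-corrected statistic with a p-value for any number of groups.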

5.3. Analysis of the Difference in Perception of the Feedback Received When Solving the Learning Activities Comparing Focus and Control Groups (Post-Test Results)

5.4. Opinion of Students That Used the GenAI Tool

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| ANOVA | Analysis of variance |
| CVR | Content validity ratio |
| GenAI | Generative artificial intelligence |

References

- Abdelghani, R., Sauzéon, H., & Oudeyer, P. Y. (2023). Generative AI in the classroom: Can students remain active learners? arXiv, arXiv:2310.03192.

- Afzaal, M., Nouri, J., Zia, A., Papapetrou, P., Fors, U., Wu, Y., Li, X., & Weegar, R. (2021). Explainable AI for data-driven feedback and intelligent action recommendations to support students’ self-regulation. Frontiers in Artificial Intelligence, 4, 723447.

- Aguayo-Hernández, C. H., Sánchez Guerrero, A., & Vázquez-Villegas, P. (2024). The learning assessment process in higher education: A grounded theory approach. Education Sciences, 14(9), 984.

- Albdrani, R. N., & Al-Shargabi, A. A. (2023). Investigating the effectiveness of ChatGPT for providing personalized learning experience: A case study. International Journal of Advanced Computer Science & Applications, 14(11).

- Allen, T. J., & Mizumoto, A. (2024). ChatGPT over my friends: Japanese English-as-a-Foreign-Language learners’ preferences for editing and proofreading strategies. RELC Journal, 00336882241262533.

- Al Murshidi, G., Shulgina, G., Kapuza, A., & Costley, J. (2024). How understanding the limitations and risks of using ChatGPT can contribute to willingness to use. Smart Learning Environments, 11(1), 36.

- Angulo Valdearenas, M. J., Clarisó, R., Domènech Coll, M., Garcia-Brustenga, G., Gómez Cardosa, D., & Mas Garcia, X. (2024). Com incorporar la IA en les activitats d’aprenentatge. Repositori Institucional (O2) Universitat Oberta de Catalunya. Available online: http://hdl.handle.net/10609/151242 (accessed on 12 January 2025).

- Bewersdorff, A., Hartmann, C., Hornberger, M., Seßler, K., Bannert, M., Kasneci, E., Kasneci, G., Zhai, X., & Nerdel, C. (2025). Taking the next step with generative artificial intelligence: The transformative role of multimodal large language models in science education. Learning and Individual Differences, 118, 102601.

- Boud, D. (2015). Feedback: Ensuring that it leads to enhanced learning. The Clinical Teacher, 12(1), 3–7.

- Boud, D., & Molloy, E. (2013). Rethinking models of feedback for learning: The challenge of design. Assessment & Evaluation in Higher Education, 38(6), 698–712.

- Boud, D., & Soler, R. (2016). Sustainable assessment revisited. Assessment & Evaluation in Higher Education, 41(3), 400–413.

- Brookhart, S. M. (2020). Feedback and measurement. In Classroom assessment and educational measurement (p. 63). Routledge.

- Brown, S. (2005). Assessment for learning. Learning and Teaching in Higher Education, (1), 81–89.

- Burner, T., Lindvig, Y., & Wærness, J. I. (2025). “We Should Not Be Like a Dinosaur”—Using AI Technologies to Provide Formative Feedback to Students. Education Sciences, 15(1), 58.

- Campos, M. (2025). AI-assisted feedback in CLIL courses as a self-regulated language learning mechanism: Students’ perceptions and experiences. European Public & Social Innovation Review, 10, 1–14.

- Carless, D. (2013). Sustainable feedback and the development of student self-evaluative capacities. In Reconceptualising feedback in higher education (pp. 113–122). Routledge.

- Carless, D., & Winstone, N. (2023). Teacher feedback literacy and its interplay with student feedback literacy. Teaching in Higher Education, 28(1), 150–163.

- Chang, C. Y., Chen, I. H., & Tang, K. Y. (2024). Roles and research trends of ChatGPT-based learning. Educational Technology & Society, 27(4), 471–486.

- Chu, H. C., Lu, Y. C., & Tu, Y. F. (2025). How GenAI-supported multi-modal presentations benefit students with different motivation levels. Educational Technology & Society, 28(1), 250–269.

- Cordero, J., Torres-Zambrano, J., & Cordero-Castillo, A. (2024). Integration of Generative Artificial Intelligence in Higher Education: Best Practices. Education Sciences, 15(1), 32.

- Dai, W., Tsai, Y. S., Lin, J., Aldino, A., Jin, H., Li, T., Gašević, D., & Chen, G. (2024). Assessing the proficiency of large language models in automatic feedback generation: An evaluation study. Computers and Education: Artificial Intelligence, 7, 100299.

- Falconí, C. A. R., Figueroa, I. J. G., Farinango, E. V. G., & Dávila, C. N. M. (2024). Estrategias para fomentar la autonomía del estudiante en la educación universitaria: Promoviendo el aprendizaje autorregulado y la autodirección académica. Reincisol, 3(5), 691–704.

- Guo, K., & Wang, D. (2024). To resist it or to embrace it? Examining ChatGPT’s potential to support teacher feedback in EFL writing. Education and Information Technologies, 29(7), 8435–8463.

- Güner, H., Er, E., Akçapinar, G., & Khalil, M. (2024). From chalkboards to AI-powered learning. Educational Technology & Society, 27(2), 386–404.

- Hagendorff, T. (2024). Mapping the ethics of generative AI: A comprehensive scoping review. Minds and Machines, 34(4), 39.

- Hair Junior, J. F., Hult, G. T. M., Ringle, C. M., & Sarstedt, M. (2014). A primer on partial least squares structural equation modeling (PLS-SEM). SAGE Publications, Inc.

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

- Hounsell, D. (2007). Towards more sustainable feedback to students. In Rethinking assessment in higher education (pp. 111–123). Routledge.

- Huesca, G., Martínez-Treviño, Y., Molina-Espinosa, J. M., Sanromán-Calleros, A. R., Martínez-Román, R., Cendejas-Castro, E. A., & Bustos, R. (2024). Effectiveness of using ChatGPT as a tool to strengthen benefits of the flipped learning strategy. Education Sciences, 14(6), 660.

- Hutson, J., Fulcher, B., & Ratican, J. (2024). Enhancing assessment and feedback in game design programs: Leveraging generative AI for efficient and meaningful evaluation. International Journal of Educational Research and Innovation, 1–20.

- Jiménez, A. F. (2024). Integration of AI helping teachers in traditional teaching roles. European Public & Social Innovation Review, 9, 1–17.

- Khahro, S. H., & Javed, Y. (2022). Key challenges in 21st century learning: A way forward towards sustainable higher educational institutions. Sustainability, 14(23), 16080.

- Kohnke, L. (2024). Exploring EAP students’ perceptions of GenAI and traditional grammar-checking tools for language learning. Computers and Education: Artificial Intelligence, 7, 100279.

- Korseberg, L., & Elken, M. (2024). Waiting for the revolution: How higher education institutions initially responded to ChatGPT. Higher Education, 1–16.

- Larasati, A., DeYong, C., & Slevitch, L. (2011). Comparing neural network and ordinal logistic regression to analyze attitude responses. Service Science, 3(4), 304–312.

- Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28(4), 567.

- Lin, S., & Crosthwaite, P. (2024). The grass is not always greener: Teacher vs. GPT-assisted written corrective feedback. System, 127, 103529.

- Mayordomo, R. M., Espasa, A., Guasch, T., & Martínez-Melo, M. (2022). Perception of online feedback and its impact on cognitive and emotional engagement with feedback. Education and Information Technologies, 27(6), 7947–7971.

- Márquez, A. M. B., & Martínez, E. R. (2024). Retroalimentación formativa con inteligencia artificial generativa: Un caso de estudio. Wímb lu, 19(2), 1–20.

- Mendiola, M. S., & González, A. M. (2020). Evaluación del y para el aprendizaje: Instrumentos y estrategias. Imagia Comunicación.

- Moreno Olivos, T. (2016). Evaluación del aprendizaje y para el aprendizaje: Reinventar la evaluación en el aula. Universidad Autónoma Metropolitana.

- Naz, I., & Robertson, R. (2024). Exploring the feasibility and efficacy of ChatGPT3 for personalized feedback in teaching. Electronic Journal of e-Learning, 22(2), 98–111.

- Ngo, T. T. A. (2023). The perception by university students of the use of ChatGPT in education. International Journal of Emerging Technologies in Learning, 18(17), 4.

- Ostertagova, E., Ostertag, O., & Kováč, J. (2014). Methodology and application of the Kruskal-Wallis test. Applied Mechanics and Materials, 611, 115–120.

- Panadero, E., Andrade, H., & Brookhart, S. (2018). Fusing self-regulated learning and formative assessment: A roadmap of where we are, how we got here, and where we are going. The Australian Educational Researcher, 45, 13–31.

- Pozdniakov, S., Brazil, J., Abdi, S., Bakharia, A., Sadiq, S., Gašević, D., Denny, P., & Khosravi, H. (2024). Large language models meet user interfaces: The case of provisioning feedback. Computers and Education: Artificial Intelligence, 7, 100289.

- Schmidt-Fajlik, R. (2023). ChatGPT as a grammar checker for Japanese English language learners: A comparison with Grammarly and ProWritingAid. AsiaCALL Online Journal, 14(1), 105–119.

- Sheehan, T., Riley, P., Farrell, G., Mahmood, S., Calhoun, K., & Thayer, T.-L. (2024, December 3). Predicts 2024: Education automation, adaptability and acceleration. Gartner. Available online: https://www.gartner.com/en/documents/5004931 (accessed on 12 January 2025).

- Stamper, J., Xiao, R., & Hou, X. (2024, July 8–12). Enhancing LLM-based feedback: Insights from intelligent tutoring systems and the learning sciences. International Conference on Artificial Intelligence in Education (pp. 32–43), Recife, Brazil.

- Stiggins, R. (2005). From formative assessment to assessment for learning: A path to success in standards-based schools. Phi Delta Kappan, 87(4), 324–328.

- Stobart, G. (2018). Becoming proficient: An alternative perspective on the role of feedback. In A. A. Lipnevich, & J. K. Smith (Eds.), The Cambridge handbook of instructional feedback (pp. 29–51). Cambridge University Press.

- Sun, D., Boudouaia, A., Zhu, C., & Li, Y. (2024). Would ChatGPT-facilitated programming mode impact college students’ programming behaviors, performances, and perceptions? An empirical study. International Journal of Educational Technology in Higher Education, 21(1), 14.

- Sung, G., Guillain, L., & Schneider, B. (2025). Using AI to Care: Lessons Learned from Leveraging Generative AI for Personalized Affective-Motivational Feedback. International Journal of Artificial Intelligence in Education, 1–40.

- Teng, M. F. (2024). “ChatGPT is the companion, not enemies”: EFL learners’ perceptions and experiences in using ChatGPT for feedback in writing. Computers and Education: Artificial Intelligence, 7, 100270.

- Teng, M. F. (2025). Metacognitive Awareness and EFL Learners’ Perceptions and Experiences in Utilising ChatGPT for Writing Feedback. European Journal of Education, 60(1), e12811.

- Tossell, C. C., Tenhundfeld, N. L., Momen, A., Cooley, K., & de Visser, E. J. (2024). Student perceptions of ChatGPT use in a college essay assignment: Implications for learning, grading, and trust in artificial intelligence. IEEE Transactions on Learning Technologies, 17, 1069–1081.

- Tran, T. M., Bakajic, M., & Pullman, M. (2024). Teacher’s pet or rebel? Practitioners’ perspectives on the impacts of ChatGPT on course design. Higher Education.

- UNESCO. (2023). ChatGPT e inteligencia artificial en la educación superior. La Organización de las Naciones Unidas para la Educación, la Ciencia y la Cultura. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000385146_spa (accessed on 21 November 2024).

- Van Boekel, M., Hufnagle, A. S., Weisen, S., & Troy, A. (2023). The feedback I want versus the feedback I need: Investigating students’ perceptions of feedback. Psychology in the Schools, 60(9), 3389–3402.

- Wang, H., Dang, A., Wu, Z., & Mac, S. (2024). Generative AI in higher education: Seeing ChatGPT through universities’ policies, resources, and guidelines. Computers and Education: Artificial Intelligence, 7, 100326.

- Wang, Y., Rodríguez de Gil, P., Chen, Y. H., Kromrey, J. D., Kim, E. S., Pham, T., Nguyen, D., & Romano, J. L. (2017). Comparing the performance of approaches for testing the homogeneity of variance assumption in one-factor ANOVA models. Educational and Psychological Measurement, 77(2), 305–329.

- Wang, Z., Yin, Z., Zheng, Y., Li, X., & Zhang, L. (2025). Will graduate students engage in unethical uses of GPT? An exploratory study to understand their perceptions. Educational Technology & Society, 28(1), 286–300.

- Wecks, J. O., Voshaar, J., Plate, B. J., & Zimmermann, J. (2024). Generative AI usage and academic performance. Available online: https://ssrn.com/abstract=4812513 (accessed on 4 January 2025).

- Wesolowski, B. C. (2020). “Classroometrics”: The validity, reliability, and fairness of classroom music assessments. Music Educators Journal, 106(3), 29–37.

- White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert, H., Elnashar, A., Spencer-Smith, J., & Schmidt, D. C. (2023). A prompt pattern catalog to enhance prompt engineering with ChatGPT. arXiv, arXiv:2302.11382.

- Wiliam, D., Lee, C., Harrison, C., & Black, P. (2004). Teachers developing assessment for learning: Impact on student achievement. Assessment in Education: Principles, Policy & Practice, 11(1), 49–65.

- Wilson, F. R., Pan, W., & Schumsky, D. A. (2012). Recalculation of the critical values for Lawshe’s content validity ratio. Measurement and Evaluation in Counseling and Development, 45(3), 197–210.

- Xu, J., & Liu, Q. (2025). Uncurtaining windows of motivation, enjoyment, critical thinking, and autonomy in AI-integrated education: Duolingo Vs. ChatGPT. Learning and Motivation, 89, 102100.

- Zhou, X., Zhang, J., & Chan, C. (2024). Unveiling students’ experiences and perceptions of Artificial Intelligence usage in higher education. Journal of University Teaching and Learning Practice, 21(6), 126–145.

- Zhou, Y., Zhang, M., Jiang, Y. H., Gao, X., Liu, N., & Jiang, B. (2025). A Study on Educational Data Analysis and Personalized Feedback Report Generation Based on Tags and ChatGPT. arXiv, arXiv:2501.06819.

| Course | Candidate Students | Control Group Usable Data | Focus Group Usable Data |
|---|---|---|---|
| Discrete Mathematics | 17 | 0 | 16 |
| Architecture | 96 | 20 | 51 |
| Biomimicry | 150 | 105 | 60 |
| Total | 263 | 125 | 127 |

| # | Question | Type of Answer | Essential | Useful, But Not Essential | Not Necessary | Content Validity Ratio | Decision |
|---|---|---|---|---|---|---|---|
| 1 | What is your level of satisfaction with the feedback you received for the activity? | Very low, Low, High, Very high | 20 | 2 | 0 | 0.82 | Keep and modify the wording |
| 2 | Do you think you had a better feedback experience in this activity than in previous courses or activities? | Yes, No | 14 | 7 | 1 | 0.27 | Remove |
| 3 | On average, how many times did you request feedback from any entity or person during the activity? | Numeric | 11 | 8 | 3 | 0 | Remove |
| 4 | Order the following entities by how often you turned to them for feedback or to resolve questions during the activity. The first option is the one you used the most, and the last one is the one you used the least. | Order a list of items | 11 | 11 | 0 | 0 | Remove |
| 5 | During the activity, did you turn to other entities to receive feedback or resolve questions? If yes, write down the entities in the following space, separated by commas. | Yes (text), No | 8 | 10 | 4 | −0.27 | Remove |
| 6 | Did you use the AI tool to receive feedback during the activity? | Yes, No | 20 | 2 | 0 | 0.82 | Keep |
| 7 | Was the feedback you received in the activity from the AI tool useful in improving your performance? | Yes, No | 21 | 1 | 0 | 0.91 | Remove. Although the item met the validity criterion, experts noted that it repeats the idea of item 8. |
| 8 | Did the feedback you received in this activity from the AI tool make you realize your areas of opportunity or improvement? | Not at all useful, Not very useful, Useful, Very useful | 21 | 1 | 0 | 0.91 | Keep |
| 9 | Did you use the feedback the AI tool gave you to improve the delivery of your activity? | Yes, No | 20 | 2 | 0 | 0.82 | Keep |
| 10 | Would you ask the AI tool for feedback again? | Never, Sometimes, Frequently, Always | 19 | 3 | 0 | 0.73 | Keep |
| 11 | Indicate three characteristics that you valued or liked about the feedback and/or the use of the AI tool. | Text | 11 | 11 | 0 | 0 | Remove |
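The Content Validity Ratio column above follows Lawshe’s (1975) formula, CVR = (n_e − N/2)/(N/2), where n_e is the number of experts rating an item as essential and N is the panel size. Each row’s three counts sum to 22, which implies a panel of 22 experts. The short Python sketch below recomputes the column from those counts. It only reproduces the ratio: the critical value for accepting an item depends on N (Lawshe, 1975; Wilson et al., 2012) and is not assumed here, and the final decisions also relied on expert judgment (item 7 was removed as redundant despite a high CVR).

```python
from __future__ import annotations


def content_validity_ratio(essential: int, panel_size: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1 (none) to +1 (all essential)."""
    half = panel_size / 2
    return (essential - half) / half


# Expert counts per item, taken from the table above:
# (essential, useful but not essential, not necessary).
RATINGS = {
    1: (20, 2, 0), 2: (14, 7, 1), 3: (11, 8, 3), 4: (11, 11, 0),
    5: (8, 10, 4), 6: (20, 2, 0), 7: (21, 1, 0), 8: (21, 1, 0),
    9: (20, 2, 0), 10: (19, 3, 0), 11: (11, 11, 0),
}

if __name__ == "__main__":
    for item, counts in RATINGS.items():
        cvr = content_validity_ratio(counts[0], sum(counts))  # sum(counts) is 22 for every item
        print(f"Item {item:2d}: CVR = {cvr:+.2f}")
```

Rounded to two decimals, the recomputed values match the table (for example, 20 of 22 experts gives 9/11 ≈ 0.82, and 11 of 22 gives exactly 0).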

| Value | Focus Group | Control Group | Both Treatments |
|---|---|---|---|
| N | 70 | 23 | 108 |
| Min. | 2.00 | 2.00 | 1.00 |
| 1st quartile | 3.00 | 3.00 | 3.00 |
| Median | 3.00 | 3.00 | 3.00 |
| Mean | 3.06 | 3.30 | 3.23 |
| 3rd quartile | 3.00 | 4.00 | 4.00 |
| Max. | 4.00 | 4.00 | 4.00 |
| Standard deviation | 0.56 | 0.56 | 0.61 |

| Value | Focus Group | Control Group |
|---|---|---|
| N | 127 | 125 |
| Min. | 3.00 | 1.00 |
| 1st quartile | 3.00 | 3.00 |
| Median | 3.00 | 3.00 |
| Mean | 3.49 | 3.27 |
| 3rd quartile | 4.00 | 4.00 |
| Max. | 4.00 | 4.00 |
| Standard deviation | 0.50 | 0.60 |

| Threshold | Estimate | Std. Error | z Value |
|---|---|---|---|
| 1\|2 | −4.5273 | 0.7168 | −6.316 |
| 2\|3 | −3.4069 | 0.4253 | −8.010 |
| 3\|4 | 0.7314 | 0.1905 | 3.839 |
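The thresholds above can be turned into predicted probabilities for each answer category. The Python sketch below assumes the proportional-odds parameterization logit P(Y ≤ j) = ζ_j − η used by R’s `MASS::polr`, which this excerpt does not confirm, and evaluates it at η = 0, the reference group. The coefficient for the focus/control predictor is not reported in this excerpt, so the focus-group probabilities cannot be reproduced here; the function names are illustrative.

```python
from __future__ import annotations

import math

# Thresholds (zeta) from the table above for the cut points 1|2, 2|3, and 3|4.
THRESHOLDS = [-4.5273, -3.4069, 0.7314]


def logistic(x: float) -> float:
    return 1 / (1 + math.exp(-x))


def category_probabilities(thresholds: list[float], eta: float = 0.0) -> list[float]:
    """Category probabilities under a proportional-odds (ordinal logistic) model.

    Assumes logit P(Y <= j) = zeta_j - eta; eta = 0 is the reference group.
    """
    cumulative = [logistic(z - eta) for z in thresholds] + [1.0]
    # P(Y = j) is the difference between consecutive cumulative probabilities.
    return [cumulative[0]] + [b - a for a, b in zip(cumulative, cumulative[1:])]


if __name__ == "__main__":
    probs = category_probabilities(THRESHOLDS)
    for level, p in enumerate(probs, start=1):
        print(f"P(Y = {level}) = {p:.3f}")
    expected = sum(level * p for level, p in enumerate(probs, start=1))
    print(f"Expected rating: {expected:.2f}")
```

With η = 0 the expected rating is about 3.28, close to the control group’s post-test mean of 3.27. This is consistent with the control group being the reference category, although the excerpt does not state it explicitly. A positive η would shift probability toward the higher satisfaction levels.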

| Reference | Strategy | Number of Students | Application Context | Discipline | Type of Study | Results |
|---|---|---|---|---|---|---|
| This work | ChatGPT as an extension tool for the feedback process | 263 | Undergraduate students | Discrete Mathematics, Architecture, Biomimicry | Qualitative and quantitative. Pretest–post-test. Control and focus groups. | There is a significant difference in perceptions between students who used GenAI and those who did not. A total of 97% of students would recommend ChatGPT. |
| Tossell et al. (2024) | ChatGPT for an essay creation activity | 24 | Air Force Academy | Design | Quantitative. Pretest–post-test. | No significant difference in the perceived value of the tool. |
| Schmidt-Fajlik (2023) | ChatGPT for writing | 69 | University | Japanese writing | Qualitative | Students highly recommend ChatGPT because its feedback is detailed and its explanations are easy to understand. |
| Kohnke (2024) | GenAI for writing | 14 | University | English for academic purposes | Qualitative | The in-depth explanations of GenAI tools help students better understand the feedback. |
| Sun et al. (2024) | GenAI for programming | 82 | University | Programming for Educational Technology majors | Quantitative. Control and focus groups. | Positive student acceptance of ChatGPT, with no significant difference in learning gains. |
| Albdrani and Al-Shargabi (2023) | ChatGPT for an Internet of Things course | 20 | University | Computer Sciences | Qualitative and quantitative. Control and focus groups. | Positive perception of a personalized learning experience. |
| Ngo (2023) | ChatGPT for academic purposes | 200 | University | Information Technology, Business Administration, Media Communication, Hospitality and Tourism, Linguistics, Graphic Design | Qualitative | A total of 86% of students reported high satisfaction with ChatGPT in previous academic experiences. |
| Márquez and Martínez (2024) | Comparison between feedback provided by a professor and by ChatGPT | No data | University | Psychology | | ChatGPT only partially identified the quality of the activities, and the grades assigned by the professor and by ChatGPT differed. ChatGPT was able to personalize feedback. |
| Wecks et al. (2024) | Detection of the use of GenAI in writing essays | 193 | University | Financial Accounting | Quantitative. Control and focus groups. | Students who used GenAI performed worse on the final exam. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huesca, G.; Elizondo-García, M.E.; Aguayo-González, R.; Aguayo-Hernández, C.H.; González-Buenrostro, T.; Verdugo-Jasso, Y.A. Evaluating the Potential of Generative Artificial Intelligence to Innovate Feedback Processes. Educ. Sci. 2025, 15, 505. https://doi.org/10.3390/educsci15040505
