Reforming the Teaching and Learning of Foundational Mathematics Courses: An Investigation into the Status Quo of Teaching, Feedback Delivery, and Assessment in a First-Year Calculus Course

Abstract

1. Introduction

2. Review of Relevant Literature

2.1. Conceptualising Feedback

2.2. Conceptualising Assessment

2.3. Research Context and the Research Questions

3. Methods

3.1. Sample of the Study

3.2. Measuring Instrument

3.3. Data Collection and Analysis

4. Results and Discussion

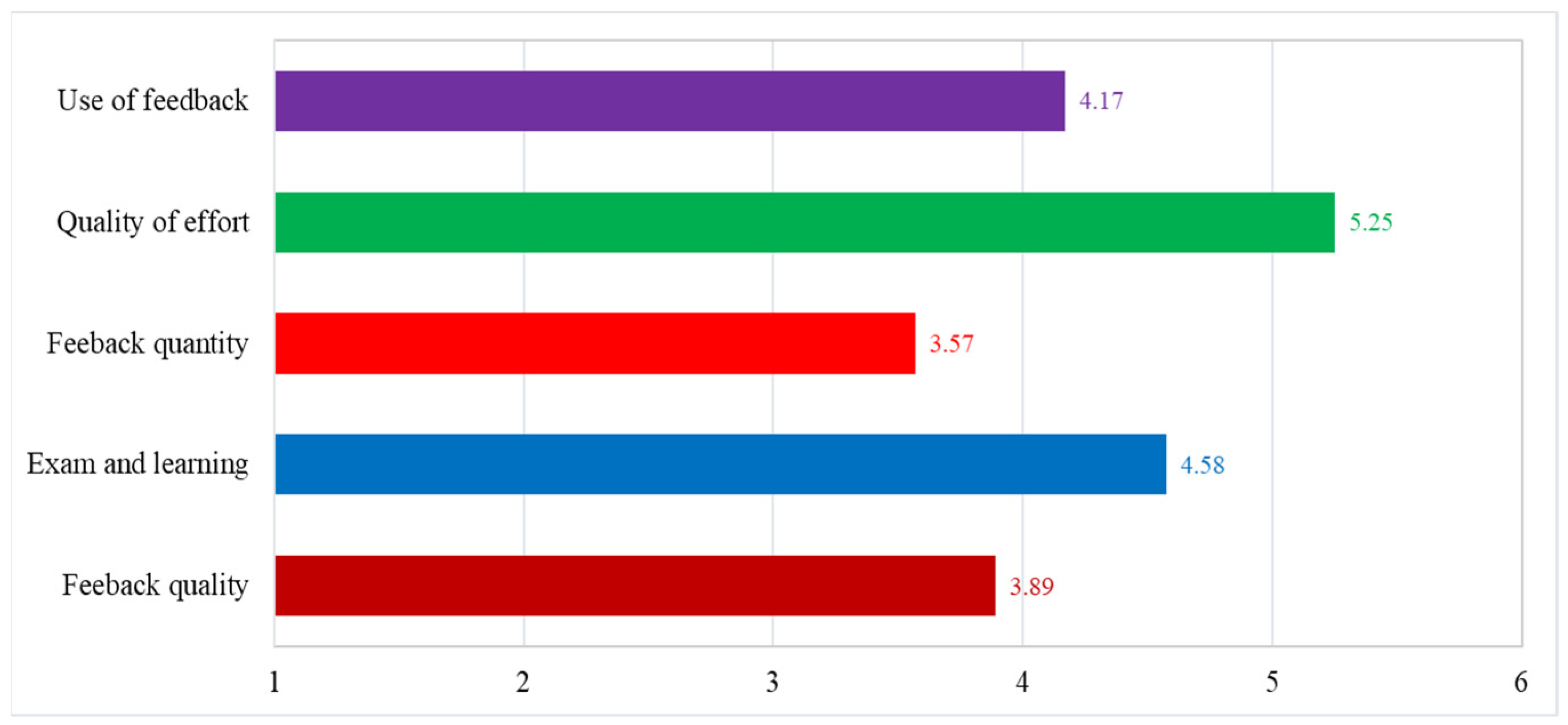

4.1. Quantitative Analysis Results

4.2. Qualitative Analysis Results

- Students’ perceptions of teaching.

- Feedback delivery and the assessment tasks.

4.2.1. Students’ Perceptions of Teaching

The subject moved on very quickly to new topics, which made it difficult to get proper benefits from learning in class.

It was hectic and constant working. There are many topics “fighting” about study time. You end up in a situation where you try to keep up with everything, but some topics have to be sacrificed to perform in others.

The teaching was hectic and the lecturer often ‘rushes’ through large pieces of proof and calculations.

The lectures were used for a lot of unnecessary proof.

There was not so much at lectures. YouTube is better.

4.2.2. Feedback Delivery and the Assessment Tasks

I wish it was possible to get feedback on the exam to learn from it.

With larger and fewer assignments, it was difficult to learn the material as it took longer each time I worked on the subject.

I did not get much feedback from the teacher. Had little compulsory and the obligatory was difficult (did not get much out of them). Better with small assignments.

Have more obligations so you get feedback continuously. Should have graded scores on submissions that count toward the exam.

Could also have had something to do that counts towards the exam during the semester (e.g., a 2-week project or something).

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Your Experience of Assessment and Feedback

| No. | Item | Strongly Disagree | Disagree | Slightly Disagree | Slightly Agree | Agree | Strongly Agree |
|---|---|---|---|---|---|---|---|
| 1 | The requirements of this course make it necessary to work consistently hard. | | | | | | |
| 2 | The feedback I receive makes me understand things better. | | | | | | |
| 3 | I read the feedback I receive from the teacher carefully and try to understand the teacher’s assessments and comments. | | | | | | |
| 4 | On this course, it is necessary to work consistently and regularly. | | | | | | |
| 5 | I have hardly received any feedback on submitted assignments. | | | | | | |
| 6 | I learn new things while preparing for the exams. | | | | | | |
| 7 | As a rule, the feedback on assignments makes me go back over material we have covered earlier. | | | | | | |
| 8 | The feedback gives me a clear sense of what needs to be improved for next time. | | | | | | |
| 9 | The feedback makes me understand better why the teachers are assessing my work as they do. | | | | | | |
| 10 | Both exam preparations and the exam provide me with a greater overview and understanding of the material. | | | | | | |
| 11 | I use the feedback I received to go back over what I had done in my work. | | | | | | |
| 12 | Feedback comes quickly. | | | | | | |
| 13 | I learn new things better as a result of the exams. | | | | | | |
| 14 | Whatever feedback I received on my work came too late to be useful. | | | | | | |
| 15 | The way the assessment system works here, it is necessary to work regularly every week. | | | | | | |

Appendix B. Din Opplevelse av Vurdering og Tilbakemeldinger (Norwegian Version of the Questionnaire in Appendix A)

| | | Veldig Uenig | Uenig | Litt Uenig | Litt Enig | Enig | Veldig Enig |
|---|---|---|---|---|---|---|---|
| 1 | Kravene på studiet gjør det nødvendig å jobbe hardt hele tiden | | | | | | |
| 2 | Tilbakemeldingene til meg gjør at jeg forstår tingene mye bedre | | | | | | |
| 3 | Jeg leser nøye igjennom tilbakemeldingene jeg får og prøver å forstå lærerens vurderinger og kommentarer | | | | | | |
| 4 | For å gjøre det bra på dette studiet må vi jobbe jevnt og regelmessig | | | | | | |
| 5 | Jeg har nesten ikke fått tilbakemeldinger på innleverte oppgaver | | | | | | |
| 6 | Jeg lærer nye ting når jeg forbereder meg til eksamen | | | | | | |
| 7 | Som regel fører tilbakemeldinger på oppgaven(e) at jeg repeterer lærestoff vi har arbeidet med tidligere | | | | | | |
| 8 | Tilbakemeldingene gir meg klar beskjed om hva som bør forbedres neste gang | | | | | | |
| 9 | Tilbakemeldingene gjør at jeg forstår bedre hvorfor lærerne vurderer arbeidet mitt (oppgavene) som de gjør | | | | | | |
| 10 | Både forberedelser til eksamen og selve eksamen gir meg oversikt og bedre forståelse av kunnskapsstoffet | | | | | | |
| 11 | Jeg bruker tilbakemeldingene til å gå igjennom oppgaven på nytt | | | | | | |
| 12 | Tilbakemeldinger (feedback) kommer raskt | | | | | | |
| 13 | Jeg lærer ting bedre som resultat av eksamen | | | | | | |
| 14 | Tilbakemeldingene kommer nesten alltid for sent til å være av noen nytte | | | | | | |
| 15 | Slik vurderingssystemet fungerer her er det nødvendig å jobbe jevnt hver uke | | | | | | |

| Dimension | Short Description | Item Number | Sample Item | α |
|---|---|---|---|---|
| Feedback quality | The feedback fosters students’ understanding and highlights specific areas of improvement in students’ work. | 2, 8, 9 | The feedback I receive makes me understand things better. | 0.75 |
| Exam and learning | The exam is aligned with the course content materials and fosters learning. | 6, 10, 13 | I learn new things while preparing for the exams. | 0.70 |
| Feedback quantity | The feedback is sufficient and timely. | 5 *, 12, 14 * | Feedback comes quickly. | 0.69 |
| Quality of effort | The course and its assessment tasks necessitate consistent effort. | 1, 4, 15 | The requirements of this course make it necessary to work consistently hard. | 0.77 |
| Use of feedback | The feedback is used by the students to improve learning. | 3, 7, 11 | I use the feedback I received to go back over what I had done in my work. | 0.66 |
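As an illustration of how a reliability coefficient such as the α column above could be obtained, the following minimal sketch computes Cronbach's alpha for one three-item dimension. It rests on several assumptions that the table itself does not confirm: that α denotes Cronbach's alpha, that the six response options of Appendix A are coded 1 to 6, and that the asterisks mark negatively worded items (5 and 14) that are reverse-coded before scoring. The response data and the helper names `reverse_code` and `cronbach_alpha` are hypothetical and are not taken from the study.

```python
from statistics import variance

# Assumed coding of the six-point scale: 1 = Strongly Disagree ... 6 = Strongly Agree.
SCALE_MIN, SCALE_MAX = 1, 6


def reverse_code(score):
    """Flip a score on the 1..6 scale so that 1 becomes 6, 2 becomes 5, and so on."""
    return SCALE_MIN + SCALE_MAX - score


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list with one score per respondent."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    # Total score of each respondent across the k items.
    totals = [sum(responses) for responses in zip(*item_scores)]
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical raw responses of five students to items 5, 12 and 14.
item_5 = [5, 2, 4, 1, 3]
item_12 = [2, 5, 3, 6, 4]
item_14 = [6, 2, 4, 2, 3]

# Items 5 and 14 are assumed to be reverse-coded before computing alpha.
feedback_quantity = [
    [reverse_code(s) for s in item_5],
    item_12,
    [reverse_code(s) for s in item_14],
]
alpha = cronbach_alpha(feedback_quantity)
print(f"alpha = {alpha:.2f}")
```

The sample variance (denominator n − 1) is used for both the items and the total scores; using the population variance throughout would give the same coefficient, since the factor cancels in the ratio.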

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zakariya, Y.F.; Midttun, Ø.; Nyberg, S.O.G.; Gjesteland, T. Reforming the Teaching and Learning of Foundational Mathematics Courses: An Investigation into the Status Quo of Teaching, Feedback Delivery, and Assessment in a First-Year Calculus Course. Mathematics 2022, 10, 2164. https://doi.org/10.3390/math10132164
