An Improved Matting-SfM Algorithm for 3D Reconstruction of Self-Rotating Objects

Abstract

1. Introduction

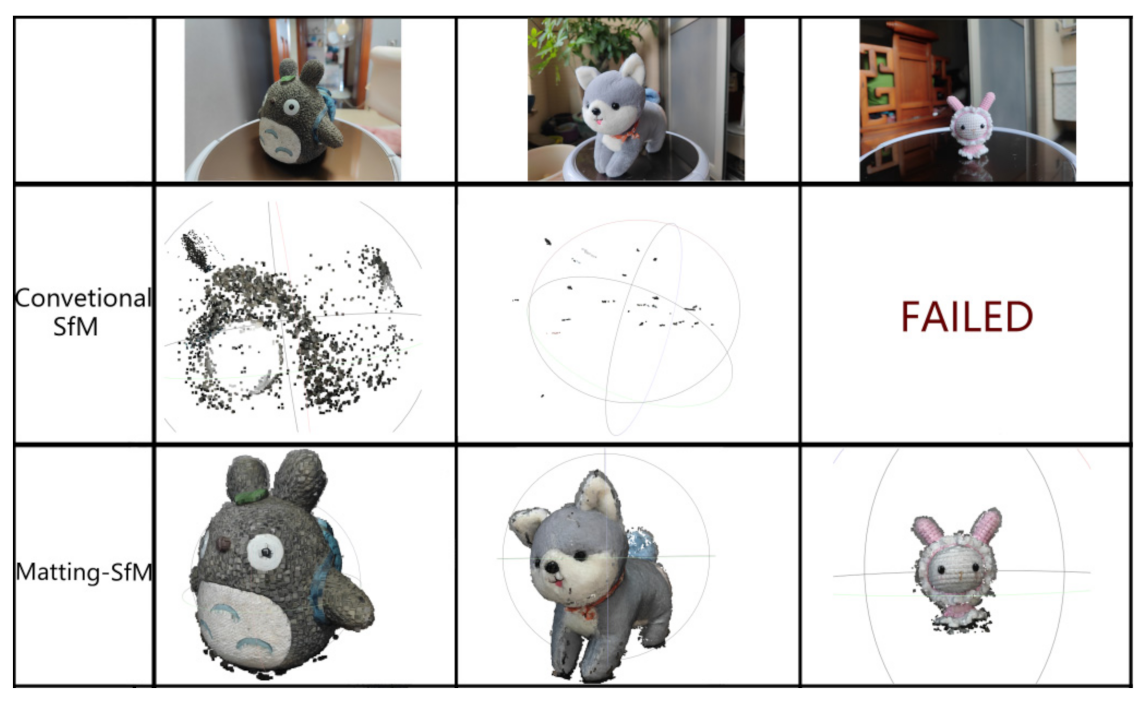

- We identify why conventional SfM cannot reconstruct self-rotating objects.

- We propose a new algorithm, Matting-SfM, and compare the results of Matting-SfM and conventional SfM after MVS reconstruction. The experiments show that Matting-SfM produces more accurate results and reconstructs self-rotating objects that conventional SfM fails to reconstruct.
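The contributions above refer to the reason conventional SfM fails on self-rotating objects. The Python sketch below illustrates one standard geometric argument under our own simplifying assumptions (an ideal pinhole camera with made-up intrinsics, rotation about a vertical axis through the object center, noise-free points). All function names are hypothetical and this is not the authors' code. Rotating the object in front of a fixed camera produces exactly the same image of the object as moving a virtual camera around a static object with extrinsics [R | c - Rc], which is the situation SfM is designed for. The static background contradicts that virtual camera motion, so background matches pull pose estimation toward "no motion". Replacing the background (Section 3.1) leaves only matches consistent with the virtual-camera interpretation.

```python
import math

# Hypothetical helpers: 3-vectors and 3x3 matrices are plain Python lists.

def rot_y(theta):
    """Rotation matrix about the vertical (y) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def mat_vec(M, v):
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def project(X, f=800.0, cx=320.0, cy=240.0):
    """Pinhole camera at the origin looking along +z (assumed intrinsics)."""
    x, y, z = X
    return (f * x / z + cx, f * y / z + cy)

def fixed_camera_view(X, R, center):
    """Object point X rotates by R about the object center; the camera does not move."""
    return project(add(mat_vec(R, sub(X, center)), center))

def moving_camera_view(X, R, center):
    """Static point seen by a virtual camera with extrinsics [R | t], t = center - R @ center."""
    t = sub(center, mat_vec(R, center))
    return project(add(mat_vec(R, X), t))

def max_disagreement(points, R, center):
    """Largest pixel distance between the two interpretations over object points."""
    return max(
        math.dist(fixed_camera_view(X, R, center), moving_camera_view(X, R, center))
        for X in points
    )

def background_residual(B, R, center):
    """Static background point: observed pixel vs. pixel predicted by the moving-camera hypothesis."""
    return math.dist(project(B), moving_camera_view(B, R, center))

if __name__ == "__main__":
    center = [0.0, 0.0, 5.0]           # object center 5 units in front of the camera
    R = rot_y(math.radians(10))        # object turns 10 degrees between frames
    obj = [[0.3, 0.1, 5.2], [-0.2, -0.3, 4.9], [0.1, 0.4, 5.1]]
    print("object points:", max_disagreement(obj, R, center))  # close to 0
    print("background point:", background_residual([2.0, 1.0, 10.0], R, center))  # tens of pixels
```

The object points agree to floating-point precision, while the static background point lands tens of pixels away from where the moving-camera hypothesis predicts it, which is why unmasked background features work against pose recovery in this setting.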

2. Related Work

3. Methods

3.1. Video Segmentation and Background Replacement

3.2. Reconstructing Sparse Point Cloud

3.3. Densifying the Sparse Point Cloud by MVS

4. Experiment Materials and Evaluation Indices

5. Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Method | Hist Similarity (%) | PSNR (dB) | SSIM |
|---|---|---|---|
| Colmap | 99.85 | 10.95 | 0.25 |
| VisualSfM | 99.80 | 10.79 | 0.21 |
| OpenMVG | 99.50 | 9.55 | 0.14 |
| Matting-SfM | 99.90 | 11.51 | 0.26 |
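The table reports histogram similarity, PSNR and SSIM, but this section does not give the formulas or the image pairs being compared. As a hedged sketch, assuming 8-bit grayscale images of equal size stored as flat Python lists, the textbook definitions can be written as below. The histogram measure (normalized histogram intersection) is an assumption, since the paper may use another comparison such as correlation. `global_ssim` evaluates SSIM over the whole image as a single window, a simplification of the usual locally windowed SSIM. All function names are hypothetical.

```python
import math
from collections import Counter

def psnr(a, b, max_val=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)

def hist_similarity(a, b, levels=256):
    """Intersection of normalized gray-level histograms (assumed definition), in [0, 1]."""
    ha, hb = Counter(a), Counter(b)
    return sum(min(ha[v] / len(a), hb[v] / len(b)) for v in range(levels))

def global_ssim(a, b, max_val=255.0):
    """SSIM over the whole image as one window (simplified, not the windowed standard)."""
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / (n - 1)
    var_b = sum((y - mu_b) ** 2 for y in b) / (n - 1)
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / (n - 1)
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2  # usual stabilizing constants
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )

if __name__ == "__main__":
    ref = [(7 * i) % 256 for i in range(64 * 64)]  # synthetic 64x64 image, each gray level 16 times
    noisy = [v ^ 1 for v in ref]                   # flip the lowest bit: every pixel off by exactly 1
    print(psnr(ref, noisy))             # MSE = 1, so 20*log10(255), about 48.13 dB
    print(hist_similarity(ref, noisy))  # histogram unchanged, so about 1.0
    print(global_ssim(ref, noisy))      # slightly below 1
```

In this example every gray level occurs equally often, so flipping the lowest bit of each pixel leaves the histogram unchanged: histogram similarity stays at 1 while PSNR and SSIM register the change. A histogram-based score on its own is insensitive to where pixel values occur, which is worth keeping in mind when reading the narrow spread of the Hist Similarity column.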

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Zhang, Z.; Luo, S.; Cai, Y.; Guo, S. An Improved Matting-SfM Algorithm for 3D Reconstruction of Self-Rotating Objects. Mathematics 2022, 10, 2892. https://doi.org/10.3390/math10162892


Chicago/Turabian Style: Li, Zinuo, Zhen Zhang, Shenghong Luo, Yuxing Cai, and Shuna Guo. 2022. "An Improved Matting-SfM Algorithm for 3D Reconstruction of Self-Rotating Objects" Mathematics 10, no. 16: 2892. https://doi.org/10.3390/math10162892

APA Style: Li, Z., Zhang, Z., Luo, S., Cai, Y., & Guo, S. (2022). An Improved Matting-SfM Algorithm for 3D Reconstruction of Self-Rotating Objects. Mathematics, 10(16), 2892. https://doi.org/10.3390/math10162892