1. Introduction

The mathematics of Lights Out has been studied extensively over the past decades. The Lights Out game board is a rectangular grid of lights, where each light is either on (lit) or off (unlit). By performing a move, a selected light and its adjacent rectilinear neighbors are toggled. At the beginning of the game, some lights have already been switched on. The player is asked to solve the game, i.e., to switch off all the lights, by performing a sequence of moves. Many variants, such as those with different toggle patterns, have been explored, e.g., in [1,2].

Regardless of the variant of Lights Out, most studies focused on the solvability (or the attainability) of given games or the number of solvable (or attainable) games. These problems can usually be solved efficiently by elementary linear algebra and group theory approaches, e.g., in [3,4]. More precisely, these problems are in the complexity class P. A brief introduction to algorithmic complexity can be found in the Supplementary Materials. In a nutshell, problems in P can be computed efficiently and are regarded as computationally easy. Sometimes, the analysis may require the use of other mathematical tools, such as Fibonacci polynomials [5] and cellular automata [6].

However, not every derived problem has a known efficient algorithm. For example, the shortest solution problem asks for a solution with the minimal number of moves, which is related to minimum distance decoding (or maximum likelihood decoding) in coding theory. In a more general setting, this problem has no known efficient algorithm [7,8]. Precisely, this problem is NP-hard and its decision problem is NP-complete. Approximating the solution within a constant factor is also NP-hard [9,10]. Yet, it is possible to solve the shortest solution problem efficiently if certain special properties of the game can be identified.

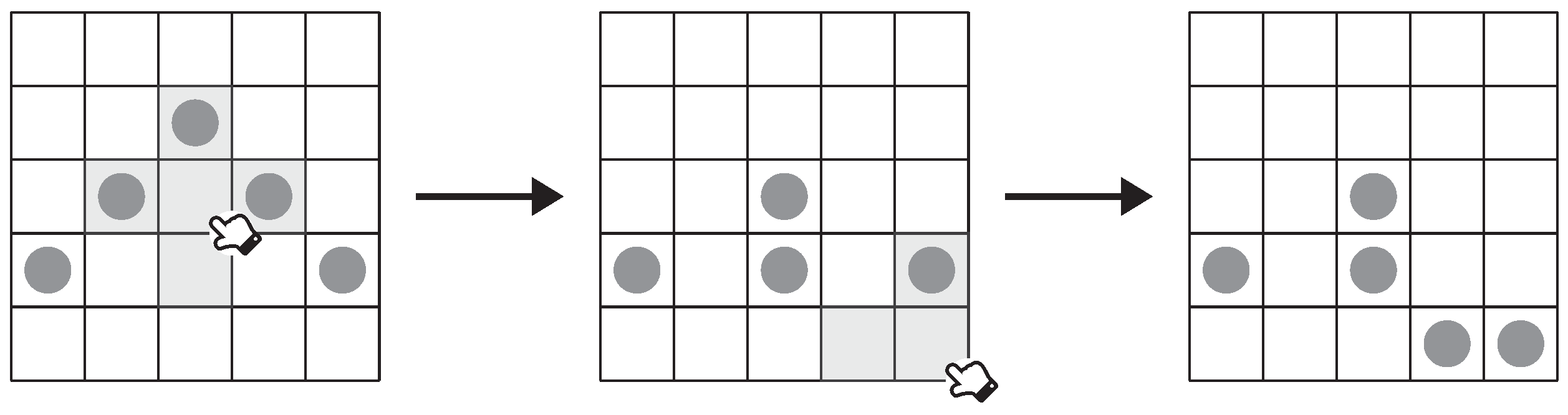

This study is motivated by the Gale–Berlekamp switching game, a Lights Out variant in which the player can toggle either an entire row or an entire column per move. Example moves of a Gale–Berlekamp switching game are illustrated in Figure 1, where the gray cells indicate the toggle pattern. Despite the simple toggle patterns, Roth and Viswanathan [11] proved that finding the minimal number of lights that the player cannot switch off from a given Gale–Berlekamp switching game is NP-complete, although a linear time approximation algorithm was later discovered by Karpinski and Schudy [12].

This problem has a special meaning in coding theory, and has attracted researchers to further investigate this game, e.g., [13,14,15]. In other words, when we view the game from a coding-theoretical perspective, we can ask more questions that have special meanings in coding theory, such as:

Hamming weight. What is the minimal number of lit lights among all solvable games except the solved game?

Coset leader. Which game has the minimal number of lit lights that the player can achieve from a given game? What is this minimal number? How many such games can the player achieve?

Covering radius. Among all possible games, what is the maximal number of lit lights that remain when the number of lit lights is minimized?

Error correction. Which is the “closest” solvable game from a given unsolvable game in the sense of toggling the minimal number of individual lights? Furthermore, is such a closest solvable game unique?

A brief introduction to coding theory can be found in the Supplementary Materials, in which we discuss the physical meaning of the aforementioned coding-theoretical terminology. Again, not all of these problems have known efficient algorithms. For example, the first problem about Hamming weight, which is also known as the minimum distance problem, is NP-hard in general [16].

Previous studies of switching games in recreational mathematics mostly focused on the solvability check, the way to solve a game, and the counting of the number of solvable games. On the other hand, the coding-theoretical view enhances the ways to play the games, but the existing studies mostly focused on the hardness results, e.g., for Gale–Berlekamp switching games and their generalizations. One natural question to ask is as follows: Is there any commonly played switching game whose coding-theoretical problems are not computationally hard? We give an affirmative answer to this question by identifying such a game, which is the focus of this study.

In this study, we consider a natural variant of Lights Out in which each toggle pattern is changed from a “small cross” to a “big cross” covering the entire row and the entire column. This variant is the two-state version of a game called Alien Tiles, where the original Alien Tiles has four states per light [17]. A comparison between the ordinary Lights Out and the two-state Alien Tiles is illustrated in Figure 2 and Figure 3. The two-state Alien Tiles has a neat and simple parity condition, and the game has been discussed in various places on the Internet, such as [18,19]. Similar setups also appear as training questions of programming contests [20]. The solvability and the number of solvable games of arbitrary-state Alien Tiles have been answered by Maier and Nickel [21]. However, the coding-theoretical problems that we listed above have not been investigated, and we answer these questions in this study.

This paper is organized as follows. We first discuss some general techniques for Lights Out and its two-state variants in Section 2. Next, we discuss the basic properties of two-state Alien Tiles in Section 3. We then solve our proposed coding-theoretical problems of the two-state Alien Tiles in Section 4. In Section 5, we apply the two-state Alien Tiles as an error-correcting code and investigate its optimality. Lastly, we discuss a states decomposition technique to link up the original four-state Alien Tiles and the two-state variant in Section 6, and then conclude this study in Section 7.

2. General Techniques for Lights Out and Its Two-State Variants

We now describe some existing techniques in the literature for tackling Lights Out and its two-state variants.

2.1. Model

A general two-state Lights Out game consists of two basic elements: a game board of lights, each of which is either lit or unlit, and a set of toggle patterns.

When we toggle the lights according to a toggle pattern, we say we apply (or perform) a move.

We first model the game board. For convenience, we use 1 to represent a lit light, and 0 to represent an unlit light. We consider an $m \times n$ grid of lights. Each possible grid can be represented by an $m \times n$ matrix over the binary field $\mathbb{F}_2$. A notable property of $\mathbb{F}_2$ is that addition has the same effect as subtraction.

We can represent each toggle pattern by an $m \times n$ grid of lights, where each light in the grid is on if and only if the toggle pattern toggles this light. For example, the gray cells in Figure 2 and Figure 3 are the lit lights in the toggle pattern when the central light is clicked. Similarly, each toggle pattern is represented by an $m \times n$ matrix over $\mathbb{F}_2$. Throughout the process, we can model the game board after performing a move by matrix addition, i.e., the original game board matrix plus the toggle pattern matrix. Figure 4 illustrates an example of the model in matrix form.
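The matrix model above can be sketched in a few lines of Python. This is a minimal illustration rather than code from the study: the function names `toggle_pattern` and `apply_move` are hypothetical, indices are 0-based, and the toggle pattern used is the two-state Alien Tiles "big cross" described in the introduction.

```python
def toggle_pattern(m, n, i, j):
    """Toggle pattern of two-state Alien Tiles for clicking light (i, j), 0-indexed.

    The pattern covers the whole i-th row and the whole j-th column.
    """
    return [[1 if (r == i or c == j) else 0 for c in range(n)] for r in range(m)]


def apply_move(board, pattern):
    """Add the pattern to the board entrywise over F_2 (addition mod 2 is XOR)."""
    return [[b ^ p for b, p in zip(board_row, pattern_row)]
            for board_row, pattern_row in zip(board, pattern)]


board = [[1, 0, 0],
         [0, 1, 0],
         [0, 0, 0]]
center = toggle_pattern(3, 3, 1, 1)
after = apply_move(board, center)
print(after)                      # board after clicking the central light
print(apply_move(after, center))  # applying the same move again restores the board
```

Because addition and subtraction coincide over the binary field, the same function both performs and undoes a move.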

To simplify the notation, we vectorize each matrix into an $mn \times 1$ column vector by stacking the columns of the matrix on top of one another. The collection of all possible (vectorized) grids forms a vector space $G = \mathbb{F}_2^{mn}$, which is called the game space. When it is clear from the context, we do not vectorize the matrix form of the game for readability. In a similar manner, we can transform an arbitrary-shape game board into a vector; thus, a similar vector space model also works for non-rectangular game boards.

Definition 1 (Solvability). A game $g \in G$ is solvable if and only if there exists a sequence of moves to switch g into a zero vector. The zero vector is also called a solved game.

The concepts of solvability and attainability are similar. We mention both terms here because some literature, such as [21], considers attainability in lieu of solvability. A game $g \in G$ is attainable if and only if there exists a sequence of moves that can switch a zero vector into g.

We define the solvable game space as the vector space S over $\mathbb{F}_2$ spanned by the set of all vectorized toggle patterns. Note that $S \subseteq G$. A game g is solvable if and only if $g \in S$. This is due to the nature of the game that:

Applying a move twice will not toggle any lights, i.e., applying the same move again will undo the move;

Every permutation of a sequence of moves toggles the same set of lights, i.e., the order of the moves is not taken into consideration.

It is easy to observe that solvability is equivalent to attainability in Lights Out and its two-state variants: the sequence of moves that solves a game can also attain the game.

For most Lights Out variants, including the two-state Alien Tiles, we can model the moves in this manner. There are $mn$ toggle patterns in total, so there are $mn$ possible moves. We can bijectively associate each move with a light in the grid. For the two-state Alien Tiles, the $(i,j)$-th light in the grid is associated with the move that toggles all the lights in the i-th row and the j-th column. That is, this move is represented by a binary matrix where only the $(i,j)$-th entry is 1. When we perform such a move, we also say we click on the $(i,j)$-th light.

The move space, denoted by K, is the vector space over $\mathbb{F}_2$ spanned by the set of vectorized moves, where each vector in K corresponds to a sequence of (vectorized) moves, which is unique up to permutation. We remark that when there are $mn$ toggle patterns in total, $K = G$.

For a more general setting, the number of toggle patterns may not be $mn$, as in, for example, the Gale–Berlekamp switching game. Although the physical meaning of “clicking” a light may not be valid, the concept of move space still works in this mathematical model. In such a scenario, K may not be equal to G. Yet, the remaining discussions are still valid after changing certain lengths and dimensions of the vectors and the matrices.

We can relate K and G in the following way. Define a linear map $\psi : K \to G$ that outputs a game by performing the moves stated in the input on a solved game. The image of $\psi$ is S. Being a linear map, $\psi$ is also a homomorphism. Figure 5 illustrates two examples of mapping a $k \in K$ to a $\psi(k) \in G$. We can also write $\psi$ in its matrix form $\Psi$, where $\Psi$ is an $mn \times mn$ matrix formed by juxtaposing all vectorized toggle patterns. That is, we have $\psi(k) = \Psi k$.
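As a concrete illustration of the matrix form, the sketch below builds the matrix of vectorized toggle patterns for the two-state Alien Tiles and applies it to a move vector. The names `build_psi` and `psi` are hypothetical, indices are 0-based, and the column-stacking order follows the vectorization described above.

```python
def build_psi(m, n):
    """Matrix whose columns are the vectorized toggle patterns of two-state Alien Tiles.

    Cells are listed in column-stacking order: (0,0), (1,0), ..., (m-1,0), (0,1), ...
    The entry for (target cell, clicked cell) is 1 when both share a row or a column.
    """
    cells = [(i, j) for j in range(n) for i in range(m)]
    return [[1 if (r == i or c == j) else 0 for (i, j) in cells] for (r, c) in cells]


def psi(Psi, k):
    """Matrix-vector product over F_2: the game obtained by performing moves k on a solved game."""
    return [sum(a & b for a, b in zip(row, k)) % 2 for row in Psi]


Psi = build_psi(2, 2)
k = [1, 0, 0, 0]        # click only the light at row 0, column 0
print(psi(Psi, k))      # lights in row 0 and column 0 are lit
```

Linearity can be checked directly: performing two move vectors one after another gives the same game as performing their sum over the binary field.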

2.2. Linear Algebra Approach

Suppose a $k \in K$ such that $\psi(k) = s$ for some $s \in S$ is given. We can solve s by clicking the lights lit in k, because $s + \psi(k) = s + s$ is a zero vector due to the fact that subtraction is the same as addition in $\mathbb{F}_2$. In other words, solving a game is equivalent to finding a $k \in K$ such that $\psi(k) = s$. When we represent $\psi$ in its matrix form $\Psi$, we have a system of linear equations $\Psi k = s$. Since $S$ is the image of $\psi$, the dimension of the solvable game space S is the same as the dimension of the vector space spanned by the columns in $\Psi$. In other words, the number of solvable games equals $2^{\operatorname{rank}(\Psi)}$.

If $\Psi$ is a full rank matrix, then every game in the game space is solvable, i.e., $S = G$. Kreh [1] coined the following terminology to describe this type of game.

Definition 2 (Completely Solvable). The game is completely solvable if and only if $S = G$, i.e., every $g \in G$ is a solvable game.

We can find a sequence of moves to solve a game after Gaussian elimination is applied to solve $\Psi k = s$. If the number of columns in $\Psi$ is more than $mn$, then some toggle patterns must be linearly dependent on some other toggle patterns. We only need to take at most $mn$ (independent) columns for finding a $k$ that solves the game; hence, we can regard $\Psi$ in its worst case as an $mn \times mn$ binary matrix. The problem formulation is as follows:

Problem: Solvability Check and/or Finding a Solution for General Two-State Lights Out Variants;

Instance: An binary vector , and an binary matrix , with ;

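Question: Is there a k that satisfies $\Psi k = s$?

Objective: Find a k that satisfies $\Psi k = s$.

We consider the worst case here, in which $\Psi$ is an $mn \times mn$ matrix. The complexity of Gaussian elimination is therefore $O(m^3 n^3)$, which is polynomial in the size of the input; hence, the problem is in P.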
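The following sketch shows one way to carry out the Gaussian elimination over the binary field for the two-state Alien Tiles. It is an illustrative implementation under stated assumptions, not the procedure of any cited work: `build_psi`, `mat_vec`, and `solve_gf2` are hypothetical names, free variables are simply set to 0, and cells are indexed in column-stacking order.

```python
def build_psi(m, n):
    """Psi for two-state Alien Tiles; cells are indexed in column-stacking order."""
    cells = [(i, j) for j in range(n) for i in range(m)]
    return [[1 if (r == i or c == j) else 0 for (i, j) in cells] for (r, c) in cells]


def mat_vec(A, k):
    """Matrix-vector product over F_2."""
    return [sum(a & b for a, b in zip(row, k)) % 2 for row in A]


def solve_gf2(A, s):
    """Return one k with A k = s over F_2, or None if the system is inconsistent."""
    rows, cols = len(A), len(A[0])
    M = [row[:] + [b] for row, b in zip(A, s)]  # augmented matrix [A | s]
    pivots = []
    r = 0
    for c in range(cols):
        if r == rows:
            break
        p = next((i for i in range(r, rows) if M[i][c]), None)
        if p is None:
            continue  # no pivot in this column: it is a free variable
        M[r], M[p] = M[p], M[r]
        for i in range(rows):
            if i != r and M[i][c]:
                M[i] = [x ^ y for x, y in zip(M[i], M[r])]  # row addition is XOR
        pivots.append(c)
        r += 1
    if any(M[i][cols] for i in range(r, rows)):
        return None  # some row reads 0 = 1, so s is not in the column space
    k = [0] * cols
    for i, c in enumerate(pivots):
        k[c] = M[i][cols]  # free variables stay 0
    return k


Psi = build_psi(3, 3)
s = [1, 0, 0, 0, 1, 0, 0, 0, 1]  # the identity-matrix game, vectorized
k = solve_gf2(Psi, s)
print(k is not None and mat_vec(Psi, k) == s)
```

The same routine answers both the question (a return value of `None` means unsolvable) and the objective (any returned `k` is a valid move sequence).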

We can use Gaussian elimination to check the solvability of the game g by observing whether a solution of $\Psi k = g$ exists. Other than applying Gaussian elimination by brute force, there is another approach as stated in [3], which is summarized as follows: The orthogonal complement of S, denoted by $S^{\perp}$, is the null space of $\Psi^{T}$. An unsolvable game, i.e., a game that is not in S, cannot be orthogonal to $S^{\perp}$. Therefore, we can determine the solvability of the game by calculating $E^{T} g$ (where each column of E corresponds to a vector in a basis of $S^{\perp}$). The game g is solvable if and only if $E^{T} g = 0$. In fact, this technique is also applied in coding theory for detecting errors of a linear code.

2.3. Minimal Number of Moves (The Shortest Solution)

Consider $\psi(k) = s$, i.e., the game s can be solved by the move sequence k. Based on the property of the kernel (null space) of $\psi$, denoted by $\ker\psi$, that $\psi(u) = 0$ for all $u \in \ker\psi$, we have $\psi(k + u) = s$ for all $u \in \ker\psi$ due to homomorphism.

If we want to solve s with the minimal number of moves, we need to find the candidate u that minimizes the 1-norm $\|k + u\|_1$. The move sequence $k + u$ is then called the shortest solution of the solvable game s. If the dimension of $\ker\psi$ grows with m or n, then the size of $\ker\psi$ is exponentially large in terms of m or n; thus, an exhaustive search among all possible $u \in \ker\psi$ is inefficient.

We now formulate the shortest solution problem for general two-state Lights Out variants (including the ordinary Lights Out). As a general problem, we need to support an arbitrary $\psi$. The dimension of the kernel is no larger than $mn$; thus, the input for describing the kernel consists of at most $mn$ binary vectors in $\mathbb{F}_2^{mn}$ that can span the kernel. The problem is stated as follows:

Problem: Shortest Solution Problem for General Two-State Lights Out Variants;

Instance: A set B of binary vectors in $\mathbb{F}_2^{mn}$, where $|B| \leq mn$ and the span of B is $\ker\psi$, and an $mn \times 1$ binary vector $k$;

Objective: Find a vector $u \in \ker\psi$ that can minimize $\|k + u\|_1$.

Theorem 1. The shortest solution problem for general two-state Lights Out variants is NP-hard.

Proof. We prove this theorem by a reduction from the minimum distance decoding of linear codes in coding theory. Recall that the (function) problem of minimum distance decoding of a linear code is equivalent to the following: Given a parity check matrix H of the linear code and a (column vector) word k, the goal is to find the error (column) vector e with the smallest Hamming weight such that $H^{T}(k + e) = 0$. This is due to the property of the parity check matrix that $H^{T} c = 0$ if and only if c is a codeword.

The reduction is as follows. Consider the span of the columns in H as the orthogonal complement of $\ker\psi$, that is, every $u \in \ker\psi$ is a codeword. Furthermore, suppose k is the move sequence that solves the game $s = \psi(k)$. Write $e = k + u$, with $u \in \ker\psi$. Since $\psi(e) = \psi(k) + \psi(u) = s$, we know that e is a move sequence that can solve s. That is, by finding the shortest solution e (which has the smallest Hamming weight), we have $k + e = u \in \ker\psi$; thus, $H^{T}(k + e) = 0$. This solves the minimum distance decoding problem, so that problem is no harder than the shortest solution problem for general two-state Lights Out variants. As minimum distance decoding is NP-hard [7,8], the shortest solution problem for general two-state Lights Out variants is also NP-hard. □

Nevertheless, it is still possible to have an efficient algorithm for finding the shortest solution of a specific Lights Out variant. For example, if the game space is completely solvable, then we must have $\ker\psi = \{0\}$. In such a case, the move sequence obtained by the Gaussian elimination process is unique. Therefore, this problem is in P (in cubic time in terms of $mn$).

As a remark, we can also reduce this shortest solution problem to the minimum distance decoding problem in a similar manner, by treating the juxtaposition of the (column) vectors in a basis of the orthogonal complement of $\ker\psi$ as the parity check matrix H. This shows that the two problems are actually equivalent to each other.

3. Two-State Alien Tiles

We now discuss some specific techniques for the two-state Alien Tiles problem. Note that we can regard the game space G and the solvable game space S as abelian groups under vector addition. Then, we have $S \trianglelefteq G$, i.e., S is a normal subgroup of G.

Definition 3 (Syndrome). Two games have the same syndrome if and only if they are in the same coset in the quotient group $G/S$.

One crucial component that will be applied in solving our coding-theoretical problems is the function that outputs the syndrome of a game. The quotient group $G/S$ is isomorphic to the set of all syndromes.

We adopt the term “syndrome” in coding theory here because each coset represents an “error” from the solvable game space that cannot be recovered by any move sequences. In other words, an unsolvable game becomes solvable after we remove the “error” by toggling some individual lights.

3.1. Easy Games and Doubly Easy Games

For two-state Alien Tiles, we can click on any light on the game board; thus, $K = G$, i.e., the move space K equals the game space G. In other words, we can regard a game as a move in K and vice versa.

Definition 4 (Easy Games). A game s is called easy if and only if it can be solved by clicking the lights lit in s, i.e., $\psi(s) = s$.

Figure 6 illustrates an example of an easy two-state Alien Tiles game. The terminology “easy” is due to Torrence [22]. In other words, a non-zero game s is easy if $s$ is an eigenvector of $\Psi$ with eigenvalue 1. We also extend the terminology as follows.

Definition 5 (Doubly Easy Games). A game s is doubly easy if and only if it can be solved by first clicking the lights lit in s to obtain a game $s + \psi(s)$, then clicking the lights lit in the game $s + \psi(s)$.

That is, a game s is doubly easy if and only if:

$$s + \psi(s) + \psi(s + \psi(s)) = 0.$$

Due to homomorphism and the property of the binary field that $s = -s$ (i.e., s equals its additive inverse), we have:

$$\psi^2(s) = s.$$

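To write down $\Psi$ explicitly, we consider the following. Denote by $\mathbf{1}_{m \times n}$ and $\mathbf{1}_{c}$ an $m \times n$ and a $c \times c$ all-ones matrix, respectively. Similarly, denote by $\mathbf{0}_{m \times n}$ and $\mathbf{0}_{c}$ an $m \times n$ and a $c \times c$ zero matrix, respectively. Let $I_c$ be a $c \times c$ identity matrix. By juxtaposing all (vectorized) toggle patterns, we obtain an $mn \times mn$ matrix:

$$\Psi = \mathbf{1}_n \otimes I_m + I_n \otimes \mathbf{1}_m + I_{mn}.$$

To analyze doubly easy games, we need to apply $\psi$ multiple times on a given $s$. For any positive integer c and matrix A over $\mathbb{F}_2$, we write $cA$ to denote $\sum_{i=1}^{c} A$ for simplicity. In addition, note that:

$$\mathbf{1}_c^2 = c\,\mathbf{1}_c.$$

In other words, $\mathbf{1}_c^2 = \mathbf{1}_c$ when c is odd, and $\mathbf{1}_c^2 = \mathbf{0}_c$ when c is even. By making use of the mixed-product property of the Kronecker product:

$$(A \otimes B)(C \otimes D) = (AC) \otimes (BD),$$

where A, B, C, and D are matrices of suitable sizes that can form matrix products $AC$ and $BD$, we can now evaluate the powers of $\Psi$ (over $\mathbb{F}_2$):

$$\Psi^2 = n(\mathbf{1}_n \otimes I_m) + m(I_n \otimes \mathbf{1}_m) + I_{mn}, \qquad \Psi^3 = \Psi + (m + n)\mathbf{1}_{mn}, \qquad \Psi^4 = \Psi^2.$$

That is, for any positive integer $c \geq 2$, we have $\Psi^{c+2} = \Psi^{c}$.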
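The behaviour of the powers of the toggle matrix can be checked numerically on small boards. The sketch below is only a sanity check under assumptions: `build_psi`, `mat_mul`, `mat_add`, `identity`, and `all_ones` are hypothetical helper names, and the three board sizes are arbitrary small examples of the even-by-even, odd-by-odd, and even-by-odd cases.

```python
def build_psi(m, n):
    """Toggle matrix of two-state Alien Tiles; cells in column-stacking order."""
    cells = [(i, j) for j in range(n) for i in range(m)]
    return [[1 if (r == i or c == j) else 0 for (i, j) in cells] for (r, c) in cells]


def mat_mul(A, B):
    """Product of two square matrices over F_2."""
    size = len(A)
    return [[sum(A[i][t] & B[t][j] for t in range(size)) % 2 for j in range(size)]
            for i in range(size)]


def mat_add(A, B):
    """Sum of two matrices over F_2 (entrywise XOR)."""
    return [[a ^ b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def identity(size):
    return [[1 if i == j else 0 for j in range(size)] for i in range(size)]


def all_ones(size):
    return [[1] * size for _ in range(size)]


P_ee = build_psi(2, 2)                      # even-by-even
P_oo = build_psi(3, 3)                      # odd-by-odd
P_eo = build_psi(2, 3)                      # even-by-odd
cube_eo = mat_mul(mat_mul(P_eo, P_eo), P_eo)

print(mat_mul(P_ee, P_ee) == identity(4))           # squaring gives the identity
print(mat_mul(P_oo, P_oo) == P_oo)                  # squaring gives the matrix itself
print(cube_eo == mat_add(P_eo, all_ones(6)))        # cube equals matrix plus all-ones
```

These three checks correspond to the three parity cases treated in the following subsections.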

3.2. Even-by-Even Games

When both m and n are even, we have $\Psi^2 = I_{mn}$. In other words, $\Psi^{-1} = \Psi$ and $\psi$ is an isomorphism; thus, $S = G$. This means that all games are solvable, i.e., every even-by-even two-state Alien Tiles is completely solvable, and the number of solvable games is $2^{mn}$.

Consider an arbitrary solvable game $s \in S$. There exists a unique $k \in K$ such that $\psi(k) = s$. Then, we have $\psi(s) = \psi^2(k) = k$, thus $\psi^2(s) = \psi(k) = s$. In other words, all solvable games are doubly easy. The size of $\ker\psi$ is 1 as it is a zero vector space. A similar result in the case of square grids was also discussed in [23]. An interesting property of even-by-even games is the duality that $k$ solves the game $s$ if and only if $s$ solves the game $k$.

We can also view the solvability in another manner. If we know how to toggle an arbitrary single light, we can repeatedly apply this strategy to switch off the lights one by one. This also means that all games are solvable. We can toggle a single light by clicking on all the lights in the same row and the same column (where the light at the intersection of the row and the column is clicked once only). This can be observed from $k = \psi(s)$, when s only consists of a single 1.

To compute $k = \psi(s)$, the worst case (i.e., the case that takes the maximal number of computational steps) occurs when s is an all-ones game (a game that has all lights lit, which is an all-ones column vector of length $mn$). Every 1 in s toggles $m + n - 1$ lights. Therefore, the complexity to compute k is $O(mn(m+n))$, which is lower than the $O(m^3 n^3)$ complexity of solving the linear system directly by Gaussian elimination. On the other hand, due to the uniqueness of k, the minimal number of moves to solve s is $\|k\|_1$. To compute $\|k\|_1$, we scan the status of the lights after we obtain k; thus, the complexity is $O(mn)$.
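A small sketch of the even-by-even procedure follows. The function name `psi` is hypothetical, and the implementation computes the result through row and column click counts, which is a shortcut assumed for this example rather than the light-by-light toggling counted in the text. For an even-by-even game s, the unique solution is obtained by applying the map once to s.

```python
def psi(game):
    """Click every lit light of `game` on a solved board and return the resulting board.

    Light (i, j) is toggled once per click in row i plus once per click in column j,
    minus one if (i, j) itself is clicked (counted twice); mod 2 this is a three-way XOR.
    """
    m, n = len(game), len(game[0])
    row_clicks = [sum(row) % 2 for row in game]
    col_clicks = [sum(col) % 2 for col in zip(*game)]
    return [[row_clicks[i] ^ col_clicks[j] ^ game[i][j] for j in range(n)]
            for i in range(m)]


s = [[1, 0],
     [0, 0]]            # a 2-by-2 game with a single lit light
k = psi(s)              # the unique move sequence that solves s
print(k)                # every light in row 0 and column 0 is clicked
print(psi(k) == s)      # clicking k on a solved board attains s, so k also solves s
print(sum(map(sum, k))) # minimal number of moves, the 1-norm of k
```

The last line reflects the uniqueness argument: since the kernel is trivial in the even-by-even case, the number of lit entries of k is the minimal number of moves.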

3.3. Even-by-Odd (and Odd-by-Even) Games

Due to symmetry, we only need to discuss even-by-odd games. We first discuss how to solve these games.

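Note that $\Psi^3 = \Psi + \mathbf{1}_{mn}$, where $\mathbf{1}_{mn}$ is the $mn \times mn$ all-ones matrix. For each solvable game $s \in S$, pick an arbitrary $k \in K$ such that $\psi(k) = s$. Since $\psi^3(k) = \psi(k) + \mathbf{1}_{mn} k$, by applying the $\psi$ map twice, we have:

$$\psi^2(s) = \psi^3(k) = s + \mathbf{1}_{mn} k.$$

By clicking the lit lights in s, we obtain the game $s + \psi(s)$. Afterwards, we click the lit lights in $s + \psi(s)$ to obtain the game:

$$s + \psi(s) + \psi(s + \psi(s)) = s + \psi^2(s) = \mathbf{1}_{mn} k,$$

which is either a solved game or an all-ones game.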

To see how the all-ones game is solved, we consider the following: An arbitrary row of odd length n is selected, and every light in this row is clicked. In this way, every light in this row is toggled n times, and every light not belonging to this row is toggled once. Note that $n \equiv 1 \pmod{2}$; thus, every light in the game is toggled an odd number of times, which has the same effect as being toggled once. In other words, this move sequence can solve the all-ones game.

The (worst-case) complexity to compute $\psi(s)$ is $O(mn(m+n))$. Given $\psi(s)$, we have the same complexity for computing $\psi(s + \psi(s))$. The complexity to click every light in an arbitrary row is $O(n(m+n))$. Therefore, the overall complexity to solve a solvable game is $O(mn(m+n))$. We have the same complexity for finding the move sequence because the complexity for checking whether the resulting game is the all-ones game is $O(mn)$, which is absorbed into $O(mn(m+n))$.
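The three-stage procedure for even-by-odd games (click the lit lights, click the lit lights of the result, then clear a possible all-ones board by clicking one row) can be sketched as below. The names `psi`, `add`, and `solve_even_by_odd` are hypothetical, the input is assumed to be a solvable game, and row 0 is an arbitrary choice for the final stage.

```python
def psi(game):
    """Board obtained by clicking every lit light of `game` on a solved board."""
    m, n = len(game), len(game[0])
    row_clicks = [sum(row) % 2 for row in game]
    col_clicks = [sum(col) % 2 for col in zip(*game)]
    return [[row_clicks[i] ^ col_clicks[j] ^ game[i][j] for j in range(n)]
            for i in range(m)]


def add(a, b):
    """Entrywise sum over F_2."""
    return [[x ^ y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def solve_even_by_odd(s):
    """Return a move sequence (as a 0/1 board of clicks) for a solvable even-by-odd game s."""
    m, n = len(s), len(s[0])
    g1 = add(s, psi(s))        # board after clicking the lit lights of s
    g2 = add(g1, psi(g1))      # board after clicking the lit lights of g1
    moves = add(s, g1)         # clicks made so far, combined over F_2
    if any(any(row) for row in g2):
        # For a solvable s the remaining board is all-ones: click every light in row 0.
        row0 = [[1] * n if i == 0 else [0] * n for i in range(m)]
        moves = add(moves, row0)
    return moves


s = [[1, 1, 1],
     [1, 0, 0]]                # a solvable 2-by-3 game (one click at row 0, column 0)
moves = solve_even_by_odd(s)
print(moves)
print(add(s, psi(moves)))      # all zeros: the game is solved
```

The returned sequence is a valid solution but, as discussed next, it need not be the only one or the shortest one.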

Next, we discuss the move sequence to toggle all the lights in an arbitrary column, which will be used in the remaining discussion. Suppose we want to toggle the j-th column. Consider this move sequence: Except the $(1, j)$-th light, click every light in the j-th column and every light in the 1-st row. Based on such operations, we have the following:

The $(1, j)$-th light is toggled $m + n - 2$ times, where $m + n - 2$ is odd.

Each of the other lights in the j-th column is toggled $m - 1$ times, where $m - 1$ is odd.

Each of the other lights in the 1-st row is toggled $n - 1$ times, where $n - 1$ is even.

All other lights that are not in the j-th column and not in the 1-st row are toggled twice.

As a result, only the lights in the j-th column are toggled an odd number of times, so exactly the lights in the j-th column are toggled.

We notice that if we apply the above move sequence to every column, the resultant move sequence is that, except the first row, all the other lights are clicked once. Although this can solve the all-ones game, this move sequence is longer than what we have previously described. This suggests that the move sequence for solving a solvable game is not unique.

We now discuss the method to check whether a game is solvable or not. Denote by $g_{i,j}$ the $(i,j)$-th light in the game g. Let $r_i$ be the row parity of the i-th row in g, i.e.,

$$r_i = \sum_{j=1}^{n} g_{i,j} \bmod 2.$$

Let $r = (r_1, r_2, \ldots, r_m)^{T}$ be a column vector over $\mathbb{F}_2$, called the row parity vector. We can consider this row parity vector as the exclusive or (XOR) of all the columns, or the nim-sum of the columns.

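Suppose we click an arbitrary light, say, the light in the y-th row and the x-th column. Then, the values of $g_{i,j}$ at all $i = y$ or $j = x$ are toggled. We can see that the new $r_y$ satisfies $r_y + n \equiv r_y + 1 \pmod{2}$, because n is odd. For every other row $i \neq y$, exactly one light is toggled, so the new $r_i$ becomes $r_i + 1$. That is, all parity bits in r are flipped. This is as illustrated in Figure 7.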

To map both row parity vectors into one invariant such that any move sequence acting on a game does not change the invariant, we define the invariant function

by:

As every solvable game gives , we have , i.e., the number of lit lights in a solvable game is even. In addition, implies that the game g is not solvable. This suggests that not all games are solvable, say, a game where only the -st light is lit has an invariant .

On the other hand, any move sequence acting on a game in a coset gives a game in the same coset, because S is the span of all toggle patterns. Then, the following question arises: What is the relation between the invariant function and the cosets? To be more specific: Does the invariant function give a syndrome of the game? The answer is affirmative.

Theorem 2. Let g be an even-by-odd game. The invariant function is a surjective map that maps g to its syndrome.

The above theorem has the following consequences:

A game g is solvable if and only if its invariant equals that of the solved game, because the latter is the syndrome of the solved game.

The number of cosets in $G/S$, i.e., the index $[G : S]$, is $2^{m-1}$, due to the surjection of the invariant function.

The number of solvable games, i.e., $|S|$, is $2^{mn-m+1}$.

A game is solvable if and only if the parities of all rows are the same. The complexity of verifying the solvability is thus $O(mn)$.
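The row-parity criterion in the last consequence translates directly into a linear-time check. The function name `is_solvable_even_by_odd` is hypothetical, and the sketch assumes the board is given as a list of rows with an even number of rows and an odd number of columns.

```python
def is_solvable_even_by_odd(g):
    """Solvability test for an even-by-odd game: all row parities must agree."""
    parities = {sum(row) % 2 for row in g}
    return len(parities) == 1


print(is_solvable_even_by_odd([[1, 1, 1],
                               [1, 0, 0]]))   # both rows have odd parity
print(is_solvable_even_by_odd([[1, 0, 0],
                               [0, 0, 0]]))   # row parities differ
```

Each light is read once, which matches the $O(mn)$ bound stated above.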

An efficient method to find the minimal number of moves for solving an even-by-odd game remains an open problem. To justify that exhaustive search for this problem is inefficient, we calculate the size of $\ker\psi$. By the rank-nullity theorem, we know that $\dim(\ker\psi) = mn - \dim(S) = m - 1$. Therefore, $|\ker\psi| = 2^{m-1}$, which means that exhaustive search cannot be performed in polynomial time.

3.4. Odd-by-Odd Games

When both m and n are odd, we have $\Psi^2 = \Psi$. In other words, we have $\psi^2 = \psi$. For each solvable game $s \in S$, pick an arbitrary $k \in K$ such that $\psi(k) = s$. By applying $\psi$ again, we obtain $\psi(s) = \psi^2(k)$. Using the fact that $\psi^2 = \psi$, we have $\psi(s) = \psi(k) = s$. That is, all solvable games are easy. Similar to the above subsections, the worst-case complexity to solve the game is $O(mn(m+n))$. To find a move sequence k, we only need to output s directly; thus, the complexity is $O(mn)$.

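To check whether a game g is solvable or not, it is insufficient to only consider the row parity vector $r$. Let $c_j$ be the column parity of the j-th column in g, i.e.,

$$c_j = \sum_{i=1}^{m} g_{i,j} \bmod 2,$$

and let $c = (c_1, c_2, \ldots, c_n)$ be a row vector over $\mathbb{F}_2$, called the column parity vector. When we click an arbitrary light, say, the light in the y-th row and the x-th column, we can see that the new $r_y$ becomes $r_y + n \equiv r_y + 1 \pmod{2}$, and the new $c_x$ is $c_x + m \equiv c_x + 1 \pmod{2}$. For every other row $i \neq y$ and every other column $j \neq x$, exactly one light is toggled, so the new $r_i$ is $r_i + 1$ and the new $c_j$ is $c_j + 1$. That is, all the parity bits of $r$ and $c$ are flipped. This is as illustrated in Figure 8.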

We call the pair $(r, c)$ the parity pair of the game g. We remark that not all combinations of parity pairs are valid. The exact criteria are as stated in Theorem 3.

Theorem 3. For odd-by-odd games, $(r, c)$ is the parity pair of some game if and only if $\|r\|_1 + \|c\|_1$ is even.

Proof. We first prove the “only if” part. The result is trivial for the solved game . Consider an arbitrary non-zero game . When we toggle an arbitrary lit light in g, say, the -th light, to form a new game , the row parity of the y-th row and the column parity of the x-th column are both flipped. That is, equals . Inductively, the procedure is repeated until all lit lights are off. Then, is equivalent to , where the former one equals 0. In other words, implies that is even.

For the “if” part, we prove by construction. Let be an index set such that if and only if y is in this set. Similarly, let be an index set such that if and only if x is in this set.

First, we construct a game such that only the -th lights are lit, where . If , then the proof is done. If , let . Then, we partition T into sets of size 2, as denoted by . For every , let , and we switch on the -th and the -th lights for an arbitrary . Afterwards, the row parity vector of the game matches with . For each partition, we have switched on 2 lights in the same column, so the column parity of this column remains unchanged. That is, the parity pair of the resultant game is . We can prove the case in a similar manner by symmetry arguments, and the game exists in the prescribed game space. □

The construction in the above proof will also be applicable when we discuss the coding-theoretical problems. We illustrate an example of such a construction in Figure 9. In this example, we start from a solved game, i.e., all lights are off. In the first phase, we match the bits in the row parity vector with the bits in the column parity vector. This example has fewer bits in the column parity vector, thus all the bits in the column parity vector are matched with some bits in the row parity vector. The choice of which bits in the row parity vector are matched is arbitrary. In Figure 9, the red bits are matched, followed by the blue bits. Each pair of matched bits indicates a location, which is marked by a crosshair of the same color in the game grid. After switching on the lights marked by the crosshairs, we proceed to the second phase.

The bits that are not matched must all fall into either the row or the column parity vector. In this example, there are four bits remaining in the row parity vector. We group the bits two by two arbitrarily. In Figure 9, the two cyan bits and the two brown bits are respectively grouped. For each group, we select an arbitrary column (or an arbitrary row, if the unmatched bits fall into the column parity vector). The crosshairs of the same color mark the column selected by the same group. By switching on the lights marked by the crosshairs, the desired game is constructed.

We now discuss the invariant function. Similar to the

in even-by-odd games, we define

by:

The invariant function for odd-by-odd games, denoted by

, is defined as:

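Again, any move sequence acting on a game will never alter the invariant. Therefore, an invariant that differs from that of the solved game implies that g is not solvable. In particular, on any board larger than $1 \times 1$, a game with only the $(1,1)$-st light lit has such an invariant.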

Unlike the case of even-by-odd games, the number of lit lights in a solvable game can either be odd or even. For example, an all-ones game has an odd number of lit lights, and it can be solved by clicking all the lights so that every light is toggled $m + n - 1$ times, where $m + n - 1$ is odd. On the other hand, a solved game is a solvable game with no lit lights, hence the number of lit lights is even.

Theorem 4. Let g be an odd-by-odd game. inv is a surjective function that maps g to its syndrome.

Similar to the discussion in the last subsection, the consequences of the above theorem include:

A game g is solvable if and only if $\mathrm{inv}(g) = \mathrm{inv}(0)$, because $\mathrm{inv}(0)$ is the syndrome of the solved game.

The number of cosets in $G/S$, i.e., the index $[G : S]$, is $2^{m+n-2}$, due to the surjection of inv.

The number of solvable games, i.e., $|S|$, is $2^{mn-m-n+2}$.

A game is solvable if and only if the parities of all rows and all columns are the same. The complexity of verifying its solvability is thus $O(mn)$.
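The corresponding check for odd-by-odd games compares all row parities and all column parities. The function name `is_solvable_odd_by_odd` is hypothetical, and the board is assumed to be a list of rows with odd dimensions.

```python
def is_solvable_odd_by_odd(g):
    """Solvability test for an odd-by-odd game: all row and column parities must agree."""
    row_parities = [sum(row) % 2 for row in g]
    col_parities = [sum(col) % 2 for col in zip(*g)]
    return len(set(row_parities + col_parities)) == 1


identity_game = [[1, 0, 0],
                 [0, 1, 0],
                 [0, 0, 1]]
single_light = [[1, 0, 0],
                [0, 0, 0],
                [0, 0, 0]]
print(is_solvable_odd_by_odd(identity_game))  # every row and column has odd parity
print(is_solvable_odd_by_odd(single_light))   # parities are mixed
```

For a game that passes this test, the earlier observation that all solvable odd-by-odd games are easy means the lit lights themselves form a solution.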

Seeking an efficient algorithm to find the minimal number of moves in solving an odd-by-odd game remains an open problem. For square grids, i.e., when $m = n$, the simplified problem was discussed in [24,25], but the answers there for odd-by-odd grids are exhaustive searches over $2^{2n-2}$ possibilities, where this number equals the size of $\ker\psi$ for odd-by-odd square grids as discussed in [23]. In general, we can easily show that $|\ker\psi| = 2^{m+n-2}$ by the rank-nullity theorem. This means that an exhaustive search on the kernel cannot be efficiently conducted.

4. Coding-Theoretical Perspective of Two-State Alien Tiles

The solvable game space S is a vector subspace of the game space G. That is, we can regard S as a linear code. Our task here is to find out the properties of S from the perspective of coding theory.

4.1. Hamming Weight

The basic parameter of an error-correcting code is the distance of the code. When the distance d is known, the error-detecting ability $d - 1$ and the error-correcting ability $\lfloor (d-1)/2 \rfloor$ are then obtained. For a linear code, the distance of the code equals the Hamming weight of the code, which is defined as the minimal Hamming weight among all the non-zero codewords of the code. However, finding the Hamming weight of a general linear code is NP-hard [16]. For two-state Alien Tiles, we can determine the Hamming weight of the solvable game space with ease, via the use of invariant functions.

Even-by-Even Games. These games are completely solvable, so a game with only one lit light has the minimal Hamming weight among all non-zero solvable games, i.e., the Hamming weight of such code is 1. This also means that even-by-even games have no error-detecting and correcting abilities.

Even-by-Odd Games. The syndrome of an even-by-odd solvable game is that of the solved game. There are two possible row parity vectors (of size $m \times 1$) that correspond to this syndrome, namely, the all-zeros vector $\mathbf{0}$ and the all-ones vector $\mathbf{1}$.

We first consider the row parity vector $\mathbf{0}$. Each row has an even number of lit lights. As the Hamming weight of a code only considers non-zero codewords, we cannot have a row parity vector $\mathbf{0}$ for any non-zero solvable game if $n = 1$. For $n > 1$, the smallest $\|s\|_1$ equals 2, where $s \in S$ and $s \neq 0$.

Now, we consider the row parity vector $\mathbf{1}$. Each row has an odd number of lit lights. That is, the smallest $\|s\|_1$ equals m, where $s \in S$ and $s \neq 0$. As $m$ is even, the smallest possible m is 2. That is, we do not need to consider the row parity vector $\mathbf{1}$ unless $n = 1$.

Odd-by-Odd Games. The syndrome of an odd-by-odd solvable game is the pair of zero vectors. If $m = 1$, only two games are in S, which are the all-zeros and the all-ones games. Therefore, the Hamming weight of the code is n. If $n = 1$, similarly, the Hamming weight is m. Both cases hold at the same time when $m = n = 1$.

Now, consider the case with both m and n greater than 1, i.e., each of them is at least 3. There are two possible parity pairs (in $\mathbb{F}_2^m \times \mathbb{F}_2^n$), namely, $(\mathbf{0}, \mathbf{0})$ and $(\mathbf{1}, \mathbf{1})$. For the former case, each row and each column has an even number of lit lights. In other words, the smallest number of lit lights among the non-zero solvable games with this parity pair is 4. For the latter case, each row and each column has an odd number of lit lights. That is, the minimal number of lit lights is no fewer than $\max\{m, n\}$.

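When $m = n$, it is easy to see that the minimal number of lit lights can be achieved by a game represented by an identity matrix. If $m \neq n$, without loss of generality, consider the case of $m < n$. One example that achieves the minimal number of lit lights n is the game formed by the $m \times m$ identity matrix followed by $n - m$ columns whose only lit light is in the first row. Note that unless $m = n = 3$, we have $\max\{m, n\} > 4$. Therefore, we reach the conclusion that the Hamming weight of the code is 3 when $m = n = 3$, and 4 for every other odd-by-odd game size with both m and n at least 3.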

One may further combine the aforementioned results of all game sizes as follows:
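The case analysis of this subsection can be cross-checked numerically on small boards. The following Python sketch is only an illustration under stated assumptions, not the authors' code: the function names are hypothetical, a move is assumed to toggle every light in the row and the column of the clicked light, and the solvable game space is taken to be the span of all move patterns over the binary field. It compares a brute-force minimum weight with the values derived above.

```python
from itertools import product


def click_mask(m, n, i, j):
    """Bitmask of the lights toggled by clicking (i, j): row i and column j."""
    mask = 0
    for a in range(m):
        for b in range(n):
            if a == i or b == j:
                mask |= 1 << (a * n + b)
    return mask


def solvable_games(m, n):
    """All solvable games (as bitmasks): the F_2-span of the click patterns."""
    span = {0}
    for i, j in product(range(m), range(n)):
        t = click_mask(m, n, i, j)
        span |= {g ^ t for g in span}
    return span


def min_weight_bruteforce(m, n):
    """Smallest number of lit lights among the non-zero solvable games."""
    return min(bin(g).count("1") for g in solvable_games(m, n) if g)


def min_weight_formula(m, n):
    """Hamming weight of the code, following the case analysis in the text."""
    if m % 2 == 1 and n % 2 == 0:
        m, n = n, m  # assumption: odd-by-even is handled by transposition
    if m % 2 == 0 and n % 2 == 0:
        return 1                      # even-by-even: every game is solvable
    if m % 2 == 0:
        return m if n == 1 else 2     # even-by-odd
    if m == 1 or n == 1:
        return max(m, n)              # odd-by-odd repetition code
    return 3 if m == n == 3 else 4    # odd-by-odd, m and n at least 3
```

The brute-force routine enumerates the whole solvable space, so it is only practical for boards with roughly twenty lights or fewer; the closed-form function has no such limit.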

4.2. Coset Leader

The quotient group $G/S$ contains all the cosets, where each coset contains all games that can be reached by a finite move sequence from a certain setup of the game. Two games are in the same coset if and only if there exists a move sequence that transforms one into the other. We have discussed the technique to identify the coset that a game belongs to, mainly by finding the syndrome of the game, where each coset is associated with a unique syndrome.

Any game g that lies in a coset C other than S is unsolvable. In the view of coding theory, we are interested in the minimal number of independent lights that we need to toggle such that the game becomes solvable. From the algebraic point of view, we want to know how far the coset C and the solvable space S are separated. That is, the mission is to find a game e with the smallest number of lit lights such that $g + e$ is solvable. Such e can be regarded as an error, so if we know the error pattern of each coset, an error-correcting code can be applied. In coding-theoretical terminology, this e is called the coset leader of the coset C.

Even-by-Even Games. As even-by-even games have no error-correcting ability, it is not an interesting problem to find out the coset leader. In fact, we have $S = G$, and thus the coset leader of the only coset is the solved game $\mathbf{0}$, which contains no lit lights.

Even-by-Odd Games. We consider the syndrome

of a game

g in a coset

C. We have two possible row parity vectors, namely,

First, note that the row parities of different rows are independent of each other. If the row parity of a row is 0, then there is an even number of lit lights in this row. That is, the minimal number of lit lights in this row is 0. If the row parity is 1, then there is an odd number of lit lights in this row, where the minimal number is 1. In other words, the smallest number of lit lights of a game whose row parity vector is r equals the number of 1’s in r. The situation is similar for the other row parity vector.

Note that

and

. As both

r and

correspond to the same syndrome

, we know that:

which is of time complexity

if

is known. The coset leader is an arbitrary game that belongs to

, for example:

The construction can be completed within time. During the construction of the coset leader, if we need to put a bit in a row, we can locate it arbitrarily. Therefore, the number of coset leaders in the coset is .

Odd-by-Odd Games. Consider the syndrome

. Write

and

. Let

The syndrome can be induced by four parity pairs, namely,

According to thm:paritydiff, we know that only two out of the four parity pairs are valid, because the difference between the numbers of 1’s in the row and the column parity vectors must be an even number. Let $(r, c)$ be a valid parity pair. If the parity of a row/column is 0, then the minimal number of lit lights in this row/column is 0. If the parity is 1, then the minimal number is 1. Thus, the minimal number of lit lights is bounded below by the larger of the number of 1’s in r and the number of 1’s in c.

Now, we use the construction in the proof of thm:paritydiff to construct a game with parity pair $(r, c)$. Note that the number of lit lights in this game equals the larger of the number of 1’s in r and the number of 1’s in c, which matches the lower bound. As there are two valid parity pairs, we need to see which one results in a game that has fewer lit lights. The one with fewer lit lights is the coset leader.

The overall procedure to find out the coset leader is as follows:

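Generate a parity pair $(r, c)$ from the syndrome.

If the total number of 1’s in r and c is not an even number, flip all the parity bits in either r or c.

If flipping all the parity bits in both r and c would lower the bound, i.e., the larger of the numbers of 1’s in the two flipped vectors is smaller than that of the current vectors, then flip all the parity bits in both r and c.

Construct a game that has the parity pair $(r, c)$ (by using the construction in the proof of thm:paritydiff); this game is the coset leader.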

As we need $O(mn)$ time to construct such a game, the complexities of the other computation steps, which take either $O(m)$ or $O(n)$ time, are absorbed by this dominant term; thus, the overall complexity to construct a coset leader is $O(mn)$.
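The procedure for odd-by-odd games can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function names are hypothetical, games are 0/1 lists of lists, and the parity pair is read directly from the game instead of being regenerated from a syndrome, so it is always valid and the first two steps collapse into one. The two placement phases follow the matching idea described in the text: pair up 1’s of the row and column parity vectors, then put the leftover lights into a single column or row.

```python
def parity_pair(g):
    """Row and column parity vectors of a 0/1 matrix given as a list of lists."""
    r = [sum(row) % 2 for row in g]
    c = [sum(col) % 2 for col in zip(*g)]
    return r, c


def coset_leader_odd_odd(g):
    """Return one minimum-weight game in the coset of g (m and n both odd)."""
    m, n = len(g), len(g[0])
    assert m % 2 == 1 and n % 2 == 1
    r, c = parity_pair(g)
    # Choose the valid parity pair with the smaller lower bound.
    if max(sum(r), sum(c)) > max(m - sum(r), n - sum(c)):
        r = [1 - x for x in r]
        c = [1 - x for x in c]
    rows = [i for i, x in enumerate(r) if x]
    cols = [j for j, x in enumerate(c) if x]
    e = [[0] * n for _ in range(m)]
    # Phase 1: match each 1 in r with a distinct 1 in c.
    for i, j in zip(rows, cols):
        e[i][j] = 1
    k = min(len(rows), len(cols))
    # Phase 2: the leftovers come in an even number; placing them all in
    # column 0 (or row 0) leaves that column's (row's) parity unchanged.
    for i in rows[k:]:
        e[i][0] = 1
    for j in cols[k:]:
        e[0][j] = 1
    return e
```

Placing all leftovers in column 0 or row 0 is just one of the many valid choices, which is why the coset leader is generally not unique.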

At the end of this construction, we can also obtain the minimal number of lit lights among all games in the coset, which is

. Let

We can also directly calculate this number as follows, with

c being flipped:

or equivalently (flipping

r):

This number can be calculated in time when is given.

Let

be the parity pair of a coset leader. We remark that the coset leader is non-unique when

or

. Denote:

To count the number of coset leaders, we recall the idea of the construction that we have used. The first phase matches each 1 in with a distinct 1 in to minimize the number of lit lights. There are totally combinations. If , then the construction is done, and the number of combinations is achieved. Otherwise, we proceed to the second phase, which is described as follows:

The remaining unmatched 1’s must either be only in the row parity vector or only in the column parity vector. We group them two by two so that if we put each group in the same row/column, the row/column parity will not be toggled. If

, then each group can choose any of the

n columns. If

, then each group can choose any of the

m rows. By applying a similar technique to generate the Lagrange coefficients for Lagrange interpolation, we can combine the two cases into one formula. That is, each group has

choices. It is not hard to see that we have enumerated all possibilities that can achieve the minimal number of lit lights. The number of groups is:

Therefore, the number of possible coset leaders when

is:

Combining the two cases, the number of possible coset leaders is:

4.3. Covering Radius

The covering radius problem of the game asks for the maximal number of lights remaining among all games when the player minimizes the number of lit lights. With the understanding of cosets, the desired number is represented by:

Even-by-Even Games. All even-by-even games are solvable, so the covering radius is trivially 0.

Even-by-Odd Games. Recall that for each coset

C,

As the syndrome is a vector in

, and each possible vector in

corresponds to a distinct coset, we can calculate the covering radius of even-by-odd games as follows:

Odd-by-Odd Games. Consider a syndrome of a game in a coset C. Let and . Each possible syndrome in corresponds to a distinct coset; therefore, it suffices to consider all possible and . Note that we are considering the syndrome but not the parity pair, and therefore can either be even or odd.

Case I:

is even. Then, we have:

To maximize this number among all cosets, we need to check both

and

, which gives

and

, respectively. That is, if we restrict ourselves to those

that are even, the covering radius is:

where

is the Kronecker delta, i.e.,

Case II:

is odd. Then, we have:

To maximize this number among all cosets, we need to check both and , which gives and , respectively. That is, if we restrict ourselves to those that are odd, the covering radius is , which is the same as when is even.

Combining these two cases, the covering radius of odd-by-odd games can be represented as:
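As a numerical cross-check of the covering radius, one can simply enumerate the possible numbers of 1’s in the parity vectors and take the largest coset-leader weight. The Python sketch below does exactly that; it is an illustration with hypothetical function names, and it assumes the coset-leader weights discussed in Section 4.2, namely the smaller of the two candidate weights obtained from a parity vector (or valid parity pair) and its flipped version. It does not reproduce the closed-form expression.

```python
def covering_radius_even_odd(m):
    """Even-by-odd games: a row parity vector with a ones needs min(a, m - a) lights."""
    assert m % 2 == 0
    return max(min(a, m - a) for a in range(m + 1))


def covering_radius_odd_odd(m, n):
    """Odd-by-odd games: maximise the coset-leader weight over valid parity pairs.

    a and b are the numbers of 1's in the row and column parity vectors;
    a pair is valid when a and b have the same parity.
    """
    assert m % 2 == 1 and n % 2 == 1
    best = 0
    for a in range(m + 1):
        for b in range(n + 1):
            if (a + b) % 2 == 0:
                weight = min(max(a, b), max(m - a, n - b))
                best = max(best, weight)
    return best
```

For instance, `covering_radius_odd_odd(3, 3)` evaluates to 2, attained by a game with two lit lights in the same row.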

4.4. Error Correction

The error correction problem is to find a solvable game by toggling the fewest number of lights individually. Depending on the capability of error correcting, such a corrected game may not be unique.

Even-by-Even Games. All even-by-even games are solvable; therefore, there is no unsolvable game for discussion.

Even-by-Odd Games. When $n > 1$, the Hamming distance is 2. Thus, the code has single error-detecting ability, but no error-correcting ability.

When $n = 1$, the Hamming distance is an even number. Denote such even number as m. Thus, the code can detect up to $m - 1$ errors and correct up to $m/2 - 1$ errors. Note that an $m \times 1$ game only consists of two codewords, namely, the all-zeros game $\mathbf{0}$ and the all-ones game $\mathbf{1}$.

Consider an arbitrary vector $w \in \mathbb{F}_2^m$. If there are $m/2$ 0’s and $m/2$ 1’s in w, then we cannot correct the error because the Hamming distance from w to either of the codewords is the same. If the number of 0’s in w is more than that of 1’s, then w is closer to $\mathbf{0}$. If the number of 1’s in w is more than that of 0’s, then w is closer to $\mathbf{1}$. In the latter two cases, the number of errors is at most $m/2 - 1$; thus, the closest solvable game is unique. In fact, this code is known as a repetition code in coding theory.

Odd-by-Odd Games. When $m > 1$ and $n = 1$, the code is again a repetition code as previously mentioned. However, in this context, m is an odd number; therefore, the code can correct up to $(m - 1)/2$ errors.

Consider an arbitrary vector $w \in \mathbb{F}_2^m$. It is impossible to have the same number of 0’s and 1’s in w. Therefore, we can always correct w to the closest solvable game and such a solvable game is unique. A similar result holds for $m = 1$ and $n > 1$ based on symmetry. However, when $m = n = 1$, the Hamming distance is 1; thus, the code has neither error-detecting nor error-correcting ability.

The non-trivial cases are the odd-by-odd games in which both m and n are greater than 1. Suppose we have an unsolvable odd-by-odd game g. We first calculate the syndrome to identify the coset that the game g belongs to. Then, we find an arbitrary coset leader e of such coset. Recall that the coset leader of a coset is the game that has the minimal number of lit lights. Therefore, the game $g + e$ is a closest solvable game.

The code distance of a $3 \times 3$ game is 3; thus, the code can detect up to 2 errors and correct 1 error. For games of any other size, the distance is 4; therefore, the code can detect up to 3 errors and correct 1 error. This also implies that for any unsolvable odd-by-odd game g, the closest solvable game is unique if and only if the coset leader of the coset that g belongs to has one and only one lit light.

In principle, we can use the syndrome of the game to directly locate the error when there is only 1 error. The procedure is as follows:

Calculate the syndrome of the given game g;

Initialize , , , and ;

If is odd, then let and flip all the bits in r;

If or , then the game g is already solvable, and this procedure is then completed;

If and , then there is more than one error, which implies that the errors cannot be uniquely corrected. Therefore, the procedure can again be terminated;

If , then flip all the bits in both vectors r and c;

Let y and x be the indices where the y-th entry in r is 1 and the x-th entry in c is 1. Then, the $(y, x)$-th light in g is the only error position.

The idea of the above procedure is similar to the construction of the coset leader. We first find a valid parity pair of g, i.e., one in which the total number of 1’s in r and c is even. Next, we filter out the cases where the game has no errors, or has more than one error. If r and c each contain exactly one 1, then the current parity pair already indicates the error. Otherwise, r contains exactly $m - 1$ 1’s and c contains exactly $n - 1$ 1’s; then, we flip both the row and column parity vectors such that there is exactly one 1 in both parity vectors. As a result, the coset leader has exactly one 1 in this case, where the location of the 1 is indicated by the parity pair.
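The single-error procedure can be sketched in Python as follows. This is a hedged illustration, not the authors' code: the function name and the returned labels are hypothetical, indices are 0-based, and the parity pair is computed directly from the game rather than from its syndrome, so the step that repairs an invalid parity pair is not needed. The sketch assumes an odd-by-odd board with both dimensions at least 3.

```python
def locate_single_error(g):
    """Classify an odd-by-odd game g (list of 0/1 rows, m and n at least 3).

    Returns ("solvable", None), ("multiple", None) or ("single", (y, x)),
    where (y, x) is the 0-based position of the only erroneous light.
    """
    m, n = len(g), len(g[0])
    assert m % 2 == 1 and n % 2 == 1 and m >= 3 and n >= 3
    r = [sum(row) % 2 for row in g]
    c = [sum(col) % 2 for col in zip(*g)]
    a, b = sum(r), sum(c)
    # All-zeros or all-ones parity pair: the game is already solvable.
    if (a, b) in ((0, 0), (m, n)):
        return "solvable", None
    # Exactly one 0 in each vector: flip both to expose a single 1 in each.
    if (a, b) == (m - 1, n - 1):
        r = [1 - x for x in r]
        c = [1 - x for x in c]
        a, b = 1, 1
    # Anything else means the coset leader has more than one lit light.
    if (a, b) != (1, 1):
        return "multiple", None
    return "single", (r.index(1), c.index(1))
```

Toggling the reported light then yields the unique closest solvable game.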

5. As an Error-Correcting Code

In this section, we discuss the use of the solvable game space of a two-state Alien Tiles game as an error-correcting code. Although the general linear code technique based on the generator matrix and the parity check matrix also works, the corresponding matrices have a much more complicated form. Here, we describe a natural and simple way for encoding and decoding. We also discuss whether the code is an optimal linear code or not, where optimality means that the linear code has the maximal number of codewords among all possible linear codes under the same set of parameters, for example, field size, codeword length, and code distance.

5.1. Even-by-Odd Games

We first discuss the $m \times 1$ games. An $m \times 1$ game only consists of two codewords, namely, the all-zeros game $\mathbf{0}$ and the all-ones game $\mathbf{1}$, so it is simply a repetition code. The message that we can encode is either the bit 0 or 1. To encode, we simply map 0 to $\mathbf{0}$ and map 1 to $\mathbf{1}$. The way to decode was discussed in the last section, which is precisely described as follows: Let $a, b \in \{0, 1\}$, where $a \neq b$. If the number of a’s in a to-be-decoded word is more than that of b’s, then we decode to a. If their numbers are equal, then we cannot uniquely decode the word.

Now, consider

. All these games have single error-detecting ability but no error-correcting abilities, because the code distance is 2. As the size of the solvable game space, i.e., the number of codewords, is

, we have

bits acting as the redundancy for error detection. The message that we can encode is an

-bits string. Denote the message by

, a natural way to encode the message is as follows: We first consider an

matrix:

and calculate its row parity vector

. If

, then we construct the game:

Otherwise, we construct the game:

The row parity vector of the former game is , and the one of the latter game is . That is, both games are solvable. Thus, we have encoded the message.

Note that the -st entry of the encoded message is the same as . Therefore, we can perform the following steps to decode a game g:

Calculate the row parity vector r of the game g.

If r is neither the all-zeros vector nor the all-ones vector, then we have detected the existence of errors, but we cannot decode the game; thus, the decoding procedure can be terminated.

Otherwise, no error is detected; thus, the message can be recovered by referring to the matrix.
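The encoding and decoding of even-by-odd games with more than one column can be sketched in Python as follows. The layout is an assumption made for this illustration, since other placements work equally well: the first $m(n - 1)$ message bits fill the first $n - 1$ columns row by row, the last column carries the parity information, and the last message bit is stored in the top entry of the last column. The function names are hypothetical.

```python
def encode_even_odd(u, m, n):
    """Encode a message u of m*(n-1)+1 bits into a solvable m-by-n game."""
    assert m % 2 == 0 and n % 2 == 1 and n > 1
    assert len(u) == m * (n - 1) + 1
    # Message bits (except the last one) fill an m-by-(n-1) matrix D.
    D = [u[i * (n - 1):(i + 1) * (n - 1)] for i in range(m)]
    r = [sum(row) % 2 for row in D]
    # Flip the whole parity column when its top entry disagrees with the
    # last message bit; every row parity then equals `flip`.
    flip = r[0] ^ u[-1]
    return [list(D[i]) + [r[i] ^ flip] for i in range(m)]


def decode_even_odd(g):
    """Return the message, or None when an error is detected."""
    m = len(g)
    r = [sum(row) % 2 for row in g]
    if sum(r) not in (0, m):  # neither all-zeros nor all-ones
        return None
    return [x for row in g for x in row[:-1]] + [g[0][-1]]
```

Because the code distance is 2, a single toggled light always makes `decode_even_odd` return `None`, while two toggled lights in the same row go unnoticed.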

Lastly, we discuss the optimality of the code. The optimality of $m \times 1$ games follows the optimality of repetition codes, which can easily be verified by the use of the Singleton bound [26].

Theorem 5. For even-by-odd games (where ), only the games are optimal linear codes.

Proof. We first consider

. Recall that

. By applying the Singleton bound [

26], we have:

where

denotes the maximal number of codewords among all possible linear or non-linear codes with field size

q, codeword length

ℓ, and code distance

d. As

, we know that all

games are optimal linear codes.

Now, we consider . If there exists a linear code having the same set of parameters, but of a greater number of codewords, we can conclude that the error-correcting code (ironically without error-correcting ability) formed by even-by-odd games (with ) is not an optimal linear code. The existence of such code is shown by the following construction. Consider , where is odd. Take any two arbitrary such that their Hamming distance is 1. One of them has odd number of bits and the other has even number of bits. Without loss of generality, assume a has an odd number of bits. We append a parity bit, i.e., 1, at the end of a, and append a parity bit, i.e., 0, at the end of b. The extended words (vectors) are elements in , and their Hamming distance is 2. That is, by appending a parity bit to every word in D, we obtain a linear code that has the same set of parameters as the even-by-odd games when . The number of codewords is , which is greater than . This proves the non-optimality of even-by-odd games, with and . □

5.2. Odd-by-Odd Games

When $m = 1$ or $n = 1$ (but not both), the code is a repetition code; thus, it is optimal. The way to encode and decode is similar to that of even-by-1 games, except that the numbers of 0’s and 1’s are never the same. If $m = n = 1$, then there is no unsolvable game. Thus, we do not need to consider this case.

In the remaining discussion of this sub-section, we only consider odd-by-odd games with both m and n greater than 1.

Theorem 6. For odd-by-odd games (where both m and n are greater than 1), only the $3 \times 3$ games form an optimal linear code.

Recall that for all $3 \times 3$ games, the Hamming distance of this code is 3. Thus, it is 2 error-detecting and 1 error-correcting. For games of larger sizes, i.e., $m, n \geq 3$ but not $m = n = 3$, their Hamming distances are all 4. Thus, they are 3 error-detecting and 1 error-correcting. That is, we can only correct up to one error. Regardless of the optimality, we now discuss the natural way to encode and decode.

The dimension of the solvable game space is

; thus, the message that we can encode is an

-bits string. Denote the message by

. First, we put the message into a matrix in the form:

Let

be the parity pair of

D. By thm:paritydiff, the number of bits in

r and

c must either be both odd or both even. If the number of bits in

r (or

c) is even, then the game

has a parity pair

. If the number of bits in

r (or

c) is odd, then the game

has a parity pair

. In both of the above games, if the

-st entry is not equal to

, then we click the

-st light so that all the lights in the first row and the first column are toggled. Combining these two cases,

Table 1 shows the condition to flip all the bits in the parity pair.

The table is actually the truth table of exclusive OR (XOR). As addition in binary field is equivalent to modulo 2 addition, we can write the desired codeword as:

To decode a game, we can run the procedure as described in Section 4.4. The procedure has three types of output:

The first type of output indicates the game is a solvable one. Therefore, we can directly extract the message.

The second type of output indicates the game has more than one error, so the error correction is non-unique. Thus, we consider the code as non-decodable.

The third type of output indicates the location of the single error. Let $(y, x)$ be the location of the error; we can correct the error by flipping the $(y, x)$-th bit of the game grid.
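The encoding of odd-by-odd games can be sketched in Python as follows. The layout is an assumption made for this illustration: the first $(m - 1)(n - 1)$ message bits fill the lower-right block row by row, the rest of the first column and first row hold the row and column parities of that block, and the top-left light holds the last message bit. Whether the parity entries are flipped follows the XOR rule between the last message bit and the parity of the number of 1’s in r. The function names are hypothetical, and the error-location step of Section 4.4 is not repeated here.

```python
def encode_odd_odd(u, m, n):
    """Encode a message u of (m-1)*(n-1)+1 bits into a solvable m-by-n game."""
    assert m % 2 == 1 and n % 2 == 1 and m >= 3 and n >= 3
    assert len(u) == (m - 1) * (n - 1) + 1
    D = [u[i * (n - 1):(i + 1) * (n - 1)] for i in range(m - 1)]
    r = [sum(row) % 2 for row in D]
    c = [sum(col) % 2 for col in zip(*D)]
    # XOR rule: flip every parity entry iff (number of 1's in r mod 2)
    # differs from the last message bit.
    f = (sum(r) % 2) ^ u[-1]
    top = [u[-1]] + [x ^ f for x in c]
    return [top] + [[r[i] ^ f] + list(D[i]) for i in range(m - 1)]


def is_solvable_odd_odd(g):
    """A game is solvable iff its parity pair is all-zeros or all-ones."""
    r = [sum(row) % 2 for row in g]
    c = [sum(col) % 2 for col in zip(*g)]
    return len(set(r + c)) == 1


def extract_message(g):
    """Read the message back from an error-free codeword."""
    return [x for row in g[1:] for x in row[1:]] + [g[0][0]]
```

With this layout every message bit appears verbatim in the codeword, so decoding an error-free or corrected game is a direct read-out.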

5.3. Example

To demonstrate the error-correcting code, we provide an example in this sub-section. Consider the

games. Suppose we want to encode the message

. First, the matrix

D that consists of the first four bits of the message is:

Then, we fill in the parity pair of

D, which is

, to a

game:

As

and the last bit of the message is 0, according to

Table 1, we need to flip the first row and the first column except the

-st entry. Thus, the encoded codeword is:

Now, we introduce an error to the codeword. Note that the error can be located at any entry in the codeword, including those bits that are not the message bits. For example, the erroneous codeword is:

To correct such error, we first calculate the syndrome of this game, which is

. Next, we initialize

and

. As

is odd, we flip the bits in

r. Thus,

r becomes

. Now, we have:

so we flip both

r and

c. That is, we have

and

. This pair of bits indicates that the error is located at:

Afterwards, we can correct the error by

and obtain the codeword

By directly reading this matrix, the decoded message can be recovered.

6. States Decomposition

One may wonder whether we can practically decompose the four states of the ordinary (four-state) Alien Tiles to obtain two two-state Alien Tiles games. We describe a states decomposition method below, where the number of states and the toggle patterns can be arbitrary. Suppose there are

q states. We reuse

to denote the matrix of all vectorized toggle patterns. Suppose

q has

P distinct prime factors. We can express

q in canonical representation:

where

are distinct primes.

To show whether the (vectorized) game

g is solvable, it is equivalent to show whether the linear system

is solvable. By applying the Chinese remainder theorem, we can decompose the system into

and combine the solutions of the above system into a unique modulo

q solution afterwards. Our task becomes solving the linear system in the form of:

where

p is a prime. If

, we cannot further decompose the system, which means that we have to directly solve the problem.

Consider

. The solution of Equation (

1) is also a solution of the linear system

Let

be a solution of this system. We have:

for some integer vector

z (after vectorization). Let

be a solution of Equation (

1). We have:

By dividing

p, we obtain:

Repeatedly applying this procedure, we can reduce the power of the prime in the modulo until .

The main issue of this decomposition is that the solution may not be unique. We need to try which is solvable after we reduce the prime power, where is equivalent to a zero game. A wrong choice can lead to an unsolvable system. This also happens when we reduce the four-state Alien Tiles to the two-state ones.

However, if m and n are both even, all four-state Alien Tiles games are solvable [4,21]. In other words, the move sequence to solve an arbitrary game is unique (up to permutation). We do not need to be concerned about the choice of

as every even-by-even two-state Alien Tiles is solvable. A summary of the procedures in solving a four-state Alien Tiles

g by states decomposition is as follows:

Find a move sequence k that solves the two-state Alien Tiles .

Calculate without performing modulo.

Find a move sequence y that solves the two-state Alien Tiles .

The move sequence in solving the four-state Alien Tiles g is .
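For even-by-even boards, the decomposition can be sketched in Python as follows. The sketch is an illustration under stated assumptions, not the authors' implementation: each click is assumed to advance every light in the clicked row and column by one state modulo 4, the solved game is the all-zeros grid, and the function names are hypothetical. The two-state solver uses the identity that, on an even-by-even board, clicking light $(i, j)$ exactly when $r_i + c_j + g_{ij}$ is odd (with r and c the row and column parities of g) solves the two-state game; any other two-state solver could be swapped in.

```python
def solve_two_state_even_even(g):
    """Click pattern (0/1 matrix) that solves an even-by-even two-state game g."""
    r = [sum(row) % 2 for row in g]
    c = [sum(col) % 2 for col in zip(*g)]
    return [[(r[i] + c[j] + g[i][j]) % 2 for j in range(len(c))]
            for i in range(len(r))]


def apply_moves(k):
    """Integer number of state advances of each light when (i, j) is clicked k[i][j] times."""
    R = [sum(row) for row in k]
    C = [sum(col) for col in zip(*k)]
    return [[R[i] + C[j] - k[i][j] for j in range(len(C))]
            for i in range(len(R))]


def solve_four_state_even_even(g):
    """Solve a four-state game g (entries 0..3) by two two-state solves."""
    m, n = len(g), len(g[0])
    assert m % 2 == 0 and n % 2 == 0
    # Step 1: solve g modulo 2.
    k = solve_two_state_even_even([[x % 2 for x in row] for row in g])
    # Step 2: apply k without reducing modulo 4; every entry is now even.
    t = apply_moves(k)
    h = [[(g[i][j] + t[i][j]) // 2 for j in range(n)] for i in range(m)]
    # Step 3: solve the halved game modulo 2.
    y = solve_two_state_even_even([[x % 2 for x in row] for row in h])
    # Step 4: combine the two move sequences as k + 2y.
    return [[k[i][j] + 2 * y[i][j] for j in range(n)] for i in range(m)]


def is_solved_by(g, k):
    """Check that clicking according to k brings every light of g to state 0."""
    t = apply_moves(k)
    return all((g[i][j] + t[i][j]) % 4 == 0
               for i in range(len(g)) for j in range(len(g[0])))
```

For example, for the $2 \times 2$ game with rows (1, 2) and (3, 0), the sketch returns the click counts (2, 1) and (0, 3), which `is_solved_by` confirms.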

As an example, consider the four-state Alien Tiles:

where the four states are mapped to 0, 1, 2, and 3, respectively. The first step is to solve the two-state game:

After that, we solve another two-state game:

Finally, we obtain the move sequence that solves the four-state game

g, which is:

7. Conclusions

The study of Lights Out and its variants is mostly of theoretical interest and serves pedagogical purposes. The main goal of this paper is to stimulate the interest of the recreational mathematics community in investigating special features of switching games, apart from dealing with the solvability problems. Furthermore, this study can provide an entry point for recreational mathematicians to easily pick up and explore related switching game problems, even though they may not excel in coding theory and related mathematical disciplines.

In this paper, we investigated the properties of two-state Alien Tiles, including the solvability, invariants, etc., based on different settings. We also discussed the efficient methods to deal with coding-theoretical problems for the game, such as the Hamming weight, the coset leader, and the covering radius. We also demonstrated how to apply the game as an error-correcting code with a natural way to encode and decode and verified that particular game sizes can form optimal linear codes. An open problem that remains in this paper is the existence of an efficient method to find out the minimal number of moves (the shortest solution) in solving a solvable game. Lastly, we discussed the states decomposition method and left an open problem on the issue of kernel selection.

One future direction is to study the coding-theoretical problems on the ordinary Lights Out and also its other variants. Different variants possess different structures and have to be explored in depth. In particular, some variants may have easy solutions, e.g., the two-state Alien Tiles that we have discussed in this paper; while some of them may not, e.g., the Gale–Berlekamp switching game. In addition to constructing easy solutions, proving how hard a game problem is will also be a potential research direction.

Another future direction is to investigate more structural properties of the ordinary four-state Alien Tiles. In the four-state version, there is a subtle issue when we model the problem via the use of linear algebra. Take the odd-by-odd games as an example: We can click all the lights twice in (a) two arbitrary distinct columns, (b) two arbitrary distinct rows, or (c) an arbitrary column, followed by an arbitrary row, to keep an arbitrary game unchanged. The combination of these actions forms a kernel. As each light must be clicked an even number of times, the kernel is a free module instead of a vector space over $\mathbb{F}_4$, which means that it is a module that has a basis. This is because multiplication in this finite field does not have the same effect as taking modulo after integer multiplication. In particular, applying two double clicks is equivalent to “no clicking”; however, $2 \cdot 2 \neq 0$ in $\mathbb{F}_4$.

On top of this, we have concluded that the states decomposition has an issue in finding a proper element in the kernel that leads to a solvable game in the lower state. However, this also means that we have reduced the search space by eliminating those elements in the kernel that lead to an unsolvable game in the lower state. A future direction is to investigate whether this decomposition can help in solving constraint programming problems like that described in [27]. This problem is a recreational mathematics problem on Alien Tiles, which aims to find the solvable game that has the longest “shortest solution”. Existing approaches reduce the search space mainly by symmetry breaking [28,29,30]. The use of our states decomposition together with symmetry breaking in obtaining desired solvable games (which may not be unique) is a potential research direction.