MIRA: Model-Based Imagined Rollouts Augmentation for Non-Stationarity in Multi-Agent Systems

Abstract

1. Introduction

2. Related Work

2.1. Centralized Training Techniques

2.2. Multi-Agent Communication

2.3. Opponent Modeling

2.4. Meta-Reinforcement Learning

3. Preliminaries

3.1. Partially Observable Stochastic Games

3.2. Model-Based Environment Dynamics

4. Method

4.1. Proposed Approach

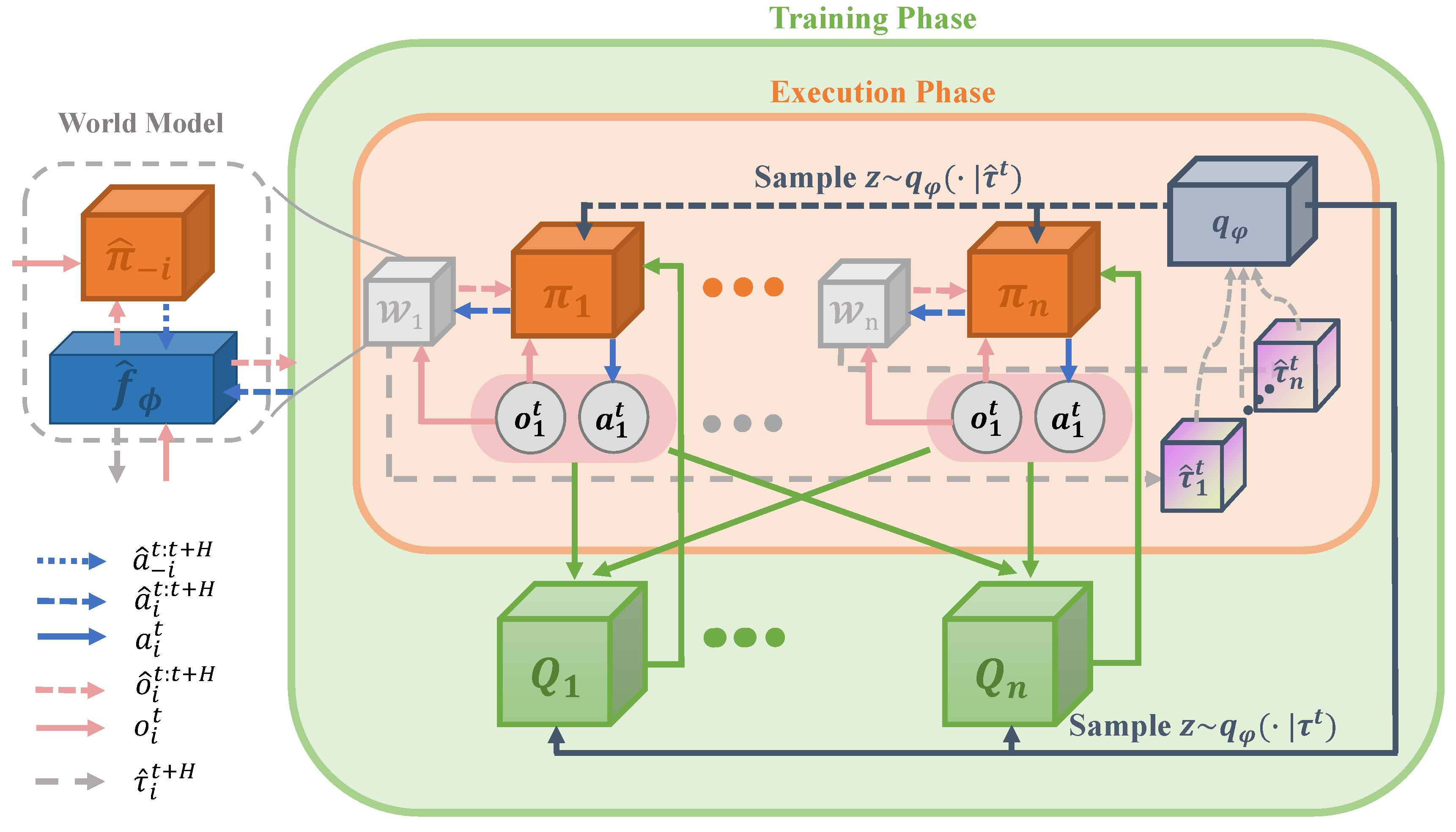

- The world model of agent i (illustrated as the gray cubes in Figure 1) is trained in a supervised manner on the data collected in the replay buffer. It consists of an environment dynamics model and opponent models, which represent the estimated policies of the other agents. A hyperparameter H sets the horizon of recursive reasoning for the world model.

- The latent-variable inference module (illustrated as a pewter cube) extracts the trajectory from historical data and records it in the replay buffer together with the experience at each time step within an episode. The module maps the accumulated off-policy trajectory data to a probabilistic latent variable Z, which is resampled from a posterior distribution (illustrated as a pewter solid arrow) conditioned on the trajectory.

- The critic module and the actor module (shown as the green cubes and the orange cubes, respectively) exploit the latent variable Z in reinforcement learning tasks. Z is a probabilistic variable that embeds the non-stationarity of the current environment and is incorporated into the network input vectors during training. The critic module is implemented as a deep Q-learning network and essentially acts as a state-action value function. The actor module updates its parameters via the policy gradient guided by the corresponding critic module.
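The role of the horizon H in the world model can be illustrated with a minimal sketch. It is not the authors' implementation: the function `imagined_rollout` and the toy policy, opponent, and dynamics callables are hypothetical stand-ins for learned networks, chosen only to show how an agent's own policy, its opponent models, and a dynamics model might be chained for H imagined steps.

```python
import numpy as np

def imagined_rollout(obs, own_policy, opponent_models, dynamics_model, horizon):
    """Roll a (hypothetical) learned world model forward for `horizon` steps."""
    trajectory = []
    for _ in range(horizon):
        own_action = own_policy(obs)
        # Opponent models stand in for the other agents' true policies.
        opp_actions = [model(obs) for model in opponent_models]
        joint_action = np.concatenate([own_action] + opp_actions)
        # The dynamics model predicts the next observation and the reward.
        next_obs, reward = dynamics_model(obs, joint_action)
        trajectory.append((obs, joint_action, reward, next_obs))
        obs = next_obs
    return trajectory

# Toy stand-ins (assumptions for illustration, not learned models).
def toy_policy(obs):
    return -0.1 * obs

def toy_opponent(obs):
    return 0.05 * obs

def toy_dynamics(obs, joint_action):
    # First half of the joint action is the agent's, second half the opponent's.
    next_obs = obs + joint_action[:2] + joint_action[2:]
    reward = -float(np.sum(next_obs ** 2))
    return next_obs, reward

rollout = imagined_rollout(
    np.array([1.0, -1.0]), toy_policy, [toy_opponent], toy_dynamics, horizon=5
)
```

In this sketch, a larger `horizon` yields longer imagined trajectories, at the cost of compounding any error in the dynamics and opponent models.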

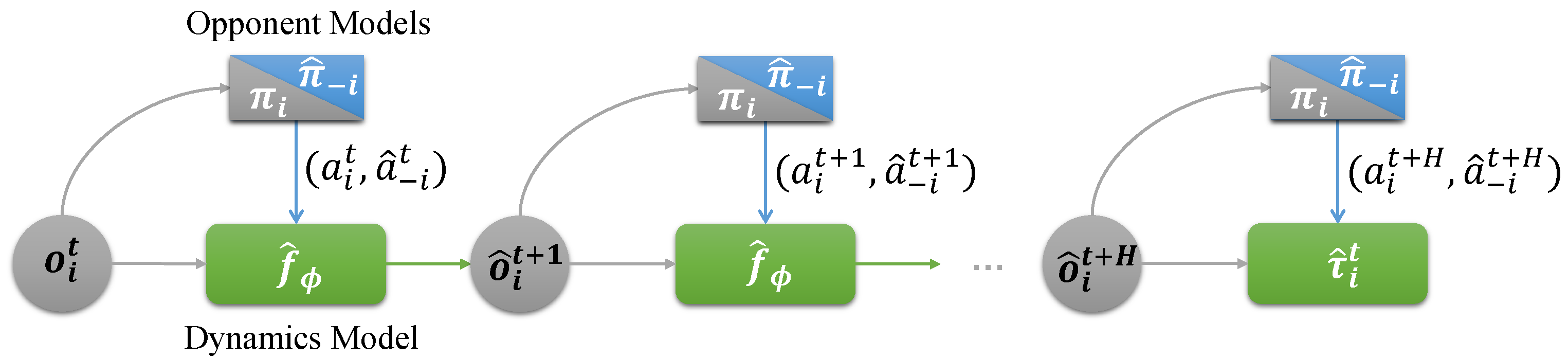

4.2. World Model

4.2.1. Modeling Environment Dynamics

4.2.2. Reasoning Imagined Rollouts

4.3. Latent-Variable Inference Module

4.4. Implementation on Off-Policy Reinforcement Learning

Algorithm 1 Centralized training.

Parameter: Learning rate, rollout length H.

Output: Actor networks and critic networks.

Algorithm 2 Decentralized executing.

Input: Actor networks and critic networks.

5. Empirical Evaluation

5.1. Experimental Settings

5.1.1. Experimental Environment

5.1.2. Baselines

5.2. Performance Evaluation

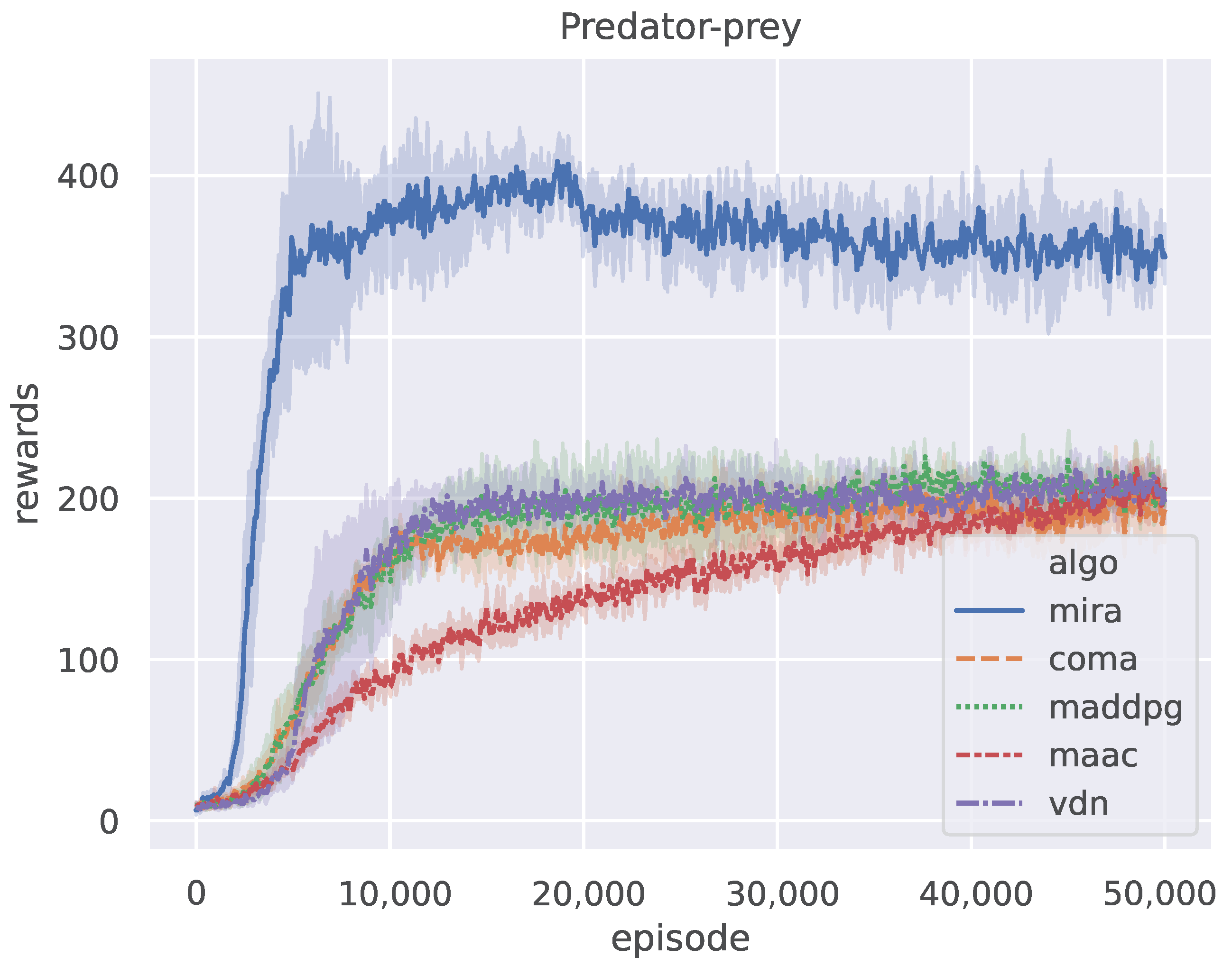

5.2.1. Overall Results

- Convergence Reward (CR): The mean value of the episode reward in the last 100 episodes, used as the convergence result of the algorithms.

- Convergence Duration (CD): The number of training episodes required for the episode reward to stabilize within a fixed margin of the convergence reward.
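The two metrics above can be computed from a list of per-episode rewards. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, the stabilization margin is passed in as a relative tolerance `margin` because its value is not given here, and "stabilized" is read as "every later episode stays inside the margin". In practice the reward curve would probably be smoothed first.

```python
import numpy as np

def convergence_reward(episode_rewards, window=100):
    """CR: mean episode reward over the last `window` episodes."""
    rewards = np.asarray(episode_rewards, dtype=float)
    return float(rewards[-window:].mean())

def convergence_duration(episode_rewards, margin, window=100):
    """CD: number of episodes elapsed before the reward stays within
    `margin * |CR|` of CR for the rest of training (assumed reading)."""
    rewards = np.asarray(episode_rewards, dtype=float)
    cr = convergence_reward(rewards, window)
    tolerance = margin * abs(cr)
    outside = np.abs(rewards - cr) > tolerance
    if not outside.any():
        return 0  # inside the margin from the very first episode
    # Index of the last episode that falls outside the margin, as a count.
    return int(np.nonzero(outside)[0][-1]) + 1

# Toy reward curve: a 10-episode ramp followed by a flat plateau.
rewards = list(range(10)) + [10.0] * 200
cr = convergence_reward(rewards)
cd = convergence_duration(rewards, margin=0.05)
```

With this reading, a run whose final episode is still outside the margin returns the total number of episodes, which signals that the reward never stabilized.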

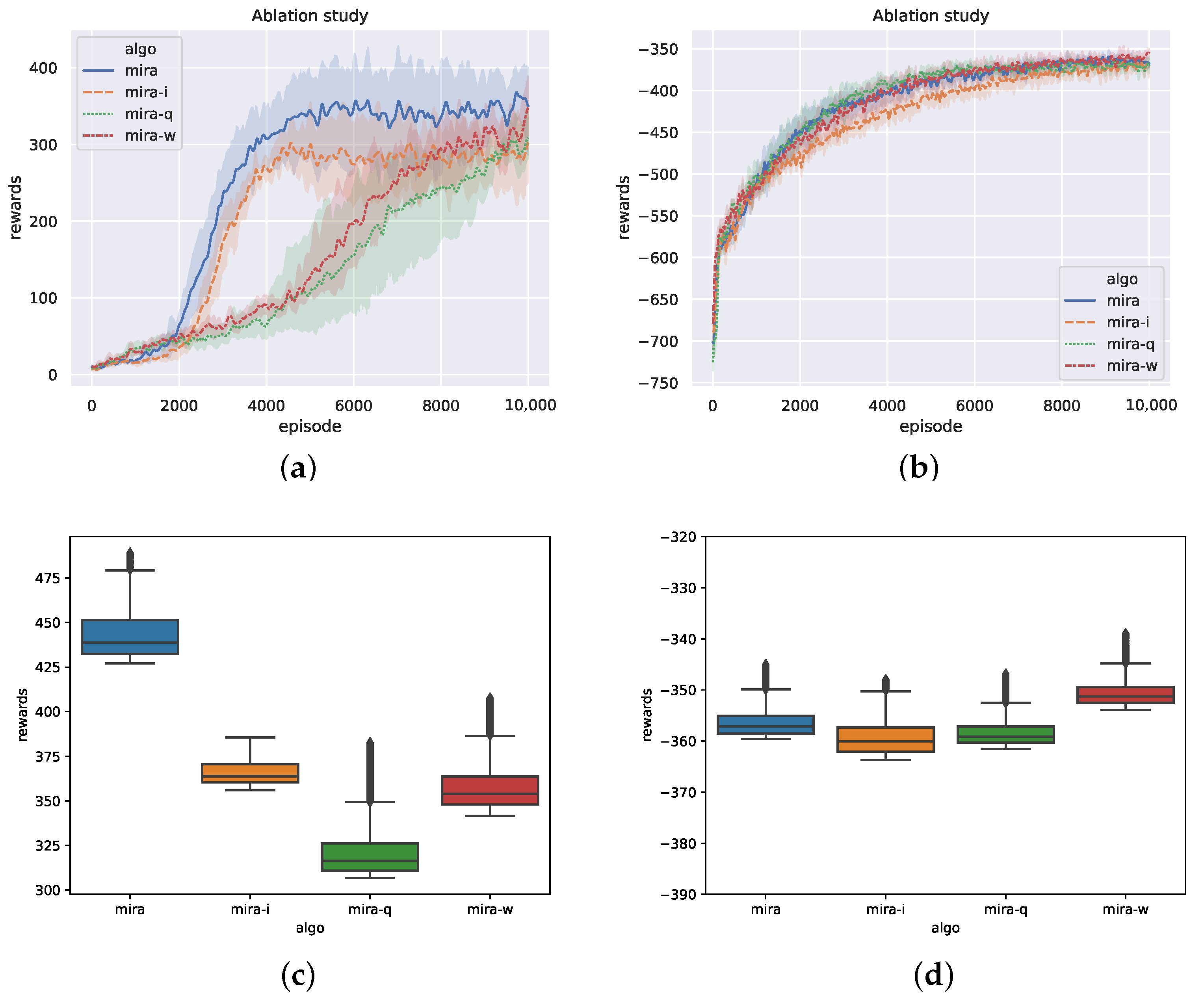

5.2.2. Ablation Studies

- MIRA-w is a model-free MARL method without the world model and serves as the baseline for the other variants.

- MIRA-q adopts an LSTM network as part of the actor module and processes the trajectory into a real-valued representation instead of an estimated distribution.

- MIRA-i uses a latent-variable inference module to enhance policy exploration by posterior sampling, and learns the posterior probability of a given input trajectory by maximizing the expected return from the critic modules. However, the loss function of its inference module omits the information bottleneck, which allows a direct comparison with MIRA.
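To make the difference between MIRA-i and MIRA concrete, the sketch below shows one common way an information bottleneck is applied to a latent variable: a KL penalty that pulls the inferred posterior toward a fixed prior. The diagonal-Gaussian posterior, the standard-normal prior, and both function names are assumptions for illustration; the loss actually used by MIRA is not reproduced here.

```python
import numpy as np

def sample_latent(mean, log_var, rng):
    """Posterior sampling via the reparameterization z = mean + std * eps."""
    std = np.exp(0.5 * log_var)
    return mean + std * rng.standard_normal(mean.shape)

def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, diag(exp(log_var))) || N(0, I) ), the bottleneck penalty."""
    return 0.5 * float(np.sum(mean ** 2 + np.exp(log_var) - 1.0 - log_var))

rng = np.random.default_rng(0)
mean = np.array([0.5, -0.5])   # hypothetical encoder output for one trajectory
log_var = np.zeros(2)          # unit variance in each latent dimension

z = sample_latent(mean, log_var, rng)
kl = kl_to_standard_normal(mean, log_var)  # 0.25 for these inputs
```

Under this assumed form, a variant like MIRA-i would train the encoder on the critic-driven objective alone, while a bottlenecked variant would add a weighted `kl` term to that objective.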

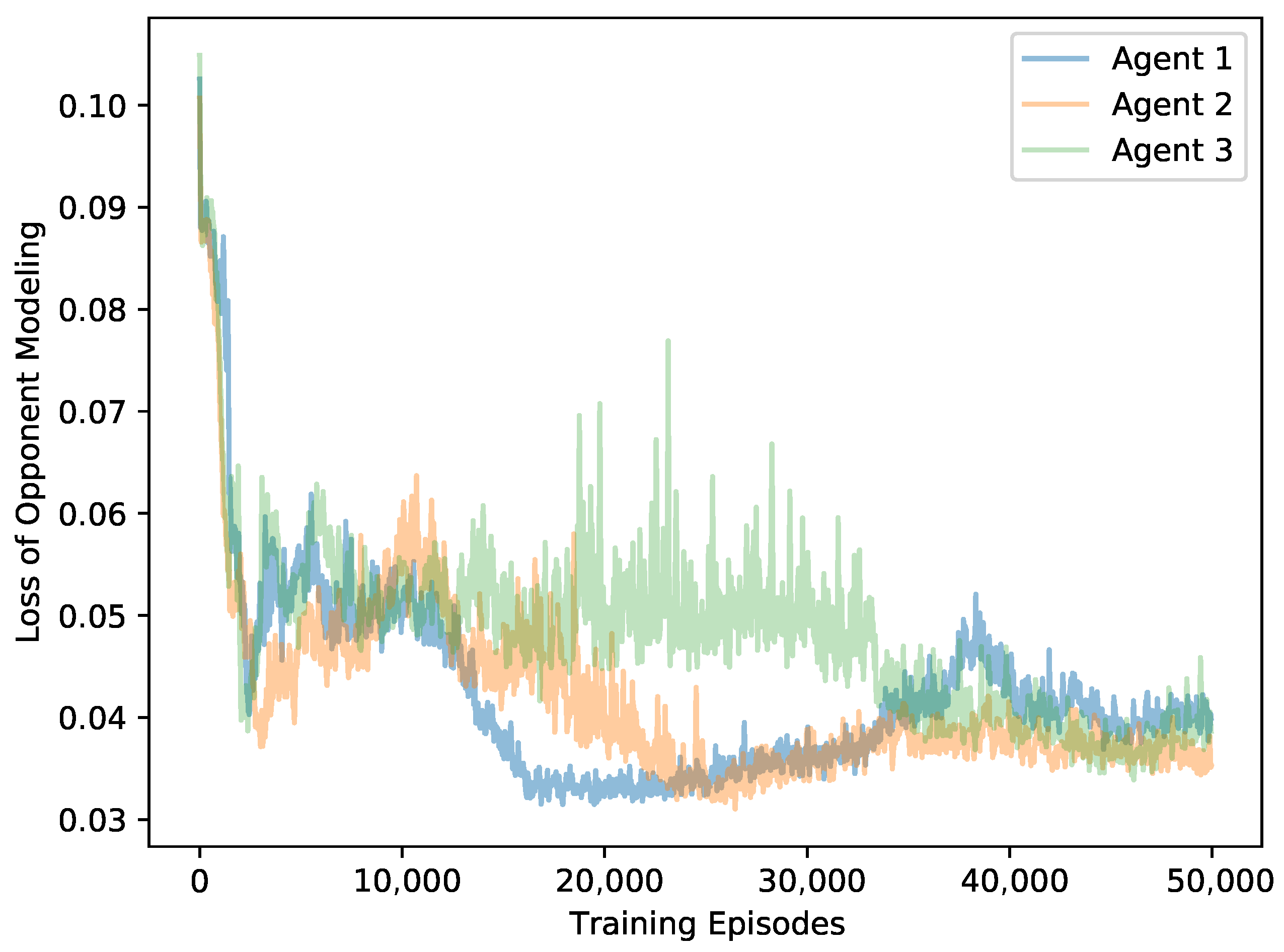

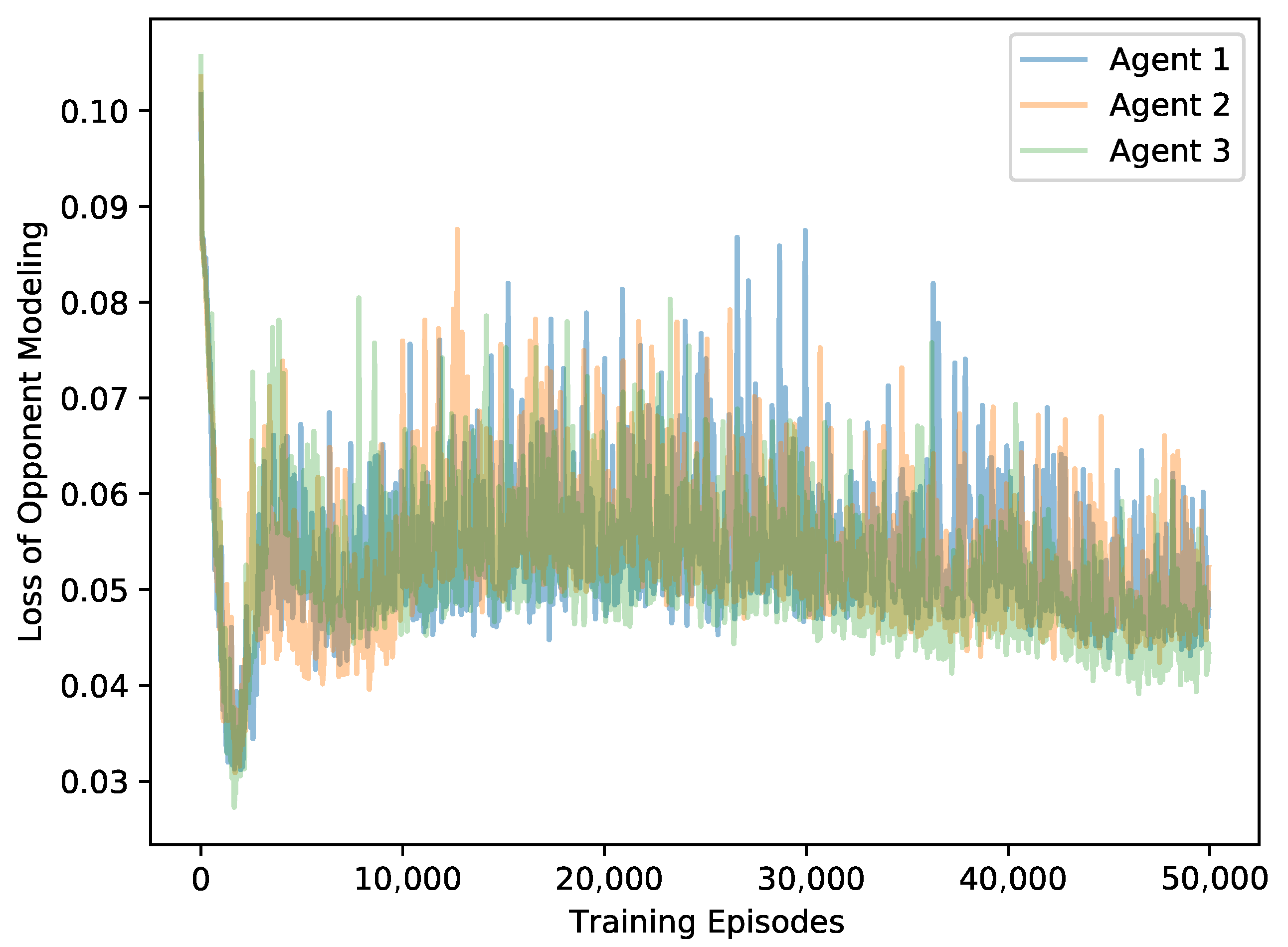

5.2.3. Opponent Loss

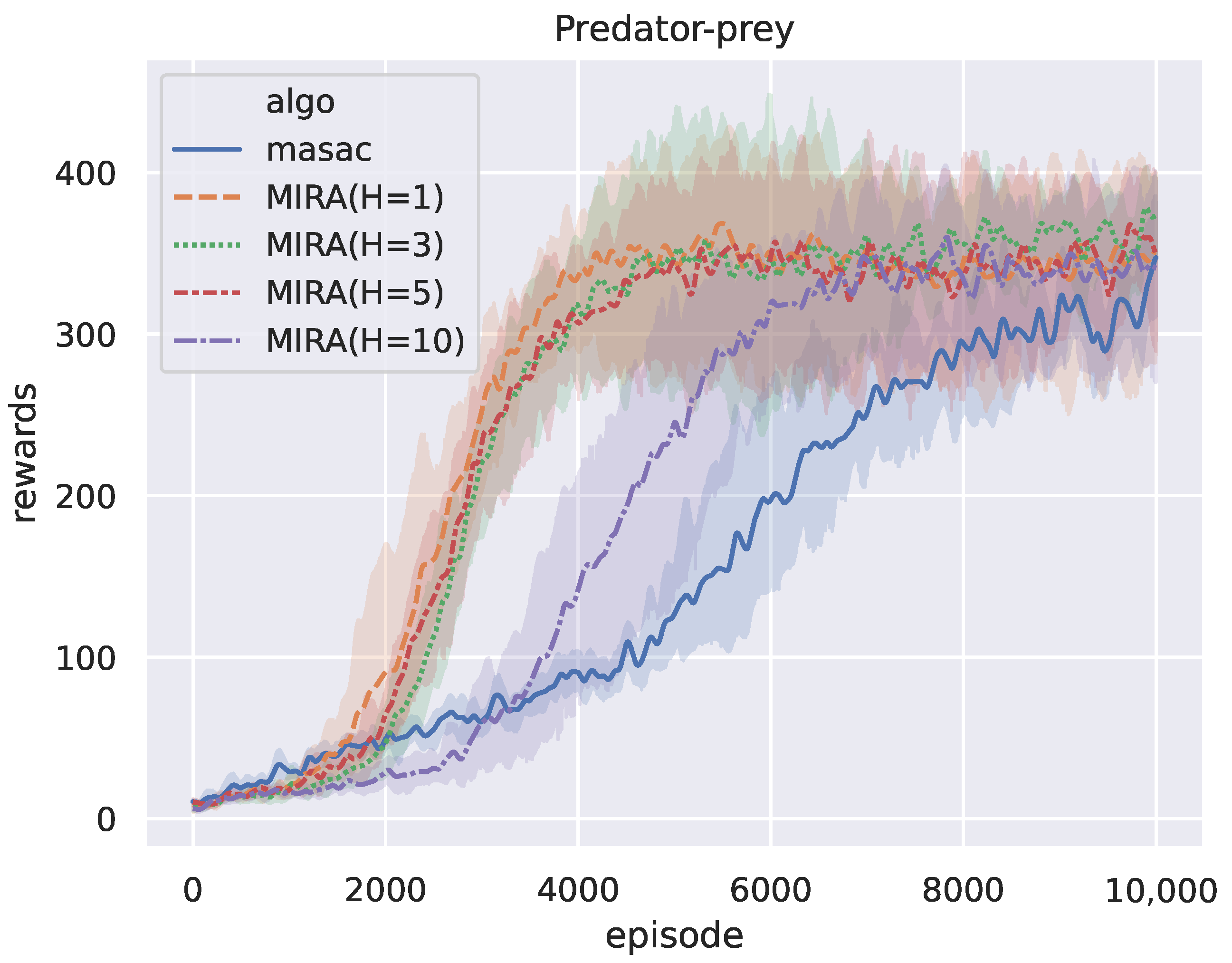

5.2.4. Hyperparameter Test

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Learning rate (model, actor, critic) | 1 | Discount factor | 0.95 |
| MLP hidden layers | 2 | Inference module hidden size | 128 |
| Critic hidden size | 128 | Actor hidden size | 128 |
| Batch size b | 128 | Number of ensemble models M | 5 |
| Target network update rate | 0.01 | Replay buffer size | 1 |
| Epsilon greedy | 0.95 | Target entropy | −0.05 |
| Encoder entropy coefficient | 0.1 | Opponent model coefficient | 0.01 |
| Rollout length H (MIRA) | 5 | Attention heads (MAAC) | 4 |

| | MIRA | MAAC | COMA | MADDPG | VDN |
|---|---|---|---|---|---|
| CR (mean ± std) | | | | | |
| CD (episodes) | 4376 | 33,053 | 24,909 | 19,039 | 12,162 |

| MIRA | MAAC | COMA | MADDPG | VDN | |
|---|---|---|---|---|---|
| CR (mean ± std) | | | | | |
| CD (episodes) | 30,095 | 29,345 | 28,071 | 30,145 |

| Methods | Model-Free Baseline | Usage of the Imagined Rollouts | Optimize with Information Bottleneck |
|---|---|---|---|
| MIRA-w | MIRA-w | × | × |
| MIRA-q | MIRA-w | Representation learning | × |
| MIRA-i | MIRA-w | Infer a latent variable Z | × |
| MIRA | MIRA-w | Infer a latent variable Z | ✓ |

| H | 1 | 3 | 5 | 10 | Baseline |
|---|---|---|---|---|---|
| CR (mean ± std) | | | | | |
| CD (episodes) | 3289 | 3451 | 3639 | 4742 | 6235 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, H.; Fang, Q.; Hu, C.; Hu, Y.; Yin, Q. MIRA: Model-Based Imagined Rollouts Augmentation for Non-Stationarity in Multi-Agent Systems. Mathematics 2022, 10, 3059. https://doi.org/10.3390/math10173059
