Sensor-Based Prognostic Health Management of Advanced Driver Assistance System for Autonomous Vehicles: A Recent Survey

Abstract

1. Introduction

2. Overview of PHM, ADAS in AVs, and Sensor Faults

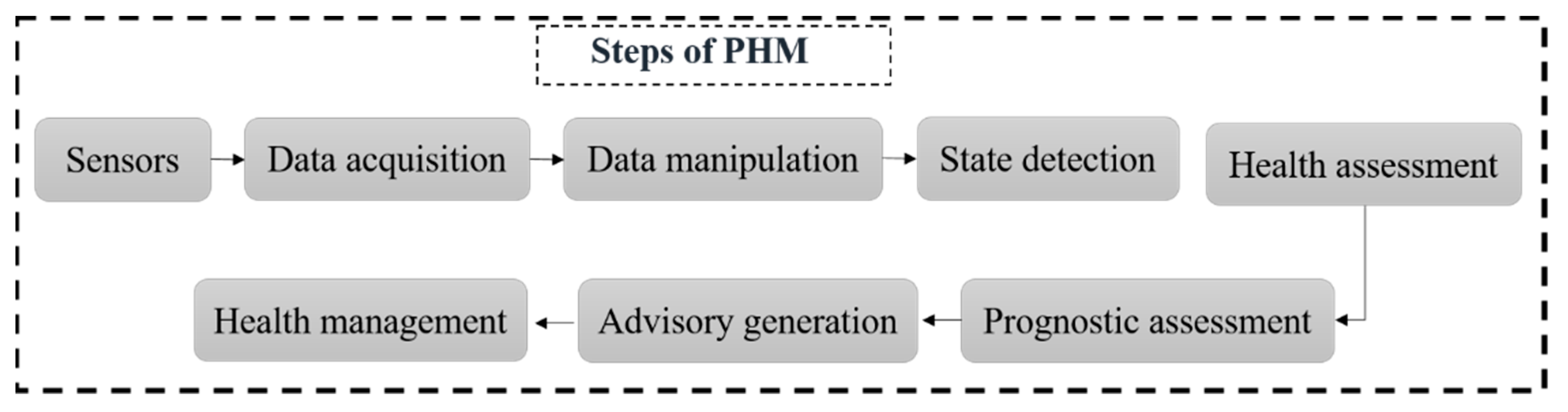

2.1. Overview of PHM Technology

2.2. Autonomous Vehicle and Advanced Driver Assistance System

2.3. Sensor Malfunctioning in AVs

2.4. Sensors Uncertainty and PHM

3. Sensor-Based PHM for ADAS System

3.1. PHM of Vision Sensors

| S. No | Camera Faults | Detailed Description of Camera Malfunctioning |
|---|---|---|
| 1 | Lens occlusion or soiling | Covering of the lens by solid or fluid |
| 2 | Weather condition | Rain/snow |
| | | Icing of camera |
| | | Fogging of camera |
| 3 | Environmental condition | Time (dawn or dusk) |
| | | Crossing by tunnel or over a bridge |
| | | Inner-city roads or motorways |
| 4 | Optical faults | Lens occupied by dust |
| | | Lens loss |
| | | Interior fogging |
| | | Damage or stone impact of the lens |
| 5 | Sensor faults | Smear |
| | | Image element defect (black or white marks) |
| | | Thermal disturbance |
| 6 | Visibility distance | Range of distance to detect a clear image |

3.2. PHM of Light Detection and Ranging (LiDAR) Sensor

3.3. Radio Detection and Ranging (RADAR)

3.4. Ultrasonic Sensors

3.5. Positioning Sensors

3.6. Discussion

4. Challenges and Future Perspectives of AVs and Their PHM

5. Conclusions

- For the LiDAR system, the sensor-based faults include malfunction of the mirror motor, damage to the optical filter, misalignment of the optical receiver, security breaches, adverse climatic conditions, intermodulation distortion, and short circuits and overvoltage in electrical components. Representative PHM efforts for LiDAR are correlation with the sensor framework, output of the monitoring sensor, correlation to passive ground truth, correlation to active ground truth, correlation to another similar sensor, and correlation to a different sensor.

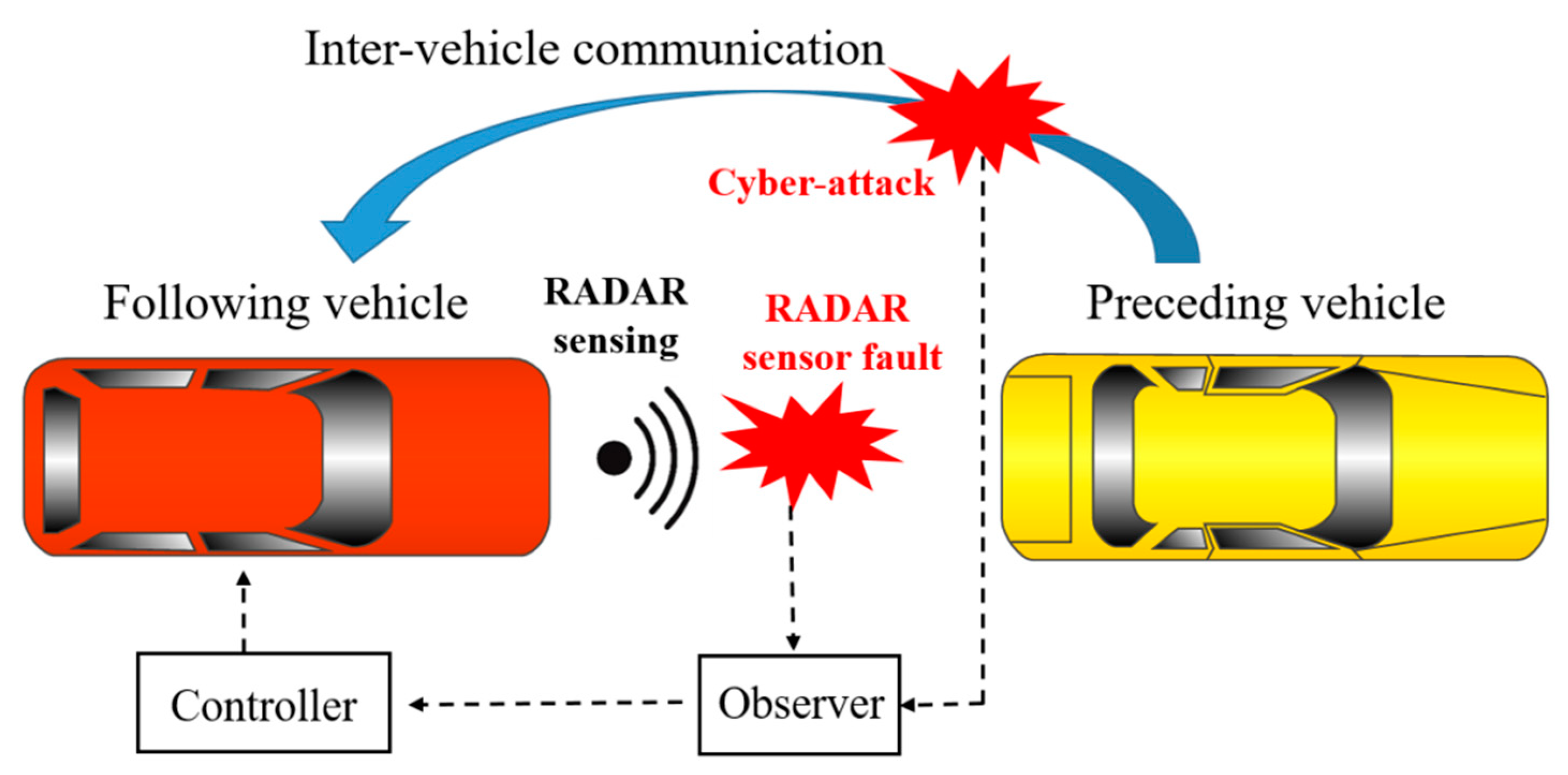

- RADAR is more economical than LiDAR and cameras, and it works on the principle of the Doppler effect. The primary failure modes of RADAR are faults in the range-rate signal and sensor faults. Preventive and corrective measures for RADAR include calibration at relevant service stations, sliding mode observers, sensor fault detection, cyberattack detection observers, and the LSTM-based deep learning approach.

- Ultrasonic sensors, which detect objects within the sensing range, are susceptible to acoustic/electronic noise, performance degradation under bright ambient light, vehicle corner error, and cross echoes, among others. Common approaches for fault detection and correction in ultrasonic sensors are artificial neural networks, parallel parking assist systems, combined ultrasonic sensors and three-dimensional vision sensors, and grid maps.

- The positioning sensors and systems used to locate AVs are prone to jumps in GPS observations, satellite and received signal faults, and sensor data with/without curbs on the roadsides. The techniques employed for fault detection and isolation of positioning sensors and systems are the Kalman filter, the Markov blanket (MB) algorithm, and the sensor redundancy fault detection model.
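As an illustration of the Kalman-filter-based detection of GPS jumps mentioned in the last item, the following is a minimal sketch and not the method of any work surveyed here. It assumes a one-dimensional position state, a known vehicle speed (for example, from odometry), and a plain linear Kalman filter rather than an unscented or extended variant. The class `ScalarKalmanFilter`, the function `detect_gps_jumps`, the constant `NIS_GATE`, and all noise values are hypothetical names and numbers chosen for the example. A fix is flagged as faulty when its normalized innovation squared (NIS) exceeds a chi-square gate, and a flagged fix is not fused into the state.

```python
from dataclasses import dataclass

# Assumed gate: approx. 99% quantile of a chi-square distribution
# with 1 degree of freedom (scalar measurement).
NIS_GATE = 6.63


@dataclass
class ScalarKalmanFilter:
    """Hypothetical 1-D position filter used only for this illustration."""
    x: float  # position estimate (m)
    p: float  # variance of the position estimate (m^2)
    q: float  # process noise variance added at each prediction (m^2)
    r: float  # GPS measurement noise variance (m^2)

    def predict(self, velocity: float, dt: float) -> None:
        # Propagate the position with the assumed known velocity.
        self.x += velocity * dt
        self.p += self.q

    def update(self, z: float) -> bool:
        """Fuse a GPS fix z; return True if the fix is flagged as faulty."""
        innovation = z - self.x
        s = self.p + self.r                # innovation variance
        nis = innovation * innovation / s  # normalized innovation squared
        if nis > NIS_GATE:
            # Reject the fix so the outlier does not corrupt the estimate.
            return True
        k = self.p / s                     # Kalman gain
        self.x += k * innovation
        self.p *= (1.0 - k)
        return False


def detect_gps_jumps(fixes, velocity, dt, kf):
    """Return one boolean per fix: True where a jump was detected."""
    flags = []
    for z in fixes:
        kf.predict(velocity, dt)
        flags.append(kf.update(z))
    return flags
```

With a vehicle moving at a constant 10 m/s and a single fix displaced by roughly 35 m, only that fix is expected to be flagged, and the filter keeps tracking afterwards because the rejected measurement never enters the state. In practice the gate, the noise variances, and the motion model would have to be tuned to the sensor and the vehicle.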

Author Contributions

Funding

Conflicts of Interest

References

- Meyer-Waarden, L.; Cloarec, J. “Baby, You Can Drive My Car”: Psychological Antecedents That Drive Consumers’ Adoption of AI-Powered Autonomous Vehicles. Technovation 2022, 109, 102348. [Google Scholar] [CrossRef]

- Nowicki, M.R. A Data-Driven and Application-Aware Approach to Sensory System Calibration in an Autonomous Vehicle. Measurement 2022, 194, 111002. [Google Scholar] [CrossRef]

- Jain, S.; Ahuja, N.J.; Srikanth, P.; Bhadane, K.V.; Nagaiah, B.; Kumar, A.; Konstantinou, C. Blockchain and Autonomous Vehicles: Recent Advances and Future Directions. IEEE Access 2021, 9, 130264–130328. [Google Scholar] [CrossRef]

- Reid, T.G.; Houts, S.E.; Cammarata, R.; Mills, G.; Agarwal, S.; Vora, A.; Pandey, G. Localization Requirements for Autonomous Vehicles. arXiv 2019, arXiv:1906.01061. [Google Scholar] [CrossRef]

- Yu, F.; Biswas, S. Self-Configuring TDMA Protocols for Enhancing Vehicle Safety with DSRC Based Vehicle-to-Vehicle Communications. IEEE J. Sel. Areas Commun. 2007, 25, 1526–1537. [Google Scholar] [CrossRef]

- Torrent-Moreno, M.; Mittag, J.; Santi, P.; Hartenstein, H. Vehicle-to-Vehicle Communication: Fair Transmit Power Control for Safety-Critical Information. IEEE Trans. Veh. Technol. 2009, 58, 3684–3703. [Google Scholar] [CrossRef]

- Bi, Y.; Cai, L.X.; Shen, X.; Zhao, H. Efficient and Reliable Broadcast in Intervehicle Communication Networks: A Cross-Layer Approach. IEEE Trans. Veh. Technol. 2010, 59, 2404–2417. [Google Scholar] [CrossRef]

- Palazzi, C.E.; Roccetti, M.; Ferretti, S. An Intervehicular Communication Architecture for Safety and Entertainment. IEEE Trans. Intell. Transp. Syst. 2009, 11, 90–99. [Google Scholar] [CrossRef]

- Tabatabaei, S.A.H.; Fleury, M.; Qadri, N.N.; Ghanbari, M. Improving Propagation Modeling in Urban Environments for Vehicular Ad Hoc Networks. IEEE Trans. Intell. Transp. Syst. 2011, 12, 705–716. [Google Scholar] [CrossRef]

- You, J.-H.; Oh, S.; Park, J.-E.; Song, H.; Kim, Y.-K. A Novel LiDAR Sensor Alignment Inspection System for Automobile Productions Using 1-D Photodetector Arrays. Measurement 2021, 183, 109817. [Google Scholar] [CrossRef]

- Kala, R.; Warwick, K. Motion Planning of Autonomous Vehicles in a Non-Autonomous Vehicle Environment without Speed Lanes. Eng. Appl. Artif. Intell. 2013, 26, 1588–1601. [Google Scholar] [CrossRef]

- Correa-Caicedo, P.J.; Barranco-Gutiérrez, A.I.; Guerra-Hernandez, E.I.; Batres-Mendoza, P.; Padilla-Medina, J.A.; Rostro-González, H. An FPGA-Based Architecture for a Latitude and Longitude Correction in Autonomous Navigation Tasks. Measurement 2021, 182, 109757. [Google Scholar]

- Duran, O.; Turan, B. Vehicle-to-Vehicle Distance Estimation Using Artificial Neural Network and a Toe-in-Style Stereo Camera. Measurement 2022, 190, 110732. [Google Scholar] [CrossRef]

- Duran, D.R.; Robinson, E.; Kornecki, A.J.; Zalewski, J. Safety Analysis of Autonomous Ground Vehicle Optical Systems: Bayesian Belief Networks Approach. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems, Kraków, Poland, 8–11 September 2013; pp. 1419–1425. [Google Scholar]

- Woongsun, J.; Ali, Z.; Rajesh, R. Resilient Control Under Cyber-Attacks in Connected ACC Vehicles. In Proceedings of the ASME 2019 Dynamic Systems and Control Conference, Park City, UT, USA, 8–11 October 2019. [Google Scholar]

- Park, W.J.; Kim, B.S.; Seo, D.E.; Kim, D.S.; Lee, K.H. Parking Space Detection Using Ultrasonic Sensor in Parking Assistance System. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 1039–1044. [Google Scholar] [CrossRef]

- van Schaik, W.; Grooten, M.; Wernaart, T.; van der Geld, C. High Accuracy Acoustic Relative Humidity Measurement in Duct Flow with Air. Sensors 2010, 10, 7421–7433. [Google Scholar] [CrossRef]

- Alonso, L.; Milanés, V.; Torre-Ferrero, C.; Godoy, J.; Oria, J.P.; de Pedro, T. Ultrasonic Sensors in Urban Traffic Driving-Aid Systems. Sensors 2011, 11, 661–673. [Google Scholar] [CrossRef]

- Sahoo, A.K.; Udgata, S.K. A Novel ANN-Based Adaptive Ultrasonic Measurement System for Accurate Water Level Monitoring. IEEE Trans. Instrum. Meas. 2020, 69, 3359–3369. [Google Scholar] [CrossRef]

- Blanch, J.; Walter, T.; Enge, P. A Simple Satellite Exclusion Algorithm for Advanced RAIM. In Proceedings of the 2016 International Technical Meeting of The Institute of Navigation, Monterey, CA, USA, 25–28 January 2016; pp. 239–244. [Google Scholar]

- Mori, D.; Sugiura, H.; Hattori, Y. Adaptive Sensor Fault Detection and Isolation Using Unscented Kalman Filter for Vehicle Positioning. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1298–1304. [Google Scholar]

- Tadjine, H.; Anoushirvan, D.; Eugen, D.; Schulze, K. Optical Self Diagnostics for Camera Based Driver Assistance. In Proceedings of the FISITA 2012 World Automotive Congress; SAE-China, FISITA, Eds.; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2013; Volume 197, pp. 507–518. ISBN 978-3-642-33804-5. [Google Scholar]

- Saponara, S.; Greco, M.S.; Gini, F. Radar-on-Chip/in-Package in Autonomous Driving Vehicles and Intelligent Transport Systems: Opportunities and Challenges. IEEE Signal Processing Mag. 2019, 36, 71–84. [Google Scholar] [CrossRef]

- Park, S.; Oh, K.; Jeong, Y.; Yi, K. Model Predictive Control-Based Fault Detection and Reconstruction Algorithm for Longitudinal Control of Autonomous Driving Vehicle Using Multi-Sliding Mode Observer. Microsyst. Technol. 2020, 26, 239–264. [Google Scholar] [CrossRef]

- Oh, K.; Yi, K. A Longitudinal Model Based Probabilistic Fault Diagnosis Algorithm of Autonomous Vehicles Using Sliding Mode Observer. In Proceedings of the ASME 2017 Conference on Information Storage and Processing Systems, San Francisco, CA, USA, 29–30 August 2017; American Society of Mechanical Engineers: New York, NY, USA, 2017; pp. 1–3. [Google Scholar]

- Lyu, Y.; Pang, Z.; Zhou, C.; Zhao, P. Prognostics and Health Management Technology for Radar System. MATEC Web Conf. 2020, 309, 04009. [Google Scholar] [CrossRef][Green Version]

- Lim, B.S.; Keoh, S.L.; Thing, V.L.L. Autonomous Vehicle Ultrasonic Sensor Vulnerability and Impact Assessment. In Proceedings of the IEEE World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 231–236. [Google Scholar] [CrossRef]

- Bank, D. A Novel Ultrasonic Sensing System for Autonomous Mobile Systems. Proc. IEEE Sens. 2002, 1, 1671–1676. [Google Scholar] [CrossRef]

- Li, C.; Fu, Y.; Yu, F.R.; Luan, T.H.; Zhang, Y. Vehicle Position Correction: A Vehicular Blockchain Networks-Based GPS Error Sharing Framework. IEEE Trans. Intell. Transp. Syst. 2021, 22, 898–912. [Google Scholar] [CrossRef]

- Hu, J.; Sun, Q.; Ye, Z.-S.; Zhou, Q. Joint Modeling of Degradation and Lifetime Data for RUL Prediction of Deteriorating Products. IEEE Trans. Ind. Inf. 2021, 17, 4521–4531. [Google Scholar] [CrossRef]

- Ye, Z.-S.; Xie, M. Stochastic Modelling and Analysis of Degradation for Highly Reliable Products: Z.-S. YE AND M. XIE. Appl. Stochastic Models Bus. Ind. 2015, 31, 16–32. [Google Scholar] [CrossRef]

- Ng, T.S. ADAS in Autonomous Driving. In Robotic Vehicles: Systems and Technology; Springer: Berlin/Heidelberg, Germany, 2021; pp. 87–93. [Google Scholar]

- Li, X.; Lin, K.-Y.; Meng, M.; Li, X.; Li, L.; Hong, Y. Composition and Application of Current Advanced Driving Assistance System: A Review. arXiv 2021, arXiv:2105.12348. [Google Scholar]

- Choi, J.D.; Kim, M.Y. A Sensor Fusion System with Thermal Infrared Camera and LiDAR for Autonomous Vehicles and Deep Learning Based Object Detection. ICT Express, 2022, in press. [CrossRef]

- Wang, H.; Wang, B.; Liu, B.; Meng, X.; Yang, G. Pedestrian Recognition and Tracking Using 3D LiDAR for Autonomous Vehicle. Robot. Auton. Syst. 2017, 88, 71–78. [Google Scholar] [CrossRef]

- Khan, A.; Khalid, S.; Raouf, I.; Sohn, J.-W.; Kim, H.-S. Autonomous Assessment of Delamination Using Scarce Raw Structural Vibration and Transfer Learning. Sensors 2021, 21, 6239. [Google Scholar] [CrossRef]

- Raouf, I.; Lee, H.; Kim, H.S. Mechanical Fault Detection Based on Machine Learning for Robotic RV Reducer Using Electrical Current Signature Analysis: A Data-Driven Approach. J. Comput. Des. Eng. 2022, 9, 417–433. [Google Scholar] [CrossRef]

- Habibi, H.; Howard, I.; Simani, S.; Fekih, A. Decoupling Adaptive Sliding Mode Observer Design for Wind Turbines Subject to Simultaneous Faults in Sensors and Actuators. IEEE/CAA J. Autom. Sin. 2021, 8, 837–847. [Google Scholar] [CrossRef]

- Saeed, U.; Jan, S.U.; Lee, Y.-D.; Koo, I. Fault Diagnosis Based on Extremely Randomized Trees in Wireless Sensor Networks. Reliab. Eng. Syst. Saf. 2021, 205, 107284. [Google Scholar] [CrossRef]

- Bellanco, I.; Fuentes, E.; Vallès, M.; Salom, J. A Review of the Fault Behavior of Heat Pumps and Measurements, Detection and Diagnosis Methods Including Virtual Sensors. J. Build. Eng. 2021, 39, 102254. [Google Scholar] [CrossRef]

- Rajpoot, S.C.; Pandey, C.; Rajpoot, P.S.; Singhai, S.K.; Sethy, P.K. A Dynamic-SUGPDS Model for Faults Detection and Isolation of Underground Power Cable Based on Detection and Isolation Algorithm and Smart Sensors. J. Electr. Eng. Technol. 2021, 16, 1799–1819. [Google Scholar] [CrossRef]

- Bhushan, B.; Sahoo, G. Recent Advances in Attacks, Technical Challenges, Vulnerabilities and Their Countermeasures in Wireless Sensor Networks. Wirel. Pers. Commun. 2018, 98, 2037–2077. [Google Scholar] [CrossRef]

- Goelles, T.; Schlager, B.; Muckenhuber, S. Fault Detection, Isolation, Identification and Recovery (FDIIR) Methods for Automotive Perception Sensors Including a Detailed Literature Survey for Lidar. Sensors 2020, 20, 3662. [Google Scholar] [CrossRef] [PubMed]

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous Vehicle Perception: The Technology of Today and Tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406. [Google Scholar]

- Biddle, L.; Fallah, S. A Novel Fault Detection, Identification and Prediction Approach for Autonomous Vehicle Controllers Using SVM. Automot. Innov. 2021, 4, 301–314. [Google Scholar] [CrossRef]

- Sun, Q.; Ye, Z.-S.; Zhu, X. Managing Component Degradation in Series Systems for Balancing Degradation through Reallocation and Maintenance. IISE Trans. 2020, 52, 797–810. [Google Scholar]

- Gu, J.; Barker, D.; Pecht, M.G. Uncertainty Assessment of Prognostics of Electronics Subject to Random Vibration. In Proceedings of the AAAI Fall Symposium: Artificial Intelligence for Prognostics, Arlington, VA, USA, 9–11 November 2007; pp. 50–57. [Google Scholar]

- Ibargüengoytia, P.H.; Vadera, S.; Sucar, L.E. A Probabilistic Model for Information and Sensor Validation. Comput. J. 2006, 49, 113–126. [Google Scholar] [CrossRef]

- Cheng, S.; Azarian, M.H.; Pecht, M.G. Sensor Systems for Prognostics and Health Management. Sensors 2010, 10, 5774–5797. [Google Scholar] [CrossRef]

- Gong, Z.; Lin, H.; Zhang, D.; Luo, Z.; Zelek, J.; Chen, Y.; Nurunnabi, A.; Wang, C.; Li, J. A Frustum-Based Probabilistic Framework for 3D Object Detection by Fusion of LiDAR and Camera Data. ISPRS J. Photogramm. Remote Sens. 2020, 159, 90–100. [Google Scholar] [CrossRef]

- SAE-China; FISITA (Eds.) Proceedings of the FISITA 2012 World Automotive Congress; Springer: Berlin/Heidelberg, Germany, 2013; ISBN 3-642-33840-2. [Google Scholar]

- Kobayashi, T.; Simon, D.L. Application of a Bank of Kalman Filters for Aircraft Engine Fault Diagnostics. In Proceedings of the ASME Turbo Expo 2003, Atlanta, GA, USA, 16–19 June 2003; Volume 1, pp. 461–470. [Google Scholar]

- Salahshoor, K.; Mosallaei, M.; Bayat, M. Centralized and Decentralized Process and Sensor Fault Monitoring Using Data Fusion Based on Adaptive Extended Kalman Filter Algorithm. Measurement 2008, 41, 1059–1076. [Google Scholar] [CrossRef]

- Köylüoglu, T.; Hennicks, L. Evaluating Rain Removal Image Processing Solutions for Fast and Accurate Object Detection. Master’s Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2019. [Google Scholar]

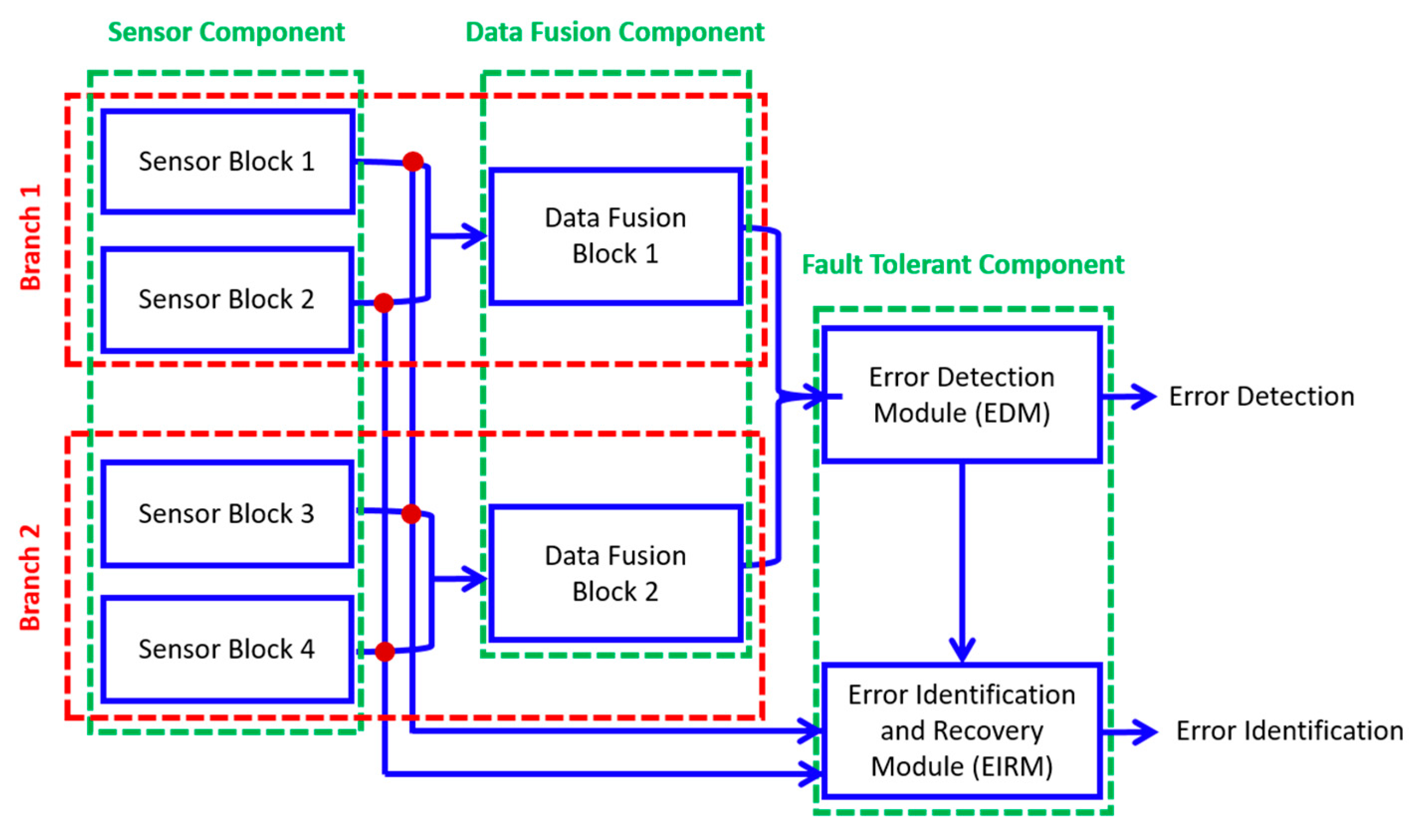

- Realpe, M. Multi-Sensor Fusion Module in a Fault Tolerant Perception System for Autonomous Vehicles. J. Autom. Control. Eng. 2016, 4, 460–466. [Google Scholar] [CrossRef]

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M. Machine Learning: The High Interest Credit Card of Technical Debt. 2014. Available online: https://blog.acolyer.org/2016/02/29/machine-learning-the-high-interest-credit-card-of-technical-debt/ (accessed on 10 August 2022).

- Spinneker, R.; Koch, C.; Park, S.-B.; Yoon, J.J. Fast Fog Detection for Camera Based Advanced Driver Assistance Systems. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1369–1374. [Google Scholar]

- Tian, Y.; Pei, K.; Jana, S.; Ray, B. Deeptest: Automated Testing of Deep-Neural-Network-Driven Autonomous Cars. In Proceedings of the 40th International Conference on Software Engineering, Gothenburg, Sweden, 27 May–3 June 2018; pp. 303–314. [Google Scholar]

- Hata, A.; Wolf, D. Road Marking Detection Using LIDAR Reflective Intensity Data and Its Application to Vehicle Localization. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 584–589. [Google Scholar]

- Poczter, S.L.; Jankovic, L.M. The Google Car: Driving Toward A Better Future? JBCS 2013, 10, 7. [Google Scholar] [CrossRef]

- Yoo, H.W.; Druml, N.; Brunner, D.; Schwarzl, C.; Thurner, T.; Hennecke, M.; Schitter, G. MEMS-Based Lidar for Autonomous Driving. Elektrotech. Inftech. 2018, 135, 408–415. [Google Scholar] [CrossRef]

- Asvadi, A.; Premebida, C.; Peixoto, P.; Nunes, U. 3D Lidar-Based Static and Moving Obstacle Detection in Driving Environments: An Approach Based on Voxels and Multi-Region Ground Planes. Robot. Auton. Syst. 2016, 83, 299–311. [Google Scholar] [CrossRef]

- Segata, M.; Cigno, R.L.; Bhadani, R.K.; Bunting, M.; Sprinkle, J. A Lidar Error Model for Cooperative Driving Simulations. In Proceedings of the 2018 IEEE Vehicular Networking Conference (VNC), Taipei, Taiwan, 5–7 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–8. [Google Scholar]

- Sun, X. Method and Apparatus for Detection and Ranging Fault Detection and Recovery. U.S. Patent 10,203,408, 12 February 2019. [Google Scholar]

- Rivero, J.R.V.; Tahiraj, I.; Schubert, O.; Glassl, C.; Buschardt, B.; Berk, M.; Chen, J. Characterization and Simulation of the Effect of Road Dirt on the Performance of a Laser Scanner. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Trierweiler, M.; Caldelas, P.; Gröninger, G.; Peterseim, T.; Neumann, C. Influence of Sensor Blockage on Automotive LiDAR Systems. In Proceedings of the 2019 IEEE SENSORS, Montreal, QC, Canada, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4. [Google Scholar]

- Periu, C.F.; Mohsenimanesh, A.; Laguë, C.; McLaughlin, N.B. Isolation of Vibrations Transmitted to a LIDAR Sensor Mounted on an Agricultural Vehicle to Improve Obstacle Detection. Can. Biosyst. Eng. 2013, 55, 233–242. [Google Scholar] [CrossRef]

- Hama, S.; Toda, H. Basic Experiment of LIDAR Sensor Measurement Directional Instability for Moving and Vibrating Object. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chongqing, China, 23–25 November 2018; IOP Publishing: Bristol, UK, 2019; Volume 472, p. 012017. [Google Scholar]

- Choi, H.; Lee, W.-C.; Aafer, Y.; Fei, F.; Tu, Z.; Zhang, X.; Xu, D.; Deng, X. Detecting Attacks against Robotic Vehicles: A Control Invariant Approach. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 801–816. [Google Scholar]

- Petit, J.; Shladover, S.E. Potential Cyberattacks on Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2014, 16, 546–556. [Google Scholar] [CrossRef]

- Shin, H.; Kim, D.; Kwon, Y.; Kim, Y. Illusion and Dazzle: Adversarial Optical Channel Exploits against Lidars for Automotive Applications. In Proceedings of the International Conference on Cryptographic Hardware and Embedded Systems, Santa Barbara, CA, USA, 17–19 August 2016; Springer: Berlin/Heidelberg, Germany, 2017; pp. 445–467. [Google Scholar]

- Kim, G.; Eom, J.; Park, Y. An Experiment of Mutual Interference between Automotive LIDAR Scanners. In Proceedings of the 2015 12th International Conference on Information Technology-New Generations, Las Vegas, NV, USA, 13–15 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 680–685. [Google Scholar]

- Zhang, F.; Liu, Q.; Gong, M.; Fu, X. Anti-Dynamic-Crosstalk Method for Single Photon LIDAR Detection. In LIDAR Imaging Detection and Target Recognition 2017; International Society for Optics and Photonics: Washington, DC, USA, 2017; Volume 10605, p. 1060503. [Google Scholar]

- Ning, X.; Li, F.; Tian, G.; Wang, Y. An Efficient Outlier Removal Method for Scattered Point Cloud Data. PLoS ONE 2018, 13, e0201280. [Google Scholar] [CrossRef]

- McMichael, R.; Schabb, D.E.; Thakur, A.; Francisco, S.; Kentley-Klay, D.; Torrey, J.R. Sensor Obstruction Detection and Mitigation Using Vibration and/or Heat. U.S. Patent 11,176,426, 16 November 2021. [Google Scholar]

- Bohren, J.; Foote, T.; Keller, J.; Kushleyev, A.; Lee, D.; Stewart, A.; Vernaza, P.; Derenick, J.; Spletzer, J.; Satterfield, B. Little Ben: The Ben Franklin Racing Team’s Entry in the 2007 DARPA Urban Challenge. J. Field Robot. 2008, 25, 598–614. [Google Scholar] [CrossRef]

- Fallis, A.G. Autonomous Ground Vehicle. J. Chem. Inf. Modeling 2013, 53, 1689–1699. [Google Scholar]

- Kesting, A.; Treiber, M.; Schönhof, M.; Helbing, D. Adaptive Cruise Control Design for Active Congestion Avoidance. Transp. Res. Part C Emerg. Technol. 2008, 16, 668–683. [Google Scholar] [CrossRef]

- Werneke, J.; Kleen, A.; Vollrath, M. Perfect Timing: Urgency, Not Driving Situations, Influence the Best Timing to Activate Warnings. Hum. Factors 2014, 56, 249–259. [Google Scholar] [CrossRef]

- Li, H.-J.; Kiang, Y.-W. Radar and Inverse Scattering. In The Electrical Engineering Handbook; Elsevier: Amsterdam, The Netherlands, 2005; pp. 671–690. [Google Scholar]

- Rohling, H.; Möller, C. Radar Waveform for Automotive Radar Systems and Applications. In Proceedings of the 2008 IEEE Radar Conference, RADAR, Rome, Italy, 26–30 May 2008; Volume 1. [Google Scholar] [CrossRef]

- Bilik, I.; Longman, O.; Villeval, S.; Tabrikian, J. The Rise of Radar for Autonomous Vehicles. IEEE Signal Process. Mag. 2019, 36, 20–31. [Google Scholar] [CrossRef]

- Campbell, S.; O’Mahony, N.; Krpalcova, L.; Riordan, D.; Walsh, J.; Murphy, A.; Ryan, C. Sensor Technology in Autonomous Vehicles: A Review. In Proceedings of the 29th Irish Signals and Systems Conference, ISSC, Belfast, UK, 21–22 June 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Severino, J.V.B.; Zimmer, A.; Brandmeier, T.; Freire, R.Z. Pedestrian Recognition Using Micro Doppler Effects of Radar Signals Based on Machine Learning and Multi-Objective Optimization. Expert Syst. Appl. 2019, 136, 304–315. [Google Scholar] [CrossRef]

- Loureiro, R.; Benmoussa, S.; Touati, Y.; Merzouki, R.; Ould Bouamama, B. Integration of Fault Diagnosis and Fault-Tolerant Control for Health Monitoring of a Class of MIMO Intelligent Autonomous Vehicles. IEEE Trans. Veh. Technol. 2014, 63, 30–39. [Google Scholar] [CrossRef]

- Dickmann, J.; Appenrodt, N.; Bloecher, H.L.; Brenk, C.; Hackbarth, T.; Hahn, M.; Klappstein, J.; Muntzinger, M.; Sailer, A. Radar Contribution to Highly Automated Driving. In Proceedings of the 2014 44th European Microwave Conference, Rome, Italy, 6–9 October 2014; pp. 412–415. [Google Scholar] [CrossRef]

- Rajamani, R.; Shrivastava, A.; Zhu, C.; Alexander, L. Fault Diagnostics for Intelligent Vehicle Applications; Minnesota Department of Transportation: Saint Paul, MN, USA, 2001. [Google Scholar]

- Jeon, W.; Xie, Z.; Zemouche, A.; Rajamani, R. Simultaneous Cyber-Attack Detection and Radar Sensor Health Monitoring in Connected ACC Vehicles. IEEE Sens. J. 2021, 21, 15741–15752. [Google Scholar] [CrossRef]

- Yigit, E. Automotive Radar Self-Diagnostic Using Calibration Targets That Are Embedded in Road Infrastructure; Delft University of Technology: Delft, The Netherlands, 2021. [Google Scholar]

- Oh, K.; Park, S.; Lee, J.; Yi, K. Functional Perspective-Based Probabilistic Fault Detection and Diagnostic Algorithm for Autonomous Vehicle Using Longitudinal Kinematic Model. Microsyst. Technol. 2018, 24, 4527–4537. [Google Scholar] [CrossRef]

- Oh, K.; Park, S.; Yi, K. A Predictive Approach to the Fault Detection in Fail-Safe System of Autonomous Vehicle Based on the Multi-Sliding Mode Observer. In Proceedings of the ASME-JSME 2018 Joint International Conference on Information Storage and Processing Systems and Micromechatronics for Information and Precision Equipment, ISPS-MIPE, San Francisco, CA, USA, 29–30 August 2018; pp. 2018–2020. [Google Scholar] [CrossRef]

- Song, T.; Lee, J.; Oh, K.; Yi, K. Dual-Sliding Mode Approach for Separated Fault Detection and Tolerant Control for Functional Safety of Longitudinal Autonomous Driving. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 1446–1460. [Google Scholar] [CrossRef]

- Cheek, E.; Khuttan, D.; Changalvala, R.; Malik, H. Physical Fingerprinting of Ultrasonic Sensors and Applications to Sensor Security. In Proceedings of the 2020 IEEE 6th International Conference on Dependability in Sensor, Cloud and Big Data Systems and Application, DependSys, Nadi, Fiji, 14–16 December 2020; pp. 65–72. [Google Scholar] [CrossRef]

- Al-Turjman, F.; Malekloo, A. Smart Parking in IoT-Enabled Cities: A Survey. Sustain. Cities Soc. 2019, 49, 101608. [Google Scholar] [CrossRef]

- Kianpisheh, A.; Mustaffa, N.; Limtrairut, P.; Keikhosrokiani, P. Smart Parking System (SPS) Architecture Using Ultrasonic Detector. Int. J. Softw. Eng. Its Appl. 2012, 6, 51–58. [Google Scholar]

- Kotb, A.O.; Shen, Y.C.; Huang, Y. Smart Parking Guidance, Monitoring and Reservations: A Review. IEEE Intell. Transp. Syst. Mag. 2017, 9, 6–16. [Google Scholar] [CrossRef]

- Taraba, M.; Adamec, J.; Danko, M.; Drgona, P. Utilization of Modern Sensors in Autonomous Vehicles. In Proceedings of the 12th International Conference ELEKTRO 2018, Mikulov, Czech Republic, 21–23 May 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Ma, Y.; Liu, Y.; Zhang, L.; Cao, Y.; Guo, S.; Li, H. Research Review on Parking Space Detection Method. Symmetry 2021, 13, 128. [Google Scholar] [CrossRef]

- Idris, M.Y.I.; Tamil, E.M.; Razak, Z.; Noor, N.M.; Km, L.W. Smart Parking System Using Image Processing Techniques in Wireless Sensor Network Environment. Inf. Technol. J. 2009, 8, 114–127. [Google Scholar] [CrossRef]

- Hanzl, J. Parking Information Guidance Systems and Smart Technologies Application Used in Urban Areas and Multi-Storey Car Parks. Transp. Res. Procedia 2020, 44, 361–368. [Google Scholar] [CrossRef]

- Valasek, C. A Survey of Remote Automotive Attack Surfaces; Technical White Paper; IOActive: Washington, DC, USA, 2014; pp. 1–90. [Google Scholar]

- Nourinejad, M.; Bahrami, S.; Roorda, M.J. Designing Parking Facilities for Autonomous Vehicles. Transp. Res. Part B Methodol. 2018, 109, 110–127. [Google Scholar] [CrossRef]

- Ma, S.; Jiang, Z.; Jiang, H.; Han, M.; Li, C. Parking Space and Obstacle Detection Based on a Vision Sensor and Checkerboard Grid Laser. Appl. Sci. 2020, 10, 2582. [Google Scholar] [CrossRef]

- Jeong, S.H.; Choi, C.G.; Oh, J.N.; Yoon, P.J.; Kim, B.S.; Kim, M.; Lee, K.H. Low Cost Design of Parallel Parking Assist System Based on an Ultrasonic Sensor. Int. J. Automot. Technol. 2010, 11, 409–416. [Google Scholar] [CrossRef]

- Pelaez, L.P.; Recalde, M.E.V.; Munoz, E.D.M.; Larrauri, J.M.; Rastelli, J.M.P.; Druml, N.; Hillbrand, B. Car Parking Assistance Based on Time-or-Flight Camera. In Proceedings of the IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019; pp. 1753–1759. [Google Scholar] [CrossRef]

- Bank, D. An Error Detection Model for Ultrasonic Sensor Evaluation on Autonomous Mobile Systems. In Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication, Berlin, Germany, 27 September 2002; pp. 288–293. [Google Scholar] [CrossRef]

- Soika, M. Grid Based Fault Detection and Calibration of Sensors on Mobile Robots. Proc. IEEE Int. Conf. Robot. Autom. 1997, 3, 2589–2594. [Google Scholar] [CrossRef]

- Abdel-Hafez, M.F.; Al Nabulsi, A.; Jafari, A.H.; Al Zaabi, F.; Sleiman, M.; Abuhatab, A. A Sequential Approach for Fault Detection and Identification of Vehicles’ Ultrasonic Parking Sensors. In Proceedings of the 2011 4th International Conference on Modeling, Simulation and Applied Optimization, ICMSAO, Kuala Lumpur, Malaysia, 19–21 April 2011. [Google Scholar] [CrossRef]

- De Simone, M.C.; Rivera, Z.B.; Guida, D. Obstacle Avoidance System for Unmanned Ground Vehicles by Using Ultrasonic Sensors. Machines 2018, 6, 18. [Google Scholar] [CrossRef]

- Arvind, C.S.; Senthilnath, J. Autonomous Vehicle for Obstacle Detection and Avoidance Using Reinforcement Learning. In Soft Computing for Problem Solving; Springer: Berlin/Heidelberg, Germany, 2020; pp. 55–66. [Google Scholar]

- Rosique, F.; Navarro, P.J.; Fernández, C.; Padilla, A. A Systematic Review of Perception System and Simulators for Autonomous Vehicles Research. Sensors 2019, 19, 648. [Google Scholar] [CrossRef]

- Capuano, V.; Harvard, A.; Chung, S.-J. On-Board Cooperative Spacecraft Relative Navigation Fusing GNSS with Vision. Prog. Aerosp. Sci. 2022, 128, 100761. [Google Scholar] [CrossRef]

- Gyagenda, N.; Hatilima, J.V.; Roth, H.; Zhmud, V. A Review of GNSS-Independent UAV Navigation Techniques. Robot. Auton. Syst. 2022, 152, 104069. [Google Scholar] [CrossRef]

- Zermani, S.; Dezan, C.; Hireche, C.; Euler, R.; Diguet, J.-P. Embedded and Probabilistic Health Management for the GPS of Autonomous Vehicles. In Proceedings of the 2016 5th Mediterranean Conference on Embedded Computing (MECO), Bar, Montenegro, 12–16 June 2016; pp. 401–404. [Google Scholar]

- Dezan, C.; Zermani, S.; Hireche, C. Embedded Bayesian Network Contribution for a Safe Mission Planning of Autonomous Vehicles. Algorithms 2020, 13, 155. [Google Scholar] [CrossRef]

- Rahiman, W.; Zainal, Z. An Overview of Development GPS Navigation for Autonomous Car. In Proceedings of the 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA), Melbourne, Australia, 19–21 June 2013; pp. 1112–1118. [Google Scholar]

- Kojima, Y.; Kidono, K.; Takahashi, A.; Ninomiya, Y. Precise Ego-Localization by Integration of GPS and Sensor-Based Odometry. J. Intell. Connect. Veh. 2008, 3, 485–490. [Google Scholar]

- Zein, Y.; Darwiche, M.; Mokhiamar, O. GPS Tracking System for Autonomous Vehicles. Alex. Eng. J. 2018, 57, 3127–3137. [Google Scholar] [CrossRef]

- Quddus, M.A.; Ochieng, W.Y.; Noland, R.B. Current Map-Matching Algorithms for Transport Applications: State-of-the Art and Future Research Directions. Transp. Res. Part C Emerg. Technol. 2007, 15, 312–328. [Google Scholar] [CrossRef]

- Ercek, R.; De Doncker, P.; Grenez, F. Study of Pseudo-Range Error Due to Non-Line-of-Sight-Multipath in Urban Canyons. In Proceedings of the 18th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2005), Long Beach, CA, USA, 13–16 September 2005; Citeseer: University Park, PA, USA, 2005; pp. 1083–1094. [Google Scholar]

- Joerger, M.; Spenko, M. Towards Navigation Safety for Autonomous Cars. Inside GNSS 2017, 40–49. Available online: https://par.nsf.gov/biblio/10070277 (accessed on 10 August 2022).

- Sukkarieh, S.; Nebot, E.M.; Durrant-Whyte, H.F. Achieving Integrity in an INS/GPS Navigation Loop for Autonomous Land Vehicle Applications. In Proceedings of the 1998 IEEE International Conference on Robotics and Automation (Cat. No. 98CH36146), Leuven, Belgium, 20–24 May 1998; IEEE: Piscataway, NJ, USA, 1998; Volume 4, pp. 3437–3442.

- Yan, L.; Zhang, Y.; He, Y.; Gao, S.; Zhu, D.; Ran, B.; Wu, Q. Hazardous Traffic Event Detection Using Markov Blanket and Sequential Minimal Optimization (MB-SMO). Sensors 2016, 16, 1084.

- Khalid, A.; Umer, T.; Afzal, M.K.; Anjum, S.; Sohail, A.; Asif, H.M. Autonomous Data Driven Surveillance and Rectification System Using In-Vehicle Sensors for Intelligent Transportation Systems (ITS). Comput. Netw. 2018, 139, 109–118.

- Bhamidipati, S.; Gao, G.X. Multiple GPS Fault Detection and Isolation Using a Graph-SLAM Framework. In Proceedings of the 31st International Technical Meeting of the Satellite Division of the Institute of Navigation, ION GNSS+ 2018, Miami, FL, USA, 24–28 September 2018; Institute of Navigation: Manassas, VA, USA, 2018; pp. 2672–2681.

- Meng, X.; Wang, H.; Liu, B. A Robust Vehicle Localization Approach Based on GNSS/IMU/DMI/LiDAR Sensor Fusion for Autonomous Vehicles. Sensors 2017, 17, 2140.

- Bikfalvi, P.; Lóránt, I. Combining Sensor Redundancy for Fault Detection in Navigation of an Autonomous Mobile Vehicle. IFAC Proc. Vol. 2000, 33, 843–847.

- Bader, K.; Lussier, B.; Schön, W. A Fault Tolerant Architecture for Data Fusion: A Real Application of Kalman Filters for Mobile Robot Localization. Robot. Auton. Syst. 2017, 88, 11–23.

- Bijjahalli, S.; Ramasamy, S.; Sabatini, R. A GNSS Integrity Augmentation System for Airport Ground Vehicle Operations. Energy Procedia 2017, 110, 149–155.

- Barabás, I.; Todoruţ, A.; Cordoş, N.; Molea, A. Current Challenges in Autonomous Driving. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 252, p. 012096.

- Anderson, J.M.; Nidhi, K.; Stanley, K.D.; Sorensen, P.; Samaras, C.; Oluwatola, O.A. Autonomous Vehicle Technology: A Guide for Policymakers; Rand Corporation: Perth, Australia, 2014; ISBN 0-8330-8437-2.

- Maddox, J.; Sweatman, P.; Sayer, J. Intelligent Vehicles + Infrastructure to Address Transportation Problems—A Strategic Approach. In Proceedings of the 24th International Technical Conference on the Enhanced Safety of Vehicles (ESV), Gothenburg, Sweden, 8–11 June 2015.

- Sivaraman, S. Learning, Modeling, and Understanding Vehicle Surround Using Multi-Modal Sensing; University of California: San Diego, CA, USA, 2013; ISBN 1-303-38570-8.

- Lavrinc, D. This Is How Bad Self-Driving Cars Suck in Rain; Technology Report. 2014. Available online: https://jalopnik.com/ (accessed on 10 August 2022).

- McFarland, M. Who’s Responsible When an Autonomous Car Crashes. Available online: https://www.scientificamerican.com/article/who-s-responsible-when-a-self-driving-car-crashes/ (accessed on 10 August 2022).

- Davies, A. Google’s Self-Driving Car Caused Its First Crash; Wired: London, UK, 2016.

- Stoma, M.; Dudziak, A.; Caban, J.; Droździel, P. The Future of Autonomous Vehicles in the Opinion of Automotive Market Users. Energies 2021, 14, 4777.

| Fault Type | Technique | Advantages |

|---|---|---|

| Noise in sensor data due to weather or geographical changes [21] | Unscented Kalman filter | Decreasing computational cost |

| Displacements in sensor data [55] | Support vector machine (SVM) algorithm | Reducing uncertainties using sensor fusion |

| Sensor data affected by various environmental conditions (rain, fog, lighting) [58] | Deep neural network (DNN)-driven approach | Reducing software complexity using a DNN-based model |

| Camera-based fog detection [57] | Power spectrum slope | Self-diagnosis mechanism to warn the system against critical conditions |

| Possible defects (blooming, smear, picture element defects, thermal noise) [22] | Edge analysis of consecutive frames | Proposed techniques work under diverse weather conditions |

| Misalignment [14] | Bayesian belief network approach | The presented model performs well and predicts overall navigation accurately |

| Subsystem of LiDAR | Fault Conditions | Hazards |

|---|---|---|

| Position encoder | Failure to read position data | Malfunction of mirror motor |

| Electrical | Short-circuit and overvoltage | Electrical component failure |

| Optical receiver | Misalignment | Error in the optical receiver |

| Optical filter | Damage | Error in the optical receiver |

| Mirror motor | Malfunction | LiDAR failure |

| PHM Category | Description/References |

|---|---|

| Fault category | Subcomponent failure [63,64] |

| | Mechanical fault of the sensor protector [65] |

| | Coating on the sensor protector [65,66] |

| | Escalating problem [67,68] |

| | Security breach [69,70,71] |

| | Adverse climatic circumstance [65,71] |

| | Intermodulation distortion [72,73] |

| FDI category | Correlation with sensor framework [43] |

| | Output of the monitoring sensor [63] |

| | Correlation to passive ground truth [43] |

| | Correlation to active ground truth [43] |

| | Correlation to another similar sensor [43] |

| | Correlation to a different sensor [43] |

| | Matching of various interfaces [43] |

| Recovery category | Software adjustment [74] |

| | Hardware adjustment [67] |

| | Temperature adjustment [75] |

| | Mopping of sensor shield [75] |

| Fault Type | Technique |

|---|---|

| Noise in data due to weather condition [21] | Unscented Kalman filter |

| Displacements in sensor data [55] | Support vector machine (SVM) algorithm |

| Various faults (failure to read data, short-circuit, overvoltage, or misalignment) [14] | Bayesian belief network approach |

| Sensor data affected by various environmental conditions (rain, fog, or lighting) [58] | Deep neural network (DNN)-driven approach |
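
To make the Bayesian belief network entry above more concrete, the following minimal sketch applies Bayes' rule to the smallest possible network, a single fault node with one evidence node. It is an illustration only and not the model of [14]; the function name `posterior_fault_probability` and all probability values are assumptions chosen for the example.

```python
def posterior_fault_probability(prior_fault, p_evidence_given_fault, p_evidence_given_ok):
    """Posterior P(fault | evidence) for a two-node network: Fault -> Evidence."""
    joint_fault = prior_fault * p_evidence_given_fault
    joint_ok = (1.0 - prior_fault) * p_evidence_given_ok
    return joint_fault / (joint_fault + joint_ok)


# Hypothetical numbers (not from [14]): a 1% prior misalignment rate,
# a diagnostic that fires for 95% of faults and for 5% of healthy sensors.
posterior = posterior_fault_probability(0.01, 0.95, 0.05)
print(round(posterior, 3))  # 0.161
```

Even with a fairly reliable diagnostic, the low prior keeps the posterior modest, which is one reason such networks usually combine several evidence nodes before declaring a fault.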

| Fault/Problem | Technique | Consequence |

|---|---|---|

| Probabilistic fault for an acceleration sensor and RADAR [90] | A longitudinal kinematic model-based probabilistic fault detection and diagnostic algorithm | Unsafe longitudinal control of the AV |

| Fault in the range rate signal (mobility of vehicle) [87] | Redundant sensor combined with a specially designed nonlinear filter | Continuously monitors the RADAR sensor, and detects a failure when it happens |

| Acceleration sensor fault diagnosis [25] | Using a sliding mode observer, a probabilistic fault diagnosis algorithm is developed | Inaccurate relative displacement and velocity measurement |

| Fault in the sensor used for longitudinal control [91] | Multi-sliding mode observer-based predictive fault detection algorithm | Faulty measurements from the environment sensors |

| Fault in the sensor used for longitudinal control [24] | Multi-sliding mode observer | Acceleration of the ego vehicles and inaccurate data from the forward objects |

| Continuous diagnostics of external factors, such as water layer or dirt on the bumper [16] | Statistical model for RADAR cross-section (RCS) of repetitive targets | Can significantly affect RADAR performance |

| Simultaneous cyberattack on a transmission medium and RADAR health monitoring for a connected vehicle [15] | Observer-based controller in connected ACC vehicles | Detects a cyberattack or a fault in the velocity measurement RADAR channel |

| Both RADAR sensor failure and cyberattack linked to the presence of two unknown inputs [88] | Sensor fault detection and cyberattack detection observer | Unsmooth operation of ACC vehicles |

| Signal processing of RADAR [23] | Multi-input multi-output and cognitive RADAR | Inaccurate detection of still or moving objects, and measurement of their motion parameters |

| RADAR fault signal reconstruction [92] | A failsafe architecture that focuses on fault reconstruction, detection, and tolerance control | Insecure functional safety of autonomous vehicle |
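
Several entries above rely on a residual between a measured signal and an independent estimate of it. The sketch below illustrates that idea for the range rate signal in its simplest form: the measured range rate is compared with the finite difference of consecutive range measurements. This is a deliberately simplified stand-in, not the nonlinear filter of [87] or the sliding mode observers of [24,25,91]; the function name, sampling interval, and tolerance are assumptions.

```python
def flag_range_rate_faults(ranges, range_rates, dt, tolerance):
    """Return sample indices where the measured range rate disagrees with
    the range rate derived from consecutive range measurements."""
    if len(ranges) != len(range_rates):
        raise ValueError("ranges and range_rates must have the same length")
    flagged = []
    for k in range(1, len(ranges)):
        derived_rate = (ranges[k] - ranges[k - 1]) / dt  # finite difference
        residual = range_rates[k] - derived_rate
        if abs(residual) > tolerance:
            flagged.append(k)
    return flagged


# Hypothetical data: the target closes at 10 m/s, sampled every 0.1 s;
# the range rate reading at index 3 is corrupted.
ranges = [50.0, 49.0, 48.0, 47.0, 46.0]
range_rates = [-10.0, -10.0, -10.0, 3.0, -10.0]
print(flag_range_rate_faults(ranges, range_rates, dt=0.1, tolerance=2.0))  # [3]
```

A finite difference amplifies measurement noise, so a practical monitor would filter the residual or use an observer, as the cited works do.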

| Fault/Problem | Techniques | Consequence |

|---|---|---|

| Acoustic and electronic noise [19] | Levenberg–Marquardt backpropagation artificial neural network (LMBP-ANN) architecture using mean squared error (MSE) and R-values | Major effect on ultrasonic sensor operation and distance measurements |

| Vehicle corner error [104] | Parallel parking assist system (PPAS) | The corners of the vehicle are not regular right angles; hence, the ultrasonic sensor has a large error at the corner of the vehicle during the measurement process |

| Vehicle corner error [16] | Combined ultrasonic sensors and three-dimensional vision sensors to detect parking spaces | A vision sensor compensates for the inaccurate ultrasonic measurement at the corner of the vehicle |

| Performance degradation under bright ambient light [105] | Processing the data acquired by the three-dimensional time-of-flight (ToF) camera and reconstructing objects around the vehicle | Results in shadows and brightness in the image, which limits the detection of low-reflective objects, such as dark cars |

| Cross echoes (direction of sensor) [106] | Error detection model | Unreliable and non-robust sensor assessment |

| False echoes caused by turbulence [18] | Signal processing, such as filtering or Hilbert transform | Prevent vehicle collision with pedestrians and other obstacles |

| Fault, such as cross-eyed, dreaming, and blind sensor errors [107] | Fault detection based on Grid Map | Sensor calibration error |

| Simulated fault in the ultrasonic sensor [108] | Fault detection by statistical estimations | Inaccurate ultrasonic sensor-based parking operation |

| Obstacle avoidance [109] | Artificial neural network with supervised learning | Unsuitable classification and pattern recognition of data collected by an ultrasonic sensor |

| Obstacle detection and avoidance [110] | Policy-free, model-free Q-learning-based RL algorithm with the multilayer perceptron neural network (MLP-NN) | Optimal vehicle future action based on the current state of the vehicle through better obstacle prediction |
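
The first entry above evaluates a network by its mean squared error (MSE) and R-value. As a reminder of what these two metrics measure, the sketch below computes both for a set of reference distances and model outputs. It covers only the evaluation step, not the LMBP-ANN of [19]; the function names and sample values are assumptions.

```python
import math


def mean_squared_error(targets, predictions):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def pearson_r(targets, predictions):
    """Pearson correlation coefficient (the R-value) between two sequences."""
    n = len(targets)
    mean_t = sum(targets) / n
    mean_p = sum(predictions) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(targets, predictions))
    var_t = sum((t - mean_t) ** 2 for t in targets)
    var_p = sum((p - mean_p) ** 2 for p in predictions)
    return cov / math.sqrt(var_t * var_p)


# Hypothetical reference distances (m) and model outputs (m).
true_distance = [0.5, 1.0, 1.5, 2.0]
estimated = [0.6, 0.9, 1.6, 1.9]
print(mean_squared_error(true_distance, estimated))
print(pearson_r(true_distance, estimated))
```

A low MSE together with an R-value close to 1 indicates that the estimates track the reference distances both in magnitude and in trend.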

| Fault Type/Faulty Data | Technique | Advantages |

|---|---|---|

| Jumps in GPS observation and noise due to drift in state evaluation [122] | Kalman filter | Minimize chances of undetected faults |

| Disturbance in data due to various traffic events (hazardous, risky, and safe) [123] | Markov blanket (MB) algorithm | Evaluation of the proposed approach in a real application under hazardous environmental conditions |

| Injected error in the GPS sensor via log file [124] | Pseudo-code on inference algorithm | Robust model for timely fault detection and autonomous fault recovery system for sensors |

| Satellite and received signal faults [125] | Simultaneous localization and mapping (Graph-SLAM) framework | Applicability in AVs for urban areas |

| Sensor data with and without curbs on the roadsides [126] | Unscented Kalman filter (UKF) | Apply to urban areas by improving performance of previous methods for UKF |

| Sensor fault (current and voltage), environment (such as skidding, heating up, missing the trace) [127] | Sensor redundancy fault detection model | Evaluating the system in the real environment with experimental testing |
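
For the first entry above, jumps in GPS observations are commonly detected by testing the Kalman filter innovation. The sketch below shows this for a scalar random-walk state: a measurement is flagged when its normalized innovation squared exceeds a chi-square gate. It is a minimal illustration and not the integrity scheme of [122]; the function name, noise variances, and the gate of 6.63 (roughly the 99% point for one degree of freedom) are assumptions.

```python
def detect_gps_jumps(measurements, process_var=0.01, meas_var=1.0, gate=6.63):
    """Return indices whose normalized innovation squared exceeds the gate."""
    estimate = measurements[0]  # initial state taken from the first fix
    variance = meas_var         # initial uncertainty of that state
    flagged = []
    for k, z in enumerate(measurements[1:], start=1):
        predicted_var = variance + process_var   # predict (random-walk model)
        innovation = z - estimate
        innovation_var = predicted_var + meas_var
        nis = innovation ** 2 / innovation_var
        if nis > gate:
            flagged.append(k)
            variance = predicted_var             # reject the suspect fix
            continue
        gain = predicted_var / innovation_var    # Kalman gain
        estimate += gain * innovation
        variance = (1.0 - gain) * predicted_var
    return flagged


# Hypothetical one-dimensional position fixes (m) with a jump at index 4.
fixes = [10.0, 10.1, 9.9, 10.05, 25.0, 10.0, 9.95]
print(detect_gps_jumps(fixes))  # [4]
```

Rejecting the flagged measurement instead of fusing it keeps a single jump from corrupting the state estimate, at the cost of a slightly larger variance until the next accepted fix.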

| Type of Car | Reason/Consequence | Remarks |

|---|---|---|

| Hyundai autonomous car [134] | Raining/crashed during testing | The sensors failed to detect street signs, lane markings, and even pedestrian crossings due to the angle of the car switching in the rain, and the orientation of the sun. |

| Tesla autonomous car [135] | Image contrast/the driver was killed | Failure of camera and confusion of white truck with clear sky. |

| Google autonomous car [136] | Speed estimation failure/collision with bus while lane changing | The car assumed that the bus would stop while merging with the traffic. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Raouf, I.; Khan, A.; Khalid, S.; Sohail, M.; Azad, M.M.; Kim, H.S. Sensor-Based Prognostic Health Management of Advanced Driver Assistance System for Autonomous Vehicles: A Recent Survey. Mathematics 2022, 10, 3233. https://doi.org/10.3390/math10183233
