1. Introduction

With the increased frequency of pollution episodes in recent years and new concerns about mega-cities in developing countries, fine particulate matter (FPM) has received much interest from artificial intelligence scientists [1]. The types of environmental pollutants in China have shifted dramatically as industrialization has progressed, the economy has grown, and manufacturing methods have changed [2]. PM2.5, with an equivalent diameter of less than or equal to 2.5 μm, can remain in the atmosphere for an extended period [3]. The greater the amount of PM2.5 in the atmosphere, the greater the pollution. Additionally, compared with heavier ambient particulate matter, PM2.5 seems to have a narrow size distribution and higher density and, thus, is easily associated with hazardous and destructive compounds (e.g., toxic elements, germs) [4]. Moreover, PM2.5 has a considerable residence period in the air, which can significantly influence human health and environmental conditions [5].

PM2.5 concentration prediction is a multi-parameter, complicated, nonlinear problem, and linear models struggle to meet the expectations of decision-makers when dealing with such nonlinear features [6,7]. Many novel approaches for predicting PM2.5 concentrations have been presented in the past few years [8]. A review of the recent developments in computational models for predicting the PM2.5 series was presented in [9]. Generally, PM2.5 prediction approaches are classified into four main types: (1) deterministic algorithms, (2) statistical approaches, (3) artificial intelligence frameworks, and (4) mixed (hybrid) models [10]. A deterministic model is designed to simulate the emission, accumulation, dissemination, and transmission of air pollutants [11]. Its explicit treatment of complex meteorological variables and chemical reaction processes offers tangible advantages. For example, a new post-processing technique for outdoor PM2.5 prediction was proposed and applied to the CMAQ models in [12]. However, this technique is highly complicated and expensive and has substantial uncertainty, whereas our research in this paper primarily utilizes the historical time series information obtained from prior observations. The statistical model appears simple and effective [13]; however, its performance is strongly limited by how well a linear mapping can represent a nonlinear process [14]. Only information that is linear or nearly linear may be accurately estimated. The actual PM2.5 series, in contrast, appears nonlinear and erratic [15].

Many researchers have utilized artificial intelligence (AI) paradigms to overcome this disadvantage [16]. For example, Banga et al. [17] compared the performance of the extra tree, decision tree, XGBoost, random forest, LightGBM, and AdaBoost regression models for predicting PM2.5 in five cities in China. AI algorithms can handle complicated nonlinear relationships between the involved pollutants and meteorological features and significantly boost the PM2.5 prediction accuracy [18]. Among the effective models are the extreme learning machine (ELM) and the kernel extreme learning machine (KELM), which have been validated in many prediction fields; for instance, Li and Li [19] optimized the KELM with the biogeography-based optimizer (BBO) and used the resulting BBOKELM to estimate ultra-short-term wind speed in various places. In another research work, a cuckoo-search-based ELM was trained on PM10 data of Beijing and Harbin in China, and the results confirmed that the optimization method has a positive impact on the performance of the ELM technique [20].
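To make concrete what a swarm-based optimizer tunes in a KELM, the following minimal sketch fits a KELM for regression using the standard closed form, in which the output weights solve (K + I/C) alpha = y for a kernel matrix K. The RBF kernel, the hyper-parameter names `C` and `gamma`, and all function names are assumptions made for illustration; they are not taken from the cited works.

```python
import math

def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel choice)."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x

def kelm_fit(X, y, C=1000.0, gamma=1.0):
    """Return output weights alpha that solve (K + I/C) alpha = y."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], gamma) for j in range(n)] for i in range(n)]
    for i in range(n):
        K[i][i] += 1.0 / C  # ridge term controlled by the regularization constant C
    return solve_linear(K, y)

def kelm_predict(X_train, alpha, x, gamma=1.0):
    """Predict one target as a kernel-weighted sum over the training samples."""
    return sum(a * rbf_kernel(xi, x, gamma) for a, xi in zip(alpha, X_train))
```

In a hybrid model of the kind discussed here, an optimizer would typically search over values such as `C` and `gamma` and score each candidate by its validation error.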

Since the PM2.5 series is nonlinear, non-stationary, and complex [21], a single prediction system cannot reliably estimate the PM2.5 concentration. However, the notion of “decomposition and integration” in the regression method overcomes this drawback by combining the benefits of data decomposition, swarm-based optimization algorithms, machine learning models, and feature selection. It decreases the series’ nonlinear and non-stationary traits, significantly increases the prediction accuracy, and helps decision-makers obtain higher-quality solutions [22]. Yang et al. [23] developed a hybrid method based on feature analysis, secondary decomposition, and an ELM optimized with the chimp optimizer for a case study on hourly data of Shanghai and Shenyang, China. Furthermore, Li et al. [10] used a crow-search-based KELM hybridized with differential symbolic entropy (DSE) and variational mode decomposition improved by butterfly optimization (BVMD), the results of which were verified on PM2.5 data in Beijing, Shenyang, and Shanghai from 1 January 2016 to 31 March 2021. Liu et al. [24] presented an innovative hybrid system for four towns in China called WPD-PSO-BP-Adaboost, based on wavelet packet decomposition (WPD), the particle swarm optimization (PSO) algorithm, the back propagation neural network (BPNN), and the Adaboost model. Sun and Xu [25] proposed a new hybrid hourly PM2.5 prediction framework, called RF-GSA-TVFEMD-SE-MFO-ELM, in which they decomposed the series by time-varying filtering-based empirical mode decomposition (TVFEMD) and then used an ELM optimized by moth flame optimization (MFO) to predict the hourly PM2.5 series of four cities in the Beijing-Tianjin-Hebei region. In another paper, Yin et al. [26] proposed two boosting approaches, adapted AdaBoost.RT and gradient boosting (GB), to improve the ELM for ensemble prediction of PM2.5. A simple salp-swarm-based ELM was proposed by Liu and Ye [27] for the PM2.5 data of Hangzhou from 2016 to 2020. In another research work, a multi-objective Harris hawks optimizer (HHO) was integrated with ELM to predict the PM2.5 of three cities in China [28]. Another HHO-based ELM model was developed for PM2.5 datasets from Beijing, Tianjin, and Shijiazhuang in China [29]. A similar work developed an ensemble pigeon-inspired ELM with multidimensional scaling and a K-means clustering component for air quality prediction [30]. A WAV-VMD-KELM model consisting of wavelet denoising, variational mode decomposition (VMD) of the data, and KELM as the regressor was developed by Xing et al. [31] for predicting the hourly PM2.5 series in Xi’an, China. A group-teaching-optimized ELM with the wavelet transform (WT) and ICEEMDAN was proposed for PM2.5 data by Jiang et al. [32]. In many hybrid models, in addition to the optimization core, the models have been integrated with decomposition methods, including empirical mode decomposition (EMD), variational mode decomposition (VMD), wavelet decomposition (WD), and secondary decomposition (SD). A data decomposition method splits the air quality signal into a predetermined number of sub-sequences, and its use markedly enhances the prediction capabilities of hybrid models. Each of these methods has its benefits and weaknesses, although WD is one of the most effective methods in the literature. A comprehensive review of the multi-scale decomposition strategies was presented by Liu et al. [33].
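As a rough illustration of how a decomposition method splits a series into sub-sequences that can each be modeled separately, the sketch below applies a one-level Haar wavelet transform and rebuilds each branch on its own (single-branch reconstruction). The Haar wavelet, the single decomposition level, and the function names are assumptions chosen for brevity; the works reviewed above use other wavelets and deeper decompositions.

```python
import math

def haar_decompose(series):
    """One-level Haar transform of an even-length series -> (approx, detail)."""
    if len(series) % 2 != 0:
        raise ValueError("series length must be even")
    s = math.sqrt(2.0)
    approx = [(series[i] + series[i + 1]) / s for i in range(0, len(series), 2)]
    detail = [(series[i] - series[i + 1]) / s for i in range(0, len(series), 2)]
    return approx, detail

def haar_reconstruct(approx, detail):
    """Inverse of haar_decompose: interleave the recovered sample pairs."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out

def single_branch_components(series):
    """Rebuild each branch alone by zeroing the other one.

    Returns (low, high), two full-length series whose element-wise sum
    equals the original series.
    """
    approx, detail = haar_decompose(series)
    zeros = [0.0] * len(approx)
    low = haar_reconstruct(approx, zeros)   # smooth trend component
    high = haar_reconstruct(zeros, detail)  # fast fluctuation component
    return low, high
```

Because the two reconstructed components add up to the original signal, a separate regressor can be trained on each one and the component forecasts summed to obtain the final prediction.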

Although China has made progress in PM2.5 management, reducing emissions has proven to be a complex problem. The motivation for this research is that the precise prediction of PM2.5 concentrations with an efficient model is critical for protecting public health and developing preventative strategies. Such efficient models can be utilized within integrated information systems to support decision-makers. However, there is a significant gap in research on this problem, and although previous prediction models have their advantages, various problems still need to be addressed. Because of their excellent learning capacity and capability to deal with nonlinear data, AI-based approaches have been frequently employed; yet, such systems are prone to being trapped in local optima and to generalization error. The integrated optimization methods are basic versions of swarm-based algorithms, and their imbalance between exploration and exploitation may result in entrapment in local optima and poor regression accuracy. Moreover, the performance of the learning models depends on the optimized set of hyper-parameters, which strongly affects the regression accuracy. According to the no free lunch (NFL) theorem, no optimization or machine learning model or hybrid version can outperform all other models on every possible problem [34]. Therefore, there is room for developing more efficient hybrid models for specific PM2.5 datasets. In addition, hybrid models that rely on only one regression method with a basic optimizer still cannot show the best performance, since a model with immature regression performance cannot recognize the various patterns in the set of features. Hence, there is a need to pre-process the input data more effectively and optimize the model’s performance more efficiently to obtain more accurate results.

The new contributions of this research are as follows. This research introduces a new efficient kernel extreme learning machine model (the WD-OSMSSA-KELM model) based on B-XGB feature selection, an enhanced multi-strategy variant of the salp swarm algorithm (SSA), and wavelet decomposition to increase the accuracy of PM2.5 prediction. To begin, we used B-XGB feature selection to determine the best features for forecasting hourly PM2.5 concentrations and to remove redundant features. Then, to reduce the prediction error caused by the time series data, we used the wavelet decomposition (WD) technique to obtain multi-scale decomposition results and a single-branch reconstruction of the PM2.5 concentrations. In the subsequent steps, we optimized the parameters of the KELM model for each reconstructed component using the proposed OSMSSA and compared it with competitive peers. The OSMSSA is an enhanced version of the SSA with multiple exploratory and exploitative trends, developed to improve on the performance of the basic SSA and avoid local stagnation. This paper presents novel procedures based on opposition-based learning (OBL) and simplex-based search to address the fundamental flaws of the traditional SSA. Furthermore, we used a time-varying parameter instead of the SSA’s primary parameter. To increase the SSA’s exploration tendencies, we suggest utilizing random leaders, selected through a conditional framework, to drive the swarm towards new parts of the feature space. The developed model was assessed using hourly data from the Beijing Municipal Environmental Monitoring Center’s database, covering six key air pollutants and six significant meteorological components.
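The opposition-based learning idea mentioned above can be sketched in a few lines: for a candidate x inside the box [lower, upper], its opposite point is lower + upper - x, and the better of the two sets of candidates is retained. This is the generic textbook form of OBL under a minimization objective; the function names are hypothetical, and the sketch is not a reproduction of the OSMSSA update rules.

```python
def opposite_point(x, lower, upper):
    """Mirror a candidate solution through the centre of the search box."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]

def obl_refine(population, objective, lower, upper):
    """Merge a population with its opposites and keep the best half.

    `objective` is minimised; the returned list has the same size as
    `population` and is sorted from best to worst.
    """
    opposites = [opposite_point(x, lower, upper) for x in population]
    merged = population + opposites
    merged.sort(key=objective)
    return merged[:len(population)]
```

For example, with bounds [0, 10] and the objective x², the candidates 9 and 8 are replaced by their opposites 1 and 2, which shows how OBL can move a poorly initialized swarm towards a more promising region at the cost of extra objective evaluations.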

In the remainder of this section, we review the related work on the SSA optimizer.

Literature Review of SSA

Though there are many applications for the SSA, it suffers from unbalanced exploitation and exploration, stagnation in local optima, and poor exploitation. To alleviate these issues, many scholars have actively studied ways to improve the performance of the SSA. Ren et al. [35] presented an adaptive weight Lévy-assisted SSA (WLSSA) and analyzed its optimization ability. The adaptive weight mechanism extended the global exploration of the basic SSA, and the Lévy flight strategy improved the probability of the SSA escaping from local optima. By integrating these two mechanisms, the WLSSA showed excellent performance, and it was also applied to three constrained engineering optimization problems. Çelik et al. [36] proposed a modified SSA (mSSA) to solve large-scale optimization problems. The most important parameters for balancing exploitation and exploration in the basic SSA are changed chaotically from the first iteration to the last by embedding a sinusoidal map. In addition, a reciprocal relationship between two leader individuals was introduced into the mSSA to improve its search performance. Moreover, a randomized technique was systematically applied to the followers to provide the chain with diversity. This method solved several optimization problems well in terms of solution accuracy and convergence trend.
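For readers unfamiliar with the baseline that these variants modify, the following is a minimal sketch of the basic SSA for box-constrained minimization. It uses the commonly cited leader update around the best solution found so far (the food source), the follower update that averages each salp with its predecessor in the chain, and the decaying coefficient c1 = 2·exp(-(4l/L)²). The single-leader layout, the 0.5 threshold on the random sign, the clamping to the bounds, and all names are assumptions of this sketch.

```python
import math
import random

def ssa_minimize(objective, lower, upper, n_salps=20, n_iter=200, seed=0):
    """Basic salp swarm algorithm sketch; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(lower)
    salps = [[rng.uniform(lower[j], upper[j]) for j in range(dim)]
             for _ in range(n_salps)]
    best = min(salps, key=objective)[:]
    best_val = objective(best)
    for it in range(1, n_iter + 1):
        # c1 shrinks over time, shifting the leader from exploration to exploitation.
        c1 = 2.0 * math.exp(-((4.0 * it / n_iter) ** 2))
        for i in range(n_salps):
            for j in range(dim):
                if i == 0:
                    # Leader: random step around the food source (best so far).
                    step = c1 * ((upper[j] - lower[j]) * rng.random() + lower[j])
                    value = best[j] + step if rng.random() >= 0.5 else best[j] - step
                else:
                    # Follower: move halfway towards the preceding salp in the chain.
                    value = 0.5 * (salps[i][j] + salps[i - 1][j])
                salps[i][j] = min(max(value, lower[j]), upper[j])
        for salp in salps:
            val = objective(salp)
            if val < best_val:
                best, best_val = salp[:], val
    return best, best_val
```

The sketch makes the reviewed weaknesses visible: only c1 controls the exploration and exploitation balance, and the followers simply contract towards the leader, which is why many variants replace c1 with another schedule or inject additional diversity into the chain.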

Aljarah et al. [37] proposed an improved multi-objective SSA with two basic components: dynamic time-varying strategy and local optimal solution. These components help the SSA balance local exploitation capacity and global exploration capacity. Salgotra et al. [38] presented a new enhancement to the SSA and proposed seven mutation operators to improve the working properties of the SSA, including Cauchy, Gaussian, Lévy, neighborhood-based mutation, trigonometric mutation, mutation clock, and diversity mutation.

Liu et al. [39] developed a new modified version of the SSA with a chaos-assisted trend and a multi-population structure. The chaotic strategy was used to enrich the local exploitation of the SSA, and a multi-population structure with three sub-strategies was arranged to enhance the global exploration of the SSA. In the beginning, all the individuals are divided into multiple sub-populations, which only explore the feasible region. As the evolution proceeds, the algorithm gradually shifts its focus from global exploration to local exploitation. Hence, the number of sub-populations is set differently depending on the iteration, and the whole population is dynamically divided into different numbers of sub-populations during evolution. Following the update of the SSA’s individual positions, the chaos-assisted exploitation approach was applied to gain additional opportunities to examine promising search regions.

Tubishat et al. [40] presented a new dynamic SSA (DSSA) for feature selection, which used Singer’s chaotic map to increase diversification and provided a new local search strategy to boost the exploitative capability. Kansal and Dhillon [41] proposed an emended SSA (ESSA) to solve the multi-objective electric power load dispatch problem. Fuzzy set theory was used to convert the multi-objective optimization problem into a scalar objective and to resolve the conflicting nature of the objectives through basic priority. External penalty variable elimination was used to deal with the physical and operational constraints of the units.

Zhang et al. [42] introduced a composite mutation strategy and a restart mechanism to improve the basic SSA. The former was inspired by the DE rand local mutation method of Adaptive CoDE, and the latter helps the worst individuals jump out of local optima. Elaziz et al. [43] used the differential evolution (DE) operator as the local search operator to enhance the capability of the SSA to deal with multi-objective big data optimization. Tu et al. [44] proposed a localization method based on reliable anchor pair selection (RAPS) and the quantum behavior SSA (QSSA) for anisotropic wireless sensor networks. The QSSA is an SSA variant based on quantum mechanics and trajectory analysis.

Salgotra et al. [45] presented the adaptive SSA (ASSA), which adds a logarithmically distributed parameter and is based on the division of the generations. Equations based on the grey wolf optimizer (GWO) and cuckoo search (CS) were used for the first half of the generations, while the general SSA equations were used for the second half. Thus, exploration was performed by GWO-CS and exploitation by the SSA. Meanwhile, a logarithmic decreasing function replaced the basic parameter C1 to achieve a new equilibrium of global exploration and local exploitation.

Ren et al. [46] used a random replacement mechanism to speed up convergence and a double-adaptive weighting mechanism to enhance the SSA’s exploitation and exploration capabilities. This enhanced method was named RDSSA. In the random replacement mechanism, the ratio of the remaining number of runs of the algorithm to the total number of runs is compared with a Cauchy random number, so that the current position has a certain probability of moving close to the optimal position; in the later stages, this replacement probability is smaller. Inspired by PSO and RDWOA, the double-adaptive weight mechanism introduced two key weights to give the SSA better global optimization ability in the early stage and better local search ability in the later stage.

Chouhan et al. [47] introduced the concept of inertia weight to the SSA for optimizing the coverage and energy efficiency of wireless sensor networks. Wang et al. [48] proposed a novel orthogonal lens opposition-based learning SSA, named the OOSSA. An adaptive strategy was used to develop the exploration capacity, and the lens opposition-based learning and orthogonal design were used to avoid local optima, while ranking-based dynamic learning strategies enhanced the local exploitation capacity. Majhi et al. [49] improved the performance of the SSA for function optimization using a chaotic oscillation generated by the quadratic integrate-and-fire neural model.

At present, combining two algorithms to improve performance and solve optimization problems is also a popular research trend. The advantage of a hybrid algorithm is that its components offset each other’s weaknesses to a certain extent and achieve a balance between exploitation and exploration. Neggaz et al. [50] improved the SSA for feature selection by taking inspiration from the sine cosine algorithm (SCA), updating the position of the followers in the SSA using sine/cosine operators. The combination strengthened the convergence capacity. Ewees et al. [51] modified the SSA with the firefly algorithm (FA) for an unrelated parallel machine scheduling problem. The FA technique was taken as the local search operator to improve the SSA’s performance. Saafan and El-Gendy [52] improved the basic whale optimization algorithm (WOA) by using exponential relationships for its key parameters instead of linear relationships and combined the improved WOA with the SSA for optimization problems. Ibrahim et al. [53] presented a hybrid method that combined the SSA with PSO to improve the efficacy of exploitation and exploration for feature selection. Zhang et al. [54] embedded the SSA into the conventional HHO to expand the search ability and increase the diversity of the population. Therefore, hybridizing the SSA with other methods maintains a balance between global exploration and local exploitation, and such hybrids have been applied in many fields.

Despite all the advantages of the SSA in dealing with the different optimization cases reviewed above, there is still room for improvement. The satisfactory results of the SSA are diminished in some numerical cases due to its tendency to stagnate in local optima (LOs) and its premature convergence. The basic SSA can still be improved in terms of its diversification and intensification tendencies and the fine balance between them. To accelerate convergence, avoid LOs, and maintain a fine balance among the searching trends of the SSA, we modified the original structure of the SSA. The extensive results show that the proposed mechanisms in the new variant of the SSA can substantially mitigate the core problems of the SSA and improve its efficacy in dealing with the studied problems.

The remainder of this work is organized as follows: In Section 2, we review the main concepts. In Section 3, the proposed method is described in detail. Section 6 presents the results. Finally, Section 7 concludes with the main remarks of this work, in addition to presenting the main future directions for this paper.

7. Conclusions

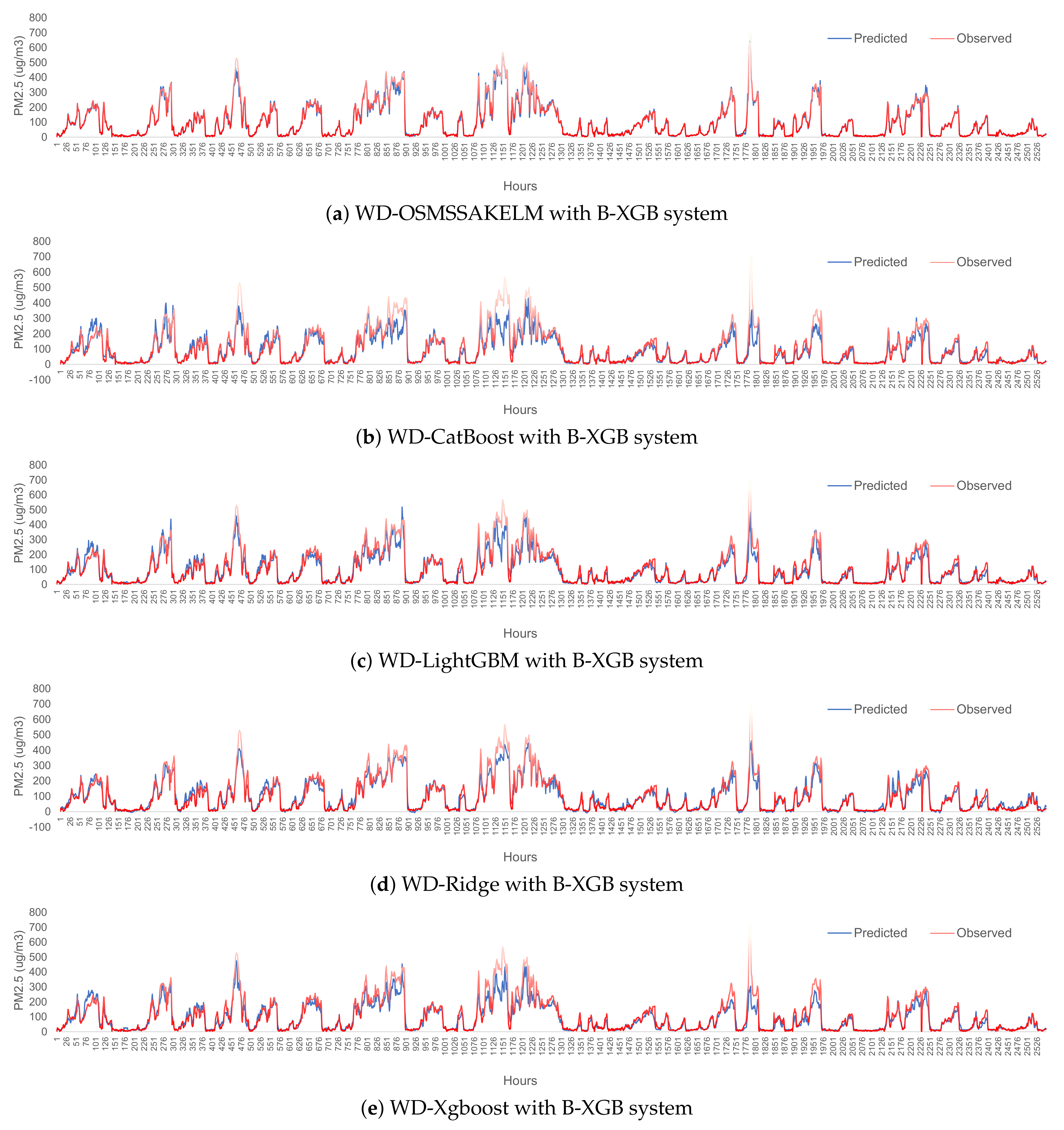

This paper proposed a new efficient wavelet PM2.5 prediction system based on an improved variant of the SSA (OSMSSA), wavelet decomposition, and Boruta-XGBoost (B-XGB) feature selection, which is called WD-OSMSSA-KELM. First, the B-XGB feature selection was applied to remove redundant features. Then, wavelet decomposition (WD) was applied to obtain the multi-scale decomposition results and single-branch reconstruction of the PM2.5 concentrations and to alleviate the prediction error caused by the time series data. Next, the new framework optimized the structure of the KELM model for each reconstructed component. To mitigate the premature convergence of the SSA, a time-varying version of the SSA with random leaders was proposed based on OBL and simplex-based search. The optimized model was utilized to predict the PM2.5 data, and 10 error metrics were applied to evaluate the model’s performance and accuracy. The experimental results showed that the proposed WD-OSMSSA-KELM model (R: 0.995, RMSE: 11.906, MdAE: 2.424, MAPE: 9.768, KGE: 0.963, R²: 0.990) can predict the PM2.5 data with superior performance compared to the WD-CatBoost, WD-LightGBM, WD-XGBoost, and WD-Ridge methods.
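For reference, several of the error metrics quoted above can be computed as in the following sketch. The definitions are the common textbook ones (with MAPE in percent and the 2009 form of the Kling-Gupta efficiency); the exact variants used in the experiments are not stated in this section, so the formulas and function names should be read as assumptions.

```python
import math
import statistics

def rmse(obs, pred):
    """Root mean squared error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))

def mdae(obs, pred):
    """Median absolute error."""
    return statistics.median(abs(o - p) for o, p in zip(obs, pred))

def mape(obs, pred):
    """Mean absolute percentage error in percent (observations must be nonzero)."""
    return 100.0 * sum(abs((o - p) / o) for o, p in zip(obs, pred)) / len(obs)

def pearson_r(obs, pred):
    """Pearson correlation coefficient R."""
    mo, mp = statistics.fmean(obs), statistics.fmean(pred)
    cov = sum((o - mo) * (p - mp) for o, p in zip(obs, pred)) / len(obs)
    return cov / (statistics.pstdev(obs) * statistics.pstdev(pred))

def kge(obs, pred):
    """Kling-Gupta efficiency: 1 - sqrt((r-1)^2 + (alpha-1)^2 + (beta-1)^2)."""
    r = pearson_r(obs, pred)
    alpha = statistics.pstdev(pred) / statistics.pstdev(obs)  # variability ratio
    beta = statistics.fmean(pred) / statistics.fmean(obs)     # bias ratio
    return 1.0 - math.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)
```

A perfect forecast gives RMSE, MdAE, and MAPE of zero and R and KGE of one, so values such as R = 0.995 and KGE = 0.963 indicate a close but not exact match between the predicted and observed series.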

Despite all the advantages of the proposed model, the new system has some limitations. One is that the parameters of the optimization core are set by the user and are not fully dynamic. The other is the evolutionary nature of the KELM optimization, which may become trapped in local optima on some other datasets and may then need further tuning and a larger population size or number of iterations. In future work, the proposed model can be extended with evolutionary-based feature selection models, and ensemble models will be investigated. Furthermore, we will compare the performance of the WD-OSMSSA-KELM prediction system with more recent models and studies using new datasets from other cities in China. Another direction for future work is the extension of the proposed model using other variants of the KELM and a multi-objective variant of the OSMSSA.