Abstract

This paper is concerned with parameter estimation for non-linear discrete-time systems in state-space form from noisy state measurements. A novel sparse Bayesian convex optimisation algorithm is proposed for parameter estimation and prediction. The method takes into account the approximation method and the parameter prior and posterior, and adds Bayesian sparse learning and optimization for explicit modeling. In contrast to previous identification methods, the main identification challenge lies in two aspects: first, a new objective function is obtained by our improved Stein approximation method in the convex optimization problem, so as to capture more information about particle approximation and convergence; second, another objective function is developed with L1-regularization, a sparse method based on recursive least squares estimation. Compared with previous studies, the new objective function contains more information and can more easily extract important information from the raw data. Three simulation examples are given to demonstrate the effectiveness of the proposed algorithm. Furthermore, the performance of these approaches is analyzed in terms of the root mean squared error (RMSE) of the parameter estimates, parameter sparsity, and the prediction of states and outputs.

Keywords: sparse Bayesian identification; state-space; convex optimisation; Stein approximation method

MSC: 37M

1. Introduction

Non-linear systems are commonplace in the real world, for example in social networks, industrial systems, biological systems, finance, and chemical engineering. The identification of non-linear systems is widely acknowledged for its importance and difficulty [1,2]; examples include fractional-order systems [3,4,5], neural networks [6], non-linear ARMAX (NARMAX) [7], and Hammerstein–Wiener [8] models. The non-linear state-space model is a common representation for all of these non-linear systems. A common method for identifying non-linear state-space models is to look for a concise description, built from a set of non-linear terms (kernel functions), that is consistent with the data [9,10]. Classic functional decomposition methods, such as the Volterra expansion, Taylor polynomial expansion, or Fourier series [9,11], provide a few options for kernel functions. These methods are founded on the idea that there is a finite set of fundamental kernel functions whose linear combination can describe the dynamics of a non-linear state-space system. However, the efficiency of this kind of method decreases rapidly as the number of kernel functions grows. A promising way to identify non-linear state-space systems is the probabilistic approach [12,13,14,15], which has received a lot of attention over the past few years. Earlier probabilistic identification methods for non-linear state-space systems, such as regression, maximum likelihood (ML) [16], and expectation-maximization (EM) [17], mainly rely on gradient descent and ignore information from the parameter identification and approximation process.

To further improve the efficiency of parameter identification, many identification techniques that combine gradient descent with Bayesian approximation have recently been presented. In particular, those based on variational inference have attracted more attention due to their superior performance, such as variational inference in the Gaussian process (GP) [12], the Gaussian-process state-space model (GP-SSM) [13], deep variational Bayes filters (DVBF) [14], and optimistic inference control (OIC) [15]. Various approximate expectation-maximization (EM)-based techniques have also been studied. In [18], the authors apply the EM method to estimate the parameters of a non-linear state-space model of disease dynamics. In [19,20], both a Bayesian and an ML estimation strategy are employed in addition to a competitive GP approximation method. For learning, a Monte Carlo technique and the EM method are utilized in [21], which also includes a variational method using the same GP approximation.

Notably, none of these methods takes the resilience of the prediction into account, and all of them presuppose that the structure of the non-linear state-space model is unknown. In reality, however, many parameters lack precision for reasons such as a slow convergence rate or convergence to a local optimum. In this article, we concentrate on the optimum problem since it is more difficult and has numerous real-world implications. For example, in a pilot-plant pH process, a Hammerstein–Wiener model is used to predict the pH value of the neutral liquid [22]. In addition, the problem of non-linear state-space systems with missing precision is related to the complexity of the parameters. Sparse representation can be used to find sparse solutions of linear regression equations, which can effectively reduce the complexity of parametric solutions. Only a few papers deal with the sparse representation identification issue together with multiple constraints on the system’s parameters [23,24]. In [25], a convex constraint is added to the sparse parameter representation in the non-linear identification algorithm. These works consider only the selection of sparse methods and do not consider conditional restrictions in the process of parameter approximation.

Unlike previous work, our suggested framework allows us to include more constraints on the corresponding model parameters, e.g., inequality constraints, prior information, and Stein discrepancy constraints. The dynamical system discovery issue is reconsidered in this paper from the perspectives of sparse regression [26,27,28], compressed sensing [29,30,31], Stein approximation theory [32], and convex optimization [23]. The use of sparsity approaches in dynamical systems is relatively new [33,34,35]. Most non-linear state-space systems have only a few important dynamic elements, resulting in a sparse parameter set in a high-dimensional non-linear function space.

Although these efforts focus on non-linear system identification using state-space models, they have some significant flaws. First, these methods carry out system identification in two stages, that is, they compute the posterior objective function with parameters and then learn the system parameters with noise. Computing the posterior objective function and learning the system parameters are two separate processes in these two-stage approaches, and their parameters cannot be adjusted together. System identification performs worse as a result. Ideally, the two processes would be complementary: learning the system parameters should contribute to computing the posterior objective function, and the updated posterior objective function should contribute to learning the system parameters with noise. For non-linear systems with a state-space formulation, ref. [36] addresses the recursive joint inference and learning problem, and a reduced-rank formulation is used to model the system as a Gaussian-process state-space model (GP-SSM). In [37], a two-stage Bayesian optimization framework is introduced, which consists of a representation of the objective function in a low-dimensional parameter space and surrogate model selection in the reduced space. In that study, only the posterior objective function is considered, which cannot achieve effective interactive learning and may also compromise the optimization performance for the system parameters.

To address the problems of existing identification methods for non-linear state-space systems, we propose the non-linear state-space identification algorithm with Sparse Bayesian and Stein Approach (NSSI-SBSA), an optimization approach that improves the accuracy of system parameter identification and of posterior distribution computation simultaneously in an integrated structure, as opposed to the conventional two-stage method. In our new method, we select the least absolute shrinkage and selection operator (LASSO) [26] as the parameter sparsity algorithm. The sparse parameter is incorporated into the posterior distribution, which reduces the complexity of the posterior distribution. Compared with other sparse methods such as least angle regression (LARS) [38], sequentially thresholded least squares (STLS) [39], and basis pursuit denoising (BPDN) [40], LASSO is more suitable for high-dimensional data. In our article, the sparse model identification results strike a natural balance between model sparsity and precision, and prevent the model from being overfitted to the data.

From a statistical perspective, we discuss how Bayesian techniques, optimization methods, and a Stein approximation strategy might mitigate the difficulties caused by large correlations in the state matrix. The most important contributions of this technical note are as follows:

- (1) The NSSI-SBSA algorithm is proposed. In the algorithm, the sparse method is used for parameter estimation and prediction. The parameter prior, Bayesian sparse learning, and optimisation are used in an integrated computing framework instead of the classical two-stage method. The sparse model identification results strike a natural balance between model sparsity and precision, preventing the model from overfitting the data.

- (2) A non-convex optimisation problem is constructed for the non-linear state-space system identification issue with additive noise. Compared with other related methods, we not only take evidence maximisation as an objective function, but also consider the Stein discrepancy of the parameters as another objective function in the non-convex optimization problem. The two functions are integrated into one objective function containing more information. It can capture more important information from the raw data and reduce the complexity of parametric solutions.

The rest of this paper is organized as follows. Section 2 describes the problem statement and background. Section 3 constructs the model in a Bayesian framework. Section 4 introduces non-convex optimisation with the Stein constraint for identification. Three numerical illustrations, namely the Narendra-Li model, a NARX model, and kernel state-space models (KSSM), are presented in Section 5. Finally, we give some closing remarks in Section 6.

2. Problem Statement and Background

We consider the following non-linear state-space model [25]

where is the state variable in time step k, and is the external control input. When system is time continuous, ; When system is time discrete, or ; is noise (when ), which is set to be i.i.d. Gaussian distribution. and is Lipschitz continuous function, and and are the dimensions of and , respectively. is the weight of basis functions. Together, and determine the dynamics. It is worth emphasizing that we make no assumptions about the non-linear functions on the right-hand side of (1).

If the system can provide M data samples that meet (1), the system in (1) can be represented as

where , and is called dictionary matrix. j-th column in is described by

In this article, the identification task is to estimate from the measured data of . This leads to the solution of a linear regression issue, in which the least square (LS) method can be used if some of model’s non-linear part is understood, i.e., is known. In (1), we merely discuss the identification problem, as we do in . Because of the potential non-relevant or independent columns in , the solution in is often sparse, and a few frequently used non-linear dynamical models need to be considered. For the convenience of expression, we rewrite the linear regression issues in (2) into the following form
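The construction of a dictionary matrix and the resulting linear regression can be sketched as follows. This is a minimal illustration only: the basis functions, the dimensions, the true weights, and the names `build_dictionary`, `Phi`, and `theta_true` are hypothetical and are not taken from the paper. It shows that an ordinary least-squares fit recovers the weights but returns a dense vector, which is what motivates the sparse formulation.

```python
import numpy as np

def build_dictionary(X, U):
    """Stack candidate basis functions column by column.

    The candidate set below is a hypothetical choice for illustration.
    """
    x1, x2 = X[:, 0], X[:, 1]
    u = U[:, 0]
    columns = [np.ones_like(x1), x1, x2, u, x1 * x2, x1 ** 2, np.sin(x2)]
    return np.column_stack(columns)

rng = np.random.default_rng(0)
M = 200                                  # number of data samples
X = rng.normal(size=(M, 2))              # measured states
U = rng.normal(size=(M, 1))              # control inputs
Phi = build_dictionary(X, U)             # dictionary matrix, shape (M, 7)

# Assumed sparse ground truth: only three basis functions are active.
theta_true = np.array([0.0, 0.8, 0.0, 0.5, 0.0, 0.0, -0.3])
y = Phi @ theta_true + 0.01 * rng.normal(size=M)

# Ordinary least squares: accurate here, but no entry is exactly zero.
theta_ls, *_ = np.linalg.lstsq(Phi, y, rcond=None)
print(np.round(theta_ls, 3))
```

The least-squares estimate assigns small non-zero values to the irrelevant columns, so model structure cannot be read off directly from it.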

3. Constructing the Model in Bayesian Framework

All unknowns in Bayesian modeling are treated as random variables with specific distributions [41]. For in (3), the noise variable is independent and identically distributed (i.i.d.) Gaussian, i.e., , identity matrix . We can obtain the likelihood of the data . is a prior distribution defined as , and is a hyperparameter. For the convenience of calculating sparse parameters , is selected as a concave non-decreasing function of . The priors of include the Gaussian and t-distribution (see [42] for details).

The posterior is heavily linked and non-Gaussian, so computing the posterior mean is often difficult. To solve this problem, take as an approximation of Gaussian distribution. In [41], effective posterior computation algorithms are used in the computation.

Another method is to use super Gaussian priors, in which the priors is computed by the variational EM algorithms [42]. We define hyperparameters . The priors of can be written as: , , where is probability density function and . , where . Considering the data , the posterior probability of can be represented as From [43], the posterior mean and covariance are given by:

where is a diagonal matrix written as . It is obvious to maximize the most important question is how to select the best . and are taken as prior information, so we need only consider . Using type-II ML [43], the marginal likelihood can be maximised, and the selected is written as

After is computed in (5), the estimation of can be obtained as , with It indicates that picking the most likely hyperparameters is capable of explaining the data .
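For a Gaussian likelihood with a zero-mean Gaussian prior, the posterior mean and covariance have a standard closed form, which the sketch below evaluates. It assumes a noise variance `sigma2` and a diagonal prior covariance built from hyperparameters `gamma`; these names and the test data are illustrative, and the correspondence with the paper's Equation (4) is an assumption because that equation is not recoverable from the text.

```python
import numpy as np

def sbl_posterior(Phi, y, gamma, sigma2):
    """Posterior of theta for y = Phi @ theta + noise.

    Assumes noise ~ N(0, sigma2 * I) and prior theta ~ N(0, diag(gamma)).
    """
    Gamma = np.diag(gamma)
    S = sigma2 * np.eye(Phi.shape[0]) + Phi @ Gamma @ Phi.T
    # K = Gamma Phi^T S^{-1}, computed with a linear solve (S is symmetric).
    K = np.linalg.solve(S, Phi @ Gamma).T
    mean = K @ y
    cov = Gamma - K @ Phi @ Gamma
    return mean, cov

rng = np.random.default_rng(1)
Phi = rng.normal(size=(30, 5))
y = rng.normal(size=30)
gamma = np.array([1.0, 0.5, 2.0, 0.1, 1.0])   # illustrative hyperparameters
sigma2 = 0.04

mean, cov = sbl_posterior(Phi, y, gamma, sigma2)

# Equivalent "information form", useful as a cross-check.
cov_direct = np.linalg.inv(np.diag(1.0 / gamma) + Phi.T @ Phi / sigma2)
mean_direct = cov_direct @ Phi.T @ y / sigma2
```

The two forms agree numerically; the first inverts a matrix whose size is the number of samples, the second a matrix whose size is the number of basis functions, so the cheaper one depends on which dimension is smaller.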

4. Non-Convex Optimisation with Stein Method for Identification

4.1. Stein Operator Selection and Stein Constraint Design

The approach can be sketched as follows for a target distribution P with support Z. Find a suitable operator (referred to as the Stein operator) and a large class of functions (referred to as the Stein class) such that Z has distribution P, denoted , if, and only if, we have for all functions

A Stein operator can be designed in a variety of ways [32]. In our framework, Stein’s identity and the kernelized Stein discrepancy are crucial. is a probability density function, which is continuous and differentiable on . According to Stein’s theory, suitable smooth and differentiable functions and are selected in (6), which are expressed as , .

where

The Stein operator operates on the function and produces a zero mean function under .
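The zero-mean property can be checked numerically in one dimension. The sketch below assumes the Langevin form of the Stein operator, score times test function plus derivative of the test function, with a standard normal target and the test function sin(x); these choices are illustrative assumptions and are not the operator or function class designed in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def stein_operator_values(x, score, f, df):
    """Evaluate score(x) * f(x) + f'(x) pointwise (1-D Langevin Stein operator)."""
    return score(x) * f(x) + df(x)

def score_p(x):
    """Score function d/dx log p(x) of the standard normal target p = N(0, 1)."""
    return -x

x_p = rng.normal(0.0, 1.0, size=200_000)   # samples from the target p
x_q = rng.normal(1.0, 1.0, size=200_000)   # samples from a shifted q = N(1, 1)

# Monte Carlo expectations of the Stein operator applied to f(x) = sin(x).
mean_under_p = stein_operator_values(x_p, score_p, np.sin, np.cos).mean()
mean_under_q = stein_operator_values(x_q, score_p, np.sin, np.cos).mean()
print(mean_under_p, mean_under_q)
```

Under the target the sample mean is close to zero, while under the shifted distribution it is clearly non-zero, which is the property the Stein discrepancy exploits.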

Assume mild zero boundary conditions on , when is compact, . The expectation of under is no longer equal to zero. The magnitude of is related to the difference between P and Q. The probability distances for and in some proper function set are defined as

The discriminative power and computational tractability of the Stein discrepancy are determined by the set . includes sets of functions with bounded Lipschitz norms, each of which is a difficult and intractable functional optimization problem with special considerations. To tackle this trouble of calculation, and are selected in the unit sphere of a reproducing kernel Hilbert space (RKHS) [32]. Kernelized Stein discrepancy (KSD) between and is described as

The optimal solution of (8) is where

A direct calculation shows that

For any fixed , kernel function belongs to RKHS. =0, that is to say only if . The radial basis function kernel is purely positive definite in a strict sense. When approaches , the converges to 1. Then, contains the information of parameter approximation, which is an important factor affecting the accuracy of parameters . For convenience, we define and . and are from the Gaussian distribution with different hyperparameters. is defined as follows
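A sample-based estimate of the kernelized Stein discrepancy with a radial basis function kernel can be sketched as follows. The code uses the standard closed form of the Stein kernel for an RBF kernel and a V-statistic over the samples; the bandwidth `h`, the Gaussian target, and the function name `ksd_rbf` are assumptions made for illustration and do not reproduce the improved operator proposed in the paper.

```python
import numpy as np

def ksd_rbf(X, score, h=1.0):
    """V-statistic estimate of the squared KSD between samples X and a target.

    X     : (n, d) samples from q
    score : callable returning grad log p at each row of X, shape (n, d)
    h     : RBF bandwidth, k(x, x') = exp(-||x - x'||^2 / (2 h^2))
    """
    n, d = X.shape
    S = score(X)
    diff = X[:, None, :] - X[None, :, :]          # diff[i, j] = x_i - x_j
    sq = np.sum(diff ** 2, axis=-1)
    K = np.exp(-sq / (2.0 * h ** 2))

    term1 = (S @ S.T) * K                                    # s(x)^T s(x') k
    term2 = np.einsum('id,ijd->ij', S, diff) / h ** 2 * K    # s(x)^T grad_{x'} k
    term3 = -np.einsum('jd,ijd->ij', S, diff) / h ** 2 * K   # grad_x k^T s(x')
    term4 = (d / h ** 2 - sq / h ** 4) * K                   # trace of grad_x grad_{x'} k
    return float(np.mean(term1 + term2 + term3 + term4))

def score_std_normal(X):
    """Score of the target p = N(0, I)."""
    return -X

rng = np.random.default_rng(0)
X_match = rng.normal(size=(300, 2))            # drawn from the target
X_shift = rng.normal(size=(300, 2)) + 1.5      # drawn from a shifted distribution

ksd_match = ksd_rbf(X_match, score_std_normal)
ksd_shift = ksd_rbf(X_shift, score_std_normal)
print(ksd_match, ksd_shift)
```

Only the score of the target is needed, not its normalising constant, which is why the discrepancy is convenient as an objective over unnormalised posteriors.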

By substituting and into we have

Based on , we have

According to the results, is written as

It is easy to derive the expectation of

For convenience, let , we have

Putting every together, we obtain the following result:

Based on (11), we see that is a non-convex objective function in the -space. The optimisation problem is described as

Remark 1.

The Stein method is improved: the kernel function still belongs to the Stein class , but the dynamic characteristics of the proposed function are taken into account when designing the operator for the approximation of in the unit sphere of the RKHS. The new operator can increase the chance of escaping a local non-convex optimum. In the optimization problem, is a new objective function, which can accelerate the convergence between and

4.2. Parameter Sparse Identification of Constraints from Data

In (12), is another objective function, which makes the parameter less sensitive to noisy data and converges to true value. The problem of system identification with convex constraints is given by a sparse Bayesian formulation, which is then handled as a non-convex optimisation problem in this section. To obtain a better parameter , the new objective function is constructed as

4.2.1. Objective Function in Parameter Identification

Theorem 1.

Use the notation as the objective function

By minimising , the optimal hyperparameters in (13) is derived, where . The mean of is calculated and represented as .

Proof.

Using the Woodbury inversion identity, re-express and in (4):

Since the data likelihood P is Gaussian, we can express the integral in (13) as follow:

where

We obtain using the Woodbury inversion identity.
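For reference, the Woodbury inversion identity used in this step is stated below in its general form, followed by the instance that typically arises in sparse Bayesian regression. The symbols $\Phi$ (dictionary matrix), $\Gamma$ (diagonal prior covariance), and $\sigma^2$ (noise variance) in the second line are assumed notation, since the paper's own symbols are not recoverable here.

```latex
% General Woodbury identity (A and C invertible, dimensions compatible)
(A + U C V)^{-1} = A^{-1} - A^{-1} U \left( C^{-1} + V A^{-1} U \right)^{-1} V A^{-1}

% Instance with A = \sigma^2 I, U = \Phi, C = \Gamma, V = \Phi^{\top}
\left( \sigma^2 I + \Phi \Gamma \Phi^{\top} \right)^{-1}
  = \sigma^{-2} I
  - \sigma^{-4} \Phi \left( \Gamma^{-1} + \sigma^{-2} \Phi^{\top} \Phi \right)^{-1} \Phi^{\top}
```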

Just for the sake of calculation, we evaluate the integral of as follows

Then, applying a transformation to (17), we have

where , From (13), we then obtain

To acquire an approximation of , we compute the posterior mean .

Remark 2.

In (13), the objective function of recursive least squares estimation with L1-regularization is developed, which is integrated into the objective function of the Stein approximation. The new objective function contains more information and can capture more important information from the raw data. We can thus obtain a relatively good parameter probability distribution.

Lemma 1.

in (14) is a non-convex function.

Proof of Lemma 1.

The data-dependent term in (14) is studied. By (15) and (16), it can be transformed as

The minimisation issue is simply demonstrated to be convex in and dimensions. Define . is concave function. Furthermore, is an affine function of . When it is positive semi-definite. This means is a concave non-decreasing function of . We can see that is a concave function with respect to .
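The concavity argument can be checked numerically. The sketch below assumes that the term in question is the log-determinant of the noise covariance plus the dictionary-weighted prior covariance, as in standard sparse Bayesian learning; this form, and the names `logdet_term`, `gamma`, and `sigma2`, are assumptions because the expression itself is not recoverable from the text. It tests the midpoint inequality for concavity and monotonicity under non-negative increments on random hyperparameter vectors.

```python
import numpy as np

def logdet_term(gamma, Phi, sigma2):
    """log det(sigma2 * I + Phi diag(gamma) Phi^T) for non-negative gamma."""
    S = sigma2 * np.eye(Phi.shape[0]) + (Phi * gamma) @ Phi.T
    _, logdet = np.linalg.slogdet(S)
    return logdet

rng = np.random.default_rng(0)
Phi = rng.normal(size=(20, 6))
sigma2 = 0.1

concave_ok = True
monotone_ok = True
for _ in range(200):
    a = rng.uniform(0.0, 2.0, size=6)
    b = rng.uniform(0.0, 2.0, size=6)
    f_a = logdet_term(a, Phi, sigma2)
    f_b = logdet_term(b, Phi, sigma2)
    f_mid = logdet_term(0.5 * (a + b), Phi, sigma2)
    # Concavity: the value at the midpoint is at least the average of the endpoints.
    concave_ok = concave_ok and (f_mid >= 0.5 * (f_a + f_b) - 1e-9)
    # Non-decreasing: adding a non-negative increment never lowers the value.
    delta = rng.uniform(0.0, 1.0, size=6)
    monotone_ok = monotone_ok and (logdet_term(a + delta, Phi, sigma2) >= f_a - 1e-9)

print(concave_ok, monotone_ok)
```

A random check of this kind does not replace the proof, but it is a quick way to catch a sign or transposition error when implementing the objective.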

4.2.2. Modified Objective Function in Estimation

We use the modified objective function of with a penalty function. By analyzing the corresponding objective function of (14) in the space, the analogous objective function is subsequently shown to be non-convex as well.

Theorem 2.

Solving the optimisation problem below yields the estimated value for θ given restrictions.

where penalty function .

Proof of Theorem 2.

Using the data-dependent term in and in (14), a stringent upper boundary auxiliary function can be created on as

When we minimise over and obtain

From the derivations in (21), we can see that the posterior mean is the estimate of the parameter .

Lemma 2.

In Theorem 2, the penalty function promotes sparsity on the weights by being a non-decreasing concave function of θ.

Proof of Lemma 2.

It is obvious that in Lemma 1 is concave. Using the duality lemma (see Section 4.2 in [35]), is denoted as , where is defined as the concave conjugate of and . By (21), function can be re-written as

is re-expressed as

It is easy to see that when , reaches the minimum over . Substitute into

When is minimum, is much more sparse. From (26), is non-decreasing concave function of .

4.2.3. Parameter Estimation with Sparse Method

From (23), we can see that does not affect the estimation of parameters , so is redefined as

For a fixed , we notice that is jointly convex in and . In (27), we can obtain . When , is minimized for any . Substitute the into , can be obtained as follows:

Due to the concavity of , the objective function in Theorem 2 can be optimised using a re-weighted L1-minimisation in a similar way to that considered in (27).

In order to obtain more stable and accurate parameter , the re-estimated method is put forward (Algorithm 1). At k-th iteration, the modified weight is then supplied by:

where On the basis of the aforementioned, we can now describe how the parameters can be updated. To begin, we set the iteration count k to zero, and initialise

| Algorithm 1: Non-linear state-space identification algorithm with sparse Bayesian and Stein approach (NSSI-SBSA) |
| --- |
| Input: |
| 1: Generate time series data from the system of discrete-time dynamics characterized by (1) |
| 2: Choose the dictionary functions that will be used to build the dictionary matrix mentioned in Section 2; , stopping threshold |
| 3: for do |
| 4: Solve the minimisation problem with L1-regularization and optimization method on . |
| 5: Update parameter and in (28) and (29). |
| 6: |
| 7: Update parameter |
| 8: end for |
| 9: if then |
| 10: Break |
| 11: end if |
| Output: The sparse weight set of . |

We obtain . is considered again. For any fixed and , the tightest bound can be obtained by minimising over . is estimated, which equals the gradient of the function in Lemma 1. The estimation of is computed as

where , . The optimal can then be replaced by After computing the estimation of

we can compute , which gives

can be defined

Substitute and into (27). Certain weights are estimated at each iteration k until , where is stopping threshold. Algorithm 1 summarizes the above-mentioned procedure.
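The iterative structure of such a re-weighted L1 scheme can be sketched in a few lines. This is a simplified stand-in, not the paper's Algorithm 1: the weight update uses the generic rule 1 / (|theta| + eps) rather than the updates in (28) and (29), the inner problem is solved with a plain proximal-gradient (ISTA) loop instead of CVX, and the Stein term is omitted. All names and parameter values are hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    """Elementwise soft-thresholding, the proximal map of a weighted L1 norm."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def weighted_lasso_ista(Phi, y, weights, lam, n_iter=1000):
    """Minimise 0.5 * ||y - Phi theta||^2 + lam * sum(weights * |theta|) by ISTA."""
    L = np.linalg.norm(Phi, 2) ** 2          # Lipschitz constant of the gradient
    theta = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ theta - y)
        theta = soft_threshold(theta - grad / L, lam * weights / L)
    return theta

def reweighted_l1(Phi, y, lam=1.0, eps=1e-3, max_outer=10, tol=1e-4):
    """Outer loop: solve a weighted lasso, then re-weight from the current estimate."""
    weights = np.ones(Phi.shape[1])
    theta = np.zeros(Phi.shape[1])
    for _ in range(max_outer):
        theta_new = weighted_lasso_ista(Phi, y, weights, lam)
        change = np.linalg.norm(theta_new - theta)
        theta = theta_new
        if change <= tol * max(1.0, np.linalg.norm(theta)):
            break                             # stopping threshold reached
        weights = 1.0 / (np.abs(theta) + eps) # small entries get large penalties
    return theta

rng = np.random.default_rng(0)
M, N = 100, 20
Phi = rng.normal(size=(M, N))
theta_true = np.zeros(N)
theta_true[[2, 7, 15]] = [1.0, -0.7, 0.5]
y = Phi @ theta_true + 0.01 * rng.normal(size=M)

theta_hat = reweighted_l1(Phi, y)
support = np.flatnonzero(np.abs(theta_hat) > 1e-6)
print(support, np.round(theta_hat[support], 3))
```

Re-weighting penalises coefficients that are already small more heavily on the next pass, which drives them exactly to zero while reducing the shrinkage bias on the large coefficients.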

5. Numerical Example

All examples are run on a computer with an Intel Core i7-6500U CPU @ 2.50 GHz and 16 GB of RAM. The CVX package is used to solve convex programs on the MATLAB 2016 platform. We give three numerical examples: the Narendra-Li model [20], a NARX model [25], and kernel state-space models [44]. The utility and performance of Algorithm 1 are demonstrated on these three simulation cases, which involve well-studied and challenging non-linear systems. The root mean squared error (RMSE) criterion is used to demonstrate the performance of the suggested identification approach against noise perturbation. The ith estimate of parameter is denoted by at the Monte Carlo experiment. The RMSE at the experiment is defined as

where represents the true system parameter vector, and n is the trials. To validate the theoretical results, the identification of the structured state-space model in cases will be simulated in this part.
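A Monte Carlo RMSE of this kind can be computed as in the sketch below. The normalisation is an assumption: the squared Euclidean error of each trial is averaged over the trials before taking the square root, which is one common convention and may differ from the exact definition in the paper. The function name and the numbers are illustrative.

```python
import numpy as np

def rmse_over_trials(theta_estimates, theta_true):
    """RMSE of parameter estimates across Monte Carlo trials.

    theta_estimates : array of shape (n_trials, n_params)
    theta_true      : array of shape (n_params,)
    """
    theta_estimates = np.asarray(theta_estimates, dtype=float)
    theta_true = np.asarray(theta_true, dtype=float)
    # Squared Euclidean error of each trial, averaged over trials.
    sq_err = np.sum((theta_estimates - theta_true) ** 2, axis=1)
    return float(np.sqrt(np.mean(sq_err)))

# Two illustrative trials, each off by 0.1 in the first parameter.
estimates = [[1.1, 2.0], [0.9, 2.0]]
rmse = rmse_over_trials(estimates, [1.0, 2.0])
print(rmse)
```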

5.1. Example 1: Parameter Identification of the General Narendra-Li Model

Consider the state space representation of a non-linear system:

where the state variable . is Gaussian white noise. To generate the estimation data, the system is excited with a uniformly distributed random input signal with . The validation dataset is generated with the input

Let Because there are two state variables, the dictionary matrix can be built as follows:

Then, the state set can be defined as

Using the dictionary matrix in (34), the true value of parameter for the model in (32), (33) should be as follows:

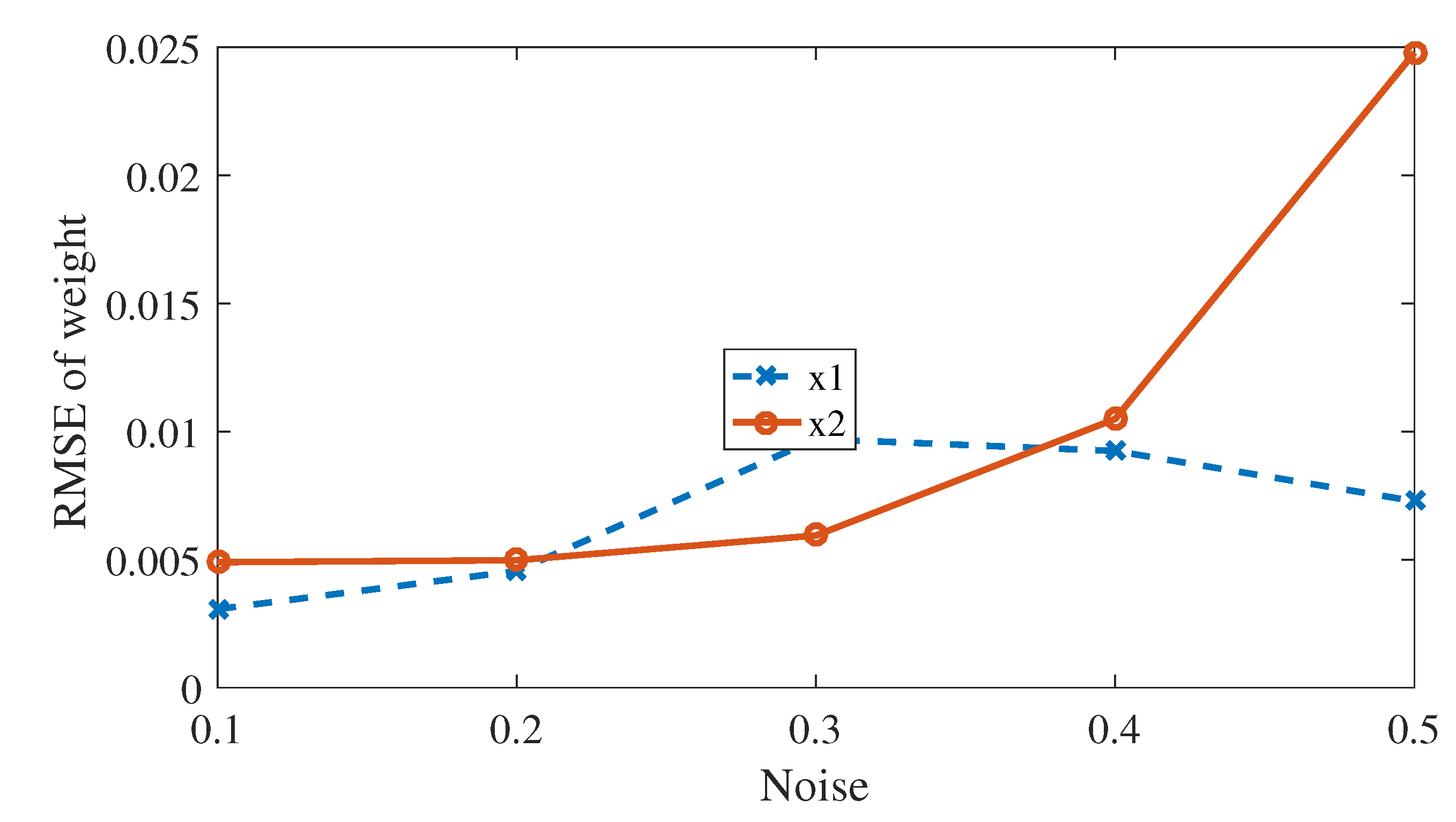

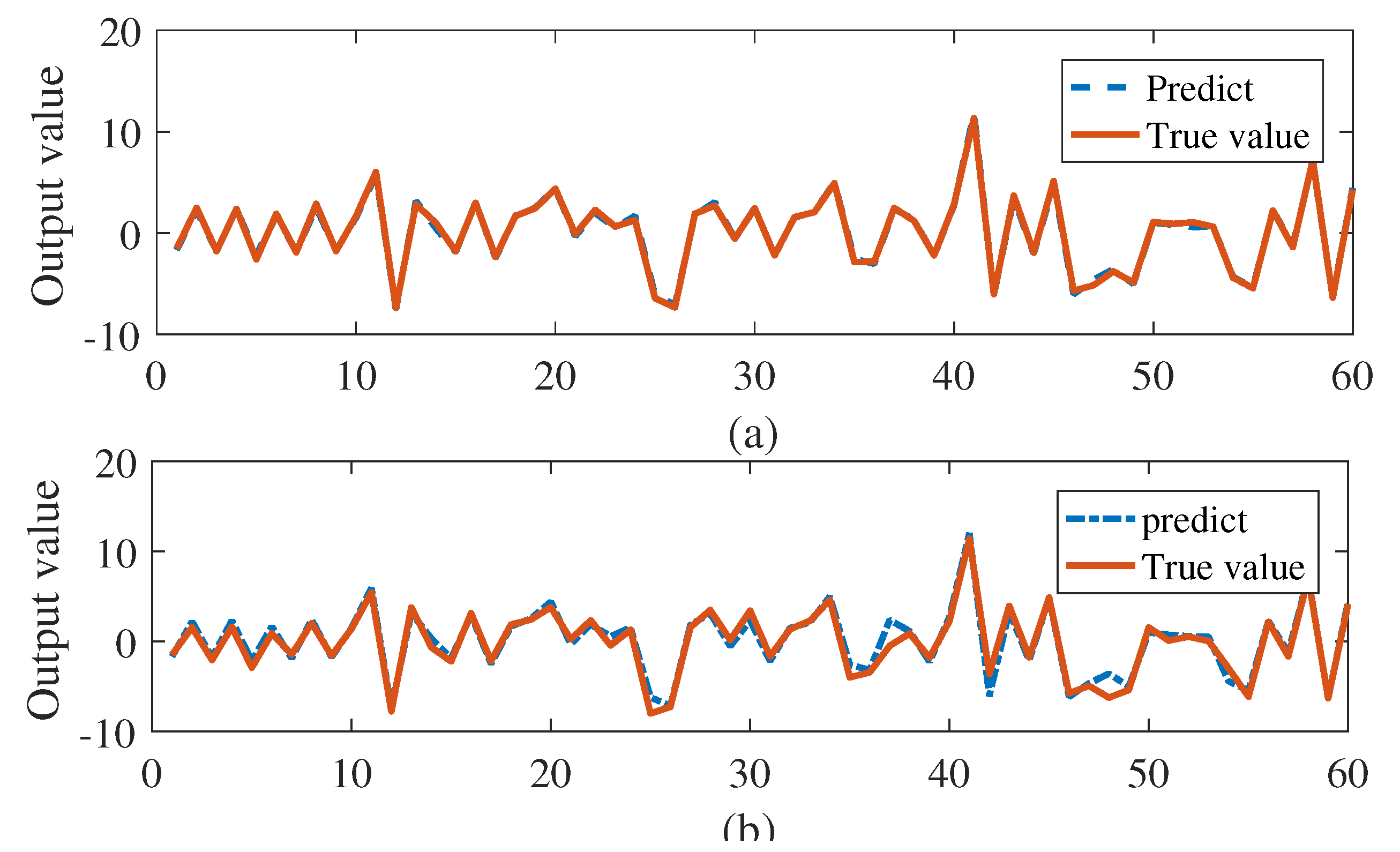

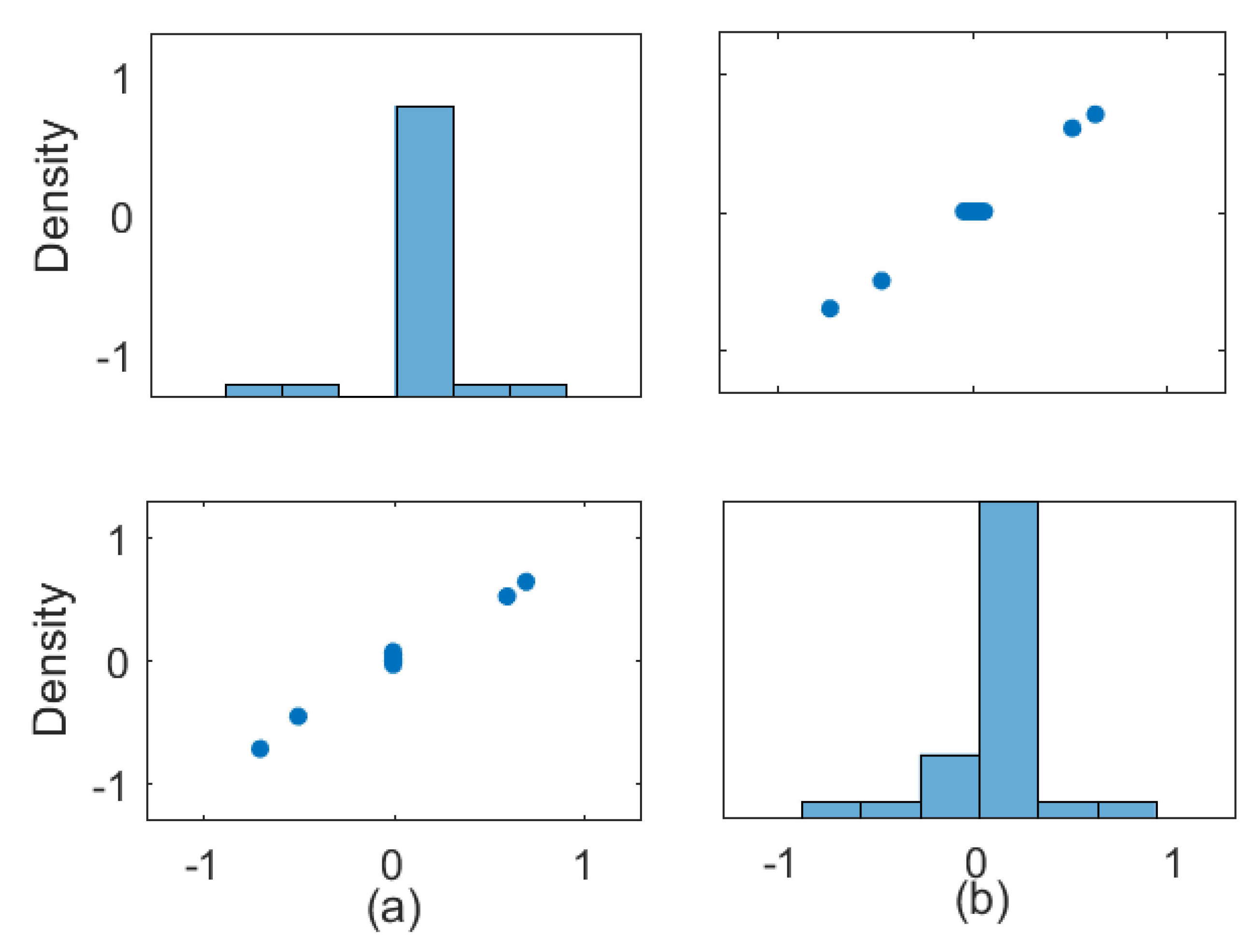

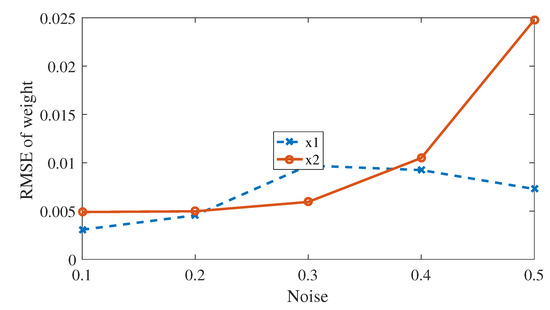

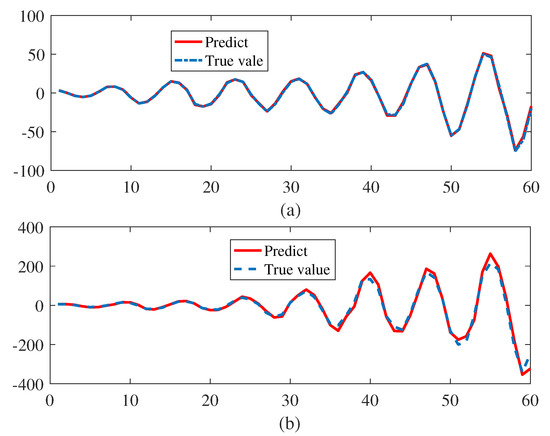

The parameters in brackets are the true values. In our study, we use samples for learning and add white Gaussian measurement noise of to the training data. Algorithm 1 is used to identify the parameters in (32) and (33). The coefficients are learned from prior data in (35). The RMSE of is computed in the simulation, the result of which is . When the noise levels are 0.2, 0.3, 0.4, and 0.5, the RMSE of changes little, as shown in Figure 1. Although [20] uses 2000 data points, our method performs substantially better than [20], as shown in Table 1. Table 1 also compares our method with previous results reported in the literature [45,46,47]; our method performs best. In this experiment, we also examine how the method performs for various . The last 60 samples in the generated data sequence are selected for testing, and the predicted result is compared with the true value. When Algorithm 1 is executed 8 times, the average RMSE of the output is 0.06 and does not increase quickly, as shown in Figure 2.

Figure 1.

RMSE of state and .

Table 1.

Accuracy comparison of different methods ().

Figure 2.

Output of the state mode for 60 testing data in example 1: (a) and (b) .

In general, the proposed model is able to describe the system behavior well.

5.2. Example 2: Application to a NARX Model

In this example, we analyze the following polynomial model of a single-input single-output (SISO) non-linear autoregressive system with exogenous input (NARX) [25].

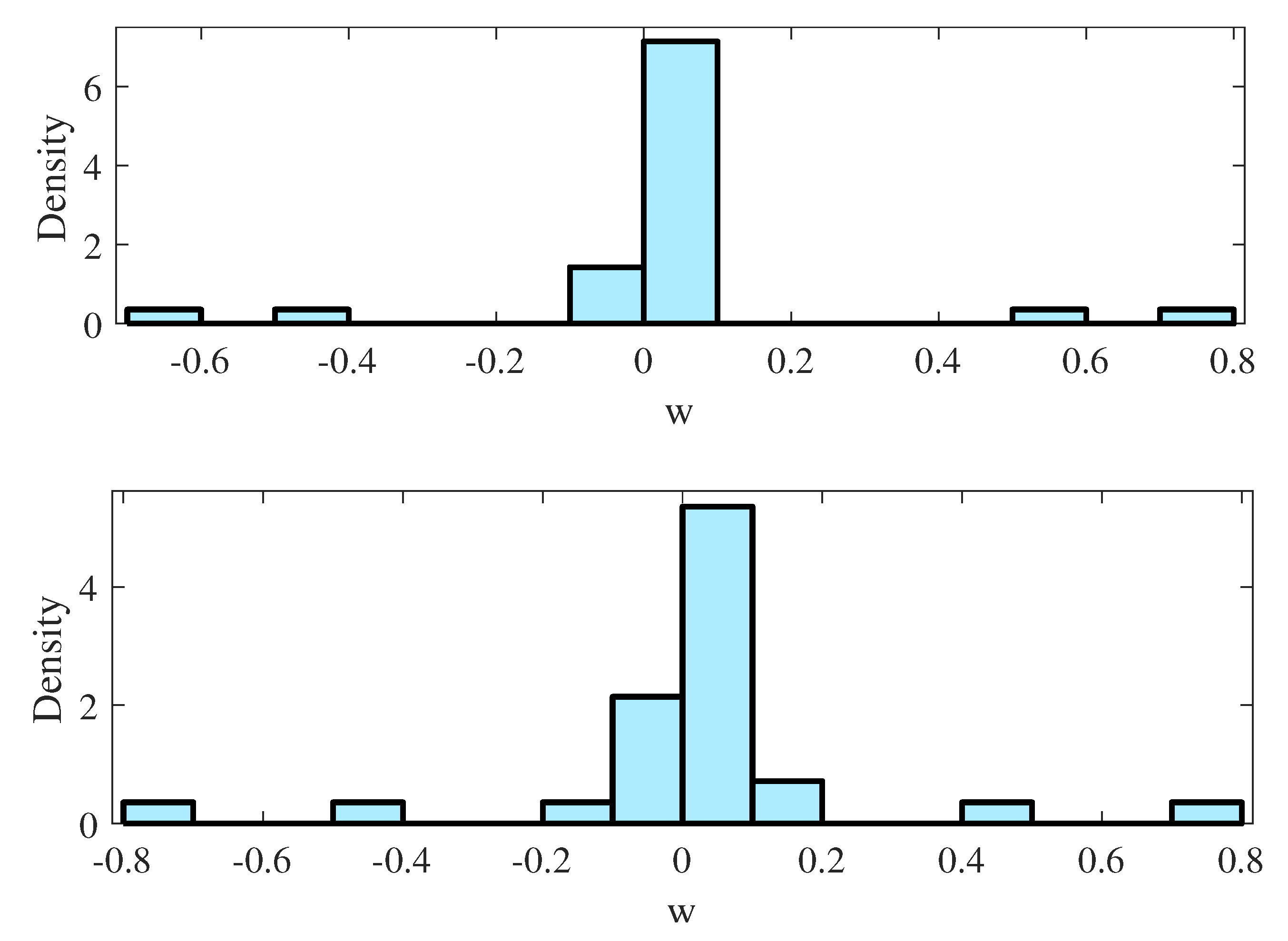

with . In expanded form, we may write (36) as:

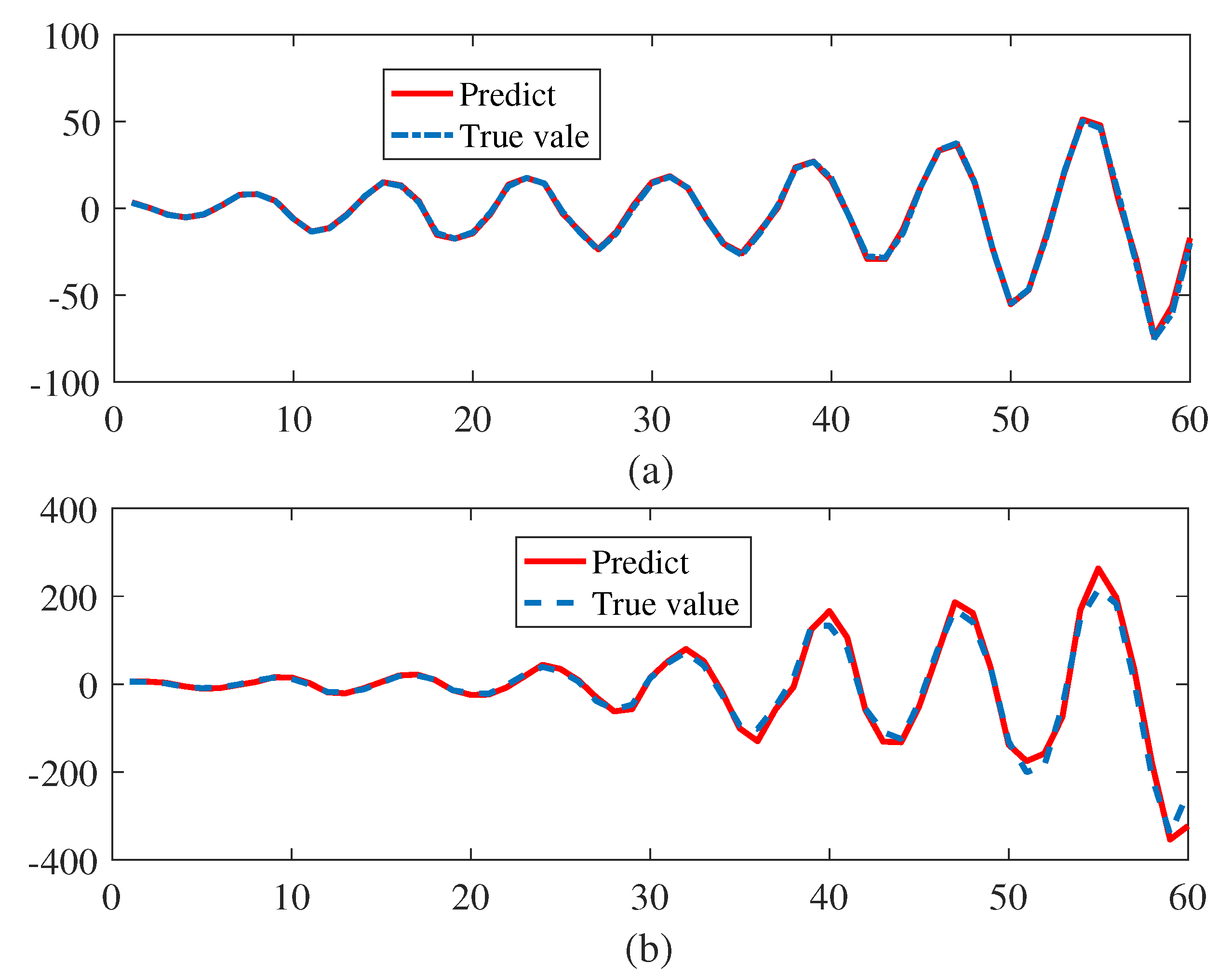

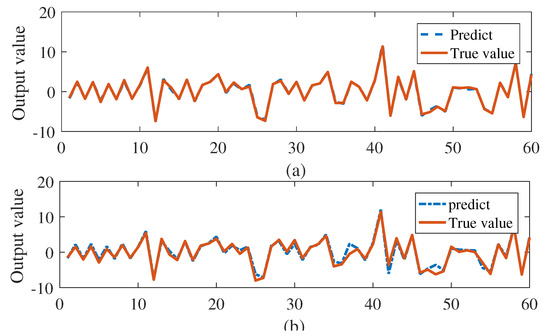

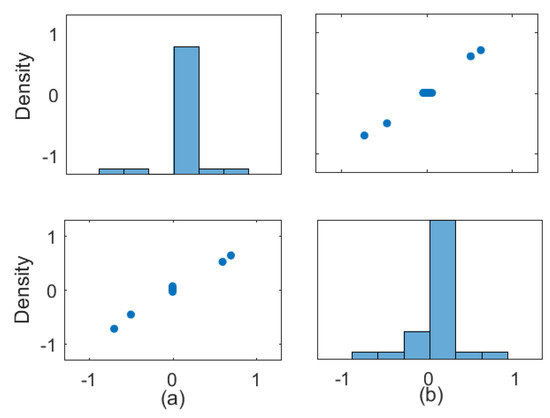

Model (36) is the general form of (37). and are the degrees of the output and input; and are the given memory orders of the output and input; is the weight vector; and is the vector of functions. Taking the NARX model (36) as an example, we set , , , . This yields and, thus, . Since , only 4 of the 28 associated weights are non-zero. In our study, we use k = 1000 samples for learning with white Gaussian noise. The last 60 samples in the generated data sequence are selected for testing, and the predicted result is compared with the true value. The estimated parameter w agrees with the true value, as shown in Figure 3. The prediction performance of Algorithm 1 is shown in Figure 4. From Figure 4, the predicted and exact trajectories match well for different . When Algorithm 1 is executed 8 times, the average RMSE values of the output are 0.021 and 0.074, which are tolerable in the application.

Figure 3.

The distribution of w: (top) true w; (bottom) estimated w.

Figure 4.

Output of the state model for 60 testing data in example 2: (a) and (b) .

5.3. Example 3: Kernel State-Space Models (KSSM) for Autoregressive Modeling

A kernel state-space model (KSSM) is an autoregressive model that satisfies the -order difference equation. As seen below, the model may be described as a first-order multivariate process.

where , , and .

The hidden state of an SSM can then be viewed as the process , producing an SSM formulation of a complex autoregressive model with noisy . By using non-linear autoregressive modeling with a fixed number of delayed samples, the model can be utilized to predict time series. In addition, if the state-transition function is defined using kernels (39), we derive the suggested KSSM suited for autoregressive time series.

where is the observed process, is the observation function, is observation noise, and is the weight. Periodic time series is widely used in physics, engineering and biology. We take Fourier kernel function in the KSSM. Consider 5 candidate kernel functions for : , , , and

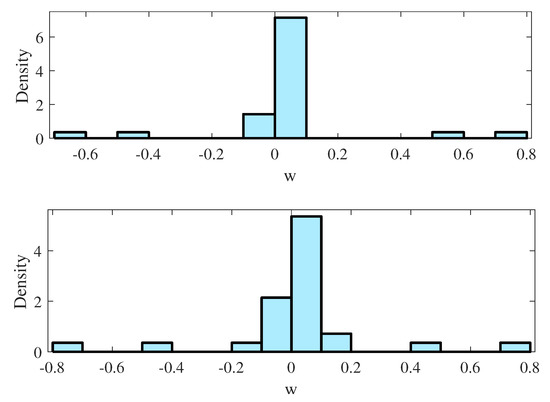

Algorithm 1 is applied to identify the parameter w in (39). The RMSE of w is 0.16, which is a satisfactory result. The estimation data in the experiment have 500 sample points, and Figure 5 shows the simulated outputs of the two processes evaluated on the validation set. In Figure 5, we compare the true and estimated values of w using the probability distribution and dispersoid distribution. It can be seen that the sparsity-promoting effect of the proposed algorithm is evident.

Figure 5.

Comparison of the distribution of w with sparsity: (a) sparse value and (b) true value.

6. Conclusions

The parameter estimation of non-linear discrete-time state-space systems with noisy state data is the subject of this work. For parameter estimation and prediction, a novel sparse Bayesian convex optimisation method (NSSI-SBSA) is presented, which considers the approximation method and the parameter prior and posterior. The fundamental identification problem is divided into two parts. First, the improved Stein approach is used to create a new optimisation objective function. Second, a reweighted L1-regularized least squares solver is created, with the regularization value chosen from the optimization point. Compared with previous studies, the new objective function is more information-rich and can more easily extract critical information from the raw data. In the three examples, the NSSI-SBSA algorithm usually captures more information about the dependence of the data indicators than the methods discussed in the introduction.

Author Contributions

Methodology, L.Z. and J.L.; Formal analysis, W.Z.; Writing—review and editing, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation (NNSF) of China under Grant (61703149) and the Natural Science Foundation of Hebei Province of China (F2019111009).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ljung, L. Perspectives on system identification. Annu. Control. 2010, 34, 1–12. [Google Scholar] [CrossRef]

- Luo, G.; Yang, Z.; Zhan, C.; Zhang, Q. Identification of nonlinear dynamical system based on raised-cosine radial basis function neural networks. Neural Process. Lett. 2021, 53, 355–374. [Google Scholar] [CrossRef]

- Yakoub, Z.; Naifar, O.; Ivanov, D. Unbiased Identification of Fractional Order System with Unknown Time-Delay Using Bias Compensation Method. Mathematics 2022, 10, 3028. [Google Scholar] [CrossRef]

- Yakoub, Z.; Amairi, M.; Aoun, M.; Chetoui, M. On the fractional closed-loop linear parameter varying system identification under noise corrupted scheduling and output signal measurements. Trans. Inst. Meas. Control. 2019, 41, 2909–2921. [Google Scholar] [CrossRef]

- Yakoub, Z.; Aoun, M.; Amairi, M.; Chetoui, M. Identification of continuous-time fractional models from noisy input and output signals. In Fractional Order Systems—Control Theory and Applications; Springer: Cham, Switzerland, 2022; pp. 181–216. [Google Scholar]

- Kumpati, S.N.; Kannan, P. Identification and control of dynamical systems using neural networks. IEEE Trans. Neural Netw. 1990, 1, 4–27. [Google Scholar]

- Leontaritis, I.J.; Billings, S.A. Input-output parametric models for non-linear systems part II: Stochastic non-linear systems. Int. J. Control. 1985, 41, 329–344. [Google Scholar] [CrossRef]

- Rangan, S.; Wolodkin, G.; Poolla, K. New results for Hammerstein system identification. Proceedings of 1995 34th IEEE Conference on Decision and Control, New Orleans, LA, USA, 13–15 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 1, pp. 697–702. [Google Scholar]

- Billings, S.A. Nonlinear System Identification: NARMAX Methods in the Time, Frequency, and Spatio-Temporal Domains; John Wiley and Sons: Hoboken, NJ, USA, 2013. [Google Scholar]

- Haber, R.; Unbehauen, H. Structure identification of nonlinear dynamic systems—A survey on input-output approaches. Automatica 1990, 26, 651–677. [Google Scholar] [CrossRef]

- Barahona, M.; Poon, C.S. Detection of nonlinear dynamics in short, noisy time series. Nature 1996, 381, 215–217. [Google Scholar] [CrossRef]

- Frigola, R.; Lindsten, F.; Schon, T.B.; Rasmussen, C.E. Bayesian inference and learning in Gaussian process state-space models with particle MCMC. Adv. Neural Inf. Process. Syst. 2013, 26. [Google Scholar]

- Frigola, R.; Chen, Y.; Rasmussen, C.E. Variational Gaussian process state-space models. Adv. Neural Inf. Process. Syst. 2014, 27. [Google Scholar]

- Karl, M.; Soelch, M.; Bayer, J.; Van der Smagt, P. Deep variational bayes filters: Unsupervised learning of state space models from raw data. arXiv 2016, arXiv:1605.06432. [Google Scholar]

- Raiko, T.; Tornio, M. Variational Bayesian learning of nonlinear hidden state-space models for model predictive control. Neurocomputing 2009, 72, 3704–3712. [Google Scholar] [CrossRef]

- Ljung, L. Theory for the User. In System Identification; Prentice Hall: Hoboken, NJ, USA, 1987. [Google Scholar]

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Ser. B 1977, 39, 1–22. [Google Scholar]

- Duncan, S.; Gyongy, M. Using the EM algorithm to estimate the disease parameters for smallpox in 17th century London. In Proceedings of the 2006 IEEE International Symposium on Intelligent Control, Munich, Germany, 4–6 October 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 3312–3317. [Google Scholar]

- Solin, A.; Sarkka, S. Hilbert space methods for reduced-rank Gaussian process regression. Stat. Comput. 2020, 30, 419–446.

- Svensson, A.; Schon, T.B. A flexible state–space model for learning nonlinear dynamical systems. Automatica 2017, 80, 189–199.

- Frigola, R. Bayesian Time Series Learning with Gaussian Processes; University of Cambridge: Cambridge, UK, 2015.

- Wilson, A.G.; Hu, Z.; Salakhutdinov, R.R.; Xing, E.P. Stochastic variational deep kernel learning. Adv. Neural Inf. Process. Syst. 2016, 29, 2586–2594.

- Cerone, V.; Piga, D.; Regruto, D. Enforcing stability constraints in set-membership identification of linear dynamic systems. Automatica 2011, 47, 2488–2494.

- Zavlanos, M.M.; Julius, A.A.; Boyd, S.P.; Pappas, G.J. Inferring stable genetic networks from steady-state data. Automatica 2011, 47, 1113–1122.

- Pan, W.; Yuan, Y.; Goncalves, J.; Stan, G.B. A sparse Bayesian approach to the identification of nonlinear state space systems. IEEE Trans. Autom. Control. 2015, 61, 182–187.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer: New York, NY, USA, 2009.

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning; Springer: New York, NY, USA, 2013.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candes, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Stein, C. A bound for the error in the normal approximation to the distribution of a sum of dependent random variables. In Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, Volume 2: Probability Theory, University of California, Berkeley, CA, USA, 21 June–18 July 1970, 9–12 April, 16–21 June and 19–22 July 1971; The Regents of the University of California: Berkeley, CA, USA, 1972.

- Brunton, S.L.; Tu, J.H.; Bright, I.; Kutz, J.N. Compressive sensing and low-rank libraries for classification of bifurcation regimes in nonlinear dynamical systems. SIAM J. Appl. Dyn. Syst. 2014, 13, 1716–1732.

- Bai, Z.; Wimalajeewa, T.; Berger, Z.; Wang, G.; Glauser, M.; Varshney, P.K. Low-dimensional approach for reconstruction of airfoil data via compressive sensing. AIAA J. 2015, 53, 920–933.

- Arnaldo, I.; O’Reilly, U.M.; Veeramachaneni, K. Building predictive models via feature synthesis. In Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation, Madrid, Spain, 11–15 July 2015; pp. 983–990.

- Berntorp, K. Online Bayesian inference and learning of Gaussian-process state–space models. Automatica 2021, 129, 109613.

- Imani, M.; Ghoreishi, S.F. Two-stage Bayesian optimization for scalable inference in state-space models. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–12.

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least angle regression. Ann. Stat. 2004, 32, 407–499.

- Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 2016, 113, 3932–3937.

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Rev. 2001, 43, 129–159.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Ma, Z.; Lai, Y.; Kleijn, W.B.; Song, Y.Z.; Wang, L.; Guo, J. Variational Bayesian learning for Dirichlet process mixture of inverted Dirichlet distributions in non-Gaussian image feature modeling. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 449–463.

- Tipping, M.E. Sparse Bayesian learning and the relevance vector machine. J. Mach. Learn. Res. 2001, 1, 211–244.

- Tobar, F.; Djuric, P.M.; Mandic, D.P. Unsupervised state-space modeling using reproducing kernels. IEEE Trans. Signal Process. 2015, 63, 5210–5221.

- Roll, J.; Nazin, A.; Ljung, L. Nonlinear system identification via direct weight optimization. Automatica 2005, 41, 475–490.

- Stenman, A. Model on Demand: Algorithms, Analysis and Applications; Department of Electrical Engineering, Linköping University: Linköping, Sweden, 1999.

- Xu, J.; Huang, X.; Wang, S. Adaptive hinging hyperplanes and its applications in dynamic system identification. Automatica 2009, 45, 2325–2332.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).