A Weld Surface Defect Recognition Method Based on Improved MobileNetV2 Algorithm

Abstract

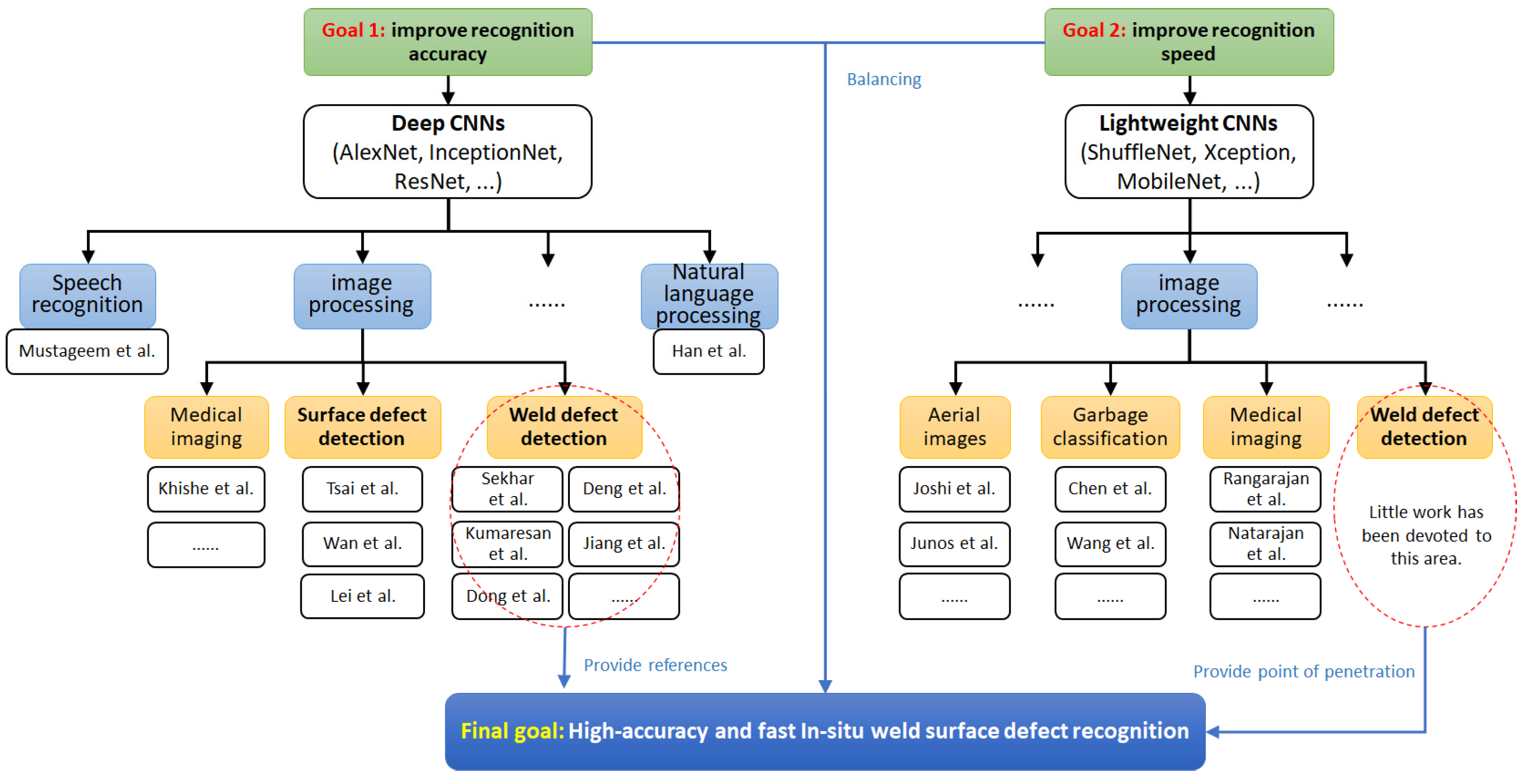

1. Introduction

2. Literature Review

2.1. Applications of Deep CNNs

- (1) Surface defect detection

- (2) Weld defect detection

2.2. Applications of Lightweight CNNs

3. Weld Surface Defect Recognition Model

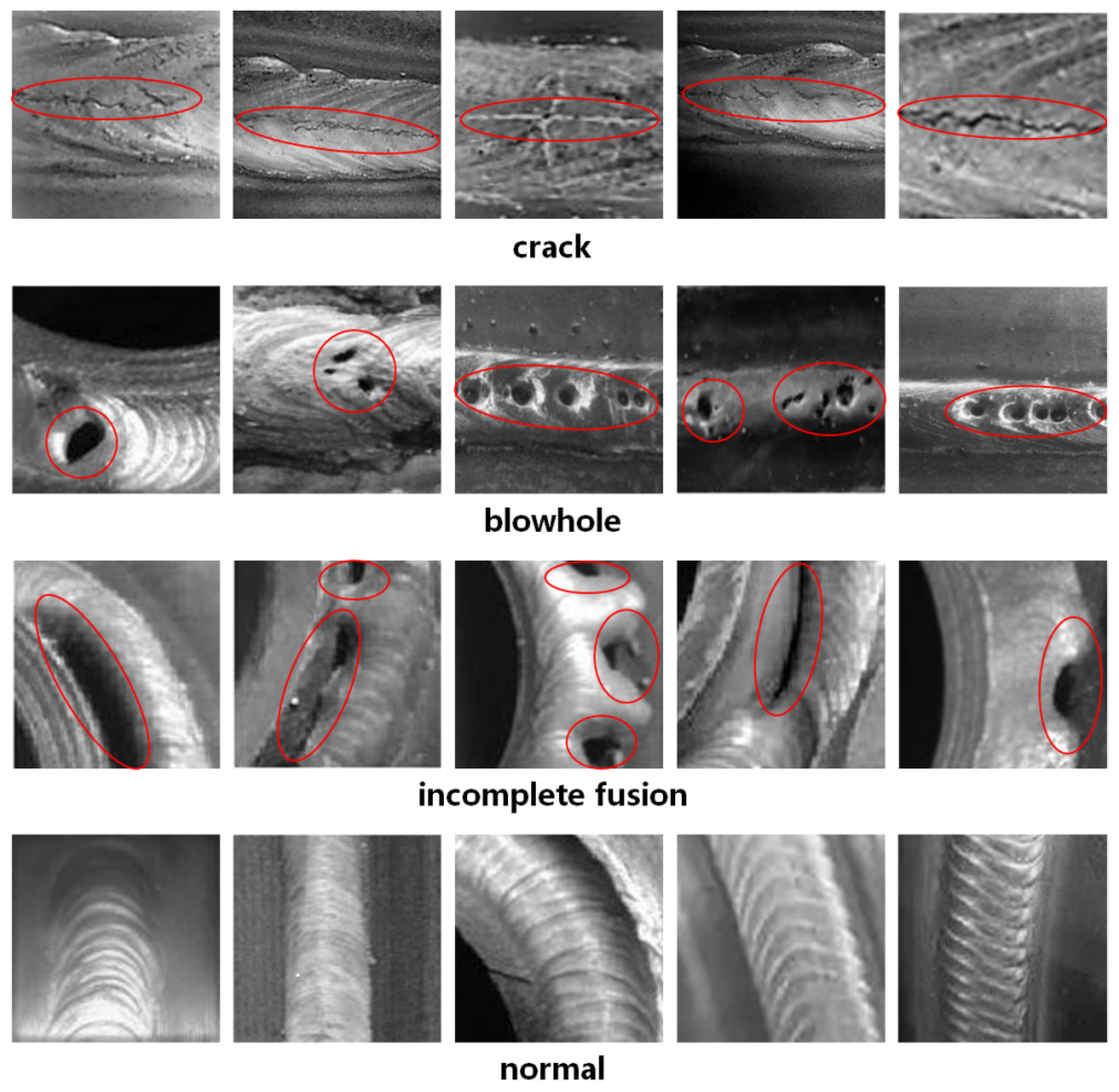

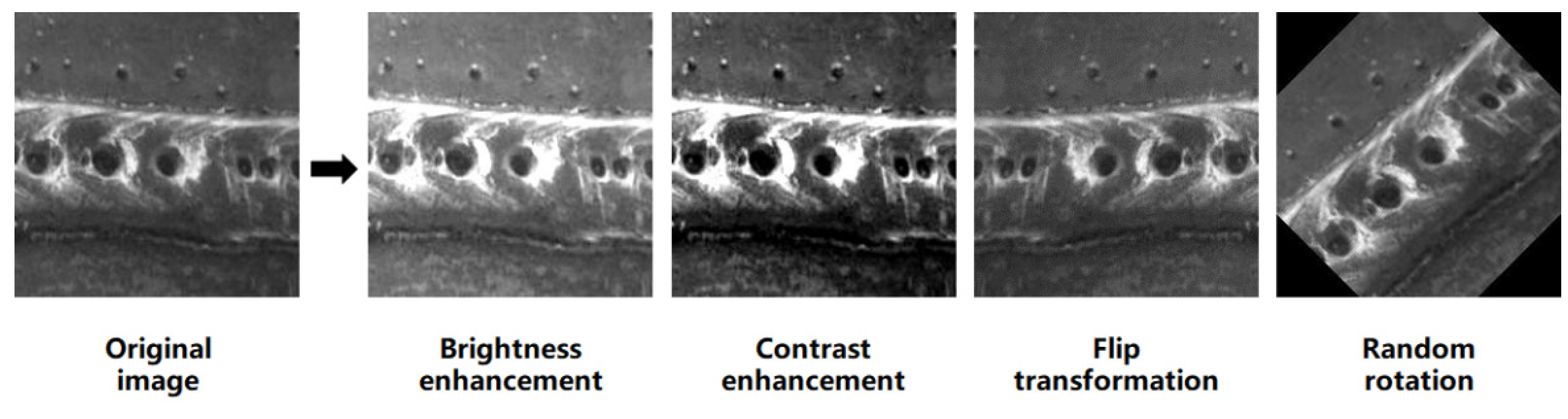

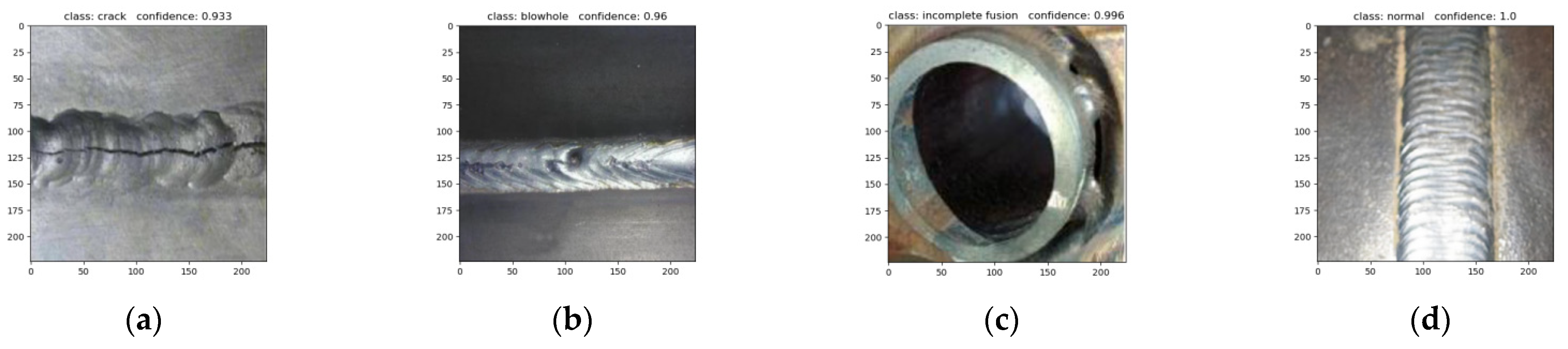

3.1. Weld Surface Defect Dataset

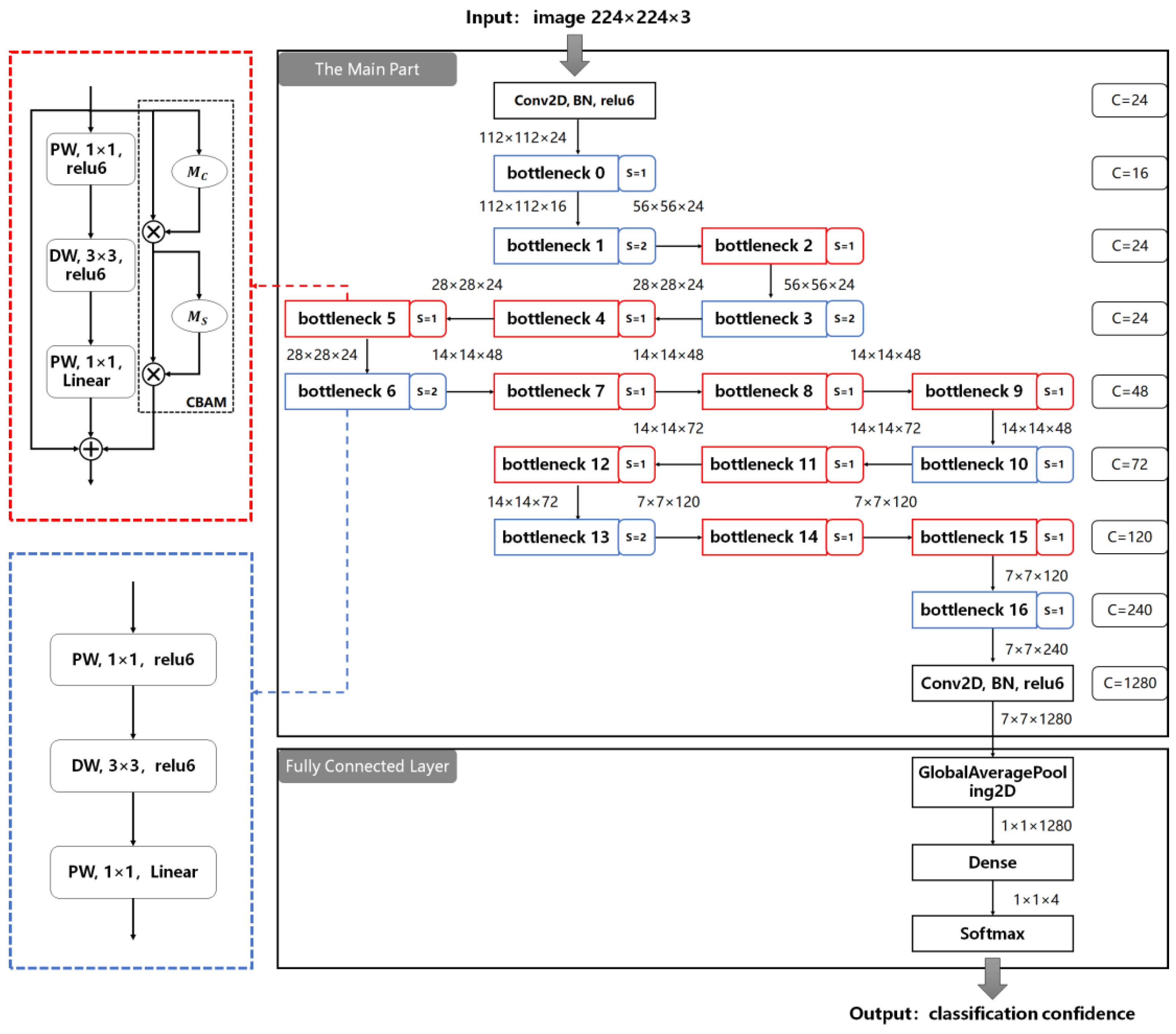

3.2. Algorithm Design

3.2.1. Lightweight MobileNetV2

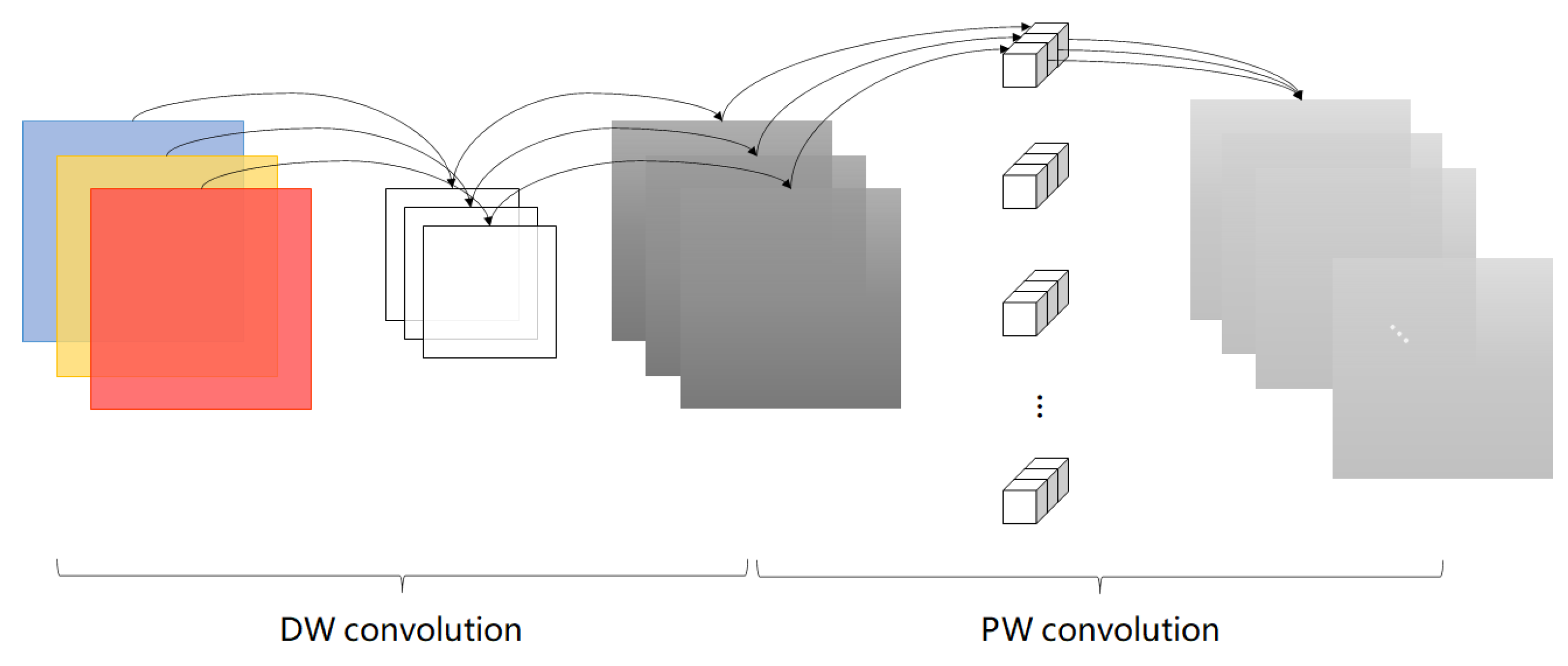

- (1) Depthwise separable convolution is the core mechanism that makes MobileNetV2 lightweight.

- (2) The inverted residual structure effectively alleviates the vanishing gradient problem.
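
To make the saving from depthwise separable convolution concrete, the sketch below compares the weight count of a standard k×k convolution with that of a depthwise k×k convolution followed by a 1×1 pointwise convolution. The function names and the example channel sizes are illustrative assumptions, not values taken from the paper; biases and batch-normalization parameters are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


def reduction_ratio(c_in: int, c_out: int, k: int = 3) -> float:
    """Separable cost divided by standard cost; equals 1/c_out + 1/k**2."""
    return depthwise_separable_params(c_in, c_out, k) / standard_conv_params(c_in, c_out, k)


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    c_in, c_out = 32, 64
    print(standard_conv_params(c_in, c_out))        # 18432
    print(depthwise_separable_params(c_in, c_out))  # 2336
    print(round(reduction_ratio(c_in, c_out), 4))   # 0.1267
```

For a 3×3 kernel the ratio approaches 1/9 as the number of output channels grows, which is where most of the reduction comes from.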

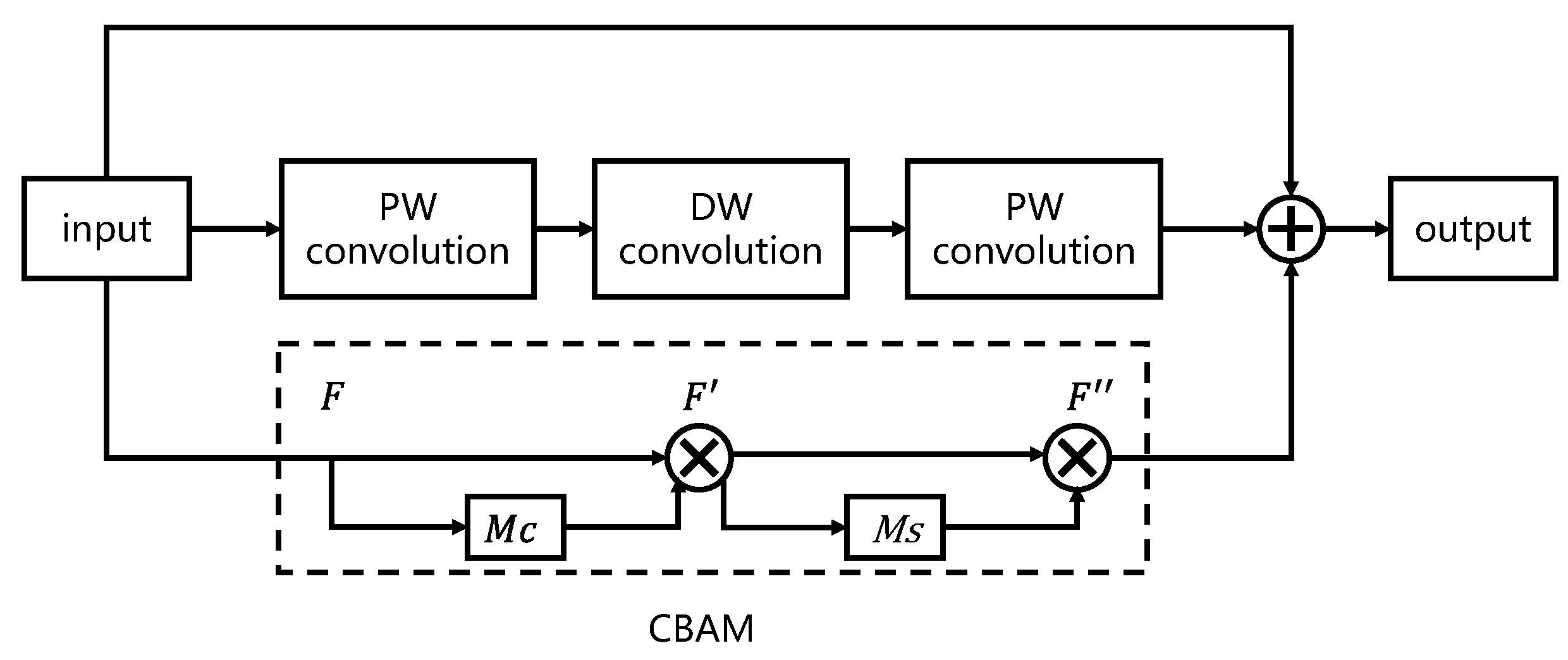

3.2.2. Improved MobileNetV2

- (1) Embed the Convolutional Block Attention Module

- (2) Reduce the width factor α
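
As a rough illustration of what reducing the width factor α does, the sketch below scales the per-stage output channels of the original MobileNetV2 and rounds each result to a multiple of 8, a rounding rule commonly used in reference implementations. Whether the authors used the same rounding, and which α they selected, is not stated here; `make_divisible`, `BASE_CHANNELS`, and `scaled_channels` are names introduced for this example only.

```python
from typing import List


def make_divisible(value: float, divisor: int = 8) -> int:
    """Round a channel count to a nearby multiple of `divisor`,
    without dropping more than 10% below the requested value."""
    rounded = max(divisor, int(value + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * value:
        rounded += divisor
    return rounded


# Output channels of the first convolution and the seven bottleneck stages
# in the original MobileNetV2 at alpha = 1.0.
BASE_CHANNELS = [32, 16, 24, 32, 64, 96, 160, 320]


def scaled_channels(alpha: float) -> List[int]:
    """Channel widths after applying the width factor alpha."""
    return [make_divisible(c * alpha) for c in BASE_CHANNELS]


if __name__ == "__main__":
    for alpha in (1.0, 0.75, 0.5):
        print(alpha, scaled_channels(alpha))
```

Because the weights of a pointwise convolution scale with the product of input and output channels, shrinking every layer by α reduces parameters and multiply-accumulate operations by roughly α² in those layers.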

4. Experiment and Results

4.1. Experiment Environment

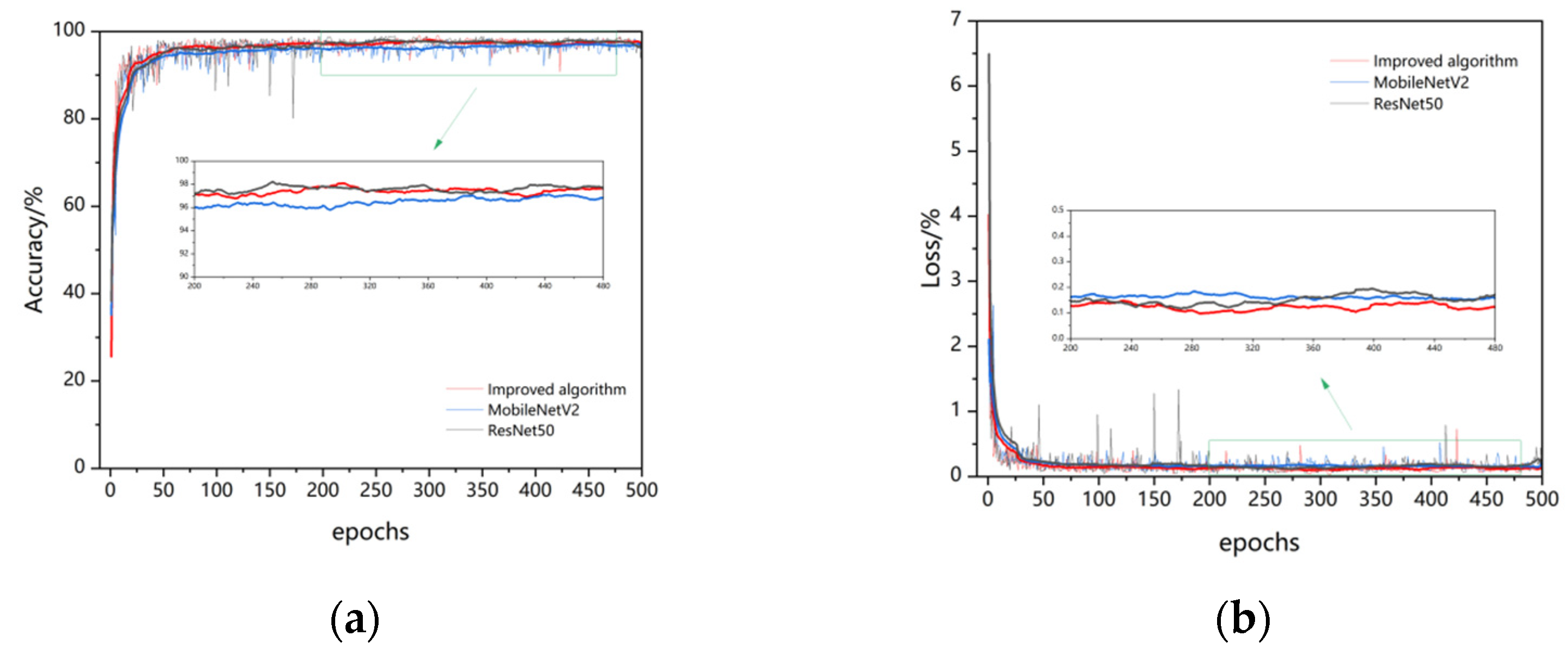

4.2. Algorithm Comparison and Analysis

4.2.1. Comparison among Algorithms on the Self-Built Dataset

4.2.2. Comparison among Algorithms on the GDX-ray Dataset

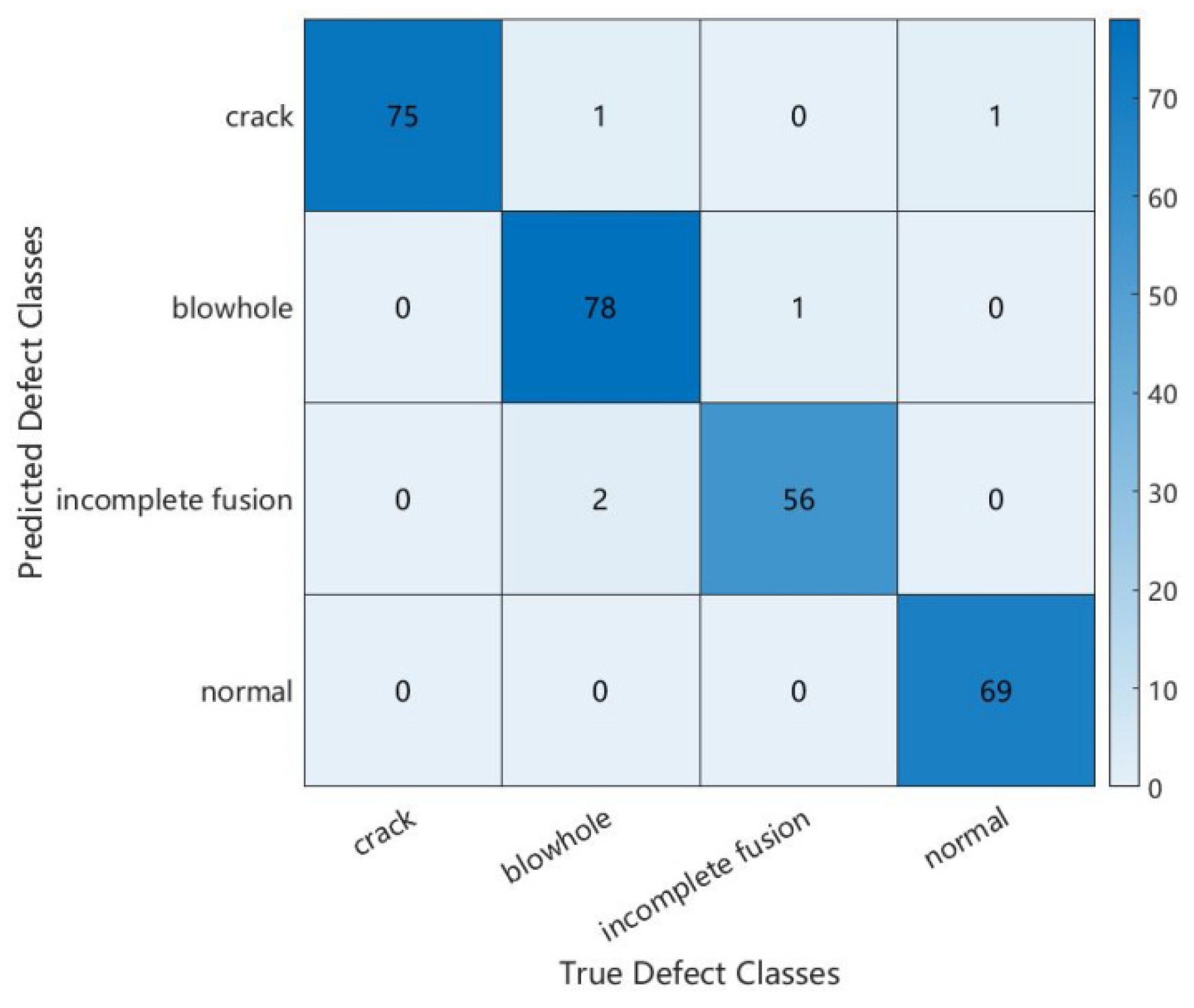

4.3. Model Testing

- (1) Model Performance Evaluation Metrics

- (2) Recognition accuracy test and defect prediction
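
The per-class precision, recall, and specificity reported for the model can be derived from a multi-class confusion matrix in a one-vs-rest fashion. The sketch below is a minimal illustration under that assumption; the function name and the small example matrix are hypothetical and are not the paper's data.

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]]) -> List[Dict[str, float]]:
    """Compute one-vs-rest metrics per class.

    cm[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp   # predicted c, true class differs
        tn = total - tp - fn - fp
        results.append({
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "specificity": tn / (tn + fp) if tn + fp else 0.0,
        })
    return results


if __name__ == "__main__":
    # Made-up 3-class confusion matrix, for illustration only.
    example = [
        [8, 2, 0],
        [1, 9, 0],
        [0, 0, 10],
    ]
    for index, metrics in enumerate(per_class_metrics(example)):
        print(index, metrics)
```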

5. Discussion

- (1) Advantages

- (2) Limitations

- (3) Extension

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sample Class | Before Enhancement | After Enhancement |
|---|---|---|
| Crack | 198 | 753 |
| Blowhole | 186 | 810 |
| Incomplete fusion | 26 | 576 |
| Normal | 200 | 706 |
| Total | 610 | 2845 |
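
The effect of data enhancement on class balance can be checked with simple arithmetic on the counts in the table above. The sketch below recomputes the totals and each class's share of the dataset before and after enhancement; the helper names are introduced here for illustration only.

```python
from typing import Dict

# Sample counts copied from the dataset table.
BEFORE = {"Crack": 198, "Blowhole": 186, "Incomplete fusion": 26, "Normal": 200}
AFTER = {"Crack": 753, "Blowhole": 810, "Incomplete fusion": 576, "Normal": 706}


def class_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Fraction of the dataset that each class represents."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts: Dict[str, int]) -> float:
    """Largest class size divided by smallest class size."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    for label, counts in (("before", BEFORE), ("after", AFTER)):
        shares = class_shares(counts)
        print(label, sum(counts.values()),
              {name: round(100 * s, 1) for name, s in shares.items()},
              round(imbalance_ratio(counts), 2))
```

On these counts, the share of incomplete-fusion samples rises from about 4.3% to about 20.2%, and the largest-to-smallest class ratio falls from about 7.7 to about 1.4.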

| Width Factor | MACs (M) | Parameters (M) | Top-1 Accuracy | Top-5 Accuracy |
|---|---|---|---|---|
| 1 | 300 | 3.47 | 71.8% | 91.0% |
| 0.75 | 209 | 2.61 | 69.8% | 89.6% |
| 0.5 | 97 | 1.95 | 65.4% | 86.4% |

| Model | Recognition Accuracy (%) | | | E | Parameters (M) |
|---|---|---|---|---|---|
| Improved model | 99.08 | 96.45 | 97.16 | 25 | 1.40 |
| MobileNetV2 | 98.53 | 95.30 | 96.10 | 36 | 2.26 |
| ResNet50 | 98.90 | 96.31 | 97.19 | 47 | 23.57 |

| Defect Class | Precision (%) | Recall (%) | Specificity (%) |
|---|---|---|---|
| Crack | 97.40 | 100.00 | 99.04 |
| Blowhole | 98.73 | 96.30 | 99.50 |
| Incomplete fusion | 96.55 | 98.25 | 99.12 |
| Normal | 100.00 | 98.57 | 100.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, K.; Niu, Z.; Hui, J.; Zhou, X.; Chan, F.T.S. A Weld Surface Defect Recognition Method Based on Improved MobileNetV2 Algorithm. Mathematics 2022, 10, 3678. https://doi.org/10.3390/math10193678
