This section starts with an introduction to the traditional EDMD formulation to identify nonlinear models of dynamical systems. The procedure is exemplified by the Duffing equation, a benchmark problem in the literature for testing the reliability of the algorithm.

2.1. The Basic EDMD Formulation

Consider as an example the unforced Duffing oscillator, a nonlinear spring whose behavior depends on its parameterization. The differential equations that govern this system are

$$\dot{x}_1 = x_2, \tag{2}$$

$$\dot{x}_2 = -\delta x_2 - \alpha x_1 - \beta x_1^3, \tag{3}$$

where state $x_1$ is the displacement, state $x_2$ is the velocity, $\alpha$ is the stiffness of the spring, which is related to the linear force of the spring according to Hooke’s law, $\delta$ is the amount of damping in the system, and $\beta$ is the proportion of nonlinearity present in the stiffness of the spring.

Figure 1 shows the phase plane of the system for three different sets of parameters and six random initial conditions (i.e., six initial conditions are generated randomly within a fixed range, starting from the known seed rng(1)). This choice of initial conditions produces an appropriate set of trajectories for calculating the approximation and testing its accuracy. The system of Equations (2) and (3) is integrated with a Matlab ODE solver, e.g., ode23s, and the results are collected at a constant sample period $\Delta t = 0.1$ s for a total of 20 s. The result of this numerical integration is a set of six trajectories of two state variables with 201 points per variable. Each of these trajectories is an element of a structure array in Matlab with the fields “Time” and “SV”. A structure array is chosen instead of a tensor because it allows trajectories of different lengths, e.g., experimental data of different lengths, a feature that becomes important in systems where redundant data near the asymptotically stable attractors has a negative impact on the approximation.
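A minimal sketch of this data-generation step is given below. The parameter values, the initial-condition range [-2, 2], and the variable name system_data are assumptions chosen for illustration; none of them is taken from the text.

```matlab
% Sketch: simulate the unforced Duffing oscillator from six random initial
% conditions and store each trajectory in a structure array.
% ASSUMPTIONS (not from the text): parameter values and the range [-2, 2].
alpha = 1; beta = 1; delta = 0.5;       % hypothetical stiffness, nonlinearity, damping
duffing = @(t, x) [x(2); -delta*x(2) - alpha*x(1) - beta*x(1)^3];

rng(1);                                 % known seed, as in the text
nTraj = 6;
x0 = -2 + 4*rand(nTraj, 2);             % hypothetical range [-2, 2] for both states
tspan = 0:0.1:20;                       % constant sample period of 0.1 s for 20 s

system_data = struct('Time', cell(1, nTraj), 'SV', cell(1, nTraj));
for i = 1:nTraj
    [t, sv] = ode23s(duffing, tspan, x0(i, :).');
    system_data(i).Time = t;            % 201-by-1 vector of sample times
    system_data(i).SV = sv;             % 201-by-2 matrix: [displacement, velocity]
end
```

Because tspan lists every output time explicitly, the solver reports the solution at exactly those 201 instants regardless of its internal step size.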

As the EDMD is a data-driven algorithm, certain trajectories serve as a training set while others serve as a testing set. The amount of data necessary to obtain an accurate approximation depends on the system under consideration as well as its information content (large data sets can bear little information content if experiments are not properly designed). The EDMD algorithm captures the dynamics of the system on the portion of the state space covered by the trajectories in the training set. Therefore, designing experiments that maximize the coverage of the state space can reduce the amount of data while having a positive effect on accuracy.

Each trajectory of the training set is in discrete time, i.e., $x(k+1) = T(x(k))$, where $x \in \mathbb{R}^n$ are the states of the system, $k \in \mathbb{Z}_{\geq 0}$ is the non-negative discrete time, and $T$ is an unknown nonlinear mapping that provides the evolution of the discrete-time trajectories. To construct the database, the training trajectories are organized in so-called snapshot pairs $\{(x_i, y_i)\}_{i=1}^{N}$, where $y_i = T(x_i)$.

The snapshots function presented in Listing 1 handles the available trajectories, dividing them into training and testing sets of the appropriate type; the training set consists of matrices containing the x and y data, while the testing set is a cell array containing one orbit per index of the cell. Cell arrays are chosen instead of a tensor so that testing trajectories may have different lengths. The tr_ts argument is a Matlab structure containing the indexes of the original set of orbits that serve as the training and testing sets. The fields of this structure must be tr_index and ts_index, respectively. In addition, there is a normalization flag to use when necessary, e.g., when the orders of magnitude of different states are dissimilar.

Listing 1. Function snapshots used to create the data pairs for training and testing.
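As a minimal sketch of the snapshot construction described above (not the package's own listing), the following code builds the training matrices and the testing cell array from a structure array with the fields Time and SV. The synthetic trajectories and every variable name other than tr_index, ts_index, Time, and SV are assumptions for illustration, and the normalization flag is omitted.

```matlab
% Sketch: build snapshot pairs (x, y) from a structure array of trajectories.
% The synthetic data below stand in for simulated or experimental orbits.
t = (0:0.1:1).';
system_data = struct('Time', {t, t, t}, ...
                     'SV', {[cos(t) sin(t)], [2*cos(t) 2*sin(t)], [exp(-t) -exp(-t)]});

% Indexes of the orbits used for training and testing (field names from the text).
tr_ts = struct('tr_index', [1 2], 'ts_index', 3);

% Training set: stack all training orbits into two matrices of snapshots.
x_train = [];
y_train = [];
for i = tr_ts.tr_index
    sv = system_data(i).SV;
    x_train = [x_train; sv(1:end-2, :)];   % current states, last sample dropped
    y_train = [y_train; sv(2:end-1, :)];   % states one sample period later
end

% Testing set: one orbit per cell, so orbits may have different lengths.
test_set = cell(1, numel(tr_ts.ts_index));
for j = 1:numel(tr_ts.ts_index)
    test_set{j} = system_data(tr_ts.ts_index(j)).SV(1:end-1, :);
end
```

Each row of y_train is the state one sample after the corresponding row of x_train, which is the only pairing the regression step relies on.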

Notice that the generation of the snapshots avoids the last element in each trajectory, using SV(1:end-2) for x and SV(2:end-1) for y. As stated before, avoiding redundant data at the asymptotically stable attractors improves the performance of the algorithm. In Matlab, stopping the simulation early, e.g., once convergence towards the attractor has been achieved, causes the last output interval to differ from the constant sample period. This small difference can increase the error in the construction of the approximation. Conversely, if the numerical integration of the system is not stopped near the steady state, it is not necessary to eliminate the last element in the trajectories.

The next step in the development of the EDMD is the definition of the observable space as a set of functions $\psi_i(x)$ for $i = 1, \dots, d$, which represent a transformation from the state space into an arbitrary function space. This transformation of the state is equivalent to a change of variables $z = \Psi(x)$, where $\Psi(x) = [\psi_1(x), \dots, \psi_d(x)]^\top$. In the Matlab library, the observables are described by orthogonal polynomials, where each element of the set of observables is the tensor product of $n$ univariate polynomials up to order $p$. For example, in the Duffing oscillator ($n = 2$), a set of observables with maximum order $p$ and a Hermite basis of orthogonal polynomials is provided by

$$\Psi(x) = \begin{bmatrix} H_0(x_1)H_0(x_2) & \cdots & H_{\alpha_1}(x_1)H_{\alpha_2}(x_2) & \cdots & H_p(x_1)H_p(x_2) \end{bmatrix}^\top. \tag{4}$$

Note that the first entry is the product of a zero-order polynomial in both of the state variables; the orders of the polynomial basis in the two state variables can be summarized by the index pairs

$$(\alpha_1, \alpha_2) \in \{0, 1, \dots, p\}^2, \tag{5}$$

making the generation of observables a problem of accurately handling indexes. Notice that the full basis of indexes (5) is equivalent to counting in base $p + 1$ with $n$ significant figures. From such a set of indexes, a method to generate a set of observables with a Hermite basis is proposed in Listing 2.

Listing 2. Generation of a set of observables with a Hermite basis.
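The index handling described above can be sketched as follows. This is an illustrative alternative to the package's symbolic approach, not a reproduction of it: it assumes physicists' Hermite polynomials evaluated by their three-term recurrence, a hypothetical maximum order p = 2, and two arbitrary sample states.

```matlab
% Sketch: full index set and Hermite observables for n states, maximum order p.
% ASSUMPTION: physicists' Hermite polynomials, evaluated by recurrence.
n = 2;                       % number of states (Duffing oscillator)
p = 2;                       % hypothetical maximum univariate order

% Full index set: count from 0 to (p+1)^n - 1 in base (p+1) with n digits.
count = (0:(p + 1)^n - 1).';
orders = zeros(numel(count), n);
for j = 1:n
    orders(:, j) = mod(floor(count / (p + 1)^(j - 1)), p + 1);
end

% Evaluate H_0..H_p at each sample by the recurrence
% H_{k+1}(x) = 2*x*H_k(x) - 2*k*H_{k-1}(x), with H_0 = 1 and H_1 = 2*x.
X = [0.5 -1.0; 1.0 2.0];     % two sample states, one per row
N = size(X, 1);
H = ones(N, n, p + 1);       % H(:, j, k+1) holds H_k evaluated at X(:, j)
if p >= 1
    H(:, :, 2) = 2*X;
end
for k = 1:(p - 1)
    H(:, :, k + 2) = 2*X.*H(:, :, k + 1) - 2*k*H(:, :, k);
end

% Each observable is the product of one univariate polynomial per state.
Psi = ones(N, size(orders, 1));          % one row per sample (row-vector convention)
for i = 1:size(orders, 1)
    for j = 1:n
        Psi(:, i) = Psi(:, i) .* H(:, j, orders(i, j) + 1);
    end
end
```

Swapping the recurrence for that of another orthogonal family changes the basis without touching the index set, which is what makes the index matrix the central object.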

The function f can evaluate the complete set of training trajectories at once with the omission of the first observable that corresponds to the intercept or constant value (the consideration of this observable would require another programming strategy involving loops, resulting in higher computational time and memory allocation). Notice the versatility of using orthogonal polynomials, as the whole realm of available orthogonal polynomials in Matlab is a valid choice, e.g., Laguerre, Legendre, Jacobi, etc. Note that the code snippet defines the function of observables as a row vector, instead of the column vector notation in the theoretical descriptions.

After the observables have been defined, their time evolution can be computed according to

$$\Psi(y) = U\,\Psi(x) + r(x), \tag{6}$$

where $U$ is the matrix that provides the linear evolution of the observables and $r(x)$ is the error in the approximation. One of the main advantages of the EDMD algorithm resides in the fact that the system description is linear in the function space. The solution to (6) is the matrix $U$ that minimizes the residual term $r(x)$, which can be expressed as a least-squares criterion:

$$\min_{U} \; \frac{1}{2} \sum_{i=1}^{N} \left\| \Psi(y_i) - U\,\Psi(x_i) \right\|_2^2, \tag{7}$$

where $N$ is the total number of samples in the training set. The ordinary least-squares (OLS) solution is provided by

$$U = A / G, \tag{8}$$

where the $/$ notation replaces the inverse of the design matrix $G$, as even when using a basis formed by the products of orthogonal polynomials, the design matrix can be close to ill-conditioned (i.e., close to singular). This notation, particularly in Matlab, specifies that a more robust algorithm compared to the inverse or pseudo-inverse is necessary to obtain the approximation.

For the solution of (8), the matrices $G$ and $A$ are defined by

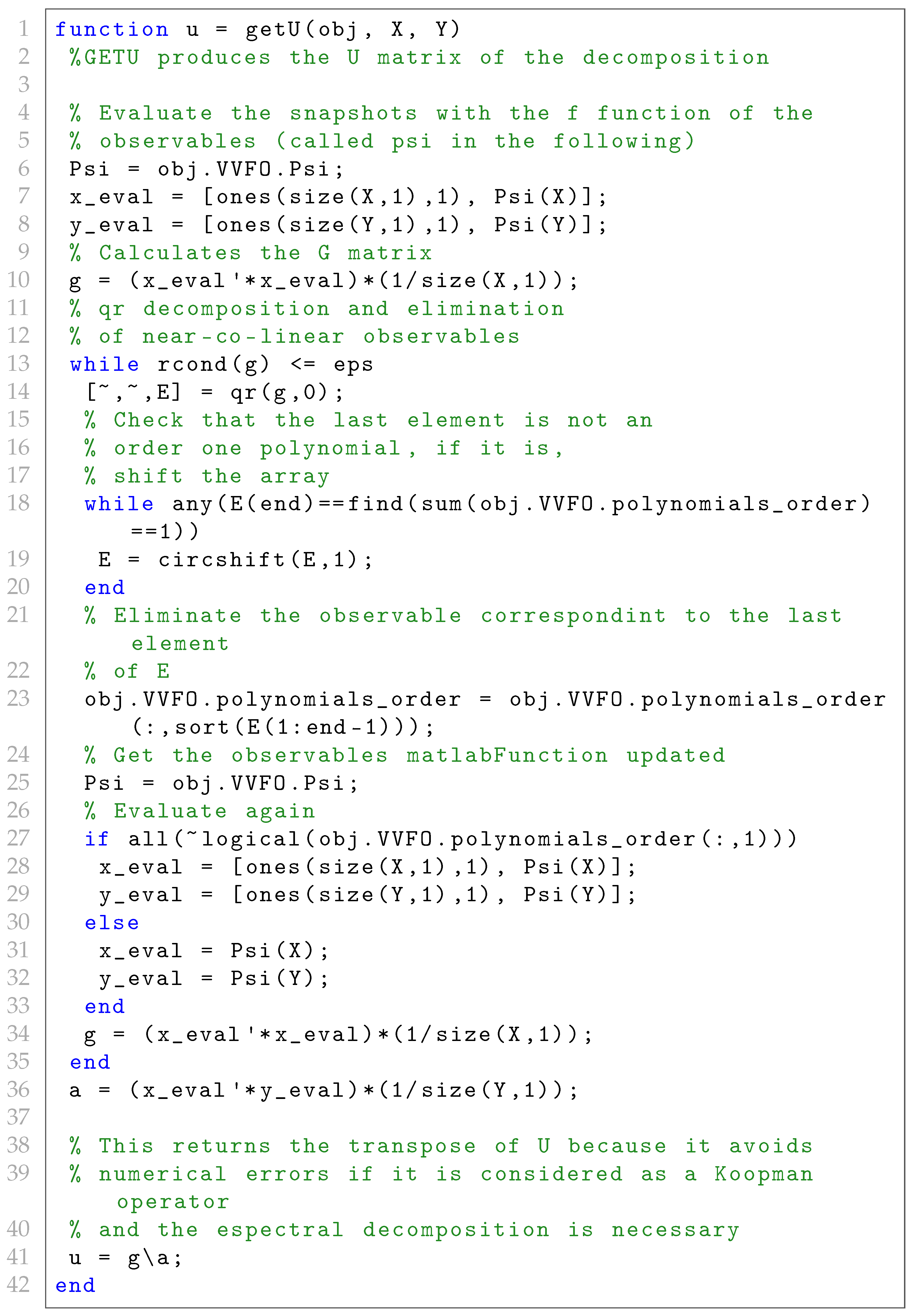

Setting the observables as products of univariate orthogonal polynomials is an improvement, as it generally avoids the need to use a pseudo-inverse approach. Even though the sequence of polynomials in the set of observables is no longer orthogonal, it is less likely to have co-linear columns in the design matrix, which improves the numerical stability of the solution. With the training set and the observables, the method for calculating the regression matrix U is shown in Listing 3.

Notice that this code defines and uses all the arrays as their transpose. This change is related to the approximation of the Koopman operator, where it is necessary to calculate the right and left eigenvectors of U. The eigenfunctions of the Koopman operator are determined from the left eigenvectors of the spectral decomposition. In Matlab, the left eigenvectors result from additional algebraic manipulations of the right eigenvectors and the diagonal matrix of eigenvalues, thereby decreasing the numerical precision of the eigenfunctions. In general, if U is diagonalizable, these additional steps involve the inverse of the matrix of right eigenvectors; because the problem is again close to being ill-conditioned, calculating this inverse reduces the accuracy of the eigenfunctions. This problem is alleviated by computing $U^\top$ and its spectral decomposition, so that the left eigenvectors of U are immediately available as the right eigenvectors of $U^\top$.

Listing 3. Computation of the approximate Koopman operator for an OLS problem.
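The transposed regression can be sketched on a toy problem whose exact answer is known. The scalar map x(k+1) = 0.9 x(k), the monomial observables, and all variable names are assumptions for illustration; the package itself uses products of orthogonal polynomials.

```matlab
% Sketch: OLS regression matrix in transposed (row-vector) form.
% ASSUMPTIONS: monomial observables [1, x, x^2] and a scalar linear map
% x(k+1) = 0.9*x(k), chosen so that the exact answer is known.
x = linspace(-1, 1, 21).';            % training states
y = 0.9 * x;                          % states one step later
psi = @(s) [ones(size(s)), s, s.^2];  % row-vector observables, one row per sample

Px = psi(x);                          % N-by-d matrix of observables at x
Py = psi(y);                          % N-by-d matrix of observables at y
N = size(Px, 1);

G = (Px.' * Px) / N;                  % d-by-d design matrix
A = (Px.' * Py) / N;                  % d-by-d cross matrix
Ut = G \ A;                           % transpose of U, solved without inv or pinv

% Right eigenvectors of U' are the left eigenvectors of U, so the Koopman
% eigenfunctions follow directly as phi(s) = psi(s) * W.
[W, D] = eig(Ut);
lambda = diag(D);
```

For this toy map the observables evolve as [1, 0.9 x, 0.81 x^2], so Ut is diagonal with entries 1, 0.9, and 0.81, which are also its eigenvalues.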

Numerical Results with EDMD

Here, the EDMD algorithm is tested with the second case scenario for the Duffing oscillator with hard damping. The EDMD algorithm can capture the dynamics of the portion of the state space covered by the training set, which is therefore selected as the outermost trajectory in Figure 2. The five remaining trajectories are used for testing. Table 1 provides the parameters of the original EDMD algorithm.

In Figure 2, the graph on the left displays the phase plane of the system and shows the training trajectory and testing trajectories along with their approximation by the EDMD algorithm with the Laguerre polynomial basis. EDMD achieves a good approximation while using only a small amount of data. However, notice that the discrete-time approximation of a system of order 2 is of dimension 25. In view of this dimensionality explosion with regard to the original dimension of the state and the complexity of the system, it is necessary to introduce reduction techniques that decrease the number of observables and increase the accuracy of the algorithm [24].
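How an approximation is evaluated on a testing trajectory can be sketched as follows: only the initial condition is lifted, the linear model is iterated in the function space, and the state is read back from the first-order observable. The toy map, the monomial observables, and the mean absolute error used here are assumptions for illustration; the text does not specify the exact form of the empirical error.

```matlab
% Sketch: predict a testing orbit with the lifted linear model and recover the
% state from the first-order observable.
% ASSUMPTIONS: scalar map x(k+1) = 0.9*x(k), monomial observables [1, x, x^2],
% and a mean absolute error as a stand-in for the empirical error.
psi = @(s) [ones(size(s)), s, s.^2];     % row-vector observables
x = linspace(-1, 1, 21).';
Ut = psi(x) \ psi(0.9 * x);              % least-squares fit of psi(y) = psi(x)*Ut

x_test = 0.5 * (0.9.^(0:10)).';          % testing orbit starting at x = 0.5
z = psi(x_test(1));                      % lift the initial condition only
x_pred = zeros(size(x_test));
for k = 1:numel(x_test)
    x_pred(k) = z(2);                    % second observable is the state itself
    z = z * Ut;                          % advance one step in the function space
end

err = mean(abs(x_pred - x_test));        % hypothetical error measure
```

Because the prediction never re-lifts the state, any error in Ut accumulates over the horizon, which makes this a stricter check than a one-step residual.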

2.2. pqEDMD Algorithm

The extension of the EDMD algorithm is motivated by the good performance of the original algorithm when coupled with a set of observables based on products of univariate orthogonal polynomials. The idea is to introduce a reduction method based on p-q quasi-norms, first introduced by Konakli and Sudret [27] for fault detection in polynomial chaos problems. The reduction proceeds in the following way: if the q-quasi-norm of the indexes that provide the orders of the univariate orthogonal polynomials of a particular observable is greater than the maximum order $p$, then this observable is eliminated from the set. To implement this procedure, the orders of an observable are defined as $\alpha = (\alpha_1, \dots, \alpha_n)$, and the q-quasi-norm of these orders as

$$\|\alpha\|_q = \left( \sum_{i=1}^{n} \alpha_i^{q} \right)^{1/q}, \tag{11}$$

where $q \in \mathbb{R}^{+}$ and Equation (11) represents a norm only when $q \geq 1$. When $p$ is redefined as the maximum order of a particular multivariate polynomial instead of the maximum order of the univariate elements, the sets of polynomial orders that remain in the basis are those that satisfy

$$\|\alpha\|_q \leq p. \tag{12}$$

The code snippet used to generate a set of observables based on Laguerre polynomials with a maximum multivariate order and a q-quasi-norm is provided in Listing 4.

The reduction of the basis is not only dimensional, as the p-q quasi-norm reduction reduces the maximum order of the observables as well. As a rule of thumb (considering various application examples), the higher-order observables usually have a negative contribution to the accuracy of the solution.

Listing 4. Generation of a p-q-reduced set of observables with a Laguerre basis.
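The index selection behind the reduction can be sketched independently of the polynomial family. The values of n, p, and q below are illustrative assumptions, and the code covers only the choice of indexes, not the construction of the Laguerre observables.

```matlab
% Sketch: p-q quasi-norm reduction of the full index set.
% ASSUMPTION: the values of n, p, and q below are illustrative only.
n = 2;  p = 4;  q = 0.5;

% Full index set with univariate orders 0..p in each of the n variables.
count = (0:(p + 1)^n - 1).';
orders = zeros(numel(count), n);
for j = 1:n
    orders(:, j) = mod(floor(count / (p + 1)^(j - 1)), p + 1);
end

% q-quasi-norm of each row of orders; keep the rows that do not exceed p.
qnorm = sum(orders.^q, 2).^(1/q);
keep = qnorm <= p + 1e-12;             % small tolerance for round-off
reduced_orders = orders(keep, :);
```

With these values, 10 of the 25 index pairs remain: the nine pairs with at least one zero order, plus the pair (1, 1). The highest mixed order left in the basis is therefore 2 rather than 8.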

Numerical Results with the pqEDMD

The algorithm is now applied to the Duffing oscillator with soft damping. The pqEDMD algorithm can capture the dynamics of the two attractors provided that the training set has at least one trajectory that converges to each of them. Additionally, as is the case for the hard damping, each of these trajectories should be the outermost (see Figure 3). Table 2 lists the parameters of the pqEDMD algorithm.

Even though the full basis achieves a low approximation error, the reduction of the order of the observables reduces the empirical error by two orders of magnitude. The dimension of the reduced basis is not much smaller than that of the full basis; however, this is because the reduced basis reported here is the best-performing one from a sweep over several p-q values. Imposing lower p-q values on the approximation has the potential to provide smaller sets of observables while sacrificing accuracy.

Next, the first case scenario is considered; here, the damping parameter is zero and the system oscillates around the fixed point at the origin. The pqEDMD algorithm can capture the dynamics of the system only if the innermost and outermost limit cycles compose the training set. Table 3 shows a summary of the simulations along with the results; it is apparent that even though the empirical error is higher than in the other two case scenarios, the approximation is accurate (see Figure 4). The sweep over different p-q values provides a reduced basis with lower error than the full basis.

Even though p-q quasi-norm reduction produces more accurate and tractable solutions, having products of orthogonal univariate polynomials does not necessarily produce an orthogonal basis. In certain scenarios, the evaluation of the observables produces an ill-conditioned design matrix G. Therefore, the next section proposes a way to eliminate even more observables from the basis, improving the numerical stability of the solution.

2.3. Improving Numerical Stability via QR Decomposition

QR decomposition [28] can be used to improve the numerical stability and reduce the number of observables even further. If we assume that the design matrix $G \in \mathbb{R}^{d \times d}$ in Equation (9), with $d$ the number of observables, is obtained based on the products of orthogonal polynomials and that there are no co-linear columns, or, in other words, that $\operatorname{rank}(G) = d$ holds, then it is possible to decompose this matrix into the product

$$G = QR,$$

where $Q$ is orthogonal, i.e., $Q^\top Q = I$, and $R$ is upper triangular. Column pivoting methods for QR decomposition rely on exchanging the columns of $G$ such that in every step of the triangularization of $R$ and the subsequent calculation of the orthogonal columns of $Q$ the procedure starts with a column that is as independent as possible from the columns of $G$ already processed. This method yields a permutation matrix $P$ such that

$$GP = QR,$$

where the permutation of columns makes the absolute value of the diagonal elements in $R$ non-increasing, i.e., $|r_{11}| \geq |r_{22}| \geq \dots \geq |r_{dd}|$. Furthermore, considering that the permutation process selects the most linearly independent column of $G$ in every step of the process, the last columns in the analysis are the ones that are close to being co-linear. Therefore, eliminating the observable related to the last column improves the condition number of the remaining design matrix. The modified function for the calculation of the regression matrix $U$ is provided in Listing 5.

Listing 5. Computation of the regression matrix based on QR decomposition.
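A minimal sketch of the pivoting idea, under stated assumptions, is shown below. The synthetic observables, the relative tolerance, and the variable names are hypothetical, and the sketch does not protect the constant or first-order observables from elimination as the package is described as doing.

```matlab
% Sketch: use QR with column pivoting to drop near-collinear observables
% before solving the regression problem.
% ASSUMPTIONS: synthetic observables with one almost redundant column, a
% hypothetical tolerance, and no protection of specific observables.
x = linspace(-1, 1, 50).';
Px = [ones(size(x)), x, x.^2, x + 1e-9 * x.^3];   % column 4 nearly equals column 2
Py = 0.9 * Px;                                    % synthetic evolution of the observables

G = Px.' * Px;                        % design matrix
[Q, R, perm] = qr(G, 0);              % G(:, perm) = Q*R, |diag(R)| non-increasing
r = abs(diag(R)).';
tol = 1e-10 * r(1);                   % hypothetical relative tolerance
keep = sort(perm(r > tol));           % observables retained, original order

Gk = Px(:, keep).' * Px(:, keep);     % reduced design matrix
Ak = Px(:, keep).' * Py(:, keep);
Ut = Gk \ Ak;                         % transposed regression matrix
```

The pivoting places one of the two nearly identical columns last, so thresholding the diagonal of R removes it and leaves a well-conditioned reduced problem.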

In addition, the code snippet shows particular aspects of the overall solution. First, an object containing the observables, i.e., the matlabFunction obj.VVFO.Psi, replaces the original matlabFunction f for the evaluation of the snapshots. Second, note that the exclusion of observables never eliminates the first-order univariate polynomials in the basis, as they are used to recover the state. Finally, the method checks for the existence of the constant observable or intercept and prevents its elimination, which could otherwise occur when it is close to co-linear with another observable.

2.4. Matlab Package

pqEDMD() is the main class of the Matlab package; it provides an array of decompositions based on the pqEDMD algorithm in the attribute pqEDMD_array. The cardinality of the array of solutions may be less than the product of the cardinalities of p and q, as certain p-q pairs produce the same set of indices, in which case the algorithm would otherwise compute the same decomposition more than once. In addition, it calculates the empirical error of the approximations based on the test set and returns the best-performing approximation from the array as a separate attribute best_pqEDMD. The code provides the complete set of solutions, as a user may opt for a compact solution that is not as accurate as the best one for tractability reasons, e.g., in an MPC controller, where a smaller basis guarantees feasibility for longer horizons and has a lower computational cost.
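Why the array of solutions can be shorter than the number of p-q pairs can be sketched by generating the index set for every pair and counting the distinct ones. The arrays p_values and q_values and the text-key comparison are assumptions for illustration, not the package's implementation.

```matlab
% Sketch: different p-q pairs can select the same index set, so only the
% distinct sets need a decomposition.
% ASSUMPTION: the arrays p_values and q_values are illustrative only.
n = 2;
p_values = [2 3];
q_values = [0.5 1 2];

keys = {};                                   % one text key per distinct index set
for p = p_values
    count = (0:(p + 1)^n - 1).';
    orders = [mod(count, p + 1), floor(count / (p + 1))];   % full set for n = 2
    for q = q_values
        keep = sum(orders.^q, 2).^(1/q) <= p + 1e-12;
        key = mat2str(orders(keep, :));      % text signature of the retained set
        if ~any(strcmp(keys, key))
            keys{end + 1} = key;             % new index set found
        end
    end
end
n_pairs = numel(p_values) * numel(q_values);
n_distinct = numel(keys);
```

Here six p-q pairs yield five distinct index sets, because q = 1 and q = 2 select the same set when p = 2.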

The only required input for pqEDMD() is the system argument, where it is necessary to provide a structure array with the Time and SV fields with at least two trajectories in the array, one for training and one for testing. The remaining arguments are optional, e.g., the array of positive-integer values p, the array of positive values q, the structure of training and testing trajectories with the fields tr_index and ts_index, the string specifying the type of polynomial, the array of polynomial parameters (if the polynomial type is either “Jacobi” or “Gegenbauer”), the boolean flag of normalization, and the string indicating the decomposition method. For example, Listing 6 shows a call to the algorithm with a complete set of arguments.

Listing 6. Complete call to the pqEDMD algorithm.

To provide the different approximations in the main class, pqVVFO() handles the observables for different values of p, q, and the polynomial type. Its output is the matrix of polynomial indexes, a symbolic vector of observable functions, and a matlabFunction Psi to evaluate the observables arithmetically and efficiently; it accepts a matrix of values, avoiding evaluation with loops.

The remaining classes implement different decompositions based on different algorithms. ExtendedDecomposition() is the traditional least-squares method described in this article. Two additional decompositions are available. MaxLikeDecomposition() is used for data with noise, where the maximum likelihood algorithm assumes that the transformation of the states in the function space preserves a unimodal Gaussian distribution of the noise in the state space (this is a work in progress; preliminary results can be found in [29]). These properties of the distribution of noise in the function space are a strong assumption; nonetheless, it is sometimes possible to identify dynamical systems corrupted with noise. The last decomposition, RegularizedDecomposition(), leverages the advantages of regularized lasso regression to produce sparse solutions. Even though the solutions are more tractable, the regularized method sacrifices accuracy.

Figure 5 shows the architecture of the solution with the relationship between classes. The current functionality requires the user to call pqEDMD() with the appropriate inputs and options in order to obtain an array of decompositions. This class handles the creation of the necessary pqVVFO() objects to feed into the required decomposition. It is possible to use and extend the observable class for other types of decompositions without the use of the main class. The code is available for download in the Supplementary Materials section of this paper.