Functional Ergodic Time Series Analysis Using Expectile Regression

Abstract

1. Introduction

2. Methodology

2.1. The Ergodic Functional Data Framework

- (H1)

2.2. Model and Estimator

3. Main Results

- (A1)

- is differentiable in and it satisfies: , , ,

- (A2)

- For ,

- (A3)

- The kernel K is supported within and has a continuous derivative on , such that

- (A4)

- The sequence of the bandwidth parameter such that

4. Some Special Cases

- The classical kernel case: This case can be viewed as a special case of our proposed method once , for all . Hence, condition (A4(ii)) is automatically fulfilled, and (H1(iii)) and (A4(ii)) are replaced by

  where the following corollary gives the convergence rate.

  Corollary 1. Under conditions (A1)–(A3) and (6), we obtain

  Remark 1. As far as we know, this result is also new in the field of nonparametric functional data analysis. In other words, no work in the literature considers conditional expectile estimation in the case of functional ergodic data.

- Independence case: When the observations are independent, condition (H1) reduces to condition (H1(i)). Therefore, Theorem 1 leads to the following corollary.

  Corollary 2. Under conditions (A1)–(A4), we obtain

  Remark 2. Once again, the above corollary is new in the field of nonparametric functional data analysis. Indeed, recursive estimation of expectile regression for functional data has not been addressed previously in functional statistics.

- The classical regression case: Classical regression is a special case of expectile regression. It is obtained by setting $p = 0.5$. So, by simple calculation, we prove that

  For this functional model, condition (A2) is reformulated as

  Theorem 1 then takes the following form.

  Corollary 3. Under conditions (A1), (A3), (A4), and if (7) holds, then, as , we obtain

  Remark 3. Note that Amiri et al. [1] studied the functional version of the recursive estimation method for the conditional expectation. However, they only stated the consistency of the estimator in the i.i.d. case. The novelty of the present paper is the treatment of the functional ergodic case. Thus, the result of Corollary 3 is new in the context of nonparametric functional data analysis.
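The link between expectile regression and classical regression in the last special case can be checked numerically in a much simpler setting. The sketch below is not the recursive functional estimator studied in this paper: it computes an ordinary (unconditional) sample expectile by iteratively reweighted averaging, assuming the usual asymmetric least-squares definition with weight p above the estimate and 1 - p at or below it. The names `sample_expectile`, `values`, `p`, `tol` and `max_iter` are hypothetical and chosen for illustration only.

```python
def sample_expectile(values, p, tol=1e-12, max_iter=1000):
    """Sample expectile of order p (0 < p < 1) by iteratively reweighted averaging.

    Illustrative sketch only: it minimises the asymmetric squared loss
    sum_i |p - 1{y_i <= t}| * (y_i - t)**2 over t for a plain sample,
    not the kernel-weighted functional version.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    values = list(values)
    if not values:
        raise ValueError("values must be non-empty")

    estimate = sum(values) / len(values)  # start from the sample mean
    for _ in range(max_iter):
        # Weight p for observations above the current estimate, 1 - p otherwise.
        weights = [p if y > estimate else 1.0 - p for y in values]
        updated = sum(w * y for w, y in zip(weights, values)) / sum(weights)
        if abs(updated - estimate) <= tol:
            return updated
        estimate = updated
    return estimate


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0, 10.0]
    for level in (0.1, 0.5, 0.9):
        print(level, sample_expectile(data, level))
```

With p = 0.5 all weights are equal, so the first update already returns the sample mean, which is the sample analogue of classical regression being the expectile regression of order 0.5. Other values of p shift the estimate towards the lower or upper tail of the sample.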

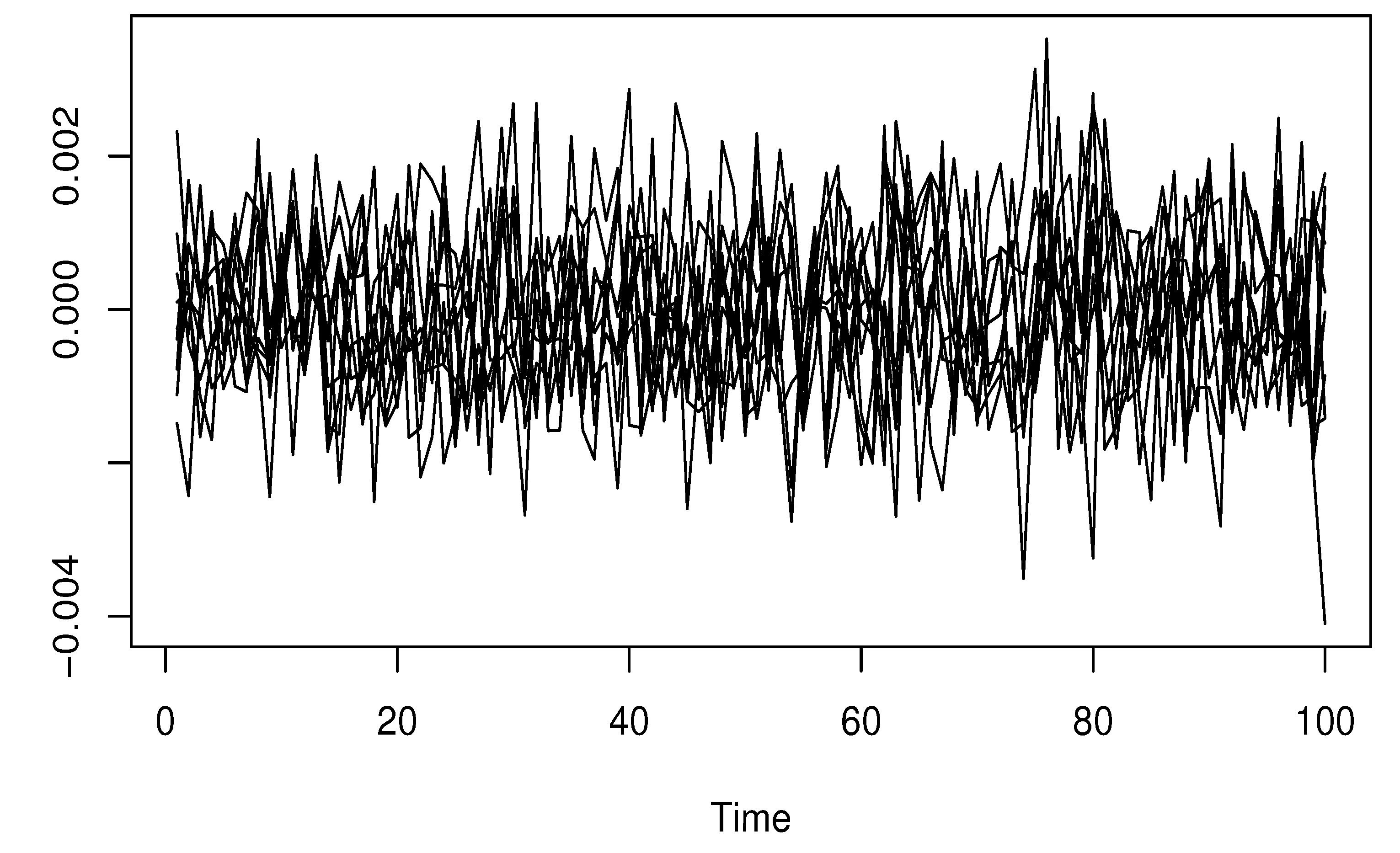

5. A Simulation Study

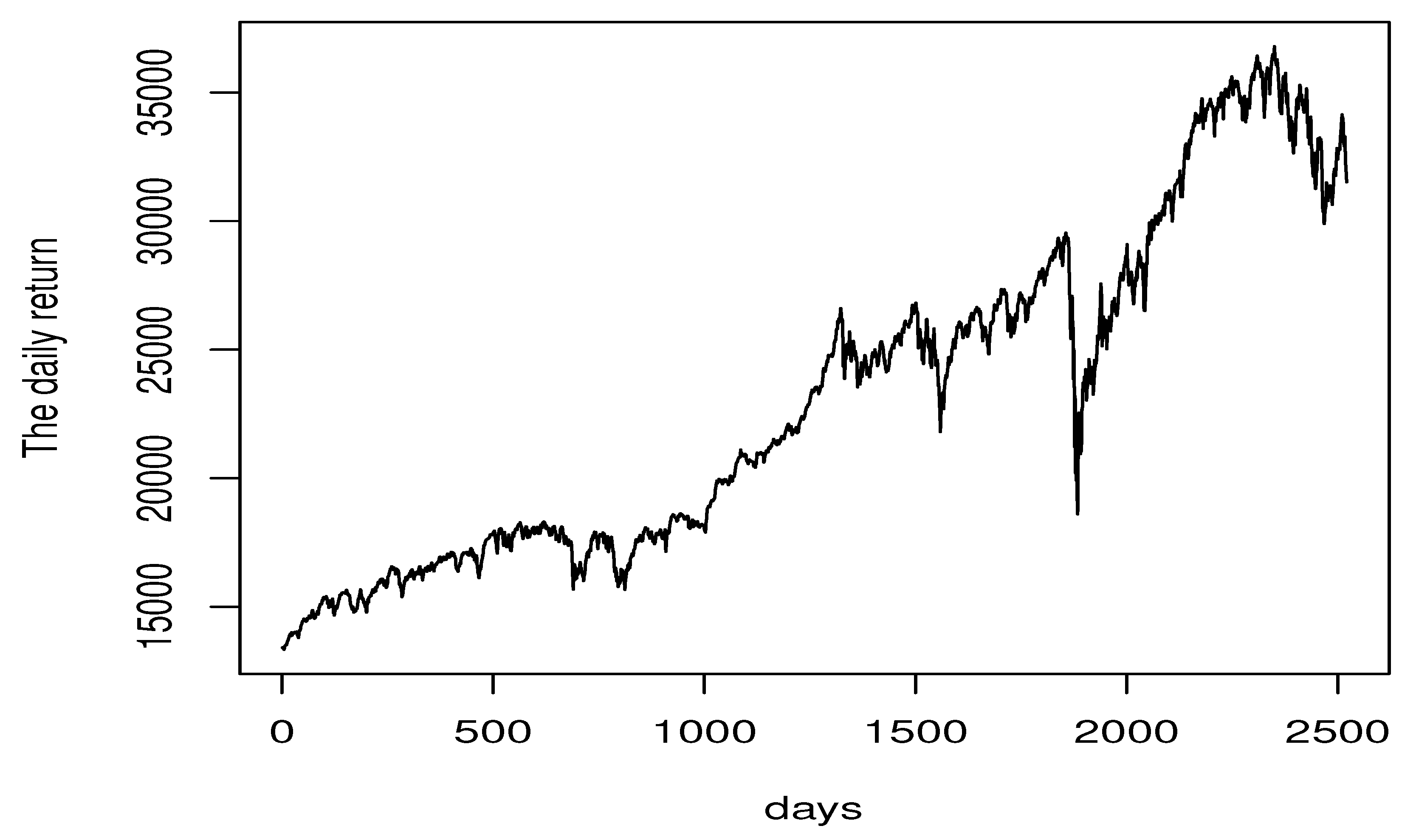

Real Data Example

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Amiri, A.; Crambes, C.; Thiam, B. Recursive estimation of nonparametric regression with functional covariate. Comput. Stat. Data Anal. 2014, 69, 154–172.

- Ardjoun, F.; Ait Hennani, L.; Laksaci, A. A recursive kernel estimate of the functional modal regression under ergodic dependence condition. J. Stat. Theory Pract. 2016, 10, 475–496.

- Benziadi, F.; Laksaci, A.; Tebboune, F. Recursive kernel estimate of the conditional quantile for functional ergodic data. Commun. Stat. Theory Methods 2016, 45, 3097–3113.

- Slaoui, Y. Recursive nonparametric regression estimation for independent functional data. Stat. Sin. 2020, 30, 417–437.

- Slaoui, Y. Recursive nonparametric regression estimation for dependent strong mixing functional data. Stat. Inference Stoch. Process. 2020, 23, 665–697.

- Laksaci, A.; Khardani, S.; Semmar, S. Semi-recursive kernel conditional density estimators under random censorship and dependent data. Commun. Stat. Theory Methods 2022, 51, 2116–2138.

- Laïb, N.; Louani, D. Nonparametric kernel regression estimate for functional stationary ergodic data: Asymptotic properties. J. Multivariate Anal. 2010, 101, 2266–2281.

- Laïb, N.; Louani, D. Rates of strong consistencies of the regression function estimator for functional stationary ergodic data. J. Stat. Plan. Inference 2011, 141, 359–372.

- Gheriballah, A.; Laksaci, A.; Sekkal, S. Nonparametric M-regression for functional ergodic data. Stat. Probab. Lett. 2013, 83, 902–908.

- Pratesi, M.; Ranalli, M.G.; Salvati, N. Nonparametric M-quantile regression using penalised splines. J. Nonparametr. Stat. 2009, 21, 287–304.

- Waltrup, L.S.; Sobotka, F.; Kneib, T.; Kauermann, G. Expectile and quantile regression—David and Goliath? Stat. Model. 2015, 15, 433–456.

- Farooq, M.; Steinwart, I. Learning rates for kernel-based expectile regression. Mach. Learn. 2019, 108, 203–227.

- Daouia, A.; Paindaveine, D. From halfspace m-depth to multiple-output expectile regression. arXiv 2019, arXiv:1905.12718.

- Mohammedi, M.; Bouzebda, S.; Laksaci, A. The consistency and asymptotic normality of the kernel type expectile regression estimator for functional data. J. Multivariate Anal. 2021, 181, 104673.

- Almanjahie, I.; Bouzebda, S.; Chikr Elmezouar, Z.; Laksaci, A. The functional kNN estimator of the conditional expectile: Uniform consistency in number of neighbors. Stat. Risk Model. 2022, 38, 47–63.

- Roussas, G.; Tran, L.T. Asymptotic normality of the recursive kernel regression estimate under dependence conditions. Ann. Stat. 1992, 20, 98–120.

- Almanjahie, I.; Bouzebda, S.; Kaid, Z.; Laksaci, A. Nonparametric estimation of expectile regression in functional dependent data. J. Nonparametr. Stat. 2022, 34, 250–281.

- Wolverton, C.T.; Wagner, T.J. Asymptotically optimal discriminant functions for a pattern classification. IEEE Trans. Inf. Theory 1969, 15, 258–265.

- Yamato, H. Sequential estimation of a continuous probability density function and mode. Bull. Math. Stat. 1971, 14, 1–12.

- Bouzebda, S.; Slaoui, Y. Nonparametric recursive method for moment generating function kernel-type estimators. Stat. Probab. Lett. 2022, 184, 109422.

- Slaoui, Y. Moderate deviation principles for nonparametric recursive distribution estimators using Bernstein polynomials. Rev. Mat. Complut. 2022, 35, 147–158.

- Jones, M.C. Expectiles and M-quantiles are quantiles. Stat. Probab. Lett. 1994, 20, 149–153.

- Abdous, B.; Rémillard, B. Relating quantiles and expectiles under weighted-symmetry. Ann. Inst. Stat. Math. 1995, 47, 371–384.

- Bellini, F.; Bignozzi, V.; Puccetti, G. Conditional expectiles, time consistency and mixture convexity properties. Insurance Math. Econom. 2018, 82, 117–123.

- Girard, S.; Stupfler, G.; Usseglio-Carleve, A. Extreme conditional expectile estimation in heavy-tailed heteroscedastic regression models. Ann. Stat. 2021, 49, 3358–3382.

- So, M.K.P.; Chu, A.M.Y.; Lo, C.C.Y.; Ip, C.Y. Volatility and dynamic dependence modeling: Review, applications, and financial risk management. Wiley Interdiscip. Rev. Comput. Stat. 2022, 14, e1567.

- Feng, Y.; Beran, J.; Yu, K. Modelling financial time series with SEMIFAR-GARCH model. IMA J. Manag. Math. 2007, 18, 395–412.

- Bogachev, V.I. Gaussian Measures: Mathematical Surveys and Monographs; American Mathematical Society: Providence, RI, USA, 1999; Volume 62.

- Ferraty, F.; Vieu, P. Nonparametric Functional Data Analysis; Springer Series in Statistics. Theory and Practice; Springer: New York, NY, USA, 2006.

| Distribution | p | NE (l = 0) | NE (l = 0.5) | NE (l = 1) | CKE |
|---|---|---|---|---|---|
| Log-normal distribution | 0.1 | 0.14 | 0.12 | 0.18 | 0.69 |
| | 0.5 | 0.09 | 0.05 | 0.08 | 0.23 |
| | 0.9 | 0.17 | 0.15 | 0.16 | 0.57 |
| Normal distribution | 0.1 | 0.19 | 0.22 | 0.24 | 0.87 |
| | 0.5 | 0.04 | 0.1 | 0.08 | 0.75 |
| | 0.9 | 0.23 | 0.28 | 0.21 | 0.96 |
| Exponential distribution | 0.1 | 0.12 | 0.17 | 0.13 | 0.45 |
| | 0.5 | 0.02 | 0.09 | 0.06 | 0.39 |
| | 0.9 | 0.17 | 0.25 | 0.24 | 0.77 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alshahrani, F.; Almanjahie, I.M.; Elmezouar, Z.C.; Kaid, Z.; Laksaci, A.; Rachdi, M. Functional Ergodic Time Series Analysis Using Expectile Regression. Mathematics 2022, 10, 3919. https://doi.org/10.3390/math10203919
