To solve the problem in

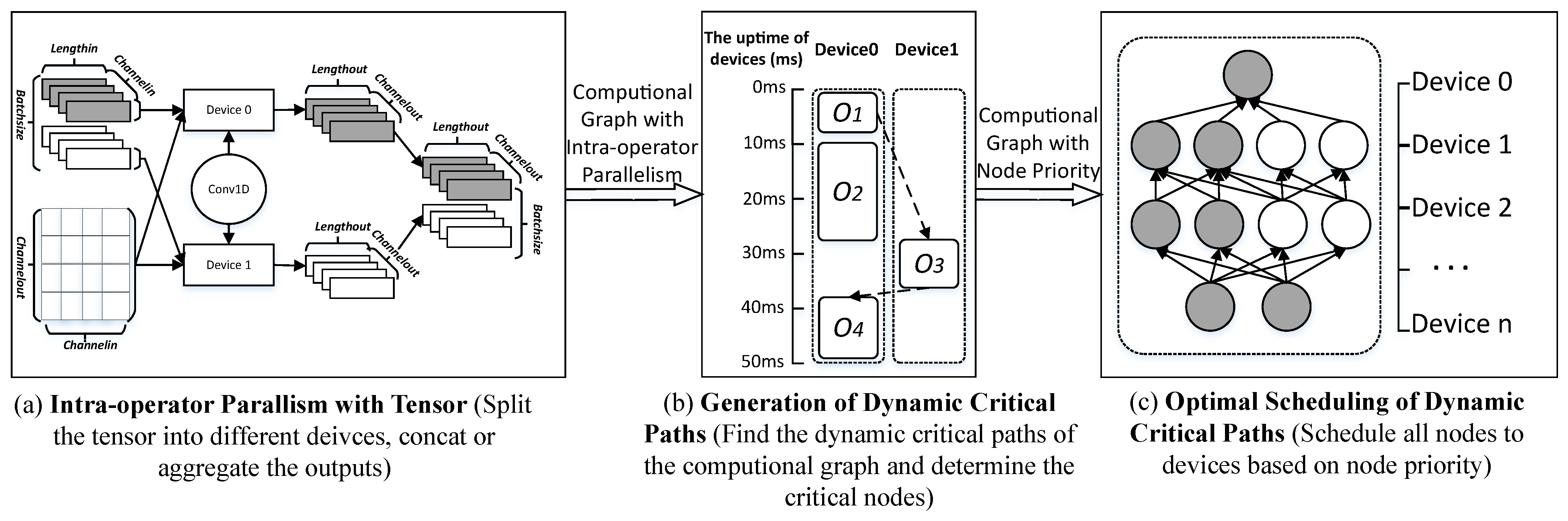

Section 3, this section proposes an adaptive distributed parallel training method based on the dynamic generation of critical DAG paths, called FD-DPS (

Figure 1). Firstly, FD-DPS implements intra-operator parallelism with a tensor dimension to build a graph search space with fine granularity according to operator attributes (

Figure 1a). Secondly, it finds critical nodes and generates dynamic critical paths to determine node priority changes in the DAG of neural network models, which can capture the effect of the dynamic environment on model execution performance (

Figure 1b). Finally, it implements the optimal scheduling of dynamic critical paths based on node priority to dynamically search for an optimal distributed parallel strategy for deep learning models (

Figure 1c). The specific implementation method is as follows.

4.2. Generation of Dynamic Critical Path

Intra-operator parallelism makes the graph search space more complex than inter-operator parallelism. Simultaneously, as operators are dynamically scheduled to be executed on different devices, the available devices of the cluster will change dynamically. In a DAG, the critical path is the path with the most expensive cost from the entry node to the exit node, which determines the maximum end-to-end execution time of the computational graph. The dynamic environment will lead to changes in the critical path of the computational graph, which affects the end-to-end execution time of the computational graph. For the DAG graph, this paper adaptively generates critical paths based on the dynamic scenarios and guides the placement of nodes in the device.

To implement the above method, we introduce the concepts of the earliest start time (EST), latest start time (LST), critical node (CN), critical path (CP), and node priority (NP) based on [

20]. Their definitions are as follows:

Definition 8 (Earliest Start Time)

. The earliest start time (EST) of node on device is the earliest time when can start the execution in the entire computational graph. It can be defined as Formula (6):where represents the execution time of node on device and represents the communication cost between on and on . The EST of the entry node . According to Formula (6), the EST can be calculated by traversing the computational graph in a breadth-first search method starting from . Definition 9 (Latest Start Time)

. The latest start time (LST) of node on device is the latest time when can start execution without delaying the execution of the entire computational graph. It can be defined as Formula (7):where the latest start time of the exit node is the total time for the execution of the critical path. Similar to the calculation of the EST, the LST can also be calculated by traversing the inverse computational graph in a breadth-first search method. Definition 10 (Critical Node)

. The node on the critical path is the critical node. In the DAG, if the EST and LST of node on device satisfy , node is a critical node: Definition 11 (Critical Path). Define the path from the entry node to the exit node in the DAG that satisfies the maximum cost (the computational cost and the communication cost) as the critical path (CP).

Definition 12 (Node Priority)

. Node priority refers to the importance of a node during scheduling. The smaller the difference between the EST and LST of a node is, the greater the impact is on the end-to-end execution time of the critical path and the greater the priority of the node is. The node priority of is expressed by Formula (9):where represents the node priority of , represents a non-zero constant value, it prevents an exception from occurring when . According to the above definition, the critical path determines the longest end-to-end execution time of the computational graph. Actually, in the progress of the schedule, the available resources of the device cluster change dynamically. This leads to changes in the execution performance of nodes on different devices. Moreover, this also leads to dynamic changes in the critical nodes and critical paths. For example, as shown in

Figure 3a,

and

have no dependencies during execution, so they can be executed in parallel. As shown in

Figure 3b, if

is scheduled on Device0 for execution and

is scheduled on Device1 for execution, the critical path of the DAG is

-

-

and the critical path length is 53. As shown in

Figure 3c, if

is scheduled on Device0 for execution and

is scheduled on Device1 for execution, the critical path of the DAG becomes

-

-

and the critical path length becomes 35. Obviously, the strategy in

Figure 3c is more optimal. Since operators are scheduled on different devices, the critical path of the computational graph will change. Therefore, the main goal of the dynamic critical path generation is to find the optimal scheduling strategy.

As shown in

Figure 3, different operator scheduling strategies affect the selection of devices in the scheduling process, which will lead to changes in critical nodes and critical paths. In this paper, we call the critical path that changes with the dynamic scenarios the dynamic critical path (DCP).

The above analysis shows that the critical path length of the computational graph is an important indicator for the scheduling of the operators. It represents the end-to-end execution time of the computational graph. Therefore, we define the dynamic critical path length (DCPL) as follows:

where

represents the EST of node

on device

and

represents the execution time of node

on device

.

Since DCPL is the maximum value of the earliest finish time (e.g., ) of all paths, it can be used to determine the upper limit of the node start time. Therefore, in the dynamic priority scheduling process, the EST and LST can be generated through DCPL dynamically if a schedulable node is not assigned.

To determine the dynamic critical nodes (DCN) on the dynamic critical path (DCP), we assume that the DCP ranges from the entry node to the exit node . The specific method is as follows:

For all nodes in the computational graph, calculate the corresponding EST and LST.

According to Formula (

8), determine the critical nodes. If node

satisfies

, it can be marked as a critical node.

According to the identified critical nodes and the node dependencies in the computational graph, use the breadth-first search method to generate the DCP.

4.3. Optimal Scheduling Based on Operator Priority

According to

Section 4.2, the DCP determines the longest end-to-end execution time of the entire computational graph in dynamic scenarios. In this section, we propose a critical path optimal scheduling method based on node priority, which can optimize the end-to-end longest execution time of the computational graph.

The key to this method is to schedule nodes with high priority, which ensures that nodes with a small time-optimizable execution range are finished at the earliest time. The critical nodes satisfy and , which have the highest priority. Therefore, the marked critical nodes can be scheduled preferentially to reduce the cost of computing the node priority.

The specific method to implement the optimal scheduling of critical paths according to the node priority is as follows:

(1). Find the marked critical nodes from the schedulable nodes based on the priority scheduling of critical nodes.

If all the parent nodes of a node

have been scheduled to the device, but

has not been scheduled and

is a schedulable node, the selection of the optimal schedulable node is as follows: First of all, the marked critical nodes among the schedulable nodes will be scheduled preferentially. Then, if there is no marked critical node in the schedulable nodes, calculate the node priority of all nodes using Formula (

9). Sort the schedulable nodes by node priority from largest to smallest. The first node (which has the highest priority) from the sequence is the optimal schedulable node. The specific details are shown in Algorithm 1:

| Algorithm 1: The algorithm for finding the optimal schedulable node (Sort_Node) |

![Mathematics 10 04788 i001]() |

(2). Find the matching device for the optimal schedulable node.

For the optimal schedulable node, we adopt the following method to find the matching device.

Firstly, according to the node (operator) resource requirements (e.g., communication, computation, and memory) and the idle resources of the device, we select the device set that satisfies the resource requirements for the nodes (operators).

Then, assuming that the total number of nodes in the graph search space is n, we select devices that satisfy resource requirements for schedulable node

(

); where the devices need to satisfy the execution time requirement of node

, the scheduling process cannot affect the execution of scheduled nodes on these devices. For a device

that satisfies the resource requirements of node

, suppose that

(

) and

(

) are the scheduled nodes on this device. If

will be scheduled to device

, the execution order of

,

, and

on device

is

. Suppose that

(

) represents a parent node of

in the computational graph. Then, we adopt Formula (

11) to determine whether node

can be scheduled to device

:

When

is not scheduled to

, if the execution time of

on

is less than the difference between the latest start time of

on

(

) and the earliest finish time of

on

(

), then node

can be scheduled between

and

on

. When

is a scheduled node on

, the communication cost between

and

on

is 0 if

is scheduled to

. However, the EST (calculated by Formula (

6)) and LST (calculated by Formula (

7)) of

on

include the (

,

) between

on

and

on

. Therefore, it is necessary to recalculate the earliest start time

and the latest start time

of

when

and

are scheduled to the same device

, where

and

. If the execution time of

on

is less than the difference between the latest start time of

on

(

) and the earliest finish time of

on

(

), then node

can be scheduled between

and

on

. For node

, there may be multiple devices that satisfy Formula (

11). We can record all satisfied devices with a device subset.

Lastly, we find the optimal device from the selected device subset. (1) If node

and its parent node

can be scheduled to the same device

, select

preferentially as the optimal device of node

to reduce the communication cost. (2) If node

and its leaf node

can be scheduled to the same device

, select

preferentially as the optimal device of node

, where

needs to be selected based on the node priority and

needs to be selected based on the execution time of the node

on the device. The selection of the optimal device

and the optimal child node

is shown in Formula (

12):

where

has the highest node priority in the leaf node set of

. V presents the new device subset which satisfies the scheduling of

and

in the original device subset.

is the device that minimizes the execution time of

and

(

).

(3) We select the device from the device subset that satisfies the earliest execution of as the optimal device. can execute as soon as possible. (4) When no device satisfies the above conditions, according to the node priority, select the device that executes the lowest priority node as the optimal device of node .

The specific details are shown in Algorithm 2.

| Algorithm 2: Algorithm of finding optimal device (Find_Slot(, )) |

![Mathematics 10 04788 i002]() |

(3). Update the graph search space according to the scheduling.

After the node scheduling is finished, update the graph search space according to the scheduling situation. The updated content mainly includes the weight of the edge, the EST and LST of the scheduled node , and the EST and LST of the unscheduled node. The specific update method is as follows.

Firstly, when the scheduled node

is scheduled to the device where the parent node

is located, the communication cost of edge from

to

(

) is set to 0. Then, according to the scheduling of the scheduled node

on the device, update the EST and LST of

. Finally, iteratively update the EST and LST of all unscheduled nodes through Formulas (

6) and (

7) after the update of the graph search space.

After the graph search space update, perform a new round of node optimization scheduling, and repeat this process until the optimal parallel strategy is found.