Continuous Hybrid BCI Control for Robotic Arm Using Noninvasive Electroencephalogram, Computer Vision, and Eye Tracking

Abstract

1. Introduction

2. Methods and Experiments

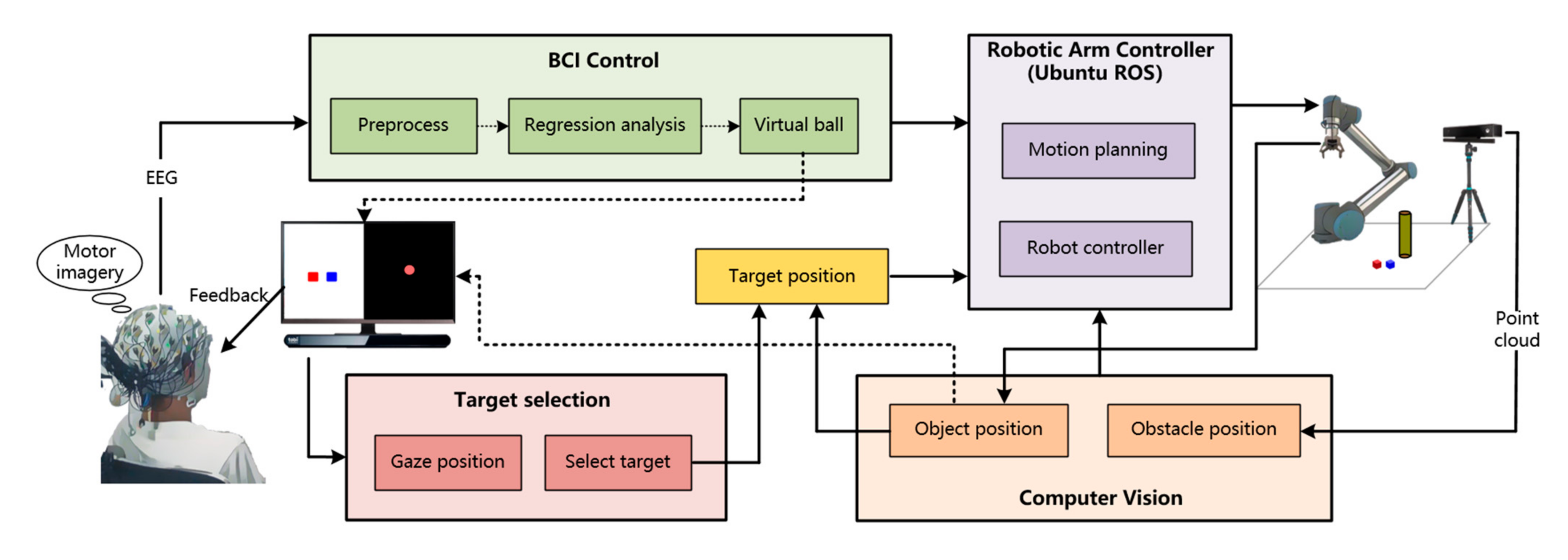

2.1. System Architecture

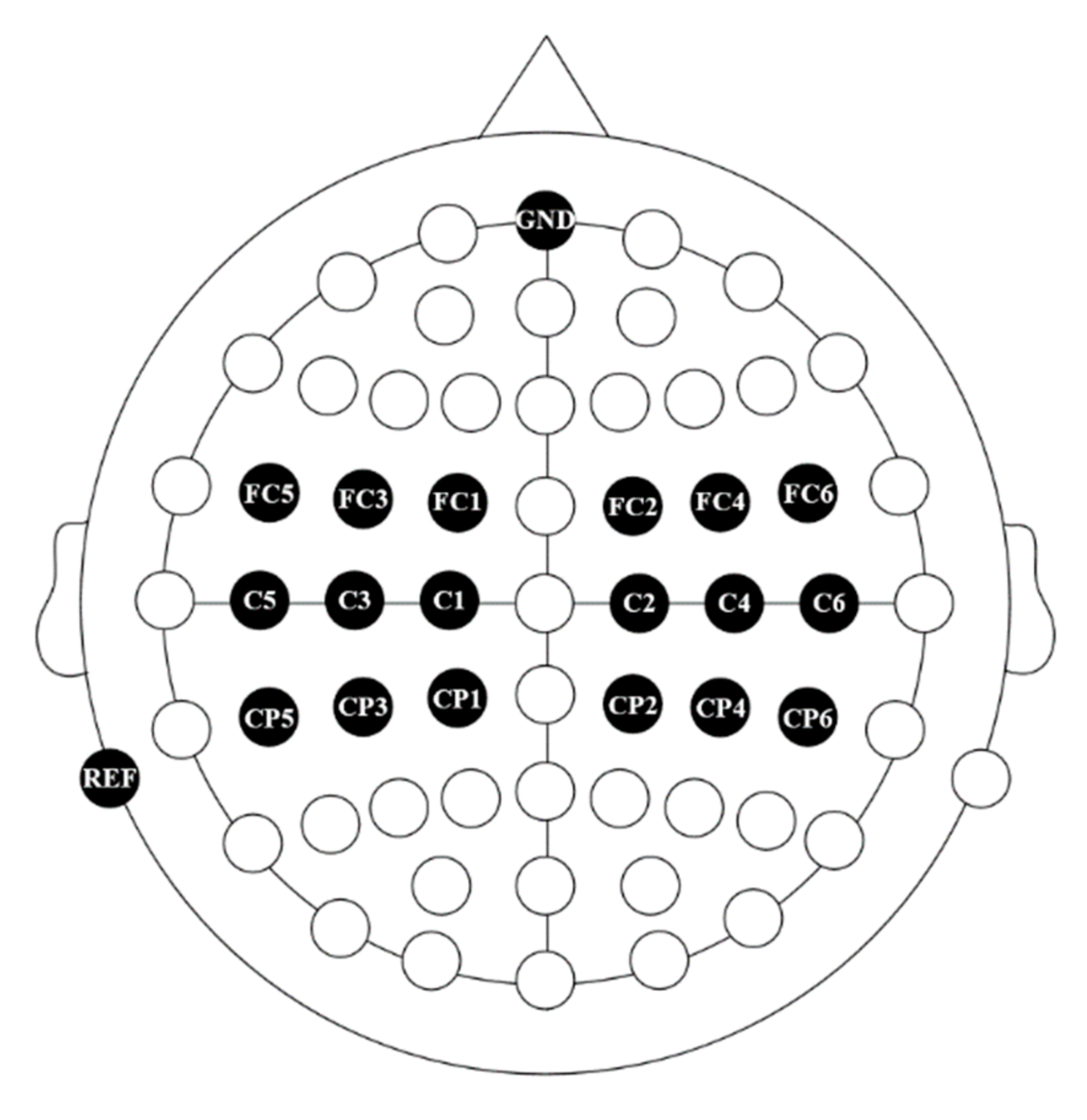

2.1.1. BCI System

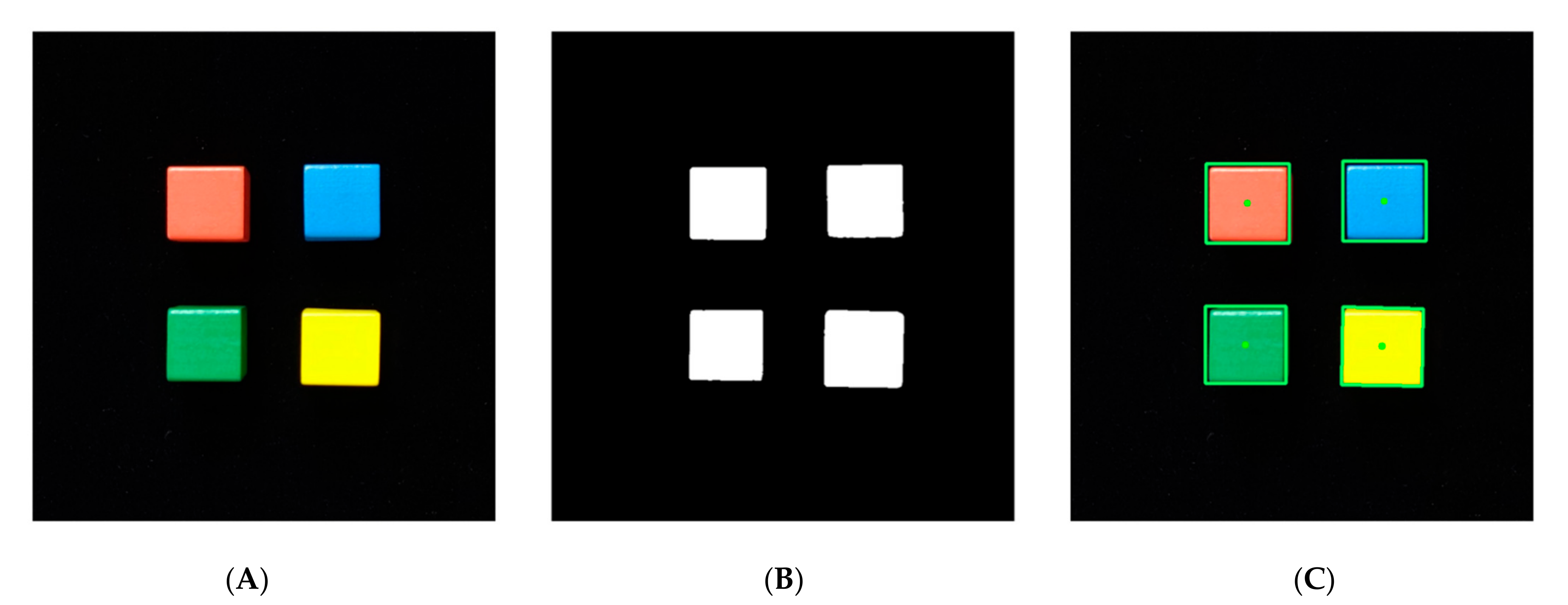

2.1.2. Object Identification and Target Selection

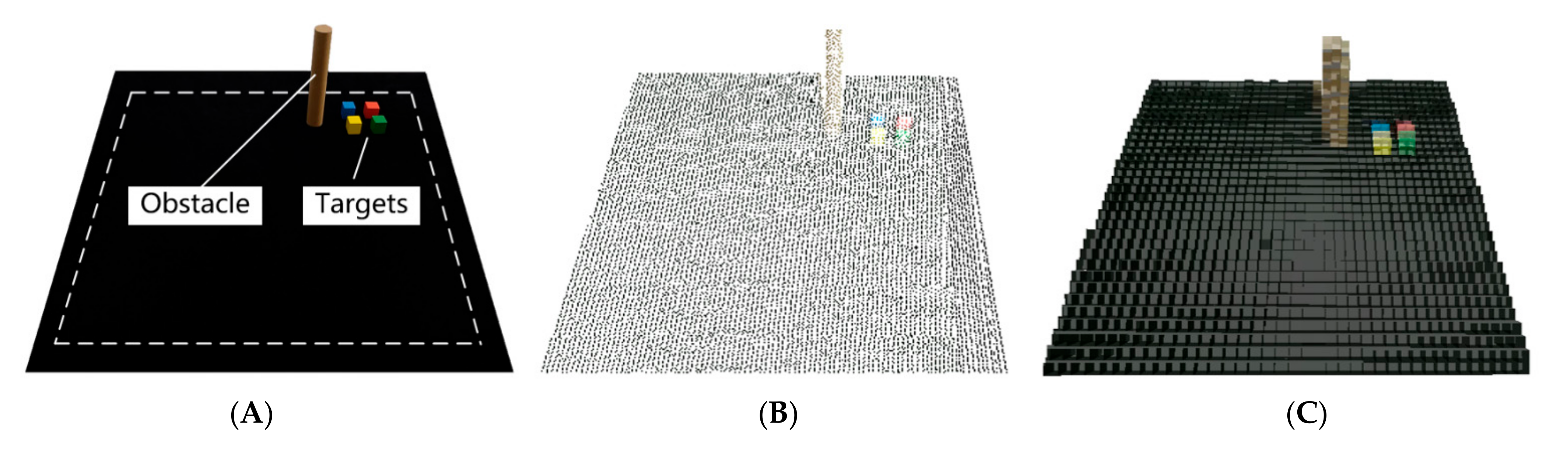

2.1.3. Robotic Arm Control and Obstacle Avoidance

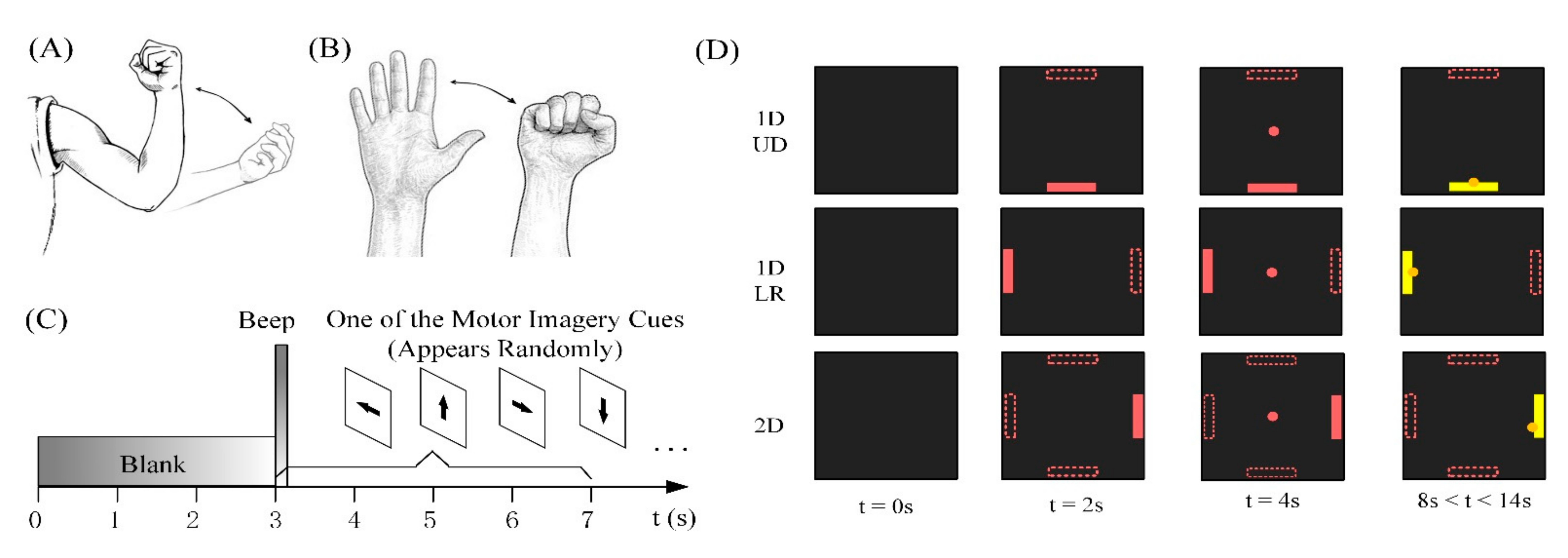

2.2. Experimental Paradigm

2.2.1. Subjects

2.2.2. MI without Feedback

2.2.3. Virtual Ball Movement Control Training

2.2.4. Online Robotic Arm Control Experiments

3. Results

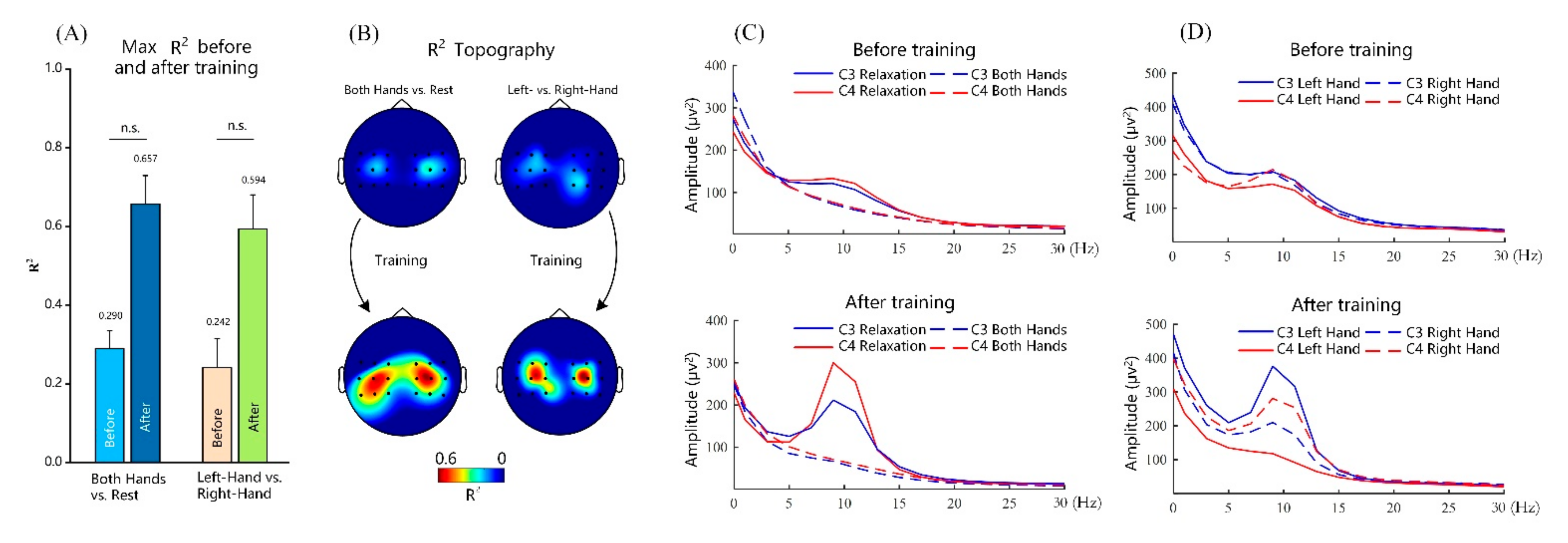

3.1. Performance of MI without Feedback

3.2. Performance of Virtual Ball Control Training

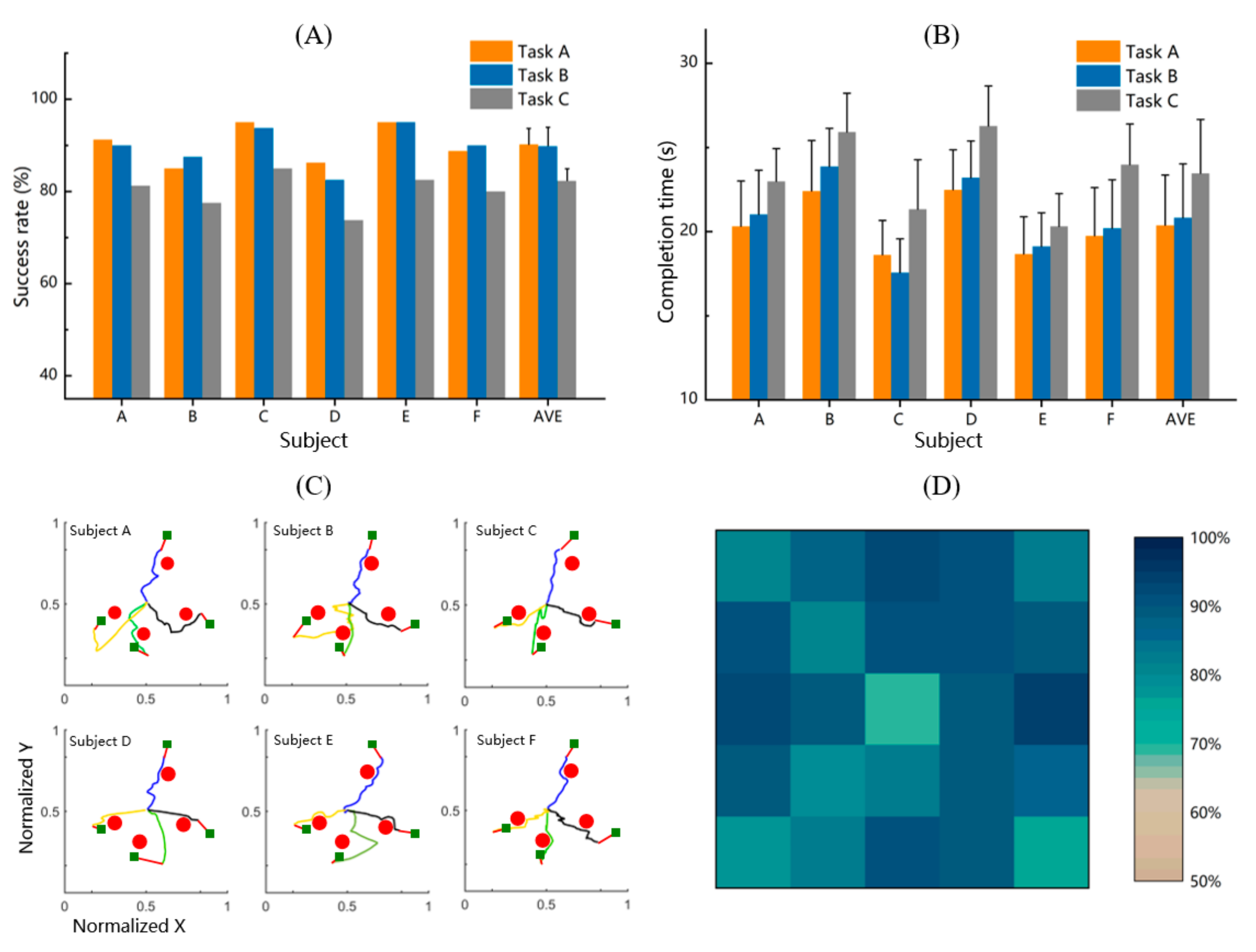

3.3. Performance of Online Experiments

4. Discussion

5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Subject ID | Main Electrodes | Hand Action | Frequency (Hz) |
|---|---|---|---|
| A | C3, C4 | Action 2 | 9 |
| B | CP3, CP4 | Action 2 | 9 |
| C | C3, C4 | Action 1 | 12 |
| D | C3, C4 | Action 1 | 10 |
| E | C3, C4 | Other action | 9 |
| F | CP3, CP4 | Other action | 12 |
| G | C3, C4 | Other action | 12 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, B.; Li, W.; Liu, D.; Zhang, K.; Miao, M.; Xu, G.; Song, A. Continuous Hybrid BCI Control for Robotic Arm Using Noninvasive Electroencephalogram, Computer Vision, and Eye Tracking. Mathematics 2022, 10, 618. https://doi.org/10.3390/math10040618
