A Comprehensive Comparison of the Performance of Metaheuristic Algorithms in Neural Network Training for Nonlinear System Identification

Abstract

1. Introduction

- This study shows where metaheuristic algorithms succeed and where they do not. The identification of nonlinear systems is known to be a difficult problem, and the literature gives no clear answer as to which metaheuristic algorithm solves it most effectively. The performances of sixteen metaheuristic algorithms are therefore compared in this study. Although the comparison is strictly valid only for nonlinear system identification, it can serve as a reference for other types of problems and is expected to guide future studies in different fields. It is one of the first studies to compare these sixteen metaheuristic algorithms, and this comparison is its main contribution to the literature.

- The success of ANNs depends directly on the training process. Metaheuristic algorithms have been widely used for ANN training; some are used heavily, while others have seen only limited use. However, there is no clear information about which metaheuristic-based ANN training algorithms are the most effective. This study is one of the first to identify them, which is where its novelty lies. Choosing a training algorithm without any supporting analysis may not be enough to solve a problem successfully, so this study gives the literature an indication of which metaheuristic algorithms can be used in ANN training.

- The importance of nonlinear systems has been emphasized above. This study is one of the most comprehensive studies on the identification of nonlinear systems and is innovative in terms of the technique used. It was carried out on nonlinear test systems. Because many real-world systems exhibit nonlinear behavior, the study can guide the solution of many problems and contributes to the literature.

2. Related Works

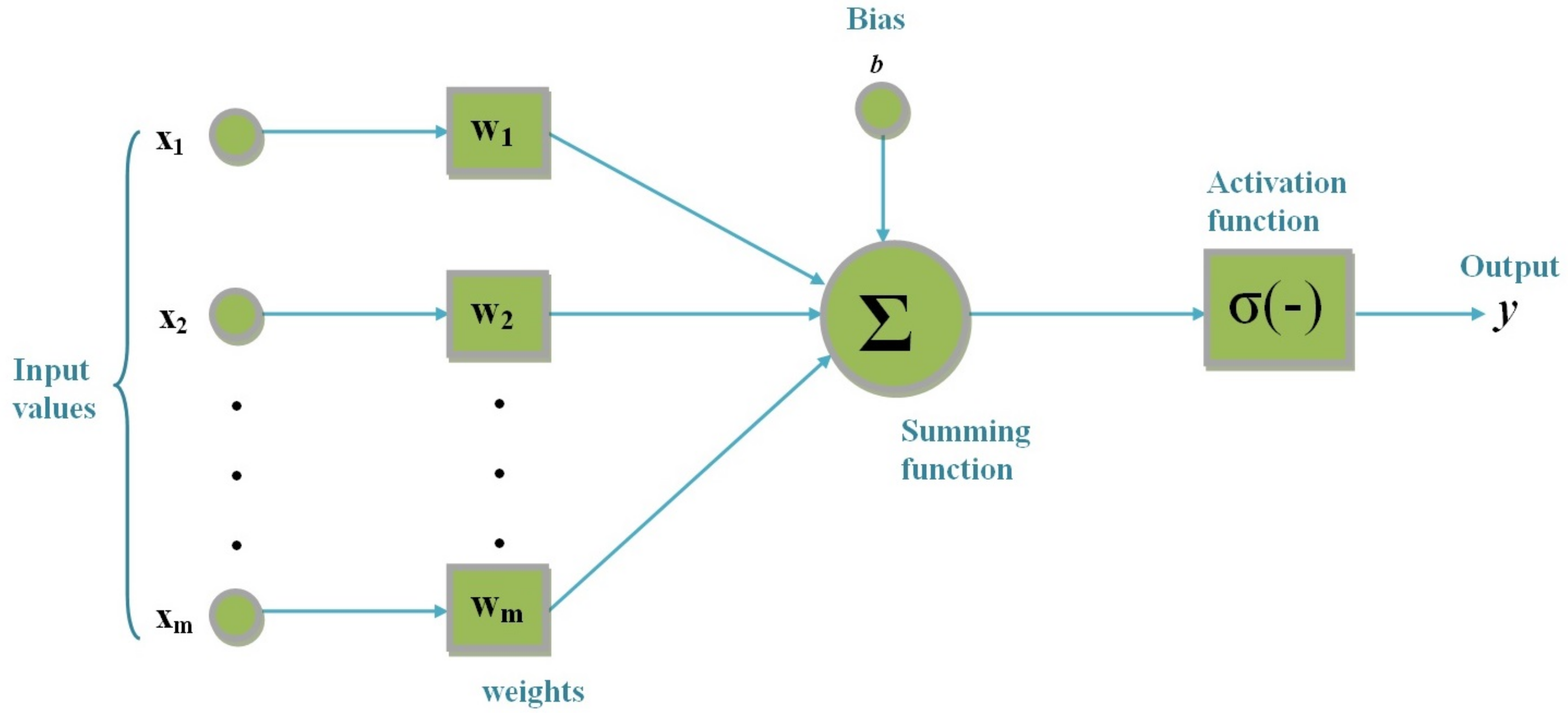

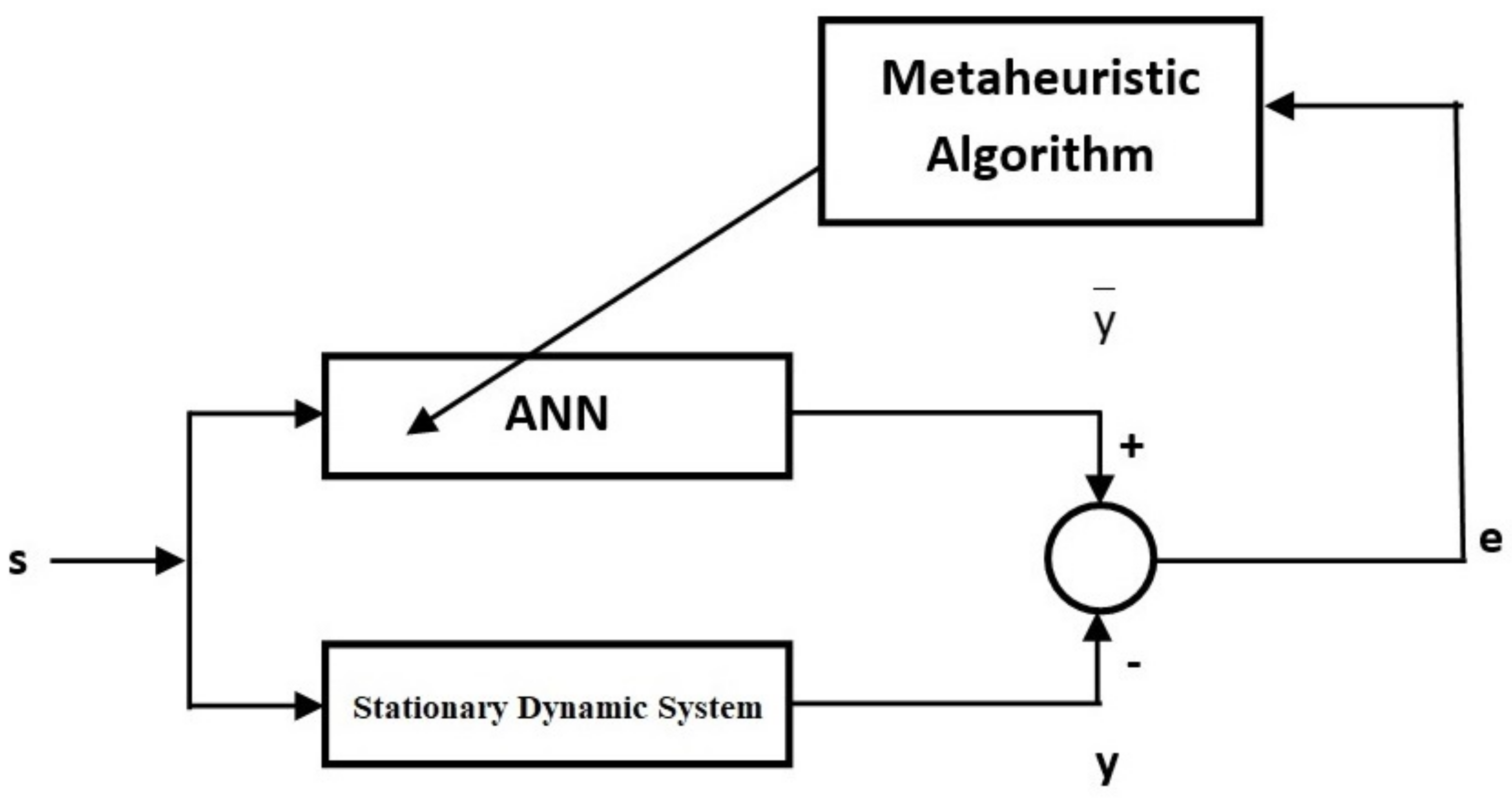

3. Artificial Neural Networks

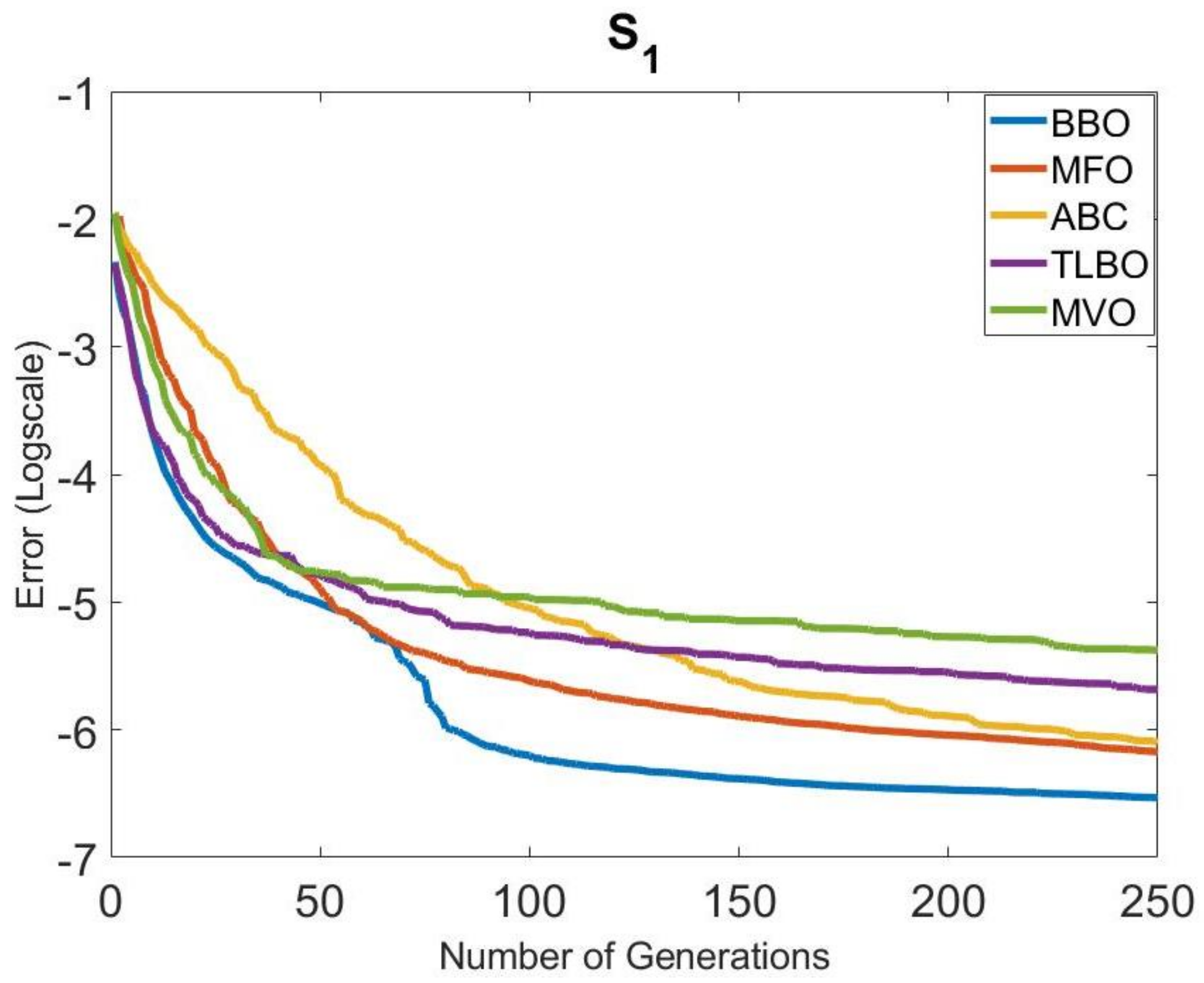

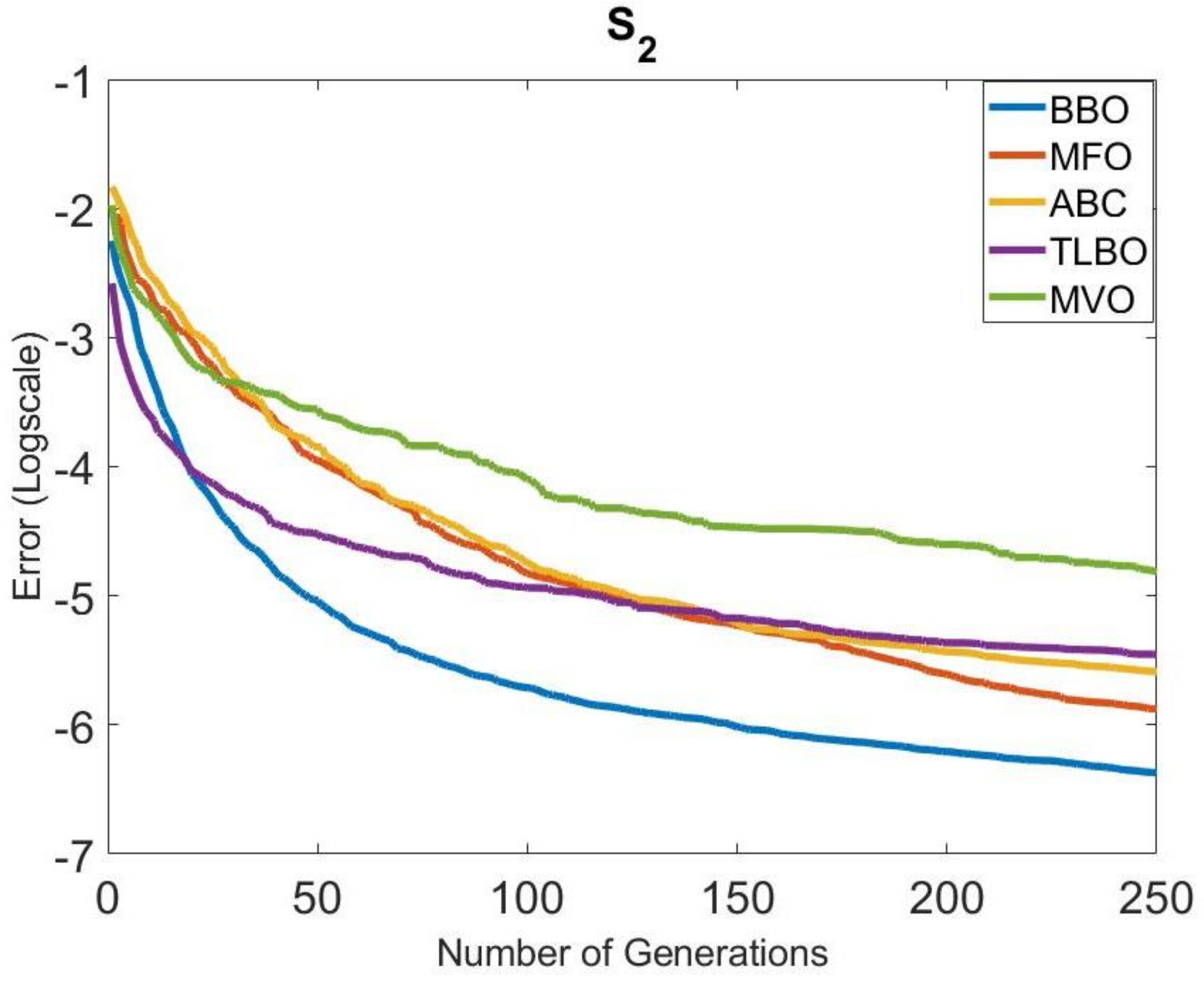
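To make the idea of metaheuristic-based training concrete, the following is a minimal sketch of how a feedforward network with one hidden layer (for example, the 1-5-1 structure that appears in the result tables) can be flattened into a single parameter vector and scored by an error function that any population-based optimizer can minimize. The sigmoid hidden activation, the linear output, the mean squared error, the toy target `y = x^2`, and the simple random search are all assumptions made for illustration; the random search is only a stand-in and is not one of the sixteen algorithms compared in the study.

```python
import math
import random


def n_params(n_in, n_hidden, n_out=1):
    """Number of weights and biases in an n_in-n_hidden-n_out network."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out


def forward(theta, x, n_in, n_hidden):
    """Evaluate an n_in-n_hidden-1 network whose parameters are the flat list theta."""
    idx = 0
    hidden = []
    for _ in range(n_hidden):
        s = sum(theta[idx + i] * x[i] for i in range(n_in))
        idx += n_in
        s += theta[idx]  # hidden-unit bias
        idx += 1
        s = max(-60.0, min(60.0, s))  # clamp to keep exp() in range
        hidden.append(1.0 / (1.0 + math.exp(-s)))  # sigmoid (assumed)
    out = sum(theta[idx + j] * hidden[j] for j in range(n_hidden))
    idx += n_hidden
    return out + theta[idx]  # linear output with bias (assumed)


def mse(theta, data, n_in, n_hidden):
    """Mean squared error of the network over (input, target) pairs."""
    return sum((forward(theta, x, n_in, n_hidden) - y) ** 2 for x, y in data) / len(data)


def random_search(data, n_in, n_hidden, iters=2000, seed=0):
    """Stand-in optimizer: perturb the best vector and keep improvements only."""
    rng = random.Random(seed)
    dim = n_params(n_in, n_hidden)
    best = [rng.uniform(-1, 1) for _ in range(dim)]
    best_err = mse(best, data, n_in, n_hidden)
    for _ in range(iters):
        cand = [w + rng.gauss(0, 0.1) for w in best]
        err = mse(cand, data, n_in, n_hidden)
        if err < best_err:
            best, best_err = cand, err
    return best, best_err


# Hypothetical toy data: 11 samples of y = x^2 on [0, 1].
toy_data = [([i / 10], (i / 10) ** 2) for i in range(11)]
theta, err = random_search(toy_data, n_in=1, n_hidden=5, iters=500)
print(f"parameters: {len(theta)}, training MSE: {err:.5f}")
```

Under this encoding, swapping in a different metaheuristic only changes how candidate vectors are generated; the objective `mse` stays the same, which is what makes a like-for-like comparison of training algorithms possible.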

4. Simulation Results

5. Discussion

6. Conclusions

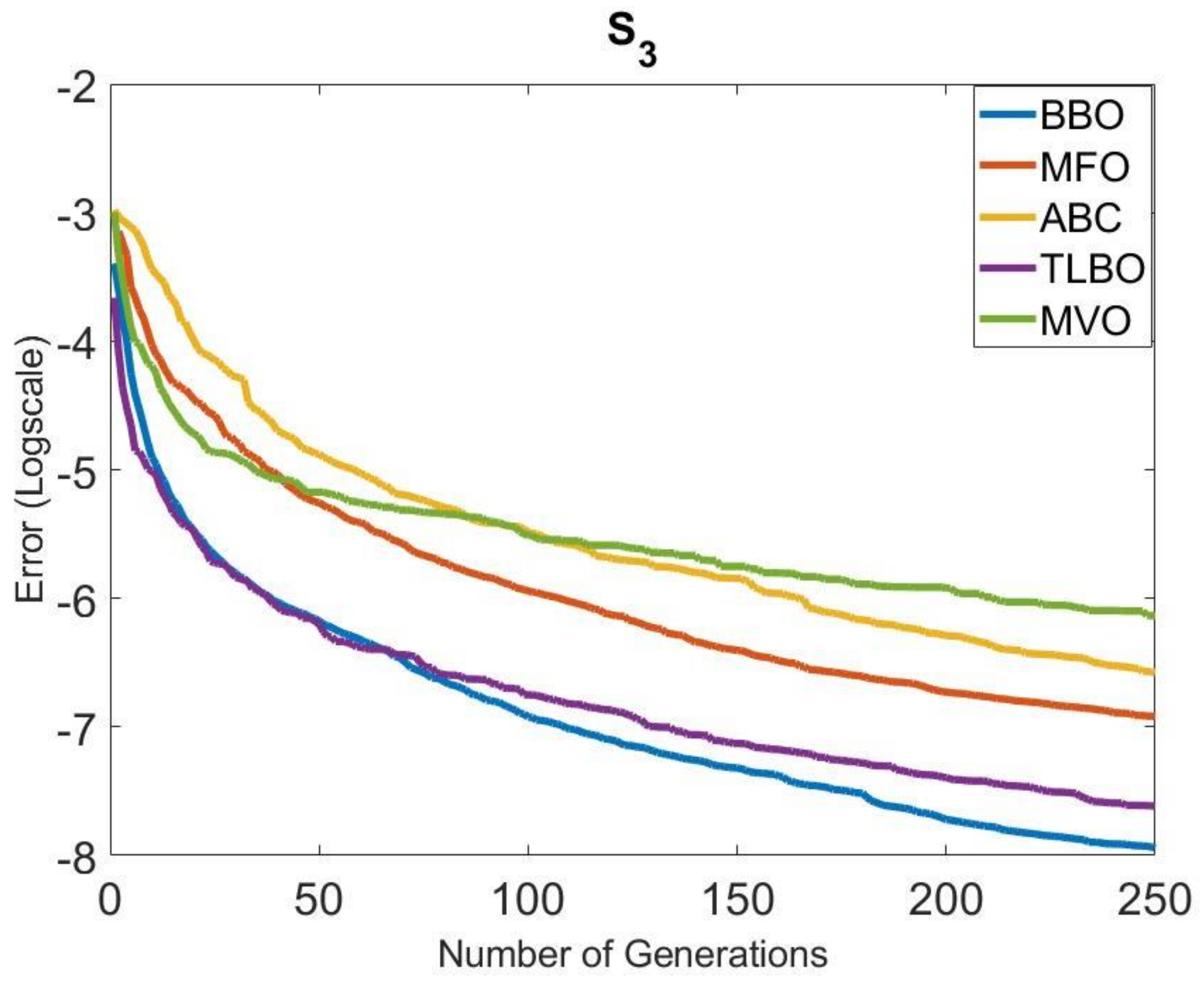

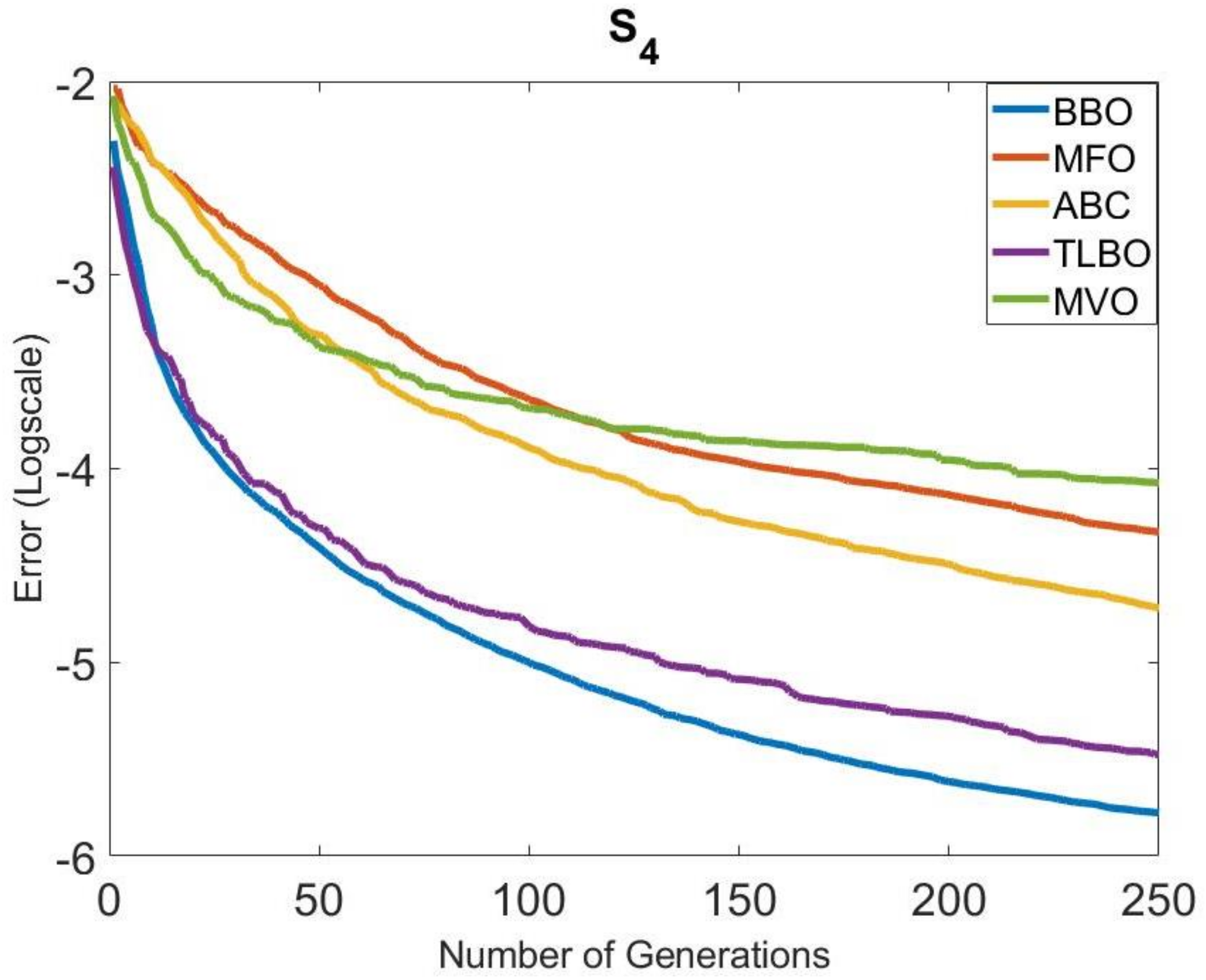

- The performances of the metaheuristic algorithms were examined in three groups. Group 1 contained BBO, MFO, ABC, TLBO, and MVO, which produced the most effective results. Group 2 contained CS, SSA, PSO, FPA, and SCA, which achieved acceptable results and outperformed Group 3. Group 3 contained WOA, BAT, HS, BSA, BA, and JAYA, which were ineffective in solving the problem considered. All rankings are valid within the stated limitations of the study.

- The type of nonlinear systems, network structures, and training/testing processes affected the performance of the algorithms.

- Nonlinear system identification is a difficult problem due to its structure. It was determined that most algorithms that were successful in solving numerical optimization problems did not show the same performance in system identification.

- The speed of convergence is also an important criterion. BBO achieved both a very good speed of convergence and a very good solution quality.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABC | Artificial bee colony |
| BAT | Bat algorithm |
| CS | Cuckoo search |
| FPA | Flower pollination algorithm |
| PSO | Particle swarm optimization |
| TLBO | Teaching–learning-based optimization |
| JAYA | Jaya algorithm |
| SCA | Sine-cosine algorithm |
| BBO | Biogeography-based optimization |
| WOA | Whale optimization algorithm |
| BSA | Bird swarm algorithm |
| HS | Harmony search |
| SSA | Salp swarm algorithm |
| BA | Bee algorithm |
| MFO | Moth-flame optimization |
| MVO | Multi-verse optimizer |
| ANNs | Artificial neural networks |
| FFNN | Feedforward neural network |


| System | Equation | Inputs | Output | Number of Training/Test Data |
|---|---|---|---|---|
| | | | y | 80/20 |
| | | | y | 80/20 |
| | | | y | 80/20 |
| | | | y | 173/43 |
| | | | | 200/50 |
| | | | | 200/50 |

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 1-5-1, 1-10-1, and 1-15-1. The numeric cells are missing from the source.]

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 2-5-1, 2-10-1, and 2-15-1. The numeric cells are missing from the source.]

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 2-5-1, 2-10-1, and 2-15-1. The numeric cells are missing from the source.]

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 3-5-1, 3-10-1, and 3-15-1. The numeric cells are missing from the source.]

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 3-5-1, 3-10-1, and 3-15-1. The numeric cells are missing from the source.]

[Table: Train and Test results (Mean and SD) for ABC, BAT, CS, FPA, PSO, JAYA, TLBO, SCA, BBO, WOA, BSA, HS, BA, MVO, MFO, and SSA, each with network structures 3-5-1, 3-10-1, and 3-15-1. The numeric cells are missing from the source.]

| Algorithm | NS | Mean | NS | Mean | NS | Mean | NS | Mean |
|---|---|---|---|---|---|---|---|---|
| ABC | 1-15-1 | | 2-10-1 | | 2-10-1 | | 3-10-1 | |
| BAT | 1-10-1 | | 2-15-1 | | 2-15-1 | | 3-5-1 | |
| CS | 1-15-1 | | 2-15-1 | | 2-5-1 | | 3-10-1 | |
| FPA | 1-15-1 | | 2-15-1 | | 2-5-1 | | 3-5-1 | |
| PSO | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| JAYA | 1-10-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| TLBO | 1-10-1 | | 2-10-1 | | 2-5-1 | | 3-10-1 | |
| SCA | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| BBO | 1-15-1 | | 2-15-1 | | 2-10-1 | | 3-10-1 | |
| WOA | 1-10-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| BSA | 1-10-1 | | 2-10-1 | | 2-15-1 | | 3-15-1 | |
| HS | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| BA | 1-15-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| MVO | 1-15-1 | | 2-15-1 | | 2-10-1 | | 3-15-1 | |
| MFO | 1-15-1 | | 2-15-1 | | 2-10-1 | | 3-15-1 | |
| SSA | 1-15-1 | | 2-15-1 | | 2-15-1 | | 3-15-1 | |

| Algorithm | NS | Mean | NS | Mean |
|---|---|---|---|---|
| ABC | 3-15-1 | | 3-15-1 | |
| BAT | 3-15-1 | | 3-15-1 | |
| CS | 3-10-1 | | 3-15-1 | |
| FPA | 3-10-1 | | 3-15-1 | |
| PSO | 3-5-1 | | 3-5-1 | |
| JAYA | 3-5-1 | | 3-10-1 | |
| TLBO | 3-10-1 | | 3-10-1 | |
| SCA | 3-5-1 | | 3-5-1 | |
| BBO | 3-5-1 | | 3-10-1 | |
| WOA | 3-5-1 | | 3-5-1 | |
| BSA | 3-15-1 | | 3-10-1 | |
| HS | 3-5-1 | | 3-5-1 | |
| BA | 3-10-1 | | 3-10-1 | |
| MVO | 3-15-1 | | 3-15-1 | |
| MFO | 3-15-1 | | 3-15-1 | |
| SSA | 3-15-1 | | 3-15-1 | |

| Algorithm | NS | Mean | NS | Mean | NS | Mean | NS | Mean |
|---|---|---|---|---|---|---|---|---|
| ABC | 1-15-1 | | 2-10-1 | | 2-5-1 | | 3-10-1 | |
| BAT | 1-10-1 | | 2-15-1 | | 2-15-1 | | 3-5-1 | |
| CS | 1-10-1 | | 2-10-1 | | 2-5-1 | | 3-5-1 | |
| FPA | 1-15-1 | | 2-10-1 | | 2-5-1 | | 3-15-1 | |
| PSO | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| JAYA | 1-15-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| TLBO | 1-10-1 | | 2-10-1 | | 2-10-1 | | 3-10-1 | |
| SCA | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| BBO | 1-15-1 | | 2-15-1 | | 2-10-1 | | 3-5-1 | |
| WOA | 1-10-1 | | 2-15-1 | | 2-5-1 | | 3-5-1 | |
| BSA | 1-10-1 | | 2-10-1 | | 2-15-1 | | 3-15-1 | |
| HS | 1-5-1 | | 2-5-1 | | 2-5-1 | | 3-5-1 | |
| BA | 1-10-1 | | 2-10-1 | | 2-5-1 | | 3-5-1 | |
| MVO | 1-15-1 | | 2-15-1 | | 2-15-1 | | 3-15-1 | |
| MFO | 1-15-1 | | 2-15-1 | | 2-10-1 | | 3-15-1 | |
| SSA | 1-15-1 | | 2-15-1 | | 2-15-1 | | 3-15-1 | |

| Algorithm | NS | Mean | NS | Mean |
|---|---|---|---|---|
| ABC | 3-15-1 | | 3-15-1 | |
| BAT | 3-15-1 | | 3-15-1 | |
| CS | 3-5-1 | | 3-15-1 | |
| FPA | 3-10-1 | | 3-15-1 | |
| PSO | 3-5-1 | | 3-5-1 | |
| JAYA | 3-5-1 | | 3-10-1 | |
| TLBO | 3-10-1 | | 3-10-1 | |
| SCA | 3-5-1 | | 3-5-1 | |
| BBO | 3-5-1 | | 3-10-1 | |
| WOA | 3-5-1 | | 3-5-1 | |
| BSA | 3-15-1 | | 3-10-1 | |
| HS | 3-5-1 | | 3-5-1 | |
| BA | 3-10-1 | | 3-10-1 | |
| MVO | 3-15-1 | | 3-15-1 | |
| MFO | 3-15-1 | | 3-15-1 | |
| SSA | 3-15-1 | | 3-15-1 | |

| System | ABC | BAT | CS | FPA | PSO | JAYA | TLBO | SCA | BBO | WOA | BSA | HS | BA | MVO | MFO | SSA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 4 | 16 | 5 | 7 | 9 | 15 | 8 | 11 | 3 | 10 | 13 | 14 | 12 | 2 | 1 | 6 |
| | 3 | 13 | 6 | 7 | 9 | 16 | 5 | 11 | 1 | 14 | 12 | 10 | 15 | 4 | 2 | 8 |
| | 9 | 11 | 5 | 6 | 8 | 16 | 1 | 10 | 2 | 11 | 13 | 13 | 15 | 4 | 6 | 3 |
| | 3 | 14 | 8 | 9 | 6 | 16 | 1 | 10 | 2 | 11 | 13 | 11 | 15 | 5 | 7 | 4 |
| | 2 | 11 | 7 | 8 | 9 | 16 | 4 | 10 | 1 | 12 | 14 | 13 | 15 | 5 | 3 | 6 |
| | 3 | 11 | 6 | 8 | 9 | 16 | 4 | 10 | 1 | 12 | 15 | 14 | 13 | 5 | 1 | 7 |
| TOTAL | 24 | 76 | 37 | 45 | 50 | 95 | 23 | 62 | 10 | 70 | 80 | 75 | 85 | 25 | 20 | 34 |
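The TOTAL row of the table above is the column-wise sum of the six per-system ranks, so a lower total means a better overall result. A minimal sketch that reproduces it is given below; the rank values are copied from the table, while the names `ALGORITHMS`, `RANKS`, and `total_ranks` are illustrative and do not come from the study.

```python
ALGORITHMS = ["ABC", "BAT", "CS", "FPA", "PSO", "JAYA", "TLBO", "SCA",
              "BBO", "WOA", "BSA", "HS", "BA", "MVO", "MFO", "SSA"]

# One row per system, in the order the rows appear in the table.
RANKS = [
    [4, 16, 5, 7, 9, 15, 8, 11, 3, 10, 13, 14, 12, 2, 1, 6],
    [3, 13, 6, 7, 9, 16, 5, 11, 1, 14, 12, 10, 15, 4, 2, 8],
    [9, 11, 5, 6, 8, 16, 1, 10, 2, 11, 13, 13, 15, 4, 6, 3],
    [3, 14, 8, 9, 6, 16, 1, 10, 2, 11, 13, 11, 15, 5, 7, 4],
    [2, 11, 7, 8, 9, 16, 4, 10, 1, 12, 14, 13, 15, 5, 3, 6],
    [3, 11, 6, 8, 9, 16, 4, 10, 1, 12, 15, 14, 13, 5, 1, 7],
]


def total_ranks(algorithms, rank_rows):
    """Sum each algorithm's rank over all systems (lower is better)."""
    return {name: sum(row[i] for row in rank_rows)
            for i, name in enumerate(algorithms)}


totals = total_ranks(ALGORITHMS, RANKS)
best = min(totals, key=totals.get)
print(best, totals[best])
```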

| System | ABC | BAT | CS | FPA | PSO | JAYA | TLBO | SCA | BBO | WOA | BSA | HS | BA | MVO | MFO | SSA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 2 | 16 | 5 | 7 | 7 | 15 | 5 | 11 | 2 | 10 | 13 | 14 | 12 | 2 | 1 | 9 |
| | 5 | 11 | 7 | 8 | 1 | 16 | 4 | 11 | 6 | 14 | 13 | 10 | 15 | 3 | 2 | 9 |
| | 3 | 11 | 4 | 8 | 1 | 16 | 2 | 10 | 4 | 12 | 15 | 14 | 12 | 6 | 9 | 6 |
| | 3 | 14 | 7 | 8 | 3 | 16 | 1 | 10 | 2 | 12 | 13 | 11 | 15 | 6 | 8 | 5 |
| | 3 | 11 | 7 | 8 | 9 | 16 | 4 | 10 | 1 | 12 | 14 | 13 | 15 | 5 | 2 | 6 |
| | 3 | 11 | 6 | 8 | 9 | 16 | 4 | 10 | 1 | 12 | 15 | 13 | 14 | 4 | 1 | 7 |
| TOTAL | 19 | 74 | 36 | 47 | 30 | 95 | 20 | 62 | 16 | 72 | 83 | 75 | 83 | 26 | 23 | 42 |

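| Order | Algorithm | Train Ranking | Test Ranking | Total Ranking | The Group |
|---|---|---|---|---|---|
| 1 | BBO | 10 | 16 | 26 | Group 1: The most successful algorithms |
| 2 | MFO | 20 | 23 | 43 | Group 1: The most successful algorithms |
| 2 | ABC | 24 | 19 | 43 | Group 1: The most successful algorithms |
| 2 | TLBO | 23 | 20 | 43 | Group 1: The most successful algorithms |
| 3 | MVO | 25 | 26 | 51 | Group 1: The most successful algorithms |
| 4 | CS | 37 | 36 | 73 | Group 2: Moderately successful algorithms |
| 5 | SSA | 34 | 42 | 76 | Group 2: Moderately successful algorithms |
| 6 | PSO | 50 | 30 | 80 | Group 2: Moderately successful algorithms |
| 7 | FPA | 45 | 47 | 92 | Group 2: Moderately successful algorithms |
| 8 | SCA | 62 | 62 | 124 | Group 2: Moderately successful algorithms |
| 9 | WOA | 70 | 72 | 142 | Group 3: The most unsuccessful algorithms |
| 10 | BAT | 76 | 74 | 150 | Group 3: The most unsuccessful algorithms |
| 10 | HS | 75 | 75 | 150 | Group 3: The most unsuccessful algorithms |
| 11 | BSA | 80 | 83 | 163 | Group 3: The most unsuccessful algorithms |
| 12 | BA | 85 | 83 | 168 | Group 3: The most unsuccessful algorithms |
| 13 | JAYA | 95 | 95 | 190 | Group 3: The most unsuccessful algorithms |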
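The Order column can be recovered from the train and test totals: the two totals are added, and algorithms with equal sums share the same position. The sketch below illustrates this with the values from the table; the function name `overall_order` and the tie handling (consecutive positions with no gaps after a tie) are assumptions inferred from the table, not a procedure stated in the study.

```python
TRAIN = {"BBO": 10, "MFO": 20, "ABC": 24, "TLBO": 23, "MVO": 25, "CS": 37,
         "SSA": 34, "PSO": 50, "FPA": 45, "SCA": 62, "WOA": 70, "BAT": 76,
         "HS": 75, "BSA": 80, "BA": 85, "JAYA": 95}
TEST = {"BBO": 16, "MFO": 23, "ABC": 19, "TLBO": 20, "MVO": 26, "CS": 36,
        "SSA": 42, "PSO": 30, "FPA": 47, "SCA": 62, "WOA": 72, "BAT": 74,
        "HS": 75, "BSA": 83, "BA": 83, "JAYA": 95}


def overall_order(train, test):
    """Return (order, algorithm, total) tuples; equal totals share an order."""
    totals = {name: train[name] + test[name] for name in train}
    distinct = sorted(set(totals.values()))
    order_of = {score: pos + 1 for pos, score in enumerate(distinct)}
    return sorted((order_of[t], name, t) for name, t in totals.items())


ranking = overall_order(TRAIN, TEST)
for order, name, total in ranking:
    print(order, name, total)
```

The group boundaries (Group 1 up to a total of 51, Group 2 up to 124) are taken from the table as given; the sketch does not derive them.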

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kaya, E. A Comprehensive Comparison of the Performance of Metaheuristic Algorithms in Neural Network Training for Nonlinear System Identification. Mathematics 2022, 10, 1611. https://doi.org/10.3390/math10091611