Self-Adaptive Method and Inertial Modification for Solving the Split Feasibility Problem and Fixed-Point Problem of Quasi-Nonexpansive Mapping

Abstract

1. Introduction


2. Preliminaries

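Let $H$ be a real Hilbert space and let $T: H \to H$ be a mapping with fixed-point set $\operatorname{Fix}(T) = \{x \in H : Tx = x\}$. Then $T$ is called:

- (i) A nonexpansive mapping if $\|Tx - Ty\| \le \|x - y\|$ for any $x, y \in H$;

- (ii) A quasi-nonexpansive mapping if $\operatorname{Fix}(T) \neq \emptyset$ and $\|Tx - p\| \le \|x - p\|$ for every $x \in H$, $p \in \operatorname{Fix}(T)$;

- (iii) A firmly nonexpansive mapping if $\|Tx - Ty\|^2 \le \langle Tx - Ty, x - y \rangle$ for any $x, y \in H$;

- (iv) A Lipschitz continuous mapping if there is $L > 0$ such that $\|Tx - Ty\| \le L\|x - y\|$ for any $x, y \in H$;

- (v) A contraction mapping if there exists $\rho \in [0, 1)$ such that $\|Tx - Ty\| \le \rho\|x - y\|$ for any $x, y \in H$.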
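The definitions above can be probed numerically. The following is a minimal Python sketch, not taken from the paper; helper names such as `proj_ball` and `is_firmly_nonexpansive_on_samples` are hypothetical. It checks inequality (iii) for the metric projection onto the unit ball of $\mathbb{R}^3$ on random pairs, and uses the standard textbook map $T(x) = \frac{x}{2}\sin\frac{1}{x}$, $T(0) = 0$, to show that (ii) is strictly weaker than (i). Random sampling gives evidence only, not a proof.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def proj_ball(x, center, radius):
    """Metric projection of x onto the closed ball B(center, radius) in R^d."""
    diff = sub(x, center)
    dist = math.sqrt(dot(diff, diff))
    if dist <= radius:
        return list(x)
    return [c + radius * d / dist for c, d in zip(center, diff)]


def proj_unit_ball(x):
    return proj_ball(x, center=[0.0] * len(x), radius=1.0)


def is_firmly_nonexpansive_on_samples(P, dim, trials=1000, tol=1e-12, seed=0):
    """Test ||Px - Py||^2 <= <Px - Py, x - y> on random pairs (evidence, not a proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        y = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        dp = sub(P(x), P(y))
        if dot(dp, dp) > dot(dp, sub(x, y)) + tol:
            return False
    return True


def T(x):
    """Scalar map T(x) = (x/2) sin(1/x), T(0) = 0; its only fixed point is 0."""
    return 0.0 if x == 0 else 0.5 * x * math.sin(1.0 / x)


# (iii), hence (i): projections onto closed convex sets are firmly nonexpansive.
print(is_firmly_nonexpansive_on_samples(proj_unit_ball, dim=3))  # True

# (ii): |T(x) - 0| <= |x - 0| for all x because |sin| <= 1, and Fix(T) = {0}.
print(all(abs(T(t)) <= abs(t) for t in [k / 100 - 5 for k in range(1001)]))  # True

# ...but T is not nonexpansive: near x = 1/(2*pi) its slope is about -pi.
x, y = 1 / (2 * math.pi), 1 / (2 * math.pi + 0.1)
print(abs(T(x) - T(y)) / abs(x - y))  # roughly 3.1, i.e. greater than 1
```

Since $\frac{1}{2}\sin\frac{1}{x} = 1$ has no solution, $\operatorname{Fix}(T) = \{0\}$, so (ii) only compares $Tx$ with the single point $0$, while (i) compares every pair of points.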

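Let $\{s_n\}$ be a sequence of nonnegative real numbers such that $s_{n+1} \le (1 - \gamma_n)s_n + \gamma_n \delta_n$ and $s_{n+1} \le s_n - \eta_n + \alpha_n$ for all $n \ge 0$, where $\{\gamma_n\} \subset (0, 1)$, $\{\eta_n\}$ is a sequence of nonnegative real numbers, and $\{\delta_n\}$ and $\{\alpha_n\}$ are real sequences satisfying:

- (1) $\sum_{n=0}^{\infty} \gamma_n = \infty$;

- (2) $\lim_{n \to \infty} \alpha_n = 0$;

- (3) $\lim_{k \to \infty} \eta_{n_k} = 0$ implies $\limsup_{k \to \infty} \delta_{n_k} \le 0$, where $\{n_k\}$ is a subsequence of $\{n\}$.

Then $\lim_{n \to \infty} s_n = 0$.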

3. Main Results


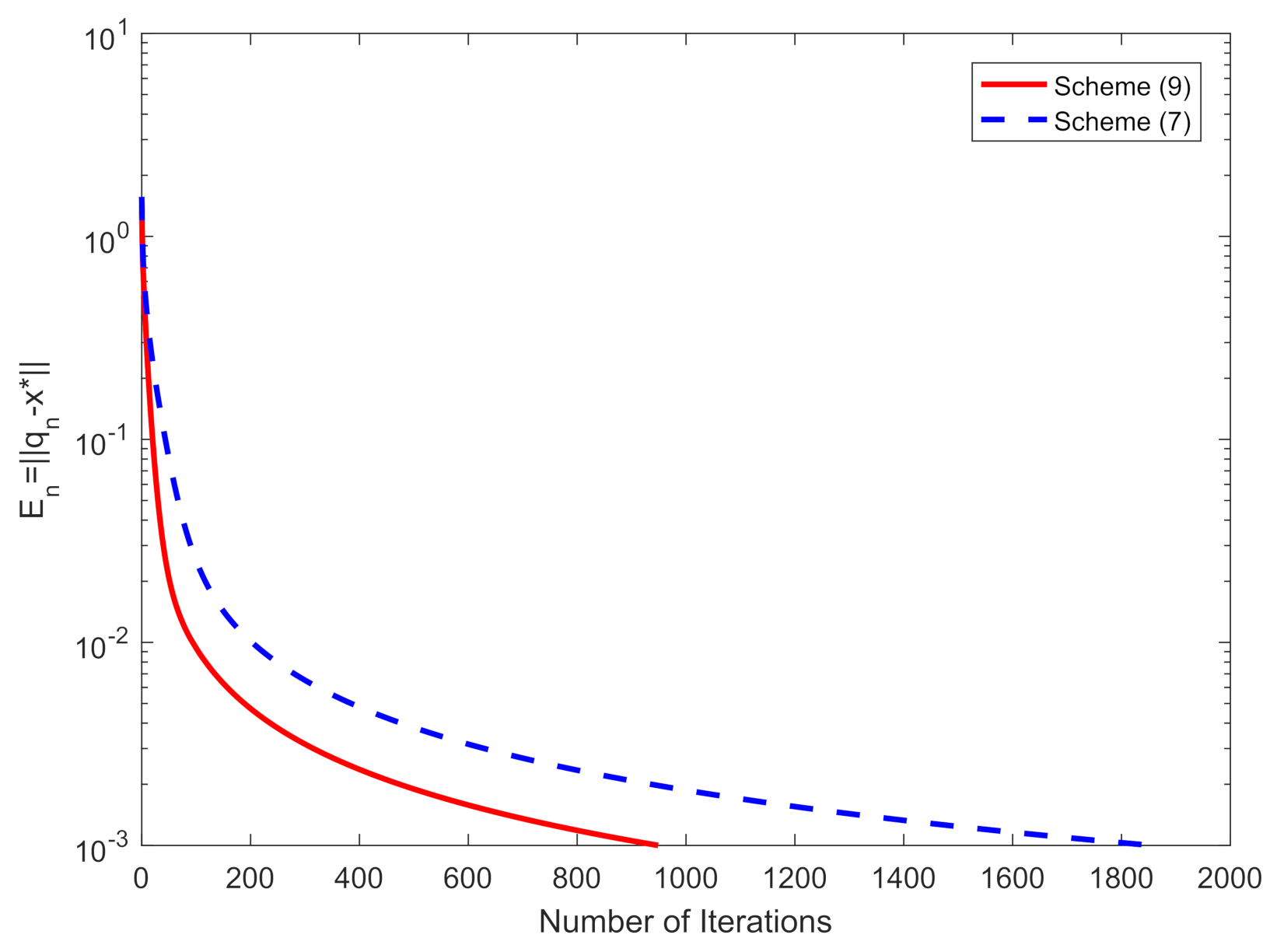

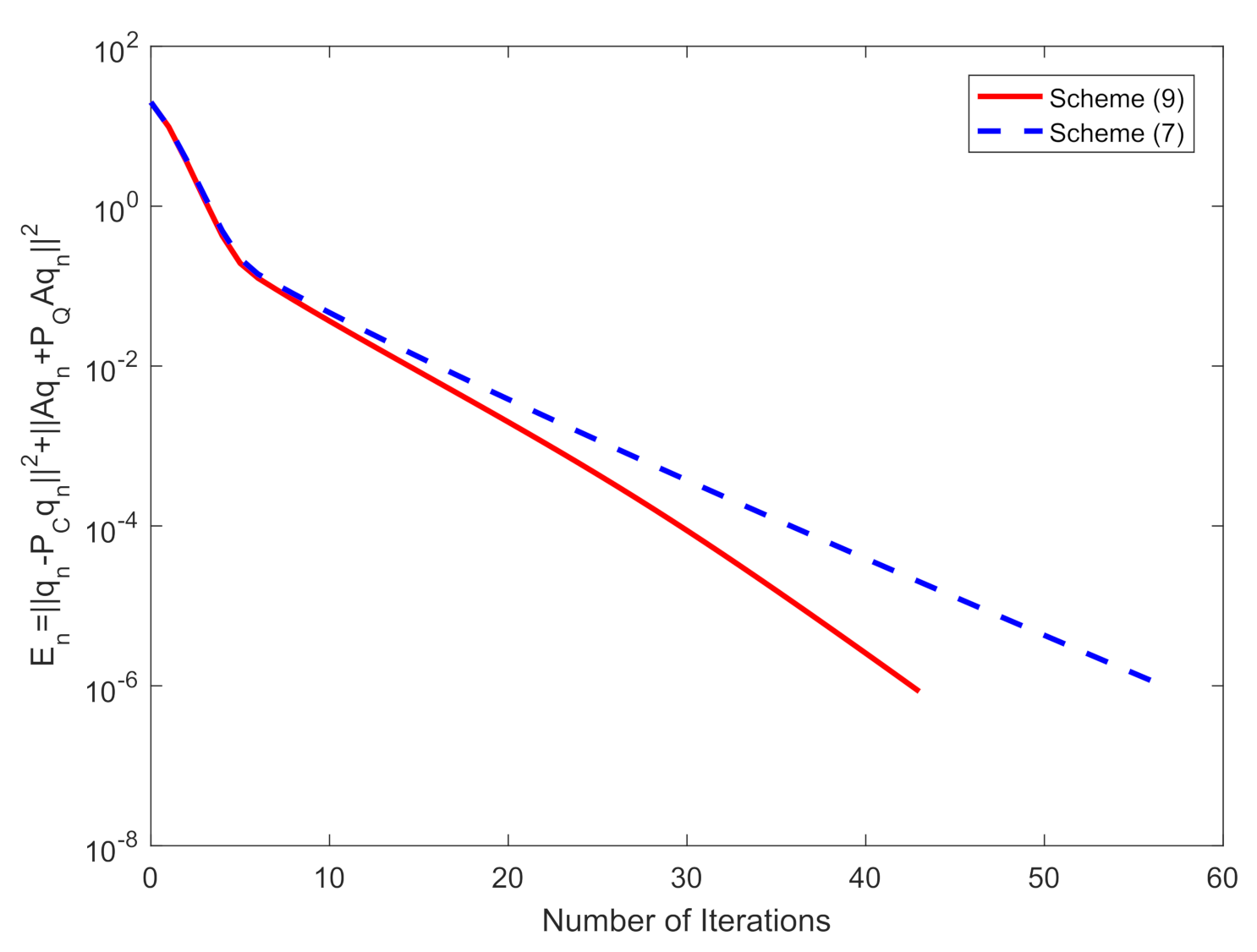

4. Numerical Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algor. 1994, 8, 221–239.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Xu, H.K. Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 2010, 26, 105018.

- López, G.; Martín-Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004.

- Qin, X.; Wang, L. A fixed point method for solving a split feasibility problem in Hilbert spaces. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 2019, 113, 315–325.

- Kraikaew, R.; Saejung, S. A simple look at the method for solving split feasibility problems in Hilbert spaces. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 2020, 114, 117.

- Kesornprom, S.; Pholasa, N.; Cholamjiak, P. On the convergence analysis of the gradient-CQ algorithms for the split feasibility problem. Numer. Algor. 2020, 84, 997–1017.

- Dong, Q.L.; He, S.; Rassias, T.M. General splitting methods with linearization for the split feasibility problem. J. Global Optim. 2021, 79, 813–836.

- Shehu, Y.; Dong, Q.L.; Liu, L.L. Global and linear convergence of alternated inertial methods for split feasibility problems. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 2021, 115, 53.

- Yang, J.; Liu, H. Strong convergence result for solving monotone variational inequalities in Hilbert space. Numer. Algor. 2019, 80, 741–752.

- Kraikaew, R.; Saejung, S. Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 2014, 163, 399–412.

- Xu, H.K. Averaged mappings and the gradient-projection algorithm. J. Optim. Theory Appl. 2011, 150, 360–378.

- Wang, Y.; Yuan, M.; Jiang, B. Multi-step inertial hybrid and shrinking Tseng’s algorithm with Meir-Keeler contractions for variational inclusion problems. Mathematics 2021, 9, 1548.

- Jiang, B.; Wang, Y.; Yao, J.C. Multi-step inertial regularized methods for hierarchical variational inequality problems involving generalized Lipschitzian mappings. Mathematics 2021, 9, 2103.

- He, S.; Yang, C. Solving the variational inequality problem defined on intersection of finite level sets. Abstr. Appl. Anal. 2013, 2013, 942315.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Tian, M.; Xu, G. Inertial modified Tseng’s extragradient algorithms for solving monotone variational inequalities and fixed point problems. J. Nonlinear Funct. Anal. 2020, 2020, 35.

- Wang, Y.H.; Xia, Y.H. Strong convergence for asymptotically pseudocontractions with the demiclosedness principle in Banach spaces. Fixed Point Theory Appl. 2012, 2012, 45.

| | | | | | | |
|---|---|---|---|---|---|---|
| 0 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.5675 × 10 |
| 10 | 0.2146 | 0.1868 | 0.2938 | 0.4159 | 0.8249 | 2.5806 × 10 |
| 50 | 0.0704 | 0.1281 | 0.2543 | 0.4935 | 0.9827 | 2.0782 × 10 |
| 100 | 0.0658 | 0.1263 | 0.2519 | 0.4971 | 0.9921 | 9.3812 × 10 |
| 500 | 0.0632 | 0.1253 | 0.2504 | 0.4994 | 0.9984 | 1.8944 × 10 |
| 1000 | 0.0628 | 0.1251 | 0.2502 | 0.4997 | 0.9992 | 9.4829 × 10 |
| 5000 | 0.0626 | 0.1250 | 0.2500 | 0.4999 | 0.9998 | 1.8983 × 10 |
| 10000 | 0.0625 | 0.1250 | 0.2500 | 0.5000 | 0.9999 | 9.4925 × 10 |

| m | s | p | Scheme (9) Iter. | Scheme (9) Time (s) | Scheme (7) Iter. | Scheme (7) Time (s) |
|---|---|---|---|---|---|---|
| 240 | 1024 | 30 | 40 | 0.0584 | 181 | 1.7113 |
| 480 | 2048 | 60 | 98 | 0.0933 | 337 | 13.8633 |
| 720 | 3072 | 90 | 142 | 0.1578 | 455 | 50.5543 |
| 960 | 4096 | 120 | 117 | 0.2073 | 544 | 138.2107 |
| 1200 | 5120 | 150 | 246 | 0.3534 | 795 | 706.2521 |
| 1440 | 6144 | 180 | 291 | 0.5483 | 883 | 1029.8199 |

| Scheme (9) Iter. | Scheme (9) Time (s) | Scheme (7) Iter. | Scheme (7) Time (s) |
|---|---|---|---|
| 43 | 0.0413 | 57 | 0.0261 |
| 37 | 0.0406 | 55 | 0.0249 |
| 10 | 0.0338 | 25 | 0.0146 |
| 21 | 0.0363 | 46 | 0.0208 |
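Iteration counts and CPU times like those tabulated above come from running an SFP solver until a stopping tolerance is met. As an orientation aid only, the following Python sketch implements a generic inertial CQ iteration with the self-adaptive step size of López et al., $\tau_n = \rho f(w_n)/\|\nabla f(w_n)\|^2$ with $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$ and $\rho \in (0, 4)$, which avoids computing $\|A\|$. It is not Scheme (9) or Scheme (7) and omits the quasi-nonexpansive fixed-point component; the box $C$, the ball $Q$, the inertial rule $\theta_n\|x_n - x_{n-1}\| \le 1/(n+1)^2$, the stopping test, and all function names are illustrative assumptions.

```python
import math


def matvec(A, x):
    """Dense matrix-vector product A x, with A given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, y):
    """Transpose product A^T y."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def proj_box(x, lo=-1.0, hi=1.0):
    """P_C for the box C = [lo, hi]^n (hypothetical choice of C)."""
    return [min(max(t, lo), hi) for t in x]


def make_proj_ball(center, radius):
    """Return P_Q for the closed ball Q = B(center, radius) (hypothetical choice of Q)."""
    def proj(y):
        diff = [a - c for a, c in zip(y, center)]
        d = norm(diff)
        if d <= radius:
            return list(y)
        return [c + radius * t / d for c, t in zip(center, diff)]
    return proj


def inertial_self_adaptive_cq(A, proj_C, proj_Q, x0, rho=2.0, theta=0.3,
                              tol=1e-10, max_iter=10_000):
    """Sketch of an inertial CQ iteration with a Lopez-et-al.-type step size.

    w_n     = x_n + theta_n (x_n - x_{n-1})
    x_{n+1} = P_C(w_n - tau_n grad f(w_n)),  grad f(x) = A^T (I - P_Q) A x
    """
    x_prev, x = list(x0), list(x0)
    for n in range(max_iter):
        # Assumed inertial rule: theta_n * ||x_n - x_{n-1}|| <= 1/(n+1)^2 (summable).
        gap = norm([a - b for a, b in zip(x, x_prev)])
        th = theta if gap == 0.0 else min(theta, 1.0 / ((n + 1) ** 2 * gap))
        w = [a + th * (a - b) for a, b in zip(x, x_prev)]
        Aw = matvec(A, w)
        r = [a - p for a, p in zip(Aw, proj_Q(Aw))]   # (I - P_Q) A w
        grad = rmatvec(A, r)                           # A^T (I - P_Q) A w
        g2 = sum(g * g for g in grad)
        if g2 == 0.0:
            # For a consistent SFP, grad f(w) = 0 forces f(w) = 0, i.e. A w in Q.
            x_new = proj_C(w)
        else:
            tau = rho * 0.5 * sum(t * t for t in r) / g2  # no estimate of ||A|| needed
            x_new = proj_C([a - tau * g for a, g in zip(w, grad)])
        step = norm([a - b for a, b in zip(x_new, x)])
        x_prev, x = x, x_new
        if step < tol:
            return x, n + 1
    return x, max_iter


# Hypothetical test problem: a known x_star in C with A x_star at the centre of Q.
A = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
x_star = [0.2, -0.3, 0.5, 0.1]
proj_Q = make_proj_ball(matvec(A, x_star), 0.5)
x, iters = inertial_self_adaptive_cq(A, proj_box, proj_Q, [3.0, -2.0, 4.0, 1.0])
Ax = matvec(A, x)
print(iters, norm([a - b for a, b in zip(Ax, proj_Q(Ax))]))  # iterations, dist(Ax, Q)
```

With $\rho = 2$ the gradient step is exactly the projection of $w_n$ onto the half-space $\{z : \langle (I - P_Q)Aw_n, Az - P_Q(Aw_n) \rangle \le 0\}$, which contains $A^{-1}(Q)$; this is one way to see why the self-adaptive rule needs no knowledge of $\|A\|$.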

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Xu, T.; Yao, J.-C.; Jiang, B. Self-Adaptive Method and Inertial Modification for Solving the Split Feasibility Problem and Fixed-Point Problem of Quasi-Nonexpansive Mapping. Mathematics 2022, 10, 1612. https://doi.org/10.3390/math10091612
