An Information-Reserved and Deviation-Controllable Binary Neural Network for Object Detection

Abstract

1. Introduction

- We propose a method with multiple entropy constraints, namely information entropy and relative entropy, to improve information retention in the binary network.

- We propose a dynamic scaling factor that controls the deviation between the binary network and the full-precision network, bringing the performance of the binary network closer to that of the full-precision network.

- We optimize the binary network simultaneously from the perspectives of both information retention and deviation control for effective object detection.

- We evaluate IR-DC Net on the PASCAL VOC, COCO, KITTI, and VisDrone datasets for a comprehensive comparison with state-of-the-art binary networks in object detection.
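The first contribution relies on two quantities, information entropy and relative entropy. The formulas of Section 4.1 are not reproduced in this text, so the exact loss terms of IR-DC Net are unknown here. The sketch below only illustrates the standard definitions, under two assumptions of ours: entropy is taken over the +1/−1 value distribution of a binarized tensor, and relative entropy is the KL divergence between the softmax outputs of a full-precision network and a binary network. All function names are hypothetical.

```python
import numpy as np


def binary_entropy(b: np.ndarray) -> float:
    """Entropy (in bits) of the +1/-1 value distribution of a binarized tensor.

    Assumption: entropy is measured over the sign distribution only.
    It is 1 bit when +1 and -1 are equally frequent and 0 when all
    entries share the same sign.
    """
    p = float(np.mean(b > 0))  # fraction of +1 entries
    if p == 0.0 or p == 1.0:
        return 0.0
    return float(-(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p)))


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    """Row-wise softmax; subtracting the max keeps exp() numerically stable."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def kl_divergence(fp_logits, bin_logits, eps: float = 1e-12) -> float:
    """Batch-mean KL(P || Q).

    Assumption: P comes from the full-precision network and Q from the
    binary network; eps guards against log(0).
    """
    p = softmax(np.asarray(fp_logits, dtype=float))
    q = softmax(np.asarray(bin_logits, dtype=float))
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)))


if __name__ == "__main__":
    print(binary_entropy(np.array([1, -1, 1, -1])))  # 1.0
    print(binary_entropy(np.ones(4)))                # 0.0
    logits = np.array([[2.0, 0.5, -1.0]])
    print(kl_divergence(logits, logits))             # ~0.0
```

The binary entropy peaks at 1 bit when +1 and −1 are equally frequent, which is why information-retention methods such as IR-Net try to keep binarized weights balanced; the KL term is zero only when the two output distributions coincide.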

2. Related work

2.1. Binary Convolutional Neural Network

2.2. Object Detection

3. Preliminaries

4. Proposed Method

4.1. Information Retention with Multi-Entropy

4.2. Deviation Control with Dynamic Scale Factor

5. Experiments

5.1. Datasets

5.2. Implementation Details

5.3. Ablation Study

5.3.1. Effect of Information Retention

5.3.2. Effect of KL-Divergence Loss

5.3.3. Effect of Dynamic Scale Factor

6. Discussion

6.1. The Results on PASCAL VOC

6.2. The Results on COCO2014

6.3. The Results on KITTI

6.4. The Results on VisDrone2019

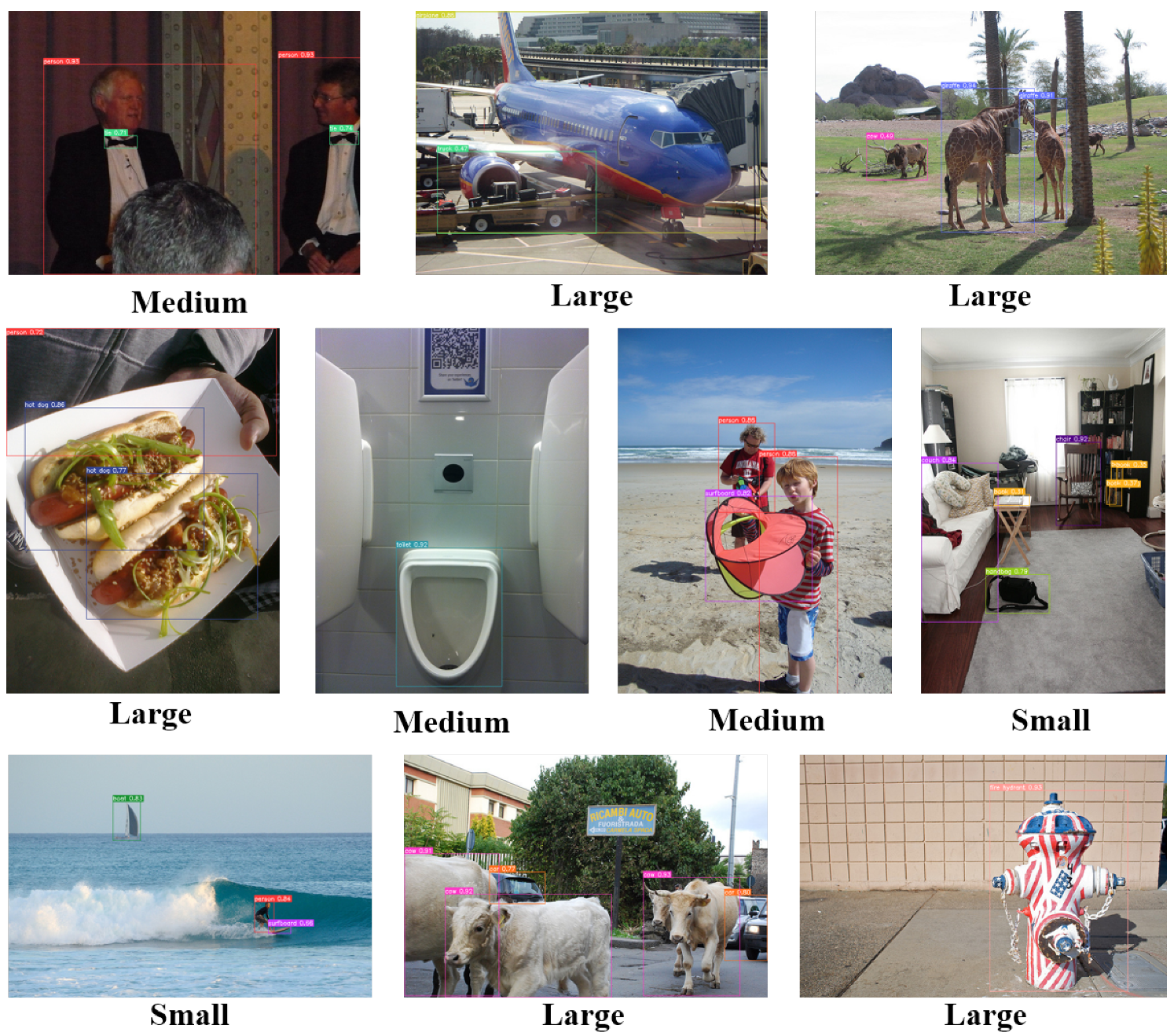

6.5. Visualization Results

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Szeliski, R. Computer Vision: Algorithms and Applications, 2nd ed.; Texts in Computer Science; Springer: Berlin/Heidelberg, Germany, 2022.

- Wu, Y.; Wu, Y.; Gong, R.; Lv, Y.; Chen, K.; Liang, D.; Hu, X.; Liu, X.; Yan, J. Rotation Consistent Margin Loss for Efficient Low-Bit Face Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6865–6875.

- Boutros, F.; Siebke, P.; Klemt, M.; Damer, N.; Kirchbuchner, F.; Kuijper, A. PocketNet: Extreme Lightweight Face Recognition Network Using Neural Architecture Search and Multistep Knowledge Distillation. IEEE Access 2022, 10, 46823–46833.

- Li, Z.; Zhou, A.; Pu, J.; Yu, J. Multi-Modal Neural Feature Fusion for Automatic Driving Through Perception-Aware Path Planning. IEEE Access 2021, 9, 142782–142794.

- Chen, T.; Lu, M.; Yan, W.; Fan, Y. 3D LiDAR Automatic Driving Environment Detection System Based on MobileNetv3-YOLOv4. In Proceedings of the IEEE International Conference on Consumer Electronics, ICCE 2022, Las Vegas, NV, USA, 7–9 January 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–2.

- Wang, Q.; Bhowmik, N.; Breckon, T.P. Multi-Class 3D Object Detection Within Volumetric 3D Computed Tomography Baggage Security Screening Imagery. arXiv 2020, arXiv:2008.01218.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Peng, H.; Wu, J.; Zhang, Z.; Chen, S.; Zhang, H. Deep Network Quantization via Error Compensation. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 4960–4970.

- Liang, T.; Glossner, J.; Wang, L.; Shi, S.; Zhang, X. Pruning and quantization for deep neural network acceleration: A survey. Neurocomputing 2021, 461, 370–403.

- Wang, Z.; Lu, J.; Zhou, J. Learning Channel-Wise Interactions for Binary Convolutional Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3432–3445.

- Qin, Z.; Li, Z.; Zhang, Z.; Bao, Y.; Yu, G.; Peng, Y.; Sun, J. ThunderNet: Towards Real-Time Generic Object Detection on Mobile Devices. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6717–6726.

- Sandler, M.; Howard, A.G.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; IEEE Computer Society: Piscataway, NJ, USA, 2018; pp. 4510–4520.

- Molchanov, P.; Mallya, A.; Tyree, S.; Frosio, I.; Kautz, J. Importance Estimation for Neural Network Pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 11264–11272.

- Zhao, C.; Ni, B.; Zhang, J.; Zhao, Q.; Zhang, W.; Tian, Q. Variational Convolutional Neural Network Pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2780–2789.

- Liu, C.; Ding, W.; Xia, X.; Zhang, B.; Gu, J.; Liu, J.; Ji, R.; Doermann, D.S. Circulant Binary Convolutional Networks: Enhancing the Performance of 1-Bit DCNNs With Circulant Back Propagation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2691–2699.

- Xu, Y.; Dong, X.; Li, Y.; Su, H. A Main/Subsidiary Network Framework for Simplifying Binary Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7154–7162.

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. In Proceedings of the Computer Vision–ECCV 2016–14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part IV. Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9908, pp. 525–542.

- Hou, L.; Yao, Q.; Kwok, J.T. Loss-aware Binarization of Deep Networks. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017.

- Martínez, B.; Yang, J.; Bulat, A.; Tzimiropoulos, G. Training binary neural networks with real-to-binary convolutions. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

- Ding, R.; Chin, T.; Liu, Z.; Marculescu, D. Regularizing Activation Distribution for Training Binarized Deep Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 11408–11417.

- Gu, J.; Zhao, J.; Jiang, X.; Zhang, B.; Liu, J.; Guo, G.; Ji, R. Bayesian Optimized 1-Bit CNNs. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4908–4916.

- Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.M.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Washington, DC, USA, 16–21 June 2012.

- Wen, L.; Du, D.; Zhu, P.; Hu, Q.; Wang, Q.; Bo, L.; Lyu, S. Detection, Tracking, and Counting Meets Drones in Crowds: A Benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7812–7821.

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks: Training Deep Neural Networks with Weights and Activations Constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

- Liu, Z.; Wu, B.; Luo, W.; Yang, X.; Liu, W.; Cheng, K. Bi-Real Net: Enhancing the Performance of 1-Bit CNNs with Improved Representational Capability and Advanced Training Algorithm. In Proceedings of the Computer Vision–ECCV 2018–15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part XV. Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11219, pp. 747–763.

- Qin, H.; Gong, R.; Liu, X.; Shen, M.; Wei, Z.; Yu, F.; Song, J. Forward and Backward Information Retention for Accurate Binary Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2247–2256.

- Liu, Z.; Shen, Z.; Savvides, M.; Cheng, K. ReActNet: Towards Precise Binary Neural Network with Generalized Activation Functions. In Proceedings of the Computer Vision–ECCV 2020–16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIV. Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12359, pp. 143–159.

- Wang, Z.; Wu, Z.; Lu, J.; Zhou, J. BiDet: An Efficient Binarized Object Detector. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2046–2055.

- Jiang, X.; Wang, N.; Xin, J.; Li, K.; Yang, X.; Gao, X. Training Binary Neural Network without Batch Normalization for Image Super-Resolution. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, 2–9 February 2021; AAAI Press: Washington, DC, USA, 2021; pp. 1700–1707.

- Zhuang, B.; Shen, C.; Tan, M.; Chen, P.; Liu, L.; Reid, I. Structured Binary Neural Networks for Image Recognition. Int. J. Comput. Vis. 2022, 130, 2081–2102.

- Jing, W.; Zhang, X.; Wang, J.; Di, D.; Chen, G.; Song, H. Binary Neural Network for Multispectral Image Classification. IEEE Geosci. Remote. Sens. Lett. 2022, 19, 1–5.

- Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 580–587.

- Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, ICCV 2015, Santiago, Chile, 7–13 December 2015; IEEE Computer Society: Washington, DC, USA, 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

- Lin, T.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 936–944.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016–14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9905, pp. 21–37.

- Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 2999–3007.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 770–778.

- Wang, Z.; Lu, J.; Wu, Z.; Zhou, J. Learning efficient binarized object detectors with information compression. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3082–3095.

- Zheng, W.; Tang, W.; Jiang, L.; Fu, C. SE-SSD: Self-Ensembling Single-Stage Object Detector From Point Cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 14494–14503.

| Year | Method | Main Idea | Authors |
|---|---|---|---|
| 2016 | BNN | It binarizes both the weights and the activations to +1 or −1. | M. Courbariaux et al. |
| 2016 | XNOR-Net | Building on binary weights and activations, it introduces a scaling factor α to reduce the quantization error. | M. Rastegari et al. |
| 2018 | Bi-Real Net | It uses a custom ApproxSign function in place of the sign function when computing gradients in backpropagation. | Z. C. Liu et al. |
| 2020 | IR-Net | It retains the information in the network during both forward and backward propagation. | H. T. Qin et al. |
| 2020 | ReActNet | It generalizes the sign and PReLU functions with learnable coefficients to reduce the distribution error. | Z. C. Liu et al. |
| 2020 | BiDet | It applies the information bottleneck principle to eliminate redundant information and thereby reduce false positives. | Z. W. Wang et al. |
| 2021 | BTM | It proposes a binary training mechanism based on feature distribution and a multi-stage knowledge distillation strategy. | X. R. Jiang et al. |
| 2022 | GroupNet | It divides the network into several groups and proposes binary parallel convolution, which embeds multi-scale information into the BNN. | B. H. Zhuang et al. |
| 2022 | CABNN | It introduces RPReLU with learnable coefficients that self-adjusts the activation distribution for feature screening. | W. P. Jing et al. |
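The BNN, XNOR-Net, and Bi-Real Net rows describe operations that most later methods reuse. The NumPy sketch below shows sign binarization, XNOR-Net's per-output-channel scaling factor α = mean(|W|), and two surrogate gradients for the non-differentiable sign function: the clipped straight-through estimator used with BNN and the derivative of Bi-Real Net's ApproxSign. The function names are hypothetical, and mapping zero to +1 is a convention chosen here. `approximation_error` only illustrates the deviation between full-precision and binarized weights; it is not the dynamic scaling factor of IR-DC Net, whose definition is not given in this text.

```python
import numpy as np


def sign_binarize(x: np.ndarray) -> np.ndarray:
    """BNN-style binarization to +1/-1 (zero mapped to +1: a convention choice)."""
    return np.where(x >= 0, 1.0, -1.0)


def xnor_binarize_weights(w: np.ndarray):
    """XNOR-Net approximation W ~ alpha * sign(W).

    w has shape (out_channels, in_channels, kh, kw); one alpha per output
    channel, equal to the mean absolute weight of that channel.
    """
    alpha = np.abs(w).reshape(w.shape[0], -1).mean(axis=1)
    return alpha, sign_binarize(w)


def approximation_error(w: np.ndarray, alpha: np.ndarray, b: np.ndarray) -> float:
    """Squared L2 deviation between full-precision weights and alpha * B."""
    recon = alpha.reshape(-1, *([1] * (w.ndim - 1))) * b  # broadcast alpha per channel
    return float(np.sum((w - recon) ** 2))


def ste_grad(x: np.ndarray, upstream: np.ndarray) -> np.ndarray:
    """Clipped straight-through estimator: pass gradients only where |x| <= 1."""
    return upstream * (np.abs(x) <= 1)


def approx_sign_grad(x: np.ndarray, upstream: np.ndarray) -> np.ndarray:
    """Derivative of Bi-Real Net's ApproxSign: 2 + 2x on [-1, 0), 2 - 2x on [0, 1), else 0."""
    g = np.where((x >= -1) & (x < 0), 2 + 2 * x,
                 np.where((x >= 0) & (x < 1), 2 - 2 * x, 0.0))
    return upstream * g


if __name__ == "__main__":
    w = np.array([[[[0.5, -1.5]]], [[[2.0, -2.0]]]])  # shape (2, 1, 1, 2)
    alpha, b = xnor_binarize_weights(w)
    print(alpha)                             # [1. 2.]
    print(approximation_error(w, alpha, b))  # 0.5
```

For a fixed sign pattern B = sign(W), minimizing ‖W − αB‖² over α gives α = Σ|W| / n, i.e. the mean absolute weight, which is the reason XNOR-Net uses this value.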

| Year | Method | Main Idea | Authors |
|---|---|---|---|
| 2014 | R-CNN | It applies CNNs to bottom-up region proposals to localize and segment objects. | R. Girshick et al. |
| 2015 | Fast R-CNN | It proposes an RoI pooling layer that extracts fixed-size features, so the detector and the bounding-box regressor can be trained simultaneously. | R. Girshick |
| 2015 | Faster R-CNN | It replaces Selective Search with a Region Proposal Network and is the first near real-time, end-to-end deep learning detector. | S. H. Ren et al. |
| 2016 | YOLO | It applies a single neural network to the whole image and casts object detection as a regression problem. It is the first single-stage detector. | J. Redmon et al. |
| 2016 | SSD | It introduces multi-reference and multi-resolution detection to improve accuracy, including for small objects. | W. Liu et al. |
| 2017 | FPN | It develops a top-down architecture with lateral connections to address difficult object localization problems. | T.-Y. Lin et al. |
| 2017 | RetinaNet | It addresses the imbalance between foreground and background classes by reshaping the standard cross-entropy loss into the focal loss. | T.-Y. Lin et al. |

| Dataset | Classes | Training Set | Validation Set | Evaluation Standard |
|---|---|---|---|---|
| PASCAL VOC | 20 | 16k | 5k | mAP |
| COCO | 80 | 83k | 40k | mAP@0.5 |
| KITTI | 3 | 3.7k | 3.8k | mAP |
| VisDrone | 4 | 16k | 9k | mAP |
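All four datasets are evaluated with mAP, the mean over classes of the per-class average precision (AP). As a reminder of what that number aggregates, here is a minimal sketch of per-class AP with all-point interpolation, the VOC2010-and-later style. This is an illustration, not the evaluation code used in the paper: PASCAL VOC2007 used 11-point interpolation and the COCO toolkit uses 101 recall points, and which variant each result here uses is not stated in this text. Detections are assumed to be already matched to ground truth at some IoU threshold; the function names are hypothetical.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_tp:  1 if the detection matched a previously unmatched ground-truth box, else 0.
    num_gt: number of ground-truth boxes of this class.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]   # rank detections by confidence
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, np.finfo(float).eps)
    # Pad so the curve starts at recall 0 and ends at recall 1.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # Interpolation: make precision non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(per_class_ap) -> float:
    """mAP: unweighted mean of per-class AP values."""
    return float(np.mean(per_class_ap))


if __name__ == "__main__":
    ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
    print(round(ap, 4))  # 0.8333
```

In the example, recall reaches 0.5 at precision 1 and then 1.0 at precision 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.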

| Methods | Type | Car Easy (IoU = 0.5) | Car Moderate (IoU = 0.5) | Car Hard (IoU = 0.5) | Car Easy (IoU = 0.7) | Car Moderate (IoU = 0.7) | Car Hard (IoU = 0.7) |
|---|---|---|---|---|---|---|---|
| SE-SSD | FP | 96.69 | 95.6 | 90.53 | 96.65 | 93.27 | 88.14 |
| SE-SSD* | 1/1 | 56.69 | 46.87 | 44.68 | 53.07 | 43.43 | 41.34 |
| BNN | 1/1 | 63.3 | 50.73 | 32.23 | 58.27 | 43.24 | 27.67 |
| XNOR-Net | 1/1 | 65.01 | 51.26 | 35.73 | 59.07 | 47.06 | 32.61 |
| BiDet | 1/1 | 64.1 | 56.36 | 40.56 | 59.76 | 44.06 | 29.07 |
| AutoBiDet | 1/1 | 65.57 | 57.88 | 41.16 | 61.38 | 46.63 | 32.2 |
| IR-DC | 1/1 | 69.8 | 59.09 | 49.45 | 61.66 | 48.63 | 41.69 |
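The KITTI results are reported at fixed IoU thresholds: a detection counts as a true positive only if its overlap with a ground-truth box reaches the threshold, so the stricter IoU = 0.7 columns are generally lower than the IoU = 0.5 ones. The sketch below assumes axis-aligned 2D boxes given as (x1, y1, x2, y2); KITTI's official evaluation also has bird's-eye-view and 3D variants that need a different overlap computation, and which one these tables use is not stated in this text. The function names are hypothetical.

```python
from typing import Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Sequence[float], gt: Sequence[float], iou_threshold: float) -> bool:
    """A prediction matches a ground-truth box if their IoU reaches the threshold."""
    return box_iou(pred, gt) >= iou_threshold


if __name__ == "__main__":
    pred, gt = (0, 0, 10, 10), (0, 0, 10, 5)
    print(box_iou(pred, gt))                # 0.5
    print(is_true_positive(pred, gt, 0.5))  # True
    print(is_true_positive(pred, gt, 0.7))  # False
```

The same prediction is a hit under the IoU = 0.5 protocol and a miss under IoU = 0.7, which is the mechanism behind the gap between the two groups of Car columns.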

| Methods | Type | Cyclist Easy (IoU = 0.5) | Cyclist Moderate (IoU = 0.5) | Cyclist Hard (IoU = 0.5) |
|---|---|---|---|---|
| SE-SSD | FP | 88.99 | 78.71 | 72.03 |
| SE-SSD* | 1/1 | 61.42 | 45.41 | 38.26 |
| BNN | 1/1 | 49.98 | 28.46 | 22.41 |
| XNOR-Net | 1/1 | 59.09 | 35.22 | 26.54 |
| BiDet | 1/1 | 52.47 | 42.86 | 34.97 |
| AutoBiDet | 1/1 | 62.44 | 47.15 | 40.87 |
| IR-DC | 1/1 | 61.53 | 48.4 | 39.43 |

| Methods | Type | Pedestrian Easy (IoU = 0.3) | Pedestrian Moderate (IoU = 0.3) | Pedestrian Hard (IoU = 0.3) |
|---|---|---|---|---|
| SE-SSD | FP | 72.33 | 60.51 | 56.28 |
| SE-SSD* | 1/1 | 46.69 | 35.87 | 31.68 |
| BNN | 1/1 | 34.35 | 23.92 | 20.43 |
| XNOR-Net | 1/1 | 46.38 | 30.39 | 26.17 |
| BiDet | 1/1 | 41.38 | 31.97 | 30.15 |
| AutoBiDet | 1/1 | 49.55 | 33.5 | 27.12 |
| IR-DC | 1/1 | 51.58 | 35.79 | 31.14 |

| IR | KL-D | DSF | Car | |||||

|---|---|---|---|---|---|---|---|---|

| IoU = 0.5 | IoU = 0.7 | |||||||

| Easy | Moderate | Hard | Easy | Moderate | Hard | |||

| ✓ | 53.17 | 35.81 | 33.01 | 35.8 | 24.05 | 19.97 | ||

| ✓ | 53.19 | 33.49 | 30.73 | 34.82 | 25.31 | 18.32 | ||

| ✓ | 53.22 | 41.38 | 33.65 | 36.14 | 25.75 | 19.73 | ||

| ✓ | ✓ | 63.2 | 53.7 | 40.68 | 45.77 | 33.29 | 27.7 | |

| ✓ | ✓ | 63.07 | 54.15 | 41.17 | 45.15 | 35.57 | 28.59 | |

| ✓ | ✓ | 60.13 | 51.15 | 43.01 | 47.5 | 36.64 | 31.81 | |

| ✓ | ✓ | ✓ | 69.8 | 59.09 | 49.45 | 61.66 | 48.63 | 41.69 |

| Backbone | Method | Bit-Width | Params | mAP |
|---|---|---|---|---|
| VGG16 | BNN | 1/1 | 22.06 | 42 |
| | XNOR-Net | 1/1 | 22.16 | 50.2 |
| | BiDet | 1/1 | 22.06 | 52.4 |
| | AutoBiDet | 1/1 | 22.06 | 53.5 |
| | IR-DC | 1/1 | 22.16 | 55.3 |
| | MobileNet | 32/32 | 100.28 | 72.4 |
| ResNet20 | BNN | 1/1 | 2.38 | 35.6 |
| | XNOR-Net | 1/1 | 2.48 | 48.4 |
| | BiDet | 1/1 | 2.38 | 50 |
| | AutoBiDet | 1/1 | 2.38 | 50.7 |
| | IR-DC | 1/1 | 2.48 | 51.2 |
| | IR-DC | 4/1 | 2.68 | 58.1 |

| Backbone | Method | AP (S) | AP (M) | AP (L) | AP (IoU = 0.3) | AP (IoU = 0.5) |
|---|---|---|---|---|---|---|
| VGG16 | BNN | 2.4 | 10 | 9.9 | 28.1 | 15.9 |
| | XNOR-Net | 2.6 | 8.3 | 13.3 | 33.4 | 19.5 |
| | BiDet | 5.1 | 14.3 | 20.5 | 46.1 | 28.3 |
| | AutoBiDet | 5.6 | 16.1 | 21.9 | 48.4 | 30.3 |
| | IR-DC | 6.2 | 17.6 | 22 | 49.7 | 32 |
| ResNet20 | BNN | 2 | 8.5 | 9.3 | 26 | 14.3 |
| | XNOR-Net | 2.7 | 11.8 | 15.9 | 34.4 | 21.6 |
| | BiDet | 4.9 | 16.7 | 25.4 | 47.6 | 31 |
| | AutoBiDet | 5 | 17.2 | 25.9 | 48.4 | 31.5 |
| | IR-DC | 5.3 | 17.3 | 25.1 | 49.9 | 32 |

| Method | Pedestrian | Bicycle | Car | Van | Tricycle | Bus | Motor | mAP |
|---|---|---|---|---|---|---|---|---|
| BNN | 12.08 | 9.84 | 48.08 | 34.65 | 15.47 | 42.95 | 13.1 | 20.05 |
| XNOR-Net | 13.7 | 8.66 | 51.71 | 34.75 | 13.11 | 44.94 | 15.98 | 20.25 |
| BiDet | 15.46 | 10.02 | 52.33 | 34.63 | 14.83 | 50.78 | 20.69 | 22.05 |
| AutoBiDet | 18.17 | 14.73 | 57.38 | 34.81 | 18.64 | 50.22 | 19.67 | 26.23 |
| IR-DC | 19.18 | 14.29 | 59.19 | 34.37 | 15.62 | 52.29 | 20.03 | 26.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, G.; Fei, H.; Hong, J.; Luo, Y.; Long, J. An Information-Reserved and Deviation-Controllable Binary Neural Network for Object Detection. Mathematics 2023, 11, 62. https://doi.org/10.3390/math11010062
