1. Introduction

In recent years, remote sensing (RS) technology, which plays an important role in the satellite and unmanned surveillance aircraft industry, is developing rapidly. Under this trend, RS images have shown explosive growth [

1,

2], which bring challenges to multiple tasks, such as large-scale RS image recognition, detection, classification, and retrieval. Among these, remote sensing cross-modal text-image retrieval (RSCTIR) [

3,

4,

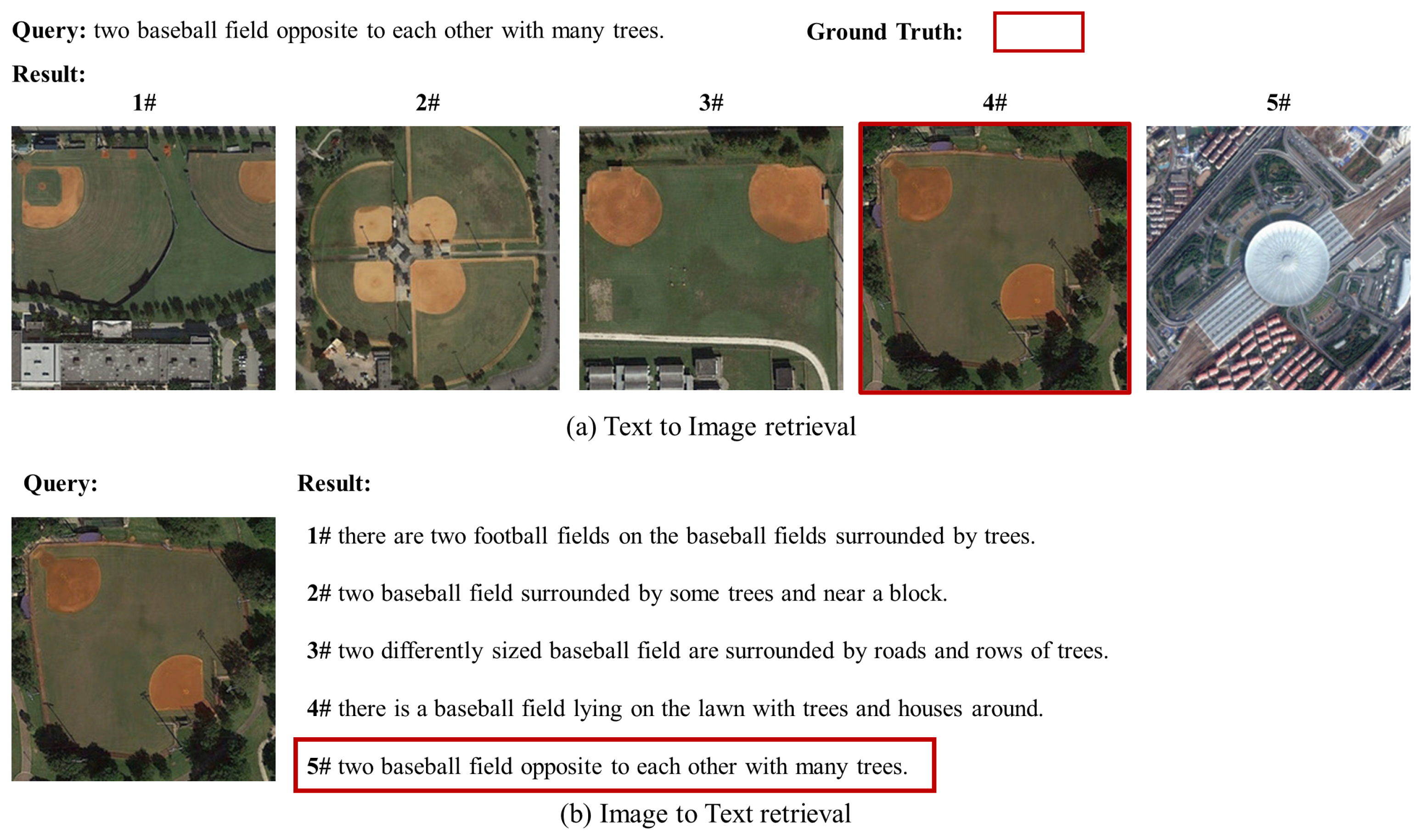

5,

6,

7] aims to find the same or similar images in a large-scale RS image dataset according to the given natural language descriptions, and vice versa, as

Figure 1. RSCTIR enables ordinary users, not limited to professionals or researchers, to achieve retrieval tasks only by natural language or visual input. It presents a better application value of human–computer interaction and information filtering, which leads to a wide range of application prospects in military intelligence generation, natural disaster monitoring, agricultural production, search and rescue activities, urban planning, and other scenarios [

8,

9].

The RSCTIR task, belonging to multi-modal machine learning, is becoming an emerging research area at the intersection of natural language processing (NLP) and computer vision (CV), which enables computers to understand image-text pairs in semantic terms [

10]. In the RSCTIR task, there is often a huge semantic gap in feature expression between the query and target data modality. Therefore, major research focuses on establishing connections between samples of different modalities which have the same semantics. Specifically, current RSCTIR methods are mainly divided into image caption-based methods and vision-language-embedding-based methods. The former is mainly to semantically represent each RS image to generate keyword tags that can describe the image, thus transforming RSCTIR into keyword-based text retrieval. The core of such methods is to use generative models to improve the performance of image caption tasks. For example, to make the generated tags interpretable, Bao et al. [

7] designed an interpretable word-sentence framework, decomposing the task into two subtasks: word classification and ranking. In order to enable tag generators to have a more comprehensive semantic understanding of complex RS images, Devlin et al. [

11] proposed a recurrent attention and semantic gating framework to generate better context features. Different from image caption-based methods, the vision-language-embedding-based methods utilize a trained image and text encoder to map the same or similar semantics sample into feature vectors with closer distances. The main point of these works is how to choose the best loss optimization strategy to minimize the distance between similar images and texts [

12]. Lv et al. [

13] solves the heterogeneous gap problem through knowledge distillation. On the basis of knowledge distillation, Yuan et al. [

14] expects the model to be as lightweight as possible to achieve fast retrieval. Yuan et al. [

5] proposed an asymmetric multi-modal feature matching network (AMFMN) and contributed a fine-grained RS image-text dataset to this task. Due to the well-studied vision-language representing the model on pre-training tasks, the embedding-based methods have become the preferred retrieval model in recent years.

We thus focus on the embedding based methods, which mainly generate visual and text features from two uni-modal encoders, respectively, and optimize the encoders by minimizing vector distances with similar semantics in a high-dimensional space. In view of the high intra-class similarity and large inter-class differences of RS images, the current RSCTIR framework is mainly based on convolutional encoders to extract the global features of images [

15,

16] and object-detector-based encoders for the local information [

17]. Finally, the two uni-modal features will be compared for loss optimization. In other words, the core of the whole framework is to build a language or visual uni-modal encoder with better feature expression ability. Although these methods have achieved good retrieval performance, they do not integrate the features of text and image in the training process by vision-language fusion. Secondly, most of them use pre-trained object detectors for image local feature extraction. Although it can effectively improve the ability of the model to express the detailed features of RS images, the training processes for the object detectors and the cross-modal retrieval model are fragmented.

Inspired by the current mainstream vision-language pre-trained model design, we believe that an end-to-end training framework will eliminate the training gap brought by the pre-trained object detectors, and improve the effect of the model by adapting more training data. At the same time, under the whole framework, a vision-language fusion model is required for image-text semantic alignment to optimize the feature expression capabilities of uni-modal and multi-modal encoders simultaneously. By solving the above problem, the end-to-end framework based on vision-language-fusion will ultimately bring better results for RSCTIR tasks.

Driven by the above motivation, we introduced a multi-modal encoder to the current most popular uni-modal encoder architecture, which is used to learn the semantic association between two modalities. Meanwhile, we discard the pre-trained object detector, extract the local features of the image by introducing a vision transformer model, and use multi-modal fusion and text erasure strategies to enhance feature interaction between different modalities, so that the model can have the ability to represent local features based on main objects. This end-to-end multi-modal framework demonstrates excellent training convenience and shows more attractive performance. Compared with the most popular method, our experiments finally achieved about a two point improvement measured by the mR criterion, which represents the average of all calculated recalls on the RSICD and RSITMD dataset. Furthermore, the trained multi-modal encoder can further improve the top-one and top-five ranking performances after retrieval processing.

Our main contributions are as follows:

We design a framework with two uni-modal encoders and a multi-modal encoder for RSCTIR named EnVLF. In this framework, the uni-modal encoders are used to extract visual and text features, respectively, and the multi-modal encoder is used for modality fusion. A multi-task optimization in the training process is used to improve the feature representation for each encoder.

Inside the vision encoder, a shallow vision transformer model is chosen to extract the local features of images instead of a pre-trained object detector, which transforms the pipe-lined ”object detection + retrieval” process into an end-to-end training process. The gap between the object detector and retrieval model training process is bridged by this end-to-end framework.

In the inference process, the multi-modal encoder, which learns the fusion features of image-text pairs, is used in the post-processing for reranking. It can improve the top-one and top-five ranking performances based on the results on the retrieval task.

Our method is proved to be effective. The results on two commonly used RS text-image datasets can compare with state-of-the-art (SOTA) methods. Subsequently, we first introduce related works of cross-modal retrieval and vision-language pre-training in

Section 2. Then, we introduce our proposed EnVLF method and each submodule in detail in

Section 3.

Section 4 presents the extensive experiments we conduct to verify the effectiveness of EnVLF. Finally, our work is concluded.

3. Methods

In this section, we first outline the overall architecture of our model and the design purpose of each encoder in

Section 3.1. Then,

Section 3.2 introduces the optimization objective in detail. Finally, we describe the training process for the entire architecture in

Section 3.3.

3.1. Model Architecture

Most traditional RSCTIR tasks are mainly to compute the vector similarity between the features of the input query (RS image or text caption) and target features stored in the database. A typical case is GaLR [

4], which has been reported to achieve SOTA performance. The core of GaLR is a well-designed vision and language encoder. Specifically, the image

I and text

T are encoded by vision encoder

and language encoder

separately as two independent vectors which are mapped into the same space. Moreover, for the visual module, GaLR specifically introduces an object detection branch followed by a graph convolution network (GCN) based on the global encoder, which may cause laborious model assembly. In the training optimization procedure, triplet loss is used for optimization.

Through the detailed exploration of the above architecture, we found that most traditional RSCTIR methods mainly focus on improving the performance of uni-modal features extraction. A multi-modal encoder which fuses vision-language features by completing multitask training optimization objectives can further improve the performance of the entire retrieval task. Illustrated in

Figure 2, we propose our EnVLF model, which introduces a multi-modal encoder based on the traditional RSCTIR framework for cross-modal semantic feature learning. It is worth noting that we do not use the object detection branch, thus, the whole training process is end-to-end. We also optimized the training process to enhance the feature representation performance of uni-modal and multi-modal encoders by simultaneously optimizing three kinds of losses: image-text-triplet (ITT), masked-language-model (MLM) and image-text-match (ITM). This process can be represented as follows:

,

, and

denote the local visual features, fused visual features, and text features extracted by uni-modal encoders

and

separately. Cross-modal similarity

and pair-wised image-text similarity

are calculated by multi-modal encoder

.

Next, we will describe our framework with regard to three aspects: vision encoder, language encoder and multi-modal encoder.

3.1.1. Vision Encoder

It has been found in the literature [

5,

7,

32] that in vision-language pre-training tasks, the information carried by the vision side is much more than the language side, so a more complex vision encoder is needed to express comprehensive features. Inspired by this, we believe that a vision encoder can be divided into a global feature extraction module

, an object-based local feature extraction module

, and a vision fusion module

, and in this way, the vision encoder can fully extract the multi-dimensional features of the image. This can be formalized as follows:

where

and

are global and local features of the same RS image presented by

and

separately. The

is the features fused by

.

CNN-based methods directly map the global features of an image into the high-dimensional space, which have shown advantages in image embedding tasks. In more detail, referring to the method of GaLR, we use ResNet-18 and a multi-level visual self-attention (MVSA) module as the global features encoder for RS images.

An object-detection-based model pre-trained on other RS-related datasets can identify the main objects in RS images, such as airplanes, cars, buildings, etc. Capturing local features of RS images by characterizing the relationships between these main objects can improve the RSCTIR performance [

4]. However, training an effective object detector relies on well annotated RS object detection datasets, such as DOTA [

33], NWPU-RESISC45 [

34], and UCAS-AOD [

35]. Meanwhile, it is worth noting that the difference of data annotation forms between object detection and cross-modal retrieval tasks brings a data gap which results in a pipeline mode of the training process. For this imperfection, we adopt

, a transformer-based vision model [

36], and exploit its image patch-based feature representing characteristics to replace the pre-trained object detector. By optimizing with MLM and ITC loss in training, the visual features from

and masked text features from

are aligned with the key semantics, which means

has the same ability as a pre-trained object detector for image local feature extraction.

The vision transformer module

is the key for the local feature encoder, which consists of stacked blocks that include a multi-head self-attention (MSA) layer and a multi-layer perceptron (MLP) layer.

The input image is slid into a flattened two-dimensional patch of size , where is the patch size and . N is the number of blocks that affect the length of the input sequence. Followed by the linear projection and position embedding , v is embedded into .

The MIDF module proposed by GaLR is used to achieve the dynamic fusion of global and local features in vision encoder . After fusing the local and global information, the comprehensive features of RS images are represented by the , which are subsequently used to interact and fuse with the text features generated by the language encoder .

3.1.2. Language Encoder

As analyzed in

Section 3.1.1, the information contained in language is less than that in vision, so a lightweight language encoder that can represent text features is needed. To align with sequenced vision features generated by

, the same language encoder as BERT [

11], where a [CLS] token is appended to the beginning of the text input to summarize the sentence, is the best choice. Specifically, aiming to simplify the computational complexity, we initialize the first six layers of the BERT-based model

as the language encoder

. The language encoder transforms the input text

T into a sequence of embeddings

, which is fed to the multi-modal encoder for cross modal representation learning and language-vision fusion.

In order to use the semantic correlation relationship between modalities to improve the extraction of features for each modal encoder, we perform a targeted masked strategy on the target category information contained in RS images as well as the traditional random mask processing in the language mask module. The masked text is passed through the language encoder to generate the feature . The local feature generated by vision encoder and the masked text feature are fused in the multi-modal encoder. By optimizing with the MLM loss in training, the in the language encoder and the in the vision local encoder can reach a better performance in specific target recognition.

3.1.3. Multi-Modal Encoder

Considering that the semantic features of image

I and text

T extracted by the uni-modal encoder with vector distance constraint cannot be sufficiently aligned in traditional methods. Besides the cross-modal similarity module, a visual-language fusion module is introduced in the multi-modal encoder, which is initialized with the last six layers of the BERT-based model and uses an additional cross-attention layer to model the visual-language interaction. The multi-modal encoder can be formalized as

where the

calculates the similarity between image features

and text features

by using cosine distance and optimizes the parameters in uni-modal encoders by using ITT loss. The

uses MLM loss to optimize the expression of visual local feature encoder

and text feature encoder

by fusing the features

and

. Furthermore,

also utilizes ITM loss to simultaneously optimize the feature representation ability of the uni-modal encoders and multi-modal encoder.

Training with vision-language fusion can improve the performance of each uni-modal encoder and ultimately affects the RSCTIR results. Since the vision-language fusion model requires image-text pairs as input in the inference process, it cannot be used for a large-scale data recall process in RSCTIR. Considering that the trained has a better fine-grained discrimination ability for image-text pairs, so we further use it to rerank the results to improve the top-one and top-five retrieval performances.

3.2. Multitask Optimization

During the training process, the end-to-end RSCTIR framework should improve the performance of each encoder by optimizing multiple targeted tasks. Thus we designed three training objectives for the framework: ITT on the uni-modal encoders with cosine similarity, MLM loss for capturing main objects based features, and ITM on language-vision fusion for image-text pairwise similarity learning.

3.2.1. Image-Text-Triplet Loss for Uni-Modal Encoder

Triplet loss is a loss commonly used in the field of image-text alignment. It calculates the loss function value by comparing the distance between three samples (anchor, positive, and negative). The main idea is to learn a feature representation space, so that anchor samples of the same category are closer to positive samples in this space, and anchor samples of different categories are farther away from negative samples. This can be formalized as follows:

where

represents the minimum margin designed to widen the gap between anchor and positive/negative sample pairs,

.

is a paired image-text sample.

is the text that is not paired with the image

I, and

is the RS image not paired with the text

T.

The advantage of ITT loss lies in the distinction of details, that is, when the two inputs are similar, triplet loss can better model the details. Therefore, we choose the triplet loss in our RSCTIR task to constrain feature representation for uni-modal encoders. The strategy for choosing positive and negative examples is: given the N image-text pairs in one batch are positive samples, and the other unpaired image-text pairs are negative samples. The purpose of this strategy is to make the uni-modal encoder learn a complex representation of both inter-class and intra-class differences.

3.2.2. Image-Text-Match Loss for Pairwise Similarity

In image-text matching, the model predicts whether a pair of input image and text is matched or not. Inspired by most VLP models treating image-text matching as a binary classification problem, we use this training target for the multi-modal encoder. Specifically, a special token, such as [CLS], is inserted at the beginning of the input sentence, which tries to learn a cross-modal representation. Different from the multi-modal encoder of VLP for binary classification, we add a fully connected layer to calculate a two-class probability

for each image-text pair which can be used as a reranking model in the inference procedure. ITM loss can be formalized as follows:

3.2.3. Masked Language Model for Object-Based Image Representation

The masking language modeling (MLM) loss strategy uses images and contextual text to predict masked words, which was originally used in language training tasks, providing better feature expression for pre-trained models. In RSCTIR, it has also been proved to have the same importance as the ITM loss strategy. In our framework, in order to allow the vision encoder to express the region features without using the object detection module, we use MLM with the patch embedding ability of the vision transformer to express the features of the main target of RS images. At the same time, we optimize the masked strategy by using two methods: targeted masked strategy and random masked strategy. The targeted masked strategy aims to enhance the generalization ability of the model, and the targeted mask mainly focuses on important information in the retrieval process, such as the type and number of objects. The text is input into the encoder through the above two masking methods, and then the model is trained to reconstruct the original mark to achieve the ability to represent the information of the main targets. The optimization objective of MLM is minimizing a cross-entropy loss:

where the masked text is denoted by

, and the predicted probability of the model for a masked token is denoted by

.

3.3. Training Procedure of EnVLF

The training and inference procedures of our proposed EnVLF are summarized in Algorithm 1 and Algorithm 2, respectively.

| Algorithm 1 Training Procedure of the Proposed EnVLF |

Input: Image-Text pairs of RS dataset Through: Global visual feature Local visual feature Vision fusion feature Text feature Multi-modal fusion feature Repeat until convergence: - 1:

for each batch do Dual visual feature extraction - 2:

- 3:

- 4:

Text feature extraction - 5:

Multi-model extraction - 6:

Calculate the ITT loss - 7:

Calculate the ITM loss - 8:

Calculate the MLM loss - 9:

- 10:

Update , , , , by - 11:

end for - 12:

return

|

| Algorithm 2 Inference Procedure of the Proposed EnVLF |

Input: Image-Text pairs of RS dataset Through: EnVLF model Initialize the similarity matrix S Vision-Language Fusion module rerank Calculate image-text similarity matrix: - 1:

for each batch do Visual and text feature extraction - 2:

- 3:

- 4:

Append to S - 5:

end for - 6:

return S Multi-model module top-10 rerank: (optional) - 7:

- 8:

return

|

In the training process, we mainly optimize the image encoder , the text encoder , and the multi-modal encoder . First, we input the RS image-text pair batch for training, and then pass it through the uni-model image encoder and text encoder . contains dual modules and to extract global features and local features , respectively, and finally visual features are formed through the vision fusion module . The whole process uses three loss strategies to guide the training process, and the loss is used to optimize the generation of and . and are jointly optimized by text encoder and module in multi-modal encoder , and finally after multiple epoch iterative training, the optimal EnVLF model is constructed.

For the inference process, the RS image-text test pair batch is required, which is sent to the trained EnVLF model to obtain the uni-model image and text feature , . Afterwards, the cosine distance is used to calculate the similarity between query and targets, and all test datasets are traversed to generate a large matrix S, thereby completing the final recall task. At the same time, in order to utilize the pairwise similarity calculated by multi-modal encoder, we choose to use it to complete the reranking of top-10 results.

4. Experiments

4.1. Datasets and Evaluation Metrics

In our experiments, we validate our EnVLF on two datasets: RSICD [

9] and RSITMD [

5]. Each dataset is given in the form of a large number of image-text pairs, as shown in

Figure 3, where RSICD has 10,921 pairs, of which the image size is

, making it the most numerous retrieval dataset currently available. RSITMD has 4743 pairs with the image size of

. Compared with RSICD, RSITMD has a finer-grained text caption. In our experiments, we follow the data partitioning approach of Yuan et al. [

5] and use 80%, 10%, and 10% of the dataset as the training set, validation set, and test set, respectively. For the evaluation criteria, we use R@k and mR [

37] to evaluate the recall performance of EnVLF. R@k indicates the proportion of ground truth contained in the top k results recalled. Consistent with GaLR, we choose k to be 1, 5, or 10 to evaluate the results more fairly. mR represents the average value of each R@k, which can be used as the final evaluation criterion of the overall performance of the model.

4.2. Implementation Details

All our experiments are performed on a single Tesla V100 GPU. For images, we unified the size of all images to and sent them to the image encoder, and then applied data enhancement methods such as random rotation and random cropping. We directly chose ResNet-18 to apply to the global encoder. For the local encoder, we used a 6-layer standard VIT model, and the final generated image and text uni-model features were both 512. For the text model, we used the first six layers of BERT to build a text encoder, and the last six layers to build a multi-model encoder. The batch size we applied in the training procedure was 64 and adjusted to 128 during validation. The learning rate was initialized to 2 × 10, and decayed by a factor of 0.7 every 15 epochs of validation following the iterative process. We trained the entire model for 30 epochs and optimized the model with the Adam optimizer. The rest of the parameters except the learning rate were kept as default values and were not adjusted during the entire procedure.

4.3. Comparisons with the SOTA Methods

We compare the excellent work based on RS cross-modal retrieval task on RSICD and RSITMD datasets: including VSE++ [

23], SCAN [

12], CAMP [

38], MTFN [

39], AMFMN [

5], LW-MCR [

14], and GaLR [

4].

VSE++ is one of the pioneers of using uni-modal encoders to extract image and text features for cross-modal retrieval. A triplet loss is used to optimize the training objective.

SCAN enhances VSE++ by using an object detector to extract local features. The object detector has been proved to achieve excellent performance in local feature extraction.

CAMP propose a message passing mechanism, which adaptively controls the information flow for message passing across modalities. The triplet loss and the BCE loss method are used as control groups.

MTFN designs a multi-modal fusion network based on the idea of rank decomposition to improve the retrieval performance by a reranking process.

AMFMN utilizes an asymmetric approach based on triplet loss that uses visual features to guide text presentation.

LW-MCR takes advantage of methods such as knowledge distillation and contrast learning for lightweight retrieval models.

GaLR optimizes the representation matrix and the adjacency matrix of local features by using GCN. The quantitative analysis on multiple RS text-image datasets demonstrates the effectiveness of the proposed method for RS retrieval.

For the methods above, we use the results reported in the literature [

14]. Following the same strategy for our experiments as these works, we provide the performance of our final model for comparison.

EnVLF: We use the whole EnVLF framework in training progress, and use the uni-modal encoder for RS cross-modal retrieval.

Table 1 shows that the performance of the EnVLF is particularly impressive on both RSICD and RSITMD datasets, and we can obtain conclusions as follows:

The performance of EnVLF on the RSICD dataset, which is a large RS image-to-text dataset, strongly demonstrates the effectiveness of our proposed method. For the EnVLF model, the mR score is even 1.86 points higher than the most outstanding models, reaching 20.82. Whether it is text-to-image retrieval or image-to-text retrieval, EnVLF model shows the best performance compared with the previous SOTA method on R@1, R@5, and R@10 results.

RSITMD has more fine-grained representation in text than RSICD, which makes the retrieval task even more challenging. However, EnVLF still performs well on the RSITMD dataset. The mR of EnVLF finally reached 33.50, which is 2.09 points ahead of GaLR. In more detail, the most difficult R@1 results for text-to-image and image-to-text retrieval of our EnVLF are slightly lower than SOTA. This may be due to the difference between the training set and the test set. Perhaps the semantic distribution of the test set is a little simpler than that of the training set. While compared with AMFMN, our model is more complex, leading to poor results, which can be a future research point. However, it is worth noting that the R@5 and R@10 results are well ahead of the SOTA.

We further compare the qualitative results of our EnVLF with GaLR in

Figure 4. For text-to-image retrieval, we chose an example of the playground. The results show that EnVLF hit the ground truth of the image at the top-one position, and in the top-five results, most of the results centered on the playground. While for GaLR, the hit is not only realized in the top-three position, but most of the results are centered around the baseball field, which indicates that EnVLF can learn more matching, semantically similar features through our cross-modal design. The image-to-text retrieval results are shown below. We can find that EnVLF can hit more ground truth texts in the top-five results, and all of them rank within the top two, while GaLR only hit one low-ranked ground truth text.

We then explore the reasons why EnVLF exhibits more attractive performance compared with GaLR, which we believe can be attributed to the following factors: Firstly, EnVLF adds an

local vision encoder on the basis of the global vision encoder. However, GaLR contains a global encoder and an object detector whose effect has been proved to be closely related to the number of objects contained in the image [

4]. When there are fewer objects, the detector will show weaker performance. Secondly, the language encoder of EnVLF also adopts the transformer model and carefully designs a targeted masked strategy, thus improving the expression of the main features, which are not considered in GaLR. Finally, GaLR only uses the triplet loss to optimize the vision and language encoder, while EnVLF introduces a multi-modal encoder which can better perform semantic alignment across modalities and uses three kinds of losses during the optimization.

4.4. Reranking Process Based on Multi-Modal Encoder

In

, the cross-modal similarity calculation module

can be used to efficiently find the top-N similar results for large-scale data retrieval. Furthermore, in order to take full advantage of the vision-language fusion module

that learns pairwise image-text feature representations, we train it to be used as a reranking process. In our experiment, the

model only uses each uni-modal encoder for feature extracting, and

in multi-modal encoder for feature similarity calculating. However, the results show that the recall rate of the model on the more difficult top-one and top-five is always lower, so based on the results of

, we use the

model to calculate the pairwise similarity between the query and top-ten recalled candidates. The results in

Table 2 shows the reranking process can further improve the mR criterion by about 0.1 points. During our experiment, we found that the performance of the reranking process is not stable, but a slightly higher result can be achieved on most of the top-one and top-five results, especially in some difficult cases, such as

Figure 5; although

recalls similar candidates,

can still rerank the ground truth to top-one, and can even find more top-five correct retrieval results, which shows the potential of the reranking process. Thus, we believe that with more careful design in the future, the reranking process can play a greater role.

4.5. Ablation Studies of Structures

We conducted ablation experiments on the RSITMD dataset for the cross-modal retrieval task to validate each module separately. Specifically, four control experiments were designed to explore the influence of every proposed module on retrieval performance, and we kept the language encoder the same in all experiments. The ablation experiment modules are as follows:

: Use the vision encoder only containing the global feature extractor for visual features and multi-modal encoder only containing for cosine similarity calculating.

: Use the full vision encoder containing the global feature extractor , local feature extractor and vision fusion module for visual features, and multi-modal encoder only containing for cosine similarity calculating.

: Use the full vision encoder and full multi-modal encoder containing and vision-language fusion module . We do not interfere in the selection of masks in this experiment, and a random masked strategy is used for text preprocessing.

: The overall model is the same as , but our proposed targeted masked strategy proposed is used for the text preprocessing to guide the text encoder to learn more targeted features that are conducive to the image-text retrieval process. At the same time, we do not remove the random masked strategy module.

In these models, the comparison between and shows the performance of the module without vision-language fusion. The results of compared with shows the influence of vision-language fusion module in multi-modal encoder . The control group (, ) verifies the superiority of the targeted masked strategy with vision transformer in local feature extraction.

Table 3 shows the results of the above three groups of experiments.

Compared with , the mR criterion of the model increased by 0.77 points after adding the vision transformer for local feature extraction without multi-modal fusion.

Subsequently, we show the results of . Through adding the vision-language fusion module to the multi-modal encoder , the mR criterion is further improved by 0.44 points compared with .

The results of control-experiment group (, ) show that the object-related information masked strategy can improve the representation for local features, especially for the top-10 recall results, through can reach 1.69 points of improvement. This experiment can also prove that the vision and language transformers trained by multi-modal fusion show a comparable ability with pre-trained object-detector-based methods.

5. Conclusions

In this paper, we proposed an end-to-end RS cross-modal retrieval framework named EnVLF, which consists of three modules: a vision encoder and a language encoder for uni-model feature extraction, and a multi-modal encoder for vision-language fusion. Specifically, for the vision encoder, we introduce a vision transformer module trained with a multi-modal encoder to achieve the ability of object detection, which transforms the pipelined training process into an end-to-end process. By optimizing multiple target tasks for training, EnVLF can obtain competitive retrieval performance and bridge the training gap between object detection and retrieval tasks. In addition, the trained multi-modal encoder can improve the top-one and top-five ranking performances after retrieval processing. The experiments and analysis on multiple RS text-image datasets demonstrate the effectiveness of our EnVLF method for RS retrieval.

Visual grounding and image captioning, as two other typical tasks of multi-modal machine learning, have potential benefits for text-to-image retrieval and image-to-text retrieval separately. Therefore, in future work, we will further improve by means of multi-level cross-modal feature learning and generative transformer modals, which are proved to be efficient in visual grounding and image captioning tasks [

40,

41]. Furthermore, obtaining accurate results from noisy data will also be part of our follow-up research to improve the practical value of RSCTIR [

42].