Abstract

Human decision-making processes are complex, which makes it challenging to mine human strategies from real games in social networks. To model human strategies in social dilemmas, we conducted a series of human subject experiments in which temporal two-player non-cooperative games among 1092 players were intensively investigated. Our goal is to model each individual’s move in the next round based on the information observed in the current round; the developed model is therefore a strategy model based on short-term memory. Because user strategies are diverse, we first cluster players’ behaviors so that players with similar strategies are grouped together for the subsequent modeling. Our observations show that, after behavior clustering, the performance of the tested binary strategy models improves substantially in the largest behavior groups. Our results also suggest that, in both the classical mode and the dissipative mode, the influence of individual accumulated payoffs on individual behavior is more significant than that of the gaming result of the last round. This result challenges a previous consensus that individual moves largely depend on the gaming result of the last round. Therefore, our model provides a novel perspective for understanding the evolution of human altruistic behavior.

MSC:

91A90

1. Introduction

Searching for the origin of human cooperation is a longstanding topic of interest in the social sciences [1,2,3,4,5]. People are presumptively selfish [6], but cooperation is ubiquitous in human society, even under extremely adverse conditions [5]. This counterintuitive behavior has been extensively studied under the framework of non-cooperative games, such as the prisoner’s dilemma game [7,8,9,10,11,12,13,14,15,16] and price war [17,18]. A variety of cooperation-promoting mechanisms have been investigated, such as reciprocity [19,20,21,22], punishment [23,24,25,26,27], reputation [7,28], trustworthiness [29], onymity [11], and psychological factors [30], all of which have been demonstrated to have significant impacts on the emergence of cooperation.

Apart from the aforementioned intrinsic mechanisms, social structure is found to be a major factor that promotes cooperation [19,31,32,33,34,35]. Over the years, the promotive effects of social structures have been studied both theoretically and empirically [10,13,19,35,36,37,38]. Note, however, that a recent human experimental work questioned the influence of social structures [39], triggering a heated debate. Although human interactions generally follow aggregated social connections, such connections are merely a necessary condition for interaction. Clearly, an individual does not normally interact with all their friends all the time but only occasionally with some of them. The implication of dynamical interaction patterns for human cooperation is sometimes dramatic. Recent investigations show that the dynamical update of social connections fundamentally promotes the level of cooperation [12,13,14], where social reconnection at a certain pace is allowed after a number of rounds of the underlying social games [36,40]. This behavior has been extensively investigated [12,13,14,36,40,41,42,43]. There are claims that the observation demonstrates the effect of reputation [41]. Briefly, individuals may connect with unfamiliar individuals after browsing their gaming records, while cutting existing connections with unsatisfactory partners. Some may break ties, instead of defecting, as a way to penalize defectors [12]. Interestingly, the effect of dynamic reconnection fades out when individuals choose a specific move to play with each partner [41]. These results naturally raise two interesting questions: What are the main factors that affect the level of human cooperation? Given these factors, can human gaming strategies be modeled? This paper addresses these two questions.

In real social networks, each interaction between individuals takes some time, and the total gaming time available to each individual is the same and limited. Researchers have been modeling human behaviors [30,44,45,46], but none of these models has considered this essential factor. Accordingly, individuals are required to reasonably arrange their time in interacting with different partners. For each individual, their partner in a game shares the same gaming time, and more time will be devoted to interacting with altruistic partners, while less or none will be devoted to defectors. The dynamic process defined in [12,13,14] can be regarded as an abnormal time assignment, where the total gaming time grows with the number of connections in the gaming network. Dynamic networks are considered a framework closer to real gaming scenarios than static networks, since they can represent the co-evolution of individuals’ moves and their social connections [13]. However, this framework ignores the fact that the total gaming time for each individual is limited and independent of their connectivity. In this paper, the total gaming time for each individual is assumed to be the same and limited. Each gaming partner is assigned a fraction of this time. In this scenario, social reconnection essentially becomes a redistribution of the total gaming time.

In order to earn higher payoffs in iterated two-player two-strategy (2 × 2) games, researchers have proposed numerous strategy models, for instance, the well-known tit-for-tat [4], Pavlovian strategies [47], and win-stay, lose-shift [48], among others. The first representative strategy model in structured populations was proposed by Nowak and May [31] in 1992. The model simulates a deterministic learning mechanism that replicates the behavior of the neighbor with the highest payoff from the previous round. When rational individuals play a two-player non-cooperative game such as the prisoner’s dilemma on a regular lattice, the strategy successfully promotes the emergence of cooperation. The second widely circulated strategy, proposed by Santos and Pacheco [35], simulates a learning mechanism with randomness. The mechanism randomly selects a reference neighbor and then decides whether to replicate its move based on its payoff in the previous round. If the selected neighbor’s payoff is higher than that of the decision maker, then the decision maker replicates its move with a probability proportional to the difference between the two payoffs. In scale-free networks, this strategy successfully explains why rational individuals cooperate extensively in a two-person non-cooperative game. The authors claim that the main reason for the high level of cooperation in this scenario is the degree heterogeneity of the networks, a conclusion that has not been confirmed in public goods games [49]. In an empirical experiment, Gracia-Lázaro et al. also questioned the universality of this conclusion [39]. In this paper, we break away from the framework of learning mechanism modeling and directly model human strategies from empirical data. This approach can directly identify the factors influencing human decision making and analyze the weight of each factor from the perspective of feature importance. We designed two modes of matches. The classical mode simulates the gaming scenario between individuals in a real collaboration network. The dissipative mode simulates a collaboration network with an elimination mechanism.

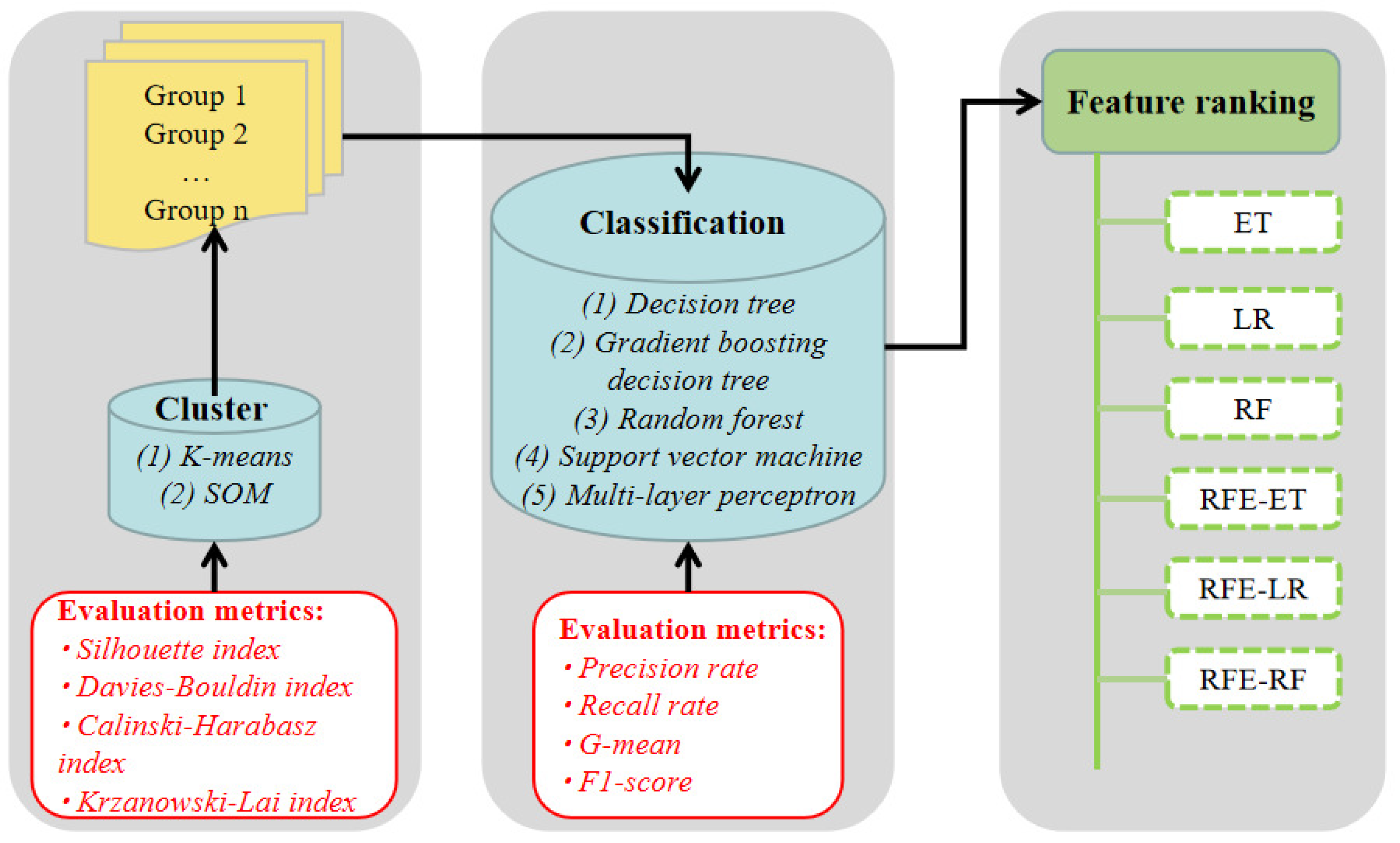

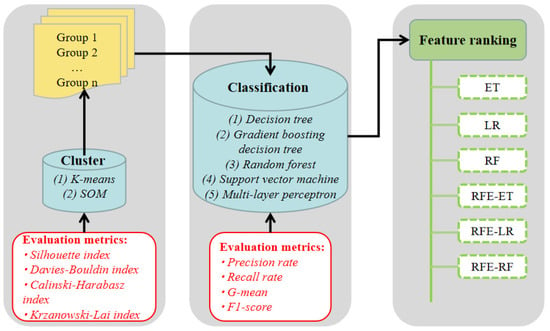

The workflow of this paper is shown in Figure 1. First, we extract the statistical features of the user behaviors in the dissipative mode and the classical mode, respectively. Second, we cluster users based on their behavior characteristics. We adopted two clustering methods and selected the best-performing method and number of clusters through four evaluation metrics. Then, we used the decision samples in each cluster of users to train a series of binary classification models. We tested five machine learning models and evaluated their performance against four evaluation metrics. Finally, we used six methods to analyze the significance of features. To motivate the feature selection of our model, we first introduce the game model and the preliminary knowledge of the one-step memory strategy model.

Figure 1.

Workflow of the proposed method.

2. Preliminaries

2.1. Game Model

To better illustrate the experimental framework, we first define five quantities: the fixed cost incurred in each round; the payoff of mutual cooperation; the payoff of cooperating with a defector; the payoff of defecting against a cooperator; and the payoff of mutual defection. In a two-strategy game, player i’s strategy is encoded by a binary variable.

This variable can only take the value 1 or 0 in each game. One value indicates that player i’s move is C, in which case their payoff is that of mutual cooperation (of cooperating with a defector) when j’s move is C (D). The other value indicates that i’s move is D, in which case their payoff is that of defecting against a cooperator (of mutual defection) when j’s move is C (D). When player i has to play with all their social neighbors in a round, their payoff in that round aggregates the outcomes of the games played with every member of the set of i’s neighbors.

2.2. Memory-One Strategies

For two-player games with two moves (2 × 2 games), a player may face four outcomes: CC, denoting mutual cooperation; CD, denoting cooperation with a defector; DC, denoting defection against a cooperator; and DD, denoting mutual defection. The current state is described by a vector in which each element represents the probability of one of the four aforementioned cases. Generally, a memory-one strategy can be written as a four-dimensional vector whose elements are the probabilities of cooperating after each of the previous outcomes.

As players update their moves with their respective memory-one strategies, the update of the state vector is a Markovian process. We can find a Markov transition matrix M to realize the update. Each element of M is determined by the strategies of individuals i and j, that is, by i’s and j’s probabilities of cooperating in the next round after experiencing the CC, CD, DC, and DD cases, respectively. One can see that the sum of each row in the matrix is always equal to 1. The state in the next round is obtained by applying M to the current state vector.
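The Markov update described above can be illustrated with a minimal sketch. It assumes the standard textbook construction for memory-one strategies, with outcomes ordered (CC, CD, DC, DD) from player i's point of view; the names `transition_matrix`, `step`, and the two example strategies are illustrative and are not taken from the experiments.

```python
from typing import List, Sequence

# Outcome order from player i's point of view: CC, CD, DC, DD.
# From player j's point of view, CD and DC are swapped.
J_VIEW = [0, 2, 1, 3]


def transition_matrix(p: Sequence[float], q: Sequence[float]) -> List[List[float]]:
    """Row-stochastic matrix M for memory-one strategies p (player i) and q (player j).

    p[k] is i's probability of cooperating after outcome k (as seen by i);
    q[k] is j's probability of cooperating after outcome k (as seen by j).
    """
    matrix = []
    for k in range(4):
        pi = p[k]            # probability that i cooperates in the next round
        qj = q[J_VIEW[k]]    # probability that j cooperates in the next round
        matrix.append([
            pi * qj,               # next outcome CC
            pi * (1 - qj),         # next outcome CD
            (1 - pi) * qj,         # next outcome DC
            (1 - pi) * (1 - qj),   # next outcome DD
        ])
    return matrix


def step(state: Sequence[float], matrix: List[List[float]]) -> List[float]:
    """One update of the state distribution: v(t+1) = v(t) M."""
    return [sum(state[r] * matrix[r][c] for r in range(4)) for c in range(4)]


tit_for_tat = [1.0, 0.0, 1.0, 0.0]          # copy the partner's last move
win_stay_lose_shift = [1.0, 0.0, 0.0, 1.0]  # cooperate after CC and DD
M = transition_matrix(tit_for_tat, win_stay_lose_shift)
row_sums = [sum(row) for row in M]
next_state = step([0.0, 1.0, 0.0, 0.0], M)  # start from outcome CD
```

Each row of `M` sums to 1 because the four products enumerate every combination of the two players' next moves.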

Notably, the background information in our experiments goes far beyond the previous gaming outcomes, so that an individual’s state at a time step cannot be simply represented by the four outcomes above. Considering what a user can observe, the state vector is redefined as a seven-dimensional vector, which will be introduced in Section 2.4.1. Before modeling the users’ strategies, one problem has to be addressed in advance, namely the diversity of human strategies. If we model a strategy with the whole dataset, the strategy will become a mixture of many different strategies. If we model a strategy with the gaming record of a single individual, the data collected in a match are not enough to train a convincing model, regardless of whether it is a machine learning model or a statistical model. More importantly, such a model is neither representative nor generalizable, and therefore cannot be used to predict other human subjects’ behaviors. To establish a useful model, we first cluster the subjects according to their moves.

2.3. Behavior Clustering

2.3.1. Feature Selection

We select nine features to represent the behavior of each individual i. The first four are the probabilities that player i cooperates after experiencing mutual cooperation (CC), cooperating with a defector (CD), defecting against a cooperator (DC), and mutual defection (DD), respectively, in the last round of the game. The fifth is their probability of cooperating when playing with a new partner. The last four are the probabilities of refusing to play the game with their partner after experiencing each of the above four cases.

It is worth mentioning that, in the traditional iterated prisoner’s dilemma, an individual cannot freely refuse to play the game with their opponent. With this freedom, individuals can allocate their gaming time as they wish. In social networks, such freedom leads to a subtle co-evolution of the network structure and individual behavior, which is common in real social dilemmas but rarely allowed in existing experimental frameworks.
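As an illustration of how such a nine-dimensional behavior vector could be estimated from a player's record, the following sketch counts conditional frequencies. The input format (pairs of context and action), the labels "NEW" and "REFUSE", and the choice to divide by all decisions made in a given context are assumptions made for this example, not the format of the server log.

```python
from collections import Counter
from typing import Iterable, List, Tuple

OUTCOMES = ["CC", "CD", "DC", "DD"]


def behavior_vector(decisions: Iterable[Tuple[str, str]]) -> List[float]:
    """Nine behavior features from (context, action) pairs.

    context: "CC", "CD", "DC", "DD" (last outcome with this partner) or "NEW".
    action:  "C", "D", or "REFUSE" (declining to play with the partner).
    """
    totals: Counter = Counter()
    counts: Counter = Counter()
    for context, action in decisions:
        totals[context] += 1
        counts[(context, action)] += 1

    def rate(context: str, action: str) -> float:
        # Contexts that never occurred are mapped to 0.0 (an arbitrary choice).
        return counts[(context, action)] / totals[context] if totals[context] else 0.0

    coop = [rate(o, "C") for o in OUTCOMES]         # cooperation after each outcome
    coop_new = [rate("NEW", "C")]                   # cooperation with a new partner
    refuse = [rate(o, "REFUSE") for o in OUTCOMES]  # refusal after each outcome
    return coop + coop_new + refuse


log = [("NEW", "C"), ("CC", "C"), ("CC", "C"), ("CD", "D"),
       ("CD", "REFUSE"), ("DD", "REFUSE"), ("NEW", "D")]
features = behavior_vector(log)
```

Vectors of this kind, one per player, would then be the input to the clustering step.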

2.3.2. Model Selection

The clustering algorithms tested in our model are K-means and self-organizing maps (SOM). The former is adopted for its strong interpretability and easy implementation. The latter is adopted since it is similar to the organizational mechanism of social networks.

2.3.3. Evaluation of the Clustering Algorithms

The evaluation methods of clustering can be roughly sorted into two categories, one of which is the external metrics including the Rand index, adjusted Rand index, Mirkin index, Hubert index, and the other is the internal metrics. As the external metrics are derived from actual categories and the types of behavior in our dataset are not clearly labeled, one can only choose unsupervised clustering metrics. In this paper, we test four internal metrics, the Silhouette (Sil) index, Davies–Bouldin (DB) index, Calinski–Harabasz (CH) index, and Krzanowski–Lai (KL) index, to measure the clustering performance of the models.
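To make the idea of an internal metric concrete, the sketch below clusters a handful of toy points with a plain K-means loop and scores the result with the silhouette index. It is a simplified, dependency-free illustration: the seeding rule (first k points), the toy data, and the function names are assumptions, and a real analysis would more likely rely on an established library implementation.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def kmeans(points: List[Point], k: int, iterations: int = 20) -> List[int]:
    """Plain Lloyd iterations; the first k points seed the centroids (a simplification)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid for every point.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = [sum(x) / len(members) for x in zip(*members)]
    return labels


def silhouette(points: List[Point], labels: List[int]) -> float:
    """Mean silhouette coefficient (requires at least two non-empty clusters)."""
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points)
               if labels[j] == labels[i] and j != i]
        if not own:  # singleton cluster: score defined as 0
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # mean distance within the own cluster
        b = min(                 # mean distance to the nearest other cluster
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


toy_points = [(0, 0), (5, 5), (0, 1), (5, 6), (1, 0), (6, 5)]
toy_labels = kmeans(toy_points, k=2)
toy_score = silhouette(toy_points, toy_labels)
```

Repeating the last two lines for each candidate number of clusters gives one silhouette value per k, which is the kind of curve compared across algorithms.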

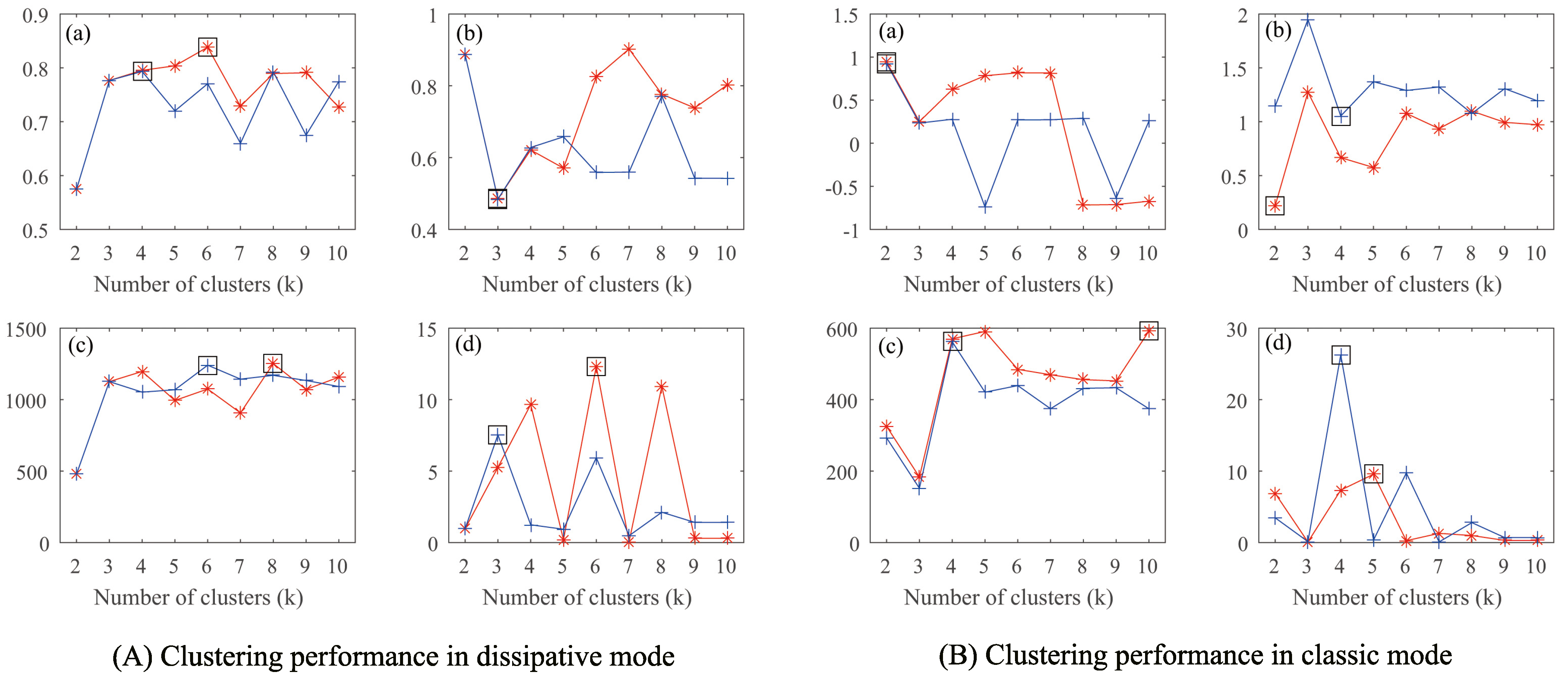

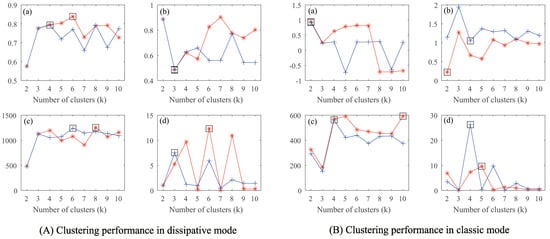

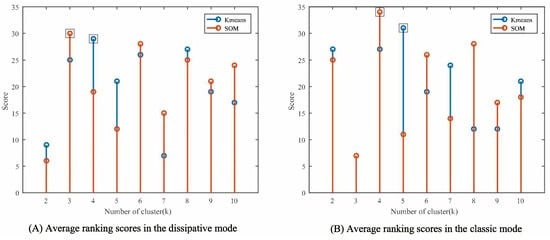

We show the four metrics for both algorithms in the dissipative and classic modes, respectively. We test a series of k values from 2 to 10, which denote the presumed numbers of behavior groups. As shown in Figure 2A, the result of the silhouette index indicates that the optimal result is achieved with for the K-means algorithm, but achieved with for the SOM algorithm in the dissipative mode. With respect to the DB index, is the best choice for both algorithms. For the CH index, () achieves the best performance for the K-means (SOM) algorithm. For the KL index, () is the optimal number of clusters for the K-means (SOM) algorithm.

Figure 2.

Clustering performance measured by (a) silhouette; (b) Davies–Bouldin, (c) Calinski–Harabasz; and (d) Krzanowski–Lai in (A) dissipative mode and (B) classic mode. The red star denotes the result of K-means and the blue cross denotes the result of SOM.

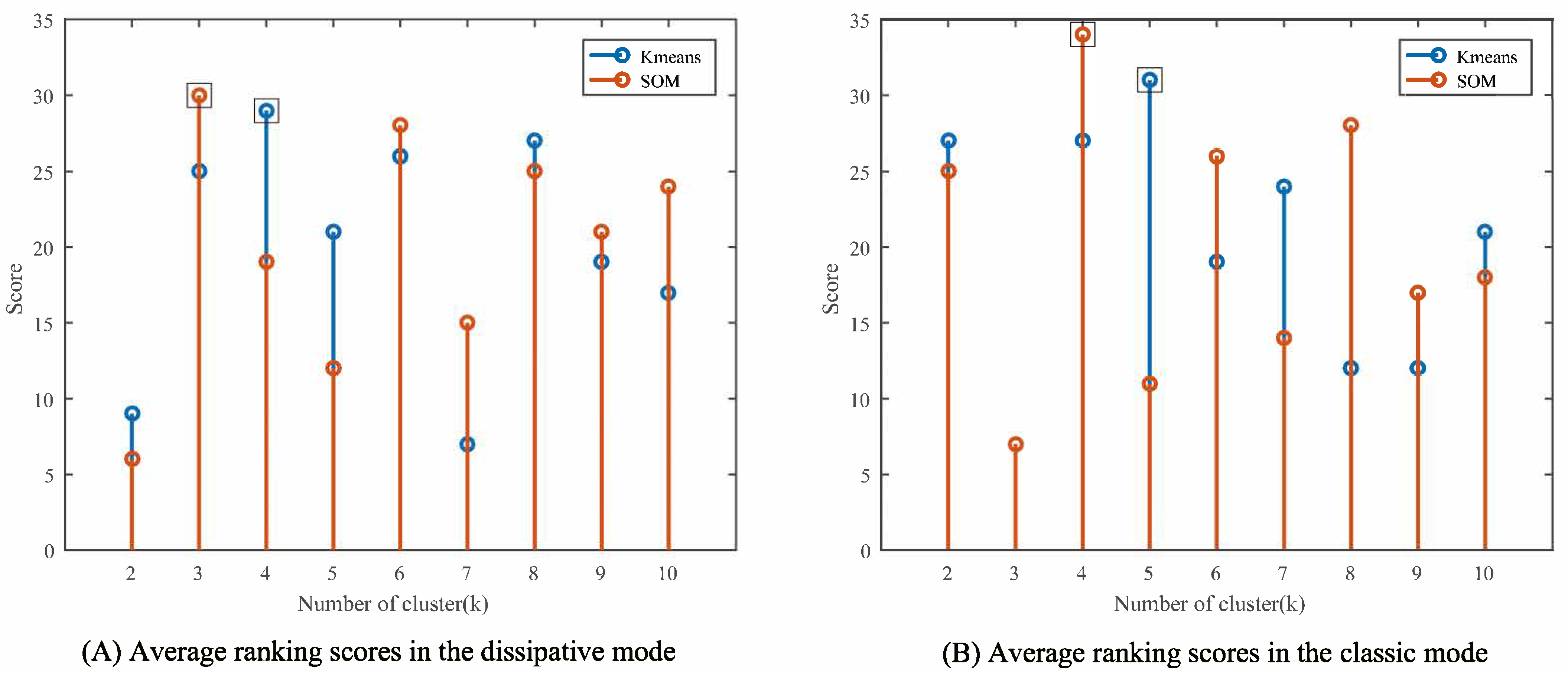

To select proper k values for the models, we adopted a ranking scoring mechanism. As we tested nine values of k, for each metric the best setting scores 9 and the worst setting scores 1, and the scores of the remaining settings are assigned according to their rank. Clearly, the highest possible total score for a given k value over the four metrics is 36.
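The ranking scoring mechanism can be sketched as follows. The metric values are invented purely for illustration (three candidate k values instead of nine), and the handling of the Davies–Bouldin index as a lower-is-better metric reflects its usual definition; ties are not treated specially in this sketch.

```python
from typing import Dict

# For each metric: True if larger values indicate better clustering.
# Silhouette, CH and KL are maximized; Davies-Bouldin is minimized.
HIGHER_IS_BETTER = {"Sil": True, "DB": False, "CH": True, "KL": True}


def ranking_scores(metrics: Dict[str, Dict[int, float]]) -> Dict[int, int]:
    """Sum, over metrics, of the rank-based score of every candidate k."""
    totals: Dict[int, int] = {}
    for name, values in metrics.items():
        # Sort from worst to best so that position + 1 is the score.
        ordered = sorted(values, key=values.get, reverse=not HIGHER_IS_BETTER[name])
        for position, k in enumerate(ordered):
            totals[k] = totals.get(k, 0) + position + 1
    return totals


# Hypothetical metric values for k = 2, 3, 4 (not experimental results).
toy_metrics = {
    "Sil": {2: 0.40, 3: 0.55, 4: 0.30},
    "DB": {2: 1.2, 3: 0.8, 4: 1.5},
    "CH": {2: 100.0, 3: 150.0, 4: 120.0},
    "KL": {2: 3.0, 3: 2.0, 4: 5.0},
}
scores = ranking_scores(toy_metrics)
best_k = max(scores, key=scores.get)
```

With nine candidate values and four metrics, the same function yields totals between 4 and 36.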

Figure 3A,B show the ranking scores of the clustering algorithms for a series of k values in both modes. As shown in Figure 3A, the K-means algorithm achieves the best performance and scores 27 for , while the SOM algorithm scores 30 for . Comparing the performance of the two algorithms, we finally choose the SOM algorithm and set in the dissipative mode. Figure 3B shows that the K-means algorithm scores 31 for , while the SOM algorithm scores 34 for . Therefore, we finally choose the SOM algorithm and set in our model for the classic mode.

Figure 3.

Average ranking scores of both clustering algorithms for a series of k values in (A) dissipative mode and (B) classic mode.

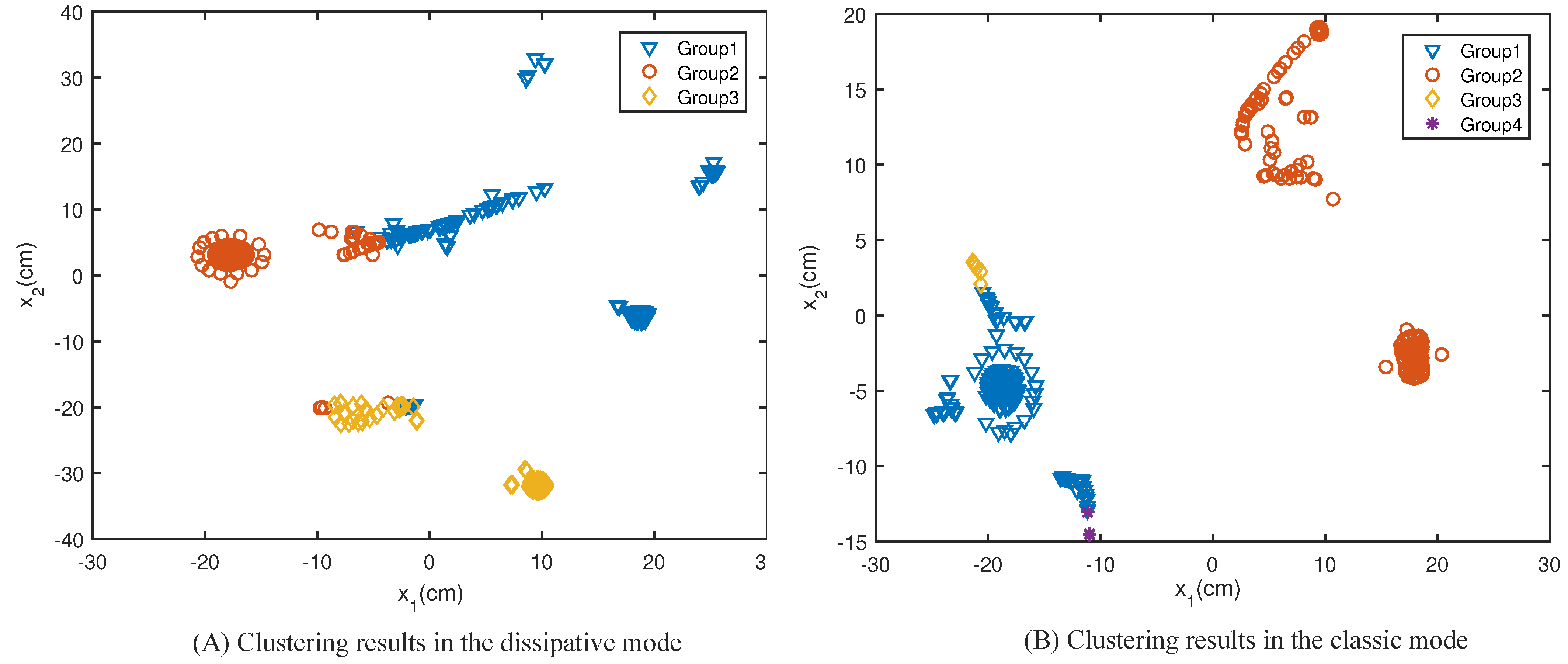

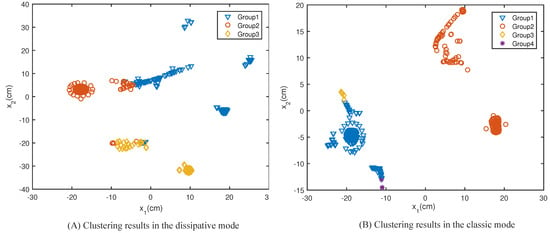

To verify the performance of clustering, we adopt the t-SNE algorithm to reduce the dimension of the behavior vectors and visualize them in Figure 4.

Figure 4.

Visualization of clustering results in (A) dissipative mode and (B) classic mode.

As shown in Figure 4, our clustering results basically match the distribution of behavior samples. The difference between Figure 4A,B indicates that the behaviors of individuals begin to be unified after the dissipation mechanism is introduced. This unity reflects the adaptive search for survival strategies in the face of crisis. As mutual cooperation is an easy survival strategy to find at the beginning of the matches, the boundaries between the behavior classes begin to be blurred in Figure 4A.

2.4. Strategy Modeling

2.4.1. Feature Selection

Through behavior clustering, we can model the gaming strategy for a group of individuals with certain behavioral commonalities. For each individual, one can only observe the previous gaming record, accumulated payoff, number of surviving neighbors, and the remaining time resource in each round. In the server log, we thus mined the information related to these features.

Gaming result. Since we only consider short-term memory decisions, the first feature that comes to mind is the gaming outcome of the last round. There are four outcomes in a game, namely CC, CD, DC, and DD. After one-hot encoding, each outcome is represented by a four-dimensional vector. For instance, with the ordering (CC, CD, DC, DD), CC is encoded as (1, 0, 0, 0). Since this feature is the only input to the traditional iterated gaming strategy, we keep the vector in our model.

Accumulated payoff F. In the dissipative mode, an individual will be eliminated when their accumulated payoff falls below 0. In the classical mode, F is always greater than 0, so no one will be eliminated. However, as the final reward is proportional to F, F should be an important feature in both modes.

Number of surviving neighbors. Neighbors in a social network are potential gaming partners. We observe that the number of surviving social neighbors in each round has a nontrivial impact on the moves of each individual. A simple explanation is that, if there are no potential gaming partners around, individuals will tend to cooperate to maintain existing cooperative relationships. If there are a large number of possible gaming partners around, the individual may defect against existing gaming partners for higher profits and, in the round after defection, look for new partners to continue the game. Therefore, we take the number of surviving neighbors as a feature of the model.

Time resource T. In our experiment, the individual can choose not only their opponent but also the duration of the game. The payoff of each game is directly proportional to the agreed gaming duration. The duration of the game reflects not only whether the players trust each other, but also how much they trust each other. Normally, the more time they assign to a game, the more they trust each other. Therefore, the time resource is also included in our input.
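Putting the four kinds of observable information together, a seven-dimensional input can be assembled as in the minimal sketch below. The ordering of the one-hot block, the function name `encode_observation`, and the example values (including the unit of the time resource) are assumptions for illustration only.

```python
from typing import List

OUTCOMES = ["CC", "CD", "DC", "DD"]


def encode_observation(last_outcome: str, payoff: float,
                       surviving_neighbors: int, time_resource: float) -> List[float]:
    """One-hot last outcome (4 values) followed by F, the neighbor count and T."""
    one_hot = [1.0 if last_outcome == o else 0.0 for o in OUTCOMES]
    return one_hot + [float(payoff), float(surviving_neighbors), float(time_resource)]


# Hypothetical observation: the player cooperated with a defector in the last round.
x = encode_observation("CD", payoff=12.5, surviving_neighbors=3, time_resource=0.4)
```

Each decision sample then pairs such a vector with the move actually chosen (C or D), which is the label of the binary classification problem.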

2.4.2. Model Selection

The complexity of human decision making makes it difficult for researchers to characterize it with a simple physical model. To summarize the gaming scenarios and records, we translate the decision-making problem into a binary classification problem. As our data are directly extracted from the server log, the data are noise-free. Based on this advantage, we test a series of supervised binary classification models. Five representative machine learning models are used in this paper, including decision tree (DT), gradient boosting decision tree (GBDT), random forest (RF), support vector machine (SVM), and multi-layer perceptron (MLP). Considering that the neural network-based models do not perform well on this issue, we did not continue to increase the number of hidden layers.

3. Experiment

3.1. Experiment Design

Our gaming experiments were performed on an online gaming platform called War of Strategies (strategywar.net). The platform was specifically developed for temporal social gaming experiments. In our experiments, all participants were required to register an account on the experimental platform in advance. After logging in, they checked the experimental schedule on the landing page, where the scenario and schedule of the matches are shown, and then chose whether to participate in a match. For beginners, we offered a training mode. There are two modes in our experiment, namely the dissipative mode and the classical mode. Each participant’s initial resource (accumulated payoff) is set to 5 units in the dissipative mode and 0 units in the classical mode. In each round, the living cost for each individual is 3 units in the dissipative mode and 0 units in the classical mode. The specific payoff matrices are shown in Table 1. In our experiment, 571 participants attended 52 matches in the dissipative mode, and 521 participants attended the remaining 29 matches in the classical mode.

Table 1.

Payoff matrices of the dissipative and classic modes.

Because of the substantial diversity of user strategies, directly modeling group strategies is not very sensible. In this paper, we first cluster user behaviors to group players with similar strategies into the same sample set. Then, we train strategy models for the different sample sets, aiming to mine typical strategies from the groups, which lays the foundation for the subsequent correlation analysis.

3.2. Dataset

By mining the server logs, we abstract the information that every individual can observe into a seven-dimensional vector

as defined in Section 2.4.1. After 52 (29) matches, a total of 3309 (3523) decision samples were recorded in the server logs in the dissipative (classic) mode. According to the clustering results introduced in Section 2.3, the sample distributions of the behavior groups are shown in Table 2. As building a convincing classification model requires a certain number of samples, we select the three largest behavior groups in each of the dissipative and classic modes.

Table 2.

Sample distributions of the largest groups in both modes.

In Table 2, one can observe that the samples are not balanced. The ratio of the number of cooperative moves to the number of defective moves in the dissipative mode is close to 9:1. To balance the samples, we used SMOTE [50], which for Python is provided by the imbalanced-learn package built on top of scikit-learn, to oversample the minority class. To reduce the effect of random sampling, we adopted the 10-fold cross-validation method [51]. Our training sets in the dissipative mode are composed of 2410 (group 1), 273 (group 2), and 294 (group 3) samples, respectively. Our training sets in the classic mode are composed of 683 (group 1), 2363 (group 2), and 115 (group 3) samples, respectively.
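The core idea of SMOTE-style oversampling can be sketched in a few lines: each synthetic sample is an interpolation between a minority sample and one of its nearest minority neighbors. The function below is a simplified illustration with assumed names and toy data, not the library implementation used in the experiments; it requires at least two minority samples.

```python
import math
import random
from typing import List, Sequence


def smote_like(minority: List[Sequence[float]], n_new: int,
               k: int = 3, seed: int = 0) -> List[List[float]]:
    """Create n_new synthetic minority samples by interpolating between neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbors of the base sample (excluding itself)
        others = sorted((p for p in minority if p is not base),
                        key=lambda p: math.dist(base, p))[:k]
        neighbor = rng.choice(others)
        gap = rng.random()  # position along the segment from base to neighbor
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Hypothetical two-dimensional minority (defection) samples.
defect_samples = [(0.0, 1.0), (0.2, 0.8), (0.1, 1.2), (0.3, 1.1)]
new_samples = smote_like(defect_samples, n_new=6)
```

When oversampling is combined with cross-validation, a common precaution is to oversample only the training folds, so that synthetic points derived from held-out samples do not leak into training.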

3.3. Evaluation and Result

3.3.1. Evaluation Metrics

Precision, recall, and F1-score are the most widely used metrics to evaluate the performance of machine learning algorithms on classification problems. The definitions of the true positive (TP), false negative (FN), false positive (FP), and true negative (TN) in our model are shown in Table 3.

Table 3.

Definition of the confusion matrix in our model.

Based on the definitions in Table 3, the evaluation metrics can be written as

denoting the recall rate of the predicted cooperative moves;

denoting the recall rate of the predicted defective moves;

denoting the precision rate of the predicted cooperative moves;

denoting the precision rate of the predicted defective moves;

which is used to comprehensively evaluate the recall rates of the two types of unbalanced samples;

taking into account the precision and recall rate of the smaller class, which is used to measure the performance of the model on unbalanced datasets.
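The metrics listed above can be computed from the four confusion-matrix counts, as in the following sketch. Several choices here are assumptions rather than the definitions of Table 3: cooperation is treated as the positive class, the combined recall measure is taken to be the geometric mean of the two recalls, and the F1-score is computed for defection as the smaller class.

```python
import math
from typing import Dict, Sequence


def evaluate(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Per-class recall and precision plus two imbalance-aware summaries.

    Assumption: cooperation "C" is the positive class and defection "D" the negative one.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == "C" and p == "C" for t, p in pairs)
    fn = sum(t == "C" and p == "D" for t, p in pairs)
    fp = sum(t == "D" and p == "C" for t, p in pairs)
    tn = sum(t == "D" and p == "D" for t, p in pairs)

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    recall_c, recall_d = ratio(tp, tp + fn), ratio(tn, tn + fp)
    precision_c, precision_d = ratio(tp, tp + fp), ratio(tn, tn + fn)
    return {
        "recall_C": recall_c, "recall_D": recall_d,
        "precision_C": precision_c, "precision_D": precision_d,
        # Geometric mean of the two recalls (one common choice, assumed here).
        "g_mean": math.sqrt(recall_c * recall_d),
        # F1 of the minority class, taken to be D in this sketch.
        "f1_D": ratio(2 * precision_d * recall_d, precision_d + recall_d),
    }


# Hypothetical labels: 8 cooperative and 2 defective moves.
truth = ["C"] * 8 + ["D"] * 2
predicted = ["C"] * 7 + ["D"] + ["D", "C"]
report = evaluate(truth, predicted)
```

On unbalanced data, the minority-class scores are the informative ones: a classifier that always predicts C would reach high accuracy while scoring 0 on recall for D.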

3.3.2. Experimental Results

To find the best classification model, we tested five classification models, namely DT, GBDT, RF, SVM, and MLP. The hyper-parameters are optimized with the grid search method in the scikit-learn package [51]. After optimization, was set to 100 for the DT and GBDT. The kernel of the SVM model is set to RBF. For the MLP model, the number of hidden layers is set to 3, with each layer containing 13 neurons. The rest of the hyper-parameters in the models are set to default.

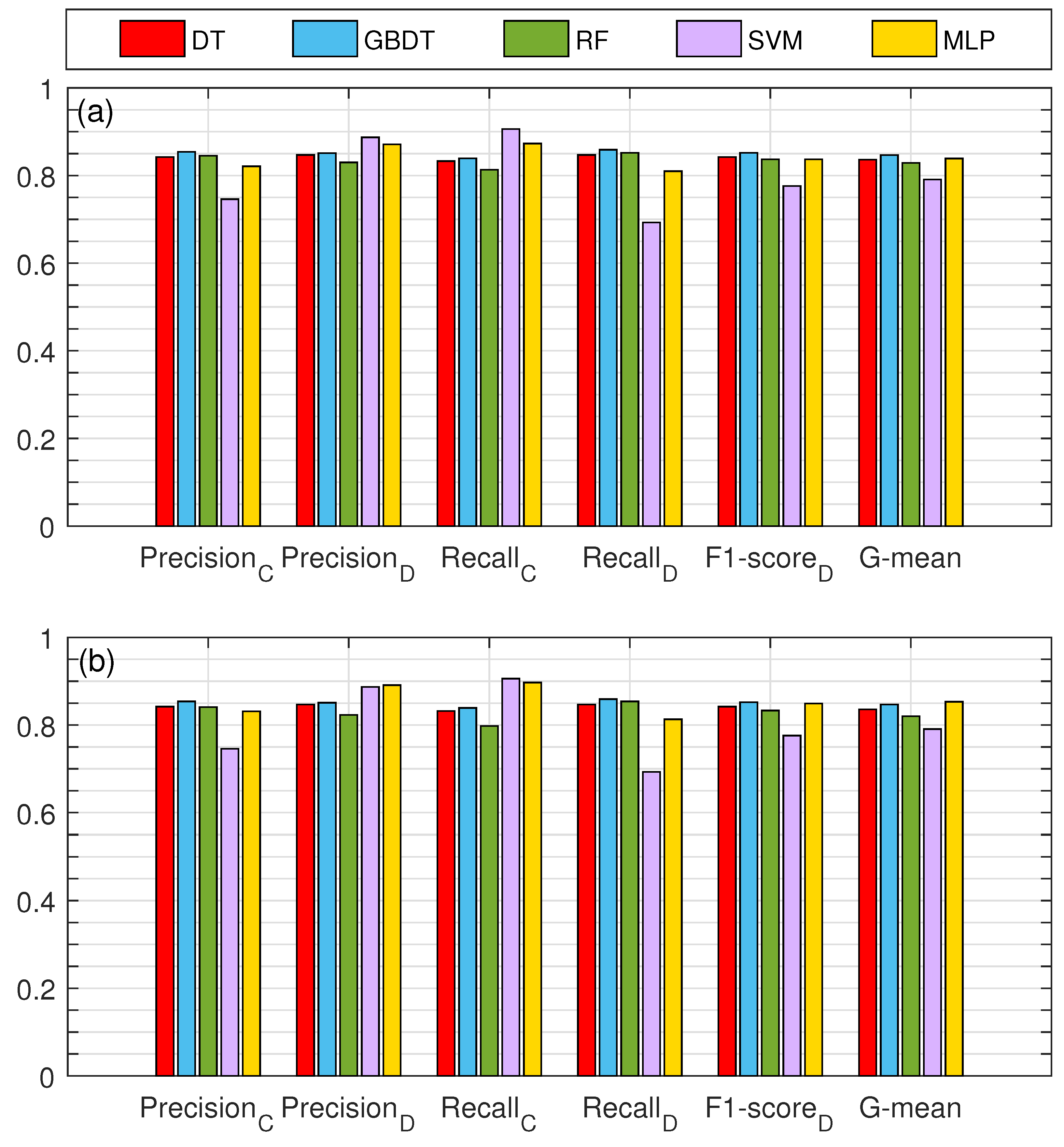

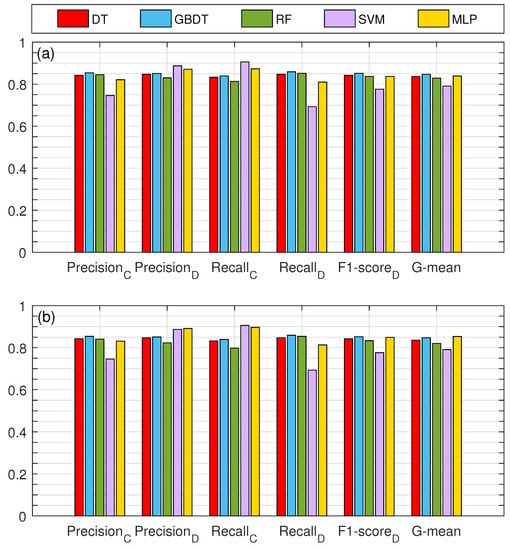

To compare the performance of the strategy model before and after clustering, Figure 5 shows the performance obtained by modeling all samples together in both modes before clustering. In terms of F1-score, whether predicting C or D, the GBDT model performs relatively better in both modes. One can observe that the F1-score values of the five models all exceed 70%, which indicates that the gaming strategies of human beings exhibit strong similarities. It has long been assumed that human gaming strategies vary widely, and our findings suggest that this may be a misconception. Note, however, that none of the F1-score values exceeds 85% in either mode.

Figure 5.

Performance evaluation of the tested models in (a) dissipative mode and (b) classic mode before clustering.

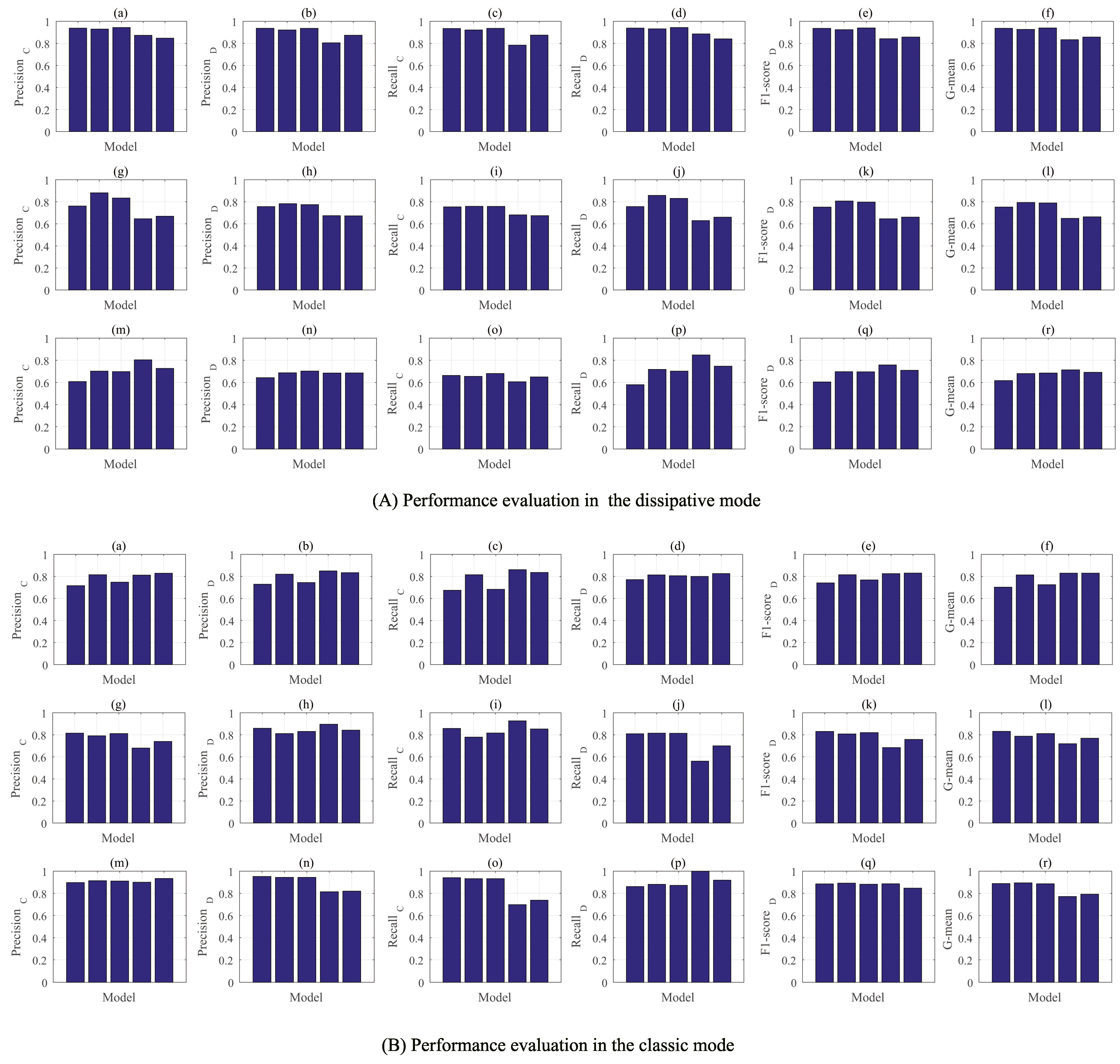

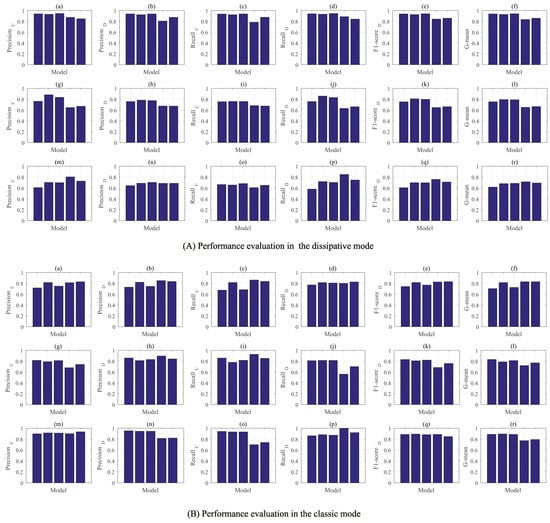

After clustering, the performance of the above models is shown in Figure 6. For the three largest groups in the dissipative mode, one can see that the models performed best in the first group. As shown in Figure 6A(d), the F1-score of the RF algorithm reaches 94%, while the minimum is 84.2%, achieved by the SVM algorithm. For the three largest groups in the classic mode, the models performed best in the third group, where the F1-score of the GBDT algorithm reaches 89.2%, while the minimum is 84.7%, achieved by the MLP algorithm, as shown in Figure 6B(q). Clearly, the performance of the models is markedly improved after clustering.

Figure 6.

Performance evaluation of the tested models after clustering. Panels (a–f) show the results in group 1, panels (g–l) show the results in group 2, and panels (m–r) show the results in group 3, in the dissipative mode and the classic mode. The results of the DT, GBDT, RF, SVM, and MLP models are listed from left to right in the panels.

Overall, the good performance of the five machine learning models suggests that human gaming strategies are not as unpredictable as they might seem. With the help of behavioral clustering, the prediction accuracy of the strategy models for some groups reaches as high as 95%. Such results have rarely been reported in previous studies, indicating that studies on human strategy modeling are promising.

In the field of network gaming, the search for factors that promote human cooperation has attracted a large number of researchers. However, there is not much work analyzing this problem from a strategy modeling perspective. The main reasons are that human game experiments are difficult to design and implement, that too many factors need to be considered in modeling, and that human decision-making processes are very complicated. To analyze the significance of features, we adopt six approaches: extra trees, logistic regression, random forest, and recursive feature elimination applied to each of these three classifiers. The extra trees and random forest algorithms can directly derive the importance of each feature by randomly perturbing the feature in the out-of-bag samples and measuring the impact on the performance of the model. For the logistic regression model, the weight of a variable represents its importance. To be consistent with the results of extra trees and random forest, we normalize the weights of the logistic regression model over the q features.

The results derived by the three former approaches are shown in Table 4. To allow comparison with the results derived by the latter three feature selection algorithms, we provide the average ranking for each of the former approaches. The recursive feature elimination approaches, in contrast, can only provide a ranking for each feature; these ranking results are shown in Table 5. Although the approaches are quite different, the feature rankings given by the two groups of methods are basically the same. One can see that F, which denotes the accumulated payoff in a match, ranks first not only in the dissipative mode but also in the classic mode. In the decision-making process of the game, human subjects give priority to their current accumulated payoff and then consider the gaming results with their opponents. The result is surprising, since most existing memory-one strategies in previous studies can be regarded as a feedback mechanism for the outcome of the last round of the game. Few studies have reported that such strategies differ so dramatically from what humans do.
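The following sketch illustrates one way to put classifier weights on the same scale as tree-based importances and to fuse several importance vectors into an average ranking. The normalization used here (absolute weight divided by the sum of absolute weights) is an assumption, and the feature names and numbers are invented for illustration; they are not the values reported in Tables 4 and 5.

```python
from typing import Dict, List


def normalize_weights(weights: Dict[str, float]) -> Dict[str, float]:
    """Scale absolute weights so that they sum to 1 (assumed normalization)."""
    total = sum(abs(w) for w in weights.values())
    return {name: abs(w) / total for name, w in weights.items()}


def average_rank(importances: List[Dict[str, float]]) -> Dict[str, float]:
    """Average, over methods, of each feature's rank (1 = most important)."""
    ranks: Dict[str, float] = {}
    for scores in importances:
        ordered = sorted(scores, key=scores.get, reverse=True)
        for position, name in enumerate(ordered, start=1):
            ranks[name] = ranks.get(name, 0.0) + position / len(importances)
    return ranks


# Hypothetical logistic-regression weights and tree-based importances.
lr_weights = {"F": -4.0, "last_outcome": 2.0, "neighbors": 1.5, "T": 0.5}
tree_importance = {"F": 0.4, "last_outcome": 0.3, "T": 0.2, "neighbors": 0.1}
lr_importance = normalize_weights(lr_weights)
fused = average_rank([lr_importance, tree_importance])
```

Averaging ranks rather than raw importances avoids comparing scores that live on different scales across methods.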

Table 4.

Feature significance ranking by the extra trees, logistic regression, and random forest models.

Table 5.

Feature significance ranking by the recursive feature elimination algorithms.

4. Conclusions

On an online gaming platform, we organized 81 matches of a temporal prisoner’s dilemma game in two modes: dissipative and classic. A total of 1092 users participated in the experiment, among whom 571 participated in 52 matches in the dissipative mode and 521 participated in 29 matches in the classic mode. Based on the data mined from the server log, we presented a novel approach based on short memory to model human gaming strategies in social dilemmas. As human strategies are diverse, we clustered the gaming behaviors of the human subjects before modeling their strategies. We modeled the individuals’ strategies as a whole for the clusters in which the numbers of samples are large enough to train a convincing binary classification model. Extensive experimental results show that human gaming strategies have a substantial diversity. One can hardly train a meaningful model if all samples are modeled together. Behavioral clustering effectively differentiates individuals with different strategies. Our results show that the performance of the tested binary strategy models is significantly improved in the largest behavior groups. In addition, we analyzed the impact of observable information on decision making. The experimental results show that, in human decision making, regardless of whether the mode is classical or dissipative, the influence of individuals’ accumulated payoffs on individual behavior is more significant than that of the gaming result of the last round. This result challenges a previous consensus that individual moves largely depend on the gaming result of the last round. Therefore, our work will promote studies on human strategy modeling in temporal collaboration systems, especially systems with elimination mechanisms. More interestingly, this experimental result also implies that the cooperative behavior of individuals is strongly correlated with their cumulative payoffs. This result will shed some light on the study of the evolution of human cooperation.

Admittedly, although the experimental results imply that the short-memory mode is suitable for modeling human gaming strategies, this does not mean that it is the only suitable mode. Other modes, such as the long-memory and mixed long-short-memory modes, may likewise be applicable. Furthermore, the topology of social networks may have a particular impact on human decision making. These possible extensions will be explored in our future work.

Author Contributions

Conceptualization, X.-H.Y. and Y.-C.Z.; methodology, X.-H.Y. and Y.-C.Z.; software, H.-Y.H.; validation, X.-H.Y. and Y.-C.Z.; formal analysis, Y.-C.Z.; investigation, H.-Y.H.; resources, Y.-C.Z.; data curation, J.-S.W.; writing—original draft preparation, H.-Y.H.; writing—review and editing, Y.-C.Z.; visualization, H.-Y.H.; supervision, Y.-C.Z.; project administration, X.-H.Y.; funding acquisition, J.-H.G. and S.-G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

Y.-C.Z. was supported by the Municipal Natural Science Foundation of Shanghai (Grant No. 17ZR1446000).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stirling, W.C. Conditional Coordination Games on Cyclic Social Influence Networks. IEEE Trans. Comput. Soc. Syst. 2019, 8, 250–267.

- Lu, J.; Xin, Y.; Zhang, Z.; Tang, S.; Tang, C.; Wan, S. Extortion and Cooperation in Rating Protocol Design for Competitive Crowdsourcing. IEEE Trans. Comput. Soc. Syst. 2021, 8, 246–259.

- Van Lange, P.; Rand, D.G. Human cooperation and the crises of climate change, COVID-19, and misinformation. Annu. Rev. Psychol. 2022, 73, 379–402.

- Axelrod, R.; Hamilton, W. The evolution of cooperation. Science 1981, 211, 1390–1396.

- Pennisi, E. On the origin of cooperation. Science 2009, 325, 1196–1199.

- Smith, J.M. Did Darwin get it right? In Did Darwin Get It Right? Essays on Games, Sex and Evolution; Springer: Boston, MA, USA, 1988; pp. 148–156.

- Gallo, E.; Yan, C. The effects of reputational and social knowledge on cooperation. Proc. Natl. Acad. Sci. USA 2015, 112, 3647–3652.

- Rand, D.G.; Nowak, M.A.; Fowler, J.H.; Christakis, N.A. Static network structure can stabilize human cooperation. Proc. Natl. Acad. Sci. USA 2014, 111, 17093.

- Mao, A.; Dworkin, L.; Suri, S.; Watts, D.J. Resilient cooperators stabilize long-run cooperation in the finitely repeated prisoner’s dilemma. Nat. Commun. 2017, 8, 13800.

- Grujić, J.; Fosco, C.; Araujo, L.; Cuesta, J.A.; Sánchez, A. Social experiments in the mesoscale: Humans playing a spatial prisoner’s dilemma. PLoS ONE 2010, 5, e13749.

- Wang, Z.; Jusup, M.; Wang, R.; Shi, L.; Iwasa, Y.; Moreno, Y.; Kurths, J. Onymity promotes cooperation in social dilemma experiments. Sci. Adv. 2017, 3, e1601444.

- Rand, D.G.; Arbesman, S.; Christakis, N.A. Dynamic social networks promote cooperation in experiments with humans. Proc. Natl. Acad. Sci. USA 2011, 108, 19193–19198.

- Fehl, K.; Post, D.; Semmann, D. Co-evolution of behaviour and social network structure promotes human cooperation. Ecol. Lett. 2011, 14, 546–551.

- Wang, J.; Suri, S.; Watts, D.J. Cooperation and assortativity with dynamic partner updating. Proc. Natl. Acad. Sci. USA 2012, 109, 14363–14368.

- Li, X.; Jusup, M.; Wang, Z.; Li, H.; Shi, L.; Podobnik, B.; Stanley, H.E.; Havlin, S.; Boccaletti, S. Punishment diminishes the benefits of network reciprocity in social dilemma experiments. Proc. Natl. Acad. Sci. USA 2018, 115, 30–35.

- Wang, L.; Jia, D.; Zhang, L.; Zhu, P.; Perc, M.; Shi, L.; Wang, Z. Lévy noise promotes cooperation in the prisoner’s dilemma game with reinforcement learning. Nonlinear Dyn. 2022, 108, 1837–1845.

- Brandenburger, A.; Nalebuff, B. The right game: Use game theory to shape strategy. Harv. Bus. Rev. 1995, 28, 128.

- Zhang, Z.; Ren, D.; Lan, Y.; Yang, S. Price competition and blockchain adoption in retailing markets. Eur. J. Oper. Res. 2022, 300, 647–660.

- Ohtsuki, H.; Hauert, C.; Lieberman, E.; Nowak, M.A. A simple rule for the evolution of cooperation on graphs and social networks. Nature 2006, 441, 502–505.

- Nowak, M.A. Five rules for the evolution of cooperation. Science 2006, 314, 1560–1563.

- van Veelen, M.; García, J.; Rand, D.G.; Nowak, M.A. Direct reciprocity in structured populations. Proc. Natl. Acad. Sci. USA 2012, 109, 9929–9934.

- Stewart, A.; Raihani, N. Group reciprocity and the evolution of stereotyping. Proc. R. Soc. B 2023, 290, 20221834.

- Fehr, E.; Gächter, S. Cooperation and punishment in public goods experiments. Soc. Sci. Electron. Publ. 2000, 90, 980–994.

- Sigmund, K.; Hauert, C.; Nowak, M.A. Reward and punishment. Proc. Natl. Acad. Sci. USA 2001, 98, 10757–10762.

- Dreber, A.; Rand, D.G.; Fudenberg, D.; Nowak, M.A. Winners do not punish. Nature 2008, 452, 348–351.

- Mussweiler, T.; Ockenfels, A. Similarity increases altruistic punishment in humans. Proc. Natl. Acad. Sci. USA 2013, 110, 19318–19323.

- Yang, H.X.; Rong, Z. Mutual punishment promotes cooperation in the spatial public goods game. Chaos Solitons Fractals 2015, 77, 230–234.

- McNamara, J.M.; Doodson, P. Reputation can enhance or suppress cooperation through positive feedback. Nat. Commun. 2015, 6, 6134.

- Jordan, J.J.; Hoffman, M.; Nowak, M.A.; Rand, D.G. Uncalculating cooperation is used to signal trustworthiness. Proc. Natl. Acad. Sci. USA 2016, 113, 8658.

- Gao, C.; Liu, J. Network-based modeling for characterizing human collective behaviors during extreme events. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 171–183.

- Nowak, M.A.; May, R.M. Evolutionary games and spatial chaos. Nature 1992, 359, 826–829.

- Szabó, G.; Fáth, G. Evolutionary games on graphs. Phys. Rep. 2007, 446, 97–216.

- Gómez-Gardeñes, J.; Campillo, M.; Floría, L.M.; Moreno, Y. Dynamical organization of cooperation in complex topologies. Phys. Rev. Lett. 2007, 98, 108103.

- Nowak, M.A.; Bonhoeffer, S.; May, R.M. Spatial games and the maintenance of cooperation. Proc. Natl. Acad. Sci. USA 1994, 91, 4877–4881.

- Santos, F.C.; Pacheco, J.M. Scale-free networks provide a unifying framework for the emergence of cooperation. Phys. Rev. Lett. 2005, 95, 098104.

- Suri, S.; Watts, D.J. Cooperation and contagion in web-based and networked public goods experiments. PLoS ONE 2011, 10, 3–8.

- Traulsen, A.; Semmann, D.; Sommerfeld, R.D.; Krambeck, H.; Milinski, M. Human strategy updating in evolutionary games. Proc. Natl. Acad. Sci. USA 2010, 107, 2962–2966.

- Hamilton, M.J.; Burger, O.; DeLong, J.P.; Walker, R.S.; Moses, M.E.; Brown, J.H. Population stability and cooperation and the invasibility of the human species. Proc. Natl. Acad. Sci. USA 2009, 106, 12255–12260.

- Gracia-Lázaro, C.; Ferrer, A.; Ruiz, G.; Tarancón, A.; Cuesta, J.A.; Sánchez, A.; Moreno, Y. Heterogeneous networks do not promote cooperation when humans play a prisoner’s dilemma. Proc. Natl. Acad. Sci. USA 2012, 109, 12922–12926.

- Pacheco, J.M.; Traulsen, A.; Nowak, M.A. Active linking in evolutionary games. J. Theor. Biol. 2006, 243, 437–443.

- Melamed, D.; Harrell, A.; Simpson, B. Cooperation and clustering and assortative mixing in dynamic networks. Proc. Natl. Acad. Sci. USA 2018, 115, 951.

- Peng, H.; Qian, C.; Zhao, D.; Zhong, M.; Han, J.; Wang, W. Targeting attack hypergraph networks. Chaos An Interdiscip. J. Nonlinear Sci. 2022, 32, 073121.

- Peng, H.; Qian, C.; Zhao, D.; Zhong, M.; Ling, X.; Wang, W. Disintegrate hypergraph networks by attacking hyperedge. J. King Saud Univ.-Comput. Inf. Sci. 2022.

- Zhao, S. Player Behavior Modeling for Enhancing Role-Playing Game Engagement. IEEE Trans. Comput. Soc. Syst. 2021, 8, 464–474.

- Wang, J.; Hipel, K.W.; Fang, L.; Xu, H.; Kilgour, D.M. Behavioral analysis in the graph model for conflict resolution. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 904–916.

- Bristow, M.; Fang, L.; Hipel, K.W. Agent-based modeling of competitive and cooperative behavior under conflict. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 834–850.

- Kraines, D.P.; Kraines, V.Y. Pavlov and the prisoner’s dilemma. Theory Decis. 1989, 26, 47–79.

- Nowak, M.; Sigmund, K. A strategy of win-stay, lose-shift that outperforms tit-for-tat in the prisoner’s dilemma game. Nature 1993, 364, 56–58.

- Perc, M. Does strong heterogeneity promote cooperation by group interactions. New J. Phys. 2011, 13, 123027.

- Han, H.; Wang, W.; Mao, B. Borderline-SMOTE: A new over-sampling method in imbalanced data sets learning. In Proceedings of the International Conference on Intelligent Computing, ICIC 2005, Hefei, China, 23–26 August 2005; pp. 878–887.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).