FDDS: Feature Disentangling and Domain Shifting for Domain Adaptation

Abstract

1. Introduction

- We propose a new non-linear feature disentangling method that uses learnable weights to determine the proportions of content features and style features in the source and target domains. Content and style features are then separated according to these proportions;

- We integrate a dual-attention mechanism, combining spatial and channel attention, into the domain shifter network, which preserves source-domain performance after domain shifting. As a result, our model can switch between serving the source and target domains;

- We evaluate our approach on digit classification and semantic segmentation tasks. Our method outperforms competing approaches, particularly on semantic segmentation: FDDS achieves the best result in 11 of the 19 classes and the best or second-best result in 16 classes.
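
As a rough illustration of the first two contributions, the sketch below splits a feature map into content and style parts using a learnable per-channel proportion, and then applies channel attention followed by spatial attention. It is a minimal NumPy sketch under assumptions, not the FDDS implementation: the function names (`split_content_style`, `dual_attention`), the sigmoid gate, and the pooling choices are hypothetical, and the non-linear mapping and network layers of the actual model are not reproduced here.

```python
import numpy as np


def sigmoid(x):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-x))


def split_content_style(features, weights):
    """Split features of shape (C, H, W) into content and style parts.

    `weights` has shape (C,) and stands in for learnable parameters
    (hypothetical). A sigmoid maps each weight to a proportion in (0, 1):
    that share of the channel is treated as content, the rest as style.
    """
    proportion = sigmoid(weights)[:, None, None]  # broadcast over H and W
    content = proportion * features
    style = features - content
    return content, style


def dual_attention(features, channel_weights):
    """Apply channel attention, then spatial attention, to (C, H, W) features.

    Assumed simplification: channel attention gates each channel using its
    global average scaled by a learnable weight; spatial attention gates each
    location using the mean over channels.
    """
    channel_summary = features.mean(axis=(1, 2))      # shape (C,)
    channel_gate = sigmoid(channel_weights * channel_summary)
    attended = features * channel_gate[:, None, None]

    spatial_summary = attended.mean(axis=0)           # shape (H, W)
    spatial_gate = sigmoid(spatial_summary)
    return attended * spatial_gate[None, :, :]


rng = np.random.default_rng(0)
feature_map = rng.normal(size=(4, 8, 8))  # toy feature map: 4 channels, 8x8
mix_weights = np.zeros(4)                 # sigmoid(0) = 0.5, an even split

content, style = split_content_style(feature_map, mix_weights)
shifted = dual_attention(content, channel_weights=np.ones(4))
```

In this toy setup the content and style parts always sum back to the input, and both attention gates lie in (0, 1), so attention can only rescale features, never amplify them. In a trained model the weights would be updated by the training loss rather than fixed by hand.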

2. Related Work

2.1. Deep Domain Adaptation

2.2. Attention Mechanism in Image Generation

2.3. Feature Disentangling

3. Materials and Methods

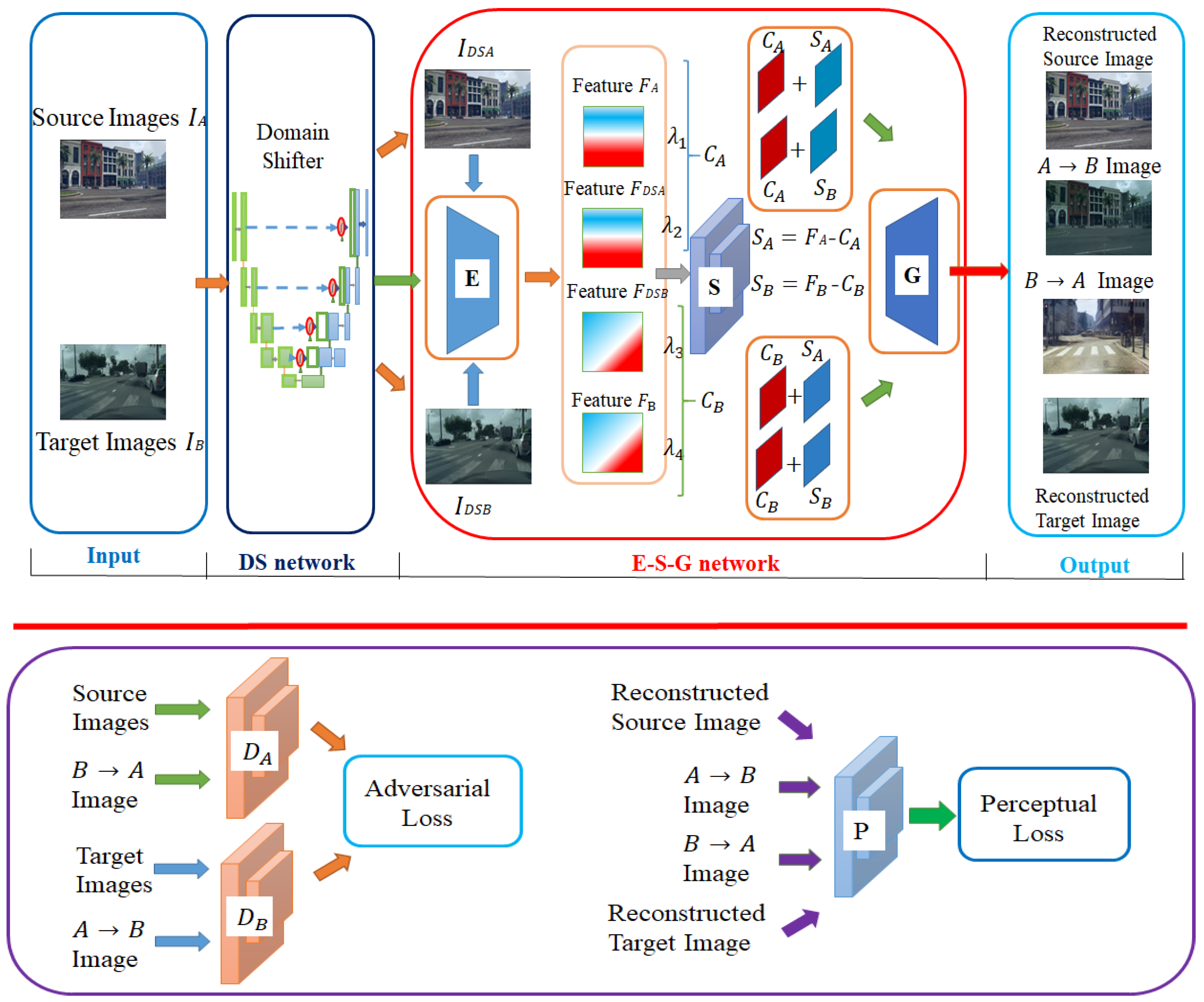

3.1. Model Description

3.2. Domain Shifter Network

3.3. Feature Disentangling Module

3.4. Training Loss

4. Results

4.1. Digit Classification

4.1.1. Dataset

4.1.2. Baselines and Implementation Details

- Source Only: The classifier trained on the source domain is applied directly to the target domain;

- DANN [24]: Uses adversarial training to improve the feature extraction ability of the network;

- DSN [16]: Decouples features into private and common features, and recognizes the target domain through the common features;

- ADDA [9]: An adversarial (GAN-based) method built on a discriminative model;

- CyCADA [13]: Combines feature-level and pixel-level adaptation, and introduces a cycle-consistency loss into domain-adaptive learning;

- GTA [43]: Combines generation and discrimination, using a GAN to learn similar features;

- LC [14]: Proposes a lightweight calibrator component and also pays attention to the performance of the source domain;

- DRANet [15]: Decouples features into style and content features, and proposes non-linear decoupling;

- CDA [44]: Uses two-stage contrastive learning to learn a good feature separation boundary;

- Target Only: Trains and tests directly on the target domain, which is equivalent to supervised learning.

4.1.3. Experimental Process

4.2. Semantic Segmentation Task

4.2.1. Dataset

4.2.2. Baselines and Implementation Details

- Source Only: The classifier trained on the source domain is applied directly to the target domain;

- FCNs [50]: A classical pixel-level semantic segmentation method based on fully convolutional networks;

- CyCADA [13]: Combines feature-level and pixel-level adaptation, and introduces a cycle-consistency loss into domain-adaptive learning;

- SIBAN [51]: Classifies by extracting shared features;

- LC [14]: Proposes a lightweight calibrator component and also pays attention to the performance of the source domain;

- DRANet [15]: Decouples features into style and content features, and proposes non-linear decoupling;

- Target Only: Trains and tests directly on the target domain, which is equivalent to supervised learning.

4.2.3. Experimental Process

5. Discussion

5.1. Feature and Pixel-Level Domain Adaptation

5.2. Feature Disentangling Method

5.3. Domain Shifter Component

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhuang, B.H.; Tan, M.K.; Liu, J.; Liu, L.Q.; Reid, I.; Shen, C.H. Effective Training of Convolutional Neural Networks With Low-Bitwidth Weights and Activations. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 6140–6152.

- Matsuo, Y.; LeCun, Y.; Sahani, M.; Precup, D.; Silver, D.; Sugiyama, M.; Uchibe, E.; Morimoto, J. Deep learning, reinforcement learning, and world models. Neural Netw. 2022, 152, 267–275.

- Recht, B.; Roelofs, R.; Schmidt, L.; Shankar, V. Do imagenet classifiers generalize to imagenet? In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 5389–5400.

- Liang, J.; Hu, D.; Feng, J. Domain adaptation with auxiliary target domain-oriented classifier. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 16632–16642.

- Mao, C.; Jiang, L.; Dehghani, M.; Vondrick, C.; Sukthankar, R.; Essa, I. Discrete representations strengthen vision transformer robustness. arXiv 2021, arXiv:2111.10493.

- Chadha, A.; Andreopoulos, Y. Improved techniques for adversarial discriminative domain adaptation. IEEE Trans. Image Process. 2020, 29, 2622–2637.

- Noa, J.; Soto, P.J.; Costa, G.; Wittich, D.; Feitosa, R.Q.; Rottensteiner, F. Adversarial discriminative domain adaptation for deforestation detection. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 3, 151–158.

- Long, M.; Cao, Y.; Wang, J. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 97–105.

- Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.

- Xu, Y.; Fan, H.; Pan, H.; Wu, L.; Tang, Y. Unsupervised Domain Adaptive Object Detection Based on Frequency Domain Adjustment and Pixel-Level Feature Fusion. In Proceedings of the 2022 12th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Baishan, China, 27–31 July 2022; pp. 196–201.

- Li, Z.; Togo, R.; Ogawa, T.; Haseyama, M. Improving Model Adaptation for Semantic Segmentation by Learning Model-Invariant Features with Multiple Source-Domain Models. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 421–425.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.Y.; Darrell, T. CyCADA: Cycle-consistent adversarial domain adaptation. In Proceedings of the International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018; pp. 1989–1998.

- Ye, S.; Wu, K.; Zhou, M.; Yang, Y.; Tan, S.H.; Xu, K.; Song, J.; Bao, C.; Ma, K. Light-weight calibrator: A separable component for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 13736–13745.

- Lee, S.; Cho, S.; Im, S. Dranet: Disentangling representation and adaptation networks for unsupervised cross-domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 15252–15261.

- Bousmalis, K.; Trigeorgis, G.; Silberman, N.; Krishnan, D.; Erhan, D. Domain separation networks. Adv. Neural Inf. Process. Syst. 2016, 29, 343–351.

- Liu, Y.C.; Yeh, Y.Y.; Fu, T.C.; Wang, S.D.; Chiu, W.C.; Wang, Y. Detach and adapt: Learning cross-domain disentangled deep representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8867–8876.

- Zhang, R.; Tang, S.; Li, Y.; Guo, J.; Zhang, Y.; Li, J.; Yan, S. Style separation and synthesis via generative adversarial networks. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 183–191.

- Li, C.; Tan, Y.; Chen, W.; Luo, X.; Wang, Z. Attention unet++: A nested attention-aware u-net for liver ct image segmentation. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Virtual Conference, 25–28 October 2020; pp. 345–349.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 27, 3320–3328.

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474.

- Long, M.; Wang, J.; Jordan, M.I. Unsupervised domain adaptation with residual transfer networks. Adv. Neural Inf. Process. Syst. 2016, 29, 136–144.

- Sun, J.; Wang, Z.H.; Wang, W.; Li, H.J.; Sun, F.M.; Ding, Z.M. Joint Adaptive Dual Graph and Feature Selection for Domain Adaptation. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1453–1466.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.O.; Marchand, M.; Lempitsky, V. Domain-adversarial neural networks. arXiv 2014, arXiv:1412.4446.

- Akada, H.; Bhat, S.F.; Alhashim, I.; Wonka, P. Self-Supervised Learning of Domain Invariant Features for Depth Estimation. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–7 January 2022; pp. 997–1007.

- Bousmalis, K.; Silberman, N.; Dohan, D.; Erhan, D.; Krishnan, D. Unsupervised pixel-level domain adaptation with generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3722–3731.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Chen, X.; Xu, C.; Yang, X.; Tao, D. Attention-gan for object transfiguration in wild images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 164–180.

- Emami, H.; Aliabadi, M.M.; Dong, M.; Chinnam, R.B. Spa-gan: Spatial attention gan for image-to-image translation. IEEE Trans. Multimed. 2020, 23, 391–401.

- Daras, G.; Odena, A.; Zhang, H.; Dimakis, A.G. Your local GAN: Designing two dimensional local attention mechanisms for generative models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 14531–14539.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, D.L.; Xiang, S.L.; Zhou, Y.; Mu, J.Z.; Zhou, H.B.; Irampaye, R. Multiple-Attention Mechanism Network for Semantic Segmentation. Sensors 2022, 22, 4477.

- Gonzalez-Garcia, A.; Joost, V.; Bengio, Y. Image-to-image translation for cross-domain disentanglement. arXiv 2018, arXiv:1805.09730.

- Zou, Y.; Yang, X.; Yu, Z.; Kumar, B.V.; Kautz, J. Joint disentangling and adaptation for cross-domain person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Virtual Conference, 23–28 August 2020; pp. 87–104.

- Tenenbaum, J.B.; Freeman, W.T. Separating style and content with bilinear models. Neural Comput. 2000, 12, 1247–1283.

- Elgammal, A.; Lee, C.S. Separating style and content on a nonlinear manifold. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Washington, DC, USA, 27 June–2 July 2004; pp. 478–485.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2414–2423.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 694–711.

- Lecun, Y.; Bottou, L. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Hull, J.J. A database for handwritten text recognition research. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 16, 550–554.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Ng, A.Y. Reading digits in natural images with unsupervised feature learning. In Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning, Sierra Nevada, Spain, 16–17 December 2011; pp. 1–9.

- Sankaranarayanan, S.; Balaji, Y.; Castillo, C.D.; Chellappa, R. Generate to adapt: Aligning domains using generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8503–8512.

- Yadav, N.; Alam, M.; Farahat, A.; Ghosh, D.; Gupta, C.; Ganguly, A.R. CDA: Contrastive-adversarial domain adaptation. arXiv 2023, arXiv:2301.03826.

- Richter, S.R.; Vineet, V.; Roth, S.; Koltun, V. Playing for data: Ground truth from computer games. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 102–118.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Zheng, Z.; Yang, Y. Rectifying Pseudo Label Learning via Uncertainty Estimation for Domain Adaptive Semantic Segmentation. Int. J. Comput. Vis. 2021, 129, 1106–1120.

- Guan, L.; Yuan, X. Iterative Loop Method Combining Active and Semi-Supervised Learning for Domain Adaptive Semantic Segmentation. arXiv 2023, arXiv:2301.13361.

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 640–651.

- Luo, Y.; Liu, P.; Guan, T.; Yu, J.; Yang, Y. Significance-Aware Information Bottleneck for Domain Adaptive Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6777–6786.

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated residual networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 472–480.

- Hu, S.X.; Li, D.; Stühmer, J.; Kim, M.; Hospedales, T.M. Pushing the Limits of Simple Pipelines for Few-Shot Learning: External Data and Fine-Tuning Make a Difference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 9058–9067.

- Zhong, H.Y.; Yu, S.M.; Trinh, H.; Lv, Y.; Yuan, R.; Wang, Y.A. Fine-tuning transfer learning based on DCGAN integrated with self-attention and spectral normalization for bearing fault diagnosis. Measurement 2023, 210, 112421.

| Method | MNIST to USPS | USPS to MNIST | SVHN to MNIST |
|---|---|---|---|
| Source Only | 80.2 | 44.9 | 67.1 |
| DANN (2014) [24] | 85.1 | 73.0 | 70.7 |
| DSN (2016) [16] | 85.1 | - | 82.7 |
| ADDA (2017) [9] | 90.1 | 95.2 | 80.1 |
| CyCADA (2018) [13] | 95.6 | 96.5 | 90.4 |
| GTA (2018) [43] | 93.4 | 91.9 | 93.5 |
| LC (2020) [14] | 95.6 | 97.1 | 97.1 |
| DRANet (2021) [15] | 97.6 | 96.9 | - |
| CDA (2023) [44] | 96.6 | 97.4 | 96.8 |
| Ours | 98.1 | 97.6 | 96.9 |
| Target Only | 97.8 | 99.1 | 99.5 |

| Method | Road | Sidewalk | Building | Wall | Fence | Pole | Traffic Light | Traffic Sign | Vegetation | Terrain | Sky |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Source Only | 42.7 | 26.3 | 51.7 | 5.5 | 6.8 | 13.8 | 23.6 | 6.9 | 75.5 | 11.5 | 36.8 |
| FCNs (2015) [50] | 70.5 | 32.3 | 62.2 | 14.8 | 5.4 | 10.8 | 14.3 | 2.7 | 79.3 | 21.2 | 64.6 |
| CyCADA (2018) [13] | 79.1 | 33.1 | 77.9 | 23.4 | 17.3 | 32.1 | 33.3 | 31.8 | 81.5 | 26.7 | 69.0 |
| SIBAN (2019) [51] | 83.4 | 13.1 | 77.8 | 20.3 | 17.6 | 24.5 | 22.8 | 9.7 | 81.4 | 29.5 | 77.3 |
| LC (2020) [14] | 83.5 | 35.2 | 79.9 | 24.6 | 16.2 | 32.8 | 33.1 | 31.8 | 81.7 | 29.2 | 66.3 |
| DRANet (2021) [15] | 83.5 | 33.7 | 80.7 | 22.7 | 19.2 | 25.2 | 28.6 | 25.8 | 84.1 | 32.8 | 84.4 |
| Ours | 84.1 | 35.7 | 80.9 | 23.5 | 20.7 | 26.7 | 29.0 | 27.5 | 84.5 | 33.9 | 79.6 |
| Target Only | 97.3 | 79.8 | 88.6 | 32.5 | 48.2 | 56.3 | 63.6 | 73.3 | 89.0 | 58.9 | 93.0 |

| Method | Person | Rider | Car | Truck | Bus | Train | Motorbike | Bicycle | mIoU | fwIoU | Pixel Acc. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Source Only | 49.3 | 0.9 | 46.7 | 3.4 | 5.0 | 0.0 | 5.0 | 1.4 | 21.7 | 47.4 | 62.5 |
| FCNs (2015) [50] | 44.1 | 4.3 | 70.3 | 8.0 | 7.2 | 0.0 | 3.6 | 0.1 | 27.1 | - | - |
| CyCADA (2018) [13] | 62.8 | 14.7 | 74.5 | 20.9 | 25.6 | 6.9 | 18.8 | 20.4 | 39.5 | 72.4 | 82.3 |
| SIBAN (2019) [51] | 42.7 | 10.8 | 75.8 | 21.8 | 18.9 | 5.7 | 14.1 | 2.1 | 34.2 | - | - |
| LC (2020) [14] | 63.0 | 14.3 | 81.8 | 21.0 | 26.5 | 8.5 | 16.7 | 24.0 | 40.5 | 75.1 | 84.0 |
| DRANet (2021) [15] | 53.3 | 13.6 | 75.7 | 21.7 | 30.6 | 15.8 | 20.3 | 19.5 | 40.6 | 75.6 | 84.9 |
| Ours | 52.9 | 15.3 | 75.8 | 21.8 | 31.3 | 9.7 | 20.9 | 26.7 | 41.1 | 76.2 | 85.7 |
| Target Only | 78.2 | 55.2 | 92.2 | 45.0 | 67.3 | 39.6 | 49.9 | 73.6 | 67.4 | 89.6 | 94.3 |
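
The three summary columns (mIoU, fwIoU, Pixel Acc.) can be computed from a class confusion matrix. The sketch below uses the standard definitions of pixel accuracy, mean IoU, and frequency-weighted IoU; the function name `segmentation_metrics` and the toy matrix are illustrative assumptions, not taken from the paper. It also checks that averaging the 19 per-class IoU values in the "Ours" rows reproduces the reported mIoU.

```python
import numpy as np


def segmentation_metrics(confusion):
    """Return (pixel_acc, mean_iou, fw_iou) from a KxK confusion matrix.

    confusion[i, j] counts pixels of true class i predicted as class j.
    Assumes every class occurs at least once, so no union is zero.
    """
    confusion = np.asarray(confusion, dtype=float)
    true_positive = np.diag(confusion)
    per_class_truth = confusion.sum(axis=1)
    per_class_pred = confusion.sum(axis=0)
    union = per_class_truth + per_class_pred - true_positive

    iou = true_positive / union
    pixel_acc = true_positive.sum() / confusion.sum()
    mean_iou = iou.mean()
    frequency = per_class_truth / confusion.sum()
    fw_iou = (frequency * iou).sum()
    return pixel_acc, mean_iou, fw_iou


# Toy 2-class example (made-up counts, for illustration only).
toy = [[50, 10],
       [5, 35]]
pixel_acc, mean_iou, fw_iou = segmentation_metrics(toy)

# Per-class IoU (%) of the "Ours" rows, in table order Road ... Bicycle.
ours_iou = [84.1, 35.7, 80.9, 23.5, 20.7, 26.7, 29.0, 27.5, 84.5, 33.9, 79.6,
            52.9, 15.3, 75.8, 21.8, 31.3, 9.7, 20.9, 26.7]
ours_miou = round(sum(ours_iou) / len(ours_iou), 1)  # matches the reported 41.1
```

Because fwIoU weights each class by its pixel frequency, it is dominated by large classes such as road and building, which is why it sits well above mIoU in every row.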

| Non-Linear Disentangling | Normalization | USPS to MNIST | MNIST to USPS | SVHN to MNIST |
|---|---|---|---|---|
| | | 86.2 | 12.7 | 70.4 |
| √ | | 90.5 | 91.6 | 83.5 |
| | √ | 91.1 | 97.3 | 90.6 |
| √ | √ | 97.6 | 98.1 | 96.9 |

| Method | Source Acc. (Before Adapt) | Source Acc. (After Adapt) | Target Acc. (After Adapt) |
|---|---|---|---|
| ADDA (2017) [9] | 90.5 | 67.1 | 80.1 |
| CyCADA (2018) [13] | 92.3 | 31.4 | 90.4 |
| LC (2020) [14] | 93.9 | 90.8 | 97.1 |
| Ours | 94.1 | 92.6 | 96.9 |
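
One way to read this table is as the source accuracy lost by adapting. The snippet below only subtracts the "after" column from the "before" column for each row; the variable names are illustrative and no values beyond those in the table are used.

```python
# (source acc. before adapt, source acc. after adapt), copied from the table.
source_accuracy = {
    "ADDA": (90.5, 67.1),
    "CyCADA": (92.3, 31.4),
    "LC": (93.9, 90.8),
    "Ours": (94.1, 92.6),
}

# Drop in source accuracy (percentage points) caused by adaptation.
source_drop = {
    method: round(before - after, 1)
    for method, (before, after) in source_accuracy.items()
}

for method, drop in source_drop.items():
    print(f"{method}: {drop} points")
```

On these numbers the drop is 1.5 points for our method, against 3.1 for LC, 23.4 for ADDA, and 60.9 for CyCADA.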

| Task | Original Network (ON) | Num. of Param. in ON (M) | Num. of Param. in DS (M) | Ratio of DS to ON (%) |
|---|---|---|---|---|
| Digit Classification | LeNet | 3.1 | 0.19 | 6.12 |
| Semantic Segmentation | DRN-26 | 20.6 | 0.06 | 0.29 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, H.; Gao, F.; Zhang, Q. FDDS: Feature Disentangling and Domain Shifting for Domain Adaptation. Mathematics 2023, 11, 2995. https://doi.org/10.3390/math11132995
