A Novel Hybrid Algorithm Based on Jellyfish Search and Particle Swarm Optimization

Abstract

1. Introduction

- HJSPSO delivers a significant improvement in accuracy, at fast convergence rates, compared with the original PSO and JSO techniques.

- The superiority of HJSPSO is verified by comparing it with nine well-known optimization techniques, including an existing hybrid algorithm (HBJSA).

- The robustness of HJSPSO is validated through unimodal, multimodal, and large-scale benchmark test functions.

2. Review of PSO and JSO Applications

3. The Existing Optimization Formulation

3.1. PSO Formulation

3.2. JSO Formulation

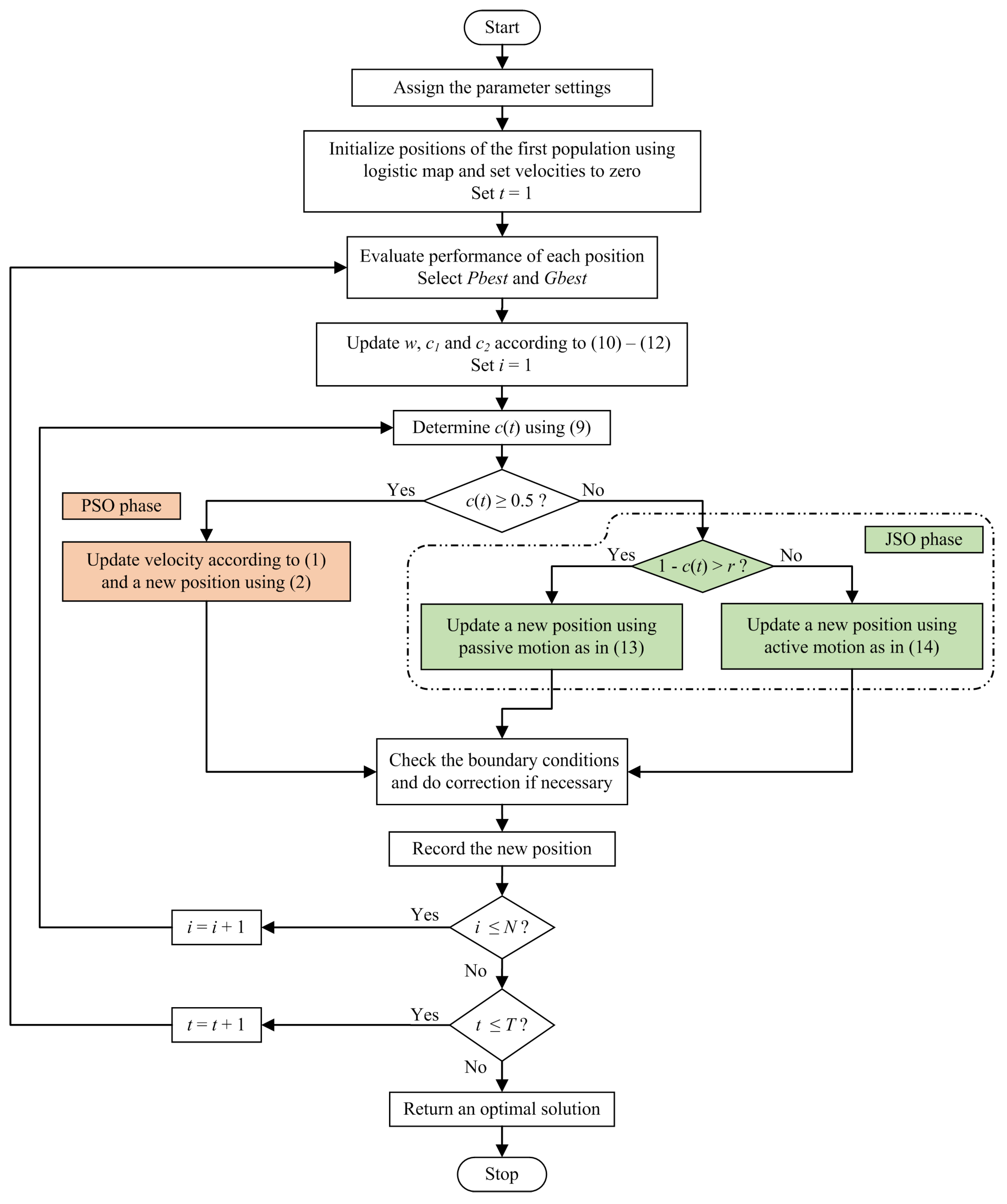

4. Proposed Optimization Formulation

- The ocean-current-following movement in JSO is replaced with the velocity- and position-updating mechanism of PSO to take advantage of its exploration capability (referred to as the PSO phase).

- The passive motion in JSO is modified by introducing a new formulation with respect to the global solution to improve the exploration capability (referred to as the JSO phase).

- Nonlinear time-varying inertia weight and cognitive and social coefficients are added to enable the technique to escape from the local optimum.

- The time control mechanism of JSO is used to switch between PSO and JSO phases.
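The four modifications above can be illustrated with a minimal Python sketch. It is a sketch under stated assumptions, not the authors' exact formulation: the nonlinear schedules for `w`, `c1`, and `c2`, the form of the modified passive motion, the greedy-free acceptance rule, the `sphere` objective, and the names `hybrid_sketch` and `sphere` are all hypothetical choices made for illustration. The time control value and its 0.5 threshold follow the commonly cited JSO form, and the velocity update is the standard PSO one.

```python
import numpy as np


def sphere(x):
    """Unimodal test objective, chosen here only for illustration."""
    return float(np.sum(x ** 2))


def hybrid_sketch(objective, dim=5, pop=20, max_iter=200, lb=-10.0, ub=10.0, seed=0):
    """Illustrative PSO/JSO hybrid loop; not the authors' exact formulation."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(lb, ub, (pop, dim))
    v = np.zeros((pop, dim))
    fit = np.array([objective(p) for p in x])
    pbest, pbest_fit = x.copy(), fit.copy()
    g = int(np.argmin(fit))
    gbest, gbest_fit = x[g].copy(), float(fit[g])
    history = [gbest_fit]

    for t in range(1, max_iter + 1):
        frac = t / max_iter
        # Assumed nonlinear time-varying schedules (hypothetical forms).
        w = 0.9 - 0.5 * frac ** 2   # inertia weight decreases over time
        c1 = 2.5 - 2.0 * frac ** 2  # cognitive coefficient decreases
        c2 = 0.5 + 2.0 * frac ** 2  # social coefficient increases
        for i in range(pop):
            # Time control value in the usual JSO form; it selects the phase.
            c = abs((1.0 - frac) * (2.0 * rng.random() - 1.0))
            if c >= 0.5:
                # PSO phase: replaces the ocean-current movement of JSO.
                r1, r2 = rng.random(dim), rng.random(dim)
                v[i] = (w * v[i]
                        + c1 * r1 * (pbest[i] - x[i])
                        + c2 * r2 * (gbest - x[i]))
                cand = x[i] + v[i]
            elif rng.random() > 1.0 - c:
                # JSO phase, passive motion steered by the global solution
                # (assumed form of the modification).
                cand = x[i] + rng.random(dim) * (gbest - x[i])
            else:
                # JSO phase, active motion relative to a random jellyfish.
                j = int(rng.integers(pop))
                step = x[j] - x[i] if fit[j] < fit[i] else x[i] - x[j]
                cand = x[i] + rng.random(dim) * step
            # Keep the candidate inside the search bounds and evaluate it.
            x[i] = np.clip(cand, lb, ub)
            fit[i] = objective(x[i])
            if fit[i] < pbest_fit[i]:
                pbest[i], pbest_fit[i] = x[i].copy(), fit[i]
            if fit[i] < gbest_fit:
                gbest, gbest_fit = x[i].copy(), float(fit[i])
        history.append(gbest_fit)
    return gbest, gbest_fit, history


if __name__ == "__main__":
    best_x, best_f, hist = hybrid_sketch(sphere)
    print("best objective value:", best_f)
```

Because the time control value shrinks as the iteration count grows, this sketch spends early iterations mostly in the PSO phase and later iterations mostly in the JSO phase, which is the switching behavior the last bullet describes.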

5. Results and Discussion

5.1. Benchmark Test Functions

5.2. Metaheuristic Techniques for Comparison

- Grey Wolf Optimizer [45]: GWO was introduced in 2014. It is one of the swarm-intelligence-based techniques inspired by the hunting strategy of grey wolves, which includes searching, surrounding, and attacking the prey.

- Lightning Search Algorithm [19]: LSA was proposed in 2015. It is a physics-based technique that simulates the lightning phenomenon and the propagation of the step leader, using the concept of fast particles known as projectiles.

- Hybrid Heap-Based and Jellyfish Search Algorithm [40]: HBJSA was proposed in 2021. It is a hybrid optimization technique based on HBO and JSO that benefits from the exploration feature of HBO and the exploitation feature of JSO.

- Rat Swarm Optimizer [24]: RSO was introduced in 2020. It is one of the swarm-intelligence-based algorithms that imitates rats’ behavior in chasing and attacking prey.

- Ant Colony Optimization [46]: ACO was introduced in 1999. It is one of the swarm-intelligence-based algorithms that simulates the foraging behavior of ants, which find food and deposit pheromones on the ground to guide other ants to it.

- Biogeography-based Optimizer [16]: BBO was introduced in 2008. It is an evolutionary-based technique closely related to GA and DE. BBO is inspired by the migration behavior of species between habitats.

- Coronavirus Herd Immunity Optimizer [21]: CHIO was proposed in 2020. It is one of the human-based techniques that mimics the concept of herd immunity to face the coronavirus.

5.3. Comparison of Optimization Performance

5.4. Convergence Performance Analysis

5.5. Nonparametric Statistical Test

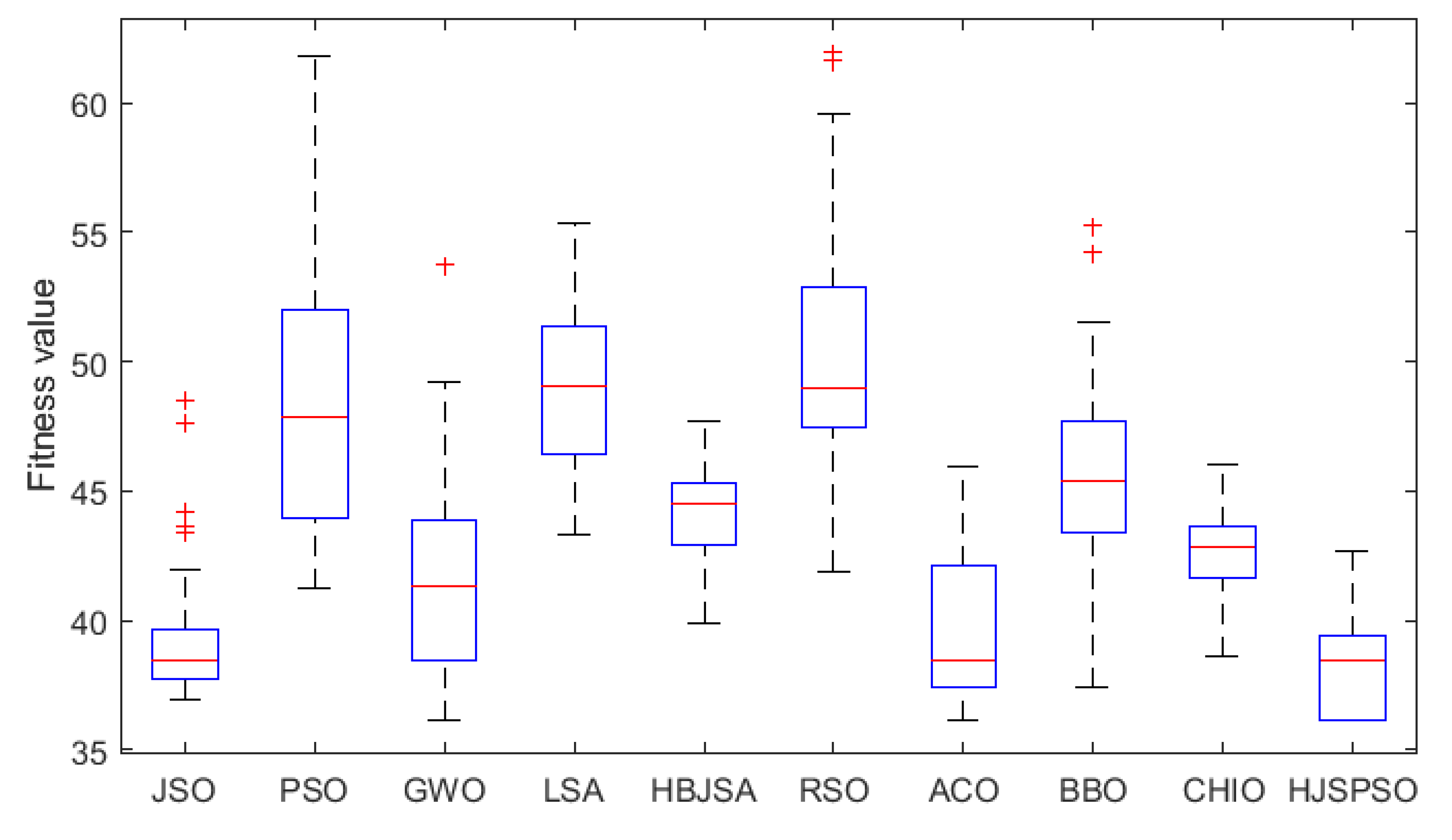

5.6. Case Study: Traveling Salesman

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ACO | Ant colony optimization |

| BBO | Biogeography-based optimizer |

| CHIO | Coronavirus herd immunity optimizer |

| CNN | Convolutional neural network |

| DE | Differential evolution |

| FA | Firefly algorithm |

| GA | Genetic algorithm |

| GWO | Grey wolf optimizer |

| HBO | Heap-based optimizer |

| HBJSA | Hybrid heap-based and jellyfish search algorithm |

| HGSPSO | Hybrid gravitational search particle swarm optimization |

| HJSPSO | Hybrid jellyfish search and particle swarm optimization |

| HPSO-DE | Hybrid algorithm based on PSO and DE |

| HPSSHO | Hybrid particle swarm optimization spotted hyena optimizer |

| JSO | Jellyfish search optimizer |

| LSA | Lightning search algorithm |

| PSO | Particle swarm optimization |

| PS-FW | Hybrid algorithm based on particle swarm and fireworks |

| RSO | Rat swarm optimizer |

| TLBO | Teaching–learning-based optimization |

| TSP | Traveling salesman problem |

Appendix A

| Function | Indicator | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 2.1909 | 0 | 0 | 0 | |

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Worst | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 0 | |

| F2 | Mean | 0 | 0.066667 | 0 | 1 | 0 | 0 | 0.23333 | 0 | 0.033333 | 0 |

| Std. | 0 | 0.25371 | 0 | 1.0828 | 0 | 0 | 0.50401 | 0 | 0.18257 | 0 | |

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Worst | 0 | 1 | 0 | 4 | 0 | 0 | 2 | 0 | 1 | 0 | |

| F3 | Mean | 0 | 0 | 0.024273 | 0.17284 | 0 | |||||

| Std. | 0 | 0 | 0 | 0 | 0 | 0.008728 | 0.22456 | 0 | |||

| Best | 0 | 0 | 0.011924 | 0 | |||||||

| Worst | 0 | 0 | 0.04123 | 1.1317 | 0 | ||||||

| F4 | Mean | 0 | 0 | 0.003411 | 0.020798 | 0 | |||||

| Std. | 0 | 0 | 0 | 0 | 0 | 0.001301 | 0.02349 | 0 | |||

| Best | 0 | 0 | 0.001423 | 0 | |||||||

| Worst | 0 | 0 | 0.005714 | 0.088923 | 0 | ||||||

| F5 | Mean | 0.000177 | 0.001899 | 0.000065 | 0.011081 | 0.000011 | 0.000023 | 0.002001 | 0.000768 | 0.08211 | 0.000113 |

| Std. | 0.000062 | 0.000635 | 0.000041 | 0.002462 | 0.000023 | 0.000994 | 0.000203 | 0.019275 | 0.000052 | ||

| Best | 0.000093 | 0.000813 | 0.000012 | 0.006049 | 0.000744 | 0.000373 | 0.041471 | 0.000045 | |||

| Worst | 0.000338 | 0.003381 | 0.000169 | 0.015718 | 0.000027 | 0.000084 | 0.005450 | 0.001188 | 0.11218 | 0.000255 | |

| F6 | Mean | 0 | 0 | 0 | 0 | 0.000176 | 0 | 0.000097 | 0 | ||

| Std. | 0 | 0 | 0 | 0 | 0.000186 | 0 | 0.000113 | 0 | |||

| Best | 0 | 0 | 0 | 0 | 0 | 0 | |||||

| Worst | 0 | 0 | 0 | 0 | 0.000611 | 0.76207 | 0.000427 | 0 | |||

| F7 | Mean | −1 | −1 | −1 | −1 | −1 | −0.9987 | −1 | −1 | −0.9916 | −1 |

| Std. | 0 | 0 | 0 | 0 | 0.001276 | 0 | 0 | 0.031089 | 0 | ||

| Best | −1 | −1 | −1 | −1 | −1 | −0.99995 | −1 | −1 | −1 | −1 | |

| Worst | −1 | −1 | −1 | −1 | −1 | −0.9938 | −1 | −1 | −0.83808 | −1 | |

| F8 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000025 | 0 | |

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000030 | 0 | ||

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |||

| Worst | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000132 | 0 | ||

| F9 | Mean | 0.13307 | 1.5407 | 0.001242 | 0.007284 | 0.4057 | |||||

| Std. | 0.406 | 0.2954 | 0.004956 | 0.006838 | 0.30376 | ||||||

| Best | 0.29806 | 0.000147 | 0.065115 | ||||||||

| Worst | 1.3345 | 2.3817 | 0.025051 | 0.028474 | 1.0787 | ||||||

| F10 | Mean | −50 | −50 | −50 | −50 | −50 | −19.3568 | −50 | −50 | −49.952 | −50 |

| Std. | 10.2918 | 0.055344 | |||||||||

| Best | −50 | −50 | −50 | −50 | −50 | −38.6815 | −50 | −50 | −49.9988 | −50 | |

| Worst | −50 | −50 | −50 | −50 | −50 | 0.48768 | −50 | −50 | −49.7493 | −50 | |

| F11 | Mean | −210 | −210 | −204.5386 | −210 | −206.7294 | −6.0603 | −210 | −209.9979 | −206.3257 | −210 |

| Std. | 16.6674 | 0.9209 | 6.1459 | 0.00057 | 3.8949 | ||||||

| Best | −210 | −210 | −209.9999 | −210 | −209.0753 | −22.3292 | −210 | −209.9992 | −209.7321 | −210 | |

| Worst | −210 | −210 | −154.3612 | −210 | −204.6705 | 4.6506 | −210 | −209.9964 | −192.7169 | −210 | |

| F12 | Mean | 0 | 0 | 3.2438 | |||||||

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 1.7601 | 0 | |||

| Best | 0 | 0 | 0 | 0.43572 | |||||||

| Worst | 0 | 0 | 7.1901 | ||||||||

| F13 | Mean | 0.000212 | 0.000038 | 0 | 0 | 0.000107 | 0.015393 | 0.44318 | |||

| Std. | 0.000277 | 0.000074 | 0 | 0 | 0.004426 | 0.24949 | |||||

| Best | 0.000031 | 0 | 0 | 0.007004 | 0.085003 | ||||||

| Worst | 0.000040 | 0.001514 | 0.000381 | 0 | 0 | 0.000134 | 0.022289 | 1.0397 | |||

| F14 | Mean | 0.000021 | 0 | 0 | 0.044577 | 0.19129 | |||||

| Std. | 0.000077 | 0 | 0 | 0.008846 | 0.079441 | 0 | |||||

| Best | 0 | 0 | 0.029445 | 0.000365 | |||||||

| Worst | 0.000347 | 0.000021 | 0 | 0 | 0.063703 | 0.34191 | |||||

| F15 | Mean | 0 | 0 | 0.34451 | 2.314 | 0 | |||||

| Std. | 0 | 0 | 0 | 0 | 0 | 0.11744 | 4.7075 | 0 | |||

| Best | 0 | 0 | 0.17032 | 0 | |||||||

| Worst | 0 | 0 | 0.63924 | 25.4599 | 0 | ||||||

| F16 | Mean | 0.05827 | 29.6144 | 26.0825 | 3.7089 | 15.546 | 28.3194 | 21.6441 | 59.0824 | 134.028 | 20.3876 |

| Std. | 0.19107 | 24.266 | 0.73251 | 3.8172 | 11.2623 | 0.37112 | 28.0842 | 37.7391 | 65.8768 | 0.32964 | |

| Best | 0.000018 | 0.93178 | 24.9843 | 0.000141 | 0.000035 | 27.7826 | 0.000653 | 25.015 | 7.0648 | 19.7712 | |

| Worst | 1.0276 | 76.6784 | 27.9087 | 15.1608 | 28.1724 | 28.9608 | 111.2683 | 142.212 | 265.6228 | 21.3463 | |

| F17 | Mean | 0.01068 | 0.66667 | 0.66667 | 0.66667 | 0.54369 | 0.66667 | 0.66667 | 0.9842 | 2.4386 | 0.66667 |

| Std. | 0.05605 | 0.18008 | 0.83249 | 1.6745 | |||||||

| Best | 0.66667 | 0.66667 | 0.66667 | 0.070622 | 0.66667 | 0.66667 | 0.66887 | 0.087339 | 0.66667 | ||

| Worst | 0.30717 | 0.66667 | 0.66667 | 0.66667 | 0.68381 | 0.66667 | 0.66667 | 4.8128 | 5.5449 | 0.66667 | |

| F18 | Mean | 0.998 | 1.3291 | 2.4375 | 0.998 | 0.998 | 1.9239 | 2.0781 | 2.3097 | 0.998 | 0.998 |

| Std. | 0.60211 | 2.9447 | 1.0068 | 2.5852 | 2.5508 | ||||||

| Best | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | |

| Worst | 0.998 | 2.9821 | 10.7632 | 0.998 | 0.998 | 2.9821 | 10.7632 | 10.7632 | 0.998 | 0.998 | |

| F19 | Mean | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.57724 | 0.39789 | 0.39789 | 0.39789 | 0.39789 |

| Std. | 0 | 0 | 0 | 0 | 0.15066 | 0 | |||||

| Best | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39805 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | |

| Worst | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.93725 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | |

| F20 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000047 | 0 | |

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Worst | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000259 | 0 | |

| F21 | Mean | 0 | 0 | 0 | 0 | 0.000138 | 0 | 0 | |||

| Std. | 0 | 0 | 0 | 0 | 0.000185 | 0 | 0.000013 | 0 | |||

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| Worst | 0 | 0 | 0 | 0 | 0.000761 | 0 | 0.000068 | 0 | |||

| F22 | Mean | 4.5872 | 42.9158 | 0 | 57.9397 | 0 | 0 | 16.6821 | 21.8759 | 3.2392 | 0 |

| Std. | 4.752 | 12.9218 | 0 | 13.4727 | 0 | 0 | 4.1204 | 6.1509 | 1.7512 | 0 | |

| Best | 0 | 25.8689 | 0 | 37.8084 | 0 | 0 | 9.9496 | 12.9555 | 0.041411 | 0 | |

| Worst | 13.9294 | 70.642 | 0 | 80.5915 | 0 | 0 | 25.8689 | 44.78 | 6.2076 | 0 | |

| F23 | Mean | −8097.69 | −6548.23 | −5987.234 | −8279.335 | −10486.3 | −5822.831 | −8765.300 | −9168.879 | −11631.16 | −8249.76 |

| Std. | 596.0855 | 862.8825 | 627.9572 | 622.6347 | 1663.726 | 701.2344 | 635.8039 | 504.1961 | 190.2833 | 460.9557 | |

| Best | −9209.117 | −8339.086 | −7508.985 | −9544.808 | −12150.5 | −6951.771 | −9915.361 | −9959.251 | −12029.93 | −9248.716 | |

| Worst | −6651.382 | −5101.433 | −4716.264 | −7156.004 | −7486.446 | −3579.892 | −7572.415 | −8123.383 | −11300.07 | −7432.332 | |

| F24 | Mean | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −1.4896 | −1.8013 | −1.8013 | −1.8013 | −1.8013 |

| Std. | 0.27273 | ||||||||||

| Best | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −1.7815 | −1.8013 | −1.8013 | −1.8013 | −1.8013 | |

| Worst | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −1.8013 | −0.94607 | −1.8013 | −1.8013 | −1.8013 | −1.801 | |

| F25 | Mean | −4.6793 | −4.538 | −4.5439 | −4.6003 | −4.6877 | −2.3764 | −4.5908 | −4.6491 | −4.6877 | −4.6701 |

| Std. | 0.016991 | 0.18897 | 0.2033 | 0.089854 | 0.31557 | 0.0897 | 0.054374 | 0.047281 | |||

| Best | −4.6877 | −4.6877 | −4.6876 | −4.6877 | −4.6877 | −2.8405 | −4.6877 | −4.6877 | −4.6877 | −4.6877 | |

| Worst | −4.6459 | −3.8658 | −3.8446 | −4.3331 | −4.6877 | −1.7803 | −4.3331 | −4.4831 | −4.6877 | −4.5377 | |

| F26 | Mean | −9.5319 | −8.8762 | −7.9497 | −8.9966 | −9.6602 | −3.7965 | −9.3772 | −9.2552 | −9.6589 | −9.5186 |

| Std. | 0.094523 | 0.57564 | 1.0323 | 0.30029 | 0.58715 | 0.16955 | 0.26029 | 0.002079 | 0.093213 | ||

| Best | −9.6602 | −9.5527 | −9.3656 | −9.4641 | −9.6602 | −5.0334 | −9.6602 | −9.6176 | −9.6602 | −9.6184 | |

| Worst | −9.3281 | −6.9144 | −5.7263 | −8.3181 | −9.6591 | −2.8528 | −9.0305 | −8.5856 | −9.6526 | −9.3356 | |

| F27 | Mean | 0 | 0 | 0 | 0.002911 | 0 | 0 | 0.0044 | 0 | 0.002658 | 0 |

| Std. | 0 | 0 | 0 | 0.01108 | 0 | 0 | 0.0133 | 0 | 0.008571 | 0 | |

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||

| Worst | 0 | 0 | 0 | 0.043671 | 0 | 0 | 0.043671 | 0 | 0.043671 | 0 | |

| F28 | Mean | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 |

| Std. | |||||||||||

| Best | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | |

| Worst | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | |

| F29 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Worst | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.000017 | 0 | |

| F30 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.001155 | 0 | |

| Std. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.001576 | 0 | ||

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |||

| Worst | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.007948 | 0 | ||

| F31 | Mean | −186.7309 | −186.7309 | −186.7284 | −186.7309 | −186.7309 | −186.7286 | −186.7309 | −186.7309 | −186.7308 | −186.7309 |

| Std. | 0.009404 | 0.007582 | 0.000254 | ||||||||

| Best | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | −186.7309 | |

| Worst | −186.7309 | −186.7309 | −186.6817 | −186.7309 | −186.7309 | −186.6946 | −186.7309 | −186.7309 | −186.7297 | −186.7309 | |

| F32 | Mean | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |

| Std. | 0.000013 | ||||||||||

| Best | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | |

| Worst | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3.0001 | 3 | |

| F33 | Mean | 0.000307 | 0.000368 | 0.002435 | 0.000338 | 0.000307 | 0.000675 | 0.001675 | 0.000354 | 0.000677 | 0.000307 |

| Std. | 0.000244 | 0.006086 | 0.000167 | 0.000267 | 0.005083 | 0.00006 | 0.000123 | ||||

| Best | 0.000307 | 0.000307 | 0.000307 | 0.000307 | 0.000307 | 0.000354 | 0.000307 | 0.000307 | 0.000317 | 0.000307 | |

| Worst | 0.000307 | 0.001594 | 0.020363 | 0.001223 | 0.000308 | 0.0013 | 0.020363 | 0.000514 | 0.00087 | 0.000307 | |

| F34 | Mean | −10.1532 | −5.9744 | −9.6449 | −8.3806 | −10.1532 | −1.3749 | −6.2478 | −7.228 | −10.1528 | −10.1532 |

| Std. | 3.37 | 1.5509 | 2.5859 | 0.77448 | 3.7292 | 3.2898 | 0.000973 | ||||

| Best | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −3.1593 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | |

| Worst | −10.1532 | −2.6305 | −5.0552 | −2.6305 | −10.1532 | −0.4962 | −2.6305 | −2.6305 | −10.1489 | −10.1532 | |

| F35 | Mean | −10.4029 | −7.6896 | −10.2257 | −8.1426 | −10.4029 | −1.5068 | −7.9193 | −7.7295 | −10.4024 | −10.4029 |

| Std. | 3.4513 | 0.97043 | 3.2909 | 1.3311 | 3.5796 | 3.5849 | 0.00173 | ||||

| Best | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −7.7302 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | |

| Worst | −10.4029 | −2.7519 | −5.0877 | −2.7659 | −10.4029 | −0.58241 | −2.7519 | −2.7519 | −10.3939 | −10.4029 | |

| F36 | Mean | −10.5364 | −7.9068 | −10.5364 | −9.4643 | −10.5364 | −1.6671 | −7.0608 | −7.9283 | −10.5349 | −10.5364 |

| Std. | 3.7907 | 0.000015 | 2.4485 | 0.88478 | 3.8008 | 3.5144 | 0.003992 | ||||

| Best | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −4.4413 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | |

| Worst | −10.5364 | −2.4217 | −10.5363 | −3.8354 | −10.5364 | −0.67852 | −2.4273 | −1.6766 | −10.515 | −10.5364 | |

| F37 | Mean | 0.00485 | 0.095574 | 0.33591 | 0.014761 | 0.016679 | 4.0769 | 0.1465 | 0.11938 | 0.11862 | 0.004616 |

| Std. | 0.001181 | 0.15826 | 0.5384 | 0.036154 | 0.019922 | 5.7771 | 0.18854 | 0.13962 | 0.09118 | 0.001398 | |

| Best | 0.002045 | 0.001936 | 0.06555 | 0.00062 | 0.00169 | 0.000088 | |||||

| Worst | 0.006389 | 0.47231 | 1.5377 | 0.13066 | 0.090347 | 28.3114 | 0.47231 | 0.47231 | 0.37234 | 0.006388 | |

| F38 | Mean | 0.000114 | 0.000221 | 0.030126 | 0.000192 | 0.005479 | 17.2902 | 0.000938 | 0.001726 | 0.010195 | 0.000083 |

| Std. | 0.000173 | 0.000177 | 0.16084 | 0.000173 | 0.003202 | 24.8812 | 0.003020 | 0.002537 | 0.007209 | 0.000093 | |

| Best | 0.000026 | 0.001122 | 0.38614 | 0.000441 | |||||||

| Worst | 0.000831 | 0.000428 | 0.88168 | 0.000419 | 0.011985 | 124.7192 | 0.015394 | 0.008246 | 0.030697 | 0.000325 | |

| F39 | Mean | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −2.7081 | −3.8628 | −3.8628 | −3.8628 | −3.8628 |

| Std. | 0.002405 | 0.924 | |||||||||

| Best | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8537 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | |

| Worst | −3.8628 | −3.8628 | −3.8549 | −3.8628 | −3.8628 | −0.57993 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | |

| F40 | Mean | −3.3224 | −3.2588 | −3.2579 | −3.2469 | −3.3224 | −2.1921 | −3.2826 | −3.3065 | −3.3224 | −3.3224 |

| Std. | 0.060487 | 0.067123 | 0.058427 | 0.44001 | 0.057155 | 0.041215 | |||||

| Best | −3.3224 | −3.3224 | −3.3224 | −3.3224 | −3.3224 | −2.9142 | −3.3224 | −3.3224 | −3.3224 | −3.3224 | |

| Worst | −3.3224 | −3.2032 | −3.1376 | −3.2032 | −3.3224 | −1.3712 | −3.2032 | −3.2032 | −3.3224 | −3.3224 | |

| F41 | Mean | 0 | 0.010826 | 0 | 0.008372 | 0 | 0 | 0.000986 | 0.062207 | 0.14432 | 0 |

| Std. | 0 | 0.012326 | 0 | 0.011661 | 0 | 0 | 0.003077 | 0.022368 | 0.14044 | 0 | |

| Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.027649 | 0 | ||

| Worst | 0 | 0.051369 | 0 | 0.044263 | 0 | 0 | 0.012321 | 0.12754 | 0.46368 | 0 | |

| F42 | Mean | 0.15683 | 1.8126 | 0.040649 | 0.11434 | ||||||

| Std. | 0.41725 | 0 | 1.1327 | 0 | 0.007439 | 0.097559 | |||||

| Best | 0.025084 | ||||||||||

| Worst | 1.5017 | 3.9346 | 0.055848 | 0.31791 | |||||||

| F43 | Mean | 0.003456 | 0.009783 | 0.27412 | 0.31638 | 0.027645 | 0.000057 | 0.002775 | |||

| Std. | 0.018927 | 0.008449 | 0.6863 | 0.12895 | 0.081371 | 0.000023 | 0.002182 | ||||

| Best | 0.091686 | 0.000019 | |||||||||

| Worst | 0.10367 | 0.039231 | 3.4496 | 0.78704 | 0.41467 | 0.000119 | 0.008432 | ||||

| F44 | Mean | 0.006036 | 0.003383 | 0.1448 | 0.006264 | 2.7347 | 0.000697 | 0.036811 | 0.029482 | ||

| Std. | 0.022972 | 0.018532 | 0.10267 | 0.023854 | 0.055416 | 0.000236 | 0.034919 | 0.051025 | |||

| Best | 2.6245 | 0.000278 | |||||||||

| Worst | 0.090543 | 0.1015 | 0.38505 | 0.097371 | 2.8429 | 0.001363 | 0.10215 | 0.14521 | |||

| F45 | Mean | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −0.73561 | −1.0809 | −1.0494 | −1.0809 | −1.0809 |

| Std. | 0.23848 | 0.058208 | 0.000013 | ||||||||

| Best | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −1.0661 | −1.0809 | −1.0809 | −1.0809 | −1.0809 | |

| Worst | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −1.0809 | −0.098209 | −1.0809 | −0.94563 | −1.0809 | −1.0809 | |

| F46 | Mean | −1.5 | −1.1997 | −1.2323 | −1.2909 | −1.5 | −0.21821 | −1.3641 | −1.0043 | −1.4064 | −1.5 |

| Std. | 0.28879 | 0.29904 | 0.28109 | 0.22497 | 0.25189 | 0.36935 | 0.21175 | ||||

| Best | −1.5 | −1.5 | −1.5 | −1.5 | −1.5 | −0.90126 | −1.5 | −1.5 | −1.5 | −1.5 | |

| Worst | −1.5 | −0.73607 | −0.57409 | −0.79773 | −1.5 | −0.011193 | −0.79782 | −0.51319 | −0.90597 | −1.5 | |

| F47 | Mean | −0.97768 | −0.71091 | −0.62771 | −0.58377 | −0.89084 | −0.000724 | −0.89442 | −0.56605 | −0.77381 | −0.72769 |

| Std. | 0.35818 | 0.36188 | 0.36267 | 0.27362 | 0.2944 | 0.001587 | 0.24859 | 0.24859 | 0.093677 | 0.22809 | |

| Best | −1.5 | −1.5 | −1.5 | −1.5 | −1.4993 | −0.006644 | −1.5 | −0.79769 | −0.96436 | −1.5 | |

| Worst | −0.46585 | −0.27494 | −0.13427 | −0.27494 | −0.41215 | −0.35577 | −0.14546 | −0.51318 | −0.27494 | ||

| F48 | Mean | 0 | 0 | 0.000029 | 0 | 0.018916 | 0.007613 | 0 | |||

| Std. | 0 | 0 | 0.000054 | 0 | 0.030807 | 0.000026 | 0.009496 | 0 | |||

| Best | 0 | 0 | 0 | 0 | 0.000327 | 0 | 0.00021 | 0 | |||

| Worst | 0 | 0 | 0.000194 | 0 | 0.1483 | 0.000121 | 0.03329 | 0 | |||

| F49 | Mean | 463.6839 | 75.4101 | 158.0587 | 152.2686 | 1842.241 | 91.0691 | 231.8756 | 7.5535 | ||

| Std. | 1188.208 | 167.4468 | 291.4037 | 273.25 | 1737.654 | 200.0792 | 324.0105 | 6.1844 | 0.000035 | ||

| Best | 0 | 0 | 0.004088 | 0 | 0.000025 | 92.7308 | 0.16645 | 0 | |||

| Worst | 5066.931 | 692.4573 | 677.3945 | 692.4565 | 6188.255 | 677.3945 | 692.4565 | 29.5654 | 0.000194 | ||

| F50 | Mean | 528.6916 | 81.3611 | 67.7395 | 426.0675 | 1824.006 | 63.7245 | 209.8042 | 6.1735 | ||

| Std. | 1084.965 | 165.7652 | 206.6924 | 302.0439 | 2047.863 | 170.1124 | 317.1288 | 5.3754 | |||

| Best | 0 | 0 | 10.5397 | 0 | 3.1356 | 72.1616 | 0.23867 | 0 | |||

| Worst | 4348.837 | 692.4587 | 677.3945 | 692.4565 | 6139.581 | 692.4565 | 692.4565 | 23.6686 | |||

| Number of best hits | 23 | 14 | 9 | 13 | 29 | 15 | 13 | 8 | 3 | 32 | |

| Hit rate (%) | 46 | 28 | 18 | 26 | 58 | 30 | 26 | 16 | 6 | 64 | |

| Function | Indicator | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |

|---|---|---|---|---|---|---|---|---|---|---|---|

| CEC01 | Mean | 1897.46 | 9347.033 | 471.4735 | 7110.052 | 1 | 1 | 30345.25 | 75567.76 | 2159895 | 47.7927 |

| Std. | 2867.538 | 10741.98 | 1809.455 | 10272.97 | 0 | 30030.01 | 79953.37 | 1323728 | 103.9644 | ||

| Best | 7.8959 | 8.663 | 1 | 2.6238 | 1 | 1 | 397.1488 | 278.5102 | 329903.2 | 1 | |

| Worst | 12599.68 | 35716.12 | 9008.052 | 41270.07 | 1 | 1 | 94955.56 | 338853.2 | 5778371 | 465.4388 | |

| CEC02 | Mean | 158.8989 | 211.8816 | 182.3192 | 276.0412 | 4.9543 | 4.3106 | 334.29 | 274.2638 | 1456.037 | 22.8269 |

| Std. | 71.7895 | 98.4277 | 157.8209 | 88.4675 | 0.16205 | 0.18938 | 130.13 | 92.8797 | 336.3427 | 18.0358 | |

| Best | 28.4168 | 41.025 | 5.359 | 129.4601 | 4.246 | 4.2195 | 4.2181 | 140.061 | 809.6388 | 4.2165 | |

| Worst | 347.462 | 413.0268 | 607.7932 | 468.4655 | 5 | 5 | 689.29 | 562.2011 | 2146.505 | 66.2613 | |

| CEC03 | Mean | 1.7018 | 1.6056 | 1.7967 | 1.6192 | 4.316 | 4.7439 | 4.6179 | 2.2463 | 1.8273 | 1.3745 |

| Std. | 0.38958 | 1.1557 | 1.1508 | 1.1505 | 0.4813 | 1.0933 | 0.5127 | 2.1707 | 0.28045 | 0.1407 | |

| Best | 1.4092 | 1 | 1 | 1.4091 | 3.506 | 1.5614 | 3.3381 | 1.4091 | 1.4713 | 1 | |

| Worst | 2.8196 | 7.7119 | 6.6594 | 7.7109 | 5.33 | 7.4524 | 5.3898 | 7.7104 | 2.5396 | 1.6115 | |

| CEC04 | Mean | 5.227 | 16.6208 | 11.489 | 24.5473 | 15.161 | 58.7958 | 8.0011 | 9.3577 | 9.4372 | 8.7707 |

| Std. | 2.2936 | 9.0368 | 5.9399 | 10.6468 | 3.1066 | 10.0195 | 6.9928 | 3.7647 | 2.5852 | 3.1094 | |

| Best | 2.0785 | 5.9748 | 2.9972 | 6.9698 | 9.8662 | 41.6655 | 1 | 2.9899 | 5.4216 | 3.9849 | |

| Worst | 11.9445 | 39.8033 | 24.7274 | 46.768 | 22.4859 | 79.5955 | 72.0561 | 18.9092 | 15.5778 | 15.9244 | |

| CEC05 | Mean | 1.0372 | 1.118 | 1.3418 | 1.1254 | 1.0715 | 37.0238 | 1.0400 | 1.0865 | 1.0832 | 1.0343 |

| Std. | 0.02394 | 0.076811 | 0.21881 | 0.073322 | 0.037814 | 9.068 | 0.0954 | 0.04696 | 0.046503 | 0.023769 | |

| Best | 1.0074 | 1.0296 | 1.0807 | 1.0172 | 1.0085 | 22.8897 | 1 | 1.0197 | 1.0114 | 1 | |

| Worst | 1.0935 | 1.3568 | 1.7945 | 1.3761 | 1.1425 | 66.7916 | 1.5356 | 1.2143 | 1.1841 | 1.0935 | |

| CEC06 | Mean | 1.1217 | 1.6565 | 1.6752 | 2.991 | 1.0389 | 7.3074 | 1.3068 | 1.3217 | 2.5675 | 1.1953 |

| Std. | 0.21817 | 0.87821 | 0.65429 | 1.3046 | 0.033307 | 0.99091 | 0.4634 | 0.6039 | 0.48689 | 0.39333 | |

| Best | 1 | 1 | 1.079 | 1.0813 | 1.0042 | 5.6741 | 1 | 1.0083 | 1.6862 | 1 | |

| Worst | 1.965 | 4.1344 | 3.4022 | 5.5246 | 1.1199 | 9.6955 | 2.5143 | 3.4939 | 3.6444 | 2.5774 | |

| CEC07 | Mean | 176.394 | 780.0061 | 506.8545 | 770.3265 | 640.8752 | 1231.502 | 103.99 | 676.6212 | 310.6974 | 309.9096 |

| Std. | 157.7418 | 226.8214 | 243.8092 | 325.8487 | 119.8646 | 199.7113 | 108.2556 | 299.1341 | 98.4024 | 145.5422 | |

| Best | 1.1249 | 416.0599 | 1.4188 | 126.5957 | 388.7151 | 837.7197 | 7.8924 | 4.6023 | 38.2768 | 1.2498 | |

| Worst | 544.3641 | 1284.386 | 1073.91 | 1705.947 | 855.2098 | 1662.029 | 506.7367 | 1138.152 | 508.1458 | 593.3495 | |

| CEC08 | Mean | 2.2995 | 3.4544 | 3.0741 | 3.4037 | 3.7788 | 4.5351 | 2.2804 | 3.3504 | 3.3275 | 2.2913 |

| Std. | 0.43068 | 0.57327 | 0.60597 | 0.50727 | 0.1974 | 0.2013 | 0.43776 | 0.53345 | 0.26203 | 0.41965 | |

| Best | 1.2409 | 2.4356 | 2.201 | 2.0071 | 3.1342 | 4.1857 | 1.6891 | 2.0506 | 2.834 | 1.6462 | |

| Worst | 3.1437 | 4.475 | 4.524 | 4.4785 | 4.0983 | 5.0861 | 3.2139 | 4.4073 | 3.6896 | 3.1452 | |

| CEC09 | Mean | 1.0775 | 1.1329 | 1.0951 | 1.2409 | 1.2019 | 1.5009 | 1.0804 | 1.0943 | 1.1646 | 1.1155 |

| Std. | 0.025239 | 0.052233 | 0.044148 | 0.12048 | 0.035946 | 0.41023 | 0.017094 | 0.038149 | 0.03367 | 0.029268 | |

| Best | 1.0369 | 1.0522 | 1.0509 | 1.0451 | 1.1324 | 1.3755 | 1.0346 | 1.0273 | 1.1144 | 1.0635 | |

| Worst | 1.1524 | 1.2664 | 1.2025 | 1.6425 | 1.257 | 3.6701 | 1.1132 | 1.177 | 1.2318 | 1.1796 | |

| CEC10 | Mean | 5.8004 | 20.327 | 19.9744 | 19.1228 | 15.8228 | 21.1176 | 20.5397 | 20.9998 | 19.6332 | 4.2985 |

| Std. | 7.9161 | 3.6503 | 4.9802 | 5.7526 | 8.9673 | 0.51525 | 3.7005 | 0.000733 | 4.3111 | 6.833 | |

| Best | 1 | 1 | 1.0836 | 2.1551 | 1.0044 | 18.764 | 1 | 20.9961 | 5.8547 | 1 | |

| Worst | 21.3698 | 21 | 21.3918 | 21.1417 | 21.209 | 21.4353 | 21.4105 | 21 | 21.0587 | 21.3619 | |

| Number of best hits | 2 | 0 | 0 | 0 | 1 | 2 | 2 | 0 | 0 | 3 | |

| Hit rate (%) | 20 | 0 | 0 | 0 | 10 | 20 | 20 | 0 | 0 | 30 | |
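The hit rates in the two tables above appear to be the best-hit counts divided by the number of test functions in each table (50 for F1 to F50, 10 for CEC01 to CEC10). The short Python check below reproduces the reported percentages from the reported counts; the helper name `hit_rates` and the variable names are hypothetical, and the counts are copied from the tables rather than recomputed from the raw results.

```python
ALGORITHMS = ["JSO", "PSO", "GWO", "LSA", "HBJSA", "RSO", "ACO", "BBO", "CHIO", "HJSPSO"]


def hit_rates(best_hits, n_functions):
    """Convert best-hit counts into percentages of the function set."""
    return [round(100.0 * hits / n_functions, 2) for hits in best_hits]


# Best-hit counts as reported in the two Appendix A tables.
f_hits = [23, 14, 9, 13, 29, 15, 13, 8, 3, 32]   # F1 to F50
cec_hits = [2, 0, 0, 0, 1, 2, 2, 0, 0, 3]        # CEC01 to CEC10

if __name__ == "__main__":
    for name, r50, r10 in zip(ALGORITHMS, hit_rates(f_hits, 50), hit_rates(cec_hits, 10)):
        print(f"{name}: {r50}% on F1-F50, {r10}% on CEC01-CEC10")
```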

References

- Chong, E.K.; Zak, S.H. An Introduction to Optimization; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 75.

- Yang, X.S. Metaheuristic optimization: Algorithm analysis and open problems. In Proceedings of the Experimental Algorithms: 10th International Symposium (SEA 2011), Kolimpari, Chania, Greece, 5–7 May 2011; pp. 21–32.

- Vasiljević, D.; Vasiljević, D. Classical algorithms in the optimization of optical systems. In Classical and Evolutionary Algorithms in the Optimization of Optical Systems; Springer: Boston, MA, USA, 2002; pp. 11–39.

- Dhiman, G.; Kaur, A. A hybrid algorithm based on particle swarm and spotted hyena optimizer for global optimization. In Proceedings of the Soft Computing for Problem Solving (SocProS 2017), Bhubaneswar, India, 23–24 December 2017; pp. 599–615.

- Dahmani, S.; Yebdri, D. Hybrid algorithm of particle swarm optimization and grey wolf optimizer for reservoir operation management. Water Resour. Manag. 2020, 34, 4545–4560.

- Hussain, K.; Salleh, M.N.M.; Cheng, S.; Shi, Y. On the exploration and exploitation in popular swarm-based metaheuristic algorithms. Neural Comput. Appl. 2019, 31, 7665–7683.

- Singh, S.; Chauhan, P.; Singh, N. Capacity optimization of grid connected solar/fuel cell energy system using hybrid ABC-PSO algorithm. Int. J. Hydrogen Energy 2020, 45, 10070–10088.

- Wong, L.A.; Shareef, H.; Mohamed, A.; Ibrahim, A.A. Optimal battery sizing in photovoltaic based distributed generation using enhanced opposition-based firefly algorithm for voltage rise mitigation. Sci. World J. 2014, 2014, 752096.

- Wong, L.A.; Shareef, H.; Mohamed, A.; Ibrahim, A.A. Novel quantum-inspired firefly algorithm for optimal power quality monitor placement. Front. Energy 2014, 8, 254–260.

- Long, W.; Cai, S.; Jiao, J.; Xu, M.; Wu, T. A new hybrid algorithm based on grey wolf optimizer and cuckoo search for parameter extraction of solar photovoltaic models. Energy Convers. Manag. 2020, 203, 112243.

- Ting, T.; Yang, X.S.; Cheng, S.; Huang, K. Hybrid metaheuristic algorithms: Past, present, and future. In Recent Advances in Swarm Intelligence and Evolutionary Computation; Springer: Cham, Switzerland, 2015; pp. 71–83.

- Farnad, B.; Jafarian, A.; Baleanu, D. A new hybrid algorithm for continuous optimization problem. Appl. Math. Model. 2018, 55, 652–673.

- Askari, Q.; Saeed, M.; Younas, I. Heap-based optimizer inspired by corporate rank hierarchy for global optimization. Expert Syst. Appl. 2020, 161, 113702.

- Ibrahim, A.A.; Mohamed, A.; Shareef, H. Optimal placement of power quality monitors in distribution systems using the topological monitor reach area. In Proceedings of the 2011 IEEE International Electric Machines & Drives Conference (IEMDC), Niagara Falls, ON, Canada, 15–18 May 2011; pp. 394–399.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Shareef, H.; Ibrahim, A.A.; Mutlag, A.H. Lightning search algorithm. Appl. Soft Comput. 2015, 36, 315–333.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Al-Betar, M.A.; Alyasseri, Z.A.A.; Awadallah, M.A.; Abu Doush, I. Coronavirus herd immunity optimizer (CHIO). Neural Comput. Appl. 2021, 33, 5011–5042.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the International Conference on Neural Networks (ICNN’95), Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Chou, J.S.; Truong, D.N. A novel metaheuristic optimizer inspired by behavior of jellyfish in ocean. Appl. Math. Comput. 2021, 389, 125535.

- Dhiman, G.; Garg, M.; Nagar, A.; Kumar, V.; Dehghani, M. A novel algorithm for global optimization: Rat swarm optimizer. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8457–8482.

- Khare, A.; Kakandikar, G.M.; Kulkarni, O.K. An insight review on jellyfish optimization algorithm and its application in engineering. Rev. Comput. Eng. Stud. 2022, 9, 31–40.

- Manita, G.; Zermani, A. A modified jellyfish search optimizer with orthogonal learning strategy. Procedia Comput. Sci. 2021, 192, 697–708.

- Nguyen, T.T.; Li, Z.; Zhang, S.; Truong, T.K. A hybrid algorithm based on particle swarm and chemical reaction optimization. Expert Syst. Appl. 2014, 41, 2134–2143.

- Dokeroglu, T.; Sevinc, E.; Kucukyilmaz, T.; Cosar, A. A survey on new generation metaheuristic algorithms. Comput. Ind. Eng. 2019, 137, 106040.

- Cui, G.; Qin, L.; Liu, S.; Wang, Y.; Zhang, X.; Cao, X. Modified PSO algorithm for solving planar graph coloring problem. Prog. Nat. Sci. 2008, 18, 353–357.

- Ibrahim, A.A.; Mohamed, A.; Shareef, H.; Ghoshal, S.P. Optimal power quality monitor placement in power systems based on particle swarm optimization and artificial immune system. In Proceedings of the 2011 3rd Conference on Data Mining and Optimization (DMO), Putrajaya, Malaysia, 28–29 June 2011; pp. 141–145.

- Gupta, S.; Devi, S. Modified PSO algorithm with high exploration and exploitation ability. Int. J. Softw. Eng. Res. Pract. 2011, 1, 15–19.

- Yan, C.m.; Lu, G.y.; Liu, Y.t.; Deng, X.y. A modified PSO algorithm with exponential decay weight. In Proceedings of the 2017 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Guilin, China, 29–31 July 2017; pp. 239–242.

- Al-Bahrani, L.T.; Patra, J.C. A novel orthogonal PSO algorithm based on orthogonal diagonalization. Swarm Evol. Comput. 2018, 40, 1–23.

- Yu, X.; Cao, J.; Shan, H.; Zhu, L.; Guo, J. An adaptive hybrid algorithm based on particle swarm optimization and differential evolution for global optimization. Sci. World J. 2014, 2014, 215472.

- Chen, S.; Liu, Y.; Wei, L.; Guan, B. PS-FW: A hybrid algorithm based on particle swarm and fireworks for global optimization. Comput. Intell. Neurosci. 2018, 2018, 6094685.

- Khan, T.A.; Ling, S.H. A novel hybrid gravitational search particle swarm optimization algorithm. Eng. Appl. Artif. Intell. 2021, 102, 104263.

- Abdel-Basset, M.; Mohamed, R.; Chakrabortty, R.K.; Ryan, M.J.; El-Fergany, A. An improved artificial jellyfish search optimizer for parameter identification of photovoltaic models. Energies 2021, 14, 1867.

- Juhaniya, A.I.S.; Ibrahim, A.A.; Mohd Zainuri, M.A.A.; Zulkifley, M.A.; Remli, M.A. Optimal stator and rotor slots design of induction motors for electric vehicles using opposition-based jellyfish search optimization. Machines 2022, 10, 1217.

- Rajpurohit, J.; Sharma, T.K. Chaotic active swarm motion in jellyfish search optimizer. Int. J. Syst. Assur. Eng. Manag. 2022, 1–17.

- Ginidi, A.; Elsayed, A.; Shaheen, A.; Elattar, E.; El-Sehiemy, R. An innovative hybrid heap-based and jellyfish search algorithm for combined heat and power economic dispatch in electrical grids. Mathematics 2021, 9, 2053.

- Chou, J.S.; Truong, D.N.; Kuo, C.C. Imaging time-series with features to enable visual recognition of regional energy consumption by bio-inspired optimization of deep learning. Energy 2021, 224, 120100.

- Chen, K.; Zhou, F.; Yin, L.; Wang, S.; Wang, Y.; Wan, F. A hybrid particle swarm optimizer with sine cosine acceleration coefficients. Inf. Sci. 2018, 422, 218–241.

- Karaboga, D.; Akay, B. A comparative study of artificial bee colony algorithm. Appl. Math. Comput. 2009, 214, 108–132. [Google Scholar] [CrossRef]

- Rahman, C.M.; Rashid, T.A. Dragonfly algorithm and its applications in applied science survey. Comput. Intell. Neurosci. 2019, 2019, 9293617. [Google Scholar] [CrossRef] [PubMed]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef] [Green Version]

- Dorigo, M.; Di Caro, G. Ant colony optimization: A new meta-heuristic. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 6–7 July 1999; pp. 1470–1477. [Google Scholar]

- Chou, J.S.; Ngo, N.T. Modified firefly algorithm for multidimensional optimization in structural design problems. Struct. Multidiscip. Optim. 2017, 55, 2013–2028. [Google Scholar] [CrossRef]

- Gomes, W.J.; Beck, A.T.; Lopez, R.H.; Miguel, L.F. A probabilistic metric for comparing metaheuristic optimization algorithms. Struct. Saf. 2018, 70, 59–70. [Google Scholar] [CrossRef]

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18. [Google Scholar] [CrossRef]

| No. | Function Name | Type | Optimal Value | Dimension | Range |
|---|---|---|---|---|---|
| 1 | Stepint | US | 0 | 5 | [−5.12, 5.12] |
| 2 | Step | US | 0 | 30 | [−100, 100] |
| 3 | Sphere | US | 0 | 30 | [−100, 100] |
| 4 | SumSquares | US | 0 | 30 | [−10, 10] |
| 5 | Quartic | US | 0 | 30 | [−1.28, 1.28] |
| 6 | Beale | UN | 0 | 2 | [−4.5, 4.5] |
| 7 | Easom | UN | −1 | 2 | [−100, 100] |
| 8 | Matyas | UN | 0 | 2 | [−10, 10] |
| 9 | Colville | UN | 0 | 4 | [−10, 10] |
| 10 | Trid 6 | UN | −50 | 6 | [−D², D²] |
| 11 | Trid 10 | UN | −210 | 10 | [−D², D²] |
| 12 | Zakharov | UN | 0 | 10 | [−5, 10] |
| 13 | Powell | UN | 0 | 24 | [−4, 5] |
| 14 | Schwefel 2.22 | UN | 0 | 30 | [−10, 10] |
| 15 | Schwefel 1.2 | UN | 0 | 30 | [−100, 100] |
| 16 | Rosenbrock | UN | 0 | 30 | [−30, 30] |
| 17 | Dixon-Price | UN | 0 | 30 | [−10, 10] |
| 18 | Foxholes | MS | 0.998 | 2 | [−65.536, 65.536] |
| 19 | Branin | MS | 0.398 | 2 | [−5, 10], [0, 15] |
| 20 | Bohachevsky 1 | MS | 0 | 2 | [−100, 100] |
| 21 | Booth | MS | 0 | 2 | [−10, 10] |
| 22 | Rastrigin | MS | 0 | 30 | [−5.12, 5.12] |
| 23 | Schwefel | MS | −12,569.5 | 30 | [−500, 500] |
| 24 | Michalewicz 2 | MS | −1.8013 | 2 | [0, π] |
| 25 | Michalewicz 5 | MS | −4.6877 | 5 | [0, π] |
| 26 | Michalewicz 10 | MS | −9.6602 | 10 | [0, π] |
| 27 | Schaffer | MS | 0 | 2 | [−100, 100] |
| 28 | Six Hump Camel Back | MS | −1.03163 | 2 | [−5, 5] |
| 29 | Bohachevsky 2 | MS | 0 | 2 | [−100, 100] |
| 30 | Bohachevsky 3 | MS | 0 | 2 | [−100, 100] |
| 31 | Shubert | MS | −186.73 | 2 | [−10, 10] |
| 32 | Goldstein-Price | MS | 3 | 2 | [−2, 2] |
| 33 | Kowalik | MS | 0.00031 | 4 | [−5, 5] |
| 34 | Shekel 5 | MS | −10.15 | 4 | [0, 10] |
| 35 | Shekel 7 | MS | −10.4 | 4 | [0, 10] |
| 36 | Shekel 10 | MS | −10.53 | 4 | [0, 10] |
| 37 | Perm | MS | 0 | 4 | [−D, D] |
| 38 | Powersum | MS | 0 | 4 | [0, 1] |
| 39 | Hartman 3 | MS | −3.86 | 3 | [0, D] |
| 40 | Hartman 6 | MS | −3.32 | 6 | [0, 1] |
| 41 | Griewank | MS | 0 | 30 | [−600, 600] |
| 42 | Ackley | MS | 0 | 30 | [−32, 32] |
| 43 | Penalized | MS | 0 | 30 | [−50, 50] |
| 44 | Penalized 2 | MS | 0 | 30 | [−50, 50] |
| 45 | Langermann 2 | MS | −1.08 | 2 | [0, 10] |
| 46 | Langermann 5 | MS | −1.5 | 5 | [0, 10] |
| 47 | Langermann 10 | MS | NA | 10 | [0, 10] |
| 48 | Fletcher Powell 2 | MS | 0 | 2 | [−π, π] |
| 49 | Fletcher Powell 5 | MS | 0 | 5 | [−π, π] |
| 50 | Fletcher Powell 10 | MS | 0 | 10 | [−π, π] |
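
To make the entries in the table above concrete, the following minimal sketch implements two of the listed functions, Sphere (No. 3) and Rastrigin (No. 22), in their standard textbook forms, together with the dimension, range, and optimal value given in the table. The names `sphere`, `rastrigin`, `BENCHMARKS`, and `random_point` are assumptions made for illustration and are not taken from the paper.

```python
import math
import random


def sphere(x):
    """Sphere function: sum of squares, global minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def rastrigin(x):
    """Rastrigin function (standard form), global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(
        xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x
    )


# Dimension, range, and optimal value as listed in the table above.
# The dictionary layout itself is a hypothetical convenience.
BENCHMARKS = {
    "Sphere": {"func": sphere, "dim": 30, "bounds": (-100.0, 100.0), "optimum": 0.0},
    "Rastrigin": {"func": rastrigin, "dim": 30, "bounds": (-5.12, 5.12), "optimum": 0.0},
}


def random_point(dim, bounds, rng):
    """Draw one candidate solution uniformly inside the search range."""
    low, high = bounds
    return [rng.uniform(low, high) for _ in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    for name, spec in BENCHMARKS.items():
        candidate = random_point(spec["dim"], spec["bounds"], rng)
        at_origin = spec["func"]([0.0] * spec["dim"])
        print(name, "random point:", spec["func"](candidate), "origin:", at_origin)
```

Evaluating each function at the origin should reproduce the optimal value of 0 listed in the table, which is a quick sanity check before plugging a function into any optimizer.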

| No. | Function | Function Name | Optimal Value | Dimension | Range |
|---|---|---|---|---|---|
| 1 | CEC01 | Storn’s Chebyshev polynomial fitting problem | 1 | 9 | [−5.12, 5.12] |
| 2 | CEC02 | Inverse Hilbert matrix problem | 1 | 16 | [−100, 100] |
| 3 | CEC03 | Lennard–Jones minimum energy cluster | 1 | 18 | [−100, 100] |
| 4 | CEC04 | Rastrigin’s function | 1 | 10 | [−10, 10] |
| 5 | CEC05 | Griewank’s function | 1 | 10 | [−1.28, 1.28] |
| 6 | CEC06 | Weierstrass function | 1 | 10 | [−4.5, 4.5] |
| 7 | CEC07 | Modified Schwefel’s function | 1 | 10 | [−100, 100] |
| 8 | CEC08 | Expanded Schaffer’s F6 function | 1 | 10 | [−10, 10] |
| 9 | CEC09 | Happy Cat function | 1 | 10 | [−10, 10] |
| 10 | CEC10 | Ackley function | 1 | 10 | [−D², D²] |

| Technique | Parameter Settings |
|---|---|
| HJSPSO | ; ; ; ; ; ; ; ; |
| PSO [22] | ; ; ; ; ; |
| JSO [23] | ; ; , |
| GWO [45] | ; ; control parameter a linearly decreases from 2 to 0 |
| LSA [19] | ; ; channel time is set to 10 |
| HBJSA [40] | ; ; adaptive coefficient increases gradually until reaching 0.5 |
| RSO [24] | ; ; ranges of R and C are set to [1, 5] and [0, 2], respectively |
| ACO [46] | ; ; pheromone evaporation rate, = 0.5; pheromone exponential weight, = 1; heuristic exponential weight, = 2 |
| BBO [16] | ; ; habitat modification probability = 1; immigration probability bound per iteration = [0, 1]; step size for numerical integration of probability at 1; mutation probability, ; maximal immigration rate, ; maximal emigration rate, ; elitism parameter = 2 |
| CHIO [21] | ; ; number of initial infected cases = 1; basic reproduction rate, , and maximum infected cases age, , are positive integers |

| Function | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F2 | 1 | 8 | 1 | 10 | 1 | 9 | 1 | 1 | 7 | 1 |
| F3 | 9 | 6 | 5 | 8 | 1 | 1 | 7 | 9 | 10 | 1 |
| F4 | 8 | 6 | 4 | 7 | 1 | 1 | 5 | 9 | 10 | 1 |
| F5 | 5 | 7 | 3 | 9 | 1 | 2 | 8 | 6 | 10 | 4 |
| F6 | 1 | 1 | 8 | 1 | 1 | 10 | 1 | 7 | 9 | 1 |
| F7 | 1 | 1 | 8 | 1 | 1 | 9 | 1 | 1 | 10 | 1 |
| F8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 9 | 10 | 1 |
| F9 | 2 | 4 | 8 | 3 | 5 | 10 | 6 | 7 | 9 | 1 |
| F10 | 2 | 3 | 8 | 4 | 7 | 10 | 5 | 6 | 9 | 1 |
| F11 | 3 | 1 | 9 | 4 | 8 | 10 | 1 | 6 | 7 | 5 |
| F12 | 7 | 4 | 3 | 5 | 1 | 1 | 8 | 9 | 10 | 6 |
| F13 | 5 | 8 | 3 | 6 | 1 | 1 | 7 | 9 | 10 | 4 |
| F14 | 6 | 8 | 4 | 7 | 1 | 1 | 5 | 9 | 10 | 3 |
| F15 | 8 | 5 | 4 | 7 | 1 | 1 | 6 | 9 | 10 | 1 |
| F16 | 1 | 8 | 6 | 2 | 3 | 7 | 5 | 9 | 10 | 4 |
| F17 | 1 | 3 | 6 | 4 | 8 | 7 | 2 | 9 | 10 | 5 |
| F18 | 1 | 6 | 10 | 4 | 1 | 7 | 8 | 9 | 5 | 1 |
| F19 | 1 | 1 | 9 | 1 | 1 | 10 | 7 | 6 | 8 | 1 |
| F20 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 10 | 1 |
| F21 | 1 | 1 | 8 | 1 | 1 | 10 | 1 | 7 | 9 | 1 |
| F22 | 6 | 9 | 1 | 10 | 1 | 1 | 7 | 8 | 5 | 1 |
| F23 | 7 | 8 | 9 | 5 | 2 | 10 | 4 | 3 | 1 | 6 |
| F24 | 1 | 1 | 9 | 1 | 1 | 10 | 1 | 7 | 8 | 1 |
| F25 | 3 | 9 | 8 | 6 | 1 | 10 | 7 | 5 | 2 | 4 |
| F26 | 3 | 8 | 9 | 7 | 1 | 10 | 5 | 6 | 2 | 4 |
| F27 | 1 | 1 | 1 | 9 | 1 | 1 | 10 | 1 | 8 | 1 |
| F28 | 2 | 2 | 8 | 2 | 2 | 10 | 2 | 1 | 9 | 2 |
| F29 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 10 | 1 |
| F30 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 9 | 10 | 1 |
| F31 | 1 | 7 | 10 | 4 | 2 | 9 | 5 | 5 | 8 | 3 |
| F32 | 5 | 5 | 9 | 1 | 4 | 8 | 1 | 7 | 10 | 1 |
| F33 | 2 | 6 | 10 | 4 | 3 | 7 | 9 | 5 | 8 | 1 |
| F34 | 1 | 9 | 5 | 6 | 1 | 10 | 8 | 7 | 4 | 1 |
| F35 | 2 | 9 | 5 | 6 | 1 | 10 | 7 | 8 | 4 | 2 |
| F36 | 2 | 8 | 4 | 6 | 2 | 10 | 9 | 7 | 5 | 1 |
| F37 | 2 | 5 | 9 | 3 | 4 | 10 | 8 | 7 | 6 | 1 |
| F38 | 2 | 4 | 9 | 3 | 7 | 10 | 5 | 6 | 8 | 1 |
| F39 | 2 | 2 | 9 | 2 | 2 | 10 | 2 | 1 | 8 | 2 |
| F40 | 2 | 7 | 8 | 9 | 3 | 10 | 6 | 5 | 4 | 1 |
| F41 | 1 | 8 | 1 | 7 | 1 | 1 | 6 | 9 | 10 | 1 |
| F42 | 4 | 9 | 6 | 10 | 1 | 2 | 5 | 7 | 8 | 3 |
| F43 | 2 | 6 | 7 | 9 | 3 | 10 | 8 | 4 | 5 | 1 |
| F44 | 5 | 4 | 9 | 6 | 2 | 10 | 1 | 3 | 8 | 7 |
| F45 | 1 | 1 | 7 | 1 | 1 | 10 | 1 | 9 | 8 | 1 |
| F46 | 1 | 8 | 7 | 6 | 1 | 10 | 5 | 9 | 4 | 1 |
| F47 | 1 | 6 | 7 | 8 | 3 | 10 | 2 | 9 | 4 | 5 |
| F48 | 1 | 1 | 8 | 1 | 6 | 10 | 5 | 7 | 9 | 1 |
| F49 | 1 | 9 | 4 | 7 | 6 | 10 | 5 | 8 | 3 | 2 |
| F50 | 2 | 9 | 6 | 5 | 8 | 10 | 4 | 7 | 3 | 1 |
| No. best hits | 23 | 14 | 9 | 13 | 29 | 15 | 13 | 8 | 2 | 32 |
| Hit rate (%) | 46 | 28 | 18 | 26 | 58 | 30 | 26 | 16 | 4 | 64 |
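
The two summary rows of the table above follow from the rank rows by simple counting: a "best hit" is a rank of 1, and the hit rate is the number of best hits divided by the number of functions (50 here). The sketch below shows this arithmetic on an excerpt of three rows copied from the table; the helper names `count_best_hits` and `hit_rate` are assumptions for illustration and are not part of the paper.

```python
TECHNIQUES = ["JSO", "PSO", "GWO", "LSA", "HBJSA", "RSO", "ACO", "BBO", "CHIO", "HJSPSO"]

# Rank rows for F1 to F3 only, copied from the table above (an excerpt, not the full table).
RANKS_EXCERPT = {
    "F1": [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    "F2": [1, 8, 1, 10, 1, 9, 1, 1, 7, 1],
    "F3": [9, 6, 5, 8, 1, 1, 7, 9, 10, 1],
}


def count_best_hits(rank_rows):
    """Count, per technique, how many functions it ranks first on (ties included)."""
    hits = [0] * len(TECHNIQUES)
    for row in rank_rows.values():
        for index, rank in enumerate(row):
            if rank == 1:
                hits[index] += 1
    return dict(zip(TECHNIQUES, hits))


def hit_rate(best_hits, n_functions):
    """Hit rate in percent: share of functions on which a technique ranks first."""
    return 100.0 * best_hits / n_functions


if __name__ == "__main__":
    print(count_best_hits(RANKS_EXCERPT))
    # Using the "No. best hits" entries reported in the table for all 50 functions:
    print("HJSPSO:", hit_rate(32, 50), "HBJSA:", hit_rate(29, 50), "JSO:", hit_rate(23, 50))
```

Because tied techniques all receive rank 1, the best hits in a row can sum to more than one, which is why the hit rates across techniques do not add up to 100%.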

| Function | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| CEC01 | 5 | 7 | 4 | 6 | 2 | 1 | 8 | 9 | 10 | 3 |
| CEC02 | 4 | 6 | 5 | 8 | 2 | 1 | 9 | 7 | 10 | 3 |
| CEC03 | 4 | 2 | 5 | 3 | 8 | 10 | 9 | 7 | 6 | 1 |
| CEC04 | 1 | 8 | 6 | 9 | 7 | 10 | 2 | 4 | 5 | 3 |
| CEC05 | 2 | 7 | 9 | 8 | 4 | 10 | 3 | 6 | 5 | 1 |
| CEC06 | 2 | 6 | 7 | 9 | 1 | 10 | 4 | 5 | 8 | 3 |
| CEC07 | 2 | 9 | 5 | 8 | 6 | 10 | 1 | 7 | 4 | 3 |
| CEC08 | 3 | 8 | 4 | 7 | 9 | 10 | 1 | 6 | 5 | 2 |
| CEC09 | 1 | 6 | 4 | 9 | 8 | 10 | 2 | 3 | 7 | 5 |
| CEC10 | 2 | 7 | 6 | 4 | 3 | 10 | 8 | 9 | 5 | 1 |
| No. best hits | 2 | 0 | 0 | 0 | 1 | 2 | 2 | 0 | 0 | 3 |
| Hit rate (%) | 20 | 0 | 0 | 0 | 10 | 20 | 20 | 0 | 0 | 30 |

| Technique | Time per iteration (ms), F7 | Time per iteration (ms), F9 | Time per iteration (ms), F33 | Time per iteration (ms), F50 | Time per iteration (ms), CEC03 | Time per iteration (ms), CEC10 | Convergence point (iteration), F7 | Convergence point (iteration), F9 | Convergence point (iteration), F33 | Convergence point (iteration), F50 | Convergence point (iteration), CEC03 | Convergence point (iteration), CEC10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JSO | 0.228 | 0.255 | 0.216 | 0.475 | 0.957 | 0.883 | 183 | 2989 | 2978 | 1750 | 2998 | 2115 |
| PSO | 1.329 | 2.218 | 1.531 | 1.519 | 2.980 | 3.049 | 114 | 3000 | 2969 | 2034 | 324 | 714 |
| GWO | 0.359 | 0.344 | 0.362 | 0.595 | 0.756 | 0.646 | 2997 | 2999 | 2999 | 3000 | 3000 | 1999 |
| LSA | 1.367 | 3.490 | 3.792 | 6.153 | 16.40 | 7.193 | 37 | 2998 | 1059 | 225 | 397 | 522 |
| HBJSA | 0.344 | 0.316 | 0.438 | 0.482 | 1.076 | 1.043 | 435 | 2198 | 2231 | 2238 | 403 | 2163 |
| RSO | 0.147 | 0.123 | 0.126 | 0.233 | 0.507 | 0.524 | 1933 | 2321 | 2359 | 2597 | 2913 | 1369 |
| ACO | 1.162 | 2.674 | 2.676 | 3.249 | 10.47 | 9.009 | 38 | 3000 | 3000 | 2315 | 2447 | 169 |
| BBO | 0.719 | 1.437 | 1.548 | 2.239 | 5.988 | 4.252 | 724 | 2999 | 3000 | 2605 | 2960 | 2939 |
| CHIO | 1.064 | 1.049 | 1.127 | 1.109 | 2.666 | 2.522 | 2800 | 2322 | 2903 | 2922 | 2869 | 2997 |
| HJSPSO | 0.268 | 0.255 | 0.233 | 0.467 | 0.870 | 1.001 | 229 | 2971 | 1616 | 1755 | 2994 | 2367 |

| Function | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO |

|---|---|---|---|---|---|---|---|---|---|

| CEC01 | 0.943 | ||||||||

| CEC02 | |||||||||

| CEC03 | 0.053 | 0.002 | 0.059 | ||||||

| CEC04 | 0.079 | 0.015 | 0.393 | 0.658 | |||||

| CEC05 | 0.704 | 0.001 | 0.043 | ||||||

| CEC06 | 0.544 | 0.045 | 0.171 | 0.688 | 0.382 | ||||

| CEC07 | 0.003 | 0.002 | 0.910 | ||||||

| CEC08 | 0.910 | 0.471 | |||||||

| CEC09 | 0.229 | 0.052 | 0.072 | 0.03 | |||||

| CEC10 | 0.295 |

| Function | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| CEC01 | 5.60 | 7.00 | 3.63 | 6.13 | 2.03 | 1.12 | 7.47 | 8.47 | 10 | 3.55 |
| CEC02 | 5.20 | 6.03 | 5.57 | 6.93 | 2.17 | 1.17 | 7.93 | 7.20 | 10 | 2.80 |
| CEC03 | 5.83 | 2.43 | 4.90 | 2.42 | 8.43 | 8.93 | 8.40 | 4.87 | 6.40 | 2.35 |
| CEC04 | 2.28 | 6.60 | 5.30 | 7.95 | 7.00 | 9.97 | 0.48 | 4.58 | 4.53 | 4.30 |
| CEC05 | 2.62 | 6.17 | 8.53 | 6.43 | 4.67 | 10 | 3.45 | 5.60 | 5.20 | 2.33 |
| CEC06 | 3.38 | 4.73 | 6.20 | 7.77 | 3.30 | 10 | 3.38 | 4.77 | 7.93 | 3.53 |
| CEC07 | 2.33 | 7.50 | 5.33 | 7.60 | 6.77 | 9.73 | 1.57 | 6.77 | 3.83 | 3.57 |
| CEC08 | 2.57 | 6.43 | 5.13 | 6.37 | 8.10 | 9.93 | 1.80 | 6.23 | 6.10 | 2.33 |
| CEC09 | 2.67 | 5.07 | 3.23 | 7.77 | 7.97 | 9.40 | 3.70 | 3.40 | 6.77 | 4.50 |
| CEC10 | 3.08 | 3.52 | 8.30 | 4.32 | 5.73 | 8.17 | 9.17 | 4.67 | 5.63 | 2.42 |

| City | x | y | City | x | y | City | x | y | City | x | y |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 4.38 | 7.51 | 6 | 4.89 | 9.59 | 11 | 2.76 | 8.4 | 16 | 4.98 | 3.49 |
| 2 | 3.81 | 2.55 | 7 | 4.45 | 5.47 | 12 | 6.79 | 2.54 | 17 | 9.59 | 1.96 |
| 3 | 7.65 | 5.05 | 8 | 6.46 | 1.38 | 13 | 6.55 | 8.14 | 18 | 3.4 | 2.51 |
| 4 | 7.9 | 6.99 | 9 | 7.09 | 1.49 | 14 | 1.62 | 2.43 | 19 | 5.85 | 6.16 |
| 5 | 1.86 | 8.9 | 10 | 7.54 | 2.57 | 15 | 1.19 | 9.29 | 20 | 2.23 | 4.73 |
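
For readers who want to evaluate a candidate route over the 20 cities listed above, the following sketch computes the length of a tour from the tabulated coordinates. It assumes that each pair of numbers is an (x, y) position, that distances are Euclidean, and that the tour is closed (it returns to the starting city); these are assumptions for illustration, not details confirmed by the table. The nearest-neighbour routine is a simple greedy baseline added only to produce a valid tour and is not one of the compared techniques.

```python
import math

# City coordinates copied from the table above (city number -> (x, y)).
CITIES = {
    1: (4.38, 7.51), 2: (3.81, 2.55), 3: (7.65, 5.05), 4: (7.9, 6.99),
    5: (1.86, 8.9), 6: (4.89, 9.59), 7: (4.45, 5.47), 8: (6.46, 1.38),
    9: (7.09, 1.49), 10: (7.54, 2.57), 11: (2.76, 8.4), 12: (6.79, 2.54),
    13: (6.55, 8.14), 14: (1.62, 2.43), 15: (1.19, 9.29), 16: (4.98, 3.49),
    17: (9.59, 1.96), 18: (3.4, 2.51), 19: (5.85, 6.16), 20: (2.23, 4.73),
}


def tour_length(tour, cities=CITIES):
    """Length of the closed tour that visits the cities in the given order."""
    total = 0.0
    for i, city in enumerate(tour):
        next_city = tour[(i + 1) % len(tour)]  # wrap around to close the tour
        (x1, y1), (x2, y2) = cities[city], cities[next_city]
        total += math.hypot(x2 - x1, y2 - y1)
    return total


def nearest_neighbour_tour(start=1, cities=CITIES):
    """Greedy baseline: always move to the closest unvisited city."""
    unvisited = set(cities) - {start}
    tour = [start]
    while unvisited:
        cx, cy = cities[tour[-1]]
        next_city = min(
            unvisited,
            key=lambda c: math.hypot(cities[c][0] - cx, cities[c][1] - cy),
        )
        tour.append(next_city)
        unvisited.remove(next_city)
    return tour


if __name__ == "__main__":
    baseline = nearest_neighbour_tour()
    print("tour:", baseline)
    print("length:", round(tour_length(baseline), 2))
```

A metaheuristic applied to this problem would search over permutations of the city numbers and use a function like `tour_length` as its objective.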

| Indicator | JSO | PSO | GWO | LSA | HBJSA | RSO | ACO | BBO | CHIO | HJSPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 39.67 | 48.84 | 41.83 | 48.94 | 44.08 | 50.42 | 39.51 | 45.71 | 42.61 | 37.87 |
| Std. | 2.98 | 5.08 | 4.00 | 3.35 | 1.80 | 5.51 | 2.58 | 4.16 | 1.53 | 1.87 |
| Best | 36.97 | 41.22 | 36.12 | 43.32 | 39.90 | 41.91 | 36.12 | 37.42 | 38.60 | 36.12 |
| Worst | 48.47 | 61.80 | 53.77 | 55.33 | 47.70 | 61.98 | 45.93 | 55.26 | 46.05 | 45.36 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nayyef, H.M.; Ibrahim, A.A.; Mohd Zainuri, M.A.A.; Zulkifley, M.A.; Shareef, H. A Novel Hybrid Algorithm Based on Jellyfish Search and Particle Swarm Optimization. Mathematics 2023, 11, 3210. https://doi.org/10.3390/math11143210