To assess the performance of the DOSSOR, DOAOR, and DOSAOR, we test the following 2D boundary value problem of the Poisson equation:

where

,

,

,

, and

are given functions.

5.1. Matrix–Vector Form of Equations

The process of constructing the matrix–vector form of Equation (1) from Equation (29) using the finite difference method was described by Liu [27]. By applying a standard five-point finite difference method to Equation (29), one can obtain a system of matrix–vector-type linear equations:

where

,

, and

are numerical values of

at the grid point

, in which

,

,

, and

are determined by the given boundary values.

By letting

, running i from 1 to

, and running j from 1 to

, we can, respectively, set

in Equation (1) and the algebraic equations

as the following:

At the same time, the components of the input vector

and the coefficient matrix

are constructed as follows:

Here, we have

linear equations.
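The assembly described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `assemble_poisson`, the row-wise ordering `K = (i - 1) * n2 + (j - 1)`, the sign convention u_xx + u_yy = F, and the rectangular domain with Dirichlet data `g` are choices made here and may differ from the ordering and scaling used in the paper's equations.

```python
import numpy as np

def assemble_poisson(n1, n2, F, g, a=0.0, b=1.0, c=0.0, d=1.0):
    """Five-point discretization of u_xx + u_yy = F on [a,b] x [c,d], u = g on the boundary.

    Unknowns are the interior values u[i, j], i = 1..n1, j = 1..n2,
    stored row-wise with index K = (i - 1) * n2 + (j - 1) (an assumed ordering).
    """
    dx = (b - a) / (n1 + 1)
    dy = (d - c) / (n2 + 1)
    x = a + dx * np.arange(n1 + 2)
    y = c + dy * np.arange(n2 + 2)
    n = n1 * n2
    A = np.zeros((n, n))
    rhs = np.zeros(n)
    stencil = ((-1, 0, 1 / dx**2), (1, 0, 1 / dx**2),
               (0, -1, 1 / dy**2), (0, 1, 1 / dy**2))
    for i in range(1, n1 + 1):
        for j in range(1, n2 + 1):
            K = (i - 1) * n2 + (j - 1)
            A[K, K] = -2 / dx**2 - 2 / dy**2
            rhs[K] = F(x[i], y[j])
            for di, dj, wgt in stencil:
                ii, jj = i + di, j + dj
                if 1 <= ii <= n1 and 1 <= jj <= n2:
                    A[K, (ii - 1) * n2 + (jj - 1)] = wgt
                else:
                    # Neighbor lies on the boundary: move its known value to the right-hand side.
                    rhs[K] -= wgt * g(x[ii], y[jj])
    return A, rhs, x, y

# Check on a quadratic, for which the five-point stencil has no truncation error.
A, rhs, x, y = assemble_poisson(5, 5, lambda x, y: 4.0, lambda x, y: x**2 + y**2)
u = np.linalg.solve(A, rhs)
X, Y = np.meshgrid(x[1:-1], y[1:-1], indexing="ij")
exact = (X**2 + Y**2).ravel()
print("max error:", np.max(np.abs(u - exact)))
```

A dense matrix is used only to keep the sketch short; for fine grids a sparse format would be the natural choice.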

First, we consider the following:

which renders

and

.

In the numerical tests, we took as a convergence criterion; as a result, , , and the usual SSOR with converged at 221 steps; additionally, the accuracy was measured by the maximum error (ME) = , and the root mean square error (RMSE) = . For the SOR with , it converged at 414 steps, the accuracy was ME = , and the RMSE = . The SSOR thus converged in about half as many steps as the SOR.
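For readers who want to reproduce this kind of comparison, the following is a minimal sketch of the classical SOR and SSOR iterations with a fixed relaxation parameter w, together with the two error measures. It is not the DOSSOR: the merit-function-based choice of w is not included. The test matrix, the value w = 1.5, the tolerance, and the use of the Euclidean norm of b - Ax as the residual are assumptions made here for illustration.

```python
import numpy as np

def sor_sweep(A, b, x, w, reverse=False):
    """One in-place SOR sweep; reverse=True runs the backward sweep."""
    n = len(b)
    order = range(n - 1, -1, -1) if reverse else range(n)
    for i in order:
        sigma = A[i] @ x - A[i, i] * x[i]          # off-diagonal part of row i
        x[i] = (1 - w) * x[i] + w * (b[i] - sigma) / A[i, i]
    return x

def iterate(A, b, w, symmetric, eps=1e-10, max_steps=10000):
    """SOR (symmetric=False) or SSOR (symmetric=True) until ||b - A x|| < eps."""
    x = np.zeros(len(b))
    for k in range(1, max_steps + 1):
        sor_sweep(A, b, x, w)
        if symmetric:
            sor_sweep(A, b, x, w, reverse=True)    # SSOR = forward + backward sweep
        if np.linalg.norm(b - A @ x) < eps:
            return x, k
    return x, max_steps

def max_error(x, x_exact):
    return np.max(np.abs(x - x_exact))

def rmse(x, x_exact):
    return np.sqrt(np.mean((x - x_exact) ** 2))

# Hypothetical test problem: 1D second-difference matrix with a known solution.
n = 20
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
x_exact = np.ones(n)
b = A @ x_exact

x_sor, k_sor = iterate(A, b, w=1.5, symmetric=False)
x_ssor, k_ssor = iterate(A, b, w=1.5, symmetric=True)
print("SOR :", k_sor, max_error(x_sor, x_exact), rmse(x_sor, x_exact))
print("SSOR:", k_ssor, max_error(x_ssor, x_exact), rmse(x_ssor, x_exact))
```

Note that one SSOR step costs two sweeps, so step counts alone do not measure total work.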

By using the DOSSOR, we took

and

, and, through 105 steps, it converged, as shown in Figure 1a, to satisfy the convergence criterion

, where the residual is defined as

. The convergence speed of the DOSSOR was improved by about a factor of two compared to the SSOR. An ME =

and a RMSE =

were obtained by the DOSSOR, which were more accurate than those obtained by the SSOR. The accuracy and convergence speed of the DOSSOR were enhanced owing to the use of the merit function (22). As shown in Figure 1b, f quickly tended to 1, and the optimal values of w varied between 1.8527 and 1.8836, as shown in Figure 1c. Remarkably, when

was taken, we could obtain an ME =

and a RMSE =

, although the DOSSOR did not converge within 300 steps. The numerical solution obtained was almost equal to the exact one.

Table 1 lists the number of iterations (NI), the ME, and the RMSE for different

using the DOSSOR with a fixed

.

By using the DOAOR, we took

,

, and, through 119 steps, it converged, as shown in Figure 2a, to satisfy the convergence criterion

. The DOAOR converged about twice as fast as the ad hoc AOR with

and

, which took 257 steps. An ME =

and a RMSE =

obtained by the DOAOR were more accurate than those obtained by the AOR with ME =

and RMSE =

. The optimal values of

w and

are shown in Figure 2b,c.

Table 2 lists the NI, ME, and RMSE for different

using the DOAOR with a fixed

. Comparing these values with those in Table 1 for the same

, the DOAOR converged slightly more slowly than the DOSSOR, but the accuracy improved by one or two orders of magnitude.

In Table 3, we list the NI, ME, and RMSE for different mesh sizes h using the DOAOR with a fixed

. It is interesting that the accuracy was very good, even for a larger mesh size. When

, the accuracy decreased, and the iteration did not converge within 300 steps under the stringent convergence criterion of

.

Using the DOSAOR, we took

,

, and

, and, through 125 steps, it converged, as shown in Figure 3a, to satisfy the convergence criterion

. An ME =

and a RMSE =

were more accurate than those values obtained by the AOR with ad hoc values of

and

, whose ME =

and RMSE =

. The optimal values of

w and

are shown in Figure 3b,c. Comparing Table 4 with Table 3 shows that the DOSAOR converged faster than the DOAOR, with competitive accuracy.

5.2. Lyapunov Equation

In addition, we can derive a Lyapunov equation for Equation (29):

where

denotes the numerical solution of

at a grid point

.

is an

-dimensional tridiagonal matrix, and

Here,

, and

. Hence, we have

.

At

, we take

. However, for other nodal points, the

are constructed according to the following:

We can transform Equation (34) into a vectorized linear equation system with dimension n:

where ⊗ is the Kronecker product, and

is an n-dimensional vector that consists of all rows of the matrix

.
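The row-wise vectorization can be checked numerically. The sketch below assumes a matrix equation of the generic form A U + U B = F with tridiagonal second-difference matrices; the exact matrices and scaling of the paper's Lyapunov equation are not reproduced here, and the names `second_difference`, `A`, `B`, `U`, and `F` are illustrative. With rows stacked one after another (NumPy's default `reshape` order), the identity vec(A U + U B) = (A ⊗ I + I ⊗ Bᵀ) vec(U) holds.

```python
import numpy as np

def second_difference(m):
    """Tridiagonal matrix with -2 on the diagonal and 1 on the off-diagonals."""
    return -2 * np.eye(m) + np.eye(m, k=1) + np.eye(m, k=-1)

n1, n2 = 4, 3
A = second_difference(n1)              # acts on the row index of U
B = second_difference(n2)              # acts on the column index of U

rng = np.random.default_rng(0)
U = rng.standard_normal((n1, n2))      # stand-in for the grid values
F = A @ U + U @ B                      # matrix (Lyapunov-type) form

# Row-wise vectorization: u holds all rows of U, one after another.
u = U.reshape(-1)
M = np.kron(A, np.eye(n2)) + np.kron(np.eye(n1), B.T)

# The Kronecker form reproduces the matrix form ...
assert np.allclose(M @ u, F.reshape(-1))

# ... and solving the n = n1 * n2 dimensional system recovers U.
U_rec = np.linalg.solve(M, F.reshape(-1)).reshape(n1, n2)
print("recovery error:", np.max(np.abs(U_rec - U)))
```

If the columns were stacked instead of the rows, the two Kronecker factors would swap places, so the ordering convention matters when comparing against the matrix–vector form of Section 5.1.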

Using the DOSSOR, we took

and

, and, through 204 steps, it converged, as shown in Figure 4a, to satisfy

, where the residual is defined by

. An ME =

and a RMSE =

were obtained, which were more accurate and faster than those values shown in Table 1. As seen in Figure 4b, f quickly tended to 1, and the optimal values of w varied between 1.821 and 1.845, as shown in Figure 4c.

Using the DOAOR, we took

and

, and, through 184 steps, it converged, as shown in Figure 5a, to satisfy the convergence criterion

. An ME =

and a RMSE =

were more accurate and faster than those values obtained by the DOSSOR. The optimal values of

w and

are shown in Figure 5b,c.

In Table 5, we list the NI, ME, and RMSE for different mesh sizes h using the DOAOR to solve the Lyapunov equation with a fixed

. It is interesting that the accuracy was very good, even for a larger mesh size. Comparing these values with Table 3 for the same

, the DOAOR converged faster for the Lyapunov equation than for the matrix–vector equation in Section 5.1; however, the accuracy was worse by about two and three orders of magnitude, respectively.

Using the DOSAOR, we took

and

. In Table 6, we list the NI, ME, and RMSE for different mesh sizes h using the DOSAOR to solve the Lyapunov equation with a fixed

. Comparing these values with Table 4 shows that the DOSAOR converged faster for the Lyapunov equation, but the accuracy decreased by about two orders of magnitude.

To improve the accuracy, the convergence criterion for the DOSAOR applied to the Lyapunov equation was tightened, as shown in Table 7, to

. It is interesting that the accuracy was very good, even for a larger mesh size. Comparing these values with Table 4 shows that the DOSAOR converged faster for the Lyapunov equation than for the matrix–vector equation in Section 5.1, where the case

did not converge within 300 iterations under a larger

.

Next, we consider a non-homogeneous boundary value problem of the Poisson equation with

which renders

,

,

,

, and

.

Using the DOSSOR with the matrix–vector form of Section 5.1, we took

and

, and, through 82 steps, it converged to satisfy the convergence criterion

. An ME =

and a RMSE =

were obtained.

Using the DOAOR, we took

,

, and

, and, through 64 steps, it converged, as shown in Figure 6a, to satisfy the convergence criterion

. An ME =

and a RMSE =

were obtained. The optimal values of

w and

are shown in Figure 6b,c.

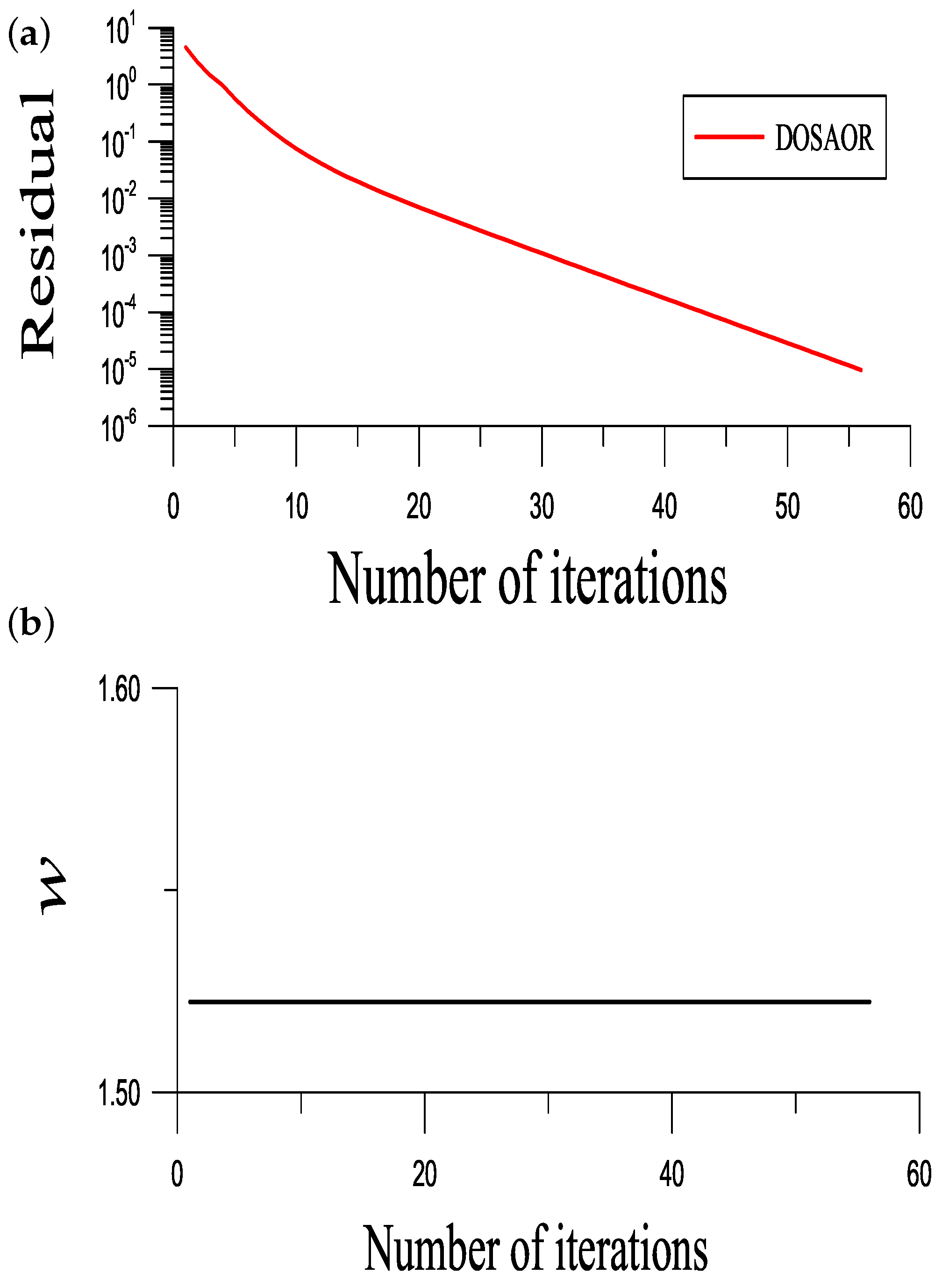

Using the DOSAOR, we took

,

, and

, and, through 56 steps, it converged, as shown in Figure 7a, to satisfy the convergence criterion

. An ME =

and a RMSE =

were obtained. The optimal values of

w and

are shown in Figure 7b,c.

5.3. Other Linear Systems

The proposed iterative methods can be applied to general linear systems. We cannot test every linear system; as a representative example, we consider a Hilbert problem:

We suppose that

are exact solutions, and

can be computed exactly. The Hilbert problem is a highly ill-posed system of linear equations. We fix

. Using the DOSSOR, we took

and

, and, through NI = 281 steps, it converged, as shown in Figure 8a, to satisfy the convergence criterion

. An ME =

was obtained by the DOSSOR. As shown in Figure 8b, w quickly tended to 0.1032522475, which is the optimal value of w for the Hilbert problem with

. By adopting an ad hoc value

in the SSOR, the NI rose to 463 under the same convergence criterion

, and, worse still, the ME increased to ME =

. Therefore, the presented DOSSOR outperformed the SSOR with an ad hoc value for the parameter w.
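The difficulty of the Hilbert problem is easy to see numerically. The sketch below builds a Hilbert matrix under the standard definition with entries 1/(i + j - 1), forms the right-hand side from a chosen exact solution, and prints the condition number. The size n = 8 and the all-ones exact solution are assumptions made for illustration and are not taken from the experiment above.

```python
import numpy as np

def hilbert(n):
    """Hilbert matrix with entries 1 / (i + j - 1), i, j = 1..n."""
    i = np.arange(1, n + 1)
    return 1.0 / (i[:, None] + i[None, :] - 1)

n = 8                          # hypothetical size
A = hilbert(n)
x_exact = np.ones(n)           # assumed exact solution
b = A @ x_exact                # right-hand side consistent with x_exact

print("condition number:", np.linalg.cond(A))
```

Because the condition number grows very quickly with n, a small residual does not by itself guarantee a small ME, which is why both quantities are reported.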

We next consider a least-squares linear system [12]:

We suppose that

and

are exact solutions. We transform it into a normal system:

Using the DOSSOR, we took

and

, and, through NI = 12 steps, it converged, as shown in Figure 9a, to satisfy the convergence criterion

. An ME =

was obtained by the DOSSOR. As shown in Figure 9b, w tended to 0.4527637493, which was close to the optimal value

for the original least-squares problem obtained in [12].
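The transformation to a normal system can be illustrated as follows. The 4 × 2 matrix and the exact solution below are hypothetical stand-ins, not the system from [12]; the point is only that the normal matrix AᵀA is square, symmetric, and positive definite when A has full column rank, so iterations of the SOR family can be applied to it.

```python
import numpy as np

# Hypothetical overdetermined system A x = b with a known exact solution.
A = np.array([[1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0],
              [1.0, 4.0]])
x_exact = np.array([1.0, 2.0])
b = A @ x_exact

# Normal system: (A^T A) x = A^T b.
N = A.T @ A
c = A.T @ b

x = np.linalg.solve(N, c)      # direct solve, used here only as a reference
print("solution:", x)
print("eigenvalues of A^T A:", np.linalg.eigvalsh(N))
```

One caveat with this approach is that the condition number of AᵀA is the square of that of A, which can slow iterative solvers on poorly conditioned problems.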