LB-GLAT: Long-Term Bi-Graph Layer Attention Convolutional Network for Anti-Money Laundering in Transactional Blockchain

Abstract

1. Introduction

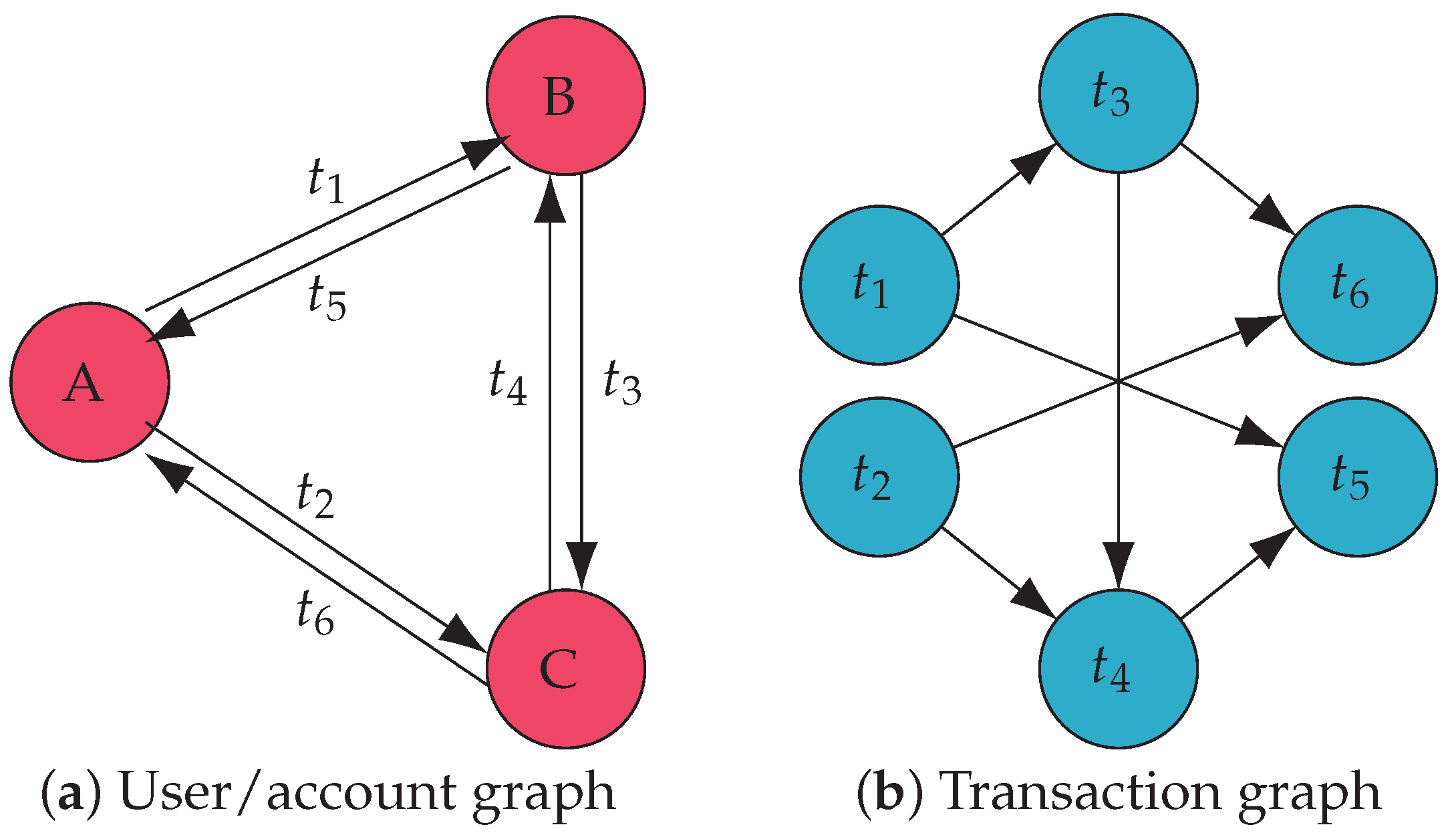

- The blockchain transaction graph contains no closed loops, so a GCN cannot learn where the money goes, which reduces detection accuracy. For example, the user/account graph of financial transactions in Figure 1a contains loops because accounts transact with each other frequently, so a directed GCN can embed both the sources and the destinations of funds. However, when the same activity is represented as a blockchain transaction graph, as in Figure 1b, the chronological order of transactions rules out closed loops. Because a directed GCN aggregates information only along edge directions, a transaction node never receives information from the later transactions that spend its funds, so the GCN cannot aggregate destination information in the directed transaction graph.

- GCNs suffer from over-smoothing on transaction graphs, which contain vast numbers of nodes, and this further reduces detection accuracy. When the user/account graph is converted to a transaction graph, the original transaction edges become nodes. For example, in Figure 1a, the first transaction transfers funds to account B, after which account B initiates two further transactions; therefore, in Figure 1b, the node for the first transaction points to the nodes of both of them. As a result, the transaction graph usually has more nodes than the user/account graph: as accounts keep transacting with each other, the number of nodes in the account graph stays constant, whereas the number of nodes in the transaction graph keeps growing. For example, Figure 1a has only three nodes, but its conversion in Figure 1b has six. In a GCN, node A only needs to aggregate information from two nodes (B and C), whereas a transaction node in Figure 1b needs to aggregate data from four nodes. Moreover, if the accounts in Figure 1a make more complex transactions, Figure 1b gains even more nodes, and a deeper GCN is required to capture the complete information, which accelerates over-smoothing.

- We analyzed the differences between traditional financial transactions and blockchain transactions and addressed the implications of these differences for GCN-based detection.

- To address the inability to trace where funds go, which stems from the chronological order of the transaction graph, we proposed a bi-graph, consisting of a transaction graph and a reverse transaction graph, to detect both the sources and the destinations of funds in blockchain money laundering.

- We designed a long-term layer attention mechanism that can combine the characteristics of money laundering at each stage and alleviate the over-smoothing problem.
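The conversion described in the first two points above can be illustrated with a small sketch. Each account-level transfer becomes a node, and an edge runs from transaction i to transaction j when the receiver of i is the sender of j and j happens strictly later. The transfers, timestamps and helper names below are hypothetical and only mimic the shape of Figure 1; they are not the data behind the figure, and the "strictly later" rule is a simplifying assumption about how funds flow on a blockchain.

```python
from collections import defaultdict

# Hypothetical transfers: (tx_id, sender, receiver, timestamp).
TRANSFERS = [
    ("t1", "A", "B", 1),
    ("t2", "B", "C", 2),
    ("t3", "B", "A", 3),
    ("t4", "C", "A", 4),
    ("t5", "A", "B", 5),
    ("t6", "B", "C", 6),
]


def account_graph(transfers):
    """Accounts are nodes; each distinct (sender, receiver) pair is an edge."""
    adj = defaultdict(set)
    nodes = set()
    for _, sender, receiver, _ in transfers:
        adj[sender].add(receiver)
        nodes.update((sender, receiver))
    return nodes, adj


def transaction_graph(transfers):
    """Transactions are nodes; tx_i -> tx_j when the receiver of tx_i is the
    sender of tx_j and tx_j happens strictly later (funds can flow on)."""
    nodes = [tx for tx, *_ in transfers]
    adj = defaultdict(list)
    for tx_i, _, receiver_i, time_i in transfers:
        for tx_j, sender_j, _, time_j in transfers:
            if receiver_i == sender_j and time_j > time_i:
                adj[tx_i].append(tx_j)
    return nodes, adj


def has_cycle(nodes, adj):
    """Depth-first search with white/grey/black colouring."""
    WHITE, GREY, BLACK = 0, 1, 2
    colour = {n: WHITE for n in nodes}

    def visit(n):
        colour[n] = GREY
        for m in adj.get(n, ()):
            if colour[m] == GREY or (colour[m] == WHITE and visit(m)):
                return True
        colour[n] = BLACK
        return False

    return any(colour[n] == WHITE and visit(n) for n in nodes)


acc_nodes, acc_adj = account_graph(TRANSFERS)
tx_nodes, tx_adj = transaction_graph(TRANSFERS)
print(len(acc_nodes), has_cycle(acc_nodes, acc_adj))  # 3 True
print(len(tx_nodes), has_cycle(tx_nodes, tx_adj))     # 6 False
```

The account graph keeps three nodes and contains the loop A → B → A, while the transaction graph grows to one node per transfer. Because every edge in it points strictly forward in time, the depth-first search can never revisit a node on its current path, so the transaction graph is acyclic.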

2. Related Work

2.1. Machine-Learning-Based Methods

2.2. Deep-Learning-Based Methods

3. Model Architecture

3.1. Overview

- Bi-Graph: To address the limitation of the GCN in capturing the money laundering structure due to the absence of loops in the blockchain transaction graph, we adopted a bi-graph module. This module creates a reverse graph from the forward graph, allowing the model to learn the characteristics of both the source and destination of transaction funds.

- Spatial feature extraction: To better capture the money laundering structure, we designed a spatial feature extraction module that convolves the forward and reverse transaction graphs multiple times. Additionally, we incorporated a long-term layer attention mechanism to mitigate the over-smoothing problem of the GCN. This mechanism independently learns money laundering information from the node features produced by each GCN layer, and its output is fed into the classification head.

- Classification head: Because a transaction graph covers only the transactions within a certain period, some nodes may have zero in-degree on one or both graphs. To address this issue, we created two classification heads. Depending on whether a node’s in-degree is zero on both graphs, the node vectors obtained from the spatial feature extraction module are divided into two groups, and each group is fed into the corresponding classification head to obtain the node’s label.
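A minimal sketch of the bi-graph and the classification-head routing described above, assuming the transaction graph is given as a list of directed edges. The reverse graph flips every edge, so a node’s out-neighbours in the forward graph become its in-neighbours in the reverse graph, and a GCN that aggregates over in-neighbours can then see where funds go. Note that a node’s in-degree on the reverse graph equals its out-degree on the forward graph, so a node with zero in-degree on both graphs has no edges at all in the observed window. The function names, head names and toy edge list are hypothetical.

```python
from collections import Counter


def reverse_graph(edges):
    """Reverse transaction graph: flip every (src, dst) edge."""
    return [(dst, src) for src, dst in edges]


def in_degrees(edges, nodes):
    """In-degree of every node (0 for nodes that no edge points to)."""
    counts = Counter(dst for _, dst in edges)
    return {n: counts.get(n, 0) for n in nodes}


def route_to_heads(nodes, forward_edges):
    """Split nodes between two hypothetical classification heads.

    'isolated' receives nodes whose in-degree is zero on BOTH graphs;
    'connected' receives every other node.
    """
    fwd_in = in_degrees(forward_edges, nodes)
    bwd_in = in_degrees(reverse_graph(forward_edges), nodes)
    heads = {"connected": [], "isolated": []}
    for n in nodes:
        key = "isolated" if fwd_in[n] == 0 and bwd_in[n] == 0 else "connected"
        heads[key].append(n)
    return heads


# Toy window: t1 -> t2 -> t3, plus t4 with no edges inside the window.
nodes = ["t1", "t2", "t3", "t4"]
forward = [("t1", "t2"), ("t2", "t3")]
print(reverse_graph(forward))
print(route_to_heads(nodes, forward))
```

Here t1 has zero in-degree on the forward graph but one on the reverse graph, so it still goes to the "connected" head; only t4 is routed to the "isolated" head.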

3.2. Spatial Feature Extraction Module

3.2.1. Spatial-Domain GCN

3.2.2. Long-Term Layer Attention
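The overview describes long-term layer attention as learning from the node features produced by every GCN layer, and the hyperparameter table lists a Transformer (depth 6, 4 heads of size 8) for it. The sketch below illustrates only the core idea in a deliberately simplified form, assuming a single head, a single attention layer, identity query/key/value projections and mean pooling over layers; it is not the authors’ implementation, and all names and inputs are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def layer_attention(layer_embeddings: List[Vector]) -> Vector:
    """Scaled dot-product self-attention across one node's GCN layer outputs.

    layer_embeddings[l] is the node's embedding after GCN layer l + 1.
    Queries, keys and values are the embeddings themselves (an assumed
    simplification); the attended tokens are mean-pooled into one vector.
    """
    d = len(layer_embeddings[0])
    scale = math.sqrt(d)
    attended = []
    for query in layer_embeddings:
        weights = softmax([dot(query, key) / scale for key in layer_embeddings])
        attended.append(
            [sum(w * value[i] for w, value in zip(weights, layer_embeddings))
             for i in range(d)]
        )
    return [sum(token[i] for token in attended) / len(attended) for i in range(d)]


# Hypothetical 2-d embeddings of one node after four GCN layers,
# drifting toward a common (smoothed) value in deeper layers.
node_layers = [[1.0, 0.0], [0.6, 0.3], [0.5, 0.4], [0.45, 0.45]]
print(layer_attention(node_layers))
```

Because the output mixes every layer instead of keeping only the last one, features from shallow layers that deeper layers have smoothed away remain available to the classifier, which is the intuition behind using layer attention against over-smoothing.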

3.3. Bi-Graph

3.4. Classification Head

4. Implementation

4.1. Dataset

4.2. Training

4.3. Complexity Analysis

5. Experiment

5.1. Experimental Setup

- GAT: GAT [28] is a graph neural network built from masked self-attention layers. It implicitly assigns different weights to different nodes in a neighborhood and is suitable for both inductive and transductive problems.

- GraphSAGE: GraphSAGE [29] is a popular graph neural network for large graphs. It learns a function that generates node embeddings by sampling and aggregating features from a node’s local neighborhood.

- LR: Logistic Regression [14] is a statistical method commonly used for binary classification. We applied L2 regularization and set the inverse of regularization strength to . We set the tolerance for the stopping criterion to and the maximum number of iterations to 200, and used liblinear [37] as the optimization solver.

- DT: Decision Tree Classifier [15] is a machine learning method for building predictive models from data. It recursively partitions the data space, fits a simple prediction model within each partition, and measures the error using misclassification costs. We set the minimum number of samples per leaf to five to prevent overfitting and used Gini impurity as the split criterion.

- SVM: Support Vector Machine [16] is a machine learning method for classification problems. It maps input vectors nonlinearly into a very high-dimensional feature space and constructs a decision surface for classification in that space. We used the Gaussian kernel as the SVM kernel function.

- GCN: The GCN used in this experiment is the same spatial-domain GCN used in our model. We applied a four-layer spatial-domain GCN with a node embedding size of 32 per layer, followed by an MLP with two hidden layers of sizes 64 and 32. We refer to this model as GCN+MLP in later experiments.

- GNN-FiLM: GNN-FiLM was used by Han et al. [1] for anomaly detection on blockchains. They applied k-rate sampling to the data and labeled unknown transaction nodes by feature similarity to reduce the imbalance between positive and negative samples.

- Weighted GraphSAGE: Weighted GraphSAGE [6] is an improved GraphSAGE method that samples neighborhood nodes with weights. The model learns to aggregate input features from a node’s local neighborhood more efficiently in order to uncover and analyze implicit relationships among blockchain transaction features.

- GCN assisted by linear layers: This approach, proposed by Alarab et al. [13], intertwines an existing graph convolutional network with linear layers. The node embeddings obtained from the graph convolutional layer are concatenated with a single hidden layer obtained from a linear transformation of the node feature matrix, followed by a multi-layer perceptron. In later experiments, we refer to this model as GCN-linear.
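For reference, the Gini impurity used as the DT split criterion is one minus the sum of the squared class proportions in a node, and a candidate split is scored by the size-weighted impurity of its two children. The sketch below computes both for hypothetical label lists (1 = illicit, 0 = licit, at the 1:5 positive-to-negative ratio from the hyperparameter table). If the baselines were built with scikit-learn, which the liblinear solver setting suggests but the text does not state, these DT settings would correspond to `DecisionTreeClassifier(criterion="gini", min_samples_leaf=5)`.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over the class proportions p_k."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left, right):
    """Size-weighted Gini impurity of a binary split."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


# Hypothetical node with one illicit and five licit samples.
parent = [1] + [0] * 5
print(round(gini(parent), 4))         # 0.2778
print(split_impurity([1], [0] * 5))  # 0.0 (a perfectly pure split)
```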

5.2. Experiment Results

5.2.1. Training Process

5.2.2. Ablation Experiments

5.2.3. Comparison Experiments

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Han, H.; Wang, R.; Chen, Y.; Xie, K.; Zhang, K. Research on Abnormal Transaction Detection Method for Blockchain. In Proceedings of the International Conference on Blockchain and Trustworthy Systems; Svetinovic, D., Zhang, Y., Luo, X., Huang, X., Chen, X., Eds.; Springer: Singapore, 2022; pp. 223–236.

- Zhou, J.; Hu, C.; Chi, J.; Wu, J.; Shen, M.; Xuan, Q. Behavior-Aware Account de-Anonymization on Ethereum Interaction Graph. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3433–3448.

- Alarab, I.; Prakoonwit, S. Graph-Based LSTM for Anti-Money Laundering: Experimenting Temporal Graph Convolutional Network with Bitcoin Data. Neural Process. Lett. 2023, 55, 689–707.

- Ciphertrace. Spring 2020 Cryptocurrency Crime and Anti-Money Laundering Report; Technical Report; Ciphertrace: Los Gatos, CA, USA, 2020.

- Grauer, K.; Jardine, E.; Leosz, E.; Updegrave, H. The 2023 Crypto Crime Report; Technical Report; Chainalysis: New York, NY, USA, 2023.

- Li, A.; Wang, Z.; Yu, M.; Chen, D. Blockchain Abnormal Transaction Detection Method Based on Weighted Sampling Neighborhood Nodes. In Proceedings of the 2022 3rd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Xi’an, China, 15–17 June 2022; pp. 746–752.

- FATF. Updated Guidance for a Risk-Based Approach to Virtual Assets and Virtual Asset Service Providers; Technical Report; FATF: Paris, France, 2021.

- Hallak, I. Markets in Crypto-Assets (MiCA); Technical Report; European Parliamentary Research Service: Brussels, Belgium, 2022.

- Pettersson Ruiz, E.; Angelis, J. Combating Money Laundering with Machine Learning—Applicability of Supervised-Learning Algorithms at Cryptocurrency Exchanges. J. Money Laund. Control 2022, 25, 766–778.

- Pham, T.; Lee, S. Anomaly Detection in the Bitcoin System—A Network Perspective. arXiv 2017, arXiv:1611.03942.

- Yang, L.; Dong, X.; Xing, S.; Zheng, J.; Gu, X.; Song, X. An Abnormal Transaction Detection Mechanim on Bitcoin. In Proceedings of the 2019 International Conference on Networking and Network Applications (NaNA), Daegu, Republic of Korea, 10–13 October 2019; pp. 452–457.

- Weber, M.; Domeniconi, G.; Chen, J.; Weidele, D.K.I.; Bellei, C.; Robinson, T.; Leiserson, C.E. Anti-Money Laundering in Bitcoin: Experimenting with Graph Convolutional Networks for Financial Forensics. arXiv 2019, arXiv:1908.02591.

- Alarab, I.; Prakoonwit, S.; Nacer, M.I. Competence of Graph Convolutional Networks for Anti-Money Laundering in Bitcoin Blockchain. In Proceedings of the 2020 5th International Conference on Machine Learning Technologies, Beijing, China, 19–21 June 2020; pp. 23–27.

- Friedman, J.; Hastie, T.; Tibshirani, R. Regularization Paths for Generalized Linear Models via Coordinate Descent. J. Stat. Softw. 2010, 33, 1–22.

- Loh, W.Y. Classification and Regression Trees. WIREs Data Min. Knowl. Discov. 2011, 1, 14–23.

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Banfield, C.F.; Bassill, L.C. Algorithm AS 113: A Transfer for Non-Hierarchical Classification. J. R. Stat. Soc. Ser. 1977, 26, 206–210.

- Pham, T.; Lee, S. Anomaly Detection in Bitcoin Network Using Unsupervised Learning Methods. arXiv 2017, arXiv:1611.03941.

- Lorenz, J.; Silva, M.I.; Aparício, D.; Ascensão, J.T.; Bizarro, P. Machine Learning Methods to Detect Money Laundering in the Bitcoin Blockchain in the Presence of Label Scarcity. In Proceedings of the First ACM International Conference on AI in Finance, New York, NY, USA, 15–16 October 2020; pp. 1–8.

- Li, Y.; Cai, Y.; Tian, H.; Xue, G.; Zheng, Z. Identifying Illicit Addresses in Bitcoin Network; Springer: Singapore, 2020; Volume 1267, pp. 99–111.

- Alarab, I.; Prakoonwit, S.; Nacer, M.I. Comparative Analysis Using Supervised Learning Methods for Anti-Money Laundering in Bitcoin. In Proceedings of the 2020 5th International Conference on Machine Learning Technologies, Beijing, China, 19–21 June 2020; pp. 11–17.

- Vassallo, D.; Vella, V.; Ellul, J. Application of Gradient Boosting Algorithms for Anti-Money Laundering in Cryptocurrencies. SN Comput. Sci. 2021, 2, 143.

- Stefánsson, H.P.; Grímsson, H.S.; Þórðarson, J.K.; Oskarsdottir, M. Detecting Potential Money Laundering Addresses in the Bitcoin Blockchain Using Unsupervised Machine Learning. In Proceedings of the Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2022.

- Hu, Y.; Seneviratne, S.; Thilakarathna, K.; Fukuda, K.; Seneviratne, A. Characterizing and Detecting Money Laundering Activities on the Bitcoin Network. arXiv 2019, arXiv:1912.12060.

- Poursafaei, F.; Rabbany, R.; Zilic, Z. SigTran: Signature Vectors for Detecting Illicit Activities in Blockchain Transaction Networks. In Advances in Knowledge Discovery and Data Mining; Karlapalem, K., Cheng, H., Ramakrishnan, N., Agrawal, R.K., Reddy, P.K., Srivastava, J., Chakraborty, T., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 12712, pp. 27–39.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2017, arXiv:1609.02907.

- Chen, Z.; Chen, F.; Zhang, L.; Ji, T.; Fu, K.; Zhao, L.; Chen, F.; Wu, L.; Aggarwal, C.; Lu, C.T. Bridging the Gap between Spatial and Spectral Domains: A Survey on Graph Neural Networks. arXiv 2021, arXiv:2002.11867.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2018, arXiv:1710.10903.

- Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive Representation Learning on Large Graphs. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 5–6 December 2017.

- Yang, C.; Wang, R.; Yao, S.; Liu, S.; Abdelzaher, T. Revisiting Over-Smoothing in Deep GCNs. arXiv 2020, arXiv:2003.13663.

- Rong, Y.; Huang, W.; Xu, T.; Huang, J. DropEdge: Towards Deep Graph Convolutional Networks on Node Classification. arXiv 2020, arXiv:1907.10903.

- Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and Deep Graph Convolutional Networks. In Proceedings of the 37th International Conference on Machine Learning, Virtual Event, 13–18 July 2020.

- Bruna, J.; Zaremba, W.; Szlam, A.; LeCun, Y. Spectral Networks and Locally Connected Networks on Graphs. arXiv 2014, arXiv:1312.6203.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Bian, T.; Xiao, X.; Xu, T.; Zhao, P.; Huang, W.; Rong, Y.; Huang, J. Rumor Detection on Social Media with Bi-Directional Graph Convolutional Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Fawcett, T. An Introduction to ROC Analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Lin, C.J. LIBLINEAR—A Library for Large Linear Classification. 2023. Available online: https://www.csie.ntu.edu.tw/~cjlin/liblinear/ (accessed on 21 July 2023).

- Li, G.; Xiong, C.; Thabet, A.; Ghanem, B. DeeperGCN: All You Need to Train Deeper GCNs. arXiv 2020, arXiv:2006.07739.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Training epochs | 200 | Initial learning rate | 0.01 |
| Learning rate decay rate | 1 | Optimizer | Adam |
| Weight decay | | Loss function | Cross-entropy |
| Number of GCN layers | 4 | Node embedding size of each GCN layer | 32 |
| Projection size of LTLA | 32 | Transformer depth | 6 |
| Transformer size | 32 | Number of MSA heads | 4 |
| MSA head size | 8 | MLP hidden layer size of the Transformer | 64 |
| Hidden layer sizes of the classification head | 64, 32 | Dropout | 0.5 |
| Ratio of training, validation, and test data | 8:1:1 | Ratio of positive to negative samples | 1:5 |
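The hyperparameters above can be collected into a single configuration object, sketched below in Python. The field names are hypothetical, the weight decay is left as `None` because its value is missing from the table, and the comment on the decay rate assumes a multiplicative decay factor. The `check` method confirms that the multi-head self-attention (MSA) dimensions are consistent: 4 heads of size 8 give the Transformer size of 32.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LBGLATConfig:
    epochs: int = 200
    initial_lr: float = 0.01
    lr_decay_rate: float = 1.0            # as a multiplicative factor, 1 keeps the LR constant
    optimizer: str = "Adam"
    weight_decay: Optional[float] = None  # value not given in the table
    loss: str = "cross-entropy"
    gcn_layers: int = 4
    gcn_embedding_size: int = 32
    ltla_projection_size: int = 32
    transformer_depth: int = 6
    transformer_size: int = 32
    msa_heads: int = 4
    msa_head_size: int = 8
    transformer_mlp_hidden: int = 64
    head_hidden_sizes: Tuple[int, ...] = (64, 32)
    dropout: float = 0.5
    split_ratio: Tuple[int, int, int] = (8, 1, 1)  # train : validation : test
    pos_neg_ratio: Tuple[int, int] = (1, 5)        # positive : negative

    def check(self) -> None:
        """Heads x head size must equal the Transformer width."""
        if self.msa_heads * self.msa_head_size != self.transformer_size:
            raise ValueError("MSA heads x head size != Transformer size")


cfg = LBGLATConfig()
cfg.check()  # passes: 4 * 8 == 32
```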

| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| No-LTLA | 0.9248 | 0.7191 | 0.4679 | 0.5669 | 0.9377 |
| No-BG | 0.9730 | 0.8936 | 0.8434 | 0.8678 | 0.9778 |
| GAT-No-GCN | 0.9688 | 0.8723 | 0.8233 | 0.8471 | 0.9728 |
| GraphSAGE-No-GCN | 0.9637 | 0.8300 | 0.8233 | 0.8266 | 0.9630 |
| LB-GLAT | 0.9776 | 0.9317 | 0.8494 | 0.8887 | 0.9806 |

| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| LR | 0.9617 | 0.8297 | 0.7744 | 0.8011 | 0.8784 |
| DT | 0.9779 | 0.8901 | 0.8872 | 0.8886 | 0.9376 |
| SVM | 0.9702 | 0.9005 | 0.7873 | 0.8401 | 0.8889 |
| GCN+MLP | 0.9329 | 0.6636 | 0.7329 | 0.6966 | 0.9315 |
| GAT+MLP | 0.8949 | - | 0 | - | 0.8994 |
| GraphSAGE+MLP | 0.9555 | 0.7682 | 0.8253 | 0.7957 | 0.9699 |
| GNN-FiLM | 0.9701 | 0.9520 | 0.5679 | 0.7114 | - |
| Weighted GraphSAGE | - | 0.879 | 0.884 | 0.875 | - |
| GCN-linear | 0.974 | 0.899 | 0.678 | 0.773 | - |
| LB-GLAT | 0.9776 | 0.9317 | 0.8494 | 0.8887 | 0.9806 |
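The F1-scores in the comparison table are the harmonic mean of precision and recall, F1 = 2PR/(P + R). The sketch below recomputes F1 from the reported precision and recall for a few rows; the results agree with the reported values to within rounding of the four-digit inputs. It also shows how "-" entries can arise: precision is undefined when a model predicts no positive samples at all (TP + FP = 0), and F1 is then undefined too, which is consistent with the GAT+MLP row (recall 0, precision and F1 "-"). The helper names are hypothetical.

```python
from typing import Optional, Tuple

Metric = Optional[float]  # None marks an undefined value ("-" in the table)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[Metric, Metric, Metric]:
    """Precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp > 0 else None
    recall = tp / (tp + fn) if tp + fn > 0 else None
    if precision is None or recall is None or precision + recall == 0:
        return precision, recall, None
    return precision, recall, 2 * precision * recall / (precision + recall)


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Reported (precision, recall, F1) triples from the comparison table.
REPORTED = {
    "LB-GLAT": (0.9317, 0.8494, 0.8887),
    "DT": (0.8901, 0.8872, 0.8886),
    "GCN+MLP": (0.6636, 0.7329, 0.6966),
    "GraphSAGE+MLP": (0.7682, 0.8253, 0.7957),
}

for name, (p, r, f1) in REPORTED.items():
    print(f"{name}: recomputed {f1_from(p, r):.4f}, reported {f1:.4f}")

# A model that never predicts the positive class: precision and F1 undefined.
print(precision_recall_f1(tp=0, fp=0, fn=10))  # (None, 0.0, None)
```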

| Model | L = 2 | L = 3 | L = 4 | L = 5 | L = 10 |
|---|---|---|---|---|---|
| GAT+MLP | 0.6450 | 0.6325 | - | - | - |
| GAT+LTLA+MLP | 0.8678 | 0.8531 | 0.8429 | 0.8506 | 0.8381 |
| No-LTLA | 0.6357 | 0.7346 | 0.5669 | - | - |
| LB-GLAT | 0.8760 | 0.8663 | 0.8887 | 0.8666 | 0.8502 |
| BG+DeeperGCN+MLP | 0.7113 | 0.6574 | 0.6113 | 0.6451 | - |
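The values in the layer-number table appear to be F1-scores: the L = 4 entries for LB-GLAT (0.8887) and No-LTLA (0.5669) match the F1-scores reported for those models above. The over-smoothing that motivates varying L can be seen in a toy setting: repeatedly averaging each node’s feature with its neighbours’ drives all features on a connected graph toward the same value. The sketch below uses a hypothetical four-node path graph and a parameter-free mean aggregation with self-loops; a real GCN layer also applies learned weights and a nonlinearity, so this only illustrates the trend.

```python
def mean_aggregate(features, adj):
    """One parameter-free propagation step: average over self + neighbours."""
    out = []
    for node, x in enumerate(features):
        group = [x] + [features[m] for m in adj[node]]
        out.append(sum(group) / len(group))
    return out


def spread(features):
    """Max minus min: how distinguishable the node features still are."""
    return max(features) - min(features)


# Hypothetical undirected path graph 0-1-2-3 with scalar node features.
ADJ = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
x = [1.0, 0.0, 0.0, -1.0]
spreads = [spread(x)]
for _ in range(10):  # ten stacked propagation steps
    x = mean_aggregate(x, ADJ)
    spreads.append(spread(x))
print([round(s, 3) for s in spreads])
```

Each new feature is a convex combination of old ones, so the spread can never increase, and on a connected graph it shrinks toward zero as more layers are stacked: the node features become indistinguishable, which is the over-smoothing effect.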

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, C.; Zhang, S.; Zhang, P.; Alkubati, M.; Song, J. LB-GLAT: Long-Term Bi-Graph Layer Attention Convolutional Network for Anti-Money Laundering in Transactional Blockchain. Mathematics 2023, 11, 3927. https://doi.org/10.3390/math11183927
