Order-Restricted Inference for Generalized Inverted Exponential Distribution under Balanced Joint Progressive Type-II Censored Data and Its Application on the Breaking Strength of Jute Fibers

Abstract

1. Introduction

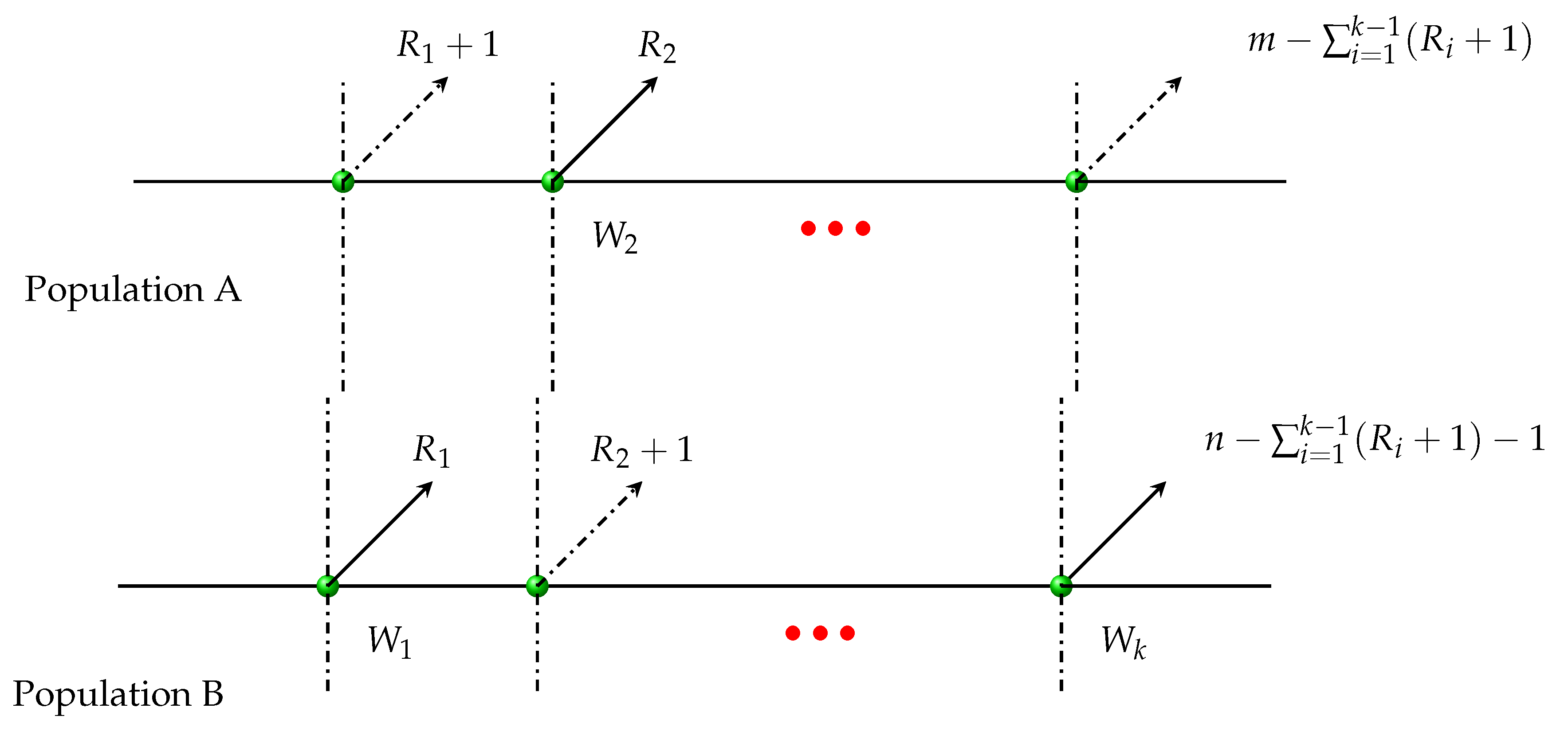

2. Notations, Model Description and Assumption

2.1. Notations

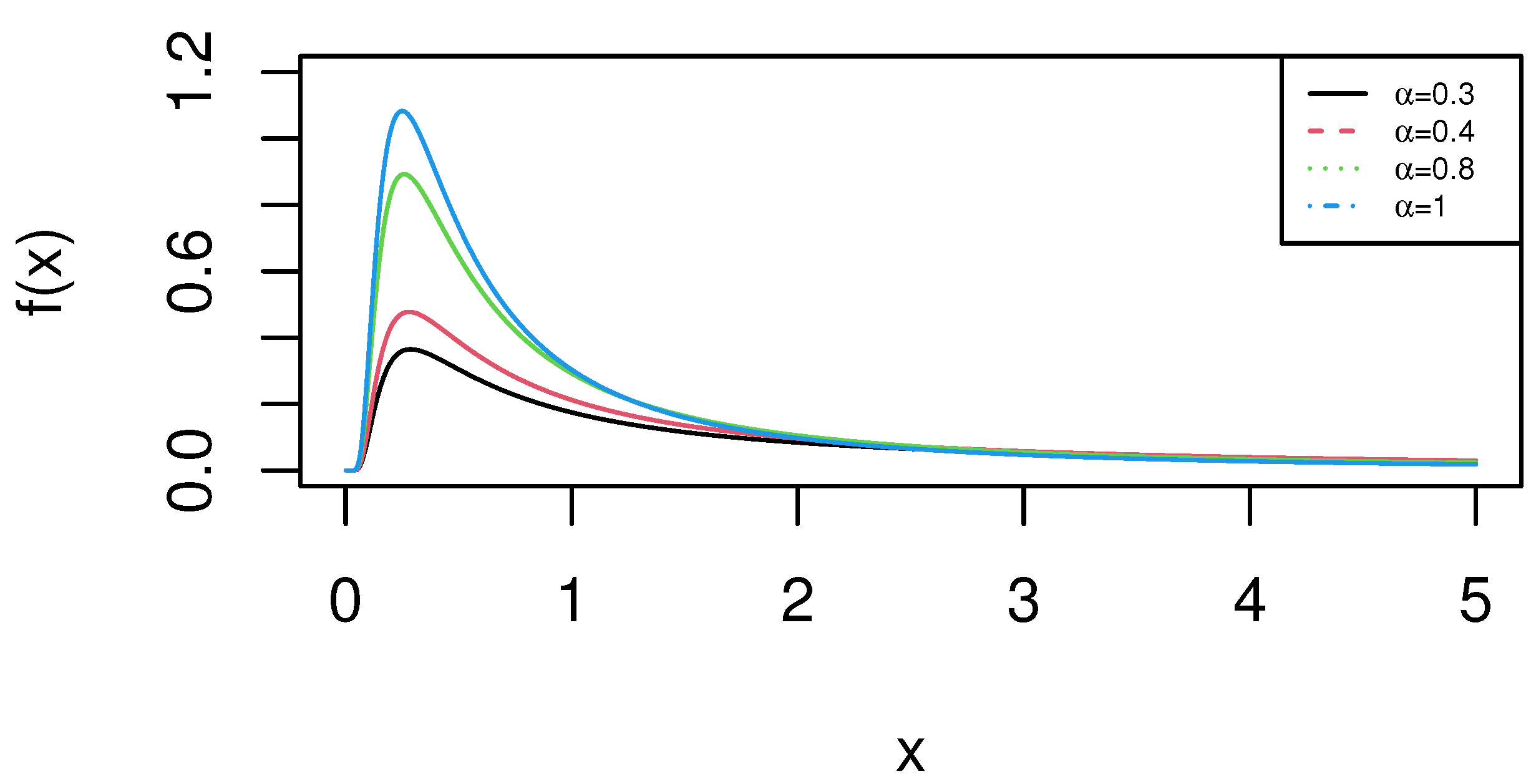

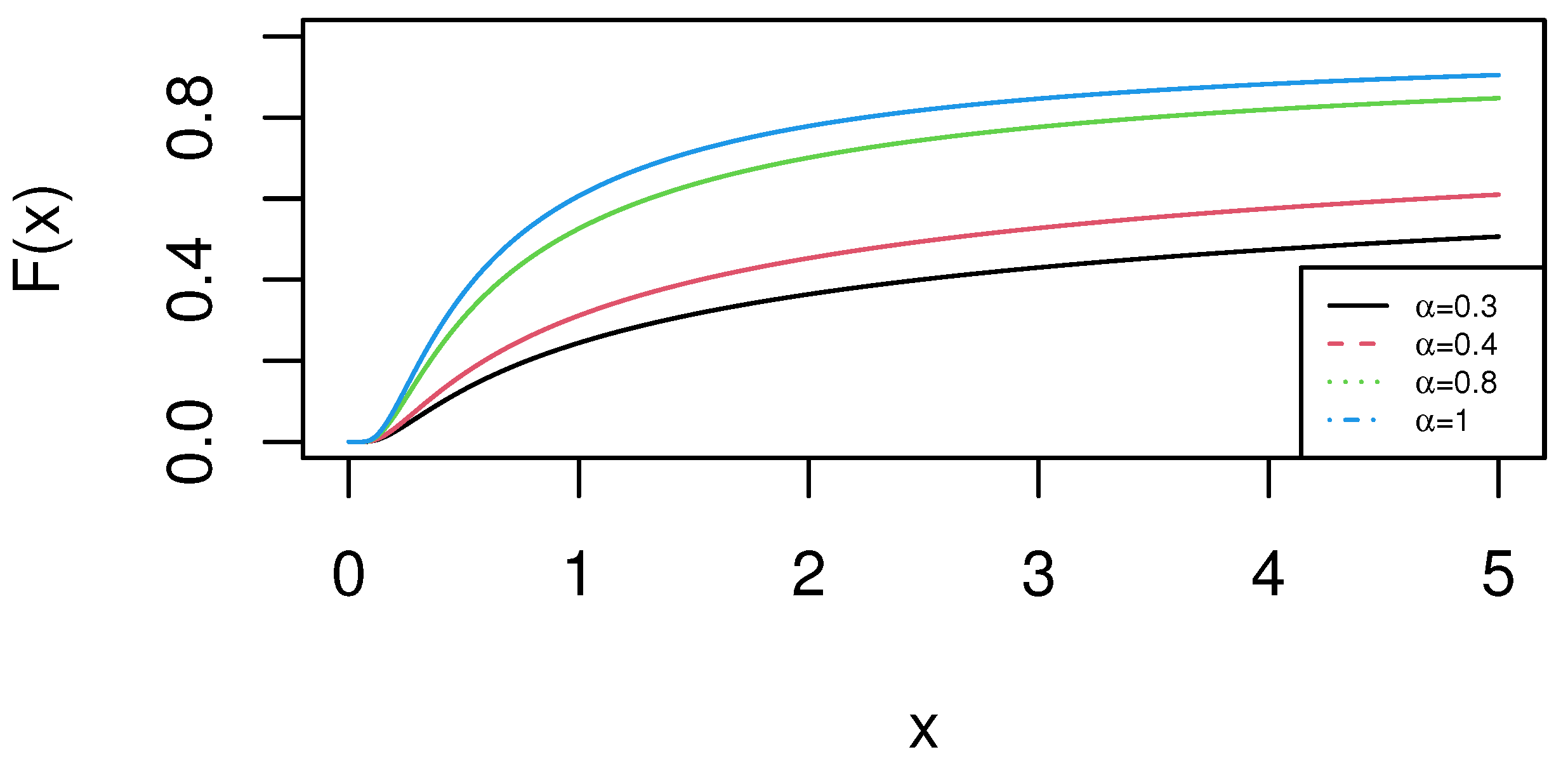

2.2. Model Description and Assumption

3. Maximum Likelihood Estimation

3.1. Point Estimation

Algorithm 1: Generate the B-JPC sample from GIED.

Step 1: Set the initial values of k, m, n, R, , and .

Step 2: Generate , ⋯, from GIED(, ) and sort them as , ⋯, .

Step 3: Generate , ⋯, from GIED(, ) and sort them as , ⋯, .

Step 4: Calculate = min(); if , set = 1, otherwise = 0.

Step 5: Calculate = min(). Similarly, if , set = 1, otherwise = 0 (i = 2, 3, ⋯, k), where .

Step 6: Then (), ⋯, () is the required B-JPC sample from GIED.
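The generation steps above can be illustrated with a short Python sketch. It assumes the usual GIED parameterization F(x; α, λ) = 1 − (1 − e^(−λ/x))^α with shape α and scale λ (Abouammoh and Alshingiti), draws lifetimes by inverting this CDF, and then simulates the two test lines directly rather than sorting. The withdrawal rule is an assumption made for illustration: at the i-th failure (i < k), R_i surviving units are removed at random from the line that produced the failure and R_i + 1 from the other line, and all survivors are withdrawn at the k-th failure. Any extra rule that the balanced scheme applies at the final stage is not reproduced. The function names are hypothetical.

```python
import numpy as np


def gied_cdf(x, alpha, lam):
    """GIED CDF F(x) = 1 - (1 - exp(-lam / x))**alpha for x > 0 (assumed parameterization)."""
    x = np.asarray(x, dtype=float)
    return 1.0 - (1.0 - np.exp(-lam / x)) ** alpha


def gied_rvs(size, alpha, lam, rng):
    """Inverse-CDF draws: solving F(x) = u gives x = -lam / log(1 - (1 - u)**(1/alpha))."""
    u = rng.uniform(size=size)
    return -lam / np.log(1.0 - (1.0 - u) ** (1.0 / alpha))


def joint_progressive_sample(m, n, R, alpha1, alpha2, lam, rng):
    """Hypothetical two-line joint progressive Type-II sampler (a sketch, not the paper's code).

    R = (R_1, ..., R_{k-1}). Returns failure times W_1 < ... < W_k and indicators
    Z_i = 1 if the i-th failure comes from line 1 (the X sample), else 0.
    ASSUMED rule: withdraw R_i units from the failing line and R_i + 1 from the other.
    """
    k = len(R) + 1
    if sum(r + 1 for r in R) >= min(m, n):
        raise ValueError("the scheme needs sum(R_i + 1) < min(m, n)")
    # Surviving lifetimes: index 0 -> X ~ GIED(alpha1, lam), index 1 -> Y ~ GIED(alpha2, lam)
    alive = [list(gied_rvs(m, alpha1, lam, rng)), list(gied_rvs(n, alpha2, lam, rng))]
    W, Z = [], []
    for i in range(k):
        firsts = [min(alive[0]), min(alive[1])]
        line = 0 if firsts[0] <= firsts[1] else 1  # line that fails next
        w = firsts[line]
        alive[line].remove(w)
        W.append(w)
        Z.append(1 if line == 0 else 0)
        if i < k - 1:
            # Randomly withdraw surviving units from both lines
            for ln, count in ((line, R[i]), (1 - line, R[i] + 1)):
                drop = set(rng.choice(len(alive[ln]), size=count, replace=False).tolist())
                alive[ln] = [t for j, t in enumerate(alive[ln]) if j not in drop]
    return np.array(W), np.array(Z)
```

For example, `joint_progressive_sample(30, 30, [9] + [0] * 8, 0.5, 0.3, 1.0, np.random.default_rng(1))` produces ten ordered failure times and their line indicators for (m, n, k) = (30, 30, 10) with a single early withdrawal; the parameter values are arbitrary. Simulating the withdrawals directly avoids having to derive the joint distribution of the censored order statistics.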

3.2. Asymptotic Confidence Interval

4. Bayesian Estimation

4.1. Without Order Restriction of Shape Parameters

4.1.1. Posterior Analysis: Scale Parameter Is Known

4.1.2. Posterior Analysis: Scale Parameter Is Not Known

Algorithm 2: Application of the importance sampling technique to Bayesian estimation.

Step 1: Given the observed data, generate from .

Step 2: For the given , generate and from .

Step 3: Repeat Steps 1 and 2 M times to obtain .

Step 4: To calculate the Bayesian estimates of , compute and . Here,

Step 5: The approximate Bayesian estimates of are given by
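Steps 4 and 5 of Algorithm 2 amount to a self-normalized importance-sampling average: each draw θ_j of the parameter vector receives a weight proportional to the part of the posterior that the sampling densities do not account for, and the posterior mean of a function g(θ) is approximated by the weighted average of g(θ_j). The sketch below assumes only that this weight function can be evaluated on the log scale at each draw (the argument `log_h` and all function names are hypothetical), and it subtracts the maximum log weight before exponentiating to avoid overflow. The toy check uses a Gamma(3, rate 2) proposal with weight h(θ) = θ, for which the reweighted target is Gamma(4, rate 2) with mean 2.

```python
import numpy as np


def importance_weights(log_h):
    """Normalized weights w_j = h_j / sum(h), computed stably from log h_j."""
    log_h = np.asarray(log_h, dtype=float)
    w = np.exp(log_h - log_h.max())  # subtract the max before exponentiating
    return w / w.sum()


def importance_estimate(g_values, log_h):
    """Self-normalized importance-sampling estimate of E[g(theta) | data]."""
    w = importance_weights(log_h)
    return float(np.sum(w * np.asarray(g_values, dtype=float)))


def effective_sample_size(log_h):
    """Kish effective sample size 1 / sum(w_j^2), a quick check for weight degeneracy."""
    w = importance_weights(log_h)
    return float(1.0 / np.sum(w ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = 200_000
    theta = rng.gamma(shape=3.0, scale=1 / 2.0, size=M)  # proposal draws (Steps 1 to 3)
    log_h = np.log(theta)  # toy weight function h(theta) = theta
    print(importance_estimate(theta, log_h))  # close to 2, the Gamma(4, rate 2) mean
    print(effective_sample_size(log_h) / M)  # roughly 0.75 for this toy example
```

When the effective sample size is a small fraction of M, the estimates rest on a few heavy draws and a larger M or a better-matched proposal is advisable.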

4.2. With Order Restriction of Shape Parameters

4.2.1. Posterior Analysis: Scale Parameter Is Known

4.2.2. Posterior Analysis: Scale Parameter Is Not Known

4.3. HPD Credible Interval

5. Simulation Study and Data Analysis

5.1. Simulation Study

5.2. Real Data Analysis

6. Optimum Censoring Scheme

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. When the Scale Parameter λ Is Known

Algorithm A1: Application of the importance sampling technique to Bayesian estimates and HPD credible intervals for known .

Step 1: Given the data, generate and from .

Step 2: Repeat Step 1 M times to obtain .

Step 3: To obtain the Bayesian estimates of , calculate and . Here, and .

Step 4: The approximate Bayesian estimate of is given by

Step 5: To obtain the CIs of and , arrange and

The HPD credible intervals of , for a significance level are constructed as , , where j satisfies

where is the integer part of y.
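Step 5 follows the Monte Carlo HPD construction of Chen and Shao. With importance weights, one common variant (assumed here; the exact index rule based on the integer part [y] is not reproduced) sorts the draws, carries their normalized weights along, and among all runs of consecutive ordered draws whose weights sum to at least 1 − γ selects the shortest. With equal weights this reduces, up to a one-draw difference in the span, to comparing θ_(j + [(1 − γ)M]) − θ_(j) over j. The function name `weighted_hpd` is hypothetical.

```python
import numpy as np


def weighted_hpd(draws, weights, gamma=0.05):
    """Shortest run of consecutive ordered draws holding >= 1 - gamma of the weight."""
    order = np.argsort(draws)
    x = np.asarray(draws, dtype=float)[order]
    w = np.asarray(weights, dtype=float)[order]
    w = w / w.sum()
    # cw[i] = total weight of the i smallest draws, so cw[e + 1] - cw[j] covers draws j..e
    cw = np.concatenate(([0.0], np.cumsum(w)))
    starts = np.arange(len(x))
    # For each start j, the smallest end e with cw[e + 1] - cw[j] >= 1 - gamma
    ends = np.searchsorted(cw, cw[:-1] + (1.0 - gamma), side="left") - 1
    valid = ends < len(x)  # starts too far right cannot accumulate 1 - gamma
    starts, ends = starts[valid], ends[valid]
    best = np.argmin(x[ends] - x[starts])
    return x[starts[best]], x[ends[best]]
```

For a skewed posterior the HPD interval hugs the mode: with equal-weight draws from a unit exponential, the 95% HPD interval starts near 0 and ends near ln 20 ≈ 3.0, whereas an equal-tailed interval would start near 0.025.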

Appendix A.2. When the Scale Parameter λ Is Not Known

Algorithm A2: Application of the importance sampling technique to Bayesian estimates and HPD credible intervals for unknown .

Step 1: Given the observed data, generate from .

Step 2: Given the generated , obtain and from .

Step 3: Repeat Steps 1 and 2 M times to obtain .

Step 4: To obtain the Bayesian estimate of , calculate and . Here, and .

Step 5: The approximate Bayesian estimate of is given by

Step 6: To obtain the CIs of all parameters , and , arrange and let be the ordered values of , , ⋯, . The HPD credible intervals of the parameters , and for are then given by , and , where j satisfies

where is the integer part of y.
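In Step 6 the same weighted draws serve every parameter. Besides the HPD interval, a 100(1 − γ)% equal-tailed credible interval can be read off the weighted empirical quantiles at γ/2 and 1 − γ/2. The sketch below is a companion construction assumed here rather than taken from the paper; it computes such intervals for several parameters that share one weight vector. The function names and the parameter keys ("alpha1", "alpha2", "lam") are hypothetical labels, and the gamma draws merely stand in for posterior samples.

```python
import numpy as np


def weighted_quantile(draws, weights, q):
    """Smallest ordered draw whose cumulative normalized weight reaches q."""
    x = np.asarray(draws, dtype=float)
    order = np.argsort(x)
    x = x[order]
    w = np.asarray(weights, dtype=float)[order]
    cw = np.cumsum(w) / w.sum()
    idx = np.searchsorted(cw, q, side="left")
    return x[min(idx, len(x) - 1)]  # guard against rounding when q is near 1


def equal_tailed_intervals(samples, weights, gamma=0.05):
    """Map each parameter name to its weighted (gamma/2, 1 - gamma/2) interval."""
    return {
        name: (weighted_quantile(d, weights, gamma / 2),
               weighted_quantile(d, weights, 1 - gamma / 2))
        for name, d in samples.items()
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = 50_000
    draws = {  # stand-in posterior draws for three parameters
        "alpha1": rng.gamma(2.0, 0.25, size=M),
        "alpha2": rng.gamma(3.0, 0.20, size=M),
        "lam": rng.gamma(4.0, 0.10, size=M),
    }
    weights = np.ones(M)  # equal weights give plain empirical quantiles
    for name, (lo, hi) in equal_tailed_intervals(draws, weights).items():
        print(f"{name}: ({lo:.3f}, {hi:.3f})")
```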


| Censoring Scheme | AV | MSE | Variance Estimate | AV | MSE | Variance Estimate | AV | MSE | Variance Estimate |

|---|---|---|---|---|---|---|---|---|---|

| k = 8, R = (2, ) | 0.573 | 0.040 | 0.035 | 0.272 | 0.070 | 0.053 | 0.572 | 0.218 | 0.166 |

| k = 8, R = (, 3, ) | 0.588 | 0.049 | 0.041 | 0.287 | 0.111 | 0.099 | 0.635 | 0.455 | 0.427 |

| k = 8, R = () | 0.580 | 0.044 | 0.038 | 0.285 | 0.072 | 0.059 | 0.596 | 0.404 | 0.362 |

| k = 8, R = (3, 2, ) | 0.612 | 0.057 | 0.044 | 0.303 | 0.084 | 0.075 | 0.702 | 0.433 | 0.423 |

| k = 8, R = (5, 4, ) | 0.658 | 0.071 | 0.046 | 0.373 | 0.131 | 0.130 | 0.904 | 0.562 | 0.551 |

| k = 8, R = (, 3, 4) | 0.610 | 0.055 | 0.043 | 0.314 | 0.108 | 0.101 | 0.675 | 0.360 | 0.345 |

| k = 10, R = (7, ) | 0.712 | 0.098 | 0.053 | 0.445 | 0.125 | 0.123 | 1.106 | 1.386 | 1.292 |

| k = 10, R = (, 2, ) | 0.667 | 0.072 | 0.044 | 0.353 | 0.066 | 0.064 | 0.790 | 0.362 | 0.361 |

| k = 10, R = (, ) | 0.663 | 0.073 | 0.046 | 0.352 | 0.078 | 0.076 | 0.792 | 0.441 | 0.440 |

| k = 10, R = (, ) | 0.714 | 0.098 | 0.051 | 0.426 | 0.113 | 0.112 | 1.018 | 0.583 | 0.536 |

| k = 10, R = (5, 3, ) | 0.719 | 0.097 | 0.049 | 0.435 | 0.132 | 0.130 | 1.055 | 0.639 | 0.574 |

| k = 10, R = (, ) | 0.757 | 0.143 | 0.077 | 0.483 | 0.126 | 0.119 | 0.424 | 0.132 | 0.117 |

| Censoring Scheme | AV | MSE | Variance Estimate | AV | MSE | Variance Estimate | AV | MSE | Variance Estimate |

|---|---|---|---|---|---|---|---|---|---|

| k = 8, R = (2, ) | 0.629 | 0.084 | 0.067 | 0.350 | 0.080 | 0.078 | 0.304 | 0.113 | 0.113 |

| k = 8, R = (, 3, ) | 0.657 | 0.098 | 0.073 | 0.377 | 0.083 | 0.083 | 0.318 | 0.073 | 0.072 |

| k = 8, R = () | 0.634 | 0.086 | 0.068 | 0.362 | 0.090 | 0.089 | 0.304 | 0.092 | 0.092 |

| k = 8, R = (3, 2, ) | 0.671 | 0.103 | 0.074 | 0.393 | 0.104 | 0.104 | 0.328 | 0.073 | 0.073 |

| k = 8, R = (5, 4, ) | 0.732 | 0.136 | 0.083 | 0.477 | 0.189 | 0.183 | 0.395 | 0.132 | 0.123 |

| k = 8, R = (, 3, 4) | 0.669 | 0.107 | 0.078 | 0.391 | 0.081 | 0.081 | 0.340 | 0.101 | 0.099 |

| k = 10, R = (7, ) | 0.810 | 0.190 | 0.094 | 0.592 | 0.226 | 0.189 | 0.511 | 0.243 | 0.198 |

| k = 10, R = (, 2, ) | 0.726 | 0.124 | 0.073 | 0.448 | 0.073 | 0.071 | 0.390 | 0.103 | 0.094 |

| k = 10, R = (, ) | 0.739 | 0.134 | 0.077 | 0.447 | 0.068 | 0.066 | 0.393 | 0.088 | 0.077 |

| k = 10, R = (, ) | 0.801 | 0.183 | 0.092 | 0.554 | 0.173 | 0.150 | 0.476 | 0.180 | 0.149 |

| k = 10, R = (5, 3, ) | 0.792 | 0.169 | 0.083 | 0.541 | 0.138 | 0.118 | 0.462 | 0.153 | 0.126 |

| k = 10, R = (, ) | 0.757 | 0.143 | 0.077 | 0.483 | 0.126 | 0.119 | 0.424 | 0.132 | 0.117 |

| Censoring Scheme | AL | CP | AL | CP | AL | CP |

|---|---|---|---|---|---|---|

| k = 8, R = (2, ) | 0.902 | 98.6% | 0.834 | 68.3% | 1.680 | 71.6% |

| k = 8, R = (, 3, ) | 0.905 | 98.7% | 0.873 | 72.8% | 1.705 | 71.4% |

| k = 8, R = () | 0.885 | 97.9% | 0.835 | 68.2% | 1.524 | 68.8% |

| k = 8, R = (3, 2, ) | 0.923 | 98.6% | 0.913 | 74.0% | 1.837 | 75.7% |

| k = 8, R = (5, 4, ) | 0.954 | 97.4% | 1.262 | 83.2% | 2.670 | 88.1% |

| k = 8, R = (, 3, 4) | 0.925 | 98.3% | 0.961 | 73.2% | 1.898 | 75.0% |

| k = 10, R = (7, ) | 0.937 | 96.2% | 1.205 | 86.1% | 2.746 | 92.6% |

| k = 10, R = (, 2, ) | 0.886 | 98.4% | 0.901 | 79.2% | 1.817 | 83.8% |

| k = 10, R = (, ) | 0.900 | 97.1% | 0.940 | 82.6% | 1.880 | 83.0% |

| k = 10, R = (, ) | 0.939 | 96.5% | 1.116 | 86.0% | 2.578 | 92.4% |

| k = 10, R = (5, 3, ) | 0.943 | 95.8% | 1.215 | 88.4% | 2.675 | 93.0% |

| k = 10, R = (, ) | 0.937 | 96.7% | 1.044 | 86.0% | 2.276 | 87.8% |

| Censoring Scheme | AL | CP | AL | CP | AL | CP |

|---|---|---|---|---|---|---|

| k = 8, R = (2, ) | 1.021 | 97.9% | 0.966 | 81.0% | 0.877 | 85.4% |

| k = 8, R = (, 3, ) | 1.029 | 98.0% | 0.948 | 83.3% | 0.872 | 85.4% |

| k = 8, R = () | 1.022 | 98.6% | 0.944 | 83.0% | 0.849 | 85.5% |

| k = 8, R = (3, 2, ) | 1.055 | 98.9% | 0.977 | 86.2% | 0.908 | 88.6% |

| k = 8, R = (5, 4, ) | 1.107 | 98.5% | 1.237 | 91.6% | 1.124 | 91.2% |

| k = 8, R = (, 3, 4) | 1.040 | 98.2% | 0.957 | 86.5% | 0.940 | 86.9% |

| k = 10, R = (7, ) | 1.082 | 94.9% | 1.322 | 96.8% | 1.314 | 96.0% |

| k = 10, R = (, 2, ) | 1.031 | 96.9% | 1.071 | 93.0% | 1.046 | 94.3% |

| k = 10, R = (, ) | 1.024 | 96.1% | 1.014 | 93.6% | 0.980 | 92.9% |

| k = 10, R = (, ) | 1.093 | 95.0% | 1.235 | 95.3% | 1.216 | 95.9% |

| k = 10, R = (5, 3, ) | 1.104 | 94.7% | 1.243 | 96.4% | 1.239 | 96.8% |

| k = 10, R = (, ) | 1.077 | 95.1% | 1.106 | 94.8% | 1.148 | 94.6% |

| | | Without Order Restriction | | | | With Order Restriction | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | IP | | NIP | | IP | | NIP | |
| Censoring Scheme | Parameter | BE | MSE | BE | MSE | BE | MSE | BE | MSE |
| k = 8, R = (2, ) | | 0.575 | 0.045 | 0.485 | 0.034 | 0.565 | 0.039 | 0.533 | 0.043 |
| | | 0.370 | 0.023 | 0.374 | 0.050 | 0.337 | 0.022 | 0.311 | 0.040 |
| | | 0.289 | 0.018 | 0.302 | 0.053 | 0.265 | 0.019 | 0.277 | 0.036 |
| k = 8, R = (, 3, ) | | 0.580 | 0.047 | 0.494 | 0.040 | 0.594 | 0.044 | 0.541 | 0.038 |
| | | 0.378 | 0.027 | 0.387 | 0.056 | 0.356 | 0.023 | 0.324 | 0.043 |
| | | 0.298 | 0.019 | 0.300 | 0.059 | 0.286 | 0.018 | 0.279 | 0.042 |
| k = 8, R = () | | 0.576 | 0.044 | 0.494 | 0.039 | 0.595 | 0.049 | 0.530 | 0.045 |
| | | 0.386 | 0.024 | 0.377 | 0.054 | 0.349 | 0.027 | 0.324 | 0.046 |
| | | 0.303 | 0.020 | 0.313 | 0.056 | 0.286 | 0.018 | 0.276 | 0.036 |
| k = 8, R = (3, 2, ) | | 0.565 | 0.045 | 0.518 | 0.040 | 0.617 | 0.056 | 0.536 | 0.037 |
| | | 0.377 | 0.030 | 0.410 | 0.104 | 0.365 | 0.020 | 0.355 | 0.043 |
| | | 0.290 | 0.020 | 0.318 | 0.051 | 0.280 | 0.016 | 0.283 | 0.036 |
| k = 8, R = (5, 4, ) | | 0.631 | 0.071 | 0.539 | 0.038 | 0.653 | 0.070 | 0.604 | 0.058 |
| | | 0.417 | 0.027 | 0.433 | 0.065 | 0.407 | 0.029 | 0.424 | 0.077 |
| | | 0.337 | 0.029 | 0.339 | 0.084 | 0.305 | 0.016 | 0.347 | 0.065 |
| k = 8, R = (, 3, 4) | | 0.576 | 0.058 | 0.518 | 0.037 | 0.600 | 0.053 | 0.534 | 0.039 |
| | | 0.389 | 0.023 | 0.368 | 0.048 | 0.354 | 0.022 | 0.337 | 0.041 |
| | | 0.306 | 0.024 | 0.333 | 0.071 | 0.299 | 0.024 | 0.290 | 0.052 |
| k = 10, R = (7, ) | | 0.666 | 0.071 | 0.673 | 0.085 | 0.699 | 0.084 | 0.722 | 0.106 |
| | | 0.487 | 0.035 | 0.559 | 0.116 | 0.467 | 0.030 | 0.527 | 0.087 |
| | | 0.403 | 0.044 | 0.370 | 0.071 | 0.385 | 0.033 | 0.452 | 0.118 |
| k = 10, R = (, 2, ) | | 0.641 | 0.056 | 0.629 | 0.064 | 0.658 | 0.064 | 0.642 | 0.072 |
| | | 0.419 | 0.022 | 0.466 | 0.068 | 0.408 | 0.021 | 0.397 | 0.033 |
| | | 0.337 | 0.018 | 0.370 | 0.071 | 0.320 | 0.018 | 0.362 | 0.086 |
| k = 10, R = (, ) | | 0.649 | 0.069 | 0.597 | 0.053 | 0.662 | 0.072 | 0.648 | 0.073 |
| | | 0.443 | 0.028 | 0.452 | 0.062 | 0.413 | 0.026 | 0.432 | 0.060 |
| | | 0.345 | 0.024 | 0.409 | 0.096 | 0.332 | 0.025 | 0.386 | 0.061 |
| k = 10, R = (, ) | | 0.683 | 0.075 | 0.669 | 0.083 | 0.680 | 0.072 | 0.707 | 0.098 |
| | | 0.472 | 0.047 | 0.492 | 0.084 | 0.449 | 0.026 | 0.507 | 0.086 |
| | | 0.372 | 0.387 | 0.427 | 0.095 | 0.354 | 0.026 | 0.431 | 0.088 |
| k = 10, R = (5, 3, ) | | 0.705 | 0.096 | 0.688 | 0.096 | 0.679 | 0.096 | 0.710 | 0.102 |
| | | 0.465 | 0.037 | 0.567 | 0.131 | 0.455 | 0.032 | 0.489 | 0.099 |
| | | 0.387 | 0.038 | 0.450 | 0.129 | 0.377 | 0.032 | 0.452 | 0.127 |
| k = 10, R = (, ) | | 0.674 | 0.082 | 0.629 | 0.064 | 0.670 | 0.070 | 0.674 | 0.090 |
| | | 0.457 | 0.036 | 0.501 | 0.084 | 0.416 | 0.019 | 0.469 | 0.067 |
| | | 0.369 | 0.034 | 0.415 | 0.091 | 0.353 | 0.021 | 0.408 | 0.102 |

| | | Without Order Restriction | | | | With Order Restriction | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | IP | | NIP | | IP | | NIP | |
| Censoring Scheme | Parameter | BE | MSE | BE | MSE | BE | MSE | BE | MSE |
| k = 8, R = (2, ) | | 0.480 | 0.026 | 0.437 | 0.028 | 0.527 | 0.027 | 0.464 | 0.025 |
| | | 0.322 | 0.059 | 0.253 | 0.078 | 0.275 | 0.044 | 0.253 | 0.056 |
| | | 0.604 | 0.122 | 0.571 | 0.222 | 0.543 | 0.118 | 0.428 | 0.201 |
| k = 8, R = (, 3, ) | | 0.500 | 0.021 | 0.433 | 0.028 | 0.525 | 0.021 | 0.471 | 0.021 |
| | | 0.324 | 0.054 | 0.235 | 0.059 | 0.305 | 0.039 | 0.263 | 0.068 |
| | | 0.646 | 0.145 | 0.588 | 0.137 | 0.579 | 0.117 | 0.507 | 0.185 |
| k = 8, R = () | | 0.503 | 0.025 | 0.413 | 0.032 | 0.520 | 0.030 | 0.455 | 0.024 |
| | | 0.320 | 0.049 | 0.257 | 0.081 | 0.283 | 0.040 | 0.232 | 0.070 |
| | | 0.640 | 0.127 | 0.524 | 0.193 | 0.573 | 0.191 | 0.461 | 0.199 |
| k = 8, R = (3, 2, ) | | 0.513 | 0.027 | 0.446 | 0.023 | 0.525 | 0.020 | 0.490 | 0.030 |
| | | 0.310 | 0.052 | 0.243 | 0.065 | 0.291 | 0.038 | 0.290 | 0.078 |
| | | 0.643 | 0.123 | 0.615 | 0.195 | 0.579 | 0.117 | 0.550 | 0.352 |
| k = 8, R = (5, 4, ) | | 0.548 | 0.029 | 0.487 | 0.025 | 0.565 | 0.029 | 0.531 | 0.032 |
| | | 0.342 | 0.050 | 0.277 | 0.098 | 0.325 | 0.046 | 0.298 | 0.069 |
| | | 0.791 | 0.166 | 0.829 | 0.399 | 0.697 | 0.089 | 0.665 | 0.205 |
| k = 8, R = (, 3, 4) | | 0.506 | 0.027 | 0.452 | 0.027 | 0.537 | 0.025 | 0.475 | 0.026 |
| | | 0.331 | 0.052 | 0.262 | 0.072 | 0.275 | 0.042 | 0.254 | 0.061 |
| | | 0.641 | 0.134 | 0.615 | 0.175 | 0.591 | 0.099 | 0.529 | 0.198 |
| k = 10, R = (7, ) | | 0.604 | 0.036 | 0.554 | 0.030 | 0.613 | 0.042 | 0.566 | 0.029 |
| | | 0.428 | 0.078 | 0.353 | 0.101 | 0.400 | 0.047 | 0.356 | 0.064 |
| | | 0.903 | 0.139 | 0.937 | 0.323 | 0.832 | 0.086 | 0.768 | 0.151 |
| k = 10, R = (, 2, ) | | 0.582 | 0.038 | 0.521 | 0.024 | 0.570 | 0.032 | 0.549 | 0.025 |
| | | 0.371 | 0.047 | 0.310 | 0.061 | 0.344 | 0.032 | 0.330 | 0.050 |
| | | 0.755 | 0.101 | 0.711 | 0.157 | 0.628 | 0.088 | 0.611 | 0.145 |
| k = 10, R = (, ) | | 0.583 | 0.036 | 0.536 | 0.030 | 0.598 | 0.036 | 0.551 | 0.032 |
| | | 0.331 | 0.039 | 0.324 | 0.063 | 0.339 | 0.037 | 0.308 | 0.050 |
| | | 0.777 | 0.121 | 0.725 | 0.186 | 0.653 | 0.082 | 0.621 | 0.162 |
| k = 10, R = (, ) | | 0.626 | 0.045 | 0.585 | 0.038 | 0.605 | 0.037 | 0.589 | 0.039 |
| | | 0.398 | 0.060 | 0.352 | 0.079 | 0.385 | 0.042 | 0.366 | 0.062 |
| | | 0.916 | 0.202 | 0.951 | 0.351 | 0.777 | 0.092 | 0.792 | 0.206 |
| k = 10, R = (5, 3, ) | | 0.595 | 0.031 | 0.569 | 0.033 | 0.630 | 0.050 | 0.600 | 0.046 |
| | | 0.388 | 0.047 | 0.337 | 0.073 | 0.388 | 0.045 | 0.353 | 0.053 |
| | | 0.873 | 0.168 | 0.890 | 0.241 | 0.803 | 0.101 | 0.804 | 0.188 |
| k = 10, R = (, ) | | 0.582 | 0.032 | 0.556 | 0.031 | 0.602 | 0.035 | 0.595 | 0.046 |
| | | 0.369 | 0.040 | 0.343 | 0.074 | 0.363 | 0.044 | 0.348 | 0.068 |
| | | 0.767 | 0.112 | 0.794 | 0.178 | 0.709 | 0.084 | 0.696 | 0.169 |

| | | Without Order Restriction | | | | With Order Restriction | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | IP | | NIP | | IP | | NIP | |
| Censoring Scheme | Parameter | AL | CP | AL | CP | AL | CP | AL | CP |
| k = 8, R = (2, ) | | 0.382 | 77.6% | 0.370 | 70.0% | 0.453 | 88.6% | 0.448 | 80.1% |
| | | 0.437 | 61.6% | 0.351 | 42.8% | 0.483 | 68.7% | 0.435 | 56.7% |
| | | 0.806 | 65.3% | 0.802 | 61.0% | 0.771 | 57.9% | 0.750 | 52.0% |
| k = 8, R = (, 3, ) | | 0.394 | 76.9% | 0.345 | 63.0% | 0.469 | 90.3% | 0.470 | 85.1% |
| | | 0.445 | 62.4% | 0.373 | 46.4% | 0.493 | 67.4% | 0.470 | 62.7% |
| | | 0.785 | 61.0% | 0.726 | 52.2% | 0.846 | 67.1% | 0.862 | 61.6% |
| k = 8, R = () | | 0.361 | 74.2% | 0.358 | 62.3% | 0.477 | 91.2% | 0.448 | 76.8% |
| | | 0.415 | 54.6% | 0.363 | 45.0% | 0.496 | 70.3% | 0.433 | 57.9% |
| | | 0.712 | 58.4% | 0.782 | 59.2% | 0.790 | 64.3% | 0.781 | 56.5% |
| k = 8, R = (3, 2, ) | | 0.401 | 80.2% | 0.394 | 74.1% | 0.478 | 87.7% | 0.484 | 84.3% |
| | | 0.465 | 60.8% | 0.364 | 44.6% | 0.524 | 73.0% | 0.509 | 61.7% |
| | | 0.861 | 70.0% | 0.873 | 63.3% | 0.836 | 65.3% | 0.858 | 60.2% |
| k = 8, R = (5, 4, ) | | 0.434 | 84.5% | 0.429 | 80.0% | 0.509 | 92.3% | 0.489 | 88.2% |
| | | 0.521 | 73.6% | 0.492 | 53.9% | 0.608 | 75.5% | 0.606 | 71.5% |
| | | 1.025 | 81.4% | 1.143 | 71.5% | 0.995 | 85.2% | 1.048 | 72.3% |
| k = 8, R = (, 3, 4) | | 0.397 | 80.4% | 0.373 | 71.1% | 0.468 | 86.2% | 0.475 | 82.2% |
| | | 0.464 | 67.7% | 0.394 | 48.8% | 0.521 | 71.5% | 0.513 | 65.2% |
| | | 0.878 | 70.0% | 0.831 | 59.8% | 0.815 | 66.1% | 0.913 | 61.1% |
| k = 10, R = (7, ) | | 0.481 | 87.3% | 0.465 | 90.3% | 0.506 | 90.3% | 0.488 | 86.4% |
| | | 0.663 | 84.0% | 0.605 | 69.5% | 0.652 | 87.6% | 0.697 | 79.7% |
| | | 1.250 | 89.0% | 1.438 | 86.6% | 1.083 | 92.6% | 1.180 | 85.3% |
| k = 10, R = (, 2, ) | | 0.463 | 88.6% | 0.417 | 80.6% | 0.500 | 88.0% | 0.509 | 87.2% |
| | | 0.527 | 76.5% | 0.454 | 59.9% | 0.534 | 82.9% | 0.578 | 69.3% |
| | | 0.971 | 79.5% | 0.977 | 70.1% | 0.886 | 78.6% | 0.965 | 75.2% |
| k = 10, R = (, ) | | 0.442 | 89.9% | 0.429 | 81.2% | 0.489 | 88.6% | 0.498 | 92.0% |
| | | 0.510 | 73.7% | 0.505 | 60.7% | 0.543 | 77.8% | 0.546 | 74.7% |
| | | 0.928 | 77.4% | 0.900 | 66.8% | 0.884 | 82.2% | 0.944 | 77.4% |
| k = 10, R = (, ) | | 0.475 | 87.3% | 0.463 | 86.9% | 0.505 | 95.0% | 0.514 | 90.1% |
| | | 0.618 | 78.6% | 0.618 | 67.8% | 0.606 | 84.6% | 0.696 | 79.5% |
| | | 1.186 | 88.0% | 1.318 | 81.9% | 0.986 | 89.3% | 1.154 | 83.7% |
| k = 10, R = (5, 3, ) | | 0.472 | 89.0% | 0.457 | 88.1% | 0.490 | 89.0% | 0.515 | 92.0% |
| | | 0.578 | 83.6% | 0.576 | 71.5% | 0.619 | 81.6% | 0.632 | 78.5% |
| | | 1.149 | 86.0% | 1.398 | 85.4% | 1.009 | 84.9% | 1.137 | 86.5% |
| k = 10, R = (, ) | | 0.463 | 88.6% | 0.443 | 82.2% | 0.502 | 89.3% | 0.511 | 91.0% |
| | | 0.535 | 79.1% | 0.526 | 67.4% | 0.601 | 84.9% | 0.603 | 74.3% |
| | | 0.980 | 80.5% | 1.138 | 76.5% | 0.941 | 80.9% | 1.013 | 76.7% |

| | | Without Order Restriction | | | | With Order Restriction | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | IP | | NIP | | IP | | NIP | |
| Censoring Scheme | Parameter | AL | CP | AL | CP | AL | CP | AL | CP |
| k = 8, R = (2, ) | | 0.493 | 78.3% | 0.397 | 69.7% | 0.623 | 90.7% | 0.545 | 87.9% |
| | | 0.470 | 78.3% | 0.458 | 66.2% | 0.515 | 87.7% | 0.543 | 72.1% |
| | | 0.414 | 80.7% | 0.397 | 65.5% | 0.450 | 86.3% | 0.507 | 78.3% |
| k = 8, R = (, 3, ) | | 0.503 | 82.2% | 0.406 | 71.5% | 0.611 | 89.6% | 0.567 | 85.6% |
| | | 0.467 | 84.6% | 0.465 | 64.9% | 0.520 | 86.6% | 0.566 | 77.5% |
| | | 0.413 | 81.9% | 0.407 | 64.9% | 0.467 | 90.0% | 0.501 | 78.2% |
| k = 8, R = () | | 0.500 | 84.2% | 0.394 | 70.8% | 0.621 | 90.3% | 0.557 | 86.2% |
| | | 0.474 | 84.5% | 0.433 | 72.2% | 0.517 | 86.3% | 0.520 | 77.3% |
| | | 0.411 | 85.2% | 0.392 | 65.6% | 0.450 | 85.3% | 0.459 | 70.9% |
| k = 8, R = (3, 2, ) | | 0.541 | 84.9% | 0.459 | 75.4% | 0.654 | 92.0% | 0.572 | 90.5% |
| | | 0.515 | 83.2% | 0.542 | 72.7% | 0.569 | 87.3% | 0.561 | 77.5% |
| | | 0.442 | 85.3% | 0.449 | 68.7% | 0.472 | 90.3% | 0.512 | 79.6% |
| k = 8, R = (5, 4, ) | | 0.583 | 89.0% | 0.513 | 76.0% | 0.687 | 93.0% | 0.635 | 91.2% |
| | | 0.574 | 92.0% | 0.659 | 77.0% | 0.594 | 92.0% | 0.693 | 82.5% |
| | | 0.489 | 90.7% | 0.564 | 66.9% | 0.555 | 92.0% | 0.660 | 89.5% |
| k = 8, R = (, 3, 4) | | 0.583 | 85.7% | 0.417 | 75.6% | 0.614 | 90.6% | 0.578 | 87.1% |
| | | 0.500 | 85.3% | 0.472 | 71.8% | 0.539 | 87.3% | 0.578 | 80.0% |
| | | 0.455 | 84.0% | 0.456 | 69.3% | 0.482 | 88.6% | 0.546 | 80.7% |
| k = 10, R = (7, ) | | 0.666 | 86.7% | 0.575 | 78.3% | 0.718 | 84.3% | 0.652 | 80.8% |
| | | 0.647 | 92.3% | 0.797 | 86.3% | 0.698 | 96.7% | 0.826 | 89.9% |
| | | 0.605 | 93.0% | 0.758 | 79.0% | 0.633 | 94.0% | 0.808 | 84.2% |
| k = 10, R = (, 2, ) | | 0.566 | 83.6% | 0.527 | 81.5% | 0.660 | 91.3% | 0.623 | 87.6% |
| | | 0.537 | 93.3% | 0.605 | 81.5% | 0.580 | 94.0% | 0.663 | 87.0% |
| | | 0.490 | 93.3% | 0.563 | 74.0% | 0.539 | 96.3% | 0.612 | 89.3% |
| k = 10, R = (, ) | | 0.576 | 81.3% | 0.500 | 80.0% | 0.676 | 86.7% | 0.635 | 86.2% |
| | | 0.521 | 90.0% | 0.614 | 79.3% | 0.577 | 97.0% | 0.661 | 88.2% |
| | | 0.494 | 89.0% | 0.569 | 72.3% | 0.523 | 93.7% | 0.650 | 87.6% |
| k = 10, R = (, ) | | 0.645 | 85.3% | 0.547 | 79.9% | 0.716 | 86.7% | 0.683 | 81.8% |
| | | 0.611 | 95.0% | 0.711 | 84.3% | 0.665 | 96.7% | 0.767 | 89.9% |
| | | 0.583 | 90.0% | 0.671 | 74.6% | 0.607 | 95.3% | 0.735 | 87.5% |
| k = 10, R = (5, 3, ) | | 0.642 | 80.7% | 0.556 | 80.8% | 0.714 | 88.0% | 0.682 | 81.5% |
| | | 0.653 | 93.7% | 0.698 | 83.8% | 0.660 | 97.7% | 0.736 | 91.6% |
| | | 0.573 | 91.0% | 0.629 | 82.1% | 0.619 | 94.3% | 0.728 | 89.9% |
| k = 10, R = (, ) | | 0.581 | 85.6% | 0.513 | 80.4% | 0.666 | 89.0% | 0.648 | 83.1% |
| | | 0.563 | 91.9% | 0.628 | 84.5% | 0.584 | 94.3% | 0.699 | 87.5% |
| | | 0.523 | 90.6% | 0.627 | 73.3% | 0.563 | 94.0% | 0.705 | 88.1% |

| Dataset | MLEs with Complete Samples | | K-S Distance | p Value |
|---|---|---|---|---|
| Dataset 1 | 1.353 | 0.188 | 0.162 | 0.367 |
| Dataset 2 | 1.841 | 0.293 | 0.141 | 0.536 |
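The K-S distance in the table above measures the largest gap between the empirical CDF of each dataset and the GIED CDF evaluated at the complete-sample MLEs. A minimal sketch of that statistic follows, assuming the parameterization F(x) = 1 − (1 − e^(−λ/x))^α; `ks_distance` is a hypothetical helper, and a p value could be obtained from a library routine such as SciPy's `scipy.stats.kstest`, which accepts a callable CDF. The jute-fiber observations are not reproduced here, so the example uses synthetic GIED data.

```python
import numpy as np


def gied_cdf(x, alpha, lam):
    """GIED CDF F(x) = 1 - (1 - exp(-lam / x))**alpha for x > 0 (assumed parameterization)."""
    x = np.asarray(x, dtype=float)
    return 1.0 - (1.0 - np.exp(-lam / x)) ** alpha


def ks_distance(data, cdf):
    """Kolmogorov-Smirnov distance sup_x |F_n(x) - F(x)| for a fully specified CDF."""
    x = np.sort(np.asarray(data, dtype=float))
    n = len(x)
    F = cdf(x)
    i = np.arange(1, n + 1)
    d_plus = np.max(i / n - F)  # empirical CDF above the model CDF
    d_minus = np.max(F - (i - 1) / n)  # model CDF above the empirical CDF
    return float(max(d_plus, d_minus))


if __name__ == "__main__":
    # Synthetic stand-in data drawn from GIED(alpha=1.5, lam=0.2) by inverse CDF
    rng = np.random.default_rng(0)
    u = rng.uniform(size=100)
    data = -0.2 / np.log(1.0 - (1.0 - u) ** (1.0 / 1.5))
    print(ks_distance(data, lambda t: gied_cdf(t, 1.5, 0.2)))
```

Because the parameters are estimated from the same data, the nominal K-S p value is somewhat conservative; this caveat applies to any plug-in K-S check.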

| (m, n, k) | Scheme | Balanced Joint Progressive Type-II Censored Samples |

|---|---|---|

| (30, 30, 10) | R = (9, ) | 36.75, 145.96, 187.13, 200.16, 284.64, 375.81, 422.11, 530.55, 585.57, 662.66 |

| | R = (, 3, ) | 36.75, 48.01, 99.72, 119.86, 187.13, 244.53, 383.43, 530.55, 594.29, 704.66 |

| | R = (6, 7, ) | 36.75, 113.85, 244.53, 350.70, 383.43, 506.60, 581.60, 594.29, 688.16, 727.23 |

| Censoring Scheme | R = (9, ) | R = (, 3, ) | R = (6, 7, ) |
|---|---|---|---|
| | 0.4743 (0.0145, 0.9341) | 0.2962 (0.0126, 0.5799) | 0.4613 (−0.0046, 0.9271) |
| | 0.1186 (−0.0644, 0.3015) | 0.1270 (−0.0360, 0.2900) | 0.1977 (−0.0639, 0.4593) |
| | 0.2111 (0.0429, 0.3794) | 0.1389 (0.0302, 0.2477) | 0.2362 (0.0584, 0.4141) |
| | 0.1070 (0.0144, 0.2559) | 0.1957 (0.1049, 0.4601) | 0.2104 (0.1029, 0.5959) |
| | 0.1670 (0.1122, 0.3040) | 0.1395 (0.1041, 0.1705) | 0.2300 (0.0976, 0.2960) |
| | 0.3970 (0.2175, 0.7283) | 0.7115 (0.3430, 0.9272) | 0.6056 (0.3183, 0.8765) |
| | 0.1189 (0.0031, 0.3083) | 0.1198 (0.0386, 0.2851) | 0.1785 (0.0225, 0.4332) |
| | 0.1980 (0.0721, 0.3211) | 0.1223 (0.0573, 0.1819) | 0.2077 (0.0586, 0.3309) |
| | 0.4587 (0.1031, 0.9138) | 0.2704 (0.0359, 0.4962) | 0.4088 (0.1311, 0.7794) |

| Censoring Scheme | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 | Criterion 5 |

|---|---|---|---|---|---|

| R = (9, ) | 1.456742 × | 0.07111649 | 0.05996095 | 475.8505 | 0.1896620 |

| R = (, 3, ) | 2.072309 × 10 | 0.02329576 | 0.05948553 | 902.4102 | 0.1402625 |

| R = (6, 7, ) | 3.260692 × | 0.08255037 | 0.06397661 | 380.0584 | 0.1001845 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Cong, T.; Gui, W. Order-Restricted Inference for Generalized Inverted Exponential Distribution under Balanced Joint Progressive Type-II Censored Data and Its Application on the Breaking Strength of Jute Fibers. Mathematics 2023, 11, 329. https://doi.org/10.3390/math11020329
