Sequence Prediction and Classification of Echo State Networks

Abstract

1. Introduction

2. Related Work

2.1. Echo State Network

2.2. Deep Echo State Network

3. Methods

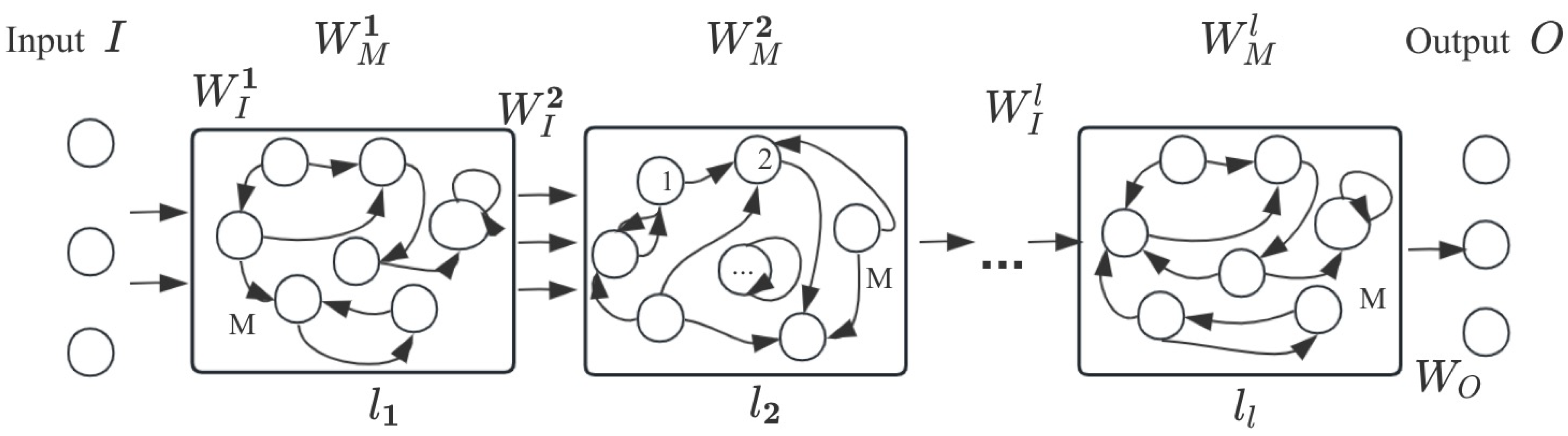

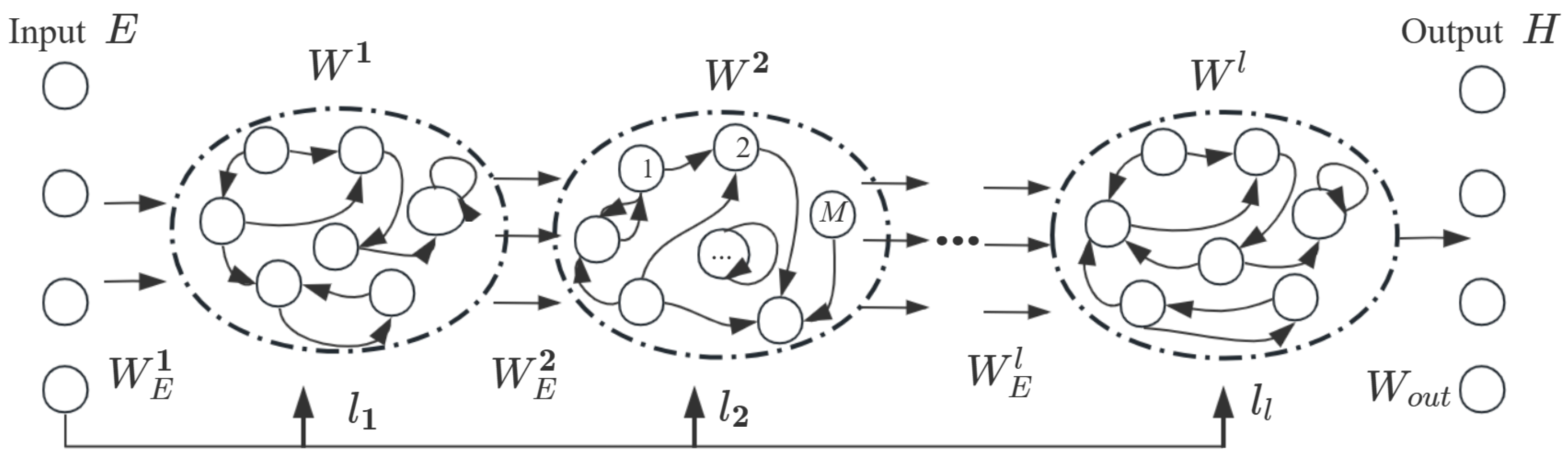

3.1. Building a Multireservoir Echo State Network

3.2. Optimization of the Output Weight

4. Experiments

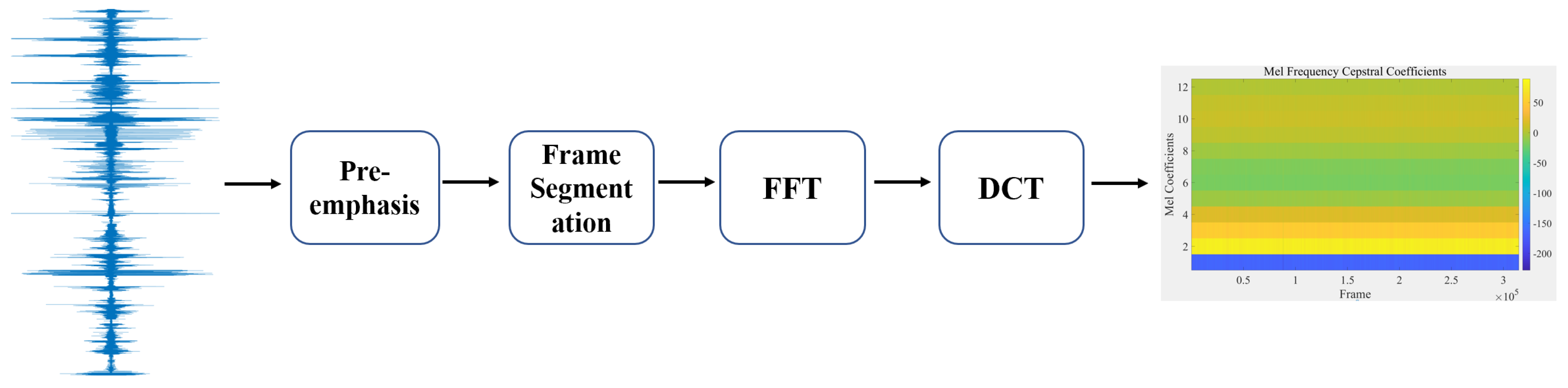

4.1. Evaluation and Data Preparation

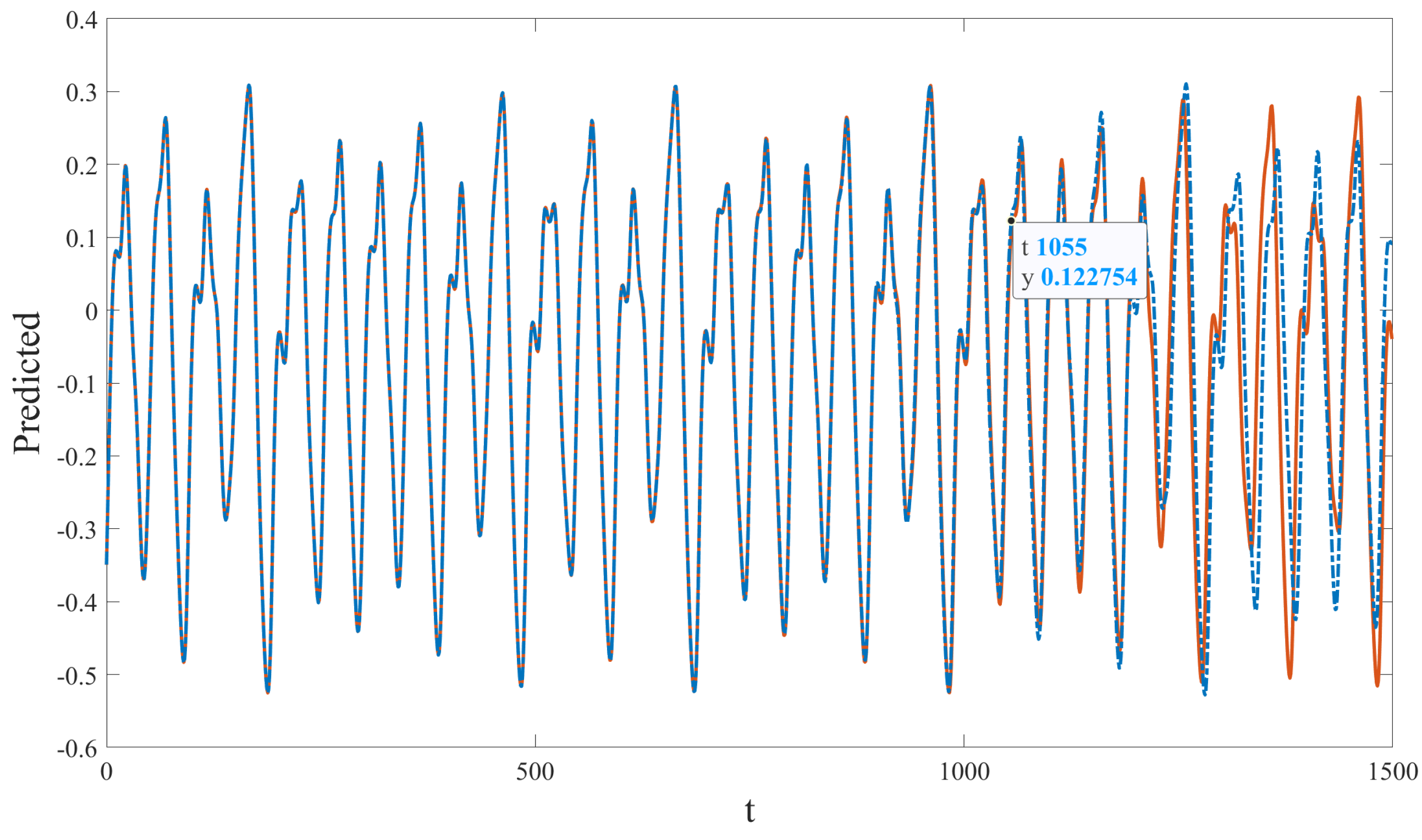

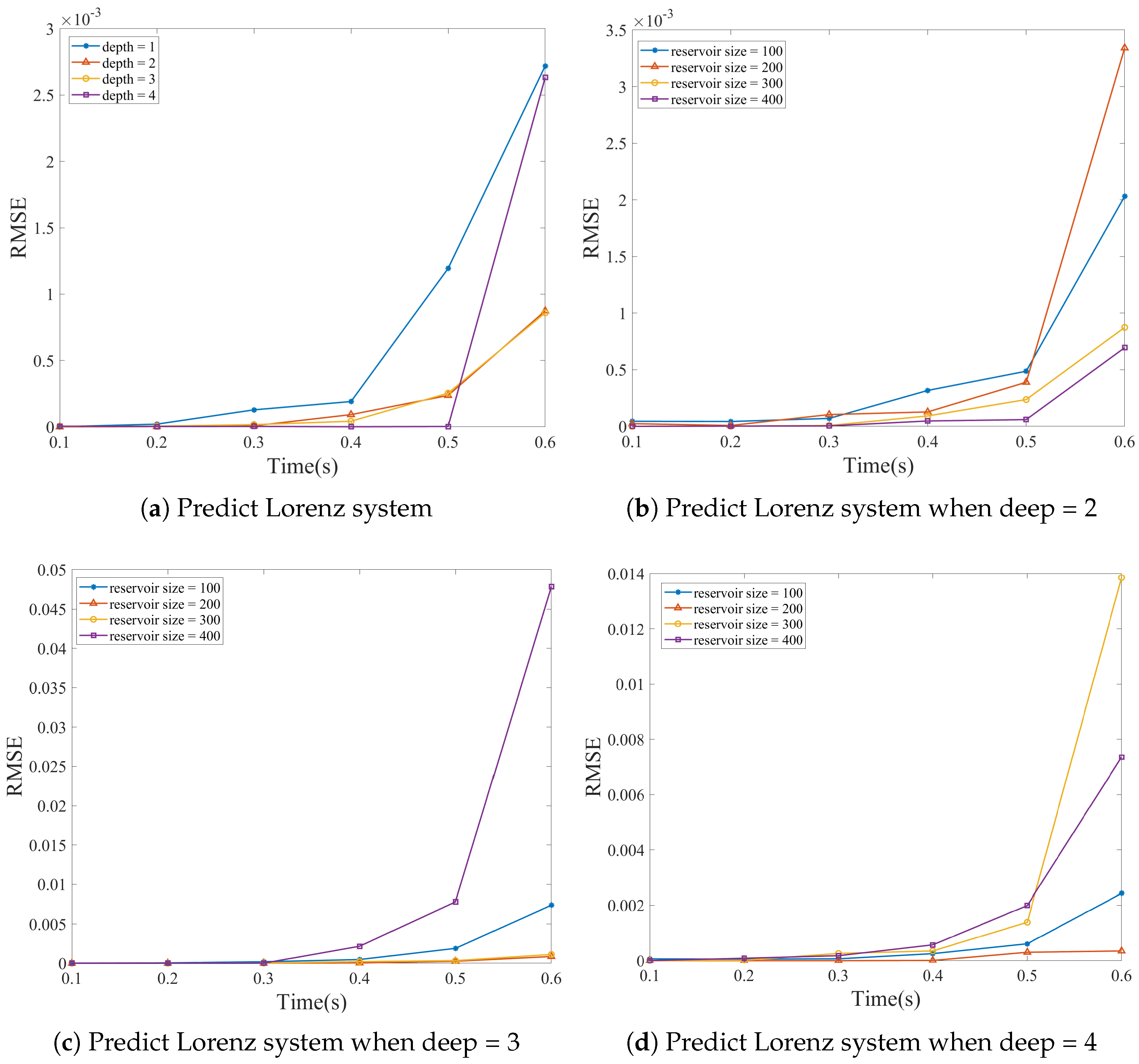

4.2. Results and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Size | 100 | 150 | 200 | 250 | 300 | 350 | 400 | 450 | 500 | 550 | 600 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Layer = 2 | 204 | 550 | 305 | 400 | 400 | 353 | 574 | 518 | 510 | 256 | 400 |
| Layer = 3 | 716 | 217 | 398 | 399 | 600 | 1288 | 511 | 600 | 896 | 357 | 193 |
| Layer = 4 | 560 | 896 | 516 | 230 | 398 | 696 | 1307 | 400 | 397 | 496 | 594 |
| Layer = 5 | 397 | 303 | 400 | 598 | 304 | 694 | 263 | 856 | 34 | 5 | 6 |

| Size | 100 | 150 | 200 | 250 | 300 | 350 | 400 | 450 | 500 | 550 | 600 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Layer = 2 | 730 | 880 | 795 | 990 | 940 | 810 | 950 | 970 | 900 | 890 | 850 |
| Layer = 3 | 740 | 850 | 1010 | 950 | 910 | 950 | 500 | 570 | 650 | 1100 | 750 |
| Layer = 4 | 440 | 350 | 670 | 750 | 760 | 670 | 620 | 710 | 1030 | 670 | 700 |
| Layer = 5 | 540 | 560 | 750 | 740 | 600 | 1060 | 570 | 700 | 650 | 750 | 520 |

| Deep | Layer = 1 | Layer = 2 | Layer = 3 | Layer = 4 |
|---|---|---|---|---|
| Size = 100 | 0.616 | 0.621 | 0.643 | 0.662 |
| Size = 200 | 0.685 | 0.720 | 0.758 | 0.782 |
| Size = 300 | 0.842 | 0.853 | 0.862 | 0.871 |
| Size = 400 | 0.874 | 0.882 | 0.884 | 0.898 |

| Dataset | Model | Layer = 1 | Layer = 2 | Layer = 3 | Layer = 4 | Layer = 5 |
|---|---|---|---|---|---|---|
| MG | ESN | 0.1517 | / | / | / | / |
| MG | DESN | / | 0.1472 | 0.1462 | 0.1451 | 0.1462 |
| MG | MIDESN | / | 0.1477 | 0.1423 | 0.1398 | 0.1321 |
| Lorenz | ESN | 0.1488 | / | / | / | / |
| Lorenz | DESN | / | 0.1463 | 0.1486 | 0.1434 | 0.1418 |
| Lorenz | MIDESN | / | 0.1419 | 0.1377 | 0.1311 | 0.1252 |
| Birds | ESN | 0.681 | / | / | / | / |
| Birds | DESN | / | 0.953 | 0.922 | 0.901 | 0.867 |
| Birds | MIDESN | / | 0.80467 | 0.8781 | 0.932 | 0.958 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, J.; Li, L.; Peng, H. Sequence Prediction and Classification of Echo State Networks. Mathematics 2023, 11, 4640. https://doi.org/10.3390/math11224640
