1. Introduction

Infertility is defined as the failure to achieve pregnancy after 12 months of unprotected intercourse. Male factor infertility accounts for 40–50% of reported cases, and globally 15% of people suffer from infertility problems [1, 2]. Research shows that semen quality in men is gradually decreasing over time [3]. A review of more than 60 papers found that the number and quality of sperm in seminal fluid samples have declined over the past 50 years [4]. To investigate the cause, scientists have performed semen analyses according to World Health Organization (WHO) guidelines, assessing semen volume, sperm concentration, total sperm count, sperm morphology, sperm vitality, and sperm motility [5]. However, manual semen analysis is challenging and time-consuming, even for a medical expert [6]. Therefore, researchers have been developing automatic systems for semen analysis for several decades. After the digitization of images, computer-aided sperm analysis (CASA) was introduced in the 1980s, making it possible to analyze images using computer systems [7]. CASA provides a rapid and objective assessment of sperm motility and concentration, but its accuracy is low because of other particles present in sperm samples. Consequently, CASA is not recommended for clinical use.

In earlier research, an automatic sperm-tracking technique, called a fully automated multi-sperm-tracking algorithm, was proposed that tracks hundreds of spermatozoa at the same time [8]. Another study proposed a convolutional neural network (CNN) that classifies the sperm in a given sample as normal or abnormal. Its results were evaluated on a closed dataset and compared with other approaches [9]. Earlier methodologies used the CASA tool with classical image processing and machine learning [9]. In recent years, however, deep learning has become increasingly important in computer vision applications [10, 11, 12]. State-of-the-art deep-learning networks for automatic image analysis include VGG16, VGG19, DenseNet, GoogLeNet, AlexNet, and ResNet [13, 14, 15].

In this work, we introduce a new sequential deep-learning architecture for the automatic analysis of sperm morphology. Furthermore, we evaluate the effectiveness of modern machine-learning and deep-learning techniques on human sperm microscopic datasets/videos and related parameters to automate the prediction of human sperm fertility. To perform this task, we used a freely available online dataset named MHSMA [16]. This dataset is derived from the Human Sperm Morphology Analysis (HSMA-DS) dataset [17]. Experts annotated this dataset following the guidelines provided in [18, 19].

This task is challenging for the following reasons.

Images in the given dataset are very noisy.

The sperm are not stained.

The images were captured with a low-magnification microscope and thus lack clarity.

There is a severe imbalance between the normal and abnormal sperm classes.

There are not enough sperm images for the training phase.

For the analysis to be helpful for clinical purposes, it should be performed in real time.

To overcome these challenges, we use data augmentation and sampling techniques, which address the class imbalance and the shortage of training images. We then propose an architecture for a sequential deep neural network that can be trained to distinguish between normal and abnormal sperm heads, acrosomes, and vacuoles. Our proposed algorithm combines Conv2d (2D convolution), BatchNorm2d (2D batch normalization), ReLU (rectified linear unit), MaxPool2d (2D max pooling), and Flatten layers. It shows impressive results on non-stained images and can therefore be used for clinical purposes. Additionally, each sperm can be assessed in as little as 25 milliseconds, allowing our approach to operate in real time. Experimental findings demonstrate the effectiveness of our approach in terms of accuracy, precision, recall, and F1 score, which are the best reported for this dataset.
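The text does not specify which sampling and augmentation techniques were used. As a minimal sketch only, assuming random oversampling of the minority class and a horizontal flip as one possible augmentation (both are illustrative assumptions, as are the function names and toy labels), the idea can be expressed as:

```python
import random
from collections import Counter


def oversample(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until all classes have equal size."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(group) for group in by_class.values())
    out_samples, out_labels = [], []
    for label, group in by_class.items():
        # Draw extra copies (with replacement) to reach the majority-class size.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for sample in group + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


def horizontal_flip(image):
    """Flip a 2D image (a list of rows) left to right."""
    return [row[::-1] for row in image]


# Hypothetical toy data: 8 "normal" (0) and 2 "abnormal" (1) sample ids.
samples = list(range(10))
labels = [0] * 8 + [1] * 2
balanced_samples, balanced_labels = oversample(samples, labels)
print(Counter(balanced_labels))
```

Oversampling should be applied to the training partition only, so that duplicated samples do not leak into the validation or test sets.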

Section 2 reviews previous work on sperm morphology analysis using different deep-learning techniques. Section 3 describes the dataset and its attributes. Section 4 presents our proposed deep-learning model. Sections 5, 6, 7, and 8 cover our experimental and training setup, evaluation metrics, result comparison, and discussion. Finally, we summarize conclusions and future directions in Section 9.

2. Related Work

A vast body of literature is available on automated sperm selection. In one of these studies, the proportion of boar spermatozoa heads was assessed and a characteristic intracellular density distribution pattern was found [20, 21]. In this procedure, a deviation model was established and calculated for each sperm head. Then, an ideal value was taken into account for each sperm categorization. Sperm tails were then removed, and the gaps in the outlines of the heads were closed using morphological closing. Finally, 60 sperm heads were isolated from the background using Otsu’s thresholding technique [22].
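Otsu's technique picks the gray-level threshold that maximizes the between-class variance of foreground and background pixels. The following is a minimal pure-Python sketch of that idea, not the cited study's implementation; the function name and the toy pixel values are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes between-class variance.

    Pixels with value <= t form one class, pixels with value > t the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # intensity sum of the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Hypothetical toy "image": dark background pixels and bright head pixels.
pixels = [10, 12, 11, 13, 200, 205, 210, 198]
t = otsu_threshold(pixels)
mask = [p > t for p in pixels]  # True marks the brighter class
print(t, mask)
```

Any threshold between the two clusters separates them equally well; this sketch returns the lowest such value.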

In another approach, sperm were classified as normal or abnormal in four phases: (1) image pre-processing, in which RGB images were converted to grayscale and a median filter was applied to remove noise; (2) detection, in which each sperm was separated and identified using the Sobel edge-detection technique; (3) segmentation, in which the head, midpiece, and tail of the sperm were separated; and (4) statistical measurement, in which a classification was carried out to distinguish between healthy and unhealthy sperm [23]. In another study, the authors compared sperm nuclear morphometric subpopulations in several animals, including pigs, goats, cows, and sheep [24]. A total of 70 sperm images were processed using ImageJ software, and the findings were used for clustering [1]. This approach combines multivariate cluster analyses with computer-assisted sperm-morphology-analysis fluorescence (CASMA-F) technology. CASA, introduced in the 1980s, has been very successful at measuring sperm characteristics, such as sperm concentration and progressive and non-progressive motility, in many animal species. However, for human semen analysis, the CASA tool does not give good results because of complications in the fluid of human semen samples [25].

On the other hand, sperm classification using conventional machine-learning (ML) techniques has been quite successful. This was made possible by an ML pipeline that can tell the difference between normal and abnormal sperm in different sperm portions from a single sperm image. The pipeline consists of two stages. In the first stage, shape-based descriptors were used to extract hand-crafted features from sperm cells. The second stage uses these features to classify sperm images with a support vector machine [26].

In one investigation, a group of researchers benchmarked various combinations of descriptors and classifiers for classifying sperm heads into five categories: one normal class and four abnormal classes [26]. They used a mix of three distinct shape-based descriptors to extract appropriate attributes from a sperm image. These features were fed into four different classifiers: decision tree, naive Bayes, nearest neighbor, and support vector machine (SVM). Among these classifiers, SVM achieved the highest mean classification accuracy, 49%. As this example shows, conventional learning algorithms rely mainly on manually modelling the data representation and extracting the characteristics of human sperm cells. Because the data were complicated and the representations were created by people, the process took time and was prone to mistakes. As a result, current research has focused on deep learning, a method that aims to minimize human biases.

Deep-learning algorithms can learn both how to categorize each sperm accurately and how to represent the data efficiently on their own, making them a viable option for overcoming these limitations. Convolutional neural networks, a subclass of deep-learning algorithms, are the most promising for image classification tasks [27]. These network designs consist of several layers that can be divided into two successive sections based on their operation. The first section uses convolution and pooling layers to learn an abstract representation (i.e., several valuable features) from a given dataset. The second section, a multi-layer feed-forward neural network, receives these abstract representations and learns an approximation function that maps them to the required categories [28].

These techniques have only recently begun to be used in sperm morphology studies. Early research focused on classifying whole sperm as normal or abnormal [28, 29, 30]. The first pre-trained deep-learning model was used in 2018 to identify whether sperm is normal or abnormal. The researchers developed a smartphone-based microscope and trained a deep convolutional neural network to differentiate between normal and abnormal sperm images. They argued that their network, combined with a smartphone-based microscope, could be used to evaluate human sperm at home [28].

Another study proposed a region-of-interest (ROI) segmentation methodology that automatically segments sperm images depending on sperm count, with the help of fuzzy C-means clustering and modified overlapping group shrinkage (MOGS). The authors used the Sperm Morphology Image Dataset (SMID) to categorize each sperm image into three groups: normal, abnormal, and non-sperm. They also trained an efficient neural network (MobileNet) from scratch, without pre-training on other datasets, and obtained strong results [29].

Smartphone-based data acquisition and reporting techniques have also been proposed for sperm motility analysis. According to the authors, this was the first smartphone technique in an expert system for semen analysis as of 2021. They introduced a multi-stage hybrid analysis approach for video stabilization, sperm motility, and concentration analysis, and used the Kalman filter for sperm tracking. The authors claim that this system can report more detailed outcomes in different situations and has advantages over previously used expert systems for semen analysis in terms of cost, modularity, and portability [31].

Another line of work classifies sperm heads into four or five categories [32, 33], as defined by the HuSHeM [34] and SCIAN [35] datasets. One study employed a pre-trained VGG19 model [33] and fine-tuned it on these datasets to classify sperm heads into four (HuSHeM dataset) and five (SCIAN dataset) categories [36]. Subsequently, researchers manually designed a custom convolutional neural network that improved on the accuracy of the prior study on both datasets [32]. Another field of study has focused on classifying three components of human sperm images (the head, acrosome, and vacuole) as normal or abnormal using the freely available MHSMA dataset [16]. In their initial investigation, Javadi and Ghasemian used a deep-learning technique to address this problem: they manually designed a deep-learning architecture and trained it from scratch on this dataset. They classified the head, vacuole, and acrosome more accurately than prior research that used hand-crafted heuristics [37]. A more recent work, however, used an evolutionary technique called Genetic Neural Architecture Search (GeNAS) to design the CNN architecture and achieved better results on the same dataset, with accuracies of 77.33%, 77.66%, and 91.66% for the head, acrosome, and vacuole, respectively [37]. Similarly, in 2022, Chandra et al. used the same dataset and applied well-known pre-trained deep-learning models, such as VGG16, VGG19, ResNet50, InceptionV3, InceptionResNetV2, MobileNet, MobileNetV2, DenseNet, NASNetMobile, NASNetLarge, and Xception. According to their experimental findings, the deep-learning-based system performs better than human specialists in classifying sperm in terms of accuracy, reliability, and throughput. By visualizing the feature activations of the deep-learning models, they further analyzed the sperm cell data and offered a fresh perspective. Finally, a thorough study of the experimental findings revealed that ResNet50 and VGG19 had the highest accuracy rates of 87.33%, 71%, and 73% for the vacuole, acrosome, and head labels, respectively [38]. Table 1 shows the latest research and outcomes on the MHSMA dataset.

3. Dataset Parameters and Distribution

This paper uses a freely available benchmarked MHSMA [

16] derived from the HSMA-DS dataset [

17]. Experts marked this dataset with the help of guidelines provided by [

18,

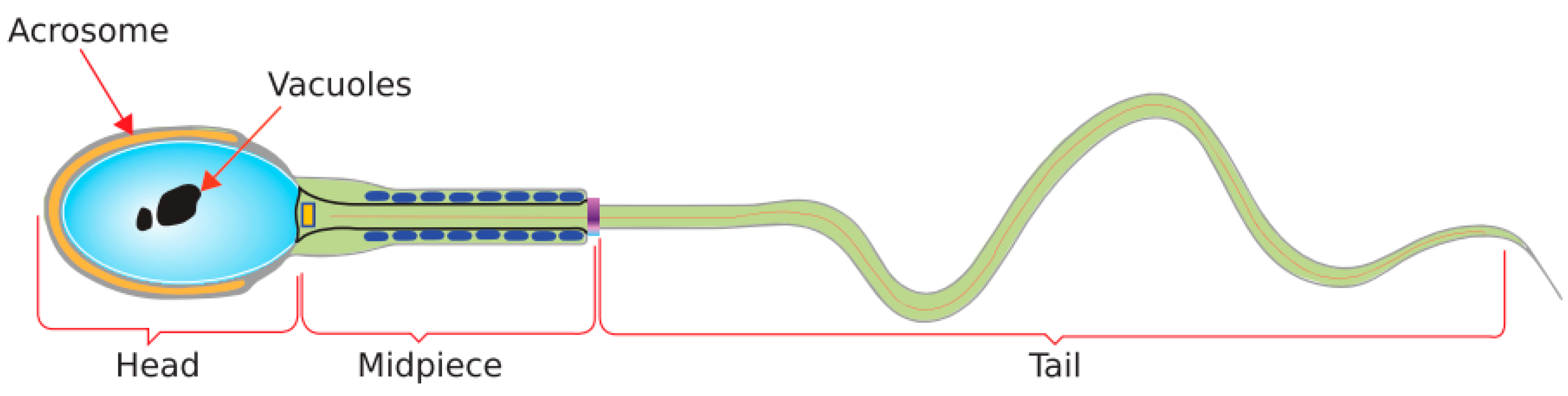

19]. A well-defined acrosome makes up 40 to 70% of the sperm head, and the sperm head’s length and width are between 3 and 5 m and 2 and 3 m, respectively. The presence of vacuoles shows that the sperm is abnormal. Axially, the midpiece is around 1 micrometer wide, making it one and a half times as big as the head. Additionally, the typical tail features, such as homogeneity, uncoiling, being thinner than the mid-piece, and having a length of 45 m, must be discernible.

Figure 1 shows a schematic of a sperm cell’s structure built on marked labels.

The dataset contains 1540 grayscale images of non-stained sperm, collected with a low-magnification microscope (400× and 600×). Each image contains one sperm and is available in two sizes, 64 × 64 and 128 × 128 pixels. Specialists in sperm morphology analysis labeled the head, vacuole, acrosome, neck, and tail of each sperm as normal or abnormal.

Table 2 shows the distribution of samples in this dataset. As can be seen from this table, the vacuole, tail, and neck labels, in particular, suffer from data imbalance. Another concern is the limited number of samples, because deep-learning algorithms need many examples to create good function approximations for problems with a high-dimensional input space [27]. Normal and abnormal samples from the dataset are displayed in Figure 2. Two distinct partitioning strategies were used in our research to assess each trained model. In the first, the MHSMA dataset is divided into three separate sets: a training set, a test set, and a validation set. Table 3 displays the number of normal and abnormal samples in each set. Notably, the distribution of training, test, and validation samples under this scheme matches that of other studies on this dataset, allowing for a fair comparison [16, 37, 38].

First, the entire MHSMA dataset is divided into five partitions. One of these partitions, consisting of 300 sample images, is chosen as the test set. The remaining 1240 samples form the training and validation sets: the validation set is composed of 240 samples drawn randomly from the other four partitions, and the training set consists of the remaining 1000 samples. The dataset split ratios for the training, test, and validation sets are shown in Table 4.
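The split sizes described above (1540 samples into 1000 training, 240 validation, and 300 test) can be sketched as follows. This is only an illustration that assumes a plain seeded random shuffle; it does not reproduce the exact partition shared with the earlier studies, and the function name and seed are hypothetical.

```python
import random


def split_indices(n_total=1540, n_test=300, n_val=240, seed=42):
    """Shuffle sample indices and cut them into train/validation/test lists."""
    rng = random.Random(seed)
    indices = list(range(n_total))
    rng.shuffle(indices)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test


train, val, test = split_indices()
print(len(train), len(val), len(test))  # 1000 240 300
```

Fixing the seed keeps the partition reproducible, which matters when different models are compared on the same test set.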

4. Proposed Method

This section introduces a new sequential deep-learning technique for automatically assessing a human sperm’s head, acrosome, and vacuole from grayscale non-stained images. We then detail how our deep-learning network was developed and trained. Finally, we review the evaluation measures and procedures used to assess our proposed model. We do not address the classification of the tail and neck labels: classifying the head, acrosome, and vacuole labels is challenging for embryologists, whereas classifying the tail and neck labels is a very simple job.

In light of this, this work focuses on three classification tasks: identifying the head, acrosome, and vacuole as normal or abnormal. An advantage of our proposed algorithm is that it uses the same number of layers for the head, vacuole, and acrosome classification tasks. Moreover, our proposed model outperforms all previously proposed techniques on this dataset by a significant margin. On this benchmark, our system detects abnormalities of the head and acrosome with 12.67% and 11.34% greater accuracy, respectively, than current state-of-the-art approaches. Furthermore, our algorithm requires less execution time, even on a low-specification computer or laptop, and classifies images in real time. This enables an embryologist to quickly decide whether a given sperm should be selected.

Deep-Learning Model

The design of our deep neural network is based on the Sequential model. The Sequential model is a container (or wrapper) class used to compose a neural network. With it, we can combine different layers, and even different networks, in a single model. Our proposed sequential deep neural network contains three stacks of layers, and the layers in each stack are explained below.

Convolutional 2D layer: This layer extracts image features from the input with the help of a convolution kernel. Conv2d creates a convolution kernel that is convolved with the layer input to produce an output tensor. A bias vector is created and added to the outputs if use_bias is True. Finally, if activation is not None, it is also applied to the outputs. The arguments of this layer include a kernel size of 4, a stride of 1, and a padding of 1.

Batch normalization: The output of Conv2d is used as the input to the BatchNorm2d layer, and the output of the BatchNorm2d layer is used as the input to the ReLU. In this way, each convolutional layer is followed by batch normalization and a ReLU activation function.

MaxPool2d: Max pooling reduces the feature map size through downsampling. Pooling is normally performed with one of two common techniques: max pooling or average pooling. Max pooling is generally recommended for image feature processing because it retains the maximum value in each rectangular region. We therefore adopted max pooling in this research, with a stride of 2 for downsampling.

Similarly, we created three stacks of layers with minor changes. Details of our proposed sequential model are given in Figure 3.
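To make the effect of these layer arguments concrete, the sketch below traces the spatial size of a feature map through three Conv2d and MaxPool2d stacks using the standard output-size formula. The convolution arguments (kernel 4, stride 1, padding 1) and the pooling stride of 2 come from the text; the pooling kernel size of 2 and the reuse of identical arguments in every stack are assumptions, since the text only says the stacks differ by "minor changes". Batch normalization and ReLU do not change the spatial size, so they are omitted.

```python
def conv_out(size, kernel=4, stride=1, padding=1):
    """Spatial output size of a 2D convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial output size of max pooling (kernel size 2 is an assumption)."""
    return (size - kernel) // stride + 1


def trace_sizes(size, n_stacks=3):
    """Return the feature-map size at the input and after each conv + pool stack."""
    sizes = [size]
    for _ in range(n_stacks):
        size = pool_out(conv_out(size))
        sizes.append(size)
    return sizes


print(trace_sizes(64))   # [64, 31, 15, 7]
print(trace_sizes(128))  # [128, 63, 31, 15]
```

Under these assumptions, a 64 × 64 input reaches the Flatten layer as 7 × 7 feature maps and a 128 × 128 input as 15 × 15 feature maps.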

5. Training Configurations

In this section, we describe how we trained our proposed model to achieve predictions with maximum accuracy and minimum loss. To achieve this, we employed the cross-entropy loss function, a metric used in machine learning to assess how well a classification model performs. The loss is non-negative, and an ideal model has a loss of 0. Generally speaking, the objective is to bring the loss of the model as close to 0 as possible.

This function compares the predicted output with the actual output. For each class, the actual label y is multiplied by the natural logarithm of the predicted probability ŷ, and the negated products, −y × ln(ŷ), are summed over the classes. In its standard form, the cross-entropy loss is calculated as:

$$L = -\sum_{c} y_c \ln(\hat{y}_c)$$

where y_c is 1 for the true class and 0 otherwise, and ŷ_c is the predicted probability of class c.
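As a small numerical illustration, the following sketch evaluates the standard cross-entropy loss for a two-class (normal/abnormal) prediction. It assumes one-hot labels and predicted probabilities that sum to 1; the function name, the clipping constant `eps`, and the example probabilities are hypothetical.

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy between a one-hot label and predicted class probabilities."""
    # Clip probabilities away from zero so that log() is always defined.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


label = [0, 1]  # hypothetical one-hot label: the true class is "abnormal"
confident = cross_entropy(label, [0.1, 0.9])  # correct and confident
unsure = cross_entropy(label, [0.5, 0.5])     # undecided
wrong = cross_entropy(label, [0.9, 0.1])      # confident but incorrect
print(round(confident, 4), round(unsure, 4), round(wrong, 4))
```

The loss grows as the probability assigned to the true class shrinks, which is why minimizing it pushes the model toward confident, correct predictions.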

We trained our model on the images in the training set, using the hyperparameters given in Table 5.

Throughout training, we saved a checkpoint whenever the model reached its best validation accuracy. If more than one checkpoint reached the same highest validation accuracy, we chose the one with the lowest loss. We then evaluated this model on the test set.
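The checkpoint selection rule described here (highest validation accuracy, with ties broken by lowest loss) can be sketched as below. The record layout, field names, and the numbers in the training history are hypothetical and only illustrate the rule.

```python
def select_checkpoint(history):
    """Pick the record with the highest validation accuracy; break ties by lowest loss."""
    return max(history, key=lambda h: (h["val_acc"], -h["val_loss"]))


# Hypothetical per-epoch validation results.
history = [
    {"epoch": 1, "val_acc": 0.85, "val_loss": 0.40},
    {"epoch": 2, "val_acc": 0.90, "val_loss": 0.35},
    {"epoch": 3, "val_acc": 0.90, "val_loss": 0.30},
    {"epoch": 4, "val_acc": 0.88, "val_loss": 0.28},
]
best = select_checkpoint(history)
print(best["epoch"])  # 3
```

Epochs 2 and 3 tie on accuracy, so the lower loss of epoch 3 decides; epoch 4 has the lowest loss overall but is not selected because its accuracy is lower.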

An advantage of our proposed model is that we used the same number of layer stacks for the head, acrosome, and vacuole tasks, with different hyperparameters for each. On the training set, the parameters of the entire model were trained and adjusted for 100 iterations. The performance of our models was enhanced by learning many useful features for each of the three tasks.

To implement our proposed model, we used the Python programming language with the Torch framework and a TensorFlow backend. All our experiments were performed on Kaggle using an NVIDIA Tesla P100 GPU.

7. Results Comparison

We trained the model separately to classify the sperm’s head, acrosome, and vacuole. We trained our model on 1000 samples for 100 iterations (epochs) because we achieved the highest accuracy at 100 iterations. The validation set (240 samples) is used to determine the loss value after each iteration, and the checkpoint with the lowest validation loss is stored. After training, we assessed the saved checkpoint on the held-out test set (300 samples).

Figure 5 displays the accuracy and loss during the training and validation for each label over training iterations. Our proposed technique can independently predict abnormality in the sperm head, acrosome, and vacuole with an accuracy of 88.6%, 89%, and 92%, respectively.

Table 6 compares our proposed model with pre-trained modern deep-learning architectures.

We evaluated our proposed technique against 11 pre-trained state-of-the-art deep-learning models [38] tested on the same dataset. Our proposed model outperformed all of them by a significant margin. Table 6 presents an overview of these models alongside our model in terms of accuracy, F1 score, recall, and precision for the different parts of sperm cells.

After the comparison with pre-trained modern deep-learning models, we also compared our results with techniques previously proposed by other researchers on the same dataset. On all three labels of the MHSMA dataset (head, vacuole, and acrosome), our proposed model performs better than these earlier approaches, such as a manually designed CNN architecture, random search, and image processing approaches, in terms of accuracy, precision, and F0.5 [37]. As shown in Table 7, our proposed model achieves higher accuracy, precision, recall, and F0.5 on the test set for all three labels.
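For reference, the metrics used in these comparisons can all be derived from the four confusion-matrix counts. The sketch below uses the standard definitions, with F-beta covering both F1 (beta = 1) and F0.5 (beta = 0.5); the function name and the counts in the example are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, F1, and F0.5 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    def f_beta(beta):
        # F-beta weights precision more heavily than recall when beta < 1.
        denom = beta * beta * precision + recall
        return (1 + beta * beta) * precision * recall / denom if denom else 0.0

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f_beta(1.0),
        "f0.5": f_beta(0.5),
    }


# Hypothetical counts for a 300-sample test set.
m = classification_metrics(tp=80, fp=20, fn=10, tn=190)
print({name: round(value, 4) for name, value in m.items()})
```

Because F0.5 favors precision, it penalizes false positives more strongly than F1 does.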

8. Discussion

Our proposed deep-learning technique achieves excellent accuracy on the MHSMA dataset, as well as high F0.5 and F1 scores. These outcomes indicate that our sequential deep neural network (SDNN) technique is well suited to sperm classification problems. This approach is more effective than 11 pre-trained deep-learning models. It also outperformed the latest deep-learning techniques proposed by other researchers, including automatically generated deep networks such as the GeNAS algorithm [16, 37]. On the head and acrosome labels, our proposed model increases accuracy by 12.67% and 11.34%, respectively.

After a detailed study of the literature, we conclude that deep-learning models are the best solution for quickly solving image classification problems compared with conventional approaches, especially for sperm image datasets such as MHSMA, HuSHeM, and SCIAN. A natural question is whether our algorithm can be deployed in real time, for example in fertility clinics. The answer is yes: these deep-learning models can be used in fertility clinics because their accuracy, precision, and other metrics are remarkably higher than those of even expert manual identification of sperm abnormalities. We can further improve our algorithms by applying them in hospitals and fertility clinics. Two methods can enhance their effectiveness. First, as deep-learning algorithms are trained on larger amounts of data, their performance and reliability tend to improve. Everyday images are simple to comprehend and therefore easy to learn, but analyzing and interpreting medical images requires a great deal of experience. This is particularly true for the classification of sperm abnormalities and for many other image segmentation and classification problems in medical imaging.

We therefore conclude that the MHSMA dataset is modest in size compared with other freely available online datasets, and that we can improve the accuracy and efficiency of our model by gathering more data. The first solution is to address the dataset’s shortfall by carrying out a dedicated procedure to collect more sperm images for the training set. However, this is a complex, costly, and time-consuming procedure. On closer inspection, though, operations of this nature occur daily in hospitals and clinics. In light of this, we advise that medical facilities create guidelines to systematically collect data from people who are referred to them for a sperm evaluation. Another point to note is that each sperm dataset differs from the others because of the specific equipment and procedures used to gather it. A model that works well on a given dataset (MHSMA) may therefore not provide similar results on other datasets, such as SCIAN and HuSHeM [35], whose sperm images were recorded with a different microscope. This means that dataset variability should be taken into account when creating deep-learning models. Additionally, if we wish to apply deep-learning techniques in fertility clinics, we must ensure that the clinics use the same tools and methods as those used to collect the dataset (especially the test set partition of the dataset).

9. Conclusions

In this research, our proposed sequential deep-learning approach significantly outperformed existing sperm morphology analysis (SMA) methods in terms of accuracy, precision, and recall, as well as the F0.5 and F1 scores. Notably, the accuracy of the head, acrosome, and vacuole labels using our SDNN technique was 90%, 92%, and 89%, respectively. To our knowledge, sequential deep learning has not previously been used to examine sperm morphology; instead, combined, stack-network-based deep learning has mainly been used. Furthermore, we hypothesize that our SDNN technique can also be applied to SMA problems in real-world settings, such as fertility clinics. In addition, although our dataset contains labels associated with four separate sperm components and is one of the largest datasets currently available, the dataset size, specifically that of the test set, needs to be increased to conduct additional empirical testing of our model. Nevertheless, our results indicate that this is the most effective of the methods compared. We are therefore hopeful that the fertility departments of healthcare institutions will adopt our proposed deep-learning technique for fertility prediction and analysis.