Abstract

We investigate the numerical solution of the nonlinear Schrödinger equation in two spatial dimensions and one temporal dimension. We develop a parametric Runge–Kutta method with four of its coefficients treated as free parameters, and we provide the full construction process and explicit formulas for all other coefficients. The result is an adaptable method with four degrees of freedom, which permits further optimisation. In fact, this methodology yields a family of methods, each of which can be tailored to a specific problem. We then optimise the new parametric method to obtain an optimal Runge–Kutta method that performs efficiently for the nonlinear Schrödinger equation. We perform a stability analysis and use an exact dark soliton solution to measure the global error and mass error of the new method, with and without finite difference schemes for the spatial semi-discretisation. We also compare the efficiency of the new method with that of other numerical integrators, in terms of accuracy versus computational cost, revealing the superiority of the new method. The proposed methodology is general and can be applied to a variety of problems, without being limited to linear problems or problems with oscillatory/periodic solutions.

Keywords:

(2+1)-dimensional nonlinear Schrödinger equation; partial differential equations; parametric Runge–Kutta method; coefficient optimisation; global error

MSC:

65L06; 65M20; 65M22

1. Introduction

We consider the (2+1)-dimensional nonlinear Schrödinger (NLS) equation of the form:

where , , is a complex function of the spatial variables and the temporal variable t and . The term denotes the temporal evolution, the terms and denote the dispersion with respect to x and y, respectively, while is a nonlinear term, whose introduction is motivated by several applications. Equation (1) can represent atomic Bose–Einstein condensates (BECs), in which case u expresses the mean-field function of the matter-wave, or, if applied in the context of nonlinear optics for the study of optical beams [1], u describes the complex electric field envelope, t is the propagation distance, and are the transverse coordinates [2,3].

The analytical solution of the NLS equation has attracted great interest in recent years, especially when the solutions are solitons [4,5,6,7]. Additionally, the numerical computation of the NLS equation is a critical part of the verification process of analytical theories. Different strategies have been adopted to solve the NLS equation, its linear counterpart, or differential equations with similar behaviour. A very significant factor in the efficiency of the computation lies in the time integrator; this is the case for both the scalar forms and vector forms after applying the method of lines. Preferred time integrators for the Schrödinger equation include Runge–Kutta(–Nyström) (RK/RKN) methods [8,9,10,11] and multistep methods [12,13,14,15]. RK/RKN methods are especially well-established, with various tools for achieving a high order of accuracy and obtaining intrinsic properties for specific problems, e.g., in [16,17], specialised RK methods were developed and optimised with differential evolution algorithms; in [18,19,20,21], RK/RKN methods were constructed for problems with periodic/oscillatory behaviour using fitting techniques; finally, in [22], a hybrid block method was produced for the efficient solution of differential systems. Many of the aforementioned techniques are targeted towards linear differential equations, ordinary differential equations, problems with oscillatory/periodic solutions or combinations of these. Here, we propose a general approach that can be applied to a problem without these limitations, and is tailored to a specific nonlinear partial differential equation that does not always exhibit periodic behaviour.

Following our previous work in [9], where we investigated the numerical solution of the NLS equation in (1+1) dimensions, here we extend this study to problems in (2+1) dimensions; that is, two dimensions in space and one in time. However, since this is a problem with increased significance that could be experimentally verified, we decided to follow a different approach and develop a method that is tailored to the efficient numerical solution of the problem. To achieve this, we initially developed a new parametric RK method, with as many coefficients as possible treated as free parameters. In this way, we produced an adaptable method with four degrees of freedom, which can be applied to a plethora of problems and specifically tailored to their efficient solution. Subsequently, in the case of problem (1), we selected the optimal values that correspond to the method with the minimum global error when integrating the problem for various step sizes. We chose to construct a method with six algebraic orders and eight stages, a maximised real stability interval, and coefficients with similar orders of magnitude, to minimise the round-off error.

The structure of this paper is as follows:

- In Section 2, we present the necessary theoretical concepts;

- In Section 3, we show the construction and analysis of the new RK method;

- In Section 4, we report the numerical experiments and results;

- In Section 5, we provide a discussion of the results and future perspectives;

- In Section 6, we communicate our conclusions.

2. Theory

2.1. Explicit Runge–Kutta Methods

For the numerical solution of Equation (1),

An s-stage explicit Runge–Kutta method for the solution of Equation (1) is presented below:

where is strictly lower triangular, , , , and are the coefficient matrices, is the step size in time, and f is defined in .
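To make the structure of an explicit scheme concrete, the following minimal sketch advances a scalar problem by one step from a Butcher tableau. The function names (`explicit_rk_step`, `integrate`) and the tableau are illustrative assumptions: the classical four-stage RK4 coefficients are used only as a stand-in and are not the eight-stage method constructed in this paper.

```python
import cmath

def explicit_rk_step(f, t, y, h, A, b, c):
    """Advance y' = f(t, y) by one step of an s-stage explicit RK method."""
    k = []  # stage derivatives k_1, ..., k_s
    for i in range(len(b)):
        # A is strictly lower triangular, so stage i needs only stages j < i.
        y_stage = y + h * sum(A[i][j] * k[j] for j in range(i))
        k.append(f(t + c[i] * h, y_stage))
    return y + h * sum(b_i * k_i for b_i, k_i in zip(b, k))

def integrate(f, t0, y0, t_end, n_steps, A, b, c):
    """Take n_steps equal steps from t0 to t_end and return the final value."""
    h = (t_end - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = explicit_rk_step(f, t, y, h, A, b, c)
        t += h
    return y

# Stand-in tableau (classical RK4), NOT the eight-stage method of the paper.
RK4_A = [[0, 0, 0, 0], [0.5, 0, 0, 0], [0, 0.5, 0, 0], [0, 0, 1, 0]]
RK4_B = [1 / 6, 1 / 3, 1 / 3, 1 / 6]
RK4_C = [0, 0.5, 0.5, 1]

# Circular orbit y' = i*y, y(0) = 1, whose exact solution is exp(i*t).
y_end = integrate(lambda t, y: 1j * y, 0.0, 1.0 + 0j, 1.0, 100, RK4_A, RK4_B, RK4_C)
error = abs(y_end - cmath.exp(1j))
```

Because the stage loop only reads entries below the diagonal of `A`, the same routine would accept any explicit tableau, including one with eight stages.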

2.2. Algebraic Order Conditions

According to rooted tree analysis [23], 37 equations must be satisfied to obtain a Runge–Kutta method of sixth algebraic order, plus 7 additional equations for an explicit Runge–Kutta method with eight stages, for a total of 44 equations, given below as the set of Equations (4).

Here, , the diagonal matrix has , , the operator * denotes element-wise multiplication and, finally, the powers of c, C and are defined as element-wise powers, i.e., , whereas the powers of A are defined normally, i.e., .
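The count of 37 order conditions can be checked independently, since each condition corresponds to one rooted tree with at most six vertices. The sketch below counts rooted trees with the standard recurrence for that sequence; the function name is hypothetical. Reading the 7 additional equations as the usual row-sum conditions for stages 2 to 8 is an assumption on our part.

```python
def rooted_tree_counts(max_order):
    """Number of rooted trees with n = 1..max_order vertices."""
    a = [0, 1]  # a[n] = number of rooted trees with n vertices; a[0] is unused
    for n in range(1, max_order):
        total = 0
        for k in range(1, n + 1):
            # Inner sum over the divisors d of k of d * a[d].
            divisor_sum = sum(d * a[d] for d in range(1, k + 1) if k % d == 0)
            total += divisor_sum * a[n - k + 1]
        a.append(total // n)  # recurrence: n * a[n+1] = total
    return a[1:]

counts = rooted_tree_counts(6)        # one entry per order 1..6
order_conditions = sum(counts)        # conditions for sixth algebraic order
total_equations = order_conditions + 7
```

The per-order counts add up to the 37 conditions quoted above, and adding the 7 extra equations gives 44.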

2.3. Stability

We consider the problem

with exact solution , which represents the circular orbit on the complex plane and its frequency.

Equation (5), solved numerically by the RK high-order method of Equation (3), yields the solution , where is called the stability polynomial; and are polynomials in . The exact solution of Equation (5) satisfies the relation . For a generic , since and we have:

Definition 1.

For the method of Equation (3), if and , for every and every suitably small positive ϵ, then the real stability interval is .

Definition 2.

The stability region is defined as the set .
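As a rough illustration of how such an interval can be located numerically, the sketch below evaluates the stability function of an explicit method by applying one step to the linear test problem, then scans the negative real axis until the modulus reaches one. Three things are assumptions for illustration only: the convention z = λh, the criterion |R(z)| < 1, and the classical RK4 tableau used as a stand-in. None of them is taken from the method of this paper.

```python
def stability_function(z, A, b):
    """R(z): factor applied to y by one explicit RK step on y' = lambda*y, z = lambda*h."""
    K = []  # scaled stage derivatives h * k_i, starting from y = 1
    for i in range(len(b)):
        K.append(z * (1 + sum(A[i][j] * K[j] for j in range(i))))
    return 1 + sum(b_i * K_i for b_i, K_i in zip(b, K))

def real_stability_bound(A, b, step=1e-3, z_max=50.0):
    """Scan z = -step, -2*step, ... and return the first z with |R(z)| >= 1."""
    z = -step
    while z > -z_max and abs(stability_function(z, A, b)) < 1:
        z -= step
    return z

# Stand-in tableau (classical RK4), not the method of Table 2.
RK4_A = [[0, 0, 0, 0], [0.5, 0, 0, 0], [0, 0.5, 0, 0], [0, 0, 1, 0]]
RK4_B = [1 / 6, 1 / 3, 1 / 3, 1 / 6]

bound = real_stability_bound(RK4_A, RK4_B)
```

The same `stability_function` accepts complex arguments, so a stability region can be approximated by evaluating it on a mesh of complex points and keeping those with modulus below one.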

3. Construction and Analysis

3.1. Criteria

For the construction of the new method, we aimed to satisfy the following criteria:

- Parametric method with as many coefficients as possible treated as free parameters.

- Minimum global error when integrating problem (1) for various step sizes.

- Sixth algebraic order and eight stages, which implies 44 equations (4).

- Maximised real stability interval, based on Definition 1.

- Coefficients with similar orders of magnitude, to minimise the round-off error.

3.2. Parametric Method—General Case

We began the development by considering the 44 equations (4). There are 43 variables involved in these equations, and we fixed 10 of these, namely

Of the remaining 33 variables, 29 are contained in , , , , , , , , , , , , , , , , , , , , , , , , , , , , and 4 are contained in . We aimed to solve the system of 44 equations for the 29 variables in , considering the 4 variables in as free parameters, thus effectively creating a general family of methods with 4 degrees of freedom. To solve the highly nonlinear system of equations, we used the mathematical software Maple. We solved 29 of the equations in the order given in Table 1, each for the variable listed after it. Both the order of the equations and the choice of variables matter: they were chosen so that the system remaining after each substitution is as simple as possible. Otherwise, solving the system takes impractically long.

Table 1.

The order in which the equations are solved, followed by the variable for which each one is solved.

After solving the system of 29 equations, and due to our initial fixed values of 10 coefficients, all 44 equations are now satisfied, including the ones we did not explicitly solve. All coefficients, except for the initially fixed ones, now depend on :
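A much smaller analogue may help to illustrate the idea of a parametric family. The sketch below uses the well-known two-parameter family of three-stage, third-order explicit methods, in which the free parameters are the nodes c2 and c3 and every other coefficient is given by a closed-form expression. It verifies the four third-order conditions in exact rational arithmetic. The function names are hypothetical, and this small family merely stands in for the eight-stage, sixth-order family derived here with Maple.

```python
from fractions import Fraction as F

def third_order_family(c2, c3):
    """Coefficients (A, b, c) of a 3-stage, third-order explicit RK method for free c2, c3."""
    c2, c3 = F(c2), F(c3)
    if c2 == 0 or c3 == 0 or c2 == c3 or 3 * c2 == 2:
        raise ValueError("excluded parameter values (division by zero)")
    b3 = (2 - 3 * c2) / (6 * c3 * (c3 - c2))
    b2 = (3 * c3 - 2) / (6 * c2 * (c3 - c2))
    b1 = 1 - b2 - b3
    a32 = 1 / (6 * b3 * c2)
    A = [[0, 0, 0], [c2, 0, 0], [c3 - a32, a32, 0]]  # row sums equal the nodes
    return A, [b1, b2, b3], [F(0), c2, c3]

def order_condition_residuals(A, b, c):
    """Residuals of the four conditions for third order; all zero if satisfied."""
    s = len(b)
    return [
        sum(b) - 1,
        sum(b[i] * c[i] for i in range(s)) - F(1, 2),
        sum(b[i] * c[i] ** 2 for i in range(s)) - F(1, 3),
        sum(b[i] * A[i][j] * c[j] for i in range(s) for j in range(s)) - F(1, 6),
    ]

# c2 = 1/2, c3 = 1 reproduces Kutta's classical third-order method.
A, b, c = third_order_family(F(1, 2), 1)
residuals = order_condition_residuals(A, b, c)
```

Every admissible pair (c2, c3) gives a valid third-order method, which is what leaves room for a later problem-specific choice of the free parameters.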

3.3. Parametric Method—Optimal Case

After generating the parametric method, we aimed to identify the member of the family with the best performance. To achieve this, we selected different step-lengths and integrated problem (1). The optimal values of the four coefficients are taken to be those that yield the highest accuracy for all step-lengths. The latter were chosen to be , so that they yield results whose accuracy spans different orders of magnitude. These step-lengths return results of above 4, 6.5 and 10 accurate decimal digits, respectively. These accuracy values were selected to be above average while still allowing for a plethora of feasible coefficient combinations, and will later be used as benchmarks. We are interested in the quadruples

for which the method has the highest accuracy. The optimisation process is as follows:

- We evaluate the accuracy at each grid point of the mesh defined by . In the first iteration, we choose the coefficient step-length and integration step-length , and identify the regions with maximum accuracy. We observe that all highly efficient quadruples appear to satisfy the constraint , as is also the case with the majority of RK methods. Thus, for the subsequent iterations, we impose this constraint in advance, which drastically reduces the computation cost without sacrificing the accuracy.

- For the next two iterations, we use , together with and , and follow the procedure of step 1. We identify the intersection of all regions for which a certain quadruple has a higher accuracy than its corresponding benchmark.

- We repeat this process for combined with , as described in step 1, but only within the regions narrowed down by step 2, i.e.,

- We run the process one last time for combined with , within the regions updated by step 3, i.e.,

The optimal quadruple is primarily chosen with respect to the accuracy, and secondarily considering the robustness of the solution. The latter is expressed by the absence of sensitivity of the solution when a coefficient value deviates from the optimal value. This is the reason why no further local optimisation is needed and why we stop at three decimal digits of accuracy for the optimal coefficient values.
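The coarse-to-fine search described above can be sketched on a toy problem. The example below uses the one-parameter family of two-stage, second-order methods (free parameter `alpha`) and a scalar test equation instead of the four-parameter method and problem (1). It also scores each candidate by its accurate decimal digits averaged over three step sizes, rather than by the benchmark-and-intersection procedure of the steps above. All names and numerical values are illustrative assumptions.

```python
import math

def rk2_family_step(f, t, y, h, alpha):
    """One step of the one-parameter family of 2-stage, second-order RK methods."""
    b2 = 1.0 / (2.0 * alpha)  # the order conditions fix b2 and b1 once alpha is chosen
    k1 = f(t, y)
    k2 = f(t + alpha * h, y + alpha * h * k1)
    return y + h * ((1.0 - b2) * k1 + b2 * k2)

def global_error(alpha, h, t_end=2.0):
    """Maximum absolute error over all steps for y' = -y^2, y(0) = 1 (exact: 1/(1+t))."""
    n_steps = round(t_end / h)
    y, err = 1.0, 0.0
    for i in range(n_steps):
        y = rk2_family_step(lambda t, y: -y * y, i * h, y, h, alpha)
        err = max(err, abs(y - 1.0 / (1.0 + (i + 1) * h)))
    return err

def mean_digits(alpha, step_sizes):
    """Accurate decimal digits, averaged over the integration step sizes."""
    digits = [-math.log10(max(global_error(alpha, h), 1e-16)) for h in step_sizes]
    return sum(digits) / len(digits)

def refine_search(lo, hi, step_sizes, points=9, rounds=3):
    """Coarse-to-fine grid search: score a grid, then shrink it around the best point."""
    lo0, hi0 = lo, hi
    best_score, best_alpha = -math.inf, None
    for _ in range(rounds):
        spacing = (hi - lo) / (points - 1)
        for i in range(points):
            alpha = lo + i * spacing
            score = mean_digits(alpha, step_sizes)
            if score > best_score:
                best_score, best_alpha = score, alpha
        # Next round: a finer grid restricted to the neighbourhood of the current best.
        lo, hi = max(lo0, best_alpha - spacing), min(hi0, best_alpha + spacing)
    return best_alpha, best_score

STEP_SIZES = [0.1, 0.05, 0.025]
best_alpha, best_score = refine_search(0.25, 1.0, STEP_SIZES)
```

Restricting each round to the neighbourhood of the previous best keeps the number of integrations small, which matters far more when four parameters are searched simultaneously.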

The optimal values returned by the optimisation process are , , , . These can be exactly expressed as rational numbers , , , . By substituting these values into the expressions for the other coefficients, we obtain the particular case that is optimal for this problem, as seen below in Table 2.

Table 2.

The Butcher tableau of the optimised Runge–Kutta method.

3.4. Error and Stability Analysis

We perform local truncation error analysis on the method of Table 2, based on the Taylor expansion series of the difference

The principal term of the local truncation error is evaluated as

which implies that while, locally, the order of accuracy is seven, globally, the order of the new method is six (see [23] for explanation).
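The drop of one order between the local and the global error can be observed numerically. A local error of order p + 1 per step, accumulated over a number of steps proportional to 1/h, gives a global error of order p. The sketch below estimates both orders by halving the step size. It uses the classical fourth-order RK4 method on y' = y as a stand-in (an assumption for illustration), so the expected values are about five and four rather than seven and six.

```python
import math

def rk4_step(f, t, y, h):
    """One step of the classical fourth-order Runge-Kutta method."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def observed_orders(h=0.1):
    """Estimate the local and global order on y' = y, y(0) = 1 by halving h."""
    f = lambda t, y: y

    def local_error(step):
        # Error after a single step from the exact initial value.
        return abs(rk4_step(f, 0.0, 1.0, step) - math.exp(step))

    def global_error(step):
        # Error at t = 1 after integrating with a constant step.
        y = 1.0
        for i in range(round(1.0 / step)):
            y = rk4_step(f, i * step, y, step)
        return abs(y - math.e)

    local = math.log2(local_error(h) / local_error(h / 2))
    glob = math.log2(global_error(h) / global_error(h / 2))
    return local, glob

local_order, global_order = observed_orders()
```

The base-2 logarithm of the error ratio under step halving is the slope of the error curve on a log-log plot, which is how the order of a method is usually read from experiments.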

Regarding the stability of the method, following the methodology of Section 2.3, we evaluated the stability polynomial of the method presented in Table 2. The polynomial is given by

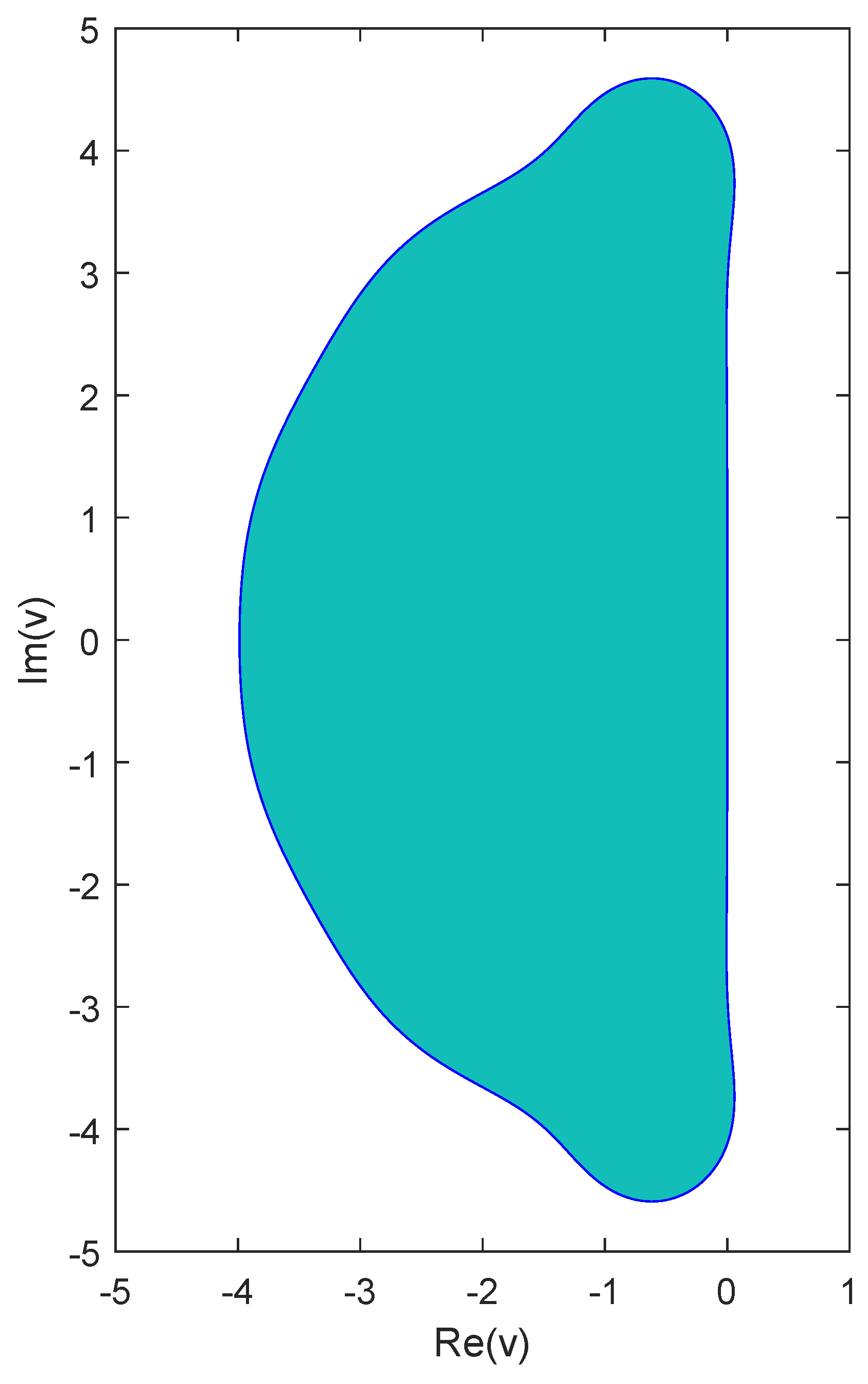

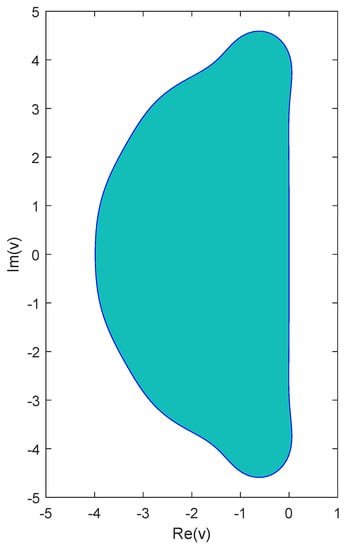

The stability analysis was carried out numerically in a mesh around the origin with , and the stability region is shown in Figure 1, as the grid points that satisfy Definition 2. Furthermore, with a similar procedure performed on the real axis and according to Definition 1, the real stability interval is .

Figure 1.

Stability region of the new Runge–Kutta method of Table 2.

4. Numerical Experiments

4.1. Theoretical Solution and Mass Conservation Law

For this particular case, the theoretical solution becomes

and

which is called density.

4.2. Numerical Solution

We performed a numerical computation of problem (1), utilising the method of lines for . We used Equation (11) for both the initial condition and the boundary conditions. Regarding the semi-discretisation of and , we chose a combination of 10th-order forward, central and backward finite difference schemes. Next, we defined the maximum absolute solution error and the maximum relative mass error of the numerical computation.

The maximum absolute solution error is given by

where denotes the numerical approximation of .

The maximum relative mass error over the region D is given by

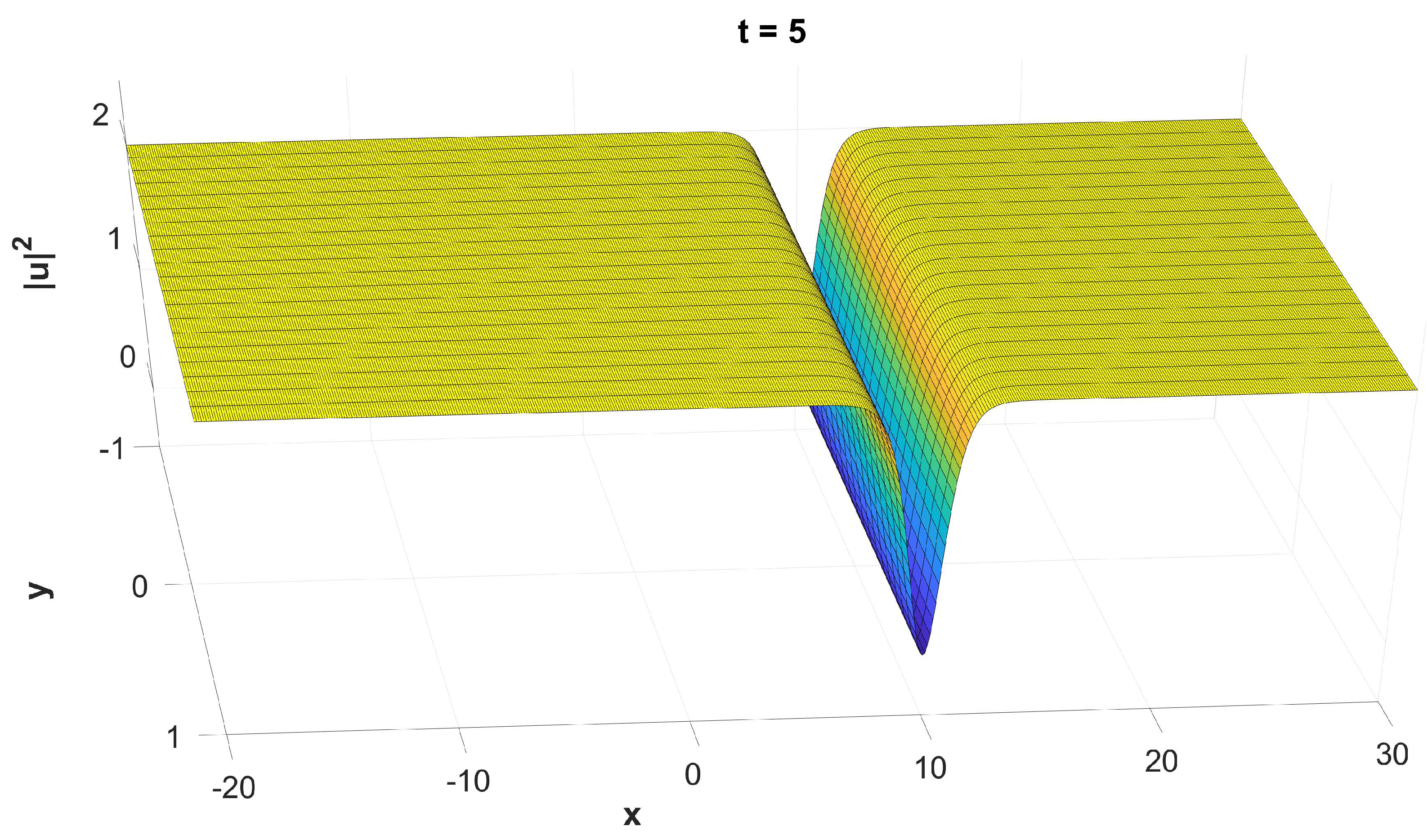

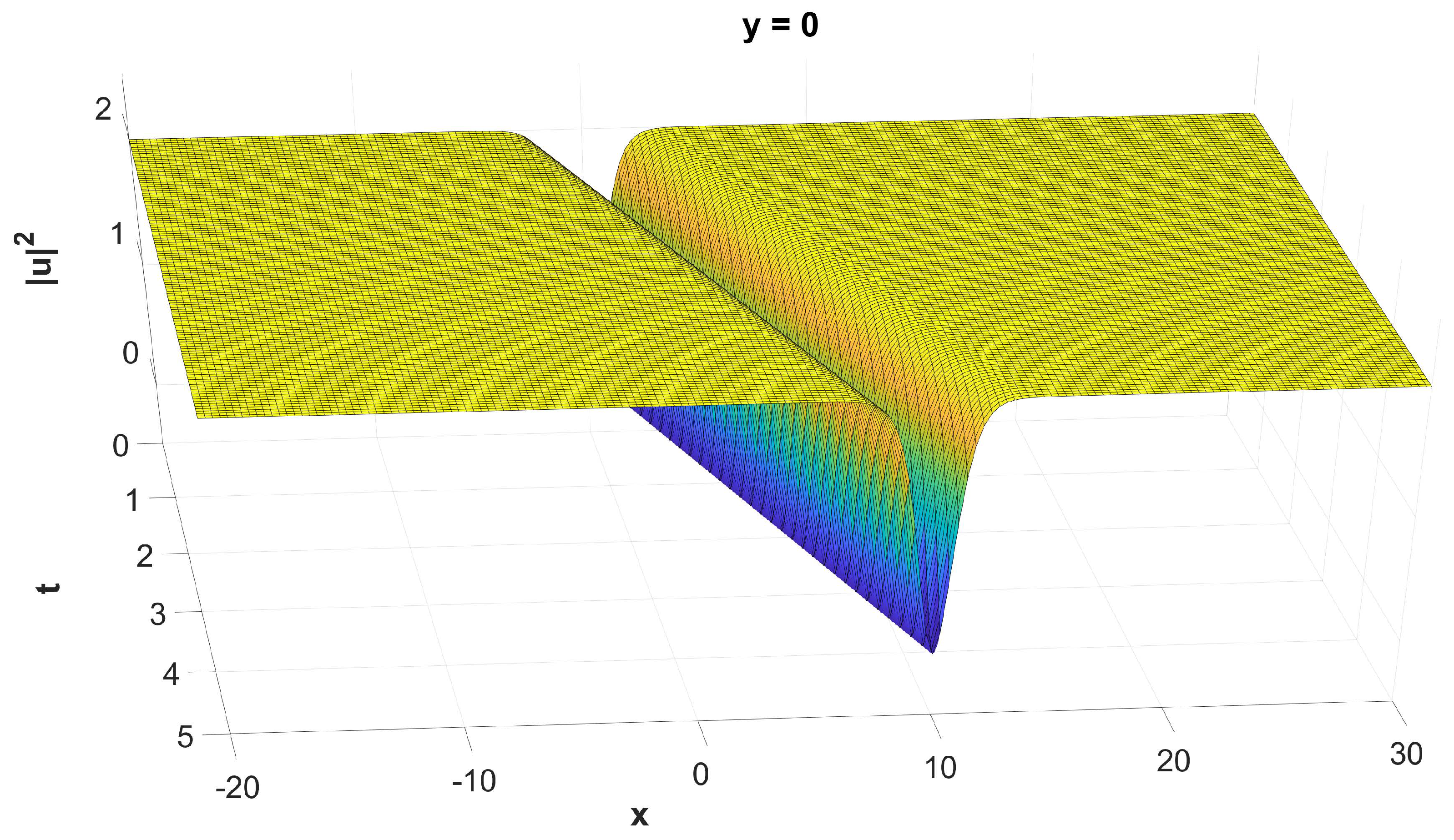

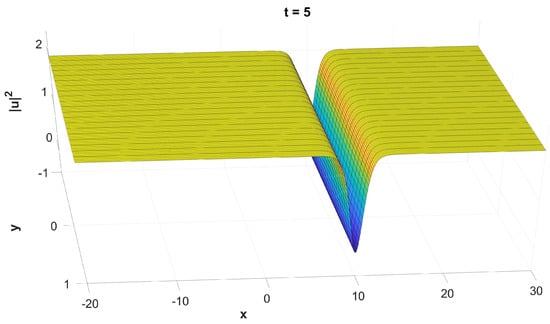

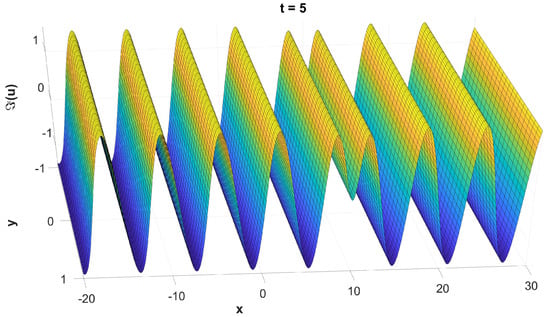

where is the numerical approximation of over the region for , which provides a sufficiently constant density outside of it, as seen in Figure 2.

Figure 2.

The graph of , evaluated numerically.

In Table 3, the maximum absolute solution error and the maximum relative mass error for different step sizes are shown. The is the maximum value that yields a stable solution for the preselected values of and .

Table 3.

The solution error and the mass relative error for different step sizes.

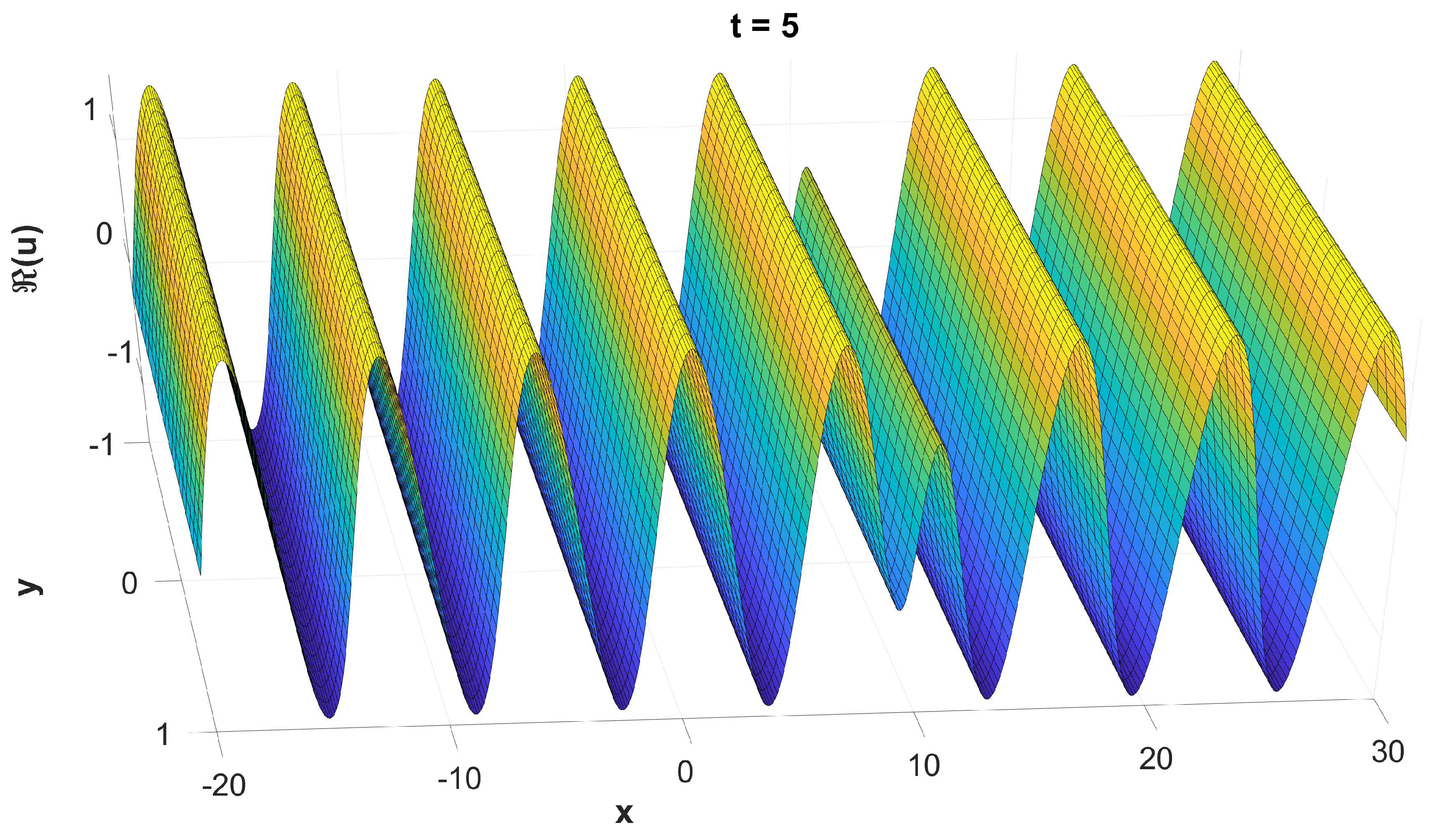

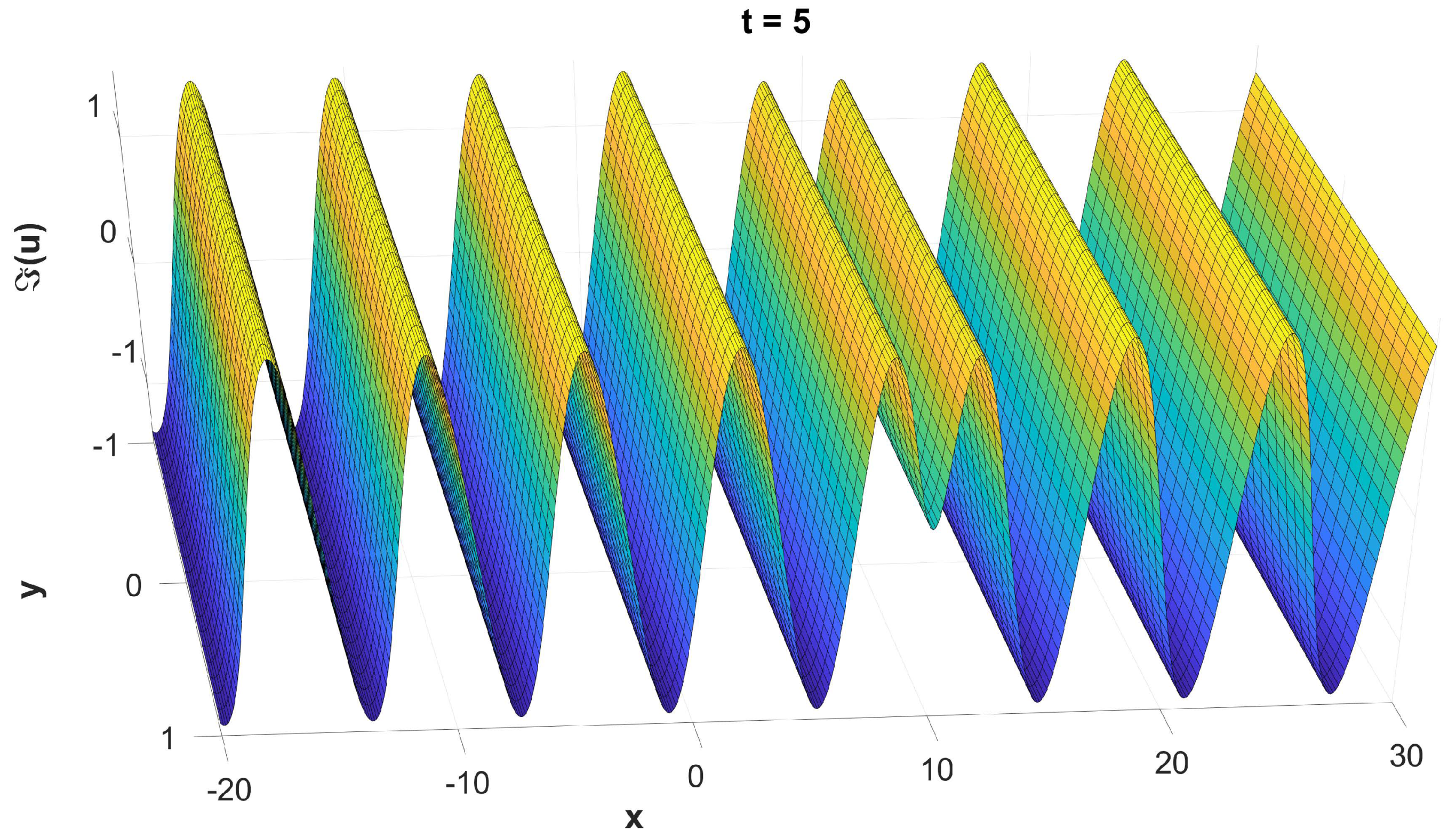

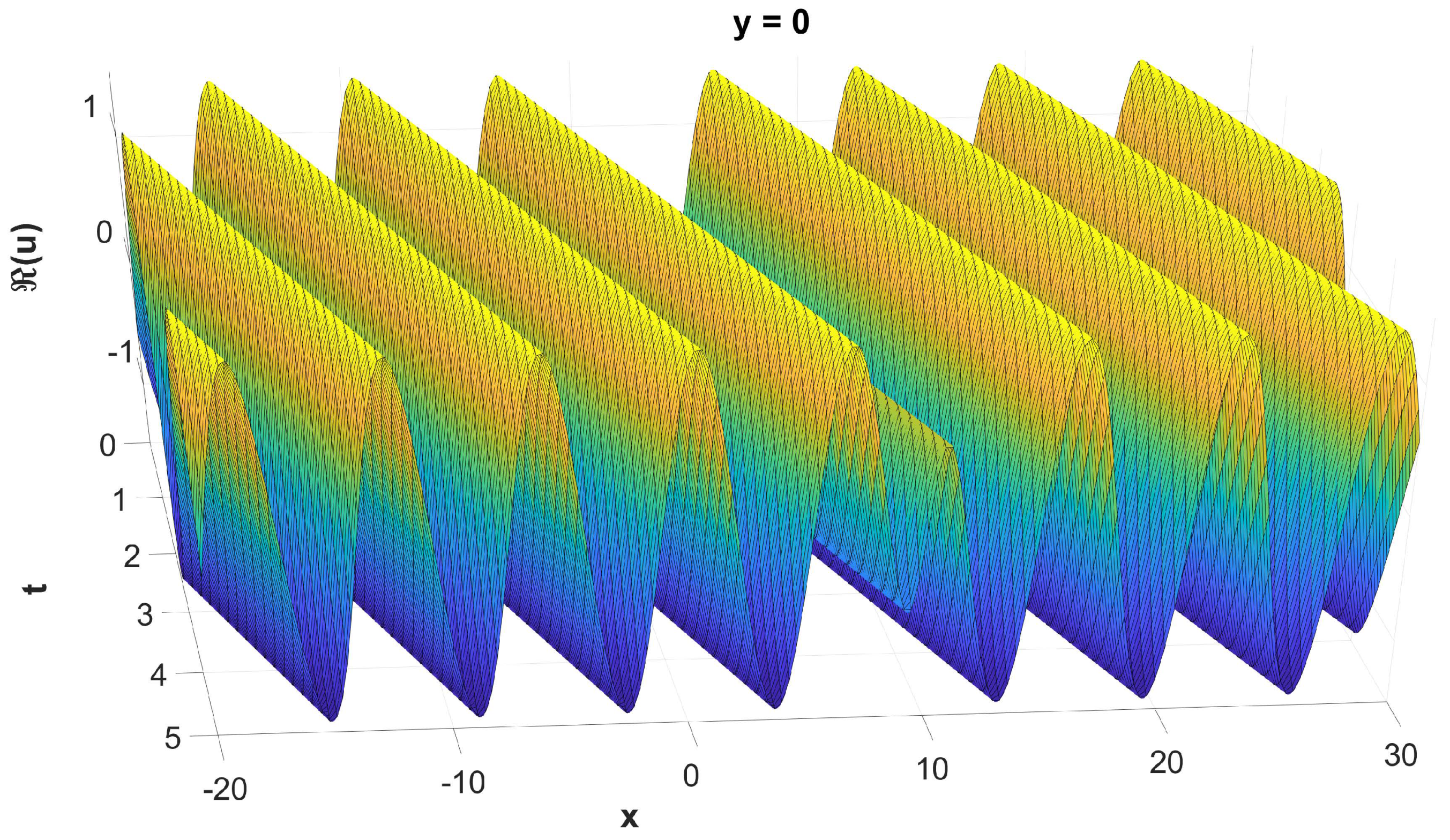

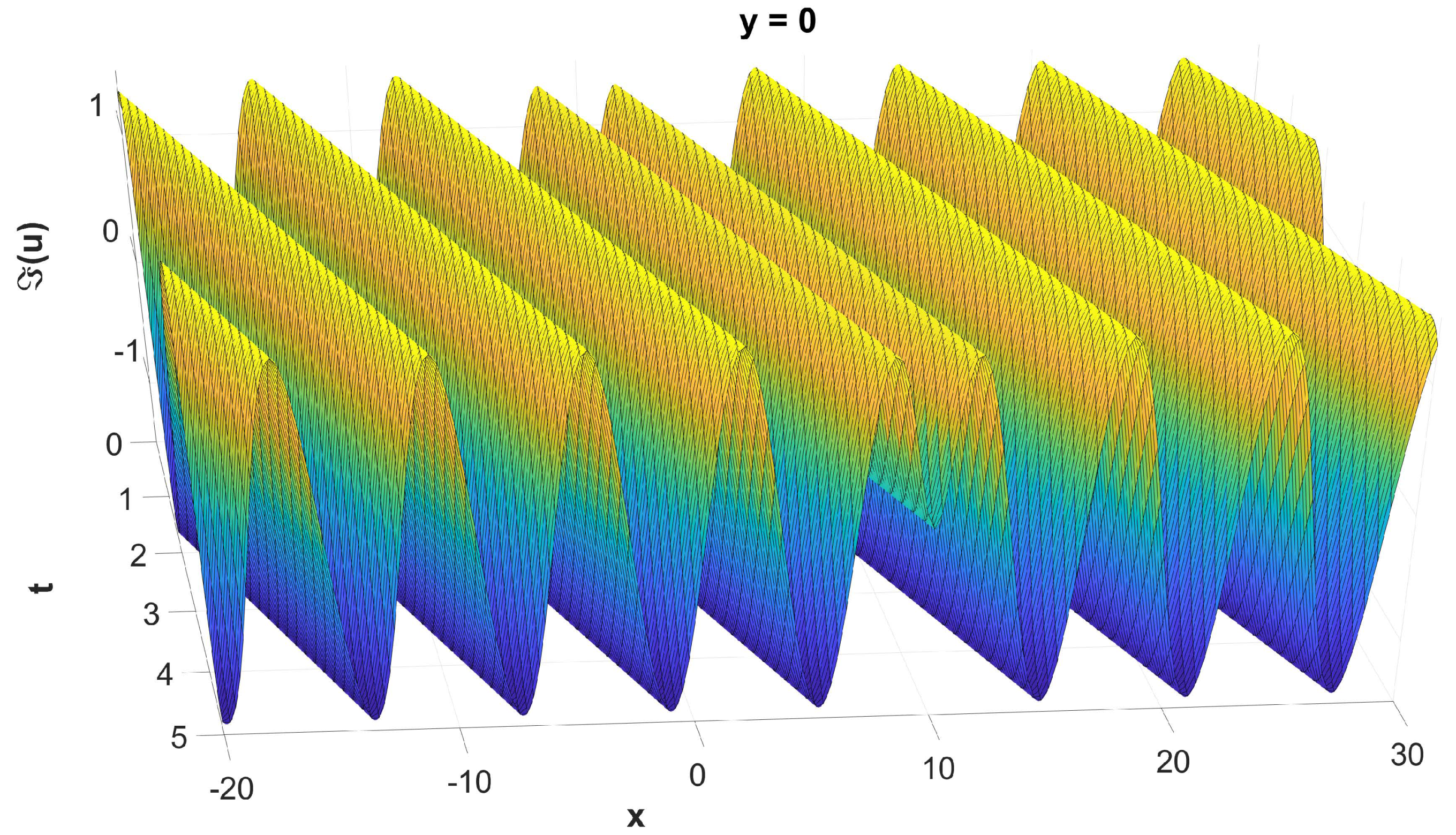

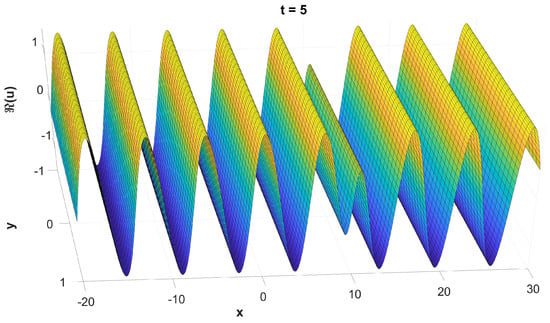

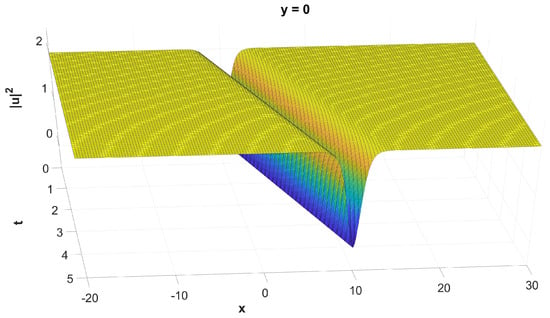

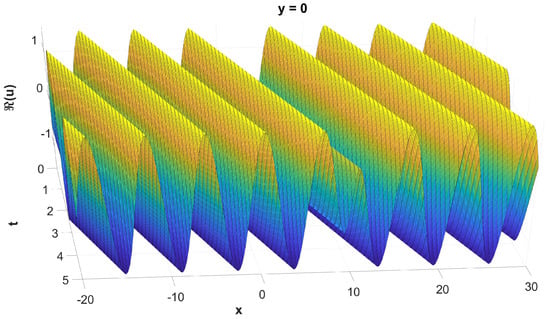

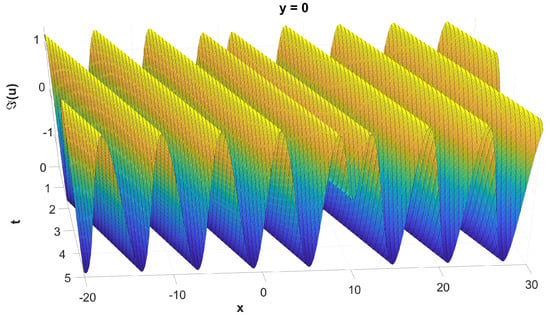

In fact, the errors in Table 3 correspond to the total error of both the finite difference schemes for the semi-discretisation along x and y and the integrator along time t. To eliminate the semi-discretisation error and analyse the performance of the time integrator alone, we used the second derivatives of the solution (11) instead of applying the finite difference schemes. We used and , and the results are presented in Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7.
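The difference between the two implementations comes down to how the second spatial derivatives are obtained. The sketch below approximates a second derivative on a uniform grid with a central stencil in the interior and one-sided forward and backward stencils at the two ends, then compares the result with the exact derivative of a known function. For brevity it uses second-order stencils and the test function sin(x), both assumptions for illustration. The experiments in this paper use 10th-order schemes and the dark soliton solution.

```python
import math

def second_derivative_fd(u, h):
    """Second-order finite difference approximation of u'' on a uniform grid (len(u) >= 4)."""
    n = len(u)
    d2 = [0.0] * n
    for i in range(1, n - 1):
        # Central stencil in the interior.
        d2[i] = (u[i - 1] - 2 * u[i] + u[i + 1]) / h**2
    # One-sided stencils where the central one would leave the grid.
    d2[0] = (2 * u[0] - 5 * u[1] + 4 * u[2] - u[3]) / h**2          # forward
    d2[-1] = (2 * u[-1] - 5 * u[-2] + 4 * u[-3] - u[-4]) / h**2     # backward
    return d2

def max_fd_error(n):
    """Maximum error of the approximation for u(x) = sin(x) on [0, 1] with n grid points."""
    h = 1.0 / (n - 1)
    x = [i * h for i in range(n)]
    u = [math.sin(xi) for xi in x]
    approx = second_derivative_fd(u, h)
    # The exact second derivative is -sin(x), so the error is approx + sin(x).
    return max(abs(a + math.sin(xi)) for a, xi in zip(approx, x))

coarse_error = max_fd_error(51)
fine_error = max_fd_error(101)
```

Replacing `second_derivative_fd` with the analytically known second derivative removes this spatial error entirely, which is the mechanism used above to isolate the time integrator.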

Figure 3.

The graph of , evaluated numerically.

Figure 4.

The graph of , evaluated numerically.

Figure 5.

The graph of , evaluated numerically.

Figure 6.

The graph of , evaluated numerically.

Figure 7.

The graph of , evaluated numerically.

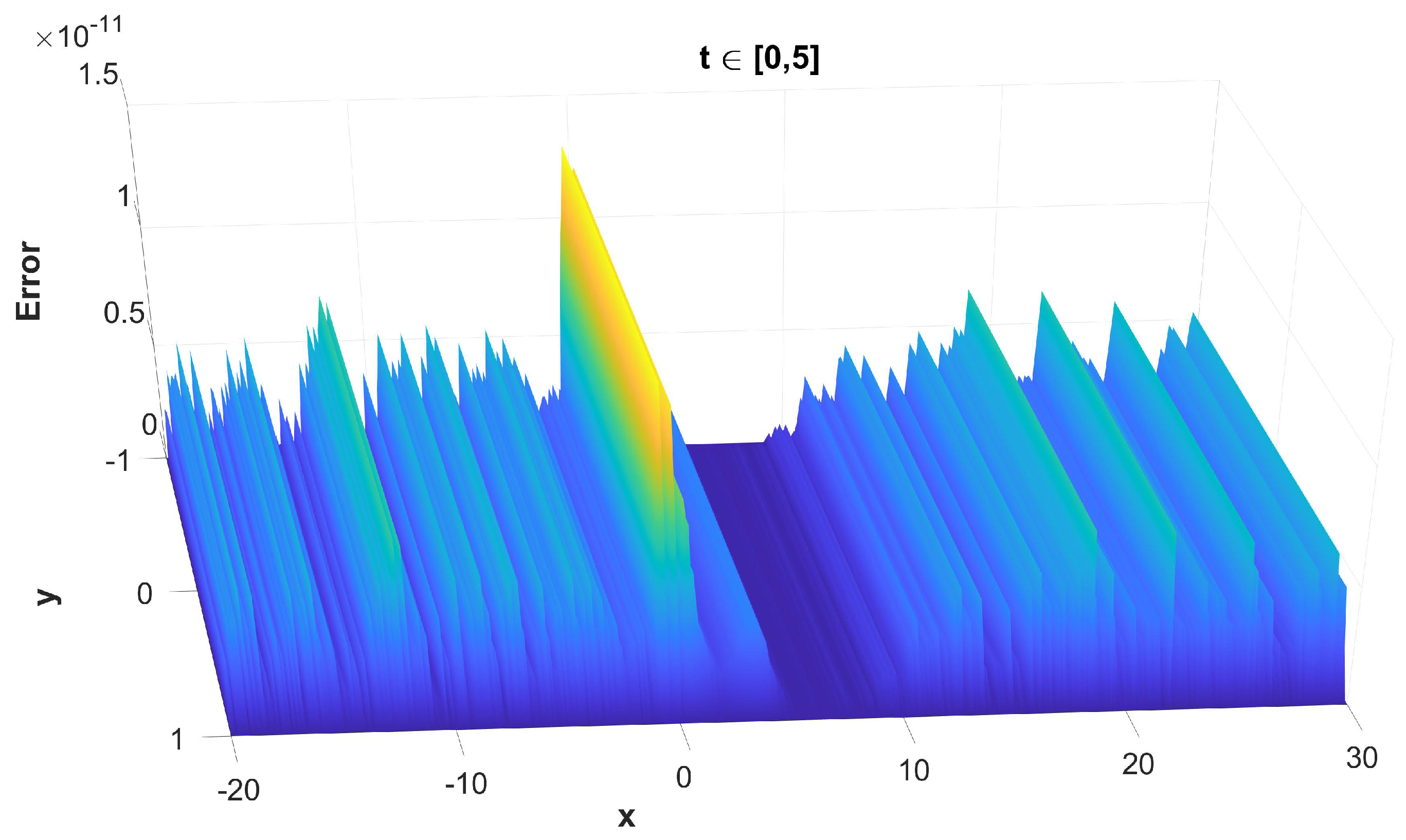

4.3. Error

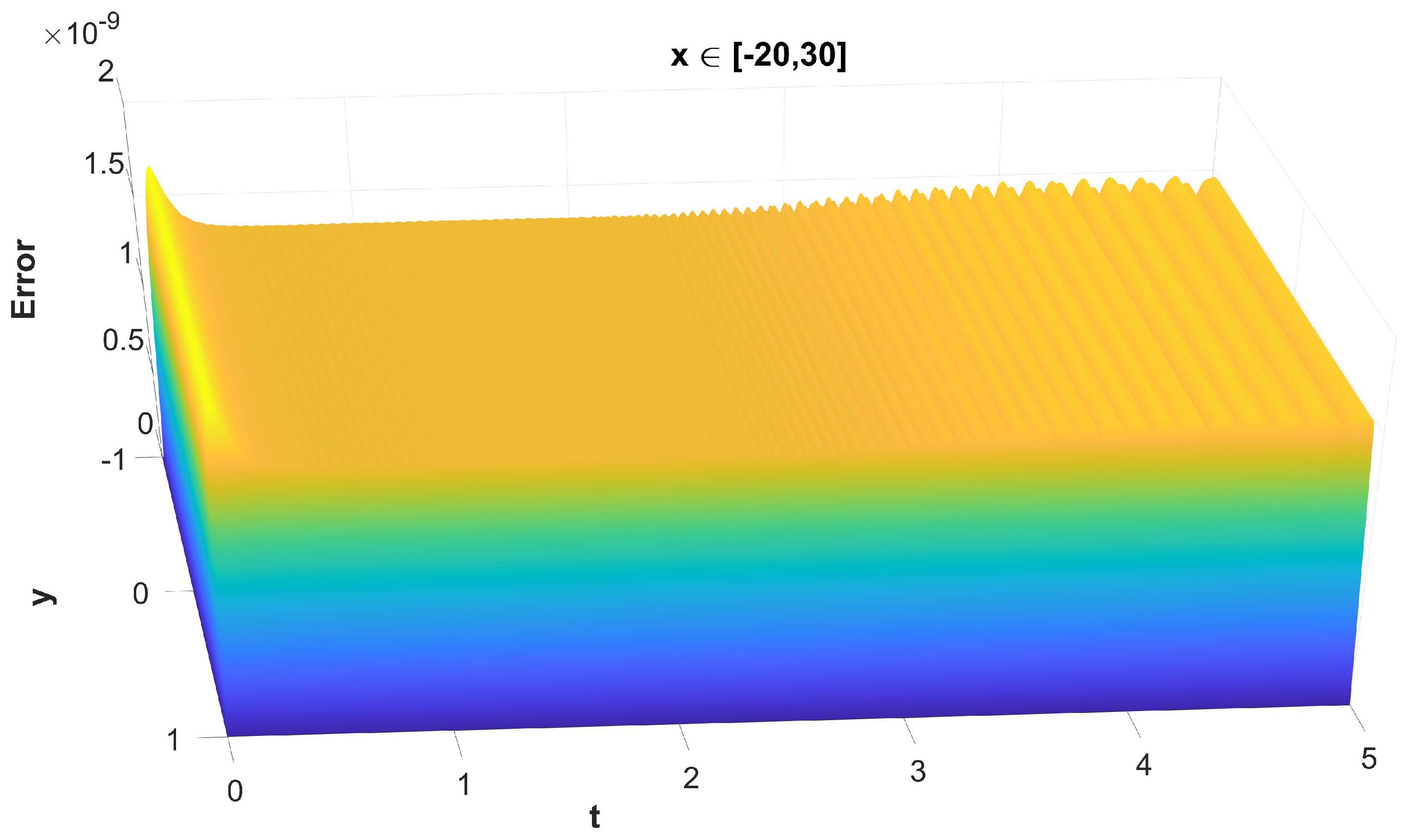

We evaluated the error of the numerical approximation compared to the theoretical solution. We used two different implementations: in the first one, we used the second derivatives of the known solution and we chose and ; in the second one, we used finite difference schemes for the spatial semi-discretisation and we chose and .

More specifically, we present:

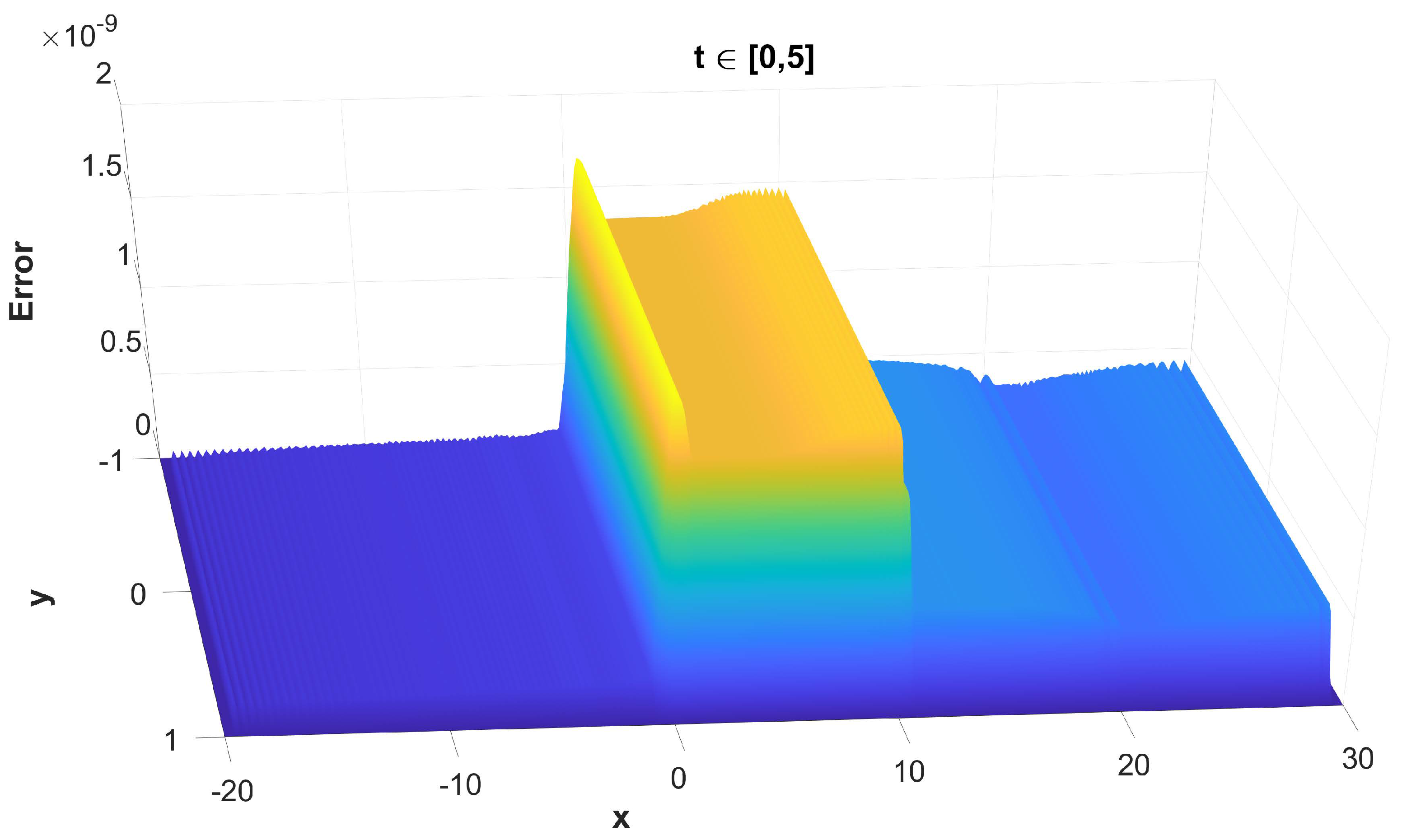

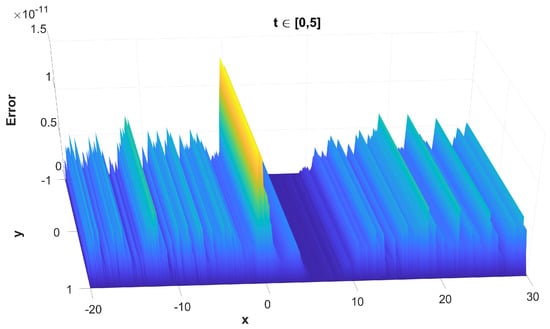

- The maximum, along t, solution error without and with the use of finite difference schemes in Figure 8 and Figure 9, respectively;

Figure 8. The maximum, along t, absolute error of the solution versus x and y without the use of finite difference schemes for the spatial semi-discretisation.

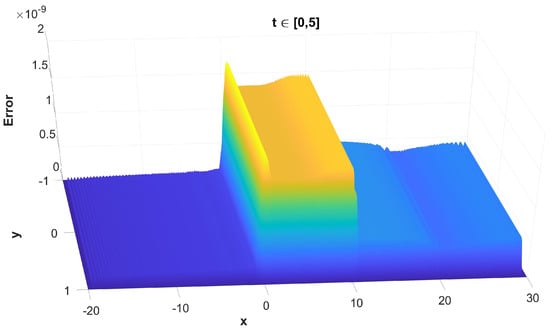

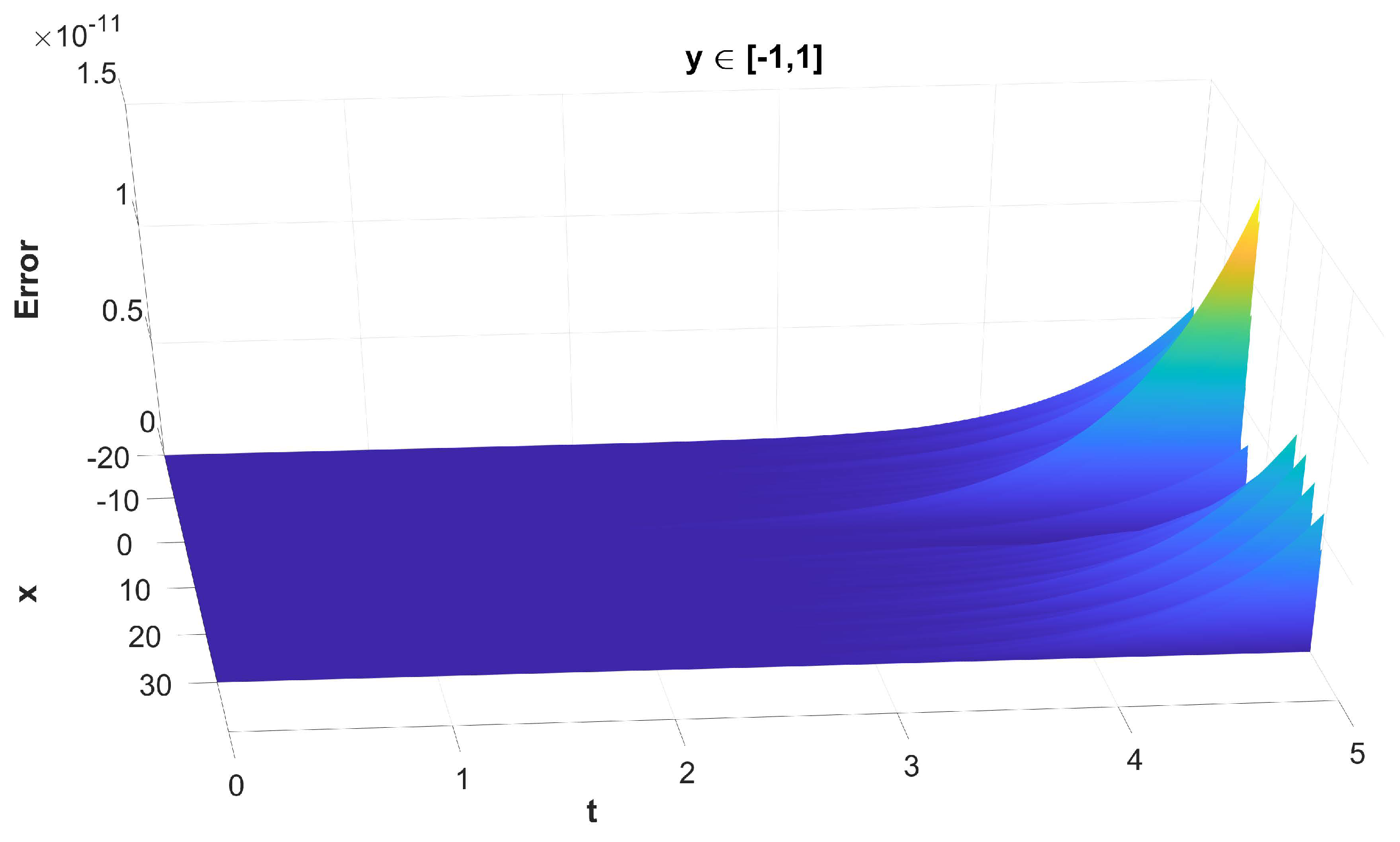

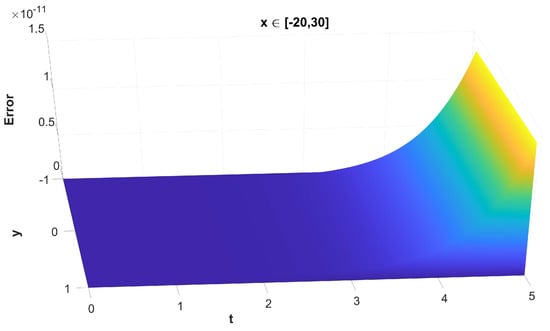

Figure 9. The maximum, along t, absolute error of the solution versus x and y with the use of finite difference schemes for the spatial semi-discretisation.

- The maximum, along y, solution error without and with the use of finite difference schemes in Figure 10 and Figure 11, respectively;

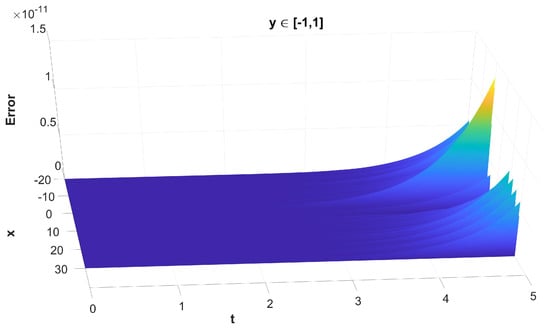

Figure 10. The maximum, along y, absolute error of the solution versus t and x without the use of finite difference schemes for the spatial semi-discretisation.

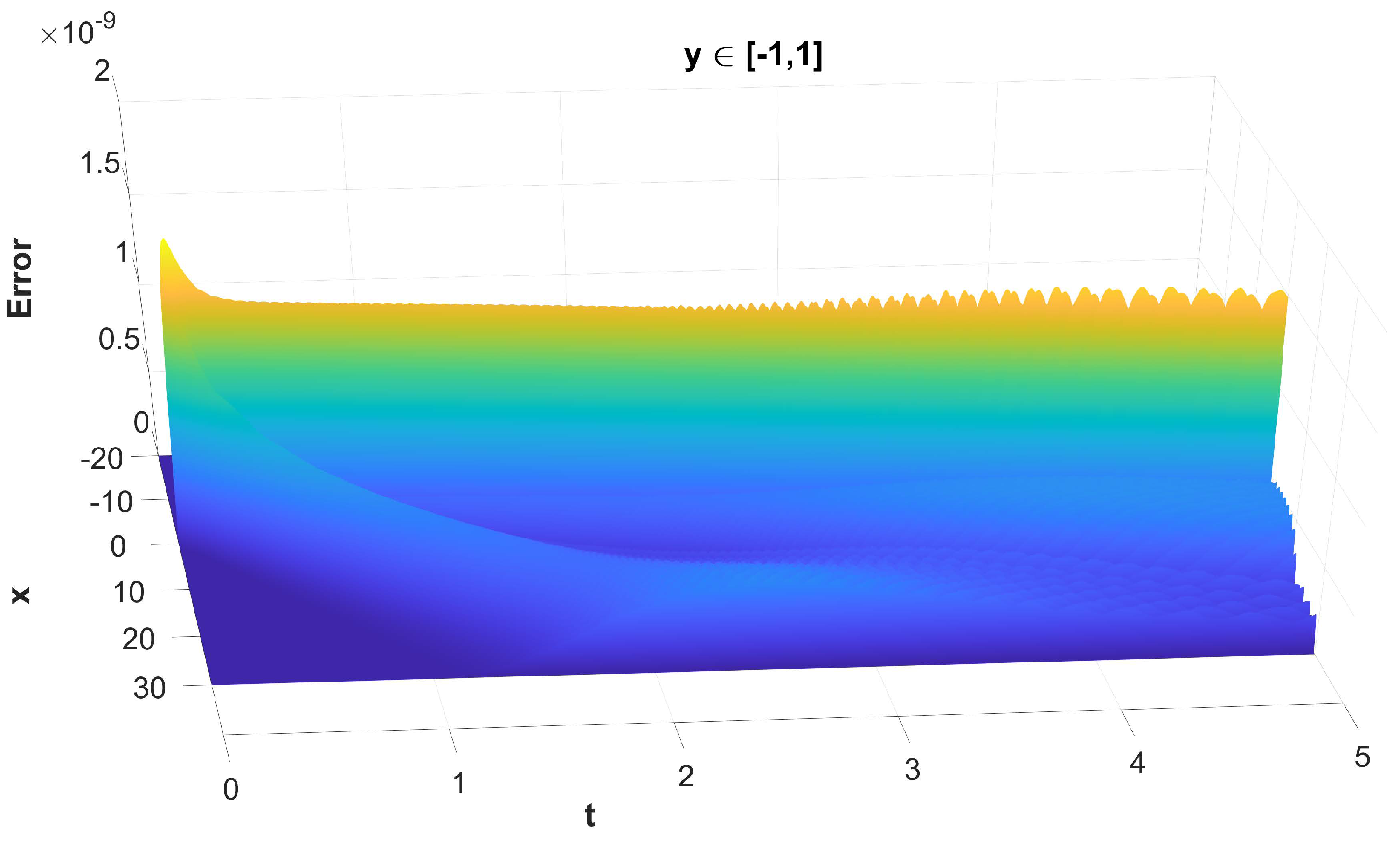

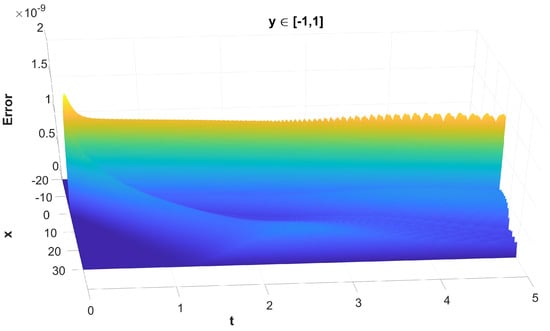

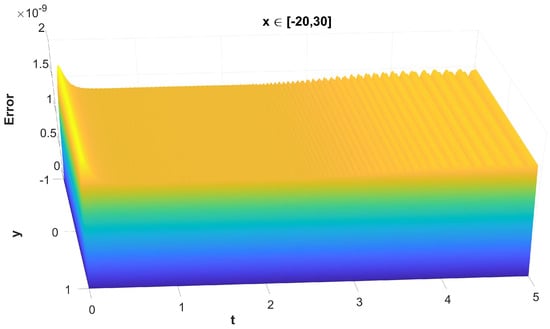

Figure 11. The maximum, along y, absolute error of the solution versus t and x with the use of finite difference schemes for the spatial semi-discretisation.

- The maximum, along x, solution error without and with the use of finite difference schemes in Figure 12 and Figure 13, respectively;

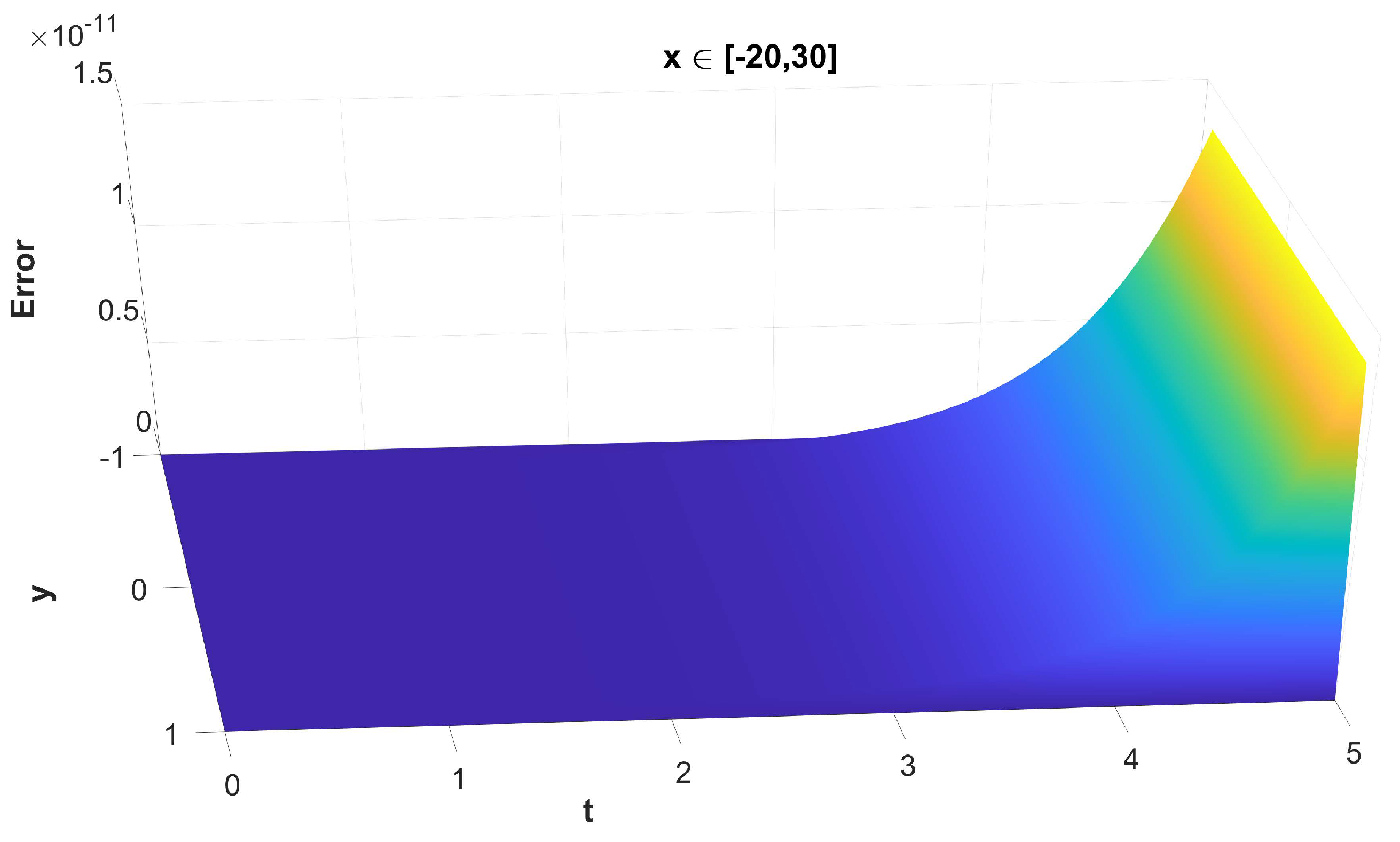

Figure 12. The maximum, along x, absolute error of the solution versus t and y without the use of finite difference schemes for the spatial semi-discretisation.

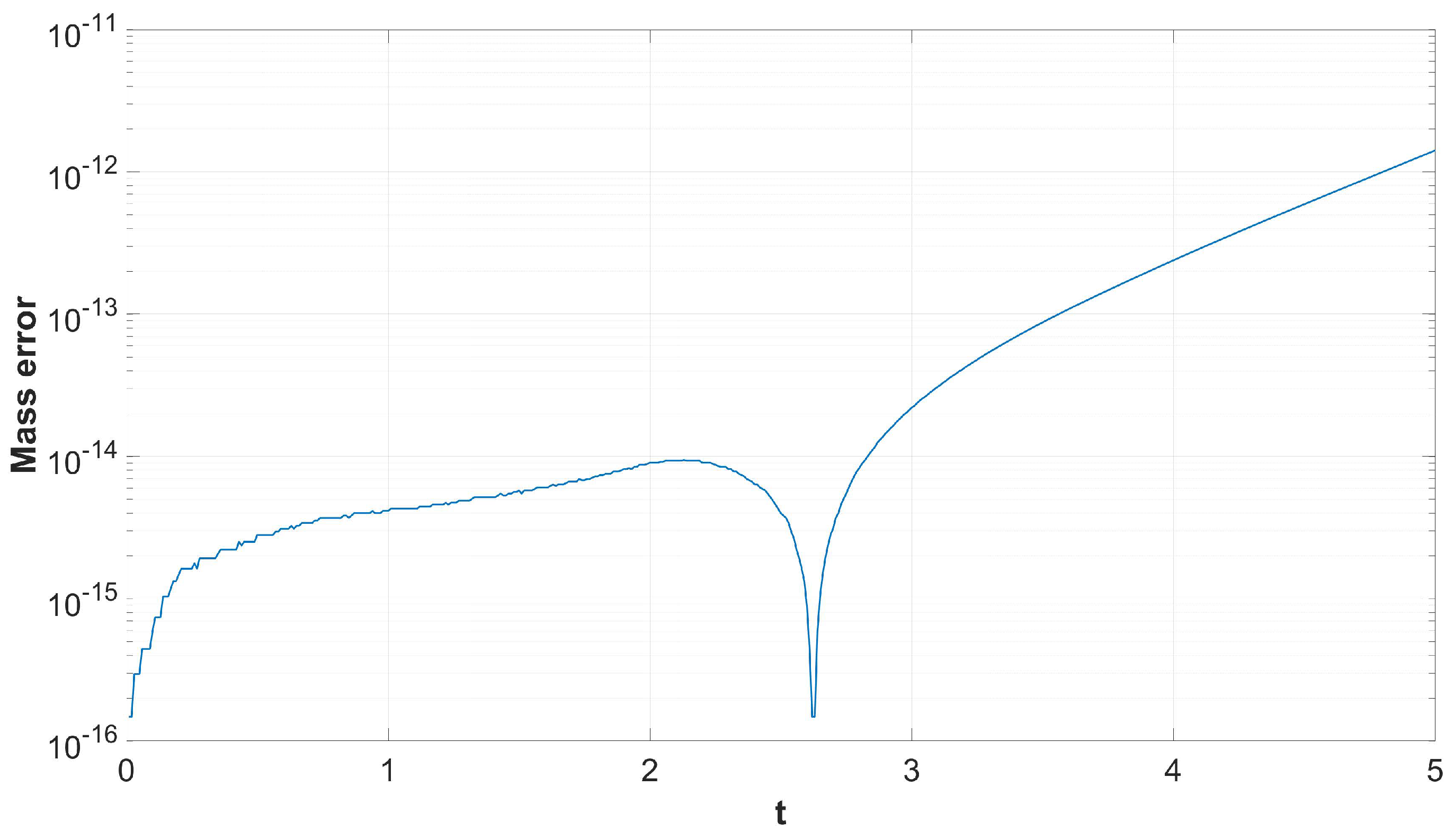

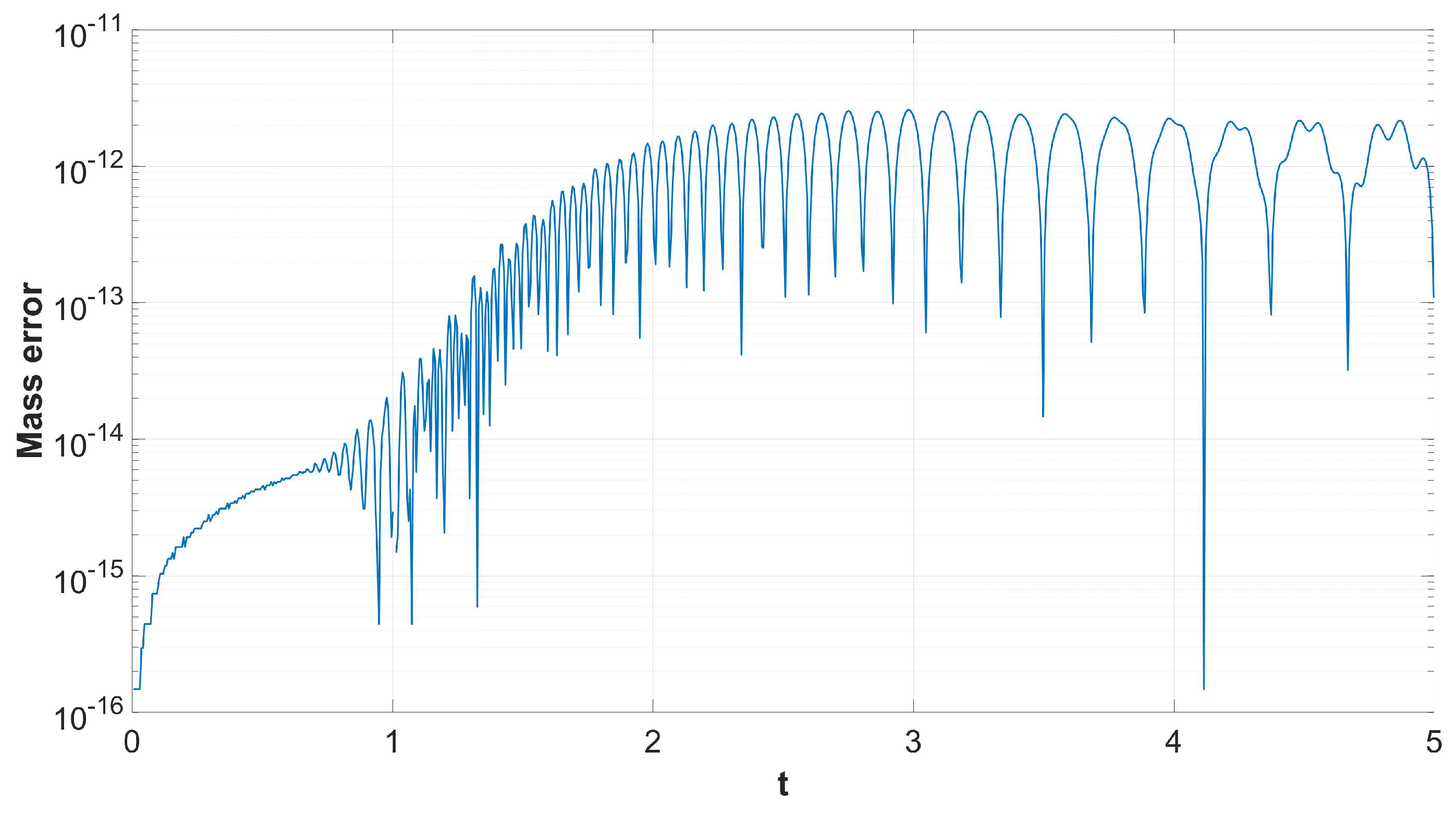

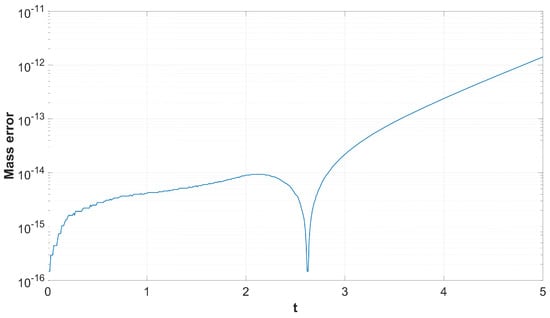

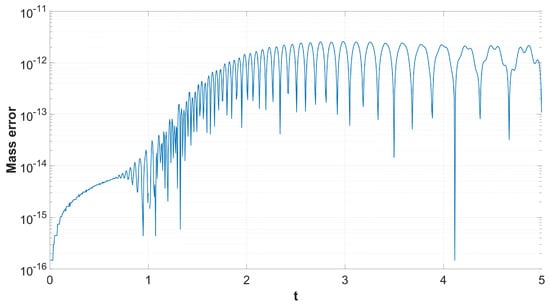

Figure 13. The maximum, along x, absolute error of the solution versus t and y with the use of finite difference schemes for the spatial semi-discretisation.

- The mass error versus t without and with the use of finite difference schemes in Figure 14 and Figure 15, respectively.

Figure 14. The mass error versus t without the use of finite difference schemes for the spatial semi-discretisation.

Figure 15. The mass error versus t with the use of finite difference schemes for the spatial semi-discretisation.

It is important to understand that, while Figure 8, Figure 10, Figure 12 and Figure 14 represent the error of the time-stepper alone, this is not the case for Figure 9, Figure 11, Figure 13 and Figure 15, where we observe the total error of the time-stepper in addition to the error of the space semi-discretisation finite difference scheme. Furthermore, the error of the finite difference scheme is dominant, and this is why the error in the second case is larger by approximately two orders of magnitude. We observe that, without the use of finite difference schemes for the spatial semi-discretisation, the maximum error is located near the area of the initial centre of the dark soliton , without being propagated along with the dark soliton centre itself, as seen in Figure 8. However, with the use of finite difference schemes for spatial semi-discretisation, the error is propagated differently along x and y, as seen in Figure 9, mainly along the track of the soliton peak . Regarding the error propagation in time, the error without the finite difference schemes gradually increases, as seen in Figure 10 and Figure 12, while with the finite difference schemes it rapidly increases in the first time steps, then slightly decreases and stays at the same order of magnitude with small oscillations, as seen in Figure 11 and Figure 13. This is due to the effect of the space semi-discretisation scheme on the error, in combination with the time integrator. The oscillations are of a numerical nature and are a known phenomenon caused by the linear finite difference schemes applied in the spatial semi-discretisation. The same oscillations are also observed in the mass error with finite difference schemes, as seen in Figure 15, but are absent in Figure 14, where no finite difference schemes are applied.

4.4. Efficiency

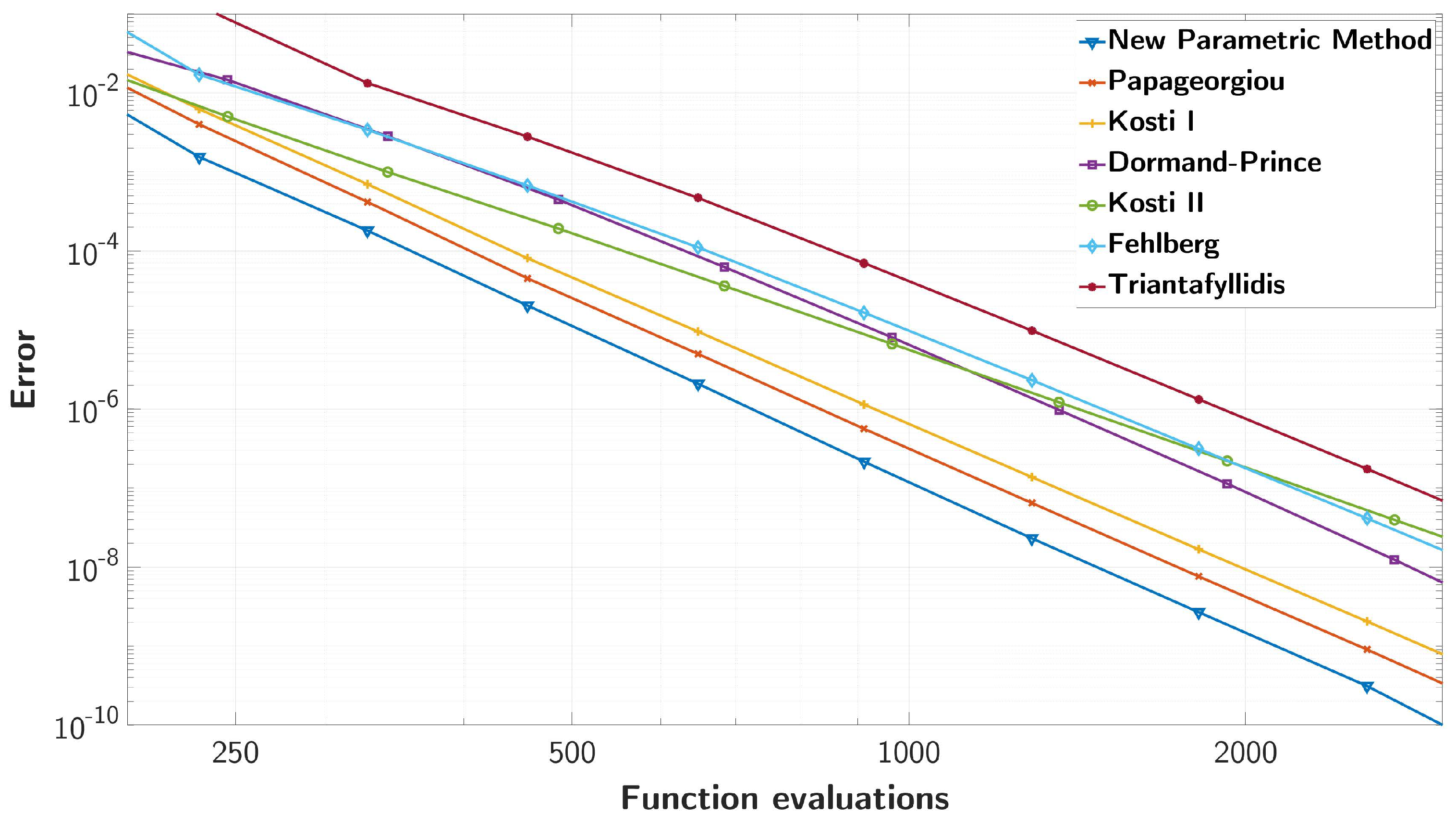

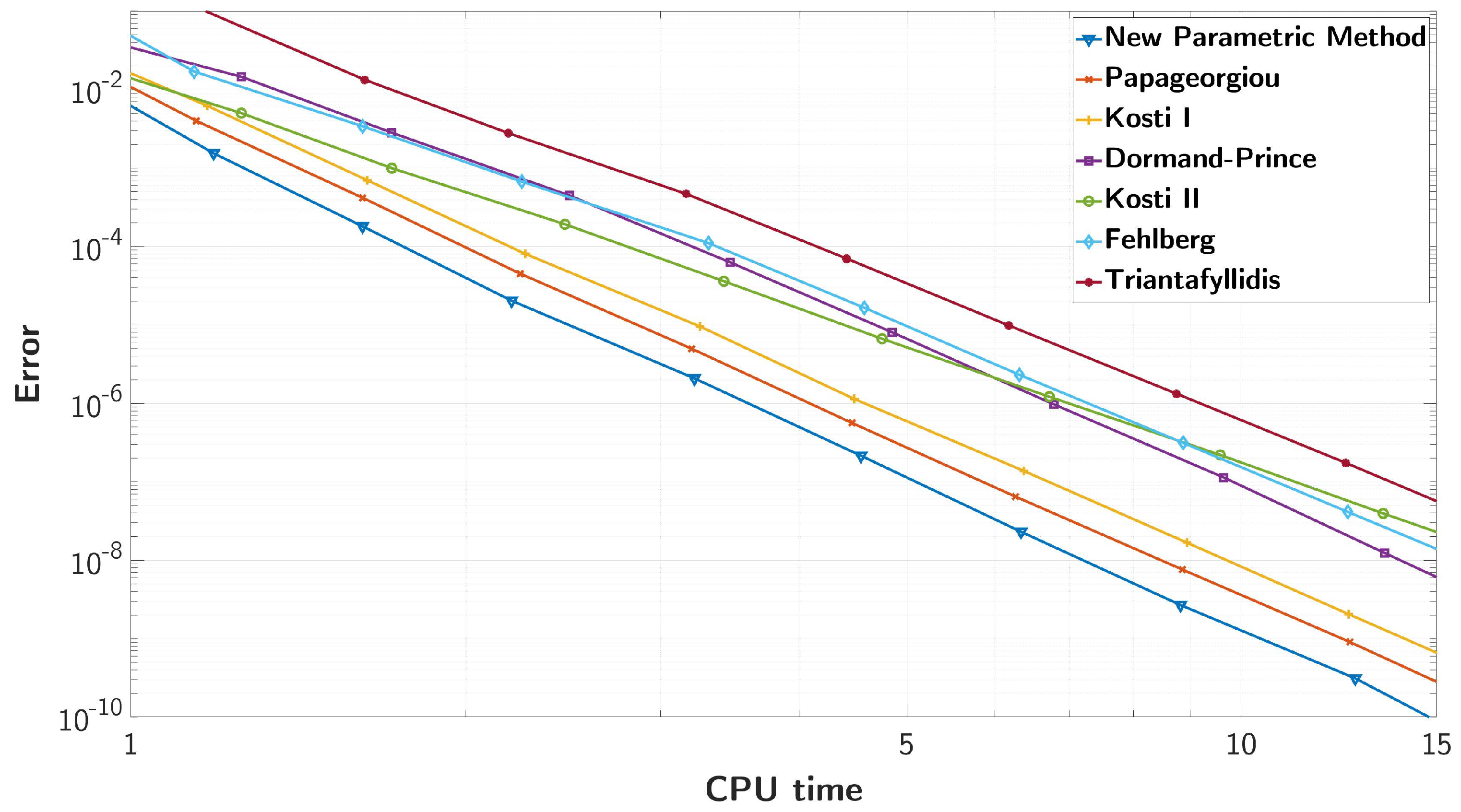

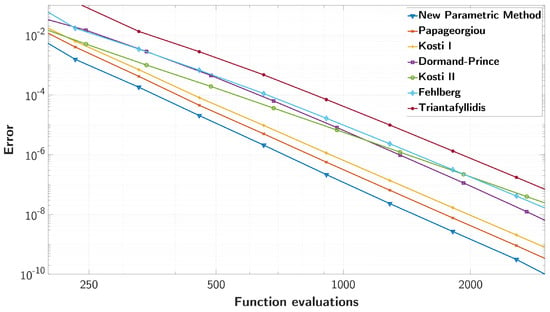

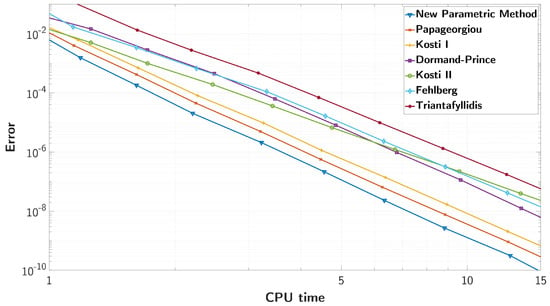

We compared the efficiency of the new method with that of other methods from the literature, and present the results in Figure 16 and Figure 17.

Figure 16.

The maximum absolute error of the solution versus the function evaluations, for all compared methods.

Figure 17.

The maximum absolute error of the solution versus the CPU time, for all compared methods.

The compared methods are presented below:

- The new parametric method (Table 2)

- The method of Papageorgiou et al. [24]

- The method of Kosti et al. (I) [9]

- The method of Dormand et al. [23]

- The method of Kosti et al. (II) [10]

- The method of Fehlberg et al. [23]

- The method of Triantafyllidis et al. [11]

The efficiency of all compared methods in terms of the maximum absolute solution error, as expressed by (13), versus the function evaluations is presented in Figure 16. The order of accuracy of each method is represented by the slope of its corresponding efficiency line. Indeed, we verify that, except for two methods with fifth order and, thus, a smaller slope, five methods have sixth order, for which the lines are almost parallel. Additionally, we present the maximum absolute solution error versus the CPU time in seconds in Figure 17. In order to avoid fluctuations, we repeated each solution 10 times and used the median CPU time. We observe that the two graphs are similar, as the main contributor to the total computation time is the function evaluations. Furthermore, we see that the new optimised method is more efficient than all the other methods for all error orders.
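A comparison of this kind can be set up with a small harness that counts right-hand-side evaluations and records the median CPU time over repeated runs. The sketch below is a hypothetical illustration: it compares Heun's second-order method with the classical RK4 method on the circular-orbit problem y' = iy, not the integrators listed above, and all names are assumptions.

```python
import cmath
import statistics
import time

class CountingRHS:
    """Wraps a right-hand side f(t, y) and counts how often it is evaluated."""
    def __init__(self, f):
        self.f = f
        self.calls = 0

    def __call__(self, t, y):
        self.calls += 1
        return self.f(t, y)

def heun_step(f, t, y, h):
    """Heun's method: 2 function evaluations per step, second order."""
    k1 = f(t, y)
    k2 = f(t + h, y + h * k1)
    return y + h / 2 * (k1 + k2)

def rk4_step(f, t, y, h):
    """Classical RK4: 4 function evaluations per step, fourth order."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def measure(step, n_steps, repeats=10):
    """Return (error at t = 1, function evaluations per run, median CPU time in seconds)."""
    cpu_times = []
    for _ in range(repeats):
        rhs = CountingRHS(lambda t, y: 1j * y)
        start = time.process_time()
        y, h = 1.0 + 0j, 1.0 / n_steps
        for i in range(n_steps):
            y = step(rhs, i * h, y, h)
        cpu_times.append(time.process_time() - start)
    return abs(y - cmath.exp(1j)), rhs.calls, statistics.median(cpu_times)

# Equal cost: 50 RK4 steps and 100 Heun steps both use 200 function evaluations.
rk4_error, rk4_calls, rk4_cpu = measure(rk4_step, 50)
heun_error, heun_calls, heun_cpu = measure(heun_step, 100)
```

Plotting the error against the evaluation count for several step sizes on logarithmic axes gives one efficiency line per method, with the order appearing as the slope.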

5. Discussion

The numerical results are robust, exhibiting high efficiency across all step sizes, with and without the use of finite difference schemes for the spatial semi-discretisation. Furthermore, the error propagation in time is typical of explicit RK methods. The time integrator error is better showcased when no finite difference schemes are used, because with finite difference schemes the error of the spatial discretisation is dominant.

In general, the development of new RK methods that are more efficient than established methods in the literature is a challenging task, as one step of the method construction involves the solution of a system of numerous highly nonlinear equations. This is especially true for high-order methods that require a high number of stages, where the only way to solve the system is to use simplifying assumptions and/or fixed values for a set of coefficients. To our knowledge, there have been no explicit RK methods with order six or higher produced without the use of pre-determined coefficient values or simplifying assumptions. The solution with hand calculations is impossible, even for methods that use some assumptions, and even when using computer algebra software, as the solution is limited by the available computer memory and computational power. Leaving some variables free until the end of the system solution increases the complexity even further, but allows for the parameterisation of the method. Here, we managed to find a combination of a minimum number of fixed coefficients that still yields a solution with four free coefficients. The latter provide the method with some adaptability due to the four degrees of freedom. This versatility permits further optimisation, allowing for the construction of a method tailored to the nonlinear Schrödinger equation. The proposed methodology is general and can be applied to many problems, without being limited to linear problems or problems with oscillatory/periodic solutions.

Although the methodology offers satisfactory results, it also has limitations, namely the need to manually select the initially fixed coefficients and, most importantly, the actual fixed coefficients themselves, which hinder the optimisation potential of the method’s accuracy. Ideally, we aim to develop an optimisation process that concurrently maximises the number of free coefficients, minimises the number of fixed coefficients, and leads to a solvable system. Additionally, in our future work, we could combine this technique with other established techniques for better results, e.g., fitting techniques for problems with periodic solutions.

6. Conclusions

In this paper, we investigated the numerical solution of the (2+1)-dimensional nonlinear Schrödinger equation. We developed a parametric sixth-order eight-stage explicit Runge–Kutta method with four of its coefficients treated as free parameters/degrees of freedom, and we provided the full process of constructing the method and the explicit formulas of all other coefficients. We optimised the new parametric method through numerical testing to obtain the optimal Runge–Kutta method for this problem. We performed stability analysis, and we utilised an exact dark soliton solution to measure the global error and the mass error of the new method. We also compared the efficiency of the new method with that of other numerical integrators, revealing the superiority of the new method.

Author Contributions

Conceptualisation, Z.A.A.; formal analysis, Z.A.A., A.A.K. and M.A.R.; investigation, Z.A.A., A.A.K. and M.A.R.; methodology, Z.A.A., A.A.K. and M.A.R.; verification, Z.A.A., A.A.K. and M.A.R.; writing—original draft, Z.A.A.; writing—review and editing, Z.A.A., A.A.K. and M.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

We thank the anonymous reviewers for their useful comments and remarks.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moloney, J.V.; Newell, A.C. Nonlinear optics. Phys. D Nonlinear Phenom. 1990, 44, 1–37.

- Pitaevskii, L.; Stringari, S. Bose-Einstein Condensation; Oxford University Press: Oxford, UK, 2003.

- Malomed, B. Multi-Component Bose-Einstein Condensates: Theory. In Emergent Nonlinear Phenomena in Bose-Einstein Condensates: Theory and Experiment; Springer: Berlin/Heidelberg, Germany, 2008; pp. 287–305.

- Guardia, M.; Hani, Z.; Haus, E.; Maspero, A.; Procesi, M. Strong nonlinear instability and growth of Sobolev norms near quasiperiodic finite gap tori for the 2D cubic NLS equation. J. Eur. Math. Soc. 2022. published online first.

- Tsitoura, F.; Anastassi, Z.A.; Marzuola, J.L.; Kevrekidis, P.G.; Frantzeskakis, D.J. Dark Solitons Potential Nonlinearity Steps. Phys. Rev. A 2016, 94, 063612.

- Tsitoura, F.; Anastassi, Z.A.; Marzuola, J.L.; Kevrekidis, P.G.; Frantzeskakis, D.J. Dark soliton scattering in symmetric and asymmetric double potential barriers. Phys. Lett. A 2017, 381, 2514–2520.

- Feng, D.; Jiao, J.; Jiang, G. Optical solitons and periodic solutions of the (2+1)-dimensional nonlinear Schrödinger’s equation. Phys. Lett. A 2018, 382, 2081–2084.

- Fang, Y.; Yang, Y.; You, X.; Ma, L. Modified THDRK methods for the numerical integration of the Schrödinger equation. Int. J. Mod. Phys. C 2020, 31, 2050149.

- Kosti, A.A.; Colreavy-Donnelly, S.; Caraffini, F.; Anastassi, Z.A. Efficient Computation of the Nonlinear Schrödinger Equation with Time-Dependent Coefficients. Mathematics 2020, 8, 374.

- Kosti, A.A.; Anastassi, Z.A.; Simos, T.E. An optimized explicit Runge–Kutta method with increased phase-lag order for the numerical solution of the Schrödinger equation and related problems. J. Math. Chem. 2010, 47, 315.

- Triantafyllidis, T.V.; Anastassi, Z.A.; Simos, T.E. Two optimized Runge–Kutta methods for the solution of the Schrödinger equation. MATCH Commun. Math. Comput. Chem. 2008, 60, 3.

- Zhang, Y.; Fang, Y.; You, X.; Liu, G. Trigonometrically-fitted multi-derivative linear methods for the resonant state of the Schrödinger equation. J. Math. Chem. 2018, 56, 1250–1261.

- Shokri, A.; Mehdizadeh Khalsaraei, M. A new implicit high-order six-step singularly P-stable method for the numerical solution of Schrödinger equation. J. Math. Chem. 2021, 59, 224–249.

- Shokri, A.; Vigo-Aguiar, J.; Mehdizadeh Khalsaraei, M.; Garcia-Rubio, R. A new implicit six-step P-stable method for the numerical solution of Schrödinger equation. J. Math. Chem. 2019, 97, 802–817.

- Obaidat, S.; Mesloub, S. A New Explicit Four-Step Symmetric Method for Solving Schrödinger’s Equation. Mathematics 2019, 7, 1124.

- Jerbi, H.; Ben Aoun, S.; Omri, M.; Simos, T.E.; Tsitouras, C. A Neural Network Type Approach for Constructing Runge–Kutta Pairs of Orders Six and Five That Perform Best on Problems with Oscillatory Solutions. Mathematics 2022, 10, 827.

- Kovalnogov, V.N.; Fedorov, R.V.; Khakhalev, Y.A.; Simos, T.E.; Tsitouras, C. A Neural Network Technique for the Derivation of Runge–Kutta Pairs Adjusted for Scalar Autonomous Problems. Mathematics 2021, 9, 1842.

- Anastassi, Z.A.; Kosti, A.A. A 6(4) optimized embedded Runge–Kutta–Nyström pair for the numerical solution of periodic problems. J. Comput. Appl. Math. 2015, 275, 311–320.

- Kosti, A.A.; Anastassi, Z.A. Explicit almost P-stable Runge–Kutta–Nyström methods for the numerical solution of the two-body problem. Comput. Appl. Math. 2015, 34, 647–659.

- Demba, M.; Senu, N.; Ismail, F. A 5(4) Embedded Pair of Explicit Trigonometrically-Fitted Runge–Kutta–Nyström Methods for the Numerical Solution of Oscillatory Initial Value Problems. Math. Comput. Appl. 2016, 21, 46.

- Ahmad, N.A.; Senu, N.; Ismail, F. Phase-Fitted and Amplification-Fitted Higher Order Two-Derivative Runge–Kutta Method for the Numerical Solution of Orbital and Related Periodical IVPs. Math. Probl. Eng. 2017, 2017, 1871278.

- Ramos, H.; Rufai, M.A. An adaptive one-point second-derivative Lobatto-type hybrid method for solving efficiently differential systems. Int. J. Comput. Math. 2022, 99, 1687–1705.

- Butcher, J.C. Trees and numerical methods for ordinary differential equations. Numer. Alg. 2010, 53, 153–170.

- Papageorgiou, G.; Tsitouras, C.; Papakostas, S.N. Runge-Kutta pairs for periodic initial value problems. Computing 1993, 51, 151–163.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).