Door State Recognition Method for Wall Reconstruction from Scanned Scene in Point Clouds

Abstract

1. Introduction

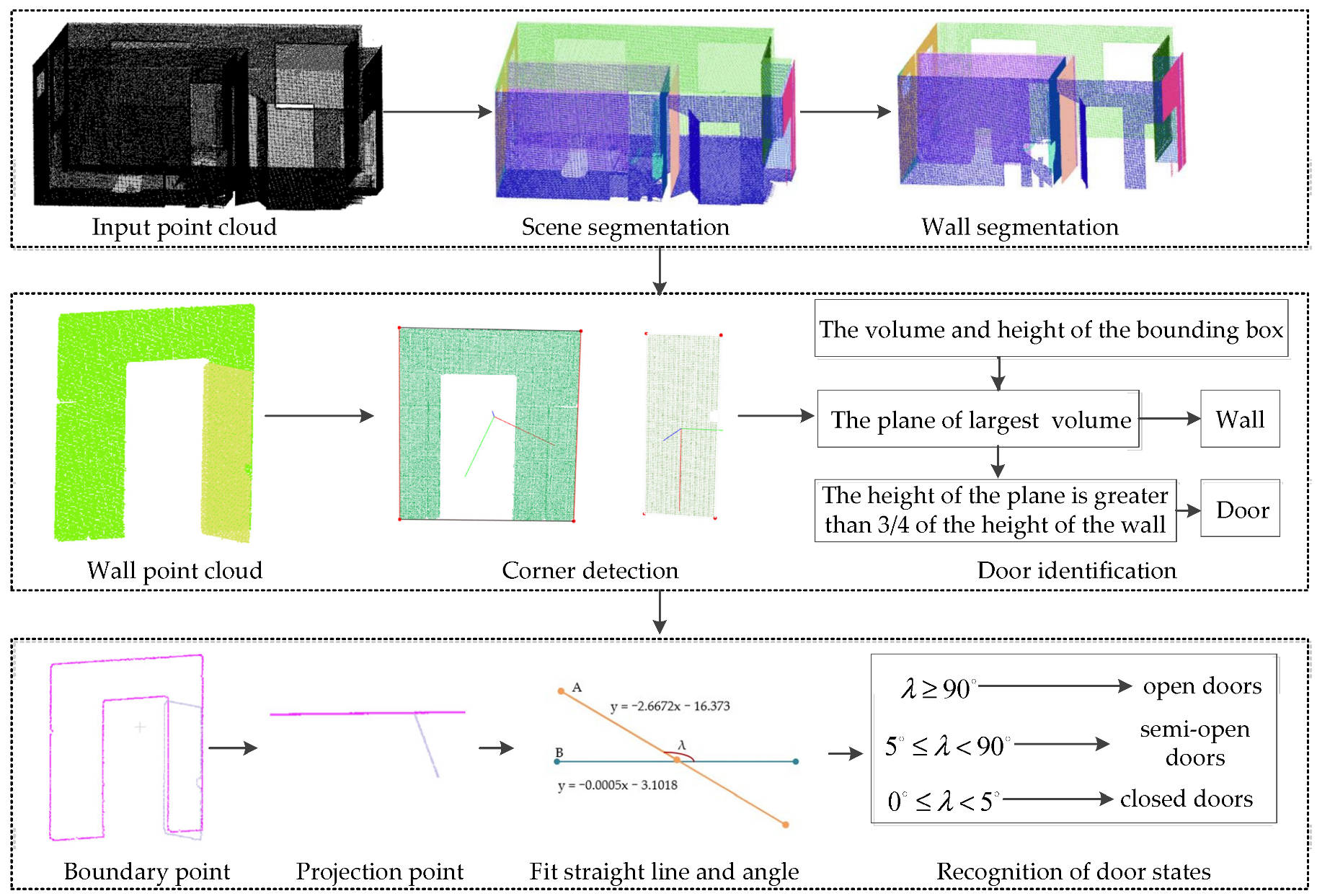

2. Overview

3. Method

3.1. Wall Segmentation and Extraction

3.2. Corner Detection for Door Recognition

3.2.1. Corner Detection

3.2.2. Door Recognition

3.3. Door State Recognition Based on Boundary Extraction and Straight-Line Fitting

3.3.1. Boundary Extraction

- (1) For any point pi in the indoor scene data P = {p0, p1, p2, …, pn}, suppose the covariance matrix Mi is established from pi and its k-nearest neighbor points Nj = {xj, yj, zj} (j = 0, 1, …, k − 1). The eigenvalues of Mi are non-negative and ordered as λ0 ≤ λ1 ≤ λ2, and the normal vector ni is given by the eigenvector corresponding to λ0.

- (2) We project the point pi and its neighbor points Nj onto the plane perpendicular to the normal vector ni, as in Figure 4. The projections of pi and Nj are shown in Figure 5, and the vector uj (j = 0, 1, …, k − 1) runs from the projection of pi to the projection of Nj. The angle αj between each other projection vector uk and uj is then calculated, where βj is the angle between uk and ni × uj.

- (3) The angles αj are sorted in descending order, and the angle between adjacent vectors is calculated by Equation (4). If the maximum angle δj is greater than the angle threshold εth, the point is identified as a boundary point. The search continues until all points of the point cloud data (PCD) have been processed, which identifies all boundary points. The extraction result is shown in Figure 6.
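The three steps above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the brute-force neighbor search, and the use of `arctan2` with an in-plane reference axis to obtain angles in [0, 2π) are assumptions made for illustration. The angles are sorted in ascending rather than descending order, which leaves the largest gap between adjacent directions unchanged.

```python
import numpy as np

def estimate_normal(neighborhood):
    """PCA normal: eigenvector of the covariance matrix with the smallest eigenvalue."""
    centered = neighborhood - neighborhood.mean(axis=0)
    cov = centered.T @ centered / len(neighborhood)
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues are returned in ascending order
    return eigvecs[:, 0]

def is_boundary_point(p, neighbors, angle_threshold):
    """Angle criterion: a large angular gap between projected neighbors marks a boundary."""
    normal = estimate_normal(np.vstack([p, neighbors]))
    # Project the vectors from p to its neighbors onto the plane perpendicular to the normal.
    offsets = neighbors - p
    u = offsets - np.outer(offsets @ normal, normal)
    u = u[np.linalg.norm(u, axis=1) > 1e-12]  # drop degenerate projections
    if len(u) < 2:
        return True
    # In-plane reference axes: e1 along the first projected vector, e2 = normal x e1.
    e1 = u[0] / np.linalg.norm(u[0])
    e2 = np.cross(normal, e1)
    angles = np.sort(np.arctan2(u @ e2, u @ e1) % (2 * np.pi))
    # Gaps between adjacent directions, including the wrap-around gap.
    gaps = np.diff(np.append(angles, angles[0] + 2 * np.pi))
    return gaps.max() > angle_threshold

def find_boundary_points(points, k, angle_threshold):
    """Return a boolean mask of boundary points (brute-force k-nearest neighbors)."""
    points = np.asarray(points, dtype=float)
    mask = np.zeros(len(points), dtype=bool)
    for i, p in enumerate(points):
        dist = np.linalg.norm(points - p, axis=1)
        nearest = np.argsort(dist)[1:k + 1]  # index 0 is the point itself
        mask[i] = is_boundary_point(p, points[nearest], angle_threshold)
    return mask
```

On a flat 7 × 7 grid with `k=8` and a threshold of π/2 (both values chosen only for this example), the corner and edge points are flagged while the grid center is not. For real scans a spatial index such as a k-d tree would normally replace the brute-force search.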

3.3.2. Straight-Line Fitting

3.3.3. Door States Recognition

- (1) Construct the system of equations (Equation (8)) for L1 and L2 and solve it for the intersection ζ(x12, y12).

- (2) Divide the PCD of the two straight lines into 10 portions and analyze the density near the intersection ζ of L1 and L2. Using the average x coordinate of the straight line with the smallest density, obtain the corresponding y coordinate; the resulting point is denoted θ(xθ, yθ).

- (3) Calculate the slopes ks1 of L1 and ks2 of L2 from the intersection ζ and the point θ(xθ, yθ).

- (4) Determine the angle β between L1 and L2 from ks1 and ks2. If ks1·ks2 = −1, then β = 90°; otherwise, β is calculated by Equation (10).
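As a rough illustration of steps (1), (3) and (4), the sketch below assumes both lines are given in slope-intercept form y = kx + b and uses the standard tangent formula for the angle between two lines. Equations (8) and (10) are not reproduced in this text, so the formula and all function names here are assumptions, not the authors' definitions. The density analysis of step (2) is not modelled.

```python
import math

def line_intersection(k1, b1, k2, b2):
    """Intersection of y = k1*x + b1 and y = k2*x + b2."""
    if math.isclose(k1, k2):
        raise ValueError("lines are parallel; no unique intersection")
    x = (b2 - b1) / (k1 - k2)
    return x, k1 * x + b1

def slope_through(p, q):
    """Slope of the line through two points p and q (the line must not be vertical)."""
    return (q[1] - p[1]) / (q[0] - p[0])

def angle_between_lines(k1, k2):
    """Acute angle in degrees between two lines with slopes k1 and k2."""
    if math.isclose(k1 * k2, -1.0):
        return 90.0  # perpendicular lines: the tangent formula would divide by zero
    return math.degrees(math.atan(abs((k1 - k2) / (1.0 + k1 * k2))))
```

For example, `angle_between_lines(-0.001, 0.1246)` gives roughly 7.16°, which is close to the AB angle listed for the wall with multiple candidate doors. The formula returns the acute angle only, so a convention for obtuse door angles would have to be added separately.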

3.4. Wall Reconstruction

- (1) Connect the corner points and select the longest edge Lm. Set the sampling number to Nup, which determines the sampling distance disup. Starting from the corner point with the smallest coordinates, output points in order at intervals of disup to perform up-sampling.

- (2) RANSAC is used to fit the plane. Three points are randomly selected to determine a plane; points within the distance threshold are added to the plane, and the plane is updated until all points have been processed, which completes the plane fitting. The reconstruction result based on RANSAC is shown in Figure 7.
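The plane-fitting step can be sketched with a textbook RANSAC loop. This version counts inliers for each randomly sampled plane and refits the best consensus set by least squares, which may differ from the incremental update described above. The function name, the iteration count, and the seed are illustrative assumptions.

```python
import numpy as np

def ransac_plane(points, distance_threshold, iterations=200, seed=0):
    """Fit a plane with RANSAC; returns (unit normal, point on plane, inlier mask)."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        # Three random points determine a candidate plane.
        a, b, c = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(b - a, c - a)
        length = np.linalg.norm(normal)
        if length < 1e-12:
            continue  # (nearly) collinear sample, no unique plane
        normal = normal / length
        # Points within the distance threshold support the candidate plane.
        inliers = np.abs((points - a) @ normal) < distance_threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("no plane found; check the threshold or the input")
    # Refit on the consensus set: the normal is the direction of least variance.
    support = points[best_inliers]
    centroid = support.mean(axis=0)
    _, _, vt = np.linalg.svd(support - centroid)
    return vt[-1], centroid, best_inliers
```

A possible call is `normal, centroid, inliers = ransac_plane(wall_points, distance_threshold=0.01)`, where `wall_points` is a hypothetical N × 3 array and the threshold is expressed in the units of the scan.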

4. Experimental Results

4.1. Door Detection and Door State Recognition

4.2. Comparisons

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scene | Plane | Volume (m³) | Height (m) | Real Height (m) | Category |
|---|---|---|---|---|---|
| Livingroom | 1 | 0.005833 | 2.31575 | 2.331244 | wall |
| Livingroom | 2 | 0.000804 | 1.84217 | 1.862738 | door |
| Livingroom | 3 | 0.003758 | 0.780573 | 0.818667 | other object |
| House | 1 | 0.045027 | 2.8254 | 2.826390 | wall |
| House | 2 | 0.011329 | 2.14059 | 2.157281 | door |
| Multiple-doors | 1 | 0.461809 | 2.83712 | 2.830485 | wall |
| Multiple-doors | 2 | 0.077693 | 2.31991 | 2.311211 | door1 |
| Multiple-doors | 3 | 0.078877 | 2.3059 | 2.311746 | door2 |

| Scene | Plane Category | Straight Line | Direction Vector | Angle λ | State of the Door |
|---|---|---|---|---|---|
| Wall of Livingroom | Wall | A: y = 0.000041x − 1.9291 | A (1, 0.000041) | | |
| Wall of Livingroom | Door | B: y = 0.000007x − 1.9291 | B (1, 0.000007) | AB: 0.002° | Closed |
| Wall of Livingroom | Other object | C: y = 0.00029x − 1.91283 | C (1, 0.00029) | AC: 0.014° | |
| Wall of House | Wall | A: y = −0.00049254x − 3.10184 | A (1, −0.00049254) | | |
| Wall of House | Door | B: y = −2.66718x − 16.3727 | B (0.351064, −0.936352) | AB: 110.581° | Open |
| Wall of multiple candidate doors | Wall | A: y = −0.001x − 3.1099 | A (1, −0.00105234) | | |
| Wall of multiple candidate doors | Door1 | B: y = 0.1246x − 2.452 | B (0.992331, 0.12361) | AB: 7.1608° | Semi-open |
| Wall of multiple candidate doors | Door2 | C: y = 0.4706x + 1.3609 | C (0.904805, 0.425827) | AC: 25.2633° | Semi-open |

| Wall | Method Name | Number of Corners | Processing Time (s) |
|---|---|---|---|
| Wall 1 | Harris-based method | 109 | 3.06958 |
| Wall 1 | Curvature-based method | 400 | 0.43635 |
| Wall 1 | Our method | 16 | 3.47990 |
| Wall 2 | Harris-based method | 1681 | 7.51997 |
| Wall 2 | Curvature-based method | 400 | 0.28158 |
| Wall 2 | Our method | 24 | 3.43450 |
| Wall 3 | Harris-based method | 1510 | 7.38891 |
| Wall 3 | Curvature-based method | 400 | 0.28095 |
| Wall 3 | Our method | 16 | 3.46327 |

| Wall | Number of Doors in the Real Scene (Closed/Semi-Open/Open) | Number of Doors in the Reconstructed Scene (Closed/Semi-Open/Open) |
|---|---|---|
| Wall of Livingroom | 1 (1/0/0) | 1 (1/0/0) |
| Wall of House | 1 (0/0/1) | 1 (0/0/1) |
| Wall of multiple candidate doors | 2 (0/2/0) | 2 (0/2/0) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ning, X.; Sun, Z.; Wang, L.; Wang, M.; Lv, Z.; Zhang, J.; Wang, Y. Door State Recognition Method for Wall Reconstruction from Scanned Scene in Point Clouds. Mathematics 2023, 11, 1149. https://doi.org/10.3390/math11051149